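| File storage format | Description | How to specify it when creating a table | Pros and cons |
| --- | --- | --- | --- |
| textfile | Stored as ordinary text: the table's data is written to HDFS in plain-text form, so a downloaded file can be read directly or viewed with the cat command. | 1. Nothing needs to be specified; it is the default. 2. Explicitly: STORED AS TEXTFILE 3. Explicitly: STORED AS INPUTFORMAT 'org.apache.hadoop.mapred.TextInputFormat' OUTPUTFORMAT 'org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat' | 1. Row-oriented: by default each line of a textfile is one record. 2. Any delimiter can be used to separate fields. 3. Uncompressed, so it uses a lot of storage. It can be combined with Gzip, Bzip2, Snappy, etc. (Hive detects the codec automatically and decompresses at query time), but with a non-splittable codec such as Gzip, Hive cannot split the files, so they cannot be processed in parallel. |
| sequencefile | The table's data is encoded on HDFS in a binary format and can be compressed. Downloaded data is binary and cannot be viewed directly. | 1. STORED AS SEQUENCEFILE 2. Or explicitly: STORED AS INPUTFORMAT 'org.apache.hadoop.mapred.SequenceFileInputFormat' OUTPUTFORMAT 'org.apache.hadoop.hive.ql.io.HiveSequenceFileOutputFormat' | 1. Supports compression, so it needs less storage, which helps disk usage and I/O performance. 2. Files are splittable. Three compression types are available: NONE, RECORD and BLOCK (block-level compression is more efficient). The default is RECORD. 3. Row-oriented. |
| rcfile | The table's data is encoded on HDFS in a binary format and supports compression. Downloaded data cannot be viewed directly. | 1. STORED AS RCFILE 2. Or explicitly: STORED AS INPUTFORMAT 'org.apache.hadoop.hive.ql.io.RCFileInputFormat' OUTPUTFORMAT 'org.apache.hadoop.hive.ql.io.RCFileOutputFormat' | 1. A hybrid row/column format: rows are grouped, and within each group the data is stored column by column. 2. Because values are stored by column, adjacent values repeat often, so compression is effective. 3. Small on-disk footprint and less I/O. |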
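The sequencefile row says block-level compression is more efficient than record-level compression. The experiment below illustrates why, using only the JDK's `java.util.zip.Deflater`. It is not the SequenceFile format itself, and the sample rows are made up. It compresses 1,000 short, similar records twice:

- once each record on its own, which is the intuition behind RECORD;
- once all records as a single buffer, which is the intuition behind BLOCK.

Per-record compression pays the codec overhead for every record and cannot exploit redundancy between records.

```scala
import java.io.ByteArrayOutputStream
import java.util.zip.Deflater

// Toy experiment, NOT the SequenceFile on-disk format: it only compares
// compressing each record separately with compressing a batch of records together.
object CompressionGranularity {
  // Compress a byte array with a fresh Deflater and return the compressed size.
  def deflatedSize(bytes: Array[Byte]): Int = {
    val deflater = new Deflater()
    deflater.setInput(bytes)
    deflater.finish()
    val buf = new Array[Byte](4096)
    val out = new ByteArrayOutputStream()
    while (!deflater.finished()) {
      val n = deflater.deflate(buf)
      out.write(buf, 0, n)
    }
    deflater.end()
    out.size()
  }

  // Hypothetical sample rows: many short, similar text records.
  val records: Seq[String] =
    (1 to 1000).map(i => f"user_$i%05d,San Francisco,CA,active")

  // "Record" granularity: every record is compressed independently.
  val perRecordTotal: Int =
    records.map(r => deflatedSize(r.getBytes("UTF-8"))).sum

  // "Block" granularity: all records are compressed as one buffer.
  val blockTotal: Int =
    deflatedSize(records.mkString("\n").getBytes("UTF-8"))

  def main(args: Array[String]): Unit =
    println(s"record-level: $perRecordTotal bytes, block-level: $blockTotal bytes")
}
```

Real SequenceFile block compression also buffers keys and values separately and flushes by size. This sketch only shows the effect of compression granularity.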

1. Text files

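Reading and writing text files in Spark is straightforward.

When a text file is read into an RDD, each line of the input becomes one element of the RDD.

You can also read several complete text files at once into a pair RDD, where the key is the file name and the value is the file's content.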
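Here is a plain-Scala analogy (no Spark) that makes the difference between the two read modes concrete. An in-memory `Map` stands in for a directory, and the file names and contents are hypothetical.

- `lines` has the shape `sc.textFile` produces: one element per line, with the file it came from dropped.
- `wholeFiles` has the shape of `sc.wholeTextFiles`: one pair per file.

In real Spark the key is the file's full path rather than a bare name.

```scala
// Plain-Scala analogy (no Spark): an in-memory Map stands in for a directory,
// keyed by hypothetical file names.
object TextFileShapes {
  val files: Map[String, String] = Map(
    "a.txt" -> "hello world\nhello spark",
    "b.txt" -> "one line only"
  )

  // Like sc.textFile: every line of every file becomes its own element,
  // and the element no longer records which file it came from.
  val lines: Seq[String] =
    files.toList.sortBy(_._1).flatMap { case (_, content) => content.split("\n").toList }

  // Like sc.wholeTextFiles: one (fileName, fullContent) pair per file.
  val wholeFiles: Seq[(String, String)] = files.toList.sortBy(_._1)

  def main(args: Array[String]): Unit = {
    lines.foreach(println)
    wholeFiles.foreach { case (name, content) => println(s"$name -> ${content.length} chars") }
  }
}
```

The per-file pairs are what make `wholeTextFiles` useful for formats, such as CSV with embedded newlines, that must be parsed as a whole document.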

Reading a text file in Scala

val inputFile = "file:///home/common/coding/coding/Scala/word-count/test.segmented"

val textFile = sc.textFile(inputFile)

Reading all the files in a given directory in Scala

val input = sc.wholeTextFiles("file:///home/common/coding/coding/*/word-count")

Saving a text file: Spark treats the given path as a directory and writes multiple files into it (one per partition).

textFile.saveAsTextFile("file:///home/common/coding/coding/Scala/word-count/writeback")

// textFile.coalesce(1).saveAsTextFile(...) writes a single part file (the output path is still a directory)

For a DataFrame, first convert it to an RDD with .toJavaRDD, then call coalesce(1).saveAsTextFile.

2. JSON

JSON 是一种使用较广的半结构化数据格式。

The book's code for reading JSON had problems, so I used a different snippet to read it instead.

build.sbt

"org.json4s" %% "json4s-jackson" % "3.2.11"

Code

import org.apache.spark.SparkContext

import org.apache.spark.SparkContext._

import org.apache.spark.SparkConf

import org.json4s._

import org.json4s.jackson.JsonMethods._

import org.json4s.jackson.Serialization

import org.json4s.jackson.Serialization.{read, write}

/**

* Created by common on 17-4-3.

*/

case class Person(firstName: String, lastName: String, address: List[Address]) {

override def toString = s"Person(firstName=$firstName, lastName=$lastName, address=$address)"

}

case class Address(line1: String, city: String, state: String, zip: String) {

override def toString = s"Address(line1=$line1, city=$city, state=$state, zip=$zip)"

}

object WordCount {

def main(args: Array[String]) {

val inputJsonFile = "file:///home/common/coding/coding/Scala/word-count/test.json"

val conf = new SparkConf().setAppName("WordCount").setMaster("local")

val sc = new SparkContext(conf)

val input5 = sc.textFile(inputJsonFile)

val dataObjsRDD = input5.map { myrecord =>

implicit val formats = DefaultFormats

// Workaround as DefaultFormats is not serializable

val jsonObj = parse(myrecord)

//val addresses = jsonObj \ "address"

//println((addresses(0) \ "city").extract[String])

jsonObj.extract[Person]

}

dataObjsRDD.saveAsTextFile("file:///home/common/coding/coding/Scala/word-count/test1.json")

}

}

Input JSON file (one JSON object per line, because textFile passes each line to the parser separately)

{"firstName":"John","lastName":"Smith","address":[{"line1":"1 main street","city":"San Francisco","state":"CA","zip":"94101"},{"line1":"1 main street","city":"sunnyvale","state":"CA","zip":"94000"}]}

{"firstName":"Tim","lastName":"Williams","address":[{"line1":"1 main street","city":"Mountain View","state":"CA","zip":"94300"},{"line1":"1 main street","city":"San Jose","state":"CA","zip":"92000"}]}

Output file

Person(firstName=John, lastName=Smith, address=List(Address(line1=1 main street, city=San Francisco, state=CA, zip=94101), Address(line1=1 main street, city=sunnyvale, state=CA, zip=94000)))

Person(firstName=Tim, lastName=Williams, address=List(Address(line1=1 main street, city=Mountain View, state=CA, zip=94300), Address(line1=1 main street, city=San Jose, state=CA, zip=92000)))

3. Comma-separated values and tab-separated values

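In a comma-separated values (CSV) file, every line has a fixed number of fields separated by commas (in a tab-separated values, or TSV, file they are separated by tabs).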

If none of the CSV fields happens to contain a newline, you can also read the data with textFile() and parse it line by line.
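The Spark code in this section delegates parsing to opencsv's `CSVReader`. A plain `line.split(",")` is not enough even when every record fits on one line. The minimal hand-written parser below shows what a real parser has to handle. It loosely follows RFC 4180:

- quoted fields;
- commas inside quotes;
- `""` as an escaped quote.

It is a sketch, not a replacement for opencsv, and `CsvLine` is a hypothetical name.

```scala
import scala.collection.mutable.ArrayBuffer

object CsvLine {
  // Minimal RFC 4180-style parser for ONE line: handles quoted fields,
  // commas inside quotes, and "" as an escaped quote. Assumes the line has
  // no embedded newlines (the same assumption reading with textFile() needs).
  def parse(line: String): Seq[String] = {
    val fields = ArrayBuffer.empty[String]
    val cur = new StringBuilder
    var inQuotes = false
    var i = 0
    while (i < line.length) {
      val c = line.charAt(i)
      if (inQuotes) {
        if (c == '"' && i + 1 < line.length && line.charAt(i + 1) == '"') {
          cur += '"'; i += 1 // "" inside quotes is a literal quote
        } else if (c == '"') inQuotes = false
        else cur += c
      } else {
        if (c == '"') inQuotes = true
        else if (c == ',') { fields += cur.toString; cur.clear() }
        else cur += c
      }
      i += 1
    }
    fields += cur.toString
    fields.toList
  }

  def main(args: Array[String]): Unit = {
    val line = "0,\"Front, Left\",\"say \"\"hi\"\"\""
    println(line.split(",").length)      // naive split: 4 pieces, quote chars kept
    println(parse(line).mkString(" | ")) // 0 | Front, Left | say "hi"
  }
}
```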

build.sbt

"au.com.bytecode" % "opencsv" % "2.4"3.1 读取CSV文件

import java.io.StringReader

import org.apache.spark.SparkContext

import org.apache.spark.SparkContext._

import org.apache.spark.SparkConf

import org.json4s._

import org.json4s.jackson.JsonMethods._

import org.json4s.jackson.Serialization

import org.json4s.jackson.Serialization.{read, write}

import au.com.bytecode.opencsv.CSVReader

/**

* Created by common on 17-4-3.

*/

object WordCount {

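def main(args: Array[String]) {

val conf = new SparkConf().setAppName("WordCount").setMaster("local")

val sc = new SparkContext(conf)

val input = sc.textFile("/home/common/coding/coding/Scala/word-count/sample_map.csv")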

val result6 = input.map{ line =>

val reader = new CSVReader(new StringReader(line));

reader.readNext();

}

for (result <- result6.collect()) { // collect() so that println runs on the driver

for(re <- result){

println(re)

}

}

}

}

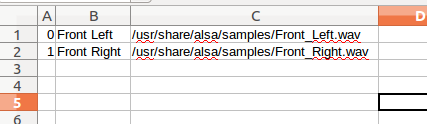

CSV file contents

Output

0

Front Left

/usr/share/alsa/samples/Front_Left.wav

1

Front Right

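/usr/share/alsa/samples/Front_Right.wav

If fields contain embedded newlines, you need to read each file in full and then parse the records from it. If individual files are very large, reading and parsing them this way can unfortunately become a performance bottleneck.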
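The failure mode is easy to reproduce in plain Scala. The sketch below parses an entire document, such as one value returned by `wholeTextFiles`, and keeps newlines that occur inside quoted fields. `CsvWhole` is a hypothetical name, and the parser is simplified: `\r\n` line endings are not handled. Splitting the same text on `\n` first, which is effectively what `textFile` does, sees three rows where there are only two records.

```scala
import scala.collection.mutable.ArrayBuffer

object CsvWhole {
  // Parse a whole CSV document into records. Newlines inside quoted fields stay
  // part of the field; newlines outside quotes end a record.
  // Simplified: "\r\n" line endings are not handled specially.
  def parseAll(text: String): Seq[Seq[String]] = {
    val records = ArrayBuffer.empty[Seq[String]]
    val fields = ArrayBuffer.empty[String]
    val cur = new StringBuilder
    var inQuotes = false
    var i = 0

    def endField(): Unit = { fields += cur.toString; cur.clear() }
    def endRecord(): Unit = { endField(); records += fields.toList; fields.clear() }

    while (i < text.length) {
      val c = text.charAt(i)
      if (inQuotes) {
        if (c == '"' && i + 1 < text.length && text.charAt(i + 1) == '"') { cur += '"'; i += 1 }
        else if (c == '"') inQuotes = false
        else cur += c // includes '\n' inside quotes
      } else {
        c match {
          case '"'  => inQuotes = true
          case ','  => endField()
          case '\n' => endRecord()
          case _    => cur += c
        }
      }
      i += 1
    }
    if (cur.length > 0 || fields.nonEmpty) endRecord() // last line without a trailing newline
    records.toList
  }

  def main(args: Array[String]): Unit = {
    // Hypothetical sample: the second record has a line break inside a quoted field.
    val text = "0,Front Left,a.wav\n1,\"Front\nRight\",b.wav\n"
    println(text.split("\n").length) // 3 physical lines
    println(parseAll(text).length)   // 2 logical records
  }
}
```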

import java.io.StringReader

import org.apache.spark.SparkContext

import org.apache.spark.SparkContext._

import org.apache.spark.SparkConf

import org.json4s._

import org.json4s.jackson.JsonMethods._

import org.json4s.jackson.Serialization

import org.json4s.jackson.Serialization.{read, write}

import scala.collection.JavaConversions._

import au.com.bytecode.opencsv.CSVReader

/**

* Created by common on 17-4-3.

*/

case class Data(index: String, title: String, content: String)

object WordCount {

def main(args: Array[String]) {

val conf = new SparkConf().setAppName("WordCount").setMaster("local")

val sc = new SparkContext(conf)

val input = sc.wholeTextFiles("/home/common/coding/coding/Scala/word-count/sample_map.csv")

val result = input.flatMap { case (_, txt) =>

val reader = new CSVReader(new StringReader(txt));

reader.readAll().map(x => Data(x(0), x(1), x(2)))

}

for (res <- result.collect()) { // collect() so that println runs on the driver

println(res)

}

}

}

Output

Data(0,Front Left,/usr/share/alsa/samples/Front_Left.wav)

Data(1,Front Right,/usr/share/alsa/samples/Front_Right.wav)

Alternatively:

import java.io.StringReader

import org.apache.spark.SparkContext

import org.apache.spark.SparkContext._

import org.apache.spark.SparkConf

import org.json4s._

import org.json4s.jackson.JsonMethods._

import org.json4s.jackson.Serialization

import org.json4s.jackson.Serialization.{read, write}

import scala.collection.JavaConversions._

import au.com.bytecode.opencsv.CSVReader

/**

* Created by common on 17-4-3.

*/

case class Data(index: String, title: String, content: String)

object WordCount {

def main(args: Array[String]) {

val conf = new SparkConf().setAppName("WordCount").setMaster("local")

val sc = new SparkContext(conf)

val input = sc.wholeTextFiles("/home/common/coding/coding/Scala/word-count/sample_map.csv") // wholeTextFiles returns an RDD[(String, String)] of (file path, file content)

val result = input.flatMap { case (_, txt) =>

val reader = new CSVReader(new StringReader(txt));

//reader.readAll().map(x => Data(x(0), x(1), x(2)))

reader.readAll()

}

result.collect().foreach(x => {

x.foreach(println); println("======")

})

}

}

Output

0

Front Left

/usr/share/alsa/samples/Front_Left.wav

======

1

Front Right

/usr/share/alsa/samples/Front_Right.wav

======

3.2 Saving CSV
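Writing CSV is mostly a matter of quoting. Judging by this section's output (`"index","title","content"`), opencsv's `CSVWriter` wraps every field in double quotes by default. The plain-Scala sketch below mimics that style and also doubles embedded quotes, as RFC 4180 requires. `CsvWrite`, `toCsvLine` and `renderPartition` are hypothetical names. `renderPartition` returns one string per partition, which is the shape the `mapPartitions` step in the Spark code hands to `saveAsTextFile`.

```scala
object CsvWrite {
  // Quote every field and double any embedded quote characters.
  def toCsvLine(fields: Seq[String]): String =
    fields.map(f => "\"" + f.replace("\"", "\"\"") + "\"").mkString(",")

  // Hypothetical stand-in for Data(index, title, content) from the Spark example.
  case class Data(index: String, title: String, content: String)

  // Render a whole partition's rows into one string, one CSV line per row.
  def renderPartition(rows: Iterator[Data]): String =
    rows.map(d => toCsvLine(Seq(d.index, d.title, d.content)) + "\n").mkString

  def main(args: Array[String]): Unit =
    print(renderPartition(Iterator(Data("index", "title", "content"), Data("1", "say \"hi\"", "a,b"))))
}
```

Quoting every field, rather than only fields that contain a comma, quote or newline, costs a few bytes. In exchange, the writer never needs to inspect field contents to decide.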

import java.io.{StringReader, StringWriter}

import org.apache.spark.SparkContext

import org.apache.spark.SparkContext._

import org.apache.spark.SparkConf

import org.json4s._

import org.json4s.jackson.JsonMethods._

import org.json4s.jackson.Serialization

import org.json4s.jackson.Serialization.{read, write}

import scala.collection.JavaConversions._

import au.com.bytecode.opencsv.{CSVReader, CSVWriter}

/**

* Created by common on 17-4-3.

*/

case class Data(index: String, title: String, content: String)

object WordCount {

def main(args: Array[String]) {

val conf = new SparkConf().setAppName("WordCount").setMaster("local")

val sc = new SparkContext(conf)

val inputRDD = sc.parallelize(List(Data("index", "title", "content")))

inputRDD.map(data => List(data.index, data.title, data.content).toArray)

.mapPartitions { data =>

val stringWriter = new StringWriter();

val csvWriter = new CSVWriter(stringWriter);

csvWriter.writeAll(data.toList)

Iterator(stringWriter.toString)

}.saveAsTextFile("/home/common/coding/coding/Scala/word-count/sample_map_out")

}

}

Output

"index","title","content"4.SequenceFile 是由没有相对关系结构的键值对文件组成的常用 Hadoop 格式。

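SequenceFiles contain sync markers that Spark can use to seek to an arbitrary point in a file and then realign with record boundaries. This lets Spark read SequenceFiles efficiently in parallel from multiple nodes. SequenceFile is also a common input and output format for Hadoop MapReduce jobs, so if you are working with an existing Hadoop system, your data is quite likely available to you as SequenceFiles.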
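Here is a toy format in plain Scala that illustrates how sync markers let a reader start anywhere. It is NOT the real SequenceFile layout: real files write a random 16-byte sync value periodically between records, not before every record. The read rule works like this:

1. A reader given an arbitrary byte range skips forward to the first marker at or after its start.
2. It then reads whole records.
3. It finishes the last record even if that record runs past the end of the range.

With that rule, any cut point splits the data so that every record is read exactly once. `SyncMarkerSplits` and the `#SYNC#` marker are hypothetical, and records are assumed never to contain the marker.

```scala
import scala.collection.mutable.ArrayBuffer

// Toy format, NOT the real SequenceFile layout: [SYNC][record][SYNC][record]...
object SyncMarkerSplits {
  val Sync: String = "#SYNC#" // hypothetical marker; records must not contain it

  def write(records: Seq[String]): String =
    records.map(r => Sync + r).mkString

  // Return the records owned by the split [start, end): those whose sync
  // marker begins at an offset inside that range.
  def readSplit(data: String, start: Int, end: Int): Seq[String] = {
    val out = ArrayBuffer.empty[String]
    var pos = data.indexOf(Sync, start) // align to the next record boundary
    while (pos >= 0 && pos < end) {
      val bodyStart = pos + Sync.length
      val next = data.indexOf(Sync, bodyStart)
      val bodyEnd = if (next < 0) data.length else next
      out += data.substring(bodyStart, bodyEnd) // may read past `end` to finish the record
      pos = next
    }
    out.toList
  }

  def main(args: Array[String]): Unit = {
    val data = write(Seq("Panda,3", "Kay,6", "Snail,2"))
    val mid = data.length / 2 // an arbitrary split point
    println(readSplit(data, 0, mid))
    println(readSplit(data, mid, data.length))
  }
}
```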

import org.apache.hadoop.io.{IntWritable, Text}

import org.apache.spark.SparkContext

import org.apache.spark.SparkContext._

import org.apache.spark.SparkConf

/**

* Created by common on 17-4-6.

*/

object SparkRDD {

def main(args: Array[String]) {

val conf = new SparkConf().setAppName("WordCount").setMaster("local")

val sc = new SparkContext(conf)

// Write a SequenceFile

val rdd = sc.parallelize(List(("Panda", 3), ("Kay", 6), ("Snail", 2)))

rdd.saveAsSequenceFile("output")

// Read the SequenceFile back

// val rdd2 = sc.sequenceFile[String, Int](dir + "/part-00000")

// val file = sc.sequenceFile[BytesWritable, Text]("/seq")

val output = sc.sequenceFile("output", classOf[Text], classOf[IntWritable]).

map{case (x, y) => (x.toString, y.get())}

output.foreach(println)

}

}

Contents of the SequenceFile:

