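Kubernetes Deployment

Deploying with minikube

The quickest way to try Kubernetes is the community-provided minikube tool.

minikube quickly installs a Kubernetes cluster on the local machine.

Project: https://github.com/kubernetes/minikube

Website: https://minikube.sigs.k8s.io/docs/

minikube can start a cluster in two modes:

The first creates a virtual machine locally and then builds the Kubernetes cluster inside it.

The second creates the cluster directly on the local host and requires the --vm-driver=none flag.

If you choose the first mode, KVM must be installed first.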

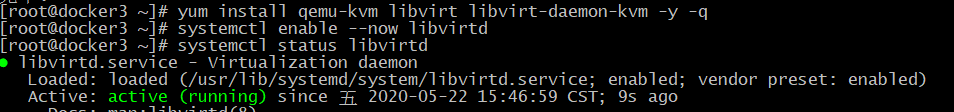

Install KVM

yum install qemu-kvm libvirt libvirt-daemon-kvm -y

systemctl enable --now libvirtd

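The second mode only requires a working Docker installation.

Installing kubectl

kubectl is the command-line client provided by Kubernetes; it is used to operate the cluster once it is running.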

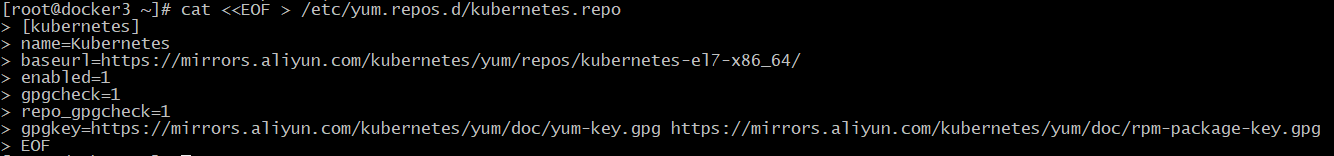

Configure the yum repository

cat <<-EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

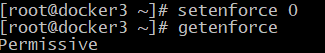

Set SELinux to permissive mode

setenforce 0

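Install kubectl

yum list kubectl --showduplicates

# --showduplicates: with the list/search commands, show duplicate entries in the repositories (used here to list every available version of a package)

# After v1.18.0 the kubectl package deprecated many flags while adding some features, so version 1.17 is used as the example here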

yum install -y kubectl-1.17.2-0.x86_64
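Because `--showduplicates` prints every available build, choosing the newest patch release of one minor version can be scripted. The following is only a minimal sketch and not part of the original steps: `latest_patch_for_minor` is a made-up helper name, it assumes one version string per line on stdin, and it assumes a `sort` that supports `-V` (GNU coreutils does).

```shell
# Print the highest version read from stdin that belongs to one minor release.
# $1: minor release prefix, e.g. "1.17" (hypothetical helper, for illustration)
latest_patch_for_minor() {
    # Escape the dots so that "1.17" cannot match e.g. "1x17".
    minor_pattern=$(printf '%s' "$1" | sed 's/\./\\./g')
    # Keep only versions of that minor release, sort them as versions,
    # and print the last (highest) one.
    grep "^${minor_pattern}\." | sort -V | tail -n 1
}

# Example, assuming the second column of the yum output is the version:
#   yum list kubectl --showduplicates | awk '/^kubectl/ {print $2}' | latest_patch_for_minor 1.17
```

Version sorting matters here because a plain text sort would place 1.17.17 before 1.17.2.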

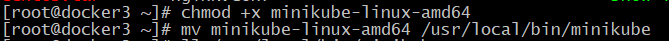

Installing minikube

curl -Lo minikube-linux-amd64 https://github.com/kubernetes/minikube/releases/download/v1.10.1/minikube-linux-amd64

chmod +x minikube-linux-amd64

mv minikube-linux-amd64 /usr/local/bin/minikube

Starting the cluster

Reference: https://yq.aliyun.com/articles/221687

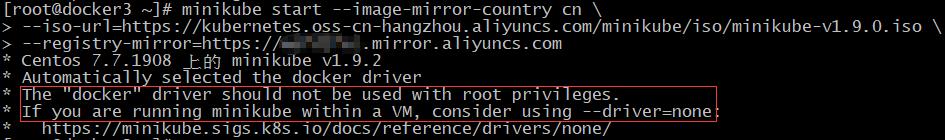

minikube start --image-mirror-country cn \
--iso-url=https://kubernetes.oss-cn-hangzhou.aliyuncs.com/minikube/iso/minikube-v1.9.0.iso \
--registry-mirror=https://xxxxxx.mirror.aliyuncs.com

This produces an error message. See:

https://minikube.sigs.k8s.io/docs/drivers/none/

Most users of this driver should consider the newer Docker driver, as it is significantly easier to configure and does not require root access. The 'none' driver is recommended for advanced users only.

Older versions use --vm-driver; newer versions recommend --driver:

--driver='': Driver is one of: virtualbox, parallels, vmwarefusion, kvm2, vmware, none, docker, podman (experimental) (defaults to auto-detect)

--vm-driver='': DEPRECATED, use `driver` instead

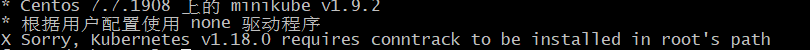

minikube start --image-mirror-country cn \
--iso-url=https://kubernetes.oss-cn-hangzhou.aliyuncs.com/minikube/iso/minikube-v1.9.0.iso \
--registry-mirror=https://xxxxxx.mirror.aliyuncs.com \
--driver=none

This attempt fails with another error message, which is resolved by installing conntrack:

yum install conntrack -y

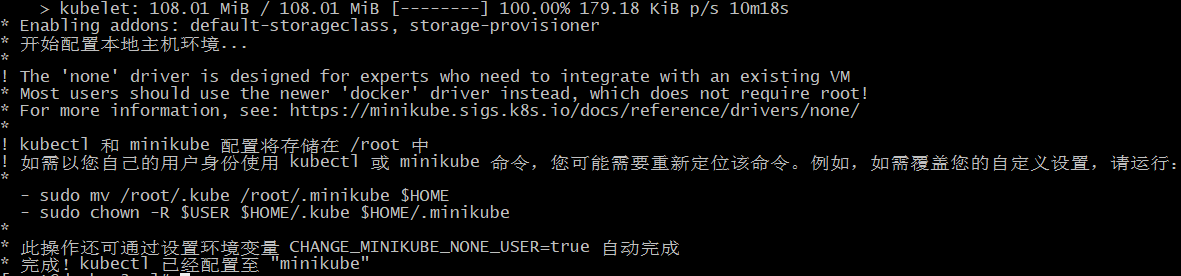

Start the cluster again

minikube start --image-mirror-country cn \
--iso-url=https://kubernetes.oss-cn-hangzhou.aliyuncs.com/minikube/iso/minikube-v1.9.0.iso \
--registry-mirror=https://xxxxxx.mirror.aliyuncs.com \
--driver=none

The output shows that the images are pulled and the containers are created automatically.

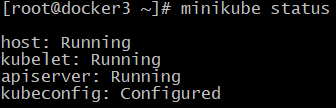

Get the status of the local Kubernetes cluster

minikube status

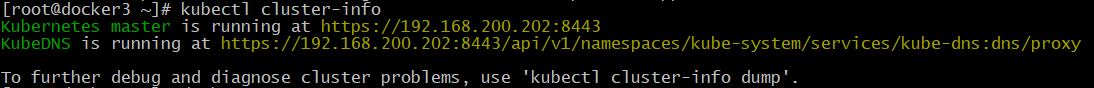

Show cluster information

kubectl cluster-info

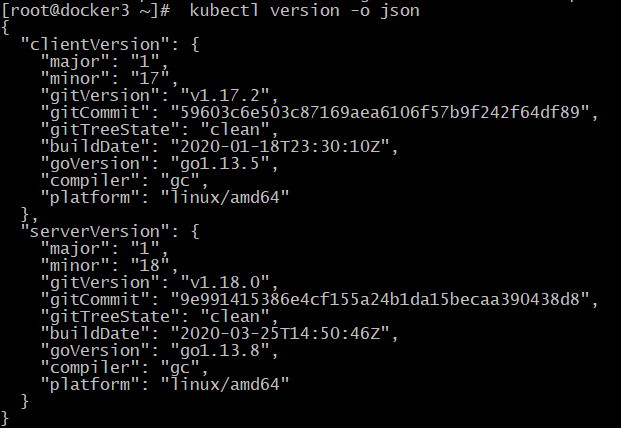

Print the client and server version information

kubectl version -o json

# -o, --output='': One of 'yaml' or 'json'.

Deploying Pods

Write the YAML file

mkdir minikube-nginx && cd minikube-nginx

vim nginx-deploy.yaml

Contents of nginx-deploy.yaml:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: test-nginx
  labels:
    app: nginx
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:latest
        ports:
        - containerPort: 80
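Indentation is significant in YAML, so the manifest is easy to break when it is typed or pasted by hand. As a rough sketch (the function name `render_nginx_deployment` and its two parameters are assumptions made for illustration, not something the original uses), the same manifest can be generated from a here-document:

```shell
# Print a Deployment manifest equivalent to nginx-deploy.yaml.
# $1: deployment name, $2: number of replicas (both hypothetical parameters)
render_nginx_deployment() {
    deploy_name=$1
    replica_count=$2
    # The here-document keeps the YAML indentation exactly as written.
    cat <<EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: ${deploy_name}
  labels:
    app: nginx
spec:
  replicas: ${replica_count}
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:latest
        ports:
        - containerPort: 80
EOF
}

# Example: render_nginx_deployment test-nginx 2 > nginx-deploy.yaml
```

Note that `selector.matchLabels` and the pod template labels both use `app: nginx`; the Deployment only manages pods whose labels match its selector.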

Create the pods

kubectl apply -f nginx-deploy.yaml

Check the result

kubectl get pods # show the pods

kubectl get deployment # show the deployment

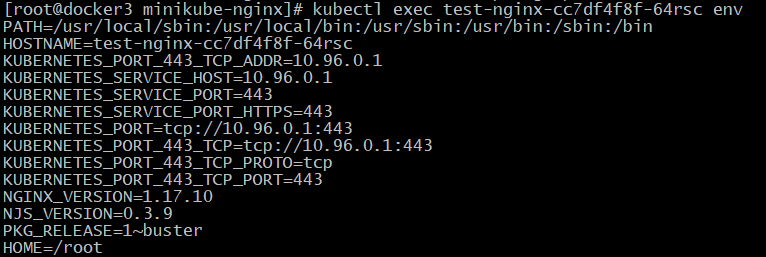

Use kubectl exec to run a command inside a test-nginx pod and display its environment information

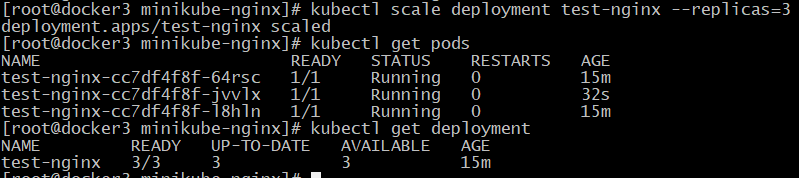

Scaling the Pods

kubectl scale deployment test-nginx --replicas=3

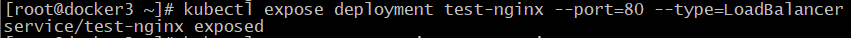

Publishing the service

Expose the deployment, which allocates a cluster IP and an external port

kubectl expose deployment test-nginx --port=80 --type=LoadBalancer

Check the status

kubectl get service test-nginx

Get the external address and port

minikube service test-nginx --url

Test

curl http://192.168.200.202:32171
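The port in the URL is the NodePort that Kubernetes assigned to the service. `kubectl get service` shows it in the PORT(S) column in the form `80:32171/TCP`. A small sketch for extracting the NodePort from such a value follows; the helper name is invented for illustration, and the input format is assumed to be exactly `port:nodePort/protocol`.

```shell
# Extract the NodePort from a PORT(S) value such as "80:32171/TCP".
# Prints nothing when the value has no NodePort part (e.g. "80/TCP").
# $1: the PORT(S) value (hypothetical helper, for illustration)
node_port_from_ports_column() {
    printf '%s\n' "$1" | sed -n 's/^[0-9][0-9]*:\([0-9][0-9]*\)\/.*$/\1/p'
}

# Example: node_port_from_ports_column "80:32171/TCP"
```

When a live cluster is available, `kubectl get service test-nginx -o jsonpath='{.spec.ports[0].nodePort}'` returns the same number without any text parsing.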

Delete the service and the deployment

kubectl delete services test-nginx

kubectl delete deployment test-nginx

Deploying with kubeadm

Preparing the environment

Run the following on all nodes.

echo "192.168.200.200 docker1">>/etc/hosts

echo "192.168.200.201 docker2">>/etc/hosts
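Running the two `echo ... >> /etc/hosts` commands more than once leaves duplicate lines behind. A possible idempotent variant is sketched below; `add_host_entry` is a hypothetical helper, and the hosts file is passed as a parameter only so that the function can be tried on a copy first.

```shell
# Append "IP NAME" to a hosts file unless NAME is already listed there.
# $1: hosts file, $2: IP address, $3: host name (hypothetical helper)
add_host_entry() {
    hosts_file=$1
    host_ip=$2
    host_name=$3
    # awk exits 0 only if some field after the address equals the host name.
    if ! awk -v name="$host_name" \
        '{ for (i = 2; i <= NF; i++) if ($i == name) found = 1 } END { exit !found }' \
        "$hosts_file" 2>/dev/null; then
        printf '%s %s\n' "$host_ip" "$host_name" >> "$hosts_file"
    fi
}

# Example:
#   add_host_entry /etc/hosts 192.168.200.200 docker1
#   add_host_entry /etc/hosts 192.168.200.201 docker2
```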

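Edit /etc/docker/daemon.json and add the following setting inside the top-level JSON object:

"exec-opts": ["native.cgroupdriver=systemd"]

# This makes systemd Docker's cgroup driver; otherwise kubeadm init prints a warning.

# On CentOS 7 the system's cgroup driver is systemd, while Docker's default cgroup driver is cgroupfs. Controlling resources with two cgroup drivers at once can lead to uneven resource allocation.

# Fix: change Docker's cgroup driver to systemd.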

systemctl daemon-reload

systemctl restart docker
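The `exec-opts` line is only a fragment; daemon.json has to be one complete JSON object. The sketch below writes a minimal file that contains nothing but this setting. It assumes there are no existing Docker settings worth keeping, because it overwrites the target file, and the function name is made up for illustration.

```shell
# Write a minimal daemon.json that selects the systemd cgroup driver.
# WARNING: overwrites the target file; merge by hand if it already has settings.
# $1: target path, defaults to /etc/docker/daemon.json (hypothetical helper)
write_docker_daemon_json() {
    daemon_json=${1:-/etc/docker/daemon.json}
    cat > "$daemon_json" <<'EOF'
{
  "exec-opts": ["native.cgroupdriver=systemd"]
}
EOF
}

# Example: write_docker_daemon_json && systemctl restart docker
```

After Docker restarts, `docker info` should list systemd as the cgroup driver.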

Disable swap

swapoff -a

vim /etc/fstab

#/dev/mapper/centos-swap swap swap defaults 0 0

# Alternatively: sed -i.bak '/swap/s/^/#/' /etc/fstab
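The sed one-liner puts a `#` in front of every line that mentions swap, including lines that are already commented out, so running it twice yields `##`. A slightly more careful variant is sketched here under the assumption that swap entries have the word `swap` as a whitespace-delimited field; the function name and the file parameter are illustrative only.

```shell
# Comment out active swap entries in an fstab-style file, keeping a .bak copy.
# Lines that already start with '#' are left alone, so reruns are harmless.
# $1: file to edit, defaults to /etc/fstab (hypothetical helper)
comment_out_swap() {
    fstab_file=${1:-/etc/fstab}
    sed -i.bak '/^[^#].*[[:space:]]swap[[:space:]]/s/^/#/' "$fstab_file"
}

# Example: swapoff -a && comment_out_swap
```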

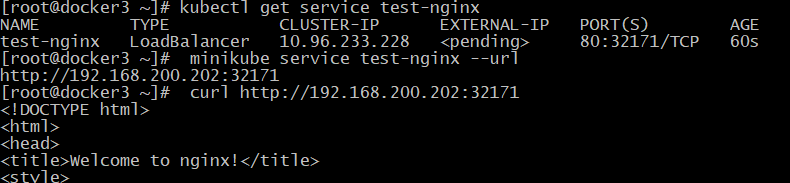

Enable net.bridge.bridge-nf-call-iptables

cat <<-EOF >> /etc/sysctl.d/99-sysctl.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

sysctl --system

cat /proc/sys/net/bridge/bridge-nf-call-iptables

cat /proc/sys/net/bridge/bridge-nf-call-ip6tables
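Both `cat` commands should print 1. The check can be wrapped in a small function, sketched below; the function name and the optional directory argument are assumptions that exist only to make the check easy to try out. If the /proc/sys/net/bridge directory does not exist at all, the br_netfilter kernel module is probably not loaded (`modprobe br_netfilter`).

```shell
# Return 0 only if both bridge netfilter switches are set to 1.
# $1: directory holding the two files, defaults to /proc/sys/net/bridge
bridge_nf_enabled() {
    bridge_dir=${1:-/proc/sys/net/bridge}
    for key in bridge-nf-call-iptables bridge-nf-call-ip6tables; do
        # A missing or unreadable file counts as "not enabled".
        [ "$(cat "$bridge_dir/$key" 2>/dev/null)" = "1" ] || return 1
    done
    return 0
}

# Example: bridge_nf_enabled && echo "bridge netfilter is enabled"
```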

Configure the yum repository

cat <<-EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

Switch SELinux to permissive mode

setenforce 0
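`setenforce 0` only lasts until the next reboot; the mode used at boot comes from /etc/selinux/config. A sketch for making the change persistent follows. The function name is hypothetical, and it assumes the file currently contains the line `SELINUX=enforcing`.

```shell
# Change SELINUX=enforcing to SELINUX=permissive, keeping a .bak copy.
# $1: config file, defaults to /etc/selinux/config (hypothetical helper)
make_selinux_permissive_persistent() {
    selinux_config=${1:-/etc/selinux/config}
    # Only the SELINUX= line is touched; SELINUXTYPE= and comments are kept.
    sed -i.bak 's/^SELINUX=enforcing[[:space:]]*$/SELINUX=permissive/' "$selinux_config"
}

# Example: setenforce 0 && make_selinux_permissive_persistent
```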

Install the kube tools and enable the kubelet service at boot

yum list kubelet kubeadm kubectl --showduplicates

yum install kubectl-1.17.2-0.x86_64 \
kubeadm-1.17.2-0.x86_64 \
kubelet-1.17.2-0.x86_64 -y

systemctl enable kubelet # only enable it; do not start it yet

Configuring the master node

Run the following on the docker1 node.

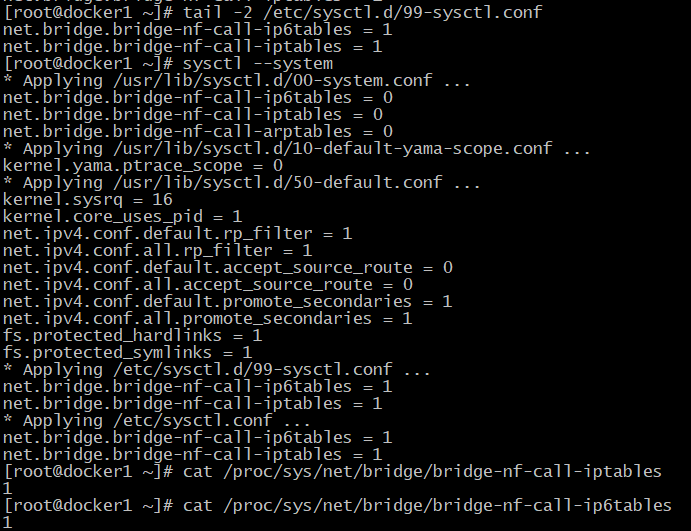

Initialize

kubeadm init \
--apiserver-advertise-address=192.168.200.200 \
--pod-network-cidr=10.244.0.0/16 \
--image-repository registry.aliyuncs.com/google_containers \
--kubernetes-version=1.17.2

Depending on the configuration, initialization may time out. If it does, run kubeadm reset and then run the command above again.

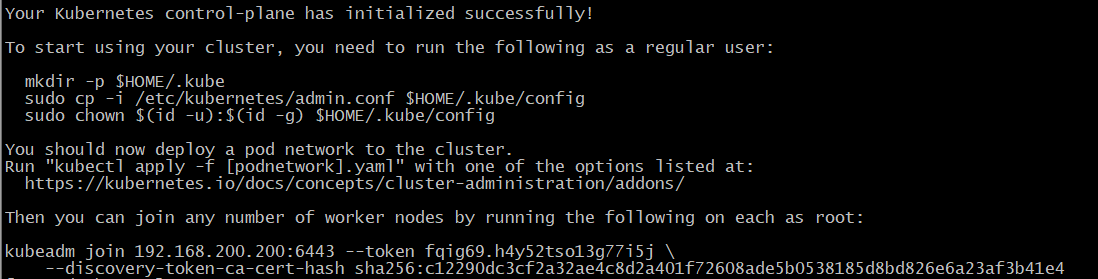

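The output is very useful. In particular, save the command containing the token; the worker nodes need it later to join the cluster:

kubeadm join 192.168.200.200:6443 \
--token fqig69.h4y52tso13g77i5j \
--discovery-token-ca-cert-hash sha256:c12290dc3cf2a32ae4c8d2a401f72608ade5b0538185d8bd826e6a23af3b41e4

Set up the environment

Follow the instructions printed in the command output shown above: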

mkdir -p $HOME/.kube

cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

chown $(id -u):$(id -g) $HOME/.kube/config

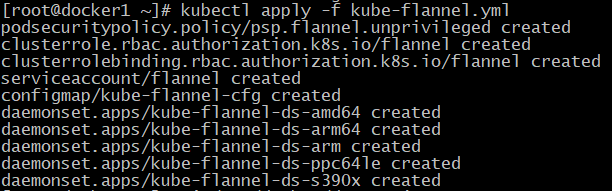

Configuring the flannel network for Pods

Project: https://github.com/coreos/flannel/releases

wget https://github.com/coreos/flannel/releases/download/v0.12.0/flanneld-v0.12.0-amd64.docker

docker load -i flanneld-v0.12.0-amd64.docker

# Alternative for users in mainland China:

#docker pull quay-mirror.qiniu.com/coreos/flannel:v0.12.0-amd64

#docker tag quay-mirror.qiniu.com/coreos/flannel:v0.12.0-amd64 \
#quay.io/coreos/flannel:v0.12.0-amd64

#docker rmi quay-mirror.qiniu.com/coreos/flannel:v0.12.0-amd64

kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

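This fails with: The connection to the server localhost:8080 was refused - did you specify the right host or port?

Reference: https://www.cnblogs.com/taoweizhong/p/11545953.html

Cause: kubectl in the current shell has not been pointed at the cluster because no kubeconfig was set after initialization, so it falls back to localhost:8080.

Fix: run the following command

export KUBECONFIG=/etc/kubernetes/admin.conf

This file is the administrator kubeconfig generated by kubeadm init; it holds the API server address and the credentials that kubectl needs.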

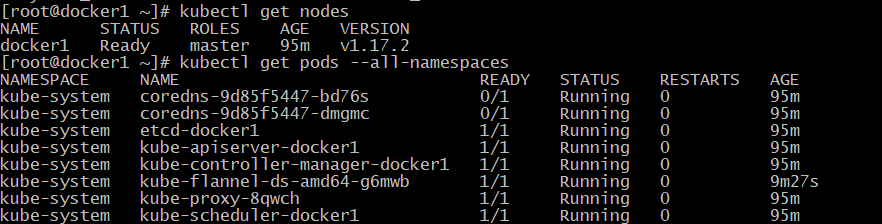

Check the status of the master node

kubectl get nodes

kubectl get pods --all-namespaces
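A node normally stays NotReady until the pod network (flannel here) is up, so it helps to list only the nodes that are not yet ready. The filter below is a sketch that reads the default table printed by `kubectl get nodes` on stdin; the function name is made up, and a status such as `Ready,SchedulingDisabled` is deliberately reported as well because it is not exactly `Ready`.

```shell
# Read `kubectl get nodes` output on stdin and print the names of all
# nodes whose STATUS column is not exactly "Ready".
not_ready_nodes() {
    # NR > 1 skips the header line; $1 is NAME and $2 is STATUS.
    awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Example: kubectl get nodes | not_ready_nodes
```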

Configuring the worker node

Run the following on docker2.

Load the flanneld image

scp root@192.168.200.200:/root/flanneld-v0.12.0-amd64.docker .

docker load -i flanneld-v0.12.0-amd64.docker

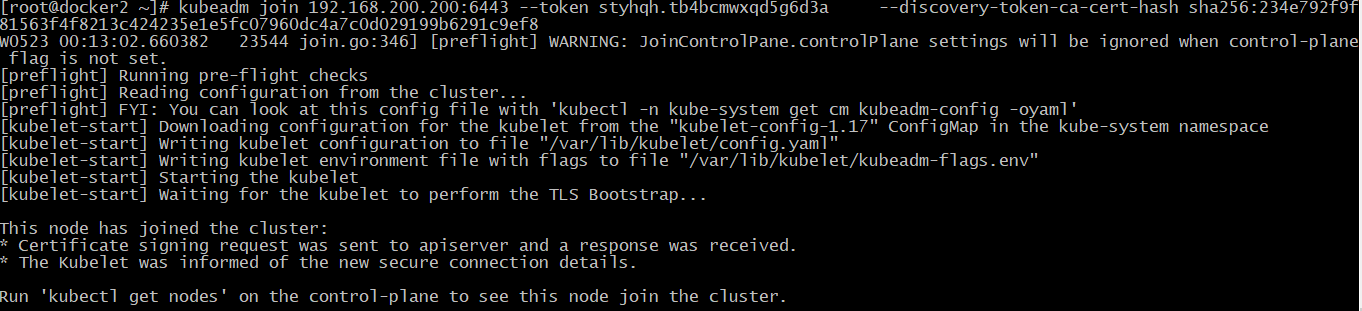

Join the cluster

kubeadm join 192.168.200.200:6443 \
--token fqig69.h4y52tso13g77i5j \
--discovery-token-ca-cert-hash sha256:c12290dc3cf2a32ae4c8d2a401f72608ade5b0538185d8bd826e6a23af3b41e4

error execution phase preflight: couldn't validate the identity of the API Server: abort connecting to API servers after timeout of 5m0s

According to posts found online, the cause is that the token on the master node has expired.

Fix: create a new token

Method 1

# Get a new token

kubeadm token create

# Get the discovery-token-ca-cert-hash

openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt | openssl rsa -pubin -outform der 2>/dev/null | openssl dgst -sha256 -hex | sed 's/^.* //'

Method 2

kubeadm token create --print-join-command

Then join again with the new values:

kubeadm join 192.168.200.200:6443 \
--token {new token} \
--discovery-token-ca-cert-hash sha256:{new token-ca-cert-hash}
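Since a failed join only reports an error after a five-minute timeout, a quick format check of the two values before running the command can catch copy-and-paste mistakes. This is a sketch, not something kubeadm provides: the function name is hypothetical, and it checks only the shape of the values (a bootstrap token is 6 characters, a dot, then 16 characters; the hash is `sha256:` followed by 64 hex digits), not whether the token is still valid.

```shell
# Return 0 if the token and CA cert hash look well-formed.
# $1: bootstrap token, $2: discovery-token-ca-cert-hash value
valid_join_args() {
    printf '%s\n' "$1" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$' || return 1
    printf '%s\n' "$2" | grep -Eq '^sha256:[a-f0-9]{64}$' || return 1
}

# Example:
#   valid_join_args "$token" "sha256:$hash" || echo "check the join values"
```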

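This still did not work and failed with the same error.

ss -antulp | grep :6443 showed that the port was listening normally.

It turned out that the firewall on the master node had not opened the port: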

firewall-cmd --add-port=6443/tcp --permanent

firewall-cmd --reload

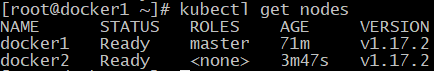

Check from the master node

kubectl get nodes

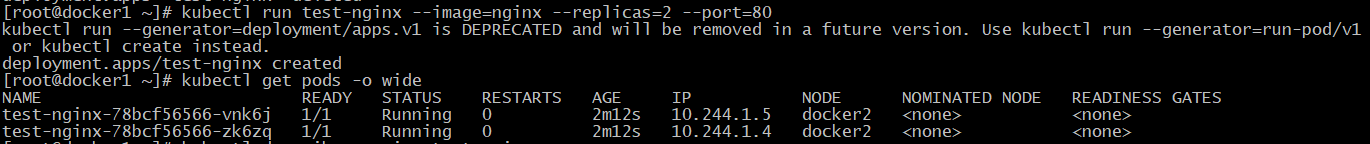

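Create the pods (with kubectl 1.17, as installed here, kubectl run with --replicas creates a Deployment; from 1.18 on, kubectl run only creates a single Pod)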

kubectl run test-nginx --image=nginx --replicas=2 --port=80

kubectl get pods -o wide

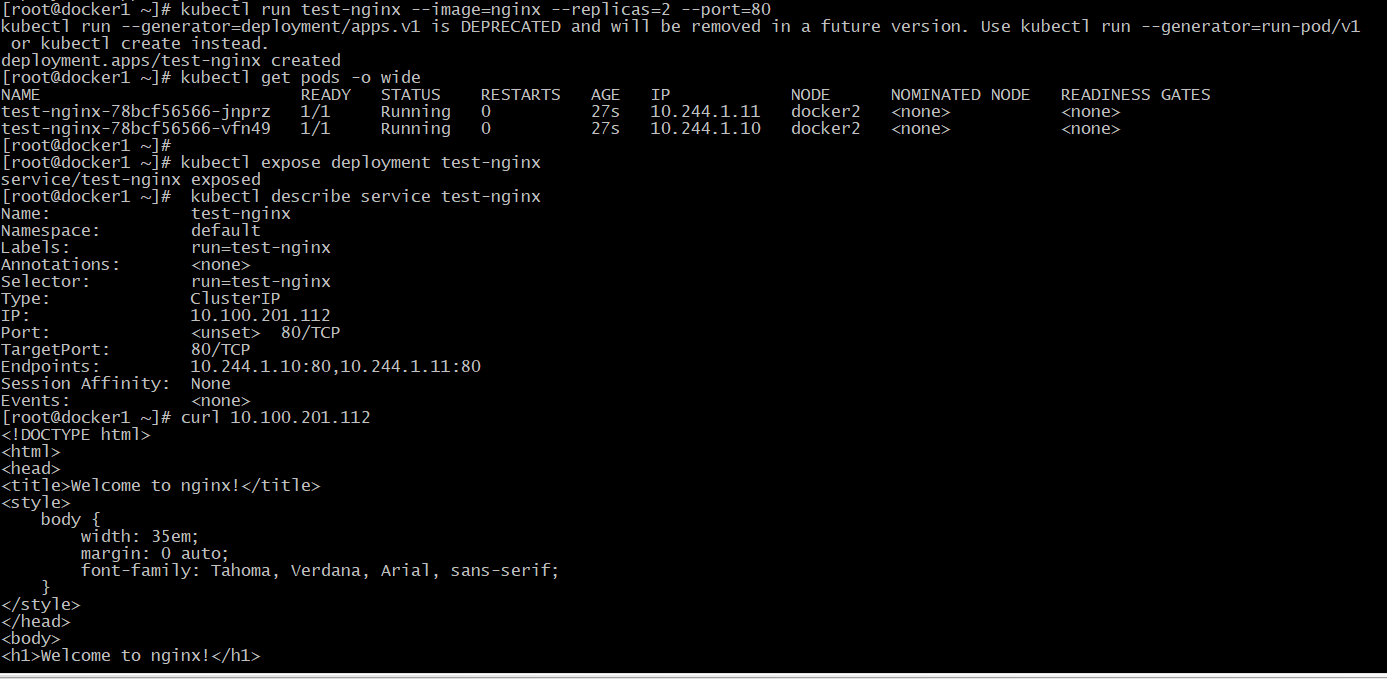

Create the service

kubectl expose deployment test-nginx

kubectl describe service test-nginx

Test

curl {IP:80}

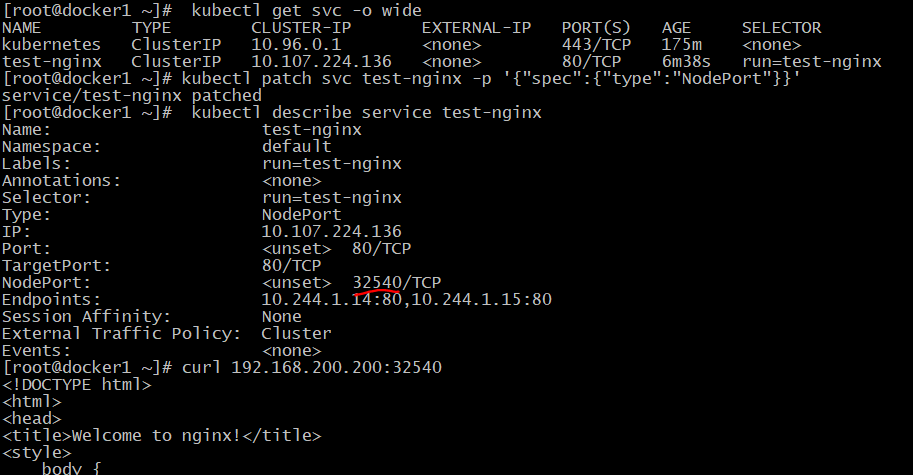

Allowing external access

Check the ports

kubectl get svc -o wide

Expose the port

kubectl patch svc test-nginx -p '{"spec":{"type":"NodePort"}}'

Test

curl {external-ip:port}

Other issues

Firewall settings (I turned the firewall off instead, so these are untested)

https://kubernetes.io/docs/setup/production-environment/tools/kubeadm/install-kubeadm/

Control-plane node(s)

| Protocol | Direction | Port Range | Purpose | Used By |
|---|---|---|---|---|
| TCP | Inbound | 6443* | Kubernetes API server | All |
| TCP | Inbound | 2379-2380 | etcd server client API | kube-apiserver, etcd |
| TCP | Inbound | 10250 | Kubelet API | Self, Control plane |
| TCP | Inbound | 10251 | kube-scheduler | Self |
| TCP | Inbound | 10252 | kube-controller-manager | Self |

Worker node(s)

| Protocol | Direction | Port Range | Purpose | Used By |
|---|---|---|---|---|
| TCP | Inbound | 10250 | Kubelet API | Self, Control plane |
| TCP | Inbound | 30000-32767 | NodePort Services† | All |

On the control-plane node:

firewall-cmd --add-port=6443/tcp --permanent

firewall-cmd --add-port=2379-2380/tcp --permanent

firewall-cmd --add-port=10250-10252/tcp --permanent

firewall-cmd --add-port=30000-32767/tcp --permanent # default port range for exposed (NodePort) services

firewall-cmd --reload

firewall-cmd --list-all

On the worker nodes:

firewall-cmd --add-port=10250/tcp --permanent

firewall-cmd --add-port=30000-32767/tcp --permanent

firewall-cmd --reload

firewall-cmd --list-all
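The port lists from the two tables can also be kept in one place and turned into commands per node role. The function below is a dry-run sketch: it only prints the firewall-cmd lines, nothing is executed, and the function name and role names are assumptions made for illustration. It follows the tables, so the NodePort range appears only for workers.

```shell
# Print the firewall-cmd commands for one node role without running them.
# $1: "control-plane" or "worker" (hypothetical role names)
firewall_commands_for_role() {
    case "$1" in
        control-plane) role_ports="6443/tcp 2379-2380/tcp 10250-10252/tcp" ;;
        worker)        role_ports="10250/tcp 30000-32767/tcp" ;;
        *) echo "unknown role: $1" >&2; return 1 ;;
    esac
    for port in $role_ports; do
        echo "firewall-cmd --add-port=$port --permanent"
    done
    echo "firewall-cmd --reload"
}

# Example: firewall_commands_for_role worker        (review the output)
#          firewall_commands_for_role worker | sh   (apply it as root)
```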

Access through the master is slow, access through the worker is fast

[root@docker1 ~]# kubectl describe service test-nginx

Name: test-nginx

Namespace: default

Labels: run=test-nginx

Annotations: <none>

Selector: run=test-nginx

Type: NodePort

IP: 10.107.224.136

Port: <unset> 80/TCP

TargetPort: 80/TCP

NodePort: <unset> 32540/TCP

Endpoints: 10.244.1.14:80,10.244.1.15:80

Session Affinity: None

External Traffic Policy: Cluster

Events: <none>

# curl master:NodePort is slow

curl docker1:32540

# curl worker:NodePort is fast; the test-nginx pods run on the worker

curl docker2:32540

# curl the service IP is slow

curl 10.107.224.136:80

# curl the pod IPs assigned by flannel is fast

curl 10.244.1.14:80

curl 10.244.1.15:80

Source: https://blog.csdn.net/comeyes/article/details/106123409

Root cause: the flannel network configuration enables checksumming on the sending side, but flannel relies on checksum offloading. It does not compute the checksum itself and leaves that to the NIC hardware, in order to save CPU. Normally that is fine, but beneath flannel there is ens33, which is the real NIC. From the point of view of ens33, what flannel hands over is just payload. Will ens33 compute the checksum of the upper-layer packet and write it into the UDP header? I suspect not; if it does, the offloading mechanism between flannel's virtual network and the hardware NIC needs further study.

Source: https://t.du9l.com/2020/03/kubernetes-flannel-udp-packets-dropped-for-wrong-checksum-workaround/

A little more Googling shows that this could be caused by "Checksum offloading". That means if the kernel wants to send a packet out on a physical ethernet card, it can leave the checksum calculation to the card hardware. In this case, if you capture the packet from kernel, it will show a wrong checksum, since it has yet to be calculated; but, the same packet captured on the receiving end will have a different and correct checksum, calculated by the sender's network card hardware.

You can create the service /etc/systemd/system/xiaodu-flannel-tx-off.service with the following commands, then enable and start it. (The original post also links to a downloadable copy of the service file.)

sudo tee /etc/systemd/system/xiaodu-flannel-tx-off.service > /dev/null << EOF
[Unit]
Description=Turn off checksum offload on flannel.1
After=sys-devices-virtual-net-flannel.1.device

[Install]
WantedBy=sys-devices-virtual-net-flannel.1.device

[Service]
Type=oneshot
ExecStart=/sbin/ethtool -K flannel.1 tx-checksum-ip-generic off
EOF
sudo systemctl enable xiaodu-flannel-tx-off
sudo systemctl start xiaodu-flannel-tx-off

For systemd >= 245, a TransmitChecksumOffload parameter was added to *.link units. You can read the documentation and try it yourself, or simply use the service above.

If you want to learn more about how Flannel and Kubernetes networking work, I highly recommend reading this blog post, which demonstrates step by step how a packet is sent from one Pod to another.

Turn checksum offload off temporarily

sudo ethtool -K flannel.1 tx-checksum-ip-generic off
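To confirm that the setting took effect, the current state can be read back with `ethtool -k` (lowercase k), which lists the offload features of an interface. The helper below is a sketch with a made-up name; it assumes the feature appears on a line of the form `tx-checksum-ip-generic: off`.

```shell
# Read `ethtool -k <interface>` output on stdin and print the state
# ("on" or "off") of the tx-checksum-ip-generic feature.
tx_checksum_state() {
    # Default awk field splitting ignores the leading indentation.
    awk '$1 == "tx-checksum-ip-generic:" { print $2 }'
}

# Example: ethtool -k flannel.1 | tx_checksum_state
```

The state can revert when the node reboots or the flannel.1 interface is recreated, so this check is worth repeating after such events.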
