Integrating Message Middleware with SpringBoot (Kafka)

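This article shows how to integrate Kafka with SpringBoot. It covers setting up a SpringBoot project, installing and running Kafka and ZooKeeper, and finally connecting the two:

- How to set up a SpringBoot project and its environment

- Downloading Kafka and ZooKeeper and installing them on a server

- How to integrate Kafka with SpringBoot for messaging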

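Downloading the IDEA development tool

1. IDEA Ultimate edition share (Baidu Netdisk)

Address: http://pan.baidu.com/s/1sl38o5f

Contents: the IDEA installer, 注册码破解.txt, and files for setting up a local licenseServer

2. How to register using the licenseServer crack

a.

b. Choose the 32-bit or 64-bit file to match your machine

c. After running the crack file, open the installed IDEA and choose licenseServer

In License Server, enter 127.0.0.7:1071

d. For some users the crack is complete at this point.

e. On some machines the crack file has to be running every time IDEA starts (a launcher script can be used for this)

**Note: delete the // comments below before use**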

echo start //start

echo IDEA_LINCENSE START //start the crack file: bring up the local service

//launch: points at the crack file

start /d "E:\IDEA\activate" IntelliJIDEALicenseServer_windows_amd64.exe

echo IDEA START //start IDEA

//launch: points at the IDEA executable

start /d "E:\IDEA\IntelliJ IDEA 2016.3.1\bin" idea64.exe

echo IDEA_LICENSE opened!

//keep the local service alive for about 4 more seconds (IDEA may not start that quickly)

ping 127.0.0.1 -n 4

//stop the crack file to shut down the service

taskkill /f /im IntelliJIDEALicenseServer_windows_amd64.exe

echo Closed after a 4-second delay!

goto openie

exit //exit

Creating the SpringBoot project

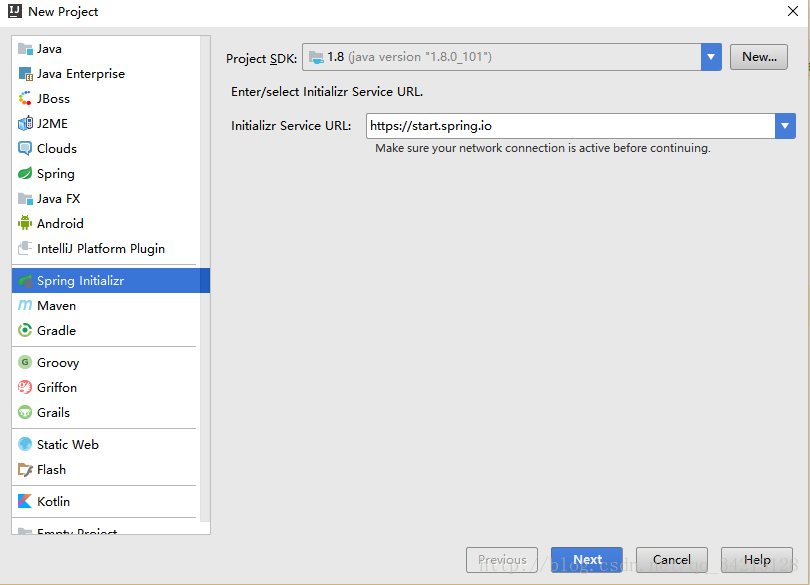

1. Open the installed IDEA (a JDK is required, so install the JDK first)

2. Click Next and fill in the project details

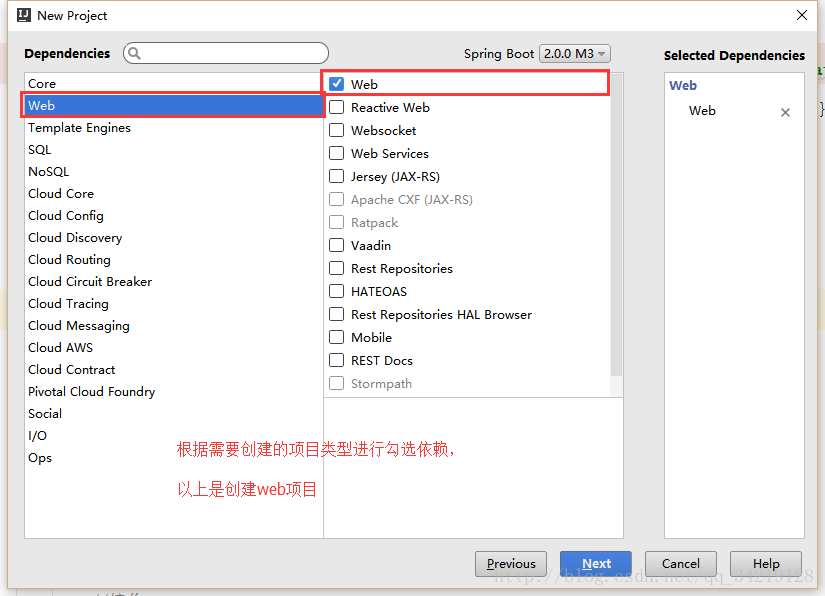

3. Select the dependencies to add

Note: at this point a simple SpringBoot project has been created.

Downloading Kafka and ZooKeeper

1. Download Kafka

Download URL: http://kafka.apache.org/downloads

2. Download ZooKeeper

Download URL: http://apache.fayea.com/zookeeper/

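Installing Kafka and ZooKeeper on the server

Installation steps

a. Extract the Kafka archive: tar -zxvf kafka_2.11-0.11.0.0.tgz

b. Enter the extracted Kafka directory: cd kafka_2.11-0.11.0.0

c. Install ZooKeeper: http://blog.csdn.net/lk10207160511/article/details/50526404

d. Start ZooKeeper: bin/zookeeper-server-start.sh config/zookeeper.properties

e. Start Kafka: bin/kafka-server-start.sh config/server.properties

f. Run Kafka in the background: nohup bin/kafka-server-start.sh config/server.properties &

Note: step e starts Kafka in the foreground, so Kafka stops as soon as the terminal window is closed. Step f runs it in the background, so it keeps running after the window is closed.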
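After starting both services, it can help to confirm that something is actually listening before moving on to the SpringBoot side. The following is a minimal sketch, not part of the original setup: `BrokerPortCheck` is a hypothetical helper name, and it assumes the default ports (9092 for Kafka, 2181 for ZooKeeper). A successful TCP connect only shows that a process is listening on the port, not that the broker is healthy.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

/** Hypothetical helper: checks whether a process is listening on host:port. */
class BrokerPortCheck {

    /** Returns true if a TCP connection to host:port succeeds within the timeout. */
    static boolean isListening(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            // Connection refused, timed out, or host unreachable
            return false;
        }
    }

    public static void main(String[] args) {
        // Assumed defaults: localhost and Kafka's default port 9092
        String host = args.length > 0 ? args[0] : "localhost";
        int port = args.length > 1 ? Integer.parseInt(args[1]) : 9092;
        System.out.println(host + ":" + port + " listening: " + isListening(host, port, 2000));
    }
}
```

Running it with the arguments `192.168.6.181 9092` would check the broker address used later in this article; passing port 2181 checks ZooKeeper instead.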

Integrating Kafka with SpringBoot

1. Add the Spring Kafka dependency

<dependency>

<groupId>org.springframework.kafka</groupId>

<artifactId>spring-kafka</artifactId>

<version>1.1.1.RELEASE</version>

</dependency>

2. Write the consumer configuration class

import org.apache.kafka.clients.consumer.ConsumerConfig;
import org.apache.kafka.common.serialization.ByteArrayDeserializer;
import org.apache.kafka.common.serialization.StringDeserializer;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;
import org.springframework.kafka.annotation.EnableKafka;
import org.springframework.kafka.config.ConcurrentKafkaListenerContainerFactory;
import org.springframework.kafka.config.KafkaListenerContainerFactory;
import org.springframework.kafka.core.ConsumerFactory;
import org.springframework.kafka.core.DefaultKafkaConsumerFactory;
import org.springframework.kafka.listener.ConcurrentMessageListenerContainer;

import java.util.HashMap;
import java.util.Map;

@Configuration
@EnableKafka
public class KafkaConsumerConfig {

    /**
     * Listener container factory used by @KafkaListener methods.
     * Values are byte[] to match the ByteArrayDeserializer configured below.
     */
    @Bean
    public KafkaListenerContainerFactory<ConcurrentMessageListenerContainer<String, byte[]>> kafkaListenerContainerFactory() {
        ConcurrentKafkaListenerContainerFactory<String, byte[]> factory = new ConcurrentKafkaListenerContainerFactory<>();
        factory.setConsumerFactory(consumerFactory());
        factory.setConcurrency(3); // number of consumer threads
        factory.getContainerProperties().setPollTimeout(3000); // poll timeout in milliseconds
        return factory;
    }

    /**
     * Consumer factory.
     */
    public ConsumerFactory<String, byte[]> consumerFactory() {
        return new DefaultKafkaConsumerFactory<>(consumerConfigs());
    }

    /**
     * Consumer configuration.
     */
    public Map<String, Object> consumerConfigs() {
        Map<String, Object> propsMap = new HashMap<>();
        propsMap.put(ConsumerConfig.BOOTSTRAP_SERVERS_CONFIG, "192.168.6.181:9092"); // broker address:port
        propsMap.put(ConsumerConfig.ENABLE_AUTO_COMMIT_CONFIG, false); // offsets are committed by the listener container
        propsMap.put(ConsumerConfig.AUTO_COMMIT_INTERVAL_MS_CONFIG, "100"); // only takes effect when auto commit is enabled
        propsMap.put(ConsumerConfig.SESSION_TIMEOUT_MS_CONFIG, "15000"); // session timeout in milliseconds
        propsMap.put(ConsumerConfig.KEY_DESERIALIZER_CLASS_CONFIG, StringDeserializer.class); // key deserializer
        propsMap.put(ConsumerConfig.VALUE_DESERIALIZER_CLASS_CONFIG, ByteArrayDeserializer.class); // value deserializer
        propsMap.put(ConsumerConfig.GROUP_ID_CONFIG, "test-platform"); // consumer group
        propsMap.put(ConsumerConfig.AUTO_OFFSET_RESET_CONFIG, "latest"); // start from the newest messages when no offset is stored
        return propsMap;
    }

    /**
     * Registers the listener class from step 3 as a bean.
     */
    @Bean
    public Listener listener() {
        return new Listener();
    }
}
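The consumer configuration above hardcodes the broker address and group id. As a hedged alternative, the sketch below builds the same settings from environment variables, using the plain Kafka property names that the `ConsumerConfig` constants stand for. `ConsumerSettings` and the variable names `KAFKA_BOOTSTRAP_SERVERS` and `KAFKA_GROUP_ID` are assumptions made for illustration; the fallback values are the ones used in this article.

```java
import java.util.HashMap;
import java.util.Map;

/** Hypothetical helper: builds Kafka consumer properties from environment variables. */
class ConsumerSettings {

    /**
     * Reads the broker address and group id from the given environment map,
     * falling back to the values used in this article when a variable is absent.
     */
    static Map<String, Object> fromEnvironment(Map<String, String> env) {
        Map<String, Object> props = new HashMap<>();
        // KAFKA_BOOTSTRAP_SERVERS and KAFKA_GROUP_ID are assumed names, not a Kafka convention
        props.put("bootstrap.servers", env.getOrDefault("KAFKA_BOOTSTRAP_SERVERS", "192.168.6.181:9092"));
        props.put("group.id", env.getOrDefault("KAFKA_GROUP_ID", "test-platform"));
        props.put("enable.auto.commit", false);
        props.put("session.timeout.ms", "15000");
        props.put("auto.offset.reset", "latest");
        // Kafka accepts deserializers as fully qualified class names
        props.put("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");
        props.put("value.deserializer", "org.apache.kafka.common.serialization.ByteArrayDeserializer");
        return props;
    }

    public static void main(String[] args) {
        // Print the effective settings for the current process environment
        fromEnvironment(System.getenv()).forEach((key, value) -> System.out.println(key + " = " + value));
    }
}
```

In the configuration class, the resulting map could be passed to `new DefaultKafkaConsumerFactory<>(ConsumerSettings.fromEnvironment(System.getenv()))` in place of `consumerConfigs()`, so the same build can point at a different broker without a code change.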

3. Write the consumer listener class

import org.apache.log4j.Logger;
import org.springframework.kafka.annotation.KafkaListener;

import java.io.IOException;

/**
 * Created by HayLeung on 2017/4/20.
 * Topic listener.
 */
public class Listener {

    // Logger
    private final Logger logger = Logger.getLogger(Listener.class);

    /**
     * Listens to topics via the annotation; separate multiple topics with commas.
     * @param message the raw payload as byte[], matching the ByteArrayDeserializer
     */
    @KafkaListener(topics = {"tp_paa", "tp_dm", "tp_info", "tp_phone", "tp_vc", "tp_vm"})
    public void receiveVehicleInfo(byte[] message) throws IOException {
        // Handle the received message here
    }

}
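Because the listener receives a raw `byte[]`, the first step in the handler is usually to decode it. The sketch below assumes the producers send UTF-8 text, which the original article does not state; `PayloadDecoder` is a hypothetical helper name.

```java
import java.nio.charset.StandardCharsets;

/** Hypothetical helper: turns a raw Kafka payload into text. */
class PayloadDecoder {

    /**
     * Decodes the payload as UTF-8 (an assumption about the producer side).
     * A null payload, which Kafka allows, is mapped to an empty string.
     */
    static String decodeUtf8(byte[] payload) {
        if (payload == null) {
            return "";
        }
        return new String(payload, StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        byte[] payload = "{\"speed\":60}".getBytes(StandardCharsets.UTF_8);
        System.out.println(decodeUtf8(payload));
    }
}
```

Inside `receiveVehicleInfo`, a call such as `String text = PayloadDecoder.decodeUtf8(message);` would give the handler a string to parse or log.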

4. Write the producer configuration class

import java.util.HashMap;
import java.util.Map;

import org.apache.kafka.clients.producer.ProducerConfig;
import org.apache.kafka.common.serialization.ByteArraySerializer;
import org.apache.kafka.common.serialization.StringSerializer;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;
import org.springframework.kafka.core.DefaultKafkaProducerFactory;
import org.springframework.kafka.core.KafkaTemplate;
import org.springframework.kafka.core.ProducerFactory;

@Configuration
public class KafkaProducerConfig {

    /**
     * Producer configuration.
     */
    public static Map<String, Object> producerConfigs() {
        Map<String, Object> props = new HashMap<>();
        props.put(ProducerConfig.BOOTSTRAP_SERVERS_CONFIG, "192.168.6.181:9092"); // broker address:port
        props.put(ProducerConfig.RETRIES_CONFIG, 0); // do not retry failed sends
        props.put(ProducerConfig.BATCH_SIZE_CONFIG, 4096); // batch size in bytes
        props.put(ProducerConfig.LINGER_MS_CONFIG, 1); // wait up to 1 ms to fill a batch
        props.put(ProducerConfig.BUFFER_MEMORY_CONFIG, 40960); // send buffer size in bytes
        props.put(ProducerConfig.KEY_SERIALIZER_CLASS_CONFIG, StringSerializer.class); // key serializer
        props.put(ProducerConfig.VALUE_SERIALIZER_CLASS_CONFIG, ByteArraySerializer.class); // value serializer
        return props;
    }

    /**
     * Producer factory.
     */
    public static ProducerFactory<String, byte[]> producerFactory() {
        return new DefaultKafkaProducerFactory<>(producerConfigs());
    }

    /**
     * Template used by application code to send messages.
     */
    @Bean
    public static KafkaTemplate<String, byte[]> kafkaTemplate() {
        return new KafkaTemplate<>(producerFactory());
    }

}

The configuration is now complete. You can use these classes to connect to Kafka and try producing and consuming topic messages yourself.
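To send a message with this setup, application code encodes the payload to `byte[]` and hands it to the template. The following is a minimal sketch under stated assumptions: `BytesSender` and `TextPublisher` are hypothetical names introduced so the example runs without a broker, and UTF-8 is an assumed encoding. In the Spring application the sender would delegate to the `KafkaTemplate<String, byte[]>` bean through its `send(topic, key, value)` method.

```java
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

/** Hypothetical abstraction over "send these bytes to a topic". */
interface BytesSender {
    void send(String topic, String key, byte[] value);
}

/** Hypothetical publisher: encodes text as UTF-8 and passes it to the sender. */
class TextPublisher {
    private final BytesSender sender;

    TextPublisher(BytesSender sender) {
        this.sender = sender;
    }

    void publish(String topic, String key, String text) {
        sender.send(topic, key, text.getBytes(StandardCharsets.UTF_8));
    }
}

class TextPublisherDemo {
    public static void main(String[] args) {
        // In the Spring application this would be:
        //   new TextPublisher((t, k, v) -> kafkaTemplate.send(t, k, v))
        // Here a stand-in sender records what would have been sent.
        List<String> sent = new ArrayList<>();
        TextPublisher publisher = new TextPublisher(
                (topic, key, value) -> sent.add(topic + "/" + key + "/" + value.length + " bytes"));

        // "tp_info" is one of the topics the listener subscribes to; the key is made up
        publisher.publish("tp_info", "vehicle-1", "hello");
        System.out.println(sent);
    }
}
```

Keeping the encoding in one place means the producer side and the listener's decoding step agree on the format of the bytes.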
