一、src

用excel做配置文件,配置flg的信息,然后读取,生成flg文件,然后从hive表查,生成dat.gz文件并且编码为GBK。

入参有selectList,whereFilter

二、知技

1. rdd保存为gz文件

rdd的saveAsTextFile方法只能指定目录且编码不能改,TextOutputFormat中编码是写死的utf-8,当前需求是要在该目录下放多个日期的的文件,所以要先保存到一个临时文件,然后合并复制到指定目录下。亲测,gz文件是可以多个合并为1个的。

spark直接把rdd写为gz,读直接用textfile()即可,自动解压

rdd.saveAsTextFile(结果目录,classOf[GzipCodec])

2. rdd通过自定义OutputFormat保存为gz且编码改为GBK

3. df保存为gz文件

{1} 用如下代码构造schema

此处要注意:new 类调用的是构造方法,只有直接用类()调用的才是apply()方法。StructType的apply方法可以传入StructFiled的数组,而filter刚好返回的是Array[StructFiled]

val schema = StructType(df.schema.filter(field => {

field.name == ""

}))

{2} 然后把df转成rdd然后在map中构造Row(arr:_*)构造RDD[Row],注意Row中的类型要跟schema的类型一致,比较麻烦

SparkSession spark = SparkSession

.builder()

.appName("spark-job")

.getOrCreate();

RuntimeConfig conf = spark.conf();

// text compress

conf.set("mapreduce.output.fileoutputformat.compress", "true");

conf.set("mapreduce.output.fileoutputformat.compress.type", SequenceFile.CompressionType.BLOCK.toString());

conf.set("mapreduce.output.fileoutputformat.compress.codec", "org.apache.hadoop.io.compress.GzipCodec");

conf.set("mapreduce.map.output.compress", "true");

conf.set("mapreduce.map.output.compress.codec", "org.apache.hadoop.io.compress.GzipCodec");

spark

.read()

.parquet("hdfs://aaaa")

.select("abc")

.write()

.format("text")

.mode(SaveMode.Overwrite)

.save("hdfs://bbbb");

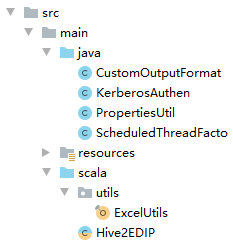

三、code

在公司环境已经验证过,成功运行,这里把一些繁琐步骤省略了

1. KerberosAuthen

import org.apache.commons.lang.StringUtils;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.security.UserGroupInformation;

import java.io.IOException;

import java.util.concurrent.Executors;

import java.util.concurrent.ScheduledExecutorService;

import java.util.concurrent.TimeUnit;

public class KerberosAuthen {

private static ScheduledExecutorService scheduledExecutor = Executors.newScheduledThreadPool(1, new ScheduledThreadFactory());

public static void kerberosAuthen() {

krbAuth();

/*

每5分钟执行1次向kerberos进行认证的方法

*/

scheduledExecutor.scheduleAtFixedRate(() -> krbAuth(), 5L, 5L, TimeUnit.MINUTES);

}

/**

* 向kerberos认证

*/

private static void krbAuth() {

String krbConf = PropertiesUtil.getRequiredStringProperty("krb.conf");

String krbKeyTab = PropertiesUtil.getRequiredStringProperty("hive.krb.key");

String krbPrincipal = PropertiesUtil.getRequiredStringProperty("hive.krb.principal");

if (StringUtils.isEmpty(krbConf) || StringUtils.isEmpty(krbKeyTab) || StringUtils.isEmpty(krbPrincipal)) {

throw new RuntimeException("===============================kerberos认证文件不存在===============================");

}

//java程序本身自带kerberos客户端,需要krbConf,可以进行当前结点的krb认证

System.setProperty("java.security.krb5.conf", krbConf);

Configuration configuration = new Configuration();

configuration.set("hadoop.security.authentication", "kerberos");

//指定keytab和principal

configuration.set("keytab.file", krbKeyTab);

configuration.setBoolean("hadoop.security,authorization", true);

configuration.set("kerberos.principal", krbPrincipal);

try {

UserGroupInformation.setConfiguration(configuration);

UserGroupInformation.loginUserFromKeytab(krbPrincipal, krbKeyTab);

} catch (IOException ioException) {

System.err.println(ioException.getMessage());

}

}

}

2. PropertiesUtil

import org.apache.commons.lang.StringUtils;

import org.apache.commons.logging.Log;

import org.apache.commons.logging.LogFactory;

import java.io.IOException;

import java.io.InputStream;

import java.util.Properties;

public class PropertiesUtil {

private static Log log = LogFactory.getLog(PropertiesUtil.class);

private static Properties props = new Properties();

private static String propertyFileName = "/system.properties";

static {

try {

if (props.size() == 0) {

log.info("Start read the constv.properties file.");

InputStream input = PropertiesUtil.class.getResourceAsStream(propertyFileName);

props.load(input);

input.close();

}

} catch (IOException ioException) {

log.error(ioException.getMessage());

log.debug(ioException);

}

}

public static String getRequiredStringProperty(String propertyName) {

String str = getStringProperty(propertyName, null);

if (StringUtils.isBlank(str)) {

throw new RuntimeException(propertyName + " not in property file " + propertyFileName);

}

return str;

}

public static String getStringProperty(String propertyName, String defaultValue) {

if (props.containsKey(propertyName) == true) {

return (String) props.get(propertyName);

}

return defaultValue;

}

}

3. ScheduledThreadFactory

import java.util.concurrent.ThreadFactory;

import java.util.concurrent.atomic.AtomicInteger;

public class ScheduledThreadFactory implements ThreadFactory {

private static final AtomicInteger poolNumber = new AtomicInteger(1);

private final ThreadGroup group;

private final AtomicInteger threadNumber = new AtomicInteger(1);

private final String namePrefix;

public ScheduledThreadFactory() {

SecurityManager s = System.getSecurityManager();

group = (s != null) ? s.getThreadGroup() : Thread.currentThread().getThreadGroup();

namePrefix = "Scheduled Pool-" + poolNumber.getAndIncrement() + "-Thread-";

}

@Override

public Thread newThread(Runnable r) {

Thread t = new Thread(group, r, namePrefix + threadNumber.getAndIncrement());

/*

设置为守护进程,所在的jar执行完就推出,如果不是守护进程,linux运行时,即使业务进程执行完,这个进程也不会关

*/

t.setDaemon(true);

if (t.getPriority() != Thread.MAX_PRIORITY) {

t.setPriority(Thread.NORM_PRIORITY);

}

return t;

}

}

4. CustomOutputFormat

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.FSDataOutputStream;

import org.apache.hadoop.fs.FileSystem;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.NullWritable;

import org.apache.hadoop.io.compress.CompressionCodec;

import org.apache.hadoop.io.compress.GzipCodec;

import org.apache.hadoop.mapreduce.RecordWriter;

import org.apache.hadoop.mapreduce.TaskAttemptContext;

import org.apache.hadoop.mapreduce.TaskID;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import org.apache.hadoop.util.ReflectionUtils;

import java.io.DataOutputStream;

import java.io.IOException;

import java.io.UnsupportedEncodingException;

import java.text.NumberFormat;

public class CustomOutputFormat<K, V> extends FileOutputFormat<K, V> {

public static String SEPERATOR = "mapreduce.output.textoutputformat.separator";

private static final NumberFormat NUMBER_FORMAT = NumberFormat.getInstance();

/*

因为业务上每个表每天生成的文件可以覆盖,所以这里只用name + 分区号 + extension来命名

name是由配置中的mapreduce.output.basename参数设置的

*/

public synchronized static String getUniqueFile(TaskAttemptContext context,

String name,

String extension){

TaskID taskId = context.getTaskAttemptID().getTaskID();

int partition = taskId.getId();

StringBuilder result = new StringBuilder();

result.append(name);

result.append('-');

result.append(NUMBER_FORMAT.format(partition));

result.append(extension);

return result.toString();

}

protected static class LineRecordWriter<K, V>

extends RecordWriter<K, V> {

// private static final String utf8 = "UTF-8";//此处修改

private static final String encod = "GBK";

private static final byte[] newline;

static {

try {

newline = "\n".getBytes(encod);

} catch (UnsupportedEncodingException uee) {

throw new IllegalArgumentException("can't find " + encod + " encoding");

}

}

protected DataOutputStream out;

private final byte[] keyValueSeparator;

public LineRecordWriter(DataOutputStream out, String keyValueSeparator) {

this.out = out;

try {

this.keyValueSeparator = keyValueSeparator.getBytes(encod);

} catch (UnsupportedEncodingException uee) {

throw new IllegalArgumentException("can't find " + encod + " encoding");

}

}

public LineRecordWriter(DataOutputStream out) {

this(out, "\t");

}

/**

* Write the object to the byte stream, handling Text as a special

* case.

*

* @param o the object to print

* @throws IOException if the write throws, we pass it on

*/

private void writeObject(Object o) throws IOException {

//此处修改

// if (o instanceof Text) {

// Text to = (Text) o;

// out.write(to.getBytes(), 0, to.getLength());

// } else {

out.write(o.toString().getBytes(encod));

}

public synchronized void write(K key, V value)

throws IOException {

boolean nullKey = key == null || key instanceof NullWritable;

boolean nullValue = value == null || value instanceof NullWritable;

if (nullKey && nullValue) {

return;

}

if (!nullKey) {

writeObject(key);

}

if (!(nullKey || nullValue)) {

out.write(keyValueSeparator);

}

if (!nullValue) {

writeObject(value);

}

out.write(newline);

}

public synchronized void close(TaskAttemptContext context) throws IOException {

out.close();

}

}

public RecordWriter<K, V>

getRecordWriter(TaskAttemptContext job

) throws IOException, InterruptedException {

Configuration conf = job.getConfiguration();

boolean isCompressed = getCompressOutput(job);

String keyValueSeparator = conf.get(SEPERATOR, "\t");

CompressionCodec codec = null;

String extension = "";

if (isCompressed) {

Class<? extends CompressionCodec> codecClass =

getOutputCompressorClass(job, GzipCodec.class);

codec = (CompressionCodec) ReflectionUtils.newInstance(codecClass, conf);

extension = codec.getDefaultExtension();

}

Path file = getDefaultWorkFile(job, extension);

FileSystem fs = file.getFileSystem(conf);

if (!isCompressed) {

//修改FSDataOutputStream fileOut = fs.create(file, false);

FSDataOutputStream fileOut = fs.create(file, true);

return new CustomOutputFormat.LineRecordWriter<K, V>(fileOut, keyValueSeparator);

} else {

//修改FSDataOutputStream fileOut = fs.create(file, false);

FSDataOutputStream fileOut = fs.create(file, true);

return new CustomOutputFormat.LineRecordWriter<K, V>(new DataOutputStream

(codec.createOutputStream(fileOut)),

keyValueSeparator);

}

}

}

5. log4j.properties

### 设置###

log4j.rootLogger=error,stdout,D,E

### 输出信息到控制抬 ###

log4j.appender.stdout=org.apache.log4j.ConsoleAppender

log4j.appender.stdout.Target=System.out

log4j.appender.stdout.layout=org.apache.log4j.PatternLayout

log4j.appender.stdout.layout.ConversionPattern=[%-5p] %d{yyyy-MM-dd HH:mm:ss,SSS} method:%l%n%m%n

### 输出DEBUG 级别以上的日志到=E://logs/error.log ###

log4j.appender.D=org.apache.log4j.DailyRollingFileAppender

log4j.appender.D.File=E://logs/log.log

log4j.appender.D.Append=true

log4j.appender.D.Threshold=DEBUG

log4j.appender.D.layout=org.apache.log4j.PatternLayout

log4j.appender.D.layout.ConversionPattern=%-d{yyyy-MM-dd HH:mm:ss} [ %t:%r ] - [ %p ] %m%n

### 输出ERROR 级别以上的日志到=E://logs/error.log ###

log4j.appender.E=org.apache.log4j.DailyRollingFileAppender

log4j.appender.E.File=E://logs/error.log

log4j.appender.E.Append=true

log4j.appender.E.Threshold=ERROR

log4j.appender.E.layout=org.apache.log4j.PatternLayout

log4j.appender.E.layout.ConversionPattern=%-d{yyyy-MM-dd HH:mm:ss} [ %t:%r ] - [ %p ] %m%n

6. ExcelUtils

package utils

import java.io.{File, FileOutputStream}

import org.apache.poi.ss.usermodel.CellType

import org.apache.poi.xssf.usermodel.XSSFWorkbook

object ExcelUtils {

/*

* 将指定的excel的单元格全部转为文本并写入到临时文件。临时文件名称为源文件后面加Tmp

* */

def toSTRING(excelFile: File) = {

val excel = new XSSFWorkbook(excelFile)

val iter = excel.sheetIterator()

while (iter.hasNext) {

val sheet = iter.next()

val rowIter = sheet.rowIterator()

while (rowIter.hasNext) {

val row = rowIter.next()

val cellIter = row.cellIterator()

while (cellIter.hasNext) {

val cellOp = Option(cellIter.next())

if (cellOp.isDefined) {

val cell = cellOp.get

cell.setCellType(CellType.STRING)

} else {

println("cell为null")

}

}

}

}

//临时文件的输出流

val path = s"${excelFile.getParent}\\${excelFile.getName.substring(excelFile.getName.indexOf(".") + 1)}-Tmp.xlsx"

val tmpOut = new FileOutputStream(path)

excel.write(tmpOut)

path

}

}

7. Hive2EDIP

import java.io.{File, FileOutputStream, OutputStreamWriter, PrintWriter}

import java.util.function.Consumer

import org.apache.hadoop.io.{NullWritable, SequenceFile, Text}

import org.apache.hadoop.io.compress.GzipCodec

import org.apache.poi.ss.usermodel.{Row, Sheet}

import org.apache.poi.xssf.usermodel.XSSFWorkbook

import org.apache.spark.SparkConf

import org.apache.spark.sql.SparkSession

import org.slf4j.LoggerFactory

import utils.ExcelUtils

import scala.collection.mutable.ArrayBuffer

object Hive2EDIP {

val log = LoggerFactory.getLogger(classOf[Hive2EDIP])

def main(args: Array[String]): Unit = {

/*

环境

*/

KerberosAuthen.kerberosAuthen()

val sparkConf = new SparkConf().setAppName("Hive2EDIP")

val sparkSession = SparkSession.builder()

.config(sparkConf)

.enableHiveSupport()

.getOrCreate()

val sparkContext = sparkSession.sparkContext

/*

参数

分为2部分,第一部分为(db.table,selectList,whereFilter,etl_date),第二部分(保存的总目录,flg信息Excel)

*/

val excelPathStr = args.last

val resultDir = args(args.length - 2)

val conditions = args.dropRight(2)

/*

根据conditions循环执行生成edip的原子方法

*/

val conditionsTuple: ArrayBuffer[Tuple4[String, String, String, String]] = ArrayBuffer[(String, String, String, String)]()

for (i <- Range(0, conditions.length, 4)) {

conditionsTuple.append((conditions(i), conditions(i + 1), conditions(i + 2), conditions(i + 3)))

}

//解析全部转为文本的临时excel

val excel = new XSSFWorkbook(new File(ExcelUtils.toSTRING(new File(excelPathStr))))

conditionsTuple.foreach {

case (dbTableName: String, selectList: String, whereFilter: String, etl_date: String) => {

excel.sheetIterator().forEachRemaining(new Consumer[Sheet] {

override def accept(sheet: Sheet): Unit = {

if (dbTableName.substring(dbTableName.indexOf(".") + 1).toUpperCase.equals(sheet.getSheetName.toUpperCase)) {

genDat(sheet, sparkSession, dbTableName, selectList, whereFilter, etl_date, resultDir)

}

}

})

}

}

}

/**

* 根据flg生成GBK格式的以gz压缩的文件

*/

private def genDat(sheet: Sheet, sparkSession: SparkSession, dbTableName: String, selectList: String, whereFilter: String, etl_date: String, resultDir: String): Unit = {

val arr: ArrayBuffer[(Int, Int, String)] = genFlg(sheet, selectList, resultDir)

//从hive中获取dfs

val sql =

s"""

| SELECT $selectList FROM $dbTableName

| WHERE $whereFilter

""".stripMargin

import org.apache.spark.sql.functions._

val df = sparkSession.sql(sql).drop(col("ETL_DATE"))

val hadoopConf = sparkSession.sparkContext.hadoopConfiguration

hadoopConf.set("mapreduce.output.fileoutputformat.compress", "true");

hadoopConf.set("mapreduce.output.fileoutputformat.compress.type", SequenceFile.CompressionType.BLOCK.toString());

hadoopConf.set("mapreduce.output.fileoutputformat.compress.codec", "org.apache.hadoop.io.compress.GzipCodec");

hadoopConf.set("mapreduce.map.output.compress", "true");

hadoopConf.set("mapreduce.map.output.compress.codec", "org.apache.hadoop.io.compress.GzipCodec");

hadoopConf.set("mapreduce.output.basename", s"$dbTableName-$etl_date")

df.rdd

.map((row: org.apache.spark.sql.Row) => {

var rowStr = ""

arr.foreach {

case (indexTable, length, type_) => {

var value: Any = ""

type_.toUpperCase match {

case "STRING" => value = row.getString(indexTable)

case "TIMESTAMP" => value = row.getTimestamp(indexTable)

case _ => value = row.getDecimal(indexTable)

}

/*

判断需要添加多少空格

先判断是否是全角字符

*/

}

}

rowStr

})

.map(NullWritable.get() -> new Text(_))

.saveAsNewAPIHadoopFile(resultDir, classOf[NullWritable], classOf[Text], classOf[CustomOutputFormat[NullWritable, Text]], hadoopConf)

//df.rdd.map(NullWritable.get() -> _).saveAsTextFile("", classOf[GzipCodec])

}

/**

* 将excel转成flg文件,保存到hdfs并返回一个数据结构

*

* @param sheet

* @return

*/

private def genFlg(sheet: Sheet, selectList: String, resultDir: String): ArrayBuffer[(Int, Int, String)] = {

val rowIter = sheet.rowIterator()

val formatStr = "%1$s$$%2$s"

val selectArr = selectList.split(",")

val pw = new PrintWriter(new OutputStreamWriter(new FileOutputStream(sheet.getSheetName)))

val arr = ArrayBuffer[(Int, Int, String)]()

rowIter.forEachRemaining(new Consumer[Row] {

override def accept(row: Row): Unit = {

val name = row.getCell(3).getStringCellValue

if (selectArr.contains(name)) {

val indexFlg = selectArr.indexOf(name).toString

val indexTable = row.getCell(2).getStringCellValue

val length = row.getCell(2).getStringCellValue

val type_ = row.getCell(2).getStringCellValue

val rowFlg = formatStr.format(indexFlg, length)

pw.println(rowFlg)

arr += ((indexTable.toInt, length.toInt, type_))

}

}

})

pw.close()

arr

}

}

class Hive2EDIP {}

1669

1669

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?