1. Create the ceph-secret k8s Secret object, which the k8s volume plugin uses to access the Ceph cluster. On master1-admin (the Ceph admin node), get the key of client.admin and base64-encode it:
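The encoding done in this step, and the Secret created from it in step 2, can be sketched in Python as a minimal illustration that uses only the standard library. The key printed by `ceph auth get-key` is already base64 text, and the Secret's `data` field stores it base64-encoded once more, so the value must not pick up a trailing newline (as `echo <key> | base64` would add). The helper names `encode_secret_value`, `build_ceph_secret` and `to_json` are hypothetical.

```python
import base64
import json


def encode_secret_value(raw: str) -> str:
    """Base64-encode a value for a Secret's `data` field.

    Surrounding whitespace (e.g. a newline added by `echo`) is stripped first,
    since it would otherwise become part of the key the volume plugin reads.
    """
    return base64.b64encode(raw.strip().encode("ascii")).decode("ascii")


def build_ceph_secret(name: str, ceph_key: str) -> dict:
    """Return a Secret manifest equivalent to ceph-secret.yaml."""
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name},
        # The Ceph key is already base64 text; the Secret stores it encoded again.
        "data": {"key": encode_secret_value(ceph_key)},
    }


def to_json(manifest: dict) -> str:
    """Serialize a manifest; kubectl apply -f accepts JSON as well as YAML."""
    return json.dumps(manifest, indent=2)
```

For example, writing `to_json(build_ceph_secret("ceph-secret", key))` to a file and running `kubectl apply -f` on it should produce the same object as the YAML in step 2.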

[root@master1-admin ~]# ceph auth get-key client.admin | base64

QVFDOWF4eGhPM0UzTlJBQUJZZnVCMlZISVJGREFCZHN0UGhMc3c9PQ==

2. Create the Ceph secret on the k8s control node:

[root@master ceph]# cat ceph-secret.yaml
apiVersion: v1
kind: Secret
metadata:
  name: ceph-secret
data:
  key: QVFDOWF4eGhPM0UzTlJBQUJZZnVCMlZISVJGREFCZHN0UGhMc3c9PQ==

[root@master ceph]# kubectl apply -f ceph-secret.yaml

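secret/ceph-secret created

3. Back on the Ceph admin node, create a pool and then an RBD block device (image) in it. The image features object-map, fast-diff and deep-flatten are then disabled, because the kernel RBD client on older nodes may refuse to map images that use them: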

[root@master1-admin ~]# ceph osd pool create k8stest 56

pool 'k8stest' created


[root@master1-admin ~]# rbd create rbda -s 1024 -p k8stest

[root@master1-admin ~]# rbd feature disable k8stest/rbda object-map fast-diff deep-flatten

[root@master1-admin ~]# ceph osd pool ls
rbd
cephfs_data
cephfs_metadata
k8srbd1
k8stest

4. Create the PV:

[root@master ceph]# cat pv.yaml

apiVersion: v1

kind: PersistentVolume

metadata:

name: ceph-pv

spec:

capacity:

storage: 1Gi

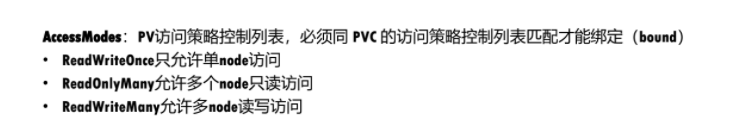

accessModes:

- ReadWriteOnce

rbd:

monitors:

- '192.168.0.5:6789'

- '192.168.0.6:6789'

- '192.168.0.7:6789'

pool: k8stest

image: rbda

user: admin

secretRef:

name: ceph-secret

fsType: xfs

readOnly: false

persistentVolumeReclaimPolicy: Recycle

[root@master ceph]# vim pv.yaml

[root@master ceph]# kubectl apply -f pv.yaml

persistentvolume/ceph-pv created

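[root@master ceph]# kubectl get pv
NAME      CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM
ceph-pv   1Gi        RWO            Recycle          Available

Note: Kubernetes supports the Recycle reclaim policy only for NFS and hostPath volumes, and the policy is deprecated; for an RBD-backed PV, Retain is the safer choice.

5. Create the PVC and apply it with kubectl apply -f pvc.yaml:

[root@master ceph]# cat pvc.yaml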

kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: ceph-pvc
spec:
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
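Once the claim is applied, the PV controller binds it to a matching PV. The matching can be sketched as a simplified model (not the controller's actual code): the PV must be unbound, have the same storage class (both empty here), offer at least the requested capacity, and support every requested access mode; among the candidates, roughly the smallest PV is chosen. One consequence, assuming the cluster has a default StorageClass: a claim without storageClassName would be assigned that class and would then no longer match ceph-pv. The classes `PV`, `PVC` and the functions below are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional, Set

# Binary suffixes of Kubernetes quantities (only the ones needed here).
_FACTORS = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40}


def to_bytes(quantity: str) -> int:
    """Convert a quantity such as '1Gi' or '1024Mi' to bytes."""
    for suffix, factor in _FACTORS.items():
        if quantity.endswith(suffix):
            return int(quantity[: -len(suffix)]) * factor
    return int(quantity)  # plain byte count


@dataclass
class PV:
    name: str
    capacity: str
    access_modes: Set[str]
    storage_class: str = ""
    claim_ref: Optional[str] = None  # "namespace/name" once bound


@dataclass
class PVC:
    name: str
    request: str
    access_modes: Set[str]
    storage_class: str = ""
    namespace: str = "default"


def can_bind(pv: PV, pvc: PVC) -> bool:
    """Simplified match: unbound, same class, enough capacity, modes covered."""
    return (
        pv.claim_ref is None
        and pv.storage_class == pvc.storage_class
        and to_bytes(pv.capacity) >= to_bytes(pvc.request)
        and pvc.access_modes <= pv.access_modes
    )


def bind(pvs: List[PV], pvc: PVC) -> Optional[PV]:
    """Bind the claim to the smallest matching PV; None means it stays Pending."""
    candidates = [pv for pv in pvs if can_bind(pv, pvc)]
    if not candidates:
        return None
    best = min(candidates, key=lambda pv: to_bytes(pv.capacity))
    best.claim_ref = f"{pvc.namespace}/{pvc.name}"
    return best
```

The sizes line up as well: rbd create -s takes megabytes by default, so the 1024 MB image rbda matches the 1Gi capacity (`to_bytes("1024Mi") == to_bytes("1Gi")`).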

[root@master ceph]# kubectl get pvc
NAME       STATUS   VOLUME    CAPACITY   ACCESS MODES   STORAGECLASS   AGE
ceph-pvc   Bound    ceph-pv   1Gi        RWO                           4s

[root@master ceph]# kubectl get pv
NAME      CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM              STORAGECLASS   REASON   AGE
ceph-pv   1Gi        RWO            Recycle          Bound    default/ceph-pvc                           5h23m

6. Create a Deployment whose pods mount the PVC:

[root@master ceph]# cat pod-2.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  selector:
    matchLabels:
      app: nginx
  replicas: 2 # tells deployment to run 2 pods matching the template
  template: # create pods using pod definition in this template
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80
        volumeMounts:
        - mountPath: "/ceph-data"
          name: ceph-data
      volumes:
      - name: ceph-data
        persistentVolumeClaim:
          claimName: ceph-pvc

[root@master ceph]# kubectl apply -f pod-2.yaml

deployment.apps/nginx-deployment created

[root@master ceph]# kubectl get pod -o wide

NAME                               READY   STATUS    RESTARTS   AGE   IP              NODE    NOMINATED NODE   READINESS GATES
nginx-deployment-d9f89fd7c-v8rxb   1/1     Running   0          12s   10.233.90.179   node1   <none>           <none>
nginx-deployment-d9f89fd7c-zfwzj   1/1     Running   0          12s   10.233.90.178   node1   <none>           <none>

As the output shows, both pods, which were scheduled onto the same node (node1), mount the same PVC in ReadWriteOnce mode.

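Note: characteristics of Ceph RBD block storage:

- A Ceph RBD volume can be shared in ReadWriteOnce mode by multiple pods on the same node.
- A Ceph RBD volume can be shared in ReadWriteOnce mode by multiple containers of the same pod on the same node.
- A Ceph RBD volume cannot be shared in ReadWriteOnce mode across nodes.
- If the node running a pod that uses Ceph RBD goes down, the pod is rescheduled to another node, but the new pod will not run properly: RBD cannot be attached on more than one node, and the pod on the failed node does not release the PV automatically.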
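The sharing rules listed above can be captured in a toy model: a ReadWriteOnce RBD volume is attached to one node at a time, and any number of pods (and containers) on that node may use it. The class `RbdAttachTracker` is hypothetical and only mimics the Multi-Attach behavior; it is not how the attach/detach controller is implemented. Because pods on a failed node never get detached (they stay in Terminating), a replacement pod on another node keeps failing, as the events below show.

```python
from collections import defaultdict
from typing import Dict, Set


class RbdAttachTracker:
    """Toy model of a ReadWriteOnce RBD volume (hypothetical, not a k8s API).

    A volume is attached to at most one node at a time; any number of pods
    (and containers) on that node may use it.
    """

    def __init__(self) -> None:
        self.node_of: Dict[str, str] = {}                    # volume -> attached node
        self.users: Dict[str, Set[str]] = defaultdict(set)   # volume -> pods using it

    def attach(self, volume: str, pod: str, node: str) -> None:
        current = self.node_of.get(volume)
        if current is not None and current != node:
            # Mimics the FailedAttachVolume event: still attached elsewhere.
            raise RuntimeError(
                f'Multi-Attach error for volume "{volume}": already used by '
                f"pod(s) {', '.join(sorted(self.users[volume]))}"
            )
        self.node_of[volume] = node
        self.users[volume].add(pod)

    def detach(self, volume: str, pod: str) -> None:
        # A pod stuck in Terminating on a dead node never reaches this call.
        self.users[volume].discard(pod)
        if not self.users[volume]:
            self.node_of.pop(volume, None)
```

Two pods on node1 can attach the same volume, a third pod on node2 raises the Multi-Attach error, and only after both node1 pods detach can the node2 pod attach.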

# Shut down the node the pods were running on; the PVC can no longer be used

[root@master ceph]# kubectl get pod
NAME                                      READY   STATUS              RESTARTS   AGE
nfs-client-provisioner-867b44bd69-nhh4m   1/1     Running             0          9m30s
nfs-client-provisioner-867b44bd69-xscs6   1/1     Terminating         2          18d
nginx-deployment-d9f89fd7c-4rzjh          1/1     Terminating         0          15m
nginx-deployment-d9f89fd7c-79ncq          1/1     Terminating         0          15m
nginx-deployment-d9f89fd7c-p54p9          0/1     ContainerCreating   0          9m30s
nginx-deployment-d9f89fd7c-prgt5          0/1     ContainerCreating   0          9m30s

The events of the new pod nginx-deployment-d9f89fd7c-p54p9 (from kubectl describe pod) show why it is stuck:

Events:
  Type     Reason              Age                  From                     Message
  ----     ------              ----                 ----                     -------
  Normal   Scheduled           11m                  default-scheduler        Successfully assigned default/nginx-deployment-d9f89fd7c-p54p9 to node2
  Warning  FailedAttachVolume  11m                  attachdetach-controller  Multi-Attach error for volume "ceph-pv" Volume is already used by pod(s) nginx-deployment-d9f89fd7c-79ncq, nginx-deployment-d9f89fd7c-4rzjh
  Warning  FailedMount         25s (x5 over 9m26s)  kubelet, node2           Unable to attach or mount volumes: unmounted volumes=[ceph-data], unattached volumes=[ceph-data default-token-cwbdx]: timed out waiting for the condition

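Deployment update behavior:

When a Deployment update is triggered, it ensures that at least 75% of the desired number of pods are running (at most 25% unavailable). With a single pod, 25% rounds down to zero unavailable pods, so the Deployment always creates a new pod first and shuts down the old pod only after the new one is running.

By default, it also ensures that at most 25% more pods than the desired number are started.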
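The two percentages can be turned into pod counts with a small sketch. It assumes the documented RollingUpdate rounding: a maxSurge percentage rounds up and a maxUnavailable percentage rounds down. The function names `resolve` and `rolling_update_bounds` are hypothetical.

```python
from typing import Tuple, Union

Value = Union[int, str]


def resolve(value: Value, replicas: int, round_up: bool) -> int:
    """Turn an absolute count or a percentage like '25%' into a pod count."""
    if isinstance(value, str) and value.endswith("%"):
        scaled = replicas * int(value[:-1])
        # Integer ceil/floor of scaled / 100, avoiding float rounding issues.
        return -(-scaled // 100) if round_up else scaled // 100
    return int(value)


def rolling_update_bounds(
    replicas: int, max_surge: Value = "25%", max_unavailable: Value = "25%"
) -> Tuple[int, int]:
    """Return (max pods during the update, min pods that must stay available)."""
    surge = resolve(max_surge, replicas, round_up=True)                 # rounds up
    unavailable = resolve(max_unavailable, replicas, round_up=False)   # rounds down
    if surge == 0 and unavailable == 0:
        # If both round to zero, one unavailable pod is allowed so that the
        # rollout can still make progress.
        unavailable = 1
    return replicas + surge, replicas - unavailable
```

With `replicas: 2` from pod-2.yaml, `rolling_update_bounds(2)` returns `(3, 2)`: up to three pods may exist, and both old pods must stay available until a new one is running; with a single replica it returns `(2, 1)`.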

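Problem:

Combining the RBD sharing characteristics with the Deployment update behavior, the cause is as follows:

When the Deployment triggers an update, it keeps the service available by first creating a new pod and waiting until it is running before deleting the old pod. If the new pod is scheduled onto a different node from the old pod, the RBD volume is still attached to the old node, and the failure above occurs.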

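Solutions:

1. Use shared storage that supports mounting across nodes and pods, such as CephFS or GlusterFS.

2. Label a node and allow the pods managed by the Deployment to be scheduled only onto that fixed node. (Not recommended: if that node goes down, the service goes down with it.)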
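Solution 2 can be sketched as a strategic merge patch that adds a nodeSelector to the Deployment's pod template. The label storage=ceph-rbd and the helper `node_selector_patch` are hypothetical; you would first label the node, e.g. `kubectl label nodes node1 storage=ceph-rbd`, and then pass the generated JSON to `kubectl patch deployment nginx-deployment -p '<patch>'`.

```python
import json
from typing import Dict


def node_selector_patch(labels: Dict[str, str]) -> str:
    """Build a strategic-merge patch that pins a Deployment's pods to labeled nodes."""
    patch = {"spec": {"template": {"spec": {"nodeSelector": labels}}}}
    # Compact separators keep the patch on one short line for kubectl patch -p.
    return json.dumps(patch, separators=(",", ":"))
```

`node_selector_patch({"storage": "ceph-rbd"})` returns `{"spec":{"template":{"spec":{"nodeSelector":{"storage":"ceph-rbd"}}}}}`. As noted above, this only avoids the cross-node attach; it does not survive the loss of that node.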
