Original article: tfjs posenet

tfjs-models (official PoseNet repository)

https://github.com/tensorflow/tfjs-models/tree/master/posenet

React version

https://github.com/jscriptcoder/tfjs-posenet

utils.js (drawing helpers)

import * as posenet from '@tensorflow-models/posenet';

// Detect mobile devices by sniffing the user-agent string.
function isAndroid() {
  return /Android/i.test(navigator.userAgent);
}

function isiOS() {
  return /iPhone|iPad|iPod/i.test(navigator.userAgent);
}

export function isMobile() {
  return isAndroid() || isiOS();
}

// PoseNet positions are {x, y} objects; drawSegment expects [y, x] tuples.
function toTuple({y, x}) {
  return [y, x];
}

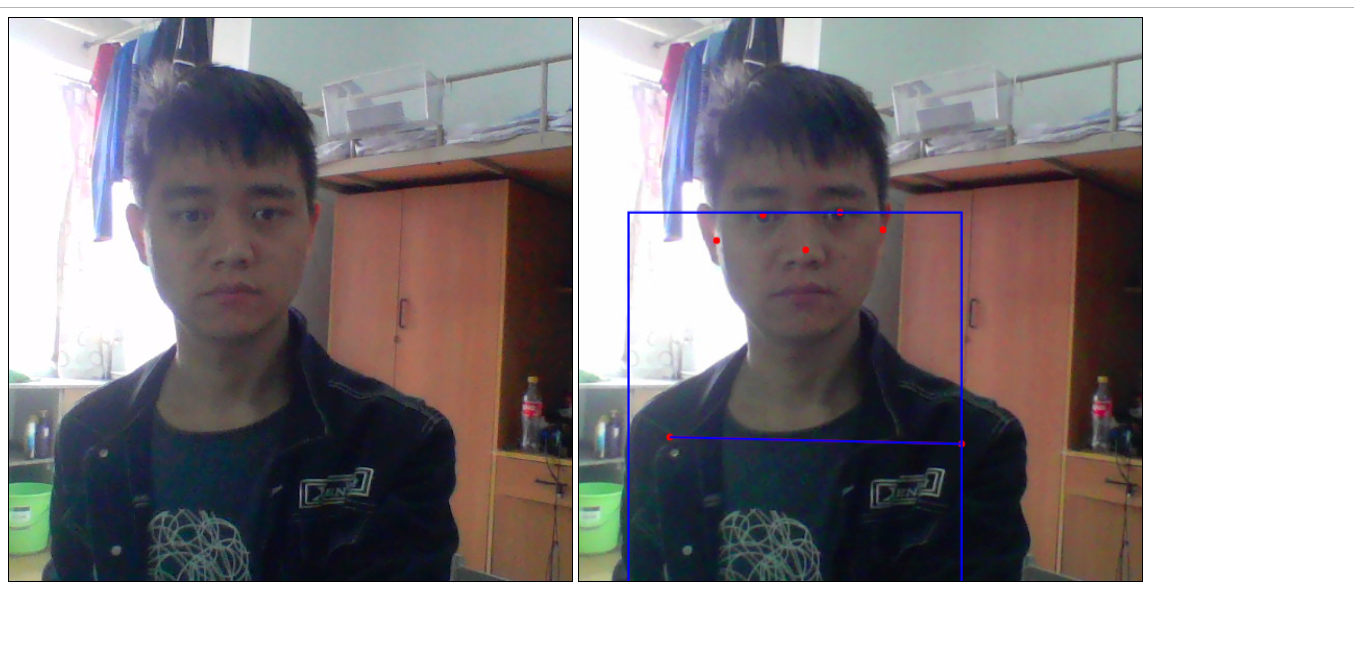

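// Draw the axis-aligned box that encloses all keypoints.
export function drawBoundingBox(keypoints, ctx, boundingBoxColor = 'blue') {
  const boundingBox = posenet.getBoundingBox(keypoints);

  // Start a fresh path; otherwise whatever path is still open (for example
  // the last skeleton segment) is stroked again in the bounding-box color.
  ctx.beginPath();
  ctx.rect(
      boundingBox.minX, boundingBox.minY,
      boundingBox.maxX - boundingBox.minX,
      boundingBox.maxY - boundingBox.minY);
  ctx.strokeStyle = boundingBoxColor;
  ctx.stroke();
}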

// Draw a line between two [y, x] points, scaled into canvas coordinates.
export function drawSegment([ay, ax], [by, bx], color, scale, ctx, lineWidth = 2) {
  ctx.beginPath();
  ctx.moveTo(ax * scale, ay * scale);
  ctx.lineTo(bx * scale, by * scale);
  ctx.lineWidth = lineWidth;
  ctx.strokeStyle = color;
  ctx.stroke();
}

/**
 * Draws a pose skeleton by looking up all adjacent keypoints/joints
 * whose scores are both at least minConfidence.
 */
export function drawSkeleton(keypoints, minConfidence, ctx, scale = 1, color = 'red') {
  const adjacentKeyPoints =
      posenet.getAdjacentKeyPoints(keypoints, minConfidence);

  // Each entry is a pair of connected keypoints, e.g. shoulder and elbow.
  adjacentKeyPoints.forEach((pair) => {
    drawSegment(
        toTuple(pair[0].position), toTuple(pair[1].position), color,
        scale, ctx);
  });
}

// Fill a circle of radius r centered at (x, y).
export function drawPoint(ctx, y, x, r, color) {
  ctx.beginPath();
  ctx.arc(x, y, r, 0, 2 * Math.PI);
  ctx.fillStyle = color;
  ctx.fill();
}

// Draw every keypoint whose score reaches minConfidence.
export function drawKeypoints(keypoints, minConfidence, ctx, scale = 1, color = 'red') {
  for (const keypoint of keypoints) {
    if (keypoint.score < minConfidence) {
      continue;
    }
    const {y, x} = keypoint.position;
    drawPoint(ctx, y * scale, x * scale, 3, color);
  }
}
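To make the bounding-box step less of a black box, here is a minimal sketch of the computation `posenet.getBoundingBox` is assumed to perform: take the minimum and maximum x and y over all keypoint positions, regardless of score. `computeBoundingBox` is a hypothetical helper written for illustration, not the library's source, and it assumes keypoints shaped like PoseNet's output (`{score, part, position: {x, y}}`).

```javascript
// Hypothetical helper: axis-aligned box around PoseNet-style keypoints.
// Each keypoint is assumed to look like {score, part, position: {x, y}}.
function computeBoundingBox(keypoints) {
  let minX = Infinity;
  let minY = Infinity;
  let maxX = -Infinity;
  let maxY = -Infinity;
  for (const {position: {x, y}} of keypoints) {
    minX = Math.min(minX, x);
    minY = Math.min(minY, y);
    maxX = Math.max(maxX, x);
    maxY = Math.max(maxY, y);
  }
  return {minX, minY, maxX, maxY};
}

// Usage with made-up coordinates.
const box = computeBoundingBox([
  {score: 0.9, part: 'nose', position: {x: 120, y: 80}},
  {score: 0.8, part: 'leftWrist', position: {x: 60, y: 240}},
  {score: 0.7, part: 'rightAnkle', position: {x: 180, y: 460}},
]);
console.log(box); // { minX: 60, minY: 80, maxX: 180, maxY: 460 }
```

Under that assumption, low-score keypoints also stretch the box, so filtering by `score` before computing it is one option if the box jumps around.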

Vue component

<template>
  <div>
    <!-- playsinline keeps iOS Safari from playing the camera video fullscreen -->
    <video id="video" class="video" playsinline></video>
    <canvas id="pose" class="pose"></canvas>
  </div>
</template>

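<script>
import * as posenet from '@tensorflow-models/posenet';
import {isMobile, drawKeypoints, drawSkeleton, drawBoundingBox} from './utils';

// vConsole: an on-page console, handy for debugging on phones.
const VConsole = require('vconsole/dist/vconsole.min.js');
new VConsole();

// Arguments for net.estimateSinglePose() (posenet 0.x API).
const imageScaleFactor = 1;   // lower values downscale the frame: faster, less accurate
const outputStride = 16;      // 8, 16 or 32: higher is faster, less accurate
const flipHorizontal = false; // true mirrors the keypoints (for flipped webcam video)

const videoWidth = 500;
const videoHeight = 500;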

// Request the front ("user") camera and resolve once the video metadata is loaded.
async function setupCamera() {
  if (!navigator.mediaDevices || !navigator.mediaDevices.getUserMedia) {
    throw new Error(
        'Browser API navigator.mediaDevices.getUserMedia not available');
  }

  const video = document.getElementById('video');
  video.width = videoWidth;
  video.height = videoHeight;

  // On phones, let the browser choose the camera resolution, as the
  // official tfjs-models PoseNet demo does.
  const mobile = isMobile();
  const stream = await navigator.mediaDevices.getUserMedia({
    audio: false,
    video: {
      facingMode: 'user',
      width: mobile ? undefined : videoWidth,
      height: mobile ? undefined : videoHeight,
    },
  });
  video.srcObject = stream;

  return new Promise((resolve) => {
    video.onloadedmetadata = () => resolve(video);
  });
}

async function loadVideo() {
  const video = await setupCamera();
  video.play();
  return video;
}

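// Skip the whole pose below minPoseConfidence,
// and individual keypoints below minPartConfidence.
const minPoseConfidence = 0.1;
const minPartConfidence = 0.5;

// Set in mounted(); draw_frame() does nothing until all four exist.
let video;
let net;
let ctx;
let cvs;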

async function draw_frame() {
  if (!video || !net || !ctx || !cvs) {
    return;
  }

  // Estimate first, then schedule the next frame, so only one estimate runs at a time.
  const pose = await net.estimateSinglePose(video, imageScaleFactor, flipHorizontal, outputStride);
  const {score, keypoints} = pose;
  requestAnimationFrame(draw_frame);

  // Paint the current video frame, then overlay the detected pose.
  ctx.drawImage(video, 0, 0, cvs.width, cvs.height);
  if (score >= minPoseConfidence) {
    drawKeypoints(keypoints, minPartConfidence, ctx);
    drawSkeleton(keypoints, minPartConfidence, ctx);
    drawBoundingBox(keypoints, ctx);
  }
}

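export default {
  async mounted() {
    // Start the camera and load the PoseNet weights before the first frame.
    video = await loadVideo();
    net = await posenet.load();

    cvs = document.getElementById('pose');
    ctx = cvs.getContext('2d');
    // Match the canvas drawing buffer to the video size (500 x 500).
    cvs.width = videoWidth;
    cvs.height = videoHeight;

    draw_frame();
  },
};
</script>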

<style scoped>
.video {
  max-width: 500px;
  max-height: 500px;
  border: 1px solid black;
}

.pose {
  width: 500px;
  height: 500px;
  border: 1px solid black;
}
</style>
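`draw_frame` calls `requestAnimationFrame` only after `estimateSinglePose` resolves, so at most one estimate is in flight and a slow model lowers the frame rate instead of queuing up work. The sketch below isolates that pattern from the browser so it can run under Node. `createPoseLoop`, `estimate`, `render` and `schedule` are hypothetical names. In the page, `schedule` would be `requestAnimationFrame`. The `stop()` function is an addition the original lacks; it would let the component end the loop, e.g. in a Vue 2 `beforeDestroy` hook.

```javascript
// Hypothetical sketch of the draw_frame pattern: await the estimate, schedule
// the next frame, then render. `schedule` stands in for requestAnimationFrame.
function createPoseLoop(estimate, render, schedule) {
  let running = true;

  async function frame() {
    if (!running) return;
    const pose = await estimate();
    if (!running) return; // stop() may have been called while awaiting
    schedule(frame);      // queued only after this estimate finished
    render(pose);
  }

  schedule(frame);
  return {
    stop() {
      running = false;
    },
  };
}

// Usage under Node with fakes: the "scheduler" just queues callbacks,
// and runFrames() drains up to `count` of them in order.
const queue = [];
const rendered = [];
let n = 0;
const loop = createPoseLoop(
  async () => ({score: 0.9, frame: ++n}), // fake estimator
  (pose) => rendered.push(pose.frame),    // fake renderer
  (cb) => queue.push(cb),                 // fake requestAnimationFrame
);

async function runFrames(count) {
  for (let i = 0; i < count && queue.length > 0; i++) {
    await queue.shift()();
  }
}

const done = runFrames(3).then(() => console.log(rendered)); // [ 1, 2, 3 ]
```

After `loop.stop()`, the already-queued frame returns immediately and nothing new is scheduled.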

Version note: as of March 10, 2019, this code does not run on the 1.0 releases. It was written against:

"@tensorflow-models/posenet": "^0.1.1",

"@tensorflow/tfjs": "^0.11.4",
