Filebeat is a lightweight log collector.

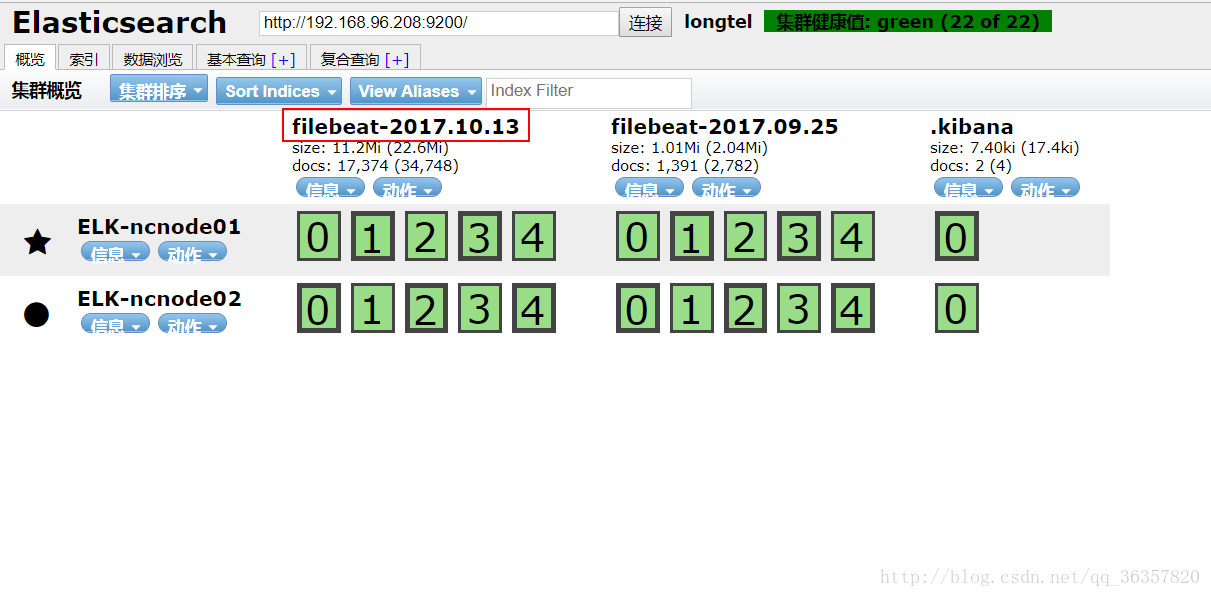

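My ELK architecture here is two Elasticsearch machines plus Logstash, Kibana, and Filebeat.

I have previously written about installing and using Elasticsearch, Logstash, and Kibana, so this post covers only the deployment and use of Filebeat.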

Client-side steps

apt

#wget -qO - https://artifacts.elastic.co/GPG-KEY-elasticsearch | sudo apt-key add -

#sudo apt-get install apt-transport-https

#echo "deb https://artifacts.elastic.co/packages/5.x/apt stable main" | sudo tee -a /etc/apt/sources.list.d/elastic-5.x.list

#sudo apt-get update && sudo apt-get install filebeat

#/etc/init.d/filebeat start

yum

#sudo rpm --import https://packages.elastic.co/GPG-KEY-elasticsearch

#vi /etc/yum.repos.d/filebeat.repo

[elastic-5.x]

name=Elastic repository for 5.x packages

baseurl=https://artifacts.elastic.co/packages/5.x/yum

gpgcheck=1

gpgkey=https://artifacts.elastic.co/GPG-KEY-elasticsearch

enabled=1

autorefresh=1

type=rpm-md

#sudo yum install filebeat

#sudo chkconfig --add filebeat

deb

curl -L -O https://artifacts.elastic.co/downloads/beats/filebeat/filebeat-5.6.3-amd64.deb

sudo dpkg -i filebeat-5.6.3-amd64.deb

rpm

curl -L -O https://artifacts.elastic.co/downloads/beats/filebeat/filebeat-5.6.3-x86_64.rpm

sudo rpm -vi filebeat-5.6.3-x86_64.rpm

Configuration file

# vi /etc/filebeat/filebeat.yml

filebeat.prospectors:

- input_type: log

  # Paths that should be crawled and fetched. Glob based paths.
  paths:
    - /var/log/*/*.log   # log file paths to collect; here, every .log file one directory below /var/log
    #- /var/log/keystone/*.log
    #- c:\programdata\elasticsearch\logs\*

# This section outputs directly to Elasticsearch
output.elasticsearch:
  hosts: ["192.168.96.208:9200"]

#----------------------------- Logstash output --------------------------------
# This section outputs to Logstash
output.logstash:
  # The Logstash hosts
  hosts: ["192.168.96.209:5044"]

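Note: because I installed Logstash on the server side, I chose to output to Logstash here.

After editing the configuration file, you can test whether it is valid: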
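To preview which files the `paths` glob is likely to pick up before starting Filebeat, a small shell sketch like the following may help. The helper name `list_matches` is hypothetical and not part of Filebeat, and shell globbing can differ from Filebeat's own glob matching in edge cases such as hidden files, so treat the result as an approximation.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of Filebeat): print the regular files
# that a glob pattern expands to, one per line.
list_matches() {
  local pattern=$1 f
  # Leaving $pattern unquoted lets the shell expand the glob.
  # Assumption: the pattern itself contains no spaces.
  for f in $pattern; do
    if [ -f "$f" ]; then
      printf '%s\n' "$f"
    fi
  done
  return 0
}

# Usage (pattern taken from the configuration above):
#   list_matches '/var/log/*/*.log'
```

If nothing is printed, the pattern matched no regular files, which usually means the path or the directory depth in the glob needs adjusting.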

root@controller:/usr/bin# ./filebeat.sh -configtest -e

2017/10/13 05:28:47.807772 beat.go:297: INFO Home path: [/usr/share/filebeat] Config path: [/etc/filebeat] Data path: [/var/lib/filebeat] Logs path: [/var/log/filebeat]

2017/10/13 05:28:47.807831 beat.go:192: INFO Setup Beat: filebeat; Version: 5.6.3

2017/10/13 05:28:47.807970 logstash.go:90: INFO Max Retries set to: 3

2017/10/13 05:28:47.807970 metrics.go:23: INFO Metrics logging every 30s

2017/10/13 05:28:47.808113 outputs.go:108: INFO Activated logstash as output plugin.

2017/10/13 05:28:47.808262 publish.go:300: INFO Publisher name: controller

2017/10/13 05:28:47.809109 async.go:63: INFO Flush Interval set to: 1s

2017/10/13 05:28:47.809133 async.go:64: INFO Max Bulk Size set to: 2048

Config OK

root@controller:/usr/bin# pwd

/usr/bin

root@controller:/usr/bin#

Restart the service

#/etc/init.d/filebeat restart
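After the restart, Filebeat tries to connect to the host listed under `output.logstash`. The following is a minimal sketch for checking from the client that the port is reachable once Logstash is listening. `port_open` is a hypothetical helper, and the sketch assumes bash (for its `/dev/tcp` feature) and the `timeout` command from GNU coreutils are available.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if a TCP connection to host:port can be
# opened within 3 seconds, fail otherwise.
port_open() {
  local host=$1 port=$2
  # /dev/tcp/<host>/<port> is handled by bash itself, not a real device file.
  timeout 3 bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null
}

# Usage (host and port taken from the configuration above):
#   if port_open 192.168.96.209 5044; then
#     echo "Logstash port reachable"
#   else
#     echo "Logstash port not reachable"
#   fi
```

A failure here only shows that the TCP connection could not be opened; it does not say whether the cause is a firewall, a wrong address, or Logstash not running yet.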

Logstash-side steps

[root@ELK-ncnode02 logstash]# pwd

/usr/share/logstash

[root@ELK-ncnode02 logstash]# bin/logstash -f /root/logstash.conf   # logstash.conf is my custom pipeline.conf file

My pipeline.conf file is configured as follows:

input {
  beats {
    port => 5044
  }
}

# The filter part of this file is commented out to indicate that it is
# optional.
# filter {
#
# }

output {
  stdout { codec => rubydebug }
  elasticsearch {
    hosts => "192.168.96.208:9200"
    manage_template => false
    index => "%{[@metadata][beat]}-%{+YYYY.MM.dd}"
    document_type => "%{[@metadata][type]}"
  }
}
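The `index` setting in this pipeline produces one index per day: `%{[@metadata][beat]}` becomes the beat name (`filebeat` here) and `%{+YYYY.MM.dd}` is the date of the event's `@timestamp` in UTC. The sketch below reproduces that naming in the shell and wraps a query against Elasticsearch's `_cat/indices` API to see whether such indices exist. The function names `index_name` and `list_beat_indices` are hypothetical, the host is the one from the pipeline config, and the default date is the current UTC day rather than any particular event's timestamp.

```shell
#!/usr/bin/env bash
# Elasticsearch address as used in the pipeline config above.
ES_HOST="192.168.96.208:9200"

# Hypothetical helper: build the daily index name for a beat.
# $1 = beat name, $2 = optional date as YYYY.MM.DD (defaults to today in UTC).
index_name() {
  local beat=$1
  local day=${2:-$(date -u +%Y.%m.%d)}
  printf '%s-%s\n' "$beat" "$day"
}

# Hypothetical helper: list all indices for a beat via the _cat/indices API.
list_beat_indices() {
  local beat=$1
  curl -s "http://${ES_HOST}/_cat/indices/${beat}-*?v"
}

# Usage:
#   index_name filebeat 2017.10.13   # prints filebeat-2017.10.13
#   list_beat_indices filebeat
```

If `list_beat_indices filebeat` shows no rows while Logstash prints events to stdout, the problem is more likely in the `elasticsearch` output block than on the Filebeat side.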

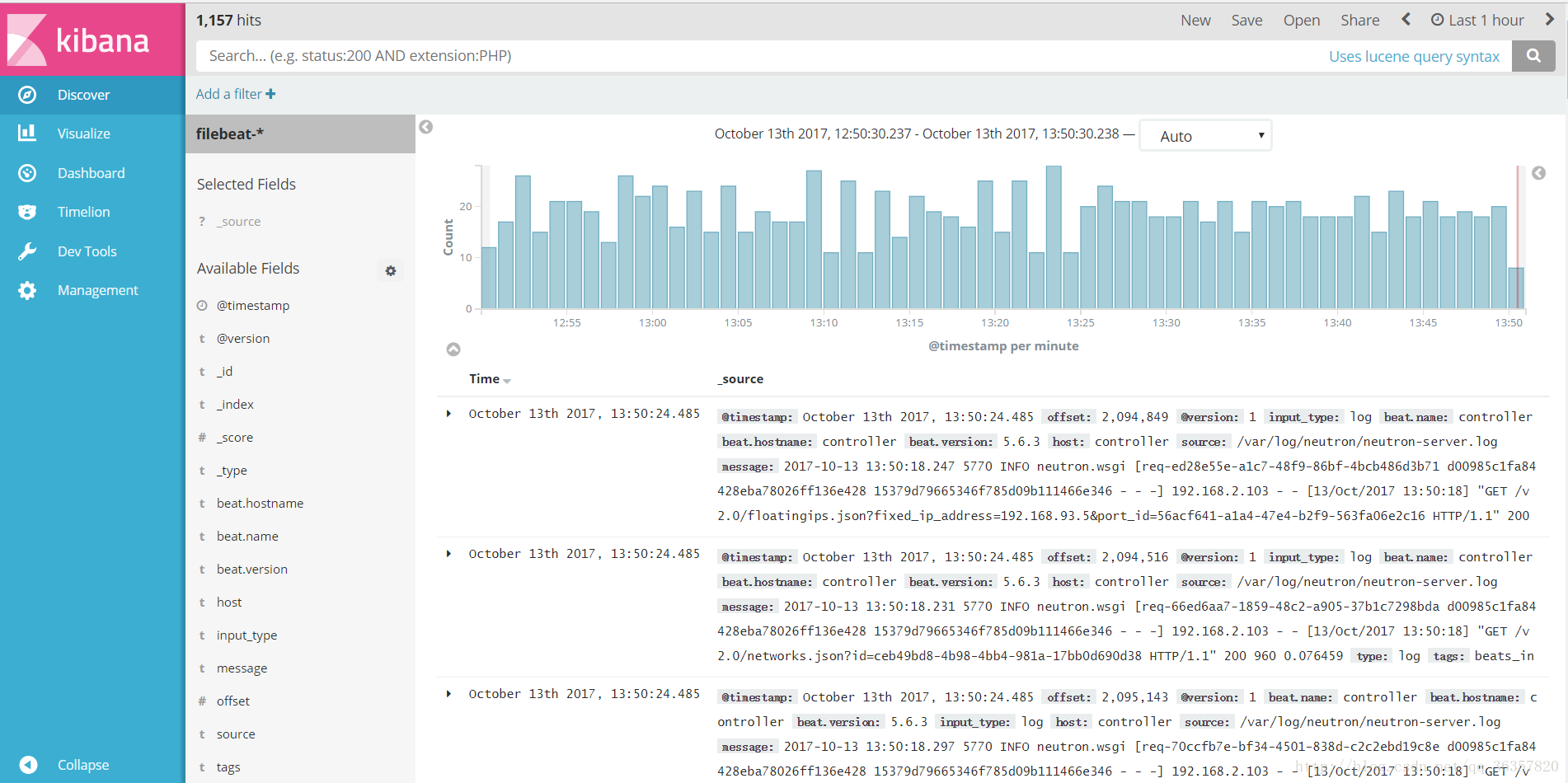

The logs collected by Filebeat are parsed by Logstash and sent to Elasticsearch, where they can be viewed in Kibana.

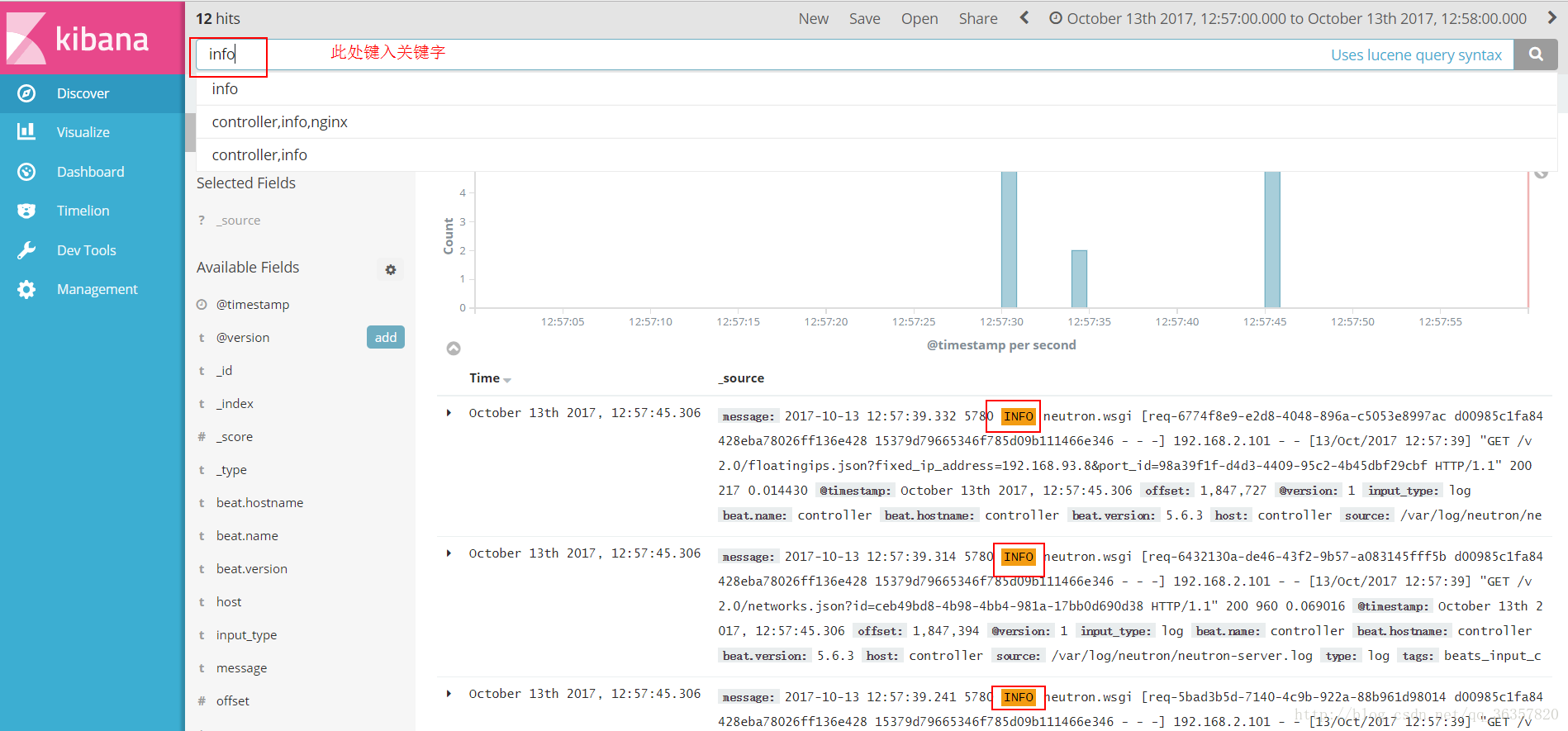

Kibana also supports filtering the collected logs.

Corrections are welcome if anything here is wrong.
