Configuring Kerberos Cross-Realm Trust Between TDH Clusters

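Test cluster:

| Hostname | IP | Kerberos realm | KDC servers |
| --- | --- | --- | --- |
| bdc001 | 36.0.12.31 | TDH | bdc001, bdc004 |
| bdc003 | 36.0.12.33 | TDH | bdc001, bdc004 |
| bdc004 | 36.0.12.34 | TDH | bdc001, bdc004 |

Development cluster:

| Hostname | IP | Kerberos realm | KDC servers |
| --- | --- | --- | --- |
| bdcdev001 | 36.0.12.4 | TDH.DEV | bdcdev001, bdcdev002 |
| bdcdev002 | 36.0.12.5 | TDH.DEV | bdcdev001, bdcdev002 |
| bdcdev003 | 36.0.12.6 | TDH.DEV | bdcdev001, bdcdev002 |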

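1. Check the time on both clusters. The clocks must agree, because Kerberos rejects requests whose timestamps differ too much from the KDC's clock.

2. Disable the firewall on both clusters.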
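
Because Kerberos only tolerates a limited clock difference (MIT Kerberos allows 300 seconds by default through its `clockskew` setting), the time check is easy to script. `skew_ok` below is a hypothetical helper that compares two Unix timestamps; collecting them, for example with `date +%s` locally and over ssh on a peer node, assumes ssh access between the clusters.

```shell
# skew_ok LOCAL_EPOCH REMOTE_EPOCH [MAX_SECONDS]
# Hypothetical helper: succeeds if the two timestamps differ by at most
# MAX_SECONDS (default 300, matching MIT Kerberos' default clockskew).
skew_ok() {
  local a="$1" b="$2" max="${3:-300}" diff
  diff=$((a - b))
  # Take the absolute value of the difference
  if [ "$diff" -lt 0 ]; then
    diff=$((-diff))
  fi
  [ "$diff" -le "$max" ]
}
```

Usage: `skew_ok "$(date +%s)" "$(ssh bdcdev001 date +%s)" && echo "clocks OK"` on a test-cluster node.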

Configure /etc/hosts

On both clusters, edit /etc/hosts and add the hostnames and IP addresses of the other cluster's nodes.
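
The edit can be made idempotent, so rerunning it on a node does not add duplicate lines. `add_hosts` is a hypothetical helper. It takes the target file as a parameter so it can be tried on a copy before touching /etc/hosts. It also assumes hostnames contain no regex metacharacters, which holds for the hosts in the tables above.

```shell
# add_hosts FILE IP HOST [IP HOST ...]
# Hypothetical helper: append "IP HOST" to FILE unless HOST already appears
# as a whole word on a non-comment line. Assumes HOST has no regex metacharacters.
add_hosts() {
  local file="$1" ip host
  shift
  while [ "$#" -ge 2 ]; do
    ip="$1"
    host="$2"
    shift 2
    if ! grep -Eq "^[^#]*[[:space:]]${host}([[:space:]]|\$)" "$file"; then
      printf '%s %s\n' "$ip" "$host" >> "$file"
    fi
  done
}
```

For example, as root on every test-cluster node: `add_hosts /etc/hosts 36.0.12.4 bdcdev001 36.0.12.5 bdcdev002 36.0.12.6 bdcdev003`. On every development-cluster node, run the mirror-image call with the test cluster's hosts.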

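Add principals

Run the following commands on bdc001 and on bdcdev001 (one KDC in each cluster). Both cross-realm principals must exist on both KDCs with the same password, so enter identical passwords on the two sides when prompted.

-- Log in to the kadmin command line: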

shell$> sudo kadmin.local -e "aes256-cts-hmac-sha1-96:normal"

kadmin.local:> addprinc -requires_preauth krbtgt/TDH@TDH.DEV

kadmin.local:> addprinc -requires_preauth krbtgt/TDH.DEV@TDH
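
Cross-realm trust only works if each `krbtgt/...` principal has the same key, key version number and encryption types in both KDCs. To keep the two sides identical, you can generate the commands once with an explicit password and paste them into the `kadmin.local` prompt on both bdc001 and bdcdev001. `cross_realm_cmds` is a hypothetical helper. Passing the password with `-pw`, as the TDH-to-TOS section of this article does, is an alternative to typing it at the prompt. Note that it then appears in shell history.

```shell
# cross_realm_cmds REALM_A REALM_B PASSWORD
# Hypothetical helper: print the two kadmin addprinc commands for a two-way
# trust between REALM_A and REALM_B, both using the same password.
cross_realm_cmds() {
  local a="$1" b="$2" pw="$3"
  # krbtgt/A@B lets clients of realm B obtain tickets for services in realm A
  printf 'addprinc -requires_preauth -pw %s krbtgt/%s@%s\n' "$pw" "$a" "$b"
  # krbtgt/B@A covers the reverse direction
  printf 'addprinc -requires_preauth -pw %s krbtgt/%s@%s\n' "$pw" "$b" "$a"
}
```

Usage: `cross_realm_cmds TDH TDH.DEV "$PW"` prints the two lines to paste on each KDC.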

Modify /etc/krb5.conf

Note: every node in each cluster must be updated.

Test cluster (bdc001, bdc003, bdc004):

    [logging]
      default = FILE:/var/log/krb5libs.log
      kdc = FILE:/var/log/krb5kdc.log
      admin_server = FILE:/var/log/kadmind.log

    [libdefaults]
      default_realm = TDH
      dns_lookup_realm = false
      dns_lookup_kdc = false
      ticket_lifetime = 24h
      renew_lifetime = 7d
      forwardable = true
      default_ccache_name = KEYRING:session:%{uid}
      kdc_timeout = 3000

    [realms]
      TDH = {
        kdc = bdc001
        kdc = bdc004
        admin_server = bdc001
        admin_server = bdc004
      }
      TDH.DEV = {
        kdc = bdcdev001
        kdc = bdcdev002
        admin_server = bdcdev001
        admin_server = bdcdev002
      }

    [domain_realm]
      bdcdev001 = TDH.DEV
      bdcdev002 = TDH.DEV
      bdc001 = TDH
      bdc004 = TDH

Development cluster (bdcdev001, bdcdev002, bdcdev003):

    [logging]
      default = FILE:/var/log/krb5libs.log
      kdc = FILE:/var/log/krb5kdc.log
      admin_server = FILE:/var/log/kadmind.log

    [libdefaults]
      default_realm = TDH.DEV
      dns_lookup_realm = false
      dns_lookup_kdc = false
      ticket_lifetime = 24h
      renew_lifetime = 7d
      forwardable = true
      default_ccache_name = KEYRING:session:%{uid}
      kdc_timeout = 3000

    [realms]
      TDH.DEV = {
        kdc = bdcdev001
        kdc = bdcdev002
        admin_server = bdcdev001
        admin_server = bdcdev002
      }
      TDH = {
        kdc = bdc001
        kdc = bdc004
        admin_server = bdc001
        admin_server = bdc004
      }

    [domain_realm]
      bdcdev001 = TDH.DEV
      bdcdev002 = TDH.DEV
      bdc001 = TDH
      bdc004 = TDH
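
The `[realms]` and `[domain_realm]` sections are identical on both clusters; only `default_realm` differs. A hypothetical generator like the one below keeps the two copies from drifting apart. Its host lists mirror the files above. Any other host that runs a Kerberized service the peer must reach would need its own `[domain_realm]` line, as the `domain_realm` error in the troubleshooting section at the end illustrates.

```shell
# realm_block REALM HOST... : print a realm stanza listing each host as kdc and admin_server
realm_block() {
  local realm="$1" h
  shift
  printf '  %s = {\n' "$realm"
  for h in "$@"; do printf '    kdc = %s\n' "$h"; done
  for h in "$@"; do printf '    admin_server = %s\n' "$h"; done
  printf '  }\n'
}

# domain_map REALM HOST... : print one [domain_realm] line per host
domain_map() {
  local realm="$1" h
  shift
  for h in "$@"; do printf '  %s = %s\n' "$h" "$realm"; done
}

# trust_sections: hypothetical generator for the shared sections of both krb5.conf files
trust_sections() {
  printf '[realms]\n'
  realm_block TDH bdc001 bdc004
  realm_block TDH.DEV bdcdev001 bdcdev002
  printf '\n[domain_realm]\n'
  domain_map TDH.DEV bdcdev001 bdcdev002
  domain_map TDH bdc001 bdc004
}
```

The output (`trust_sections > /tmp/trust.conf`) is meant to be merged into each node's krb5.conf by hand, below its own `[logging]` and `[libdefaults]` sections.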

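Modify the hadoop.security.auth_to_local parameter

On both clusters, set hadoop.security.auth_to_local for the HDFS and YARN services. The rules strip the peer cluster's realm from one- and two-component principals (for example, hive@TDH.DEV becomes hive on the test cluster), while DEFAULT handles principals of the local realm:

1. Test cluster (bdc001, bdc003, bdc004)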

`RULE:[1:$1@$0](^.*@TDH\.DEV$)s/^(.*)@TDH\.DEV$/$1/g RULE:[2:$1@$0](^.*@TDH\.DEV$)s/^(.*)@TDH\.DEV$/$1/g DEFAULT`

2. Development cluster (bdcdev001, bdcdev002, bdcdev003)

`RULE:[1:$1@$0](^.*@TDH$)s/^(.*)@TDH$/$1/g RULE:[2:$1@$0](^.*@TDH$)s/^(.*)@TDH$/$1/g DEFAULT`

Note: restart the services after changing the parameter.
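
Both rule strings follow one pattern: the peer realm is substituted in, with its dots escaped for the regular expression. The hypothetical helper below produces them, which helps avoid typos when pasting the value into the management UI.

```shell
# auth_to_local_rules PEER_REALM
# Hypothetical helper: print the auth_to_local value that maps 1- and
# 2-component principals of PEER_REALM to their first component.
auth_to_local_rules() {
  local esc
  # Escape dots so that "TDH.DEV" is matched literally by the Java regex
  esc=$(printf '%s' "$1" | sed 's/\./\\./g')
  # Single quotes keep $0 and $1 literal; they belong to Hadoop's rule syntax
  printf 'RULE:[1:$1@$0](^.*@%s$)s/^(.*)@%s$/$1/g RULE:[2:$1@$0](^.*@%s$)s/^(.*)@%s$/$1/g DEFAULT\n' \
    "$esc" "$esc" "$esc" "$esc"
}
```

Usage: `auth_to_local_rules TDH.DEV` for the test cluster, `auth_to_local_rules TDH` for the development cluster, and `auth_to_local_rules TOS` for the TDH-to-TOS setup later in this article.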

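Add the dfs.namenode.kerberos.principal.pattern parameter

For the HDFS service of both clusters, add the parameter dfs.namenode.kerberos.principal.pattern with the value `*`. It is a client-side pattern that limits which NameNode principals a client accepts; `*` accepts any, including the peer cluster's NameNode principal in the other realm.

Note: restart the services after adding the parameter.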
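
If you maintain hdfs-site.xml by hand rather than through the management UI, the entry is an ordinary property. The hypothetical helper below prints it. Afterwards, `hdfs getconf -confKey dfs.namenode.kerberos.principal.pattern` on a client node should report `*`.

```shell
# xml_property NAME VALUE
# Hypothetical helper: print a Hadoop configuration <property> element.
# NAME and VALUE are inserted verbatim (no XML escaping), which is fine for "*".
xml_property() {
  printf '<property>\n  <name>%s</name>\n  <value>%s</value>\n</property>\n' "$1" "$2"
}
```

Usage: `xml_property dfs.namenode.kerberos.principal.pattern '*'`. Quote the asterisk so the shell does not expand it.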

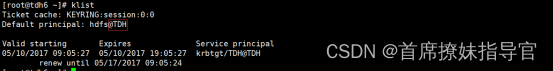

Testing

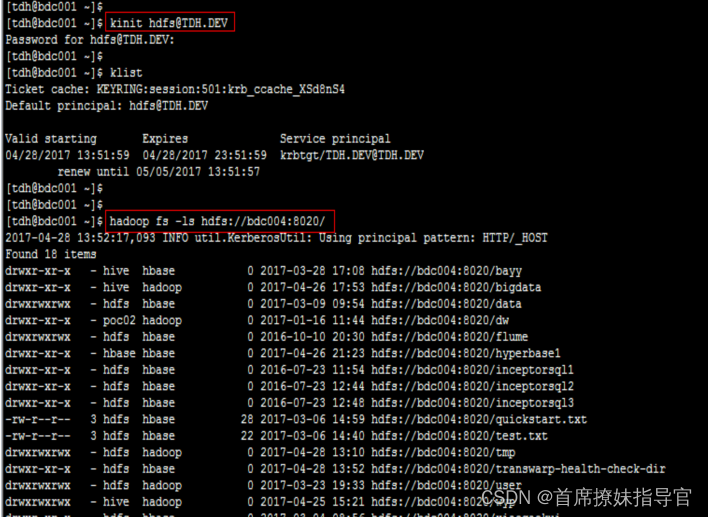

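1. On the test cluster: log in as the development cluster's hdfs user (hdfs@TDH.DEV), then access both the development cluster's HDFS and the local HDFS.

2. On the development cluster: log in as the test cluster's hdfs user (hdfs@TDH), then access both the test cluster's HDFS and the local HDFS.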
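
The two checks can be wrapped in a loop that lists the root of each NameNode and reports the result. This is a hypothetical sketch: `HADOOP_BIN` is an invented override (default `hadoop`) that allows a dry run. The NameNode addresses in the usage line are the ones from the distcp example in the next section (bdc004:8020 and bdcdev001:8020).

```shell
# check_access URI...
# Hypothetical helper: run "hadoop fs -ls URI/" for each URI with the current
# Kerberos ticket and print OK or FAIL; returns non-zero if any URI failed.
check_access() {
  local uri rc=0
  for uri in "$@"; do
    if "${HADOOP_BIN:-hadoop}" fs -ls "$uri/" >/dev/null 2>&1; then
      printf 'OK %s\n' "$uri"
    else
      printf 'FAIL %s\n' "$uri"
      rc=1
    fi
  done
  return "$rc"
}
```

Usage on a test-cluster node: `kinit hdfs@TDH.DEV && check_access hdfs://bdcdev001:8020 hdfs://bdc004:8020`.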

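Copying data between clusters with distcp

Copy /tmp/test/t1.txt from the test cluster to /tmp on the development cluster:

-- On a test-cluster server (for example bdc001):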

1. `kinit hive@TDH.DEV`

2. `hadoop distcp hdfs://bdc004:8020/tmp/test/t1.txt hdfs://bdcdev001:8020/tmp/`

Note: do not run distcp as the hdfs user (that is, do not kinit hdfs). The hdfs user is not allowed to submit jobs to YARN, so the copy would fail.
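
To avoid launching distcp with an hdfs ticket by mistake, a wrapper can inspect the ticket cache first. The helpers below are hypothetical. `current_principal` parses the `Default principal:` line that MIT `klist` prints.

```shell
# is_hdfs_principal PRINCIPAL: succeed for hdfs@REALM or hdfs/host@REALM
is_hdfs_principal() {
  case "$1" in
    hdfs@* | hdfs/*) return 0 ;;
    *) return 1 ;;
  esac
}

# current_principal: print the default principal of the ticket cache (MIT klist output)
current_principal() {
  klist 2>/dev/null | sed -n 's/^Default principal: //p'
}

# safe_distcp SRC DST: refuse to run distcp while holding an hdfs ticket
safe_distcp() {
  local p
  p=$(current_principal)
  if is_hdfs_principal "$p"; then
    echo "current ticket is $p; kinit as a non-hdfs user first" >&2
    return 1
  fi
  hadoop distcp "$1" "$2"
}
```

Usage: `kinit hive@TDH.DEV && safe_distcp hdfs://bdc004:8020/tmp/test/t1.txt hdfs://bdcdev001:8020/tmp/`.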

Miscellaneous

If accessing the peer cluster's HDFS fails, set the following variables to print detailed debug output:

export HADOOP_ROOT_LOGGER="DEBUG,console"

export HADOOP_OPTS="-Dsun.security.krb5.debug=true"

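TDH-to-TOS Cluster distcp Configuration Guide

- Overview

This guide describes how to set up Guardian mutual trust between a TDH cluster and one tenant of a TOS cluster. Users can then log in on either cluster and work with HDFS, and can use the distcp tool to import HDFS files from the TDH cluster into the TOS tenant's HDFS.

- Configure the mutual trust

- Check that the two clusters use different realms

As the figure above shows, the TDH cluster uses the realm TDH and the TOS midas tenant uses the realm TOS. The two clusters must use different realm names.

- TDH cluster configuration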

Generate a password; 26 characters or more is recommended.

Command: `` echo `date +%s | sha256sum | base64 | head -c 26` ``. The password generated in this walkthrough was: OWM2MDgwMDQ3MGY1OTI5Y2M0OG

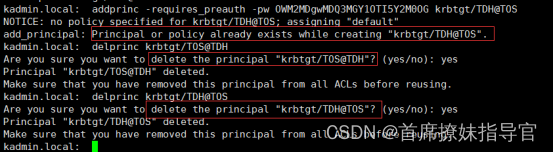
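
The command above derives the password from the current timestamp, so anyone who knows roughly when it was generated could reproduce it. A hedged alternative reads from /dev/urandom instead. `gen_password` is a hypothetical helper, and its default length of 32 is an arbitrary choice above the recommended 26.

```shell
# gen_password [LENGTH]
# Hypothetical helper: print a random alphanumeric password (default 32 characters).
gen_password() {
  # LC_ALL=C makes tr treat the input as raw bytes. tr is stopped by SIGPIPE
  # once head has enough characters; "|| true" ignores that status under pipefail.
  LC_ALL=C tr -dc 'A-Za-z0-9' < /dev/urandom 2>/dev/null | head -c "${1:-32}" || true
  echo
}
```

Usage: `PW=$(gen_password)`, then pass the same "$PW" to `-pw` in the addprinc commands on both KDCs.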

Add the TGTs

SSH to the kadmin server host, log in to kadmin, and add the TGTs:

>addprinc -requires_preauth -pw OWM2MDgwMDQ3MGY1OTI5Y2M0OG krbtgt/TOS@TDH

>addprinc -requires_preauth -pw OWM2MDgwMDQ3MGY1OTI5Y2M0OG krbtgt/TDH@TOS

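Configure core-site.xml

In the management UI, set the parameter below and configure the services. Then stop all services in the cluster and start them again: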

    <name>hadoop.security.auth_to_local</name>
    <value>RULE:[1:$1@$0](^.*@TOS$)s/^(.*)@TOS$/$1/g RULE:[2:$1@$0](^.*@TOS$)s/^(.*)@TOS$/$1/g DEFAULT</value>
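
Here is a simplified shell emulation of what these rules do to the principals in this setup. It only mirrors these two specific rules and is not Hadoop's rule engine. On a real node, `hadoop org.apache.hadoop.security.HadoopKerberosName distcp@TOS` should print the mapping that the cluster's actual configuration produces.

```shell
# short_name PRINCIPAL REALM
# Simplified emulation of the two RULE entries for one peer realm: a 1- or
# 2-component principal in REALM maps to its first component. Not Hadoop's engine.
short_name() {
  local principal="$1" realm="$2" name
  case "$principal" in
    *@"$realm") name="${principal%@*}" ;;
    *) return 1 ;;       # different realm: these two rules do not apply
  esac
  case "$name" in
    */*/*) return 1 ;;   # 3+ components: neither [1:...] nor [2:...] matches
  esac
  printf '%s\n' "${name%%/*}"
}
```

For example, `short_name distcp@TOS TOS` yields `distcp`, and `short_name hdfs/node1@TOS TOS` yields `hdfs`.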

Configure /etc/krb5.conf

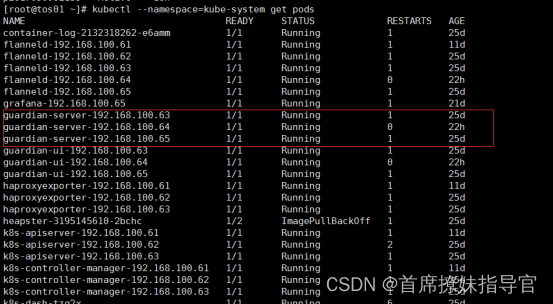

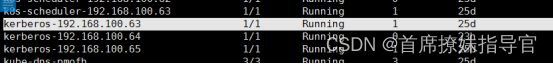

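Command: `kubectl --namespace=kube-system get pods`

Edit /etc/krb5.conf on every Guardian server in the TDH environment.

Note: changing the configuration through the web UI overwrites krb5.conf, so you must edit krb5.conf by hand again afterwards.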
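
Because a change made through the web UI regenerates krb5.conf, it helps to check afterwards that the manual entries survived. Below is a hypothetical checker. It assumes the realm stanza starts with `REALM = {` on one line, that `[domain_realm]` entries look like `host = REALM`, and that names contain no regex metacharacters other than dots.

```shell
# check_krb5_conf FILE REALM [HOST...]
# Hypothetical checker: print one line per missing realm stanza or missing
# "HOST = REALM" mapping; returns non-zero if anything is missing.
check_krb5_conf() {
  local file="$1" realm="$2" host rc=0
  shift 2
  grep -Eq "^[[:space:]]*${realm}[[:space:]]*=[[:space:]]*\{" "$file" \
    || { printf 'missing realm block: %s\n' "$realm"; rc=1; }
  for host in "$@"; do
    grep -Eq "^[[:space:]]*${host}[[:space:]]*=[[:space:]]*${realm}[[:space:]]*\$" "$file" \
      || { printf 'missing domain_realm: %s = %s\n' "$host" "$realm"; rc=1; }
  done
  return "$rc"
}
```

Usage: `check_krb5_conf /etc/krb5.conf TOS` on each TDH Guardian server, or `check_krb5_conf /etc/krb5.conf TDH node1` in the TOS containers (node1 is the hostname from the troubleshooting section below).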

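- TOS cluster configuration

This walkthrough uses cluster 61 of the TOS test environment, tenant midas.

Add the TGTs

Find the node that runs Kerberos and enter its container with docker exec.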


>addprinc -requires_preauth -pw OWM2MDgwMDQ3MGY1OTI5Y2M0OG krbtgt/TOS@TDH

>addprinc -requires_preauth -pw OWM2MDgwMDQ3MGY1OTI5Y2M0OG krbtgt/TDH@TOS

Configure /etc/krb5.conf

Three containers must be configured:

- the kerberos container

- the terminal container

- the YARN ResourceManager container

admin_server = 192.168.100.103

admin_server = 192.168.100.104
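
The same krb5.conf has to reach all three containers. Below is a hypothetical loop using `docker cp`, run on the node(s) hosting the containers. Take the container names or IDs from `docker ps`; they are placeholders here. Setting `DRY_RUN`, an invented variable, makes it only print the commands. Unless /etc/krb5.conf is backed by a volume, a file copied into a running container this way is lost when the pod is recreated.

```shell
# push_krb5_conf SRC CONTAINER...
# Hypothetical helper: copy SRC to /etc/krb5.conf in each container.
# With DRY_RUN set to a non-empty value, the docker commands are only printed.
push_krb5_conf() {
  local src="$1" c
  shift
  for c in "$@"; do
    # ${DRY_RUN:+echo} expands to "echo" only when DRY_RUN is non-empty
    ${DRY_RUN:+echo} docker cp "$src" "$c:/etc/krb5.conf"
  done
}
```

Usage: `DRY_RUN=1 push_krb5_conf ./krb5.conf <kerberos-container> <terminal-container> <rm-container>` to review, then rerun without `DRY_RUN`.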

- Verify the trust

- TDH verification

- Verify that /etc/krb5.conf is configured correctly

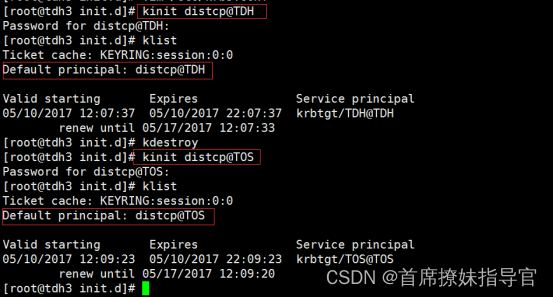

In the TDH environment, pick a user such as distcp; both `kinit distcp@TDH` and `kinit distcp@TOS` should succeed.


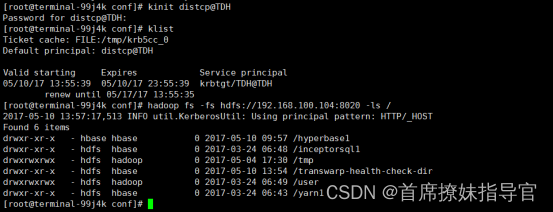

- TOS verification

- Verify that the distcp user can communicate with HDFS and that /etc/krb5.conf is correct

In the TOS environment, log in to the terminal container and run `kinit distcp@TDH`. Then check that both `hadoop fs -fs hdfs://192.168.100.104:8020 -ls /` and `hdfs dfs -ls /` work.

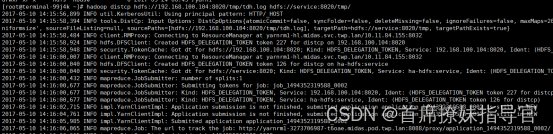

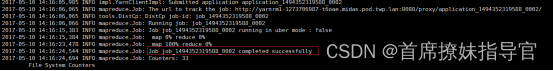

In the TOS environment, enter the terminal container, run `kinit distcp@TOS`, and then copy the data:

hadoop distcp hdfs://192.168.100.104:8020/tmp/out hdfs://service:8020/tmp

- Troubleshooting

- HDFS access fails across the trust

      Using builtin default etypes for default_tgs_enctypes
      default etypes for default_tgs_enctypes: 18 17 16 23 1 3.
      >>> CksumType: sun.security.krb5.internal.crypto.RsaMd5CksumType
      >>> EType: sun.security.krb5.internal.crypto.Aes256CtsHmacSha1EType
      >>> KrbKdcReq send: kdc=192.168.100.63 UDP:88, timeout=30000, number of retries =3, #bytes=555
      >>> KDCCommunication: kdc=192.168.100.63 UDP:88, timeout=30000,Attempt =1, #bytes=555
      >>> KrbKdcReq send: #bytes read=148
      >>> KdcAccessibility: remove 192.168.100.63
      >>> KDCRep: init() encoding tag is 126 req type is 13
      >>>KRBError:
      cTime is Mon Feb 26 07:40:27 CST 1996 825291627000
      sTime is Tue May 09 13:42:06 CST 2017 1494308526000
      suSec is 871200
      error code is 7
      error Message is Server not found in Kerberos database
      crealm is TDH
      cname is distcp
      realm is TOS
      sname is hdfs/node1
      msgType is 30
      KrbException: Server not found in Kerberos database (7) - LOOKING_UP_SERVER

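Cause:

The sname here is hdfs/node1, a hostname rather than an IP address, so node1 = TDH must be added to [domain_realm] for it to resolve to the TDH realm.

- Misconfiguration: the krb5.conf used by the YARN ResourceManager is wrong. In the log below, the ResourceManager's own principal (yarn/tos@TOS) fails to set up a connection to the NameNode at 192.168.100.51:8020.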

      2017-05-09 15:31:24,962 INFO impl.YarnClientImpl: Application submission is not finished, submitted application application_1493971181007_0003 is still in NEW
      2017-05-09 15:31:26,997 INFO impl.YarnClientImpl: Application submission is not finished, submitted application application_1493971181007_0003 is still in NEW
      2017-05-09 15:31:28,425 INFO impl.YarnClientImpl: Submitted application application_1493971181007_0003
      2017-05-09 15:31:28,427 INFO mapreduce.JobSubmitter: Cleaning up the staging area /user/yarn/user/distcp/.staging/job_1493971181007_0003
      2017-05-09 15:31:28,525 ERROR tools.DistCp: Exception encountered
      java.io.IOException: Failed to run job : Failed on local exception: java.io.IOException: Couldn't setup connection for yarn/tos@TOS to 192.168.100.51/192.168.100.51:8020; Host Details : local host is: "yarnrm1-2625464666-gfd1n.midas.pod.twp.lan/10.11.66.93"; destination host is: "192.168.100.51":8020;
          at org.apache.hadoop.mapred.YARNRunner.submitJob(YARNRunner.java:300)
          at org.apache.hadoop.mapreduce.JobSubmitter.submitJobInternal(JobSubmitter.java:432)
          at org.apache.hadoop.mapreduce.Job$10.run(Job.java:1285)
          at org.apache.hadoop.mapreduce.Job$10.run(Job.java:1282)
          at java.security.AccessController.doPrivileged(Native Method)
          at javax.security.auth.Subject.doAs(Subject.java:415)
          at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1855)
          at org.apache.hadoop.mapreduce.Job.submit(Job.java:1282)
          at org.apache.hadoop.tools.DistCp.execute(DistCp.java:162)
          at org.apache.hadoop.tools.DistCp.run(DistCp.java:121)
          at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:70)
          at org.apache.hadoop.tools.DistCp.main(DistCp.java:401)

- auth_to_local is not configured or has not taken effect. In this case the NameNode log contains "Illegal principal name":

      2017-05-09 15:06:30,146 INFO org.apache.hadoop.hdfs.server.namenode.FSNamesystem: there are no corrupt file blocks.
      2017-05-09 15:06:30,161 INFO org.apache.hadoop.ipc.Server: Socket Reader #1 for port 8020: readAndProcess from client 192.168.100.62 threw exception [java.lang.IllegalArgumentException: Illegal principal name distcp@TOS]
      java.lang.IllegalArgumentException: Illegal principal name distcp@TOS
          at org.apache.hadoop.security.User.<init>(User.java:50)
          at org.apache.hadoop.security.User.<init>(User.java:43)
          at org.apache.hadoop.security.UserGroupInformation.createRemoteUser(UserGroupInformation.java:1405)
          at org.apache.hadoop.ipc.Server$Connection.getAuthorizedUgi(Server.java:1218)
          at org.apache.hadoop.ipc.Server$Connection.saslProcess(Server.java:1289)
          at org.apache.hadoop.ipc.Server$Connection.saslReadAndProcess(Server.java:1238)
          at org.apache.hadoop.ipc.Server$Connection.processRpcOutOfBandRequest(Server.java:1878)
          at org.apache.hadoop.ipc.Server$Connection.processOneRpc(Server.java:1755)
          at org.apache.hadoop.ipc.Server$Connection.readAndProcess(Server.java:1519)
          at org.apache.hadoop.ipc.Server$Listener.doRead(Server.java:750)
          at org.apache.hadoop.ipc.Server$Listener$Reader.doRunLoop(Server.java:624)
          at org.apache.hadoop.ipc.Server$Listener$Reader.run(Server.java:595)
      Caused by: org.apache.hadoop.security.authentication.util.KerberosName$NoMatchingRule: No rules applied to distcp@TOS
          at org.apache.hadoop.security.authentication.util.KerberosName.getShortName(KerberosName.java:389)
          at org.apache.hadoop.security.User.<init>(User.java:48)
          ... 11 more
