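Preface: even when following the official tutorial there are still some pitfalls, so I've summarized the whole process here.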

I. Environment preparation

CentOS 7

Hadoop 3.2.2

JDK 1.8

rsync (installed with yum in step 1 below)

ssh: already included in a minimal CentOS install, so it does not need to be installed

II. Installation

1. First, install rsync

yum install rsync -y

2. Stop the firewall

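systemctl stop firewalld.service

This stops firewalld only until the next reboot; to keep it off permanently, also run systemctl disable firewalld.service.

3. Hadoop download location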

Download it from the Apache directory listing "Index of /apache/hadoop/common"
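Before extracting, it is worth checking the download. Apache publishes a .sha512 checksum file next to each Hadoop release archive; copy the hash from it and compare. A minimal sketch using sha512sum from GNU coreutils (present on CentOS 7); verify_sha512 is a hypothetical helper, and the hash is passed in by hand because the layout of .sha512 files may differ between releases:

```bash
# verify_sha512 FILE EXPECTED_HASH
# Hypothetical helper: prints OK and returns 0 when the SHA-512 digest of FILE
# equals EXPECTED_HASH. Case is ignored, since published hashes may be uppercase.
verify_sha512() {
  local file expected actual
  file="$1"
  expected=$(printf '%s' "$2" | tr 'A-F' 'a-f')
  # sha512sum prints "<hash>  <file>"; keep only the hash field
  actual=$(sha512sum "$file" | cut -d ' ' -f 1)
  if [ "$actual" = "$expected" ]; then
    echo "OK: $file"
    return 0
  fi
  echo "checksum MISMATCH: $file" >&2
  return 1
}
```

Usage: verify_sha512 hadoop-3.2.2.tar.gz '<hash copied from the .sha512 file>', and only continue with tar if it prints OK.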

4. After downloading, extract the archive and move the directory

tar -zxvf hadoop-3.2.2.tar.gz

mv hadoop-3.2.2 /usr/local/hadoop

5. Configure the Java environment in Hadoop's configuration file etc/hadoop/hadoop-env.sh by appending the following line to the end of the file:

cd /usr/local/hadoop

vim etc/hadoop/hadoop-env.sh

export JAVA_HOME=/usr/local/jdk1.8

(/usr/local/jdk1.8 is where the JDK is installed on this machine; use your own JDK path.)

Save and quit with :wq, then check that Hadoop runs:

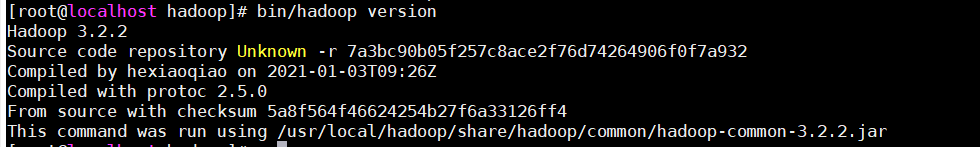

bin/hadoop version
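Step 5 edits hadoop-env.sh interactively in vim. If you repeat the setup on several machines, the same edit can be scripted so that running it twice does not add a duplicate line. A minimal sketch; set_java_home is a hypothetical helper name, and /usr/local/jdk1.8 is the JDK location used in this article, so adjust it to your own install:

```bash
# set_java_home ENV_FILE JAVA_DIR
# Hypothetical helper: appends "export JAVA_HOME=JAVA_DIR" to ENV_FILE
# (e.g. etc/hadoop/hadoop-env.sh) unless an uncommented JAVA_HOME export
# already exists. The commented "# export JAVA_HOME=" template line does not count.
set_java_home() {
  local env_file java_dir
  env_file="$1"
  java_dir="$2"
  if [ ! -x "$java_dir/bin/java" ]; then
    echo "warning: $java_dir/bin/java not found" >&2
  fi
  if grep -Eq '^[[:space:]]*export[[:space:]]+JAVA_HOME=' "$env_file"; then
    echo "JAVA_HOME already set in $env_file"
    return 0
  fi
  printf 'export JAVA_HOME=%s\n' "$java_dir" >> "$env_file"
}
```

Usage, from /usr/local/hadoop: set_java_home etc/hadoop/hadoop-env.sh /usr/local/jdk1.8 && bin/hadoop version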

Hadoop run mode 1: standalone (local) mode

1. Create the input directory

mkdir input

2. Copy all of Hadoop's XML configuration files into input to serve as test data

cp etc/hadoop/*.xml input

ls input

capacity-scheduler.xml hadoop-policy.xml httpfs-site.xml kms-site.xml yarn-site.xml

core-site.xml hdfs-site.xml kms-acls.xml mapred-site.xml

3. Run Hadoop: search every file under input for matches of the regular expression 'dfs[a-z.]+' and write the results to the output directory

bin/hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-3.2.2.jar grep input output 'dfs[a-z.]+'

4. View the output

ll output

total 4

-rw-r--r--. 1 root root 11 Jan 25 14:55 part-r-00000

-rw-r--r--. 1 root root 0 Jan 25 14:55 _SUCCESS

cat output/part-r-00000

1 dfsadmin
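What the grep example computes: it finds every substring matching the regular expression, counts each distinct match, and lists them by count, highest first. The sketch below approximates that locally with grep, sort, uniq and awk. It is not how Hadoop does it (Hadoop runs the search as MapReduce jobs with Java regular expressions, which behave like grep -E for a simple pattern such as this one), and count_matches is a hypothetical name:

```bash
# count_matches DIR REGEX
# Rough local approximation of the grep example: collect every match of REGEX
# in DIR/*.xml, count each distinct match, and print "count<TAB>match"
# sorted by count (highest first), ties broken alphabetically.
count_matches() {
  local dir regex
  dir="$1"
  regex="$2"
  cat "$dir"/*.xml \
    | grep -oE "$regex" \
    | sort | uniq -c \
    | sort -k1,1nr -k2,2 \
    | awk '{ printf "%s\t%s\n", $1, $2 }'
}
```

Run it before step 5 deletes input, e.g. count_matches input 'dfs[a-z.]+', and compare the result with output/part-r-00000.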

5. Delete the input and output directories

rm -vfr input output

Hadoop run mode 2: pseudo-distributed mode

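1. Edit etc/hadoop/core-site.xml (192.168.1.150 is this machine's IP address; replace it with your own host's address):

vim etc/hadoop/core-site.xml

<configuration>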

<property>

<name>fs.defaultFS</name>

<value>hdfs://192.168.1.150:9000</value>

</property>

</configuration>

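2. Edit etc/hadoop/hdfs-site.xml and set the replication factor to 1, since there is only one DataNode:

vim etc/hadoop/hdfs-site.xml

<configuration>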

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

</configuration>
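The two configuration edits above can also be written non-interactively. A minimal sketch, assuming it is run from /usr/local/hadoop; write_pseudo_config is a hypothetical helper, and because it overwrites both files, any other properties you have added to them will be lost:

```bash
# write_pseudo_config CONF_DIR NAMENODE_HOST
# Hypothetical helper: overwrites CONF_DIR/core-site.xml and CONF_DIR/hdfs-site.xml
# with only the two settings used in this article (fs.defaultFS, dfs.replication).
write_pseudo_config() {
  local conf_dir host
  conf_dir="$1"
  host="$2"
  # Unquoted EOF: ${host} is expanded into the file
  cat > "$conf_dir/core-site.xml" <<EOF
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://${host}:9000</value>
  </property>
</configuration>
EOF
  # Quoted 'EOF': written verbatim, no expansion needed
  cat > "$conf_dir/hdfs-site.xml" <<'EOF'
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <property>
    <name>dfs.replication</name>
    <value>1</value>
  </property>
</configuration>
EOF
}
```

Usage: write_pseudo_config etc/hadoop 192.168.1.150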

3. Check whether ssh can log in to localhost without a password

ssh localhost

The authenticity of host 'localhost (::1)' can't be established.

ECDSA key fingerprint is SHA256:MJxZUIDNbbnlfxCU+l2usvsIsbc6/NTJ06j/TO4g8G0.

ECDSA key fingerprint is MD5:d1:8f:94:dd:80:e2:cf:6b:a7:45:74:e3:6b:2f:f2:0a.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'localhost' (ECDSA) to the list of known hosts.

root@localhost's password:

Last login: Fri Jan 25 14:30:29 2019 from 192.168.1.1

Explanation: the password prompt above means passwordless login is not set up yet.

4. Set up passwordless ssh login

ssh-keygen -t rsa -P '' -f ~/.ssh/id_rsa

cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

chmod 0600 ~/.ssh/authorized_keys

5. Check again whether passwordless login works

ssh localhost

Last login: Fri Jan 25 15:04:51 2019 from localhost

Explanation: no password was requested, so passwordless login now works.
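If ssh localhost still asks for a password after step 4, file permissions are a common cause: with sshd's default StrictModes setting, keys in authorized_keys are ignored when that file or ~/.ssh is writable by group or others (the home directory is subject to the same check but is not examined here). A small check, as a sketch; ssh_perms_ok is a hypothetical helper, and stat -c is the GNU coreutils form available on CentOS 7:

```bash
# ssh_perms_ok SSH_DIR
# Hypothetical helper: returns 0 only if SSH_DIR and SSH_DIR/authorized_keys
# both exist and neither has the group-write or other-write bit set.
ssh_perms_ok() {
  local dir path mode
  dir="$1"
  for path in "$dir" "$dir/authorized_keys"; do
    if [ ! -e "$path" ]; then
      echo "missing: $path" >&2
      return 1
    fi
    mode=$(stat -c %a "$path")   # octal mode, e.g. 700 or 600
    # 022 = group-write | other-write; a leading 0 makes the shell read it as octal
    if [ $(( 0$mode & 022 )) -ne 0 ]; then
      echo "too permissive ($mode): $path" >&2
      return 1
    fi
  done
  return 0
}
```

Usage: ssh_perms_ok ~/.ssh && ssh -o BatchMode=yes localhost true && echo "passwordless ssh works". BatchMode=yes makes ssh fail instead of prompting for a password or for confirmation of an unknown host key, so the second command succeeds only when key-based login works for a host already in known_hosts.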

6. Format the file system

bin/hdfs namenode -format

2019-01-25 15:09:23,273 INFO namenode.NameNode: STARTUP_MSG:

/************************************************************

STARTUP_MSG: Starting NameNode

STARTUP_MSG: host = localhost/127.0.0.1

STARTUP_MSG: args = [-format]

STARTUP_MSG: version = 3.2.0

…

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at localhost/127.0.0.1

************************************************************/
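Format the NameNode only once. Running -format again creates new, empty metadata with a new cluster ID, and a DataNode that still holds data from the earlier format will then typically refuse to register (its log reports incompatible clusterIDs). A sketch of a guard, assuming the default storage location: when dfs.namenode.name.dir is not set it is ${hadoop.tmp.dir}/dfs/name, and hadoop.tmp.dir defaults to /tmp/hadoop-${user.name}, i.e. /tmp/hadoop-root/dfs/name for root. format_once is a hypothetical helper:

```bash
# format_once NAME_DIR [COMMAND...]
# Hypothetical guard: runs COMMAND (default: bin/hdfs namenode -format) only if
# NAME_DIR has no current/VERSION file, i.e. it has never been formatted.
format_once() {
  local name_dir
  name_dir="$1"
  shift
  if [ -f "$name_dir/current/VERSION" ]; then
    echo "already formatted: $name_dir (skipping)"
    return 0
  fi
  if [ "$#" -eq 0 ]; then
    set -- bin/hdfs namenode -format   # relative path: run from /usr/local/hadoop
  fi
  "$@"
}
```

Usage, from /usr/local/hadoop: format_once /tmp/hadoop-root/dfs/name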

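7. Edit sbin/start-dfs.sh and sbin/stop-dfs.sh and add the following lines at the top of each file (below the #! line). Hadoop 3 refuses to start the HDFS daemons as root unless these user variables are set.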

vim sbin/start-dfs.sh

vim sbin/stop-dfs.sh

HDFS_DATANODE_USER=root

HADOOP_SECURE_DN_USER=root

HDFS_NAMENODE_USER=root

HDFS_SECONDARYNAMENODE_USER=root
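Instead of editing both scripts in vim, the four lines can be inserted with a small script. A minimal sketch; add_hdfs_users is a hypothetical helper that puts the lines directly after the #! line and skips a file that already contains them. It rewrites the file with cat > rather than mv so the script keeps its owner and executable bit:

```bash
# add_hdfs_users SCRIPT
# Hypothetical helper: inserts the four user variables from step 7 after
# line 1 of SCRIPT, unless HDFS_NAMENODE_USER is already set there.
add_hdfs_users() {
  local script tmp
  script="$1"
  if grep -q '^HDFS_NAMENODE_USER=' "$script"; then
    echo "already configured: $script"
    return 0
  fi
  tmp=$(mktemp)
  {
    head -n 1 "$script"          # keep the #! line first
    printf '%s\n' \
      'HDFS_DATANODE_USER=root' \
      'HADOOP_SECURE_DN_USER=root' \
      'HDFS_NAMENODE_USER=root' \
      'HDFS_SECONDARYNAMENODE_USER=root'
    tail -n +2 "$script"         # the rest of the original script
  } > "$tmp"
  cat "$tmp" > "$script"         # overwrite contents, keep permissions
  rm -f "$tmp"
}
```

Usage, from /usr/local/hadoop: for f in sbin/start-dfs.sh sbin/stop-dfs.sh; do add_hdfs_users "$f"; done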

8. Start the HDFS services

sbin/start-dfs.sh

WARNING: HADOOP_SECURE_DN_USER has been replaced by HDFS_DATANODE_SECURE_USER. Using value of HADOOP_SECURE_DN_USER.

Starting namenodes on [localhost]

Last login: Fri Jan 25 15:07:02 CST 2019 from localhost on pts/1

Starting datanodes

Last login: Fri Jan 25 15:14:50 CST 2019 on pts/0

Starting secondary namenodes [localhost.localdomain]

Last login: Fri Jan 25 15:14:53 CST 2019 on pts/0

localhost.localdomain: Warning: Permanently added 'localhost.localdomain' (ECDSA) to the list of known hosts.

9. Open the NameNode web UI in a browser (in Hadoop 3 it listens on port 9870 by default)

http://192.168.1.150:9870

You should see the Hadoop overview page.
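The NameNode can take a few seconds after start-dfs.sh returns before its web UI answers. To check from a script rather than a browser, here is a sketch that polls the port; wait_for_port is a hypothetical helper, and it relies on bash's /dev/tcp pseudo-device and the coreutils timeout command:

```bash
# wait_for_port HOST PORT [TRIES]
# Hypothetical helper: attempts a TCP connection to HOST:PORT about once per
# second, up to TRIES times (default 30). Returns 0 on the first success.
wait_for_port() {
  local host port tries i
  host="$1"
  port="$2"
  tries="${3:-30}"
  i=0
  while [ "$i" -lt "$tries" ]; do
    # /dev/tcp is a bash feature, so bash is invoked explicitly for the probe
    if timeout 2 bash -c "exec 3<>/dev/tcp/$host/$port" 2>/dev/null; then
      return 0
    fi
    i=$((i + 1))
    sleep 1
  done
  echo "no response from $host:$port after $tries attempts" >&2
  return 1
}
```

Usage: wait_for_port 192.168.1.150 9870 && echo "NameNode web UI is up"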

10. Test Hadoop's search (grep) example

First, create the user's home directory in HDFS

bin/hdfs dfs -mkdir /user

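bin/hdfs dfs -mkdir /user/root

(bin/hdfs dfs -mkdir -p /user/root creates both levels in one command.)

List the files in the current user's HDFS directory: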

bin/hdfs dfs -ls

Nothing is listed yet.

Prepare the test data (copy all XML files under etc/hadoop into a local input directory)

mkdir input

cp etc/hadoop/*.xml input

ls input

capacity-scheduler.xml hadoop-policy.xml httpfs-site.xml kms-site.xml yarn-site.xml

core-site.xml hdfs-site.xml kms-acls.xml mapred-site.xml

Upload the local input directory to HDFS under the name input1

bin/hdfs dfs -put input input1

List the test files now in HDFS:

bin/hdfs dfs -ls

Found 1 items

drwxr-xr-x - root supergroup 0 2019-01-25 15:24 input1

bin/hdfs dfs -ls input1

Found 9 items

-rw-r--r-- 1 root supergroup 8260 2019-01-25 15:24 input1/capacity-scheduler.xml

-rw-r--r-- 1 root supergroup 884 2019-01-25 15:24 input1/core-site.xml

-rw-r--r-- 1 root supergroup 11392 2019-01-25 15:24 input1/hadoop-policy.xml

-rw-r--r-- 1 root supergroup 868 2019-01-25 15:24 input1/hdfs-site.xml

-rw-r--r-- 1 root supergroup 620 2019-01-25 15:24 input1/httpfs-site.xml

-rw-r--r-- 1 root supergroup 3518 2019-01-25 15:24 input1/kms-acls.xml

-rw-r--r-- 1 root supergroup 682 2019-01-25 15:24 input1/kms-site.xml

-rw-r--r-- 1 root supergroup 758 2019-01-25 15:24 input1/mapred-site.xml

-rw-r--r-- 1 root supergroup 690 2019-01-25 15:24 input1/yarn-site.xml

Run Hadoop's grep example over every file in input1, matching 'dfs[a-z.]+', and write the results to output in HDFS

bin/hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-3.2.2.jar grep input1 output 'dfs[a-z.]+'

List the output directory Hadoop generated:

bin/hdfs dfs -ls

Found 2 items

drwxr-xr-x - root supergroup 0 2019-01-25 15:24 input1

drwxr-xr-x - root supergroup 0 2019-01-25 15:26 output

bin/hdfs dfs -ls output

Found 2 items

-rw-r--r-- 1 root supergroup 0 2019-01-25 15:26 output/_SUCCESS

-rw-r--r-- 1 root supergroup 29 2019-01-25 15:26 output/part-r-00000

bin/hdfs dfs -cat output/part-r-00000

1 dfsadmin

1 dfs.replication

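These are the search results. Compared with standalone mode there is an extra match, dfs.replication, because this time the input includes the edited hdfs-site.xml.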

11. When you are done, stop the pseudo-distributed Hadoop

sbin/stop-dfs.sh

WARNING: HADOOP_SECURE_DN_USER has been replaced by HDFS_DATANODE_SECURE_USER. Using value of HADOOP_SECURE_DN_USER.

Stopping namenodes on [localhost]

Last login: Fri Jan 25 15:14:57 CST 2019 on pts/0

Stopping datanodes

Last login: Fri Jan 25 15:30:53 CST 2019 on pts/0

Stopping secondary namenodes [localhost.localdomain]

Last login: Fri Jan 25 15:30:54 CST 2019 on pts/0
