Contents

- Hadoop

- Hive

- 1. Cannot find hadoop installation: $HADOOP_HOME or $HADOOP_PREFIX must be set or hadoop must be in the path

- 2. Unable to instantiate org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient

- 3. MetaException(message:Version information not found in metastore. )

- 4. Could not create ServerSocket on address 0.0.0.0/0.0.0.0:9083.

- Unresolved

Hadoop

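1. Datanode denied communication with namenode because hostname cannot be resolved

The DataNode daemon process is running, but the HDFS web UI shows no live DataNodes.

The DataNode log contains the following error: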

2017-06-21 17:44:59,513 ERROR org.apache.hadoop.hdfs.server.datanode.DataNode: Initialization failed for Block pool BP-1394689615-10.85.123.43-1498038283287 (Datanode Uuid null) service to /10.85.123.43:9000 Datanode denied communication with namenode because hostname cannot be resolved (ip=10.85.123.44, hostname=10.85.123.44): DatanodeRegistration(0.0.0.0:50010, datanodeUuid=e086ba2d-fe65-4ba7-a6c2-a4829ac9e708, infoPort=50075, infoSecurePort=0, ipcPort=50020, storageInfo=lv=-56;cid=CID-9bfeb191-6823-4f9e-9daa-e31c797e70df;nsid=523094968;c=0)

at org.apache.hadoop.hdfs.server.blockmanagement.DatanodeManager.registerDatanode(DatanodeManager.java:873)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.registerDatanode(FSNamesystem.java:4529)

at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.registerDatanode(NameNodeRpcServer.java:1286)

at org.apache.hadoop.hdfs.protocolPB.DatanodeProtocolServerSideTranslatorPB.registerDatanode(DatanodeProtocolServerSideTranslatorPB.java:96)

at org.apache.hadoop.hdfs.protocol.proto.DatanodeProtocolProtos$DatanodeProtocolService$2.callBlockingMethod(DatanodeProtocolProtos.java:28752)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:616)

at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:982)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2049)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2045)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1698)

at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2043)

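1.1 Cause

Hadoop was configured with IP addresses instead of hostnames. When a DataNode registers, the NameNode tries to resolve its IP to a hostname; the lookup fails (the log shows hostname=10.85.123.44, which is just the IP), so the registration is denied.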

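1.2 Solution

Add the following to hdfs-site.xml on the NameNode and restart HDFS. It disables the hostname check at DataNode registration:

<property>
  <name>dfs.namenode.datanode.registration.ip-hostname-check</name>
  <value>false</value>
</property>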
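An alternative that keeps the check enabled is to make every DataNode IP resolvable on the NameNode, for example through /etc/hosts. The sketch below is not part of the original setup: the function name `has_hosts_entry` and the hostname `slave1` are hypothetical, and it only inspects a hosts-format file, not DNS.

```shell
#!/bin/sh
# Return 0 if the hosts-format file ($2, default /etc/hosts) has a
# non-comment line whose first field is the IP in $1, followed by at
# least one hostname. Hypothetical helper, for illustration only.
has_hosts_entry() {
  ip="$1"
  hosts_file="${2:-/etc/hosts}"
  awk -v ip="$ip" '
    { sub(/#.*/, "") }                  # strip comments
    $1 == ip && NF >= 2 { found = 1 }   # IP plus at least one name
    END { exit(found ? 0 : 1) }
  ' "$hosts_file"
}

# Example: the DataNode IP taken from the log above.
if has_hosts_entry 10.85.123.44; then
  echo "10.85.123.44 has a hosts entry"
else
  echo "no hosts entry for 10.85.123.44; add a line such as: 10.85.123.44 slave1"
fi
```

Run it on the NameNode host; if the entry is missing, adding it there (and using hostnames in the Hadoop configuration) should let the registration check pass without the property above.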


Hive

1. Cannot find hadoop installation: $HADOOP_HOME or $HADOOP_PREFIX must be set or hadoop must be in the path

Hive version: 2.1.1

Starting Hive fails with the following error:

Cannot find hadoop installation: $HADOOP_HOME or $HADOOP_PREFIX must be set or hadoop must be in the path

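1.1 Solution

First check whether hive-env.sh exists.

If it does not, change to the conf directory under the Hive installation directory and run:

cp hive-env.sh.template hive-env.sh

Then add the following to hive-env.sh:

# HADOOP_DIR is a placeholder for the Hadoop installation directory

export HADOOP_HOME=HADOOP_DIR

Then run: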

source hive-env.sh
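To confirm that the value written to hive-env.sh actually points at a Hadoop installation, a quick check like the one below can help. It is a minimal sketch: the function name `is_hadoop_home` is hypothetical, and it only assumes that a Hadoop installation contains an executable bin/hadoop launcher.

```shell
#!/bin/sh
# Return 0 if the directory in $1 looks like a Hadoop installation,
# i.e. it is non-empty and contains an executable bin/hadoop.
# Hypothetical helper, for illustration only.
is_hadoop_home() {
  [ -n "$1" ] && [ -x "$1/bin/hadoop" ]
}

if is_hadoop_home "${HADOOP_HOME:-}"; then
  echo "HADOOP_HOME looks valid: $HADOOP_HOME"
else
  echo "HADOOP_HOME is unset or does not contain bin/hadoop" >&2
fi
```

If the check fails after sourcing hive-env.sh, the most likely culprit is the HADOOP_DIR placeholder not having been replaced with a real path.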

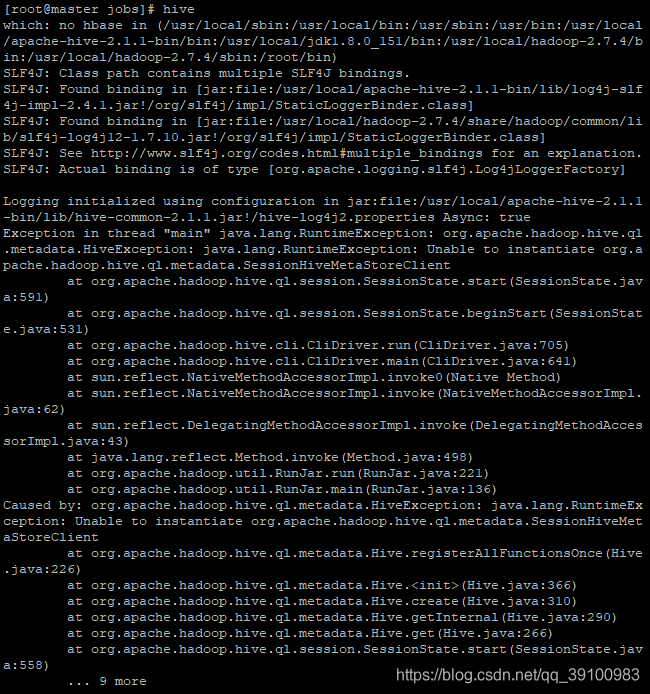

2. Unable to instantiate org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient

Entering Hive fails with the message shown in the heading.

2.1 Solution

Start the Hive metastore service in the background:

hive --service metastore &

2.2 References

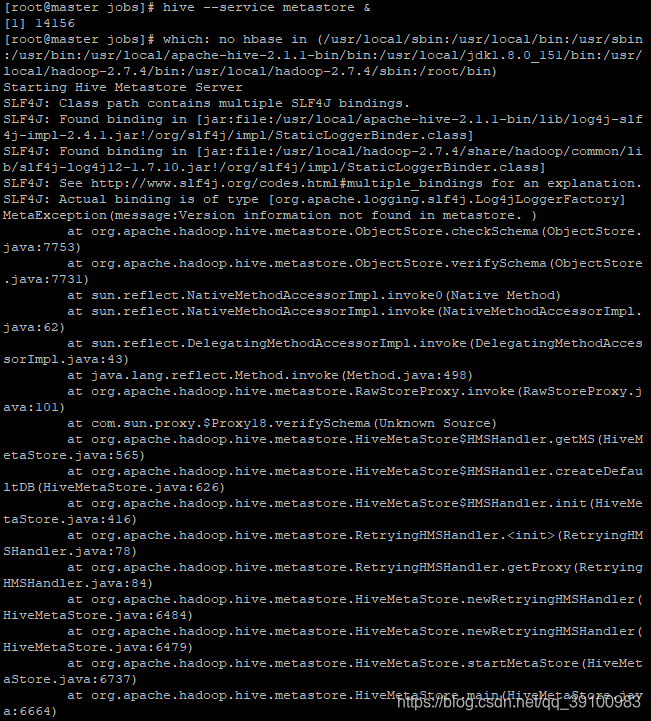

3. MetaException(message:Version information not found in metastore. )

Running the following command on the server throws the exception:

hive --service metastore &

3.1 Solution

Add the following to hive-site.xml on the Hive server:

<property>
  <name>hive.metastore.schema.verification.record.version</name>
  <value>false</value>
</property>

Note: use this when setting hive.metastore.schema.verification to false has no effect.
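This error usually means the metastore database has no schema version recorded, so another option, not used in the original notes, is to initialize the schema with Hive's schematool instead of relaxing verification. The sketch below is hedged: the wrapper name `init_metastore_schema` is hypothetical, and `mysql` as the database type is an assumption about this setup.

```shell
#!/bin/sh
# Initialize the metastore schema with Hive's schematool.
# $1 is the backing database type; "mysql" is an assumption here.
# Hypothetical wrapper, for illustration only.
init_metastore_schema() {
  db_type="${1:-mysql}"
  if ! command -v schematool >/dev/null 2>&1; then
    echo "schematool not found; add \$HIVE_HOME/bin to PATH" >&2
    return 127
  fi
  schematool -dbType "$db_type" -initSchema
}

# Usage (run once, against an empty metastore database):
#   init_metastore_schema mysql
```

Running -initSchema against a database that already holds metastore tables is likely to fail, so this is meant for a fresh metastore only.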

3.2 References

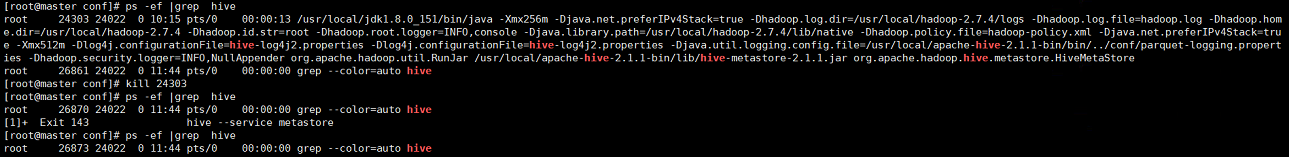

4. Could not create ServerSocket on address 0.0.0.0/0.0.0.0:9083.

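Running hive --service metastore & on the server fails with this error.

4.1 Solution

The cause is that a Hive process (typically an earlier metastore instance) is already running and holds port 9083. Find it and kill it:

ps -ef | grep hive

kill <PID>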
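The lookup can be narrowed to metastore processes only, so that unrelated Hive processes (and the grep itself) are not matched. This is a minimal sketch: the function name `metastore_pids` is hypothetical, and it assumes the metastore's command line contains the class name org.apache.hadoop.hive.metastore.HiveMetaStore.

```shell
#!/bin/sh
# Read `ps -ef`-style lines on stdin and print the PID (second column)
# of every line whose command mentions the HiveMetaStore main class.
# Hypothetical helper, for illustration only.
metastore_pids() {
  awk '/org\.apache\.hadoop\.hive\.metastore\.HiveMetaStore/ { print $2 }'
}

pids=$(ps -ef | metastore_pids)
if [ -n "$pids" ]; then
  echo "metastore already running, PIDs: $pids"
  # kill $pids   # uncomment to stop them before restarting the metastore
else
  echo "no running metastore process found"
fi
```

The kill is left commented out on purpose, so the PIDs can be reviewed before anything is stopped.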


Unresolved

1. insert overwrite

Hive reports the following error when executing an insert overwrite statement:

Application application_1558724705046_0002 failed 2 times due to Error launching appattempt_1558724705046_0002_000002. Got exception: java.net.ConnectException: Call From master.novalocal/127.0.0.1 to localhost:44969 failed on connection exception: java.net.ConnectException: Connection refused; For more details see: http://wiki.apache.org/hadoop/ConnectionRefused

at sun.reflect.GeneratedConstructorAccessor47.newInstance(Unknown Source)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at org.apache.hadoop.net.NetUtils.wrapWithMessage(NetUtils.java:792)

at org.apache.hadoop.net.NetUtils.wrapException(NetUtils.java:732)

at org.apache.hadoop.ipc.Client.call(Client.java:1480)

at org.apache.hadoop.ipc.Client.call(Client.java:1413)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:229)

at com.sun.proxy.$Proxy83.startContainers(Unknown Source)

at org.apache.hadoop.yarn.api.impl.pb.client.ContainerManagementProtocolPBClientImpl.startContainers(ContainerManagementProtocolPBClientImpl.java:96)

at sun.reflect.GeneratedMethodAccessor14.invoke(Unknown Source)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invokeMethod(RetryInvocationHandler.java:191)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invoke(RetryInvocationHandler.java:102)

at com.sun.proxy.$Proxy84.startContainers(Unknown Source)

at org.apache.hadoop.yarn.server.resourcemanager.amlauncher.AMLauncher.launch(AMLauncher.java:119)

at org.apache.hadoop.yarn.server.resourcemanager.amlauncher.AMLauncher.run(AMLauncher.java:250)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

Caused by: java.net.ConnectException: Connection refused

at sun.nio.ch.SocketChannelImpl.checkConnect(Native Method)

at sun.nio.ch.SocketChannelImpl.finishConnect(SocketChannelImpl.java:717)

at org.apache.hadoop.net.SocketIOWithTimeout.connect(SocketIOWithTimeout.java:206)

at org.apache.hadoop.net.NetUtils.connect(NetUtils.java:531)

at org.apache.hadoop.net.NetUtils.connect(NetUtils.java:495)

at org.apache.hadoop.ipc.Client$Connection.setupConnection(Client.java:615)

at org.apache.hadoop.ipc.Client$Connection.setupIOstreams(Client.java:713)

at org.apache.hadoop.ipc.Client$Connection.access$2900(Client.java:376)

at org.apache.hadoop.ipc.Client.getConnection(Client.java:1529)

at org.apache.hadoop.ipc.Client.call(Client.java:1452)

... 15 more

. Failing the application.

2. load data from local

Loading data from the local filesystem into Hive fails with:

FAILED: Execution Error, return code 1 from org.apache.hadoop.hive.ql.exec.DDLTask. MetaException(message:Got exception: org.apache.hadoop.hive.metastore.api.MetaException javax.jdo.JDODataStoreException: You have an error in your SQL syntax; check the manual that corresponds to your MySQL server version for the right syntax to use near 'OPTION SQL_SELECT_LIMIT=DEFAULT' at line 1

2.1 Untested solution

The MySQL JDBC driver version is too new or too old for the MySQL server; replace the JDBC driver jar with a compatible version.
