1. Check the Docker network

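First list the existing networks, and create the network if it does not exist yet.

A shared network lets the containers started from the different docker compose files below reach each other by service name (for example, the Kafka brokers connect to zoo1:2181).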

docker network ls

I already have a network called zk-net; if you don't, create it with:

docker network create -d bridge zk-net
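If you want to script the "check, then create if missing" step, a minimal sketch could wrap the same two docker commands. The helper names `network_exists` and `ensure_network` are hypothetical, and it assumes the `docker` CLI is on your PATH.

```python
import subprocess


def network_exists(ls_output: str, name: str) -> bool:
    """True if `name` is one of the lines printed by `docker network ls --format '{{.Name}}'`."""
    return name in (line.strip() for line in ls_output.splitlines())


def ensure_network(name: str = "zk-net") -> None:
    """Create a bridge network called `name` unless it already exists (needs the docker CLI)."""
    listed = subprocess.run(
        ["docker", "network", "ls", "--format", "{{.Name}}"],
        capture_output=True, text=True, check=True,
    ).stdout
    if not network_exists(listed, name):
        # Same command as above: docker network create -d bridge zk-net
        subprocess.run(["docker", "network", "create", "-d", "bridge", name], check=True)
```

Calling `ensure_network("zk-net")` is safe to repeat: the second run finds the network in the list and does nothing.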

2. Set up the ZooKeeper cluster

zookeeper.yaml

version: '3.1'

networks:
  zk-net:
    name: zk-net # network name (created beforehand in step 1)
    external: true

services:
  zoo1:
    image: zookeeper:3.8.0
    container_name: zoo1 # container name
    restart: always # restart automatically (also when the Docker daemon starts)
    hostname: zoo1 # hostname
    ports:
      - 2181:2181 # host port:container port
    environment:
      ZOO_MY_ID: 1
      ZOO_SERVERS: server.1=zoo1:2888:3888;2181 server.2=zoo2:2888:3888;2181 server.3=zoo3:2888:3888;2181
    networks:
      - zk-net

  zoo2:
    image: zookeeper:3.8.0
    container_name: zoo2
    restart: always
    hostname: zoo2
    ports:
      - 2182:2181
    environment:
      ZOO_MY_ID: 2
      ZOO_SERVERS: server.1=zoo1:2888:3888;2181 server.2=zoo2:2888:3888;2181 server.3=zoo3:2888:3888;2181
    networks:
      - zk-net

  zoo3:
    image: zookeeper:3.8.0
    container_name: zoo3
    restart: always
    hostname: zoo3
    ports:
      - 2183:2181
    environment:
      ZOO_MY_ID: 3
      ZOO_SERVERS: server.1=zoo1:2888:3888;2181 server.2=zoo2:2888:3888;2181 server.3=zoo3:2888:3888;2181
    networks:
      - zk-net
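Each `ZOO_SERVERS` entry has the form `server.<id>=<host>:<port followers use to reach the leader>:<leader-election port>;<client port>`, and `<id>` must match that node's `ZOO_MY_ID`. A small sketch that generates the same string for N nodes (the function names are hypothetical) also shows why an odd node count is used: a majority quorum of n nodes survives (n - 1) // 2 failures.

```python
def zoo_servers(n: int, quorum_port: int = 2888, election_port: int = 3888,
                client_port: int = 2181, host_prefix: str = "zoo") -> str:
    """Build a ZOO_SERVERS value for nodes zoo1..zooN (space-separated entries)."""
    return " ".join(
        f"server.{i}={host_prefix}{i}:{quorum_port}:{election_port};{client_port}"
        for i in range(1, n + 1)
    )


def tolerated_failures(n: int) -> int:
    """A majority quorum of n nodes keeps working with up to (n - 1) // 2 nodes down."""
    return (n - 1) // 2
```

`zoo_servers(3)` reproduces the value used in zookeeper.yaml, and `tolerated_failures(3) == tolerated_failures(4) == 1`, so a fourth node adds no extra fault tolerance.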

Start the cluster

docker compose -f zookeeper.yaml up -d
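`up -d` returns before the nodes are ready. A minimal sketch for waiting until the published host ports (2181, 2182, 2183 in zookeeper.yaml) accept TCP connections; `wait_for_port` is a hypothetical helper. An open port only means the container is listening, not that the ensemble has elected a leader.

```python
import socket
import time


def wait_for_port(host: str, port: int, timeout: float = 60.0, interval: float = 1.0) -> bool:
    """Poll until a TCP connection to host:port succeeds, or give up after `timeout` seconds."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:  # refused, unreachable or timed out
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)


def wait_for_all(ports, host: str = "127.0.0.1", timeout: float = 60.0) -> dict:
    """Map each port to True/False depending on whether it came up in time."""
    return {port: wait_for_port(host, port, timeout) for port in ports}
```

For example, `wait_for_all([2181, 2182, 2183])` should return `True` for all three ports once the containers are up.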

3. Set up the Kafka cluster + EFAK (eagle)

kafka.yaml

version: '3.1'

networks:
  zk-net:
    name: zk-net # network name (created beforehand in step 1)
    external: true

services:
  kafka1:
    container_name: kafka1 # container name
    image: confluentinc/cp-kafka:7.3.1
    ports:
      - "9092:9092"
      - "29092:29092"
    environment:
      KAFKA_BROKER_ID: 1
      KAFKA_ZOOKEEPER_CONNECT: 'zoo1:2181,zoo2:2181,zoo3:2181'
      KAFKA_INTER_BROKER_LISTENER_NAME: PLAINTEXT
      KAFKA_LISTENER_SECURITY_PROTOCOL_MAP: PLAINTEXT:PLAINTEXT,PLAINTEXT_HOST:PLAINTEXT
      KAFKA_ADVERTISED_LISTENERS: 'PLAINTEXT://kafka1:9092,PLAINTEXT_HOST://localhost:29092'
      KAFKA_OFFSETS_TOPIC_REPLICATION_FACTOR: 3
      KAFKA_GROUP_INITIAL_REBALANCE_DELAY_MS: 0
      KAFKA_JMX_PORT: 19092
    networks:
      - zk-net

  kafka2:
    container_name: kafka2 # container name
    image: confluentinc/cp-kafka:7.3.1
    ports:
      - "9093:9092"
      - "29093:29093"
    environment:
      KAFKA_BROKER_ID: 2
      KAFKA_ZOOKEEPER_CONNECT: 'zoo1:2181,zoo2:2181,zoo3:2181'
      KAFKA_INTER_BROKER_LISTENER_NAME: PLAINTEXT
      KAFKA_LISTENER_SECURITY_PROTOCOL_MAP: PLAINTEXT:PLAINTEXT,PLAINTEXT_HOST:PLAINTEXT
      KAFKA_ADVERTISED_LISTENERS: 'PLAINTEXT://kafka2:9092,PLAINTEXT_HOST://localhost:29093'
      KAFKA_OFFSETS_TOPIC_REPLICATION_FACTOR: 3
      KAFKA_GROUP_INITIAL_REBALANCE_DELAY_MS: 0
      KAFKA_JMX_PORT: 19093
    networks:
      - zk-net

  kafka3:
    container_name: kafka3 # container name
    image: confluentinc/cp-kafka:7.3.1
    ports:
      - "9094:9092"
      - "29094:29094"
    environment:
      KAFKA_BROKER_ID: 3
      KAFKA_ZOOKEEPER_CONNECT: 'zoo1:2181,zoo2:2181,zoo3:2181'
      KAFKA_INTER_BROKER_LISTENER_NAME: PLAINTEXT
      KAFKA_LISTENER_SECURITY_PROTOCOL_MAP: PLAINTEXT:PLAINTEXT,PLAINTEXT_HOST:PLAINTEXT
      KAFKA_ADVERTISED_LISTENERS: 'PLAINTEXT://kafka3:9092,PLAINTEXT_HOST://localhost:29094'
      KAFKA_OFFSETS_TOPIC_REPLICATION_FACTOR: 3
      KAFKA_GROUP_INITIAL_REBALANCE_DELAY_MS: 0
      KAFKA_JMX_PORT: 19094
    # no depends_on zoo1: zoo1-zoo3 are defined in zookeeper.yaml, not in this file
    networks:
      - zk-net

  eagle:
    container_name: eagle # container name
    image: nickzurich/efak:3.0.1
    volumes: # mounted files
      - ./conf/system-config.properties:/opt/efak/conf/system-config.properties
    environment: # settings
      EFAK_CLUSTER_ZK_LIST: zoo1:2181,zoo2:2181,zoo3:2181
    depends_on:
      - kafka1
      - kafka2
      - kafka3
    ports:
      - 8048:8048
    networks:
      - zk-net

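Make sure the following settings are correct and consistent across the brokers:

- KAFKA_ZOOKEEPER_CONNECT: the ZooKeeper connection string; several host:port pairs can be listed, separated by commas

- KAFKA_BROKER_ID: the broker's globally unique ID; it must not be repeated

- KAFKA_INTER_BROKER_LISTENER_NAME: the name of the listener used for broker-to-broker communication

- KAFKA_LISTENER_SECURITY_PROTOCOL_MAP: key/value pairs mapping each listener name to the security protocol it uses

- KAFKA_ADVERTISED_LISTENERS: the addresses the broker advertises to clients, one per listener (here PLAINTEXT for clients inside the Docker network and PLAINTEXT_HOST for clients on the host)

- KAFKA_JMX_PORT: the JMX port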
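The three broker blocks differ only in a few numbers. A sketch that derives them from the broker ID (the function name `broker_settings` is hypothetical; the numbering scheme is the one used in kafka.yaml) makes the pattern explicit. The PLAINTEXT_HOST port is published with the same number on host and container because the broker tells host clients to connect to exactly `localhost:<that port>`.

```python
def broker_settings(broker_id: int, zk_connect: str = "zoo1:2181,zoo2:2181,zoo3:2181") -> dict:
    """Environment and port mappings for broker N, following the kafka.yaml pattern."""
    host_port = 29091 + broker_id  # 29092, 29093, 29094 for brokers 1..3
    return {
        "environment": {
            "KAFKA_BROKER_ID": broker_id,  # must be unique per broker
            "KAFKA_ZOOKEEPER_CONNECT": zk_connect,
            "KAFKA_INTER_BROKER_LISTENER_NAME": "PLAINTEXT",
            "KAFKA_LISTENER_SECURITY_PROTOCOL_MAP": "PLAINTEXT:PLAINTEXT,PLAINTEXT_HOST:PLAINTEXT",
            # PLAINTEXT: reachable inside zk-net by service name; PLAINTEXT_HOST: reachable from the host
            "KAFKA_ADVERTISED_LISTENERS": (
                f"PLAINTEXT://kafka{broker_id}:9092,PLAINTEXT_HOST://localhost:{host_port}"
            ),
            "KAFKA_JMX_PORT": 19091 + broker_id,
        },
        # host:container; the PLAINTEXT_HOST port uses the same number on both sides
        "ports": [f"{9091 + broker_id}:9092", f"{host_port}:{host_port}"],
    }
```

A consequence worth noting: a client on the host would typically bootstrap with `localhost:29092,localhost:29093,localhost:29094`. Connecting to `localhost:9093` reaches the PLAINTEXT listener, which answers with `kafka2:9092`, a name the host usually cannot resolve.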

EFAK (eagle) configuration file

system-config.properties

######################################
# multi zookeeper&kafka cluster list
######################################
kafka.eagle.zk.cluster.alias=cluster1
cluster1.zk.list=zoo1:2181,zoo2:2181,zoo3:2181

######################################
# zk client thread limit
######################################
kafka.zk.limit.size=25

######################################
# kafka eagle webui port
######################################
kafka.eagle.webui.port=8048

######################################
# kafka offset storage
######################################
cluster1.kafka.eagle.offset.storage=kafka

######################################
# enable kafka metrics
######################################
kafka.eagle.metrics.charts=true
kafka.eagle.sql.fix.error=false

######################################
# kafka sql topic records max
######################################
kafka.eagle.sql.topic.records.max=5000

######################################
# alarm email configure
######################################
kafka.eagle.mail.enable=false
kafka.eagle.mail.sa=sa@163.com
kafka.eagle.mail.username=sa@163.com
kafka.eagle.mail.password=mq
kafka.eagle.mail.server.host=smtp.163.com
kafka.eagle.mail.server.port=25

######################################
# alarm im configure
######################################
#kafka.eagle.im.dingding.enable=true
#kafka.eagle.im.dingding.url=https://oapi.dingtalk.com/robot/send?access_token=
#kafka.eagle.im.wechat.enable=true
#kafka.eagle.im.wechat.token=https://qyapi.weixin.qq.com/cgi-bin/gettoken?corpid=xxx&corpsecret=xxx
#kafka.eagle.im.wechat.url=https://qyapi.weixin.qq.com/cgi-bin/message/send?access_token=
#kafka.eagle.im.wechat.touser=
#kafka.eagle.im.wechat.toparty=
#kafka.eagle.im.wechat.totag=
#kafka.eagle.im.wechat.agentid=

######################################
# delete kafka topic token
######################################
kafka.eagle.topic.token=admin

######################################
# kafka sasl authenticate
######################################
cluster1.kafka.eagle.sasl.enable=false
cluster1.kafka.eagle.sasl.protocol=SASL_PLAINTEXT
cluster1.kafka.eagle.sasl.mechanism=PLAIN
cluster2.kafka.eagle.sasl.enable=false
cluster2.kafka.eagle.sasl.protocol=SASL_PLAINTEXT
cluster2.kafka.eagle.sasl.mechanism=PLAIN

######################################
# kafka jdbc driver address
######################################
kafka.eagle.driver=org.sqlite.JDBC
kafka.eagle.url=jdbc:sqlite:/kafka-eagle/db/ke.db
kafka.eagle.username=root
kafka.eagle.password=root
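The compose file passes the ZooKeeper list twice: as `EFAK_CLUSTER_ZK_LIST` and as `cluster1.zk.list` in this mounted file. Which one wins depends on the image's startup script, so keeping them identical avoids surprises. A minimal sketch of that check; `parse_properties` is a simplified, hypothetical reader (key=value lines only), not a full Java `.properties` parser.

```python
def parse_properties(text: str) -> dict:
    """Read key=value lines; blank lines and '#'/'!' comments are skipped.
    Simplified: ':' separators, escapes and line continuations are not handled."""
    props = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith(("#", "!")):
            continue
        key, sep, value = line.partition("=")  # split on the first '=' only
        if sep:
            props[key.strip()] = value.strip()
    return props


def _hosts(zk_list: str) -> list:
    """Normalize a comma-separated host:port list for order-insensitive comparison."""
    return sorted(h.strip() for h in zk_list.split(",") if h.strip())


def zk_list_matches(props: dict, compose_zk_list: str) -> bool:
    """Compare <alias>.zk.list from the file with the EFAK_CLUSTER_ZK_LIST value in compose."""
    alias = props.get("kafka.eagle.zk.cluster.alias", "cluster1").split(",")[0].strip()
    return _hosts(props.get(f"{alias}.zk.list", "")) == _hosts(compose_zk_list)
```

Usage: read `conf/system-config.properties` with `open(..., encoding="utf-8")`, pass the text to `parse_properties`, then call `zk_list_matches(props, "zoo1:2181,zoo2:2181,zoo3:2181")`.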

One-click setup

compose.yaml

version: '3.1'

networks:
  zk-net:
    name: zk-net # network name

services:
  zoo1:
    container_name: zoo1 # container name
    image: confluentinc/cp-zookeeper:7.3.1
    ports:
      - "2181:2181"
    environment:
      ZOOKEEPER_CLIENT_PORT: 2181
      ZOOKEEPER_TICK_TIME: 2000
      KAFKA_JMX_PORT: 39999
    networks:
      - zk-net

  kafka1:
    container_name: kafka1 # container name
    image: confluentinc/cp-kafka:7.3.1
    ports:
      - "9092:9092"
      - "29092:29092"
    environment:
      KAFKA_BROKER_ID: 1
      KAFKA_ZOOKEEPER_CONNECT: 'zoo1:2181'
      KAFKA_INTER_BROKER_LISTENER_NAME: PLAINTEXT
      KAFKA_LISTENER_SECURITY_PROTOCOL_MAP: PLAINTEXT:PLAINTEXT,PLAINTEXT_HOST:PLAINTEXT
      KAFKA_ADVERTISED_LISTENERS: 'PLAINTEXT://kafka1:9092,PLAINTEXT_HOST://localhost:29092'
      KAFKA_OFFSETS_TOPIC_REPLICATION_FACTOR: 3
      KAFKA_GROUP_INITIAL_REBALANCE_DELAY_MS: 0
      KAFKA_JMX_PORT: 19092
    depends_on:
      - zoo1
    networks:
      - zk-net

  kafka2:
    container_name: kafka2 # container name
    image: confluentinc/cp-kafka:7.3.1
    ports:
      - "9093:9092"
      - "29093:29093"
    environment:
      KAFKA_BROKER_ID: 2
      KAFKA_ZOOKEEPER_CONNECT: 'zoo1:2181'
      KAFKA_INTER_BROKER_LISTENER_NAME: PLAINTEXT
      KAFKA_LISTENER_SECURITY_PROTOCOL_MAP: PLAINTEXT:PLAINTEXT,PLAINTEXT_HOST:PLAINTEXT
      KAFKA_ADVERTISED_LISTENERS: 'PLAINTEXT://kafka2:9092,PLAINTEXT_HOST://localhost:29093'
      KAFKA_OFFSETS_TOPIC_REPLICATION_FACTOR: 3
      KAFKA_GROUP_INITIAL_REBALANCE_DELAY_MS: 0
      KAFKA_JMX_PORT: 19093
    depends_on:
      - zoo1
    networks:
      - zk-net

  kafka3:
    container_name: kafka3 # container name
    image: confluentinc/cp-kafka:7.3.1
    ports:
      - "9094:9092"
      - "29094:29094"
    environment:
      KAFKA_BROKER_ID: 3
      KAFKA_ZOOKEEPER_CONNECT: 'zoo1:2181'
      KAFKA_INTER_BROKER_LISTENER_NAME: PLAINTEXT
      KAFKA_LISTENER_SECURITY_PROTOCOL_MAP: PLAINTEXT:PLAINTEXT,PLAINTEXT_HOST:PLAINTEXT
      KAFKA_ADVERTISED_LISTENERS: 'PLAINTEXT://kafka3:9092,PLAINTEXT_HOST://localhost:29094'
      KAFKA_OFFSETS_TOPIC_REPLICATION_FACTOR: 3 # same value as on kafka1 and kafka2
      KAFKA_GROUP_INITIAL_REBALANCE_DELAY_MS: 0
      KAFKA_JMX_PORT: 19094
    depends_on:
      - zoo1
    networks:
      - zk-net

  eagle:
    container_name: eagle # container name
    image: nickzurich/efak:3.0.1
    volumes: # mounted files
      - ./conf/system-config4.properties:/opt/efak/conf/system-config.properties
    environment: # settings
      EFAK_CLUSTER_ZK_LIST: zoo1:2181
    depends_on:
      - kafka1
      - kafka2
      - kafka3
    ports:
      - 8048:8048
    networks:
      - zk-net

Start

docker compose -f compose.yaml up -d

Check the result

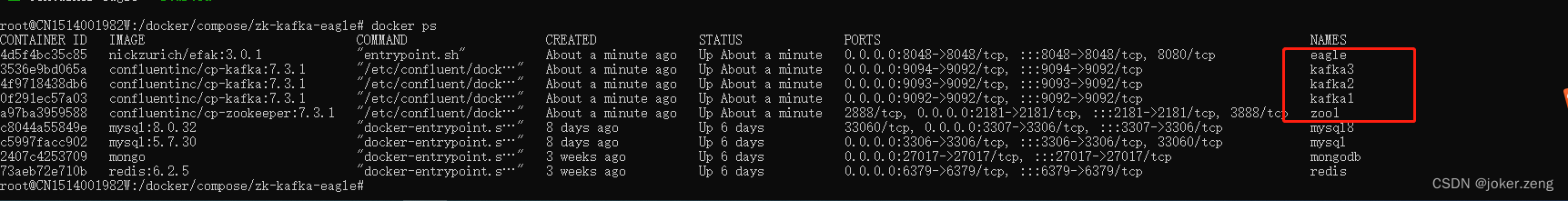

docker ps
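To spot a container that exited or keeps restarting without reading the whole table, a sketch can parse `docker ps -a` output in a fixed format. The expected names are the `container_name` values from compose.yaml; the helper names are hypothetical and the docker CLI is assumed to be installed.

```python
import subprocess

EXPECTED = ["zoo1", "kafka1", "kafka2", "kafka3", "eagle"]  # container_name values in compose.yaml


def not_running(ps_output: str, expected) -> list:
    """Given `docker ps -a --format '{{.Names}}<TAB>{{.Status}}'` output,
    return the expected containers that are missing or whose status is not 'Up ...'."""
    status = {}
    for line in ps_output.splitlines():
        if "\t" in line:
            name, state = line.split("\t", 1)
            status[name.strip()] = state.strip()
    return [name for name in expected if not status.get(name, "").startswith("Up")]


def check_containers(expected=EXPECTED) -> list:
    """Run docker ps -a (includes stopped containers) and report problem containers."""
    out = subprocess.run(
        ["docker", "ps", "-a", "--format", "{{.Names}}\t{{.Status}}"],
        capture_output=True, text=True, check=True,
    ).stdout
    return not_running(out, expected)
```

An empty list from `check_containers()` means every expected container is up; for any name it returns, `docker logs <name>` is the next step.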

Verify that ZooKeeper is installed correctly

1. Using the official zookeeper image

Enter zoo1, zoo2 and zoo3 one by one and check each node's current status:

docker exec -it zoo2 /bin/bash # enter the container
cd bin # go to the bin directory
./zkServer.sh status # show the current server status
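The same status can be read from the host without entering each container. `zkServer.sh status` relies on the `srvr` four-letter-word command, which, as far as I know, is the one command ZooKeeper 3.5+ whitelists by default. A sketch assuming that, with hypothetical helper names and the host ports 2181 to 2183 from zookeeper.yaml:

```python
import socket


def zk_srvr(host: str, port: int, timeout: float = 5.0) -> str:
    """Send the 'srvr' four-letter-word command and return the server's raw reply."""
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(b"srvr")
        chunks = []
        while True:
            data = sock.recv(4096)
            if not data:  # ZooKeeper closes the connection after answering
                break
            chunks.append(data)
    return b"".join(chunks).decode("utf-8", errors="replace")


def parse_mode(reply: str):
    """Extract the 'Mode:' value (leader, follower or standalone), or None if absent."""
    for line in reply.splitlines():
        if line.startswith("Mode:"):
            return line.split(":", 1)[1].strip()
    return None
```

Looping `parse_mode(zk_srvr("127.0.0.1", port))` over ports 2181, 2182 and 2183 should report one `leader` and two `follower` nodes for a healthy three-node ensemble.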

2. Using the confluentinc/cp-zookeeper image

See the official documentation: https://docs.confluent.io/platform/current/installation/docker/installation.html

3. Check which Apache Kafka version cp-kafka corresponds to (Confluent Platform 7.3.x is based on Apache Kafka 3.3.x)

https://docs.confluent.io/platform/7.3/release-notes/index.html

4. Test EFAK (eagle)

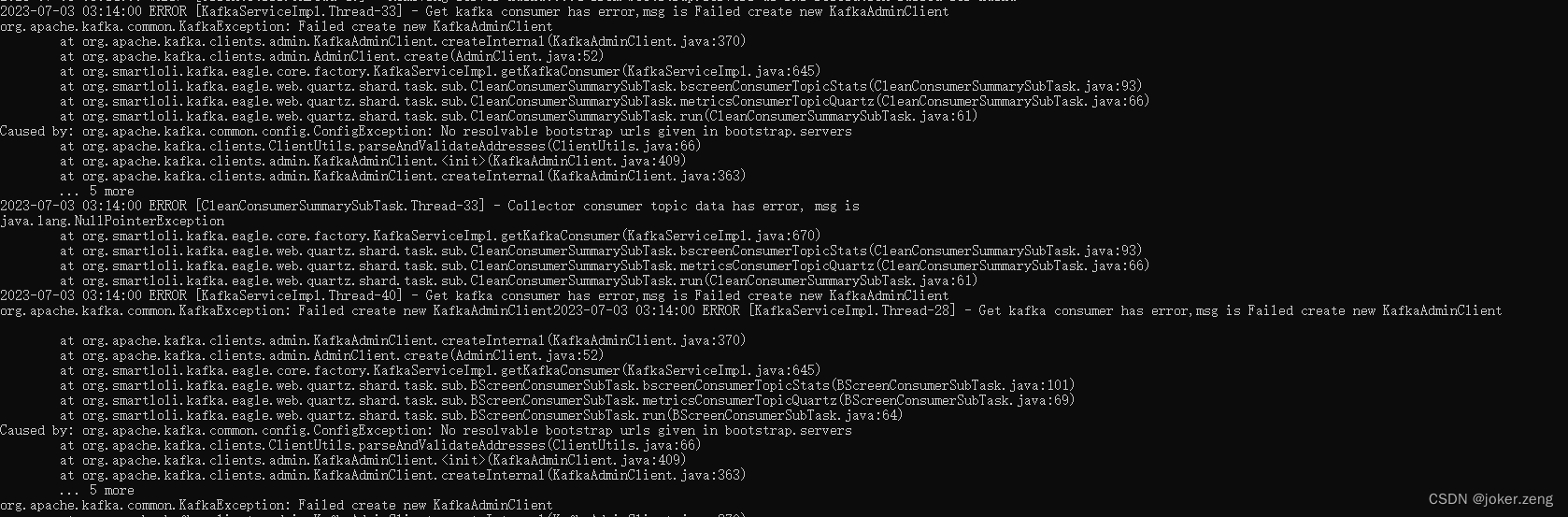

First check the logs to see whether it started successfully:

docker logs eagle

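If it reports an error, check the service names used in the Kafka setting

KAFKA_ADVERTISED_LISTENERS

as well as whether all services use the same network,

whether every KAFKA_BROKER_ID is unique, and so on.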
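These checks can be automated against the `environment` blocks of the compose file. A sketch that takes a `{service name: environment dict}` mapping (for example, copied by hand from compose.yaml); `check_brokers` is hypothetical, and rule 3 is a heuristic that assumes the service name and the inter-broker advertised host are the same, as in this article.

```python
def check_brokers(services: dict) -> list:
    """Return a list of human-readable problems found in the brokers' environment settings."""
    problems = []
    owner_of_id = {}
    for name, env in services.items():
        # 1) KAFKA_BROKER_ID must be unique across the cluster
        broker_id = env.get("KAFKA_BROKER_ID")
        if broker_id in owner_of_id:
            problems.append(f"{name}: KAFKA_BROKER_ID {broker_id} is already used by {owner_of_id[broker_id]}")
        else:
            owner_of_id[broker_id] = name

        # 2) every advertised listener name must appear in the security protocol map
        protocol_map = dict(
            pair.split(":", 1)
            for pair in env.get("KAFKA_LISTENER_SECURITY_PROTOCOL_MAP", "").split(",")
            if ":" in pair
        )
        inter = env.get("KAFKA_INTER_BROKER_LISTENER_NAME", "PLAINTEXT")
        for entry in env.get("KAFKA_ADVERTISED_LISTENERS", "").split(","):
            listener, _, address = entry.strip().partition("://")
            if listener not in protocol_map:
                problems.append(f"{name}: listener {listener} missing from KAFKA_LISTENER_SECURITY_PROTOCOL_MAP")
            # 3) heuristic: the inter-broker listener should advertise this broker's own service name
            if listener == inter and address.rsplit(":", 1)[0] != name:
                problems.append(f"{name}: {inter} advertises '{address}', expected host '{name}'")
    return problems
```

A typical copy-paste mistake, such as a second broker that still says `KAFKA_BROKER_ID: 1` and `PLAINTEXT://kafka1:9092`, produces two entries in the returned list.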

After finding and fixing the problem, stop, remove and recreate the containers:

docker compose -f compose.yaml stop && docker compose -f compose.yaml rm -f && docker compose -f compose.yaml up -d

# check the logs

docker logs eagle

If there are no errors, log in to test it:

http://127.0.0.1:8048/

Username / password: admin / 123456

All nodes show as normal.

ZooKeeper is normal too.

Explore the other features on your own.
