Installing Kubernetes 1.26 on Kylin V10 with kubeadm

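Prebuilt el8 packages of kubelet/kubeadm/kubectl are currently hard to find, and even where they exist, a later installation on ARM would mean hunting for yet another set of packages. After some experimentation I settled on building the binaries from source. The same procedure works on both arm64 and amd64.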

Server layout

| Server IP | Hostname | Role | Deployed components |
|---|---|---|---|
| 192.168.1.3 | kmaster1 | Master node 1 | kubelet/kubeadm/kubectl/containerd/ipvs/golang, builds the binaries, loads images, load balancer |
| 192.168.1.4 | kmaster2 | Master node 2 | kubelet/kubeadm/kubectl/containerd/ipvs, loads images, load balancer |
| 192.168.1.5 | kmaster3 | Master node 3 | kubelet/kubeadm/kubectl/containerd/ipvs, loads images, load balancer |
| 192.168.1.6 | knode1 | Worker node 1 | kubelet/kubeadm/kubectl/containerd/ipvs, loads images |
| 192.168.1.7 | knode2 | Worker node 2 | kubelet/kubeadm/kubectl/containerd/ipvs, loads images |
| 192.168.1.2 | none | Master VIP | none |

Initialize the servers and install IPVS (run on all master and worker nodes)

Install ipvs

yum install -y ipset ipvsadm

Create the /etc/modules-load.d/containerd.conf configuration file

cat << EOF > /etc/modules-load.d/containerd.conf

overlay

br_netfilter

EOF

Run the following commands to load the modules immediately

modprobe overlay

modprobe br_netfilter

Create the /etc/sysctl.d/99-kubernetes-cri.conf configuration file

cat << EOF > /etc/sysctl.d/99-kubernetes-cri.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

user.max_user_namespaces=28633

EOF

sysctl -p /etc/sysctl.d/99-kubernetes-cri.conf

Load the IPVS kernel modules (for kernels 4.19 and later)

cat > /etc/sysconfig/modules/ipvs.modules <<EOF

#!/bin/bash

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack

EOF

Make the script executable, run it, and list the loaded modules

chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep -e ip_vs -e nf_conntrack
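The `lsmod | grep` at the end of the command above only shows what is loaded, so a missing module is easy to overlook. A minimal sketch of a stricter check follows. The helper name `missing_modules` is made up for this example, and it assumes the usual `lsmod` layout (one header line, module name in the first column).

```shell
# missing_modules MODULE...
# Reads lsmod-style output on stdin and prints every requested module
# that is not listed. Prints nothing when all requested modules are loaded.
missing_modules() {
  loaded=$(awk 'NR > 1 { print $1 }')   # skip the header line, keep module names
  for mod in "$@"; do
    printf '%s\n' "$loaded" | grep -qx "$mod" || printf '%s\n' "$mod"
  done
}

# Demo on canned input (not real lsmod output) so the sketch runs anywhere.
printf '%s\n' \
  'Module                  Size  Used by' \
  'ip_vs                 172032  0' \
  'nf_conntrack          172032  1 ip_vs' \
  'overlay               139264  0' |
  missing_modules overlay br_netfilter ip_vs nf_conntrack
```

On a real node the call would be `lsmod | missing_modules overlay br_netfilter ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack`; empty output means every module is present.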

Turn off swap now and keep it off after reboot

swapoff -a

sed -i "s/^[^#].*swap/#&/" /etc/fstab
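Because `sed -i` edits /etc/fstab in place, it can be worth previewing the expression first. The sketch below runs the same expression on sample text; the device names are invented for illustration and `preview_swap_comment` is a hypothetical helper name.

```shell
# Apply the swap-commenting expression to fstab-style text on stdin.
# Nothing on disk is modified.
preview_swap_comment() {
  sed 's/^[^#].*swap/#&/'
}

# Sample input: a root entry, an active swap entry, an already commented swap entry.
printf '%s\n' \
  '/dev/mapper/klas-root / xfs defaults 0 0' \
  '/dev/mapper/klas-swap swap swap defaults 0 0' \
  '#/dev/sdb1 swap swap defaults 0 0' | preview_swap_comment
```

Only the active swap entry gains a leading `#`; the root entry and the already commented line pass through unchanged. To preview against the real file, `preview_swap_comment < /etc/fstab` works the same way.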

Configure /etc/hosts

cat >> /etc/hosts << EOF

192.168.1.3 kmaster1

192.168.1.4 kmaster2

192.168.1.5 kmaster3

192.168.1.6 knode1

192.168.1.7 knode2

EOF

Passwordless SSH login (optional)

ssh-keygen

Press Enter a few times to accept the defaults

ssh-copy-id kmaster1

Enter the password when prompted

ssh-copy-id kmaster2

ssh-copy-id kmaster3

ssh-copy-id knode1

ssh-copy-id knode2

Install containerd and CNI (run on all master and worker nodes)

# Official installation guide: https://github.com/containerd/containerd/blob/main/docs/getting-started.md

# cri-containerd download: https://github.com/containerd/containerd/releases/download/v1.6.25/cri-containerd-1.6.25-linux-amd64.tar.gz

# libseccomp download: https://github.com/opencontainers/runc/releases/download/v1.1.10/libseccomp-2.5.4.tar.gz

# gperf download: https://rpmfind.net/linux/centos/8-stream/PowerTools/x86_64/os/Packages/gperf-3.1-5.el8.x86_64.rpm

# CNI plugins download: https://github.com/containernetworking/plugins/releases/download/v1.3.0/cni-plugins-linux-amd64-v1.3.0.tgz

Install the build dependencies and libseccomp first; otherwise runc will not run

yum install gcc gcc-c++ openssl-devel pcre-devel make autoconf -y

rpm -ivh gperf-3.1-5.el8.x86_64.rpm

tar xf libseccomp-2.5.4.tar.gz

cd libseccomp-2.5.4

./configure

make && make install

# Install cri-containerd (bundles containerd and runc)

# Extract directly into the root directory

tar zxvf cri-containerd-1.6.25-linux-amd64.tar.gz -C /

# Generate the default configuration file

containerd config default > /etc/containerd/config.toml

# Adjust the defaults: systemd cgroup driver, pause image from the Aliyun mirror, pause tag 3.9

sed -i 's#SystemdCgroup = false#SystemdCgroup = true#g' /etc/containerd/config.toml

sed -i 's#k8s.gcr.io/pause#registry.cn-hangzhou.aliyuncs.com/google_containers/pause#g' /etc/containerd/config.toml

sed -i 's#registry.gcr.io/pause#registry.cn-hangzhou.aliyuncs.com/google_containers/pause#g' /etc/containerd/config.toml

sed -i 's#registry.k8s.io/pause#registry.cn-hangzhou.aliyuncs.com/google_containers/pause#g' /etc/containerd/config.toml

sed -i s/pause:3.6/pause:3.9/g /etc/containerd/config.toml
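The `sed` edits above fail silently if the default config uses a different registry or pause tag than expected, so a quick check before starting containerd can save a confusing `kubeadm init` failure later. A minimal sketch, with `check_containerd_config` as a hypothetical helper name:

```shell
# check_containerd_config FILE [PAUSE_TAG]
# Returns 0 only if FILE enables the systemd cgroup driver and its
# sandbox_image line contains PAUSE_TAG (default: pause:3.9).
check_containerd_config() {
  cfg=$1
  pause_tag=${2:-pause:3.9}
  grep -Eq '^[[:space:]]*SystemdCgroup[[:space:]]*=[[:space:]]*true' "$cfg" || return 1
  grep -E '^[[:space:]]*sandbox_image[[:space:]]*=' "$cfg" | grep -Fq "$pause_tag" || return 1
}

# Only inspect the real file when it exists, so the sketch is safe to run anywhere.
if [ -f /etc/containerd/config.toml ]; then
  if check_containerd_config /etc/containerd/config.toml; then
    echo "containerd config looks right"
  else
    echo "containerd config needs attention"
  fi
fi
```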

# Start containerd

systemctl daemon-reload

systemctl start containerd

systemctl enable containerd

# Extract the CNI plugins into the default directory; if the directory has been changed, look it up with: crictl info | grep binDir

mkdir -p /opt/cni/bin

tar Cxzvf /opt/cni/bin cni-plugins-linux-amd64-v1.3.0.tgz

Build the kubelet/kubeadm/kubectl binaries

Install Go (on the first master only)

tar -xzf go1.21.1.linux-amd64.tar.gz -C /usr/local

echo 'export PATH=$PATH:/usr/local/go/bin' >> /etc/profile

source /etc/profile

Build the Kubernetes binaries on the first master; the other nodes copy them from kmaster1 afterwards

tar xf kubernetes-1.26.12.tar.gz

cd kubernetes-1.26.12

Set the validity of the certificates kubeadm issues for the cluster to 100 years

sed -i s/365/365\ \*\ 100/g cmd/kubeadm/app/constants/constants.go
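The substitution above rewrites every `365` in constants.go, so it helps to see what it does to a single line before running it. The sketch below applies the same expression to one sample line; the exact shape of that line (`CertificateValidity = time.Hour * 24 * 365`) is an assumption about the 1.26 source, and `preview_cert_validity` is a hypothetical helper name.

```shell
# Apply the same substitution to text on stdin instead of editing the file.
preview_cert_validity() {
  sed 's/365/365 * 100/g'
}

# Assumed form of the constant in cmd/kubeadm/app/constants/constants.go.
echo 'CertificateValidity = time.Hour * 24 * 365' | preview_cert_validity
```

Running `grep -n 365 cmd/kubeadm/app/constants/constants.go` beforehand shows which lines will be touched. Once the cluster is up, `kubeadm certs check-expiration` reports the actual expiry dates.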

Build commands (on ARM, use linux/arm64 instead of linux/amd64)

KUBE_BUILD_PLATFORMS=linux/amd64 make WHAT=cmd/kubelet GOFLAGS=-v GOGCFLAGS="-N -l"

KUBE_BUILD_PLATFORMS=linux/amd64 make WHAT=cmd/kubectl GOFLAGS=-v GOGCFLAGS="-N -l"

KUBE_BUILD_PLATFORMS=linux/amd64 make WHAT=cmd/kubeadm GOFLAGS=-v GOGCFLAGS="-N -l"
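Since the only difference between the amd64 and arm64 builds is the `KUBE_BUILD_PLATFORMS` value, the platform can be derived from the build host instead of being typed by hand. A small sketch; `kube_platform` is a hypothetical helper, and it covers only the two architectures this article discusses.

```shell
# kube_platform ARCH
# Map a `uname -m` style architecture name to a KUBE_BUILD_PLATFORMS value.
kube_platform() {
  case "$1" in
    x86_64|amd64)  echo "linux/amd64" ;;
    aarch64|arm64) echo "linux/arm64" ;;
    *) echo "unsupported architecture: $1" >&2; return 1 ;;
  esac
}

# Show the value that would be used on this machine.
platform=$(kube_platform "$(uname -m)") && echo "KUBE_BUILD_PLATFORMS=$platform"
```

The build line then becomes, for example, `KUBE_BUILD_PLATFORMS=$platform make WHAT=cmd/kubelet GOFLAGS=-v GOGCFLAGS="-N -l"`.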

cp _output/bin/kubelet /usr/bin/

cp _output/bin/kubectl /usr/bin/

cp _output/bin/kubeadm /usr/bin/

Copy the binaries to the other nodes

scp _output/bin/kube* kmaster2:/usr/bin/

scp _output/bin/kube* kmaster3:/usr/bin/

scp _output/bin/kube* knode1:/usr/bin/

scp _output/bin/kube* knode2:/usr/bin/
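With more nodes, the per-host copy is easier to keep correct as a loop. The sketch below prints the commands by default and only executes them when `DRY_RUN=0` is set; `copy_kube_binaries` and the `DRY_RUN` switch are inventions of this example, and it assumes the passwordless SSH setup described earlier.

```shell
# copy_kube_binaries HOST...
# Print (default) or run the scp of the three binaries to each host.
copy_kube_binaries() {
  for host in "$@"; do
    for bin in kubelet kubeadm kubectl; do
      if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "scp _output/bin/$bin $host:/usr/bin/"
      else
        scp "_output/bin/$bin" "$host:/usr/bin/"
      fi
    done
  done
}

# Dry run for the four remaining nodes of this article's layout.
copy_kube_binaries kmaster2 kmaster3 knode1 knode2
```

After reviewing the printed commands, `DRY_RUN=0 copy_kube_binaries kmaster2 kmaster3 knode1 knode2` performs the copy from the kubernetes-1.26.12 source directory.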

Install kubelet as a systemd service

cat > /usr/lib/systemd/system/kubelet.service << EOF

[Unit]

Description=kubelet: The Kubernetes Node Agent

Documentation=https://kubernetes.io/docs/

Wants=network-online.target

After=network-online.target

[Service]

ExecStart=/usr/bin/kubelet

Restart=always

StartLimitInterval=0

RestartSec=10

[Install]

WantedBy=multi-user.target

EOF

mkdir -p /usr/lib/systemd/system/kubelet.service.d/

echo '[Service]

Environment="KUBELET_KUBECONFIG_ARGS=--bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.conf --kubeconfig=/etc/kubernetes/kubelet.conf"

Environment="KUBELET_CONFIG_ARGS=--config=/var/lib/kubelet/config.yaml"

EnvironmentFile=-/var/lib/kubelet/kubeadm-flags.env

EnvironmentFile=-/etc/sysconfig/kubelet

ExecStart=

ExecStart=/usr/bin/kubelet $KUBELET_KUBECONFIG_ARGS $KUBELET_CONFIG_ARGS $KUBELET_KUBEADM_ARGS $KUBELET_EXTRA_ARGS' > /usr/lib/systemd/system/kubelet.service.d/10-kubeadm.conf

cat > /etc/sysconfig/kubelet << EOF

KUBELET_CGROUP_ARGS="--cgroup-driver=systemd"

KUBE_PROXY_MODE="ipvs"

EOF

systemctl daemon-reload

systemctl start kubelet

systemctl enable kubelet

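Install the load balancer

Deploy keepalived + HAProxy

1. Fill in the values for your own environment, or use the same ones as here.

2. The network interface is assumed to be ens33 on every node; if yours differs, adjust the configuration below.

3. If the cluster DNS or domain changes, kubelet-conf.yml must be updated as well.

HA (haproxy + keepalived) is only for multi-master setups; with a single master, skip it.

First, install haproxy and keepalived on all masters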

yum install haproxy keepalived -y

Generate the haproxy configuration file (identical on all masters)

cat << EOF | tee /etc/haproxy/haproxy.cfg

global

log 127.0.0.1 local2

chroot /var/lib/haproxy

pidfile /var/run/haproxy.pid

maxconn 4000

user haproxy

group haproxy

daemon

defaults

mode tcp

log global

retries 3

timeout connect 10s

timeout client 1m

timeout server 1m

frontend kubernetes

bind *:8443

mode tcp

option tcplog

default_backend kubernetes-apiserver

backend kubernetes-apiserver

mode tcp

balance roundrobin

server kmaster1 192.168.1.3:6443 check maxconn 2000

server kmaster2 192.168.1.4:6443 check maxconn 2000

server kmaster3 192.168.1.5:6443 check maxconn 2000

EOF

Generate the keepalived configuration file for kmaster1

cat << EOF | tee /etc/keepalived/keepalived.conf

global_defs {

router_id LVS_DEVEL

}

vrrp_script check_haproxy {

script "/etc/keepalived/check_haproxy.sh"

interval 3

fall 10

timeout 9

rise 2

}

vrrp_instance VI_1 {

state MASTER # change to BACKUP on the standby servers

interface ens33 # change to your own interface

virtual_router_id 51

priority 100 # use a value below 100 on the standby servers, e.g. 90, 80

advert_int 1

mcast_src_ip 192.168.1.3 # IP of this host

nopreempt

authentication {

auth_type PASS

auth_pass 1111

}

unicast_peer {

192.168.1.4 # IPs of the other two masters (everything except this host)

192.168.1.5

}

virtual_ipaddress {

192.168.1.2 # the virtual IP (VIP); choose your own

}

track_script {

check_haproxy

}

}

EOF

Generate the keepalived configuration file for kmaster2

cat << EOF | tee /etc/keepalived/keepalived.conf

global_defs {

router_id LVS_DEVEL_1

}

vrrp_script check_haproxy {

script "/etc/keepalived/check_haproxy.sh"

interval 3

fall 10

timeout 9

rise 2

}

vrrp_instance VI_1 {

state BACKUP # standby servers use BACKUP

interface ens33 # change to your own interface

virtual_router_id 51

priority 90 # standby servers use a value below 100, e.g. 90, 80

advert_int 1

mcast_src_ip 192.168.1.4 # IP of this host

nopreempt

authentication {

auth_type PASS

auth_pass 1111

}

unicast_peer {

192.168.1.3 # IPs of the other two masters (everything except this host)

192.168.1.5

}

virtual_ipaddress {

192.168.1.2 # the virtual IP (VIP); choose your own

}

track_script {

check_haproxy

}

}

EOF

Generate the keepalived configuration file for kmaster3

cat << EOF | tee /etc/keepalived/keepalived.conf

global_defs {

router_id LVS_DEVEL_3

}

vrrp_script check_haproxy {

script "/etc/keepalived/check_haproxy.sh"

interval 3

fall 10

timeout 9

rise 2

}

vrrp_instance VI_1 {

state BACKUP # standby servers use BACKUP

interface ens33 # change to your own interface

virtual_router_id 51

priority 80 # standby servers use a value below 100, e.g. 90, 80

advert_int 1

mcast_src_ip 192.168.1.5 # IP of this host

nopreempt

authentication {

auth_type PASS

auth_pass 1111

}

unicast_peer {

192.168.1.3 # IPs of the other two masters (everything except this host)

192.168.1.4

}

virtual_ipaddress {

192.168.1.2 # the virtual IP (VIP); choose your own

}

track_script {

check_haproxy

}

}

EOF
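The three keepalived files differ only in router_id, state, priority, the local IP and the peer list, so they can be rendered from one template instead of being edited by hand on each master. The following is a sketch of that idea, not the author's method: `render_keepalived_conf` and the `IFACE`/`VIP` environment overrides are hypothetical names, and the rendered values mirror the files above.

```shell
# render_keepalived_conf ROUTER_ID STATE PRIORITY LOCAL_IP PEER...
# Prints a keepalived.conf for one master to stdout.
render_keepalived_conf() {
  router_id=$1
  state=$2
  priority=$3
  local_ip=$4
  shift 4
  peers=$(printf '        %s\n' "$@")   # one indented line per peer IP
  cat << EOF
global_defs {
    router_id $router_id
}
vrrp_script check_haproxy {
    script "/etc/keepalived/check_haproxy.sh"
    interval 3
    fall 10
    timeout 9
    rise 2
}
vrrp_instance VI_1 {
    state $state
    interface ${IFACE:-ens33}
    virtual_router_id 51
    priority $priority
    advert_int 1
    mcast_src_ip $local_ip
    nopreempt
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    unicast_peer {
$peers
    }
    virtual_ipaddress {
        ${VIP:-192.168.1.2}
    }
    track_script {
        check_haproxy
    }
}
EOF
}

# Render the kmaster3 variant to stdout for review.
render_keepalived_conf LVS_DEVEL_3 BACKUP 80 192.168.1.5 192.168.1.3 192.168.1.4
```

On each master the output would be redirected to /etc/keepalived/keepalived.conf with that host's own values, for example `render_keepalived_conf LVS_DEVEL MASTER 100 192.168.1.3 192.168.1.4 192.168.1.5` on kmaster1.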

Add the keepalived health-check script (identical on every master)

cat > /etc/keepalived/check_haproxy.sh <<EOF

#!/bin/bash

A=\`ps -C haproxy --no-header | wc -l\`

if [ \$A -eq 0 ];then

systemctl stop keepalived

fi

EOF

chmod +x /etc/keepalived/check_haproxy.sh

# Start haproxy and keepalived and enable them at boot

systemctl enable --now haproxy keepalived

systemctl restart haproxy keepalived
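Before moving on it is worth confirming which master currently holds the VIP, since the cluster initialization later must run on that host. A minimal sketch that inspects `ip -o -4 addr show` output; `has_vip` is a hypothetical helper and the sample lines below are canned, not captured from a real host.

```shell
# has_vip VIP
# Reads `ip -o -4 addr show` output on stdin; exits 0 if VIP is assigned.
has_vip() {
  awk -v vip="$1" '{ split($4, a, "/"); if (a[1] == vip) found = 1 } END { exit !found }'
}

# Demo on canned input: the second ens33 line carries the VIP.
printf '%s\n' \
  '1: lo    inet 127.0.0.1/8 scope host lo' \
  '2: ens33    inet 192.168.1.3/24 brd 192.168.1.255 scope global ens33' \
  '2: ens33    inet 192.168.1.2/32 scope global ens33' |
  has_vip 192.168.1.2 && echo "VIP is on this host"
```

On a master the check is `ip -o -4 addr show | has_vip 192.168.1.2 && echo "VIP is on this host"`.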

Load the images (on every node), or use a private image registry

Method 1: pull the images directly with crictl.

# control-plane images

crictl pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver:v1.26.0

crictl pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager:v1.26.0

crictl pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler:v1.26.0

crictl pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy:v1.26.0

crictl pull registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.9

crictl pull registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.5.6-0

crictl pull registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:v1.9.3

# calico images

crictl pull registry.cn-beijing.aliyuncs.com/dotbalo/cni:v3.24.0

crictl pull registry.cn-beijing.aliyuncs.com/dotbalo/kube-controllers:v3.24.0

crictl pull registry.cn-beijing.aliyuncs.com/dotbalo/typha:v3.24.0

crictl pull registry.cn-beijing.aliyuncs.com/dotbalo/node:v3.24.0

# metrics-server (components) image

crictl pull registry.cn-beijing.aliyuncs.com/dotbalo/metrics-server:0.6.1

Method 2: offline import. Prepare the image tar files on another machine first, then import them.

# control-plane images

ctr -n k8s.io images import coredns-v1.9.3.tar

ctr -n k8s.io images import etcd-3.5.6-0.tar

ctr -n k8s.io images import kube-apiserver-v1.26.0.tar

ctr -n k8s.io images import kube-controller-manager-v1.26.0.tar

ctr -n k8s.io images import kube-proxy-v1.26.0.tar

ctr -n k8s.io images import kube-scheduler-v1.26.0.tar

ctr -n k8s.io images import pause-3.9.tar

# calico images

ctr -n k8s.io images import cni-v3.24.0.tar

ctr -n k8s.io images import kube-controllers-v3.24.0.tar

ctr -n k8s.io images import node-v3.24.0.tar

ctr -n k8s.io images import typha-v3.24.0.tar

# metrics-server (components) image

ctr -n k8s.io images import metrics-server-0.6.1.tar
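Method 2 assumes the tar files already exist. One way to produce them on a machine with network access is to export each image under a file name derived from its reference. The helper below only computes those names, following the naming pattern of the import commands above; `image_to_tar_name` is a hypothetical name, and the pull/export lines in the comments are left for the reader to run.

```shell
# image_to_tar_name IMAGE_REF
# registry/path/name:tag  ->  name-tag.tar
image_to_tar_name() {
  ref=${1##*/}    # drop the registry host and namespace
  printf '%s.tar\n' "$(printf '%s' "$ref" | tr ':' '-')"
}

repo=registry.cn-hangzhou.aliyuncs.com/google_containers
for img in \
  "$repo/kube-apiserver:v1.26.0" \
  "$repo/kube-controller-manager:v1.26.0" \
  "$repo/kube-scheduler:v1.26.0" \
  "$repo/kube-proxy:v1.26.0" \
  "$repo/pause:3.9" \
  "$repo/etcd:3.5.6-0" \
  "$repo/coredns:v1.9.3"
do
  # On the connected machine, the export itself would be:
  #   ctr -n k8s.io images pull "$img"
  #   ctr -n k8s.io images export "$(image_to_tar_name "$img")" "$img"
  echo "$img -> $(image_to_tar_name "$img")"
done
```

When the connected machine and the cluster nodes differ in architecture, the images have to be pulled for the target platform, which this sketch does not handle.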

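Method 3: pull the images with kubeadm. (Recommended when network access is available; in that case the calico, metrics-server and dashboard images also need no separate manual pull later. If the network is unreliable, use method 1 and pull the images one at a time; with no network at all, method 2 is the only option.)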

kubeadm config images pull --image-repository registry.cn-hangzhou.aliyuncs.com/google_containers

[root@kmaster1 ~]# kubeadm config images pull --image-repository registry.cn-hangzhou.aliyuncs.com/google_containers

[config/images] Pulled registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver:v1.26.0

[config/images] Pulled registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager:v1.26.0

[config/images] Pulled registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler:v1.26.0

[config/images] Pulled registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy:v1.26.0

[config/images] Pulled registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.9

[config/images] Pulled registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.5.6-0

[config/images] Pulled registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:v1.9.3

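Initialize the first master node

With the load balancer installed, use the VIP and port 8443 as the control-plane endpoint; with a single master, use that master's own IP and the default port 6443 instead. Run this on the first master only, and make sure the VIP is currently held by that server. The kube-apiserver itself keeps listening on 6443, which is where the haproxy backends point, so it does not collide with haproxy on 8443.

kubeadm init --control-plane-endpoint 192.168.1.2:8443 --apiserver-advertise-address 192.168.1.3 --image-repository registry.cn-hangzhou.aliyuncs.com/google_containers --cri-socket "unix:///var/run/containerd/containerd.sock" --kubernetes-version 1.26.0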

Output like the following indicates success:

[init] Using Kubernetes version: v1.26.0

[preflight] Running pre-flight checks

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [kmaster1 kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 192.168.1.2]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [kmaster1 localhost] and IPs [192.168.1.2 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [kmaster1 localhost] and IPs [192.168.1.2 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[apiclient] All control plane components are healthy after 6.003773 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Skipping phase. Please see --upload-certs

[mark-control-plane] Marking the node kmaster1 as control-plane by adding the labels: [node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers]

[mark-control-plane] Marking the node kmaster1 as control-plane by adding the taints [node-role.kubernetes.io/control-plane:NoSchedule]

[bootstrap-token] Using token: styztp.kt842zi3r4lc5ez8

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to get nodes

[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] Configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] Configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.1.2:8443 --token styztp.kt842zi3r4lc5ez8 \

--discovery-token-ca-cert-hash sha256:85d216d87b847ca609cd3bfe0099ff2dd776bc33ca33586db2dac354e720a80f

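Copy the join command printed by the initialization and run it on each worker node. The worker must already have completed the IPVS, containerd and kubelet installation steps above, otherwise the join fails; check carefully which servers each step in this document has to run on.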

kubeadm join 192.168.1.2:8443 --token styztp.kt842zi3r4lc5ez8 \

--discovery-token-ca-cert-hash sha256:85d216d87b847ca609cd3bfe0099ff2dd776bc33ca33586db2dac354e720a80f

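Adding master nodes: the initialization output does not show how additional masters join the cluster, so generate the join information by hand on the first master, then run the join on the new master.

# On the first master, generate a new token

kubeadm token create --print-join-command

# On the first master, upload the control-plane certificates and print the certificate key for new masters

kubeadm init phase upload-certs --upload-certs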

# Combine the output of the two commands above and run the result on the new master

kubeadm join 192.168.1.2:8443 --token styztp.kt842zi3r4lc5ez8 \

--discovery-token-ca-cert-hash sha256:85d216d87b847ca609cd3bfe0099ff2dd776bc33ca33586db2dac354e720a80f \

--control-plane --certificate-key e799a655f667fc327ab8c91f4f2541b57b96d2693ab5af96314ebddea7a68526
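Assembling the control-plane join command by hand is error-prone, so here is a small sketch that glues the two pieces together and rejects an obviously malformed certificate key. `build_control_plane_join` is a hypothetical helper; the 64-hex-character check reflects the hex-encoded 32-byte key that kubeadm prints, and the token, hash and key are the sample values from this article.

```shell
# build_control_plane_join JOIN_CMD CERT_KEY
# Prints the worker join command extended with the control-plane flags.
build_control_plane_join() {
  join_cmd=$1
  cert_key=$2
  # A kubeadm certificate key is 32 bytes, hex-encoded: 64 hex characters.
  printf '%s' "$cert_key" | grep -Eq '^[0-9a-fA-F]{64}$' || {
    echo "certificate key must be 64 hex characters" >&2
    return 1
  }
  printf '%s --control-plane --certificate-key %s\n' "$join_cmd" "$cert_key"
}

# Demo with the sample values shown above.
build_control_plane_join \
  "kubeadm join 192.168.1.2:8443 --token styztp.kt842zi3r4lc5ez8 --discovery-token-ca-cert-hash sha256:85d216d87b847ca609cd3bfe0099ff2dd776bc33ca33586db2dac354e720a80f" \
  "e799a655f667fc327ab8c91f4f2541b57b96d2693ab5af96314ebddea7a68526"
```

kubeadm can also produce the full command itself: passing `--certificate-key <key>` together with `--print-join-command` to `kubeadm token create` prints a join command that already includes the control-plane flags.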

Run the following commands on every master

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

export KUBECONFIG=/etc/kubernetes/admin.conf

Install calico

Download: https://raw.githubusercontent.com/projectcalico/calico/v3.24.0/manifests/calico.yaml

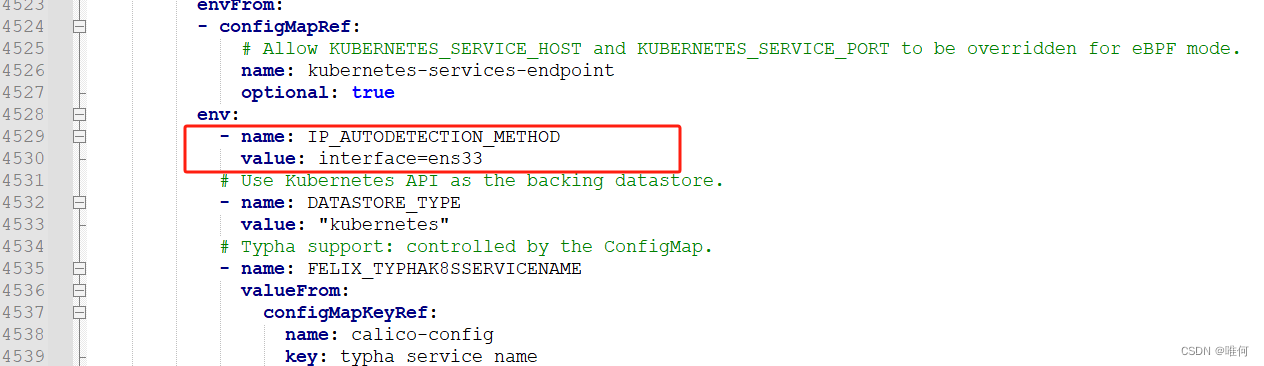

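On hosts with several network interfaces, or if calico reports interface errors, edit calico.yaml around line 4530 (the env list of the calico-node container) and add the following variable to pin the interface.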

- name: IP_AUTODETECTION_METHOD

value: interface=ens33

kubectl create -f calico.yaml
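Nodes stay NotReady until the network plugin is running, so a quick way to confirm the calico rollout is to list the nodes that are still not Ready. A minimal sketch that filters `kubectl get nodes --no-headers` output; `not_ready_nodes` is a hypothetical helper and the sample lines are canned.

```shell
# not_ready_nodes
# Reads `kubectl get nodes --no-headers` output on stdin and prints the
# name of every node whose STATUS column does not start with "Ready".
not_ready_nodes() {
  awk '$2 !~ /^Ready/ { print $1 }'
}

# Demo on canned input.
printf '%s\n' \
  'kmaster1   Ready      control-plane   10m   v1.26.12' \
  'knode1     NotReady   <none>          2m    v1.26.12' | not_ready_nodes
```

Against the live cluster: `kubectl get nodes --no-headers | not_ready_nodes`. Empty output means every node reports Ready.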

With that, the Kubernetes installation is complete.
