Chapter 1: PIP installation on Windows

Official installation guide

Follow the steps in that guide to install.

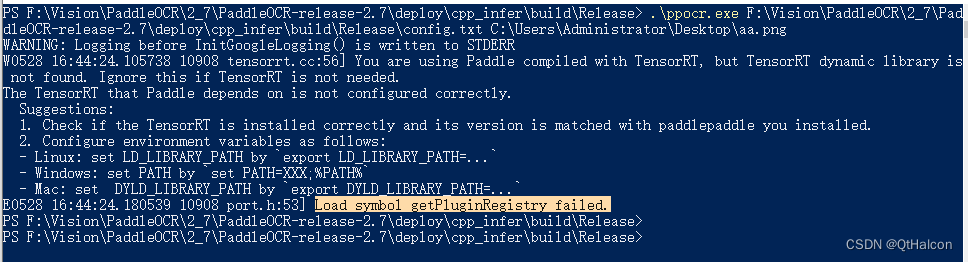

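If you use the CUDA build, you also need to install TensorRT. Otherwise the demo program will report an error when you run it later.

Download TensorRT 8 from the TensorRT download page.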

Chapter 2: Building from source

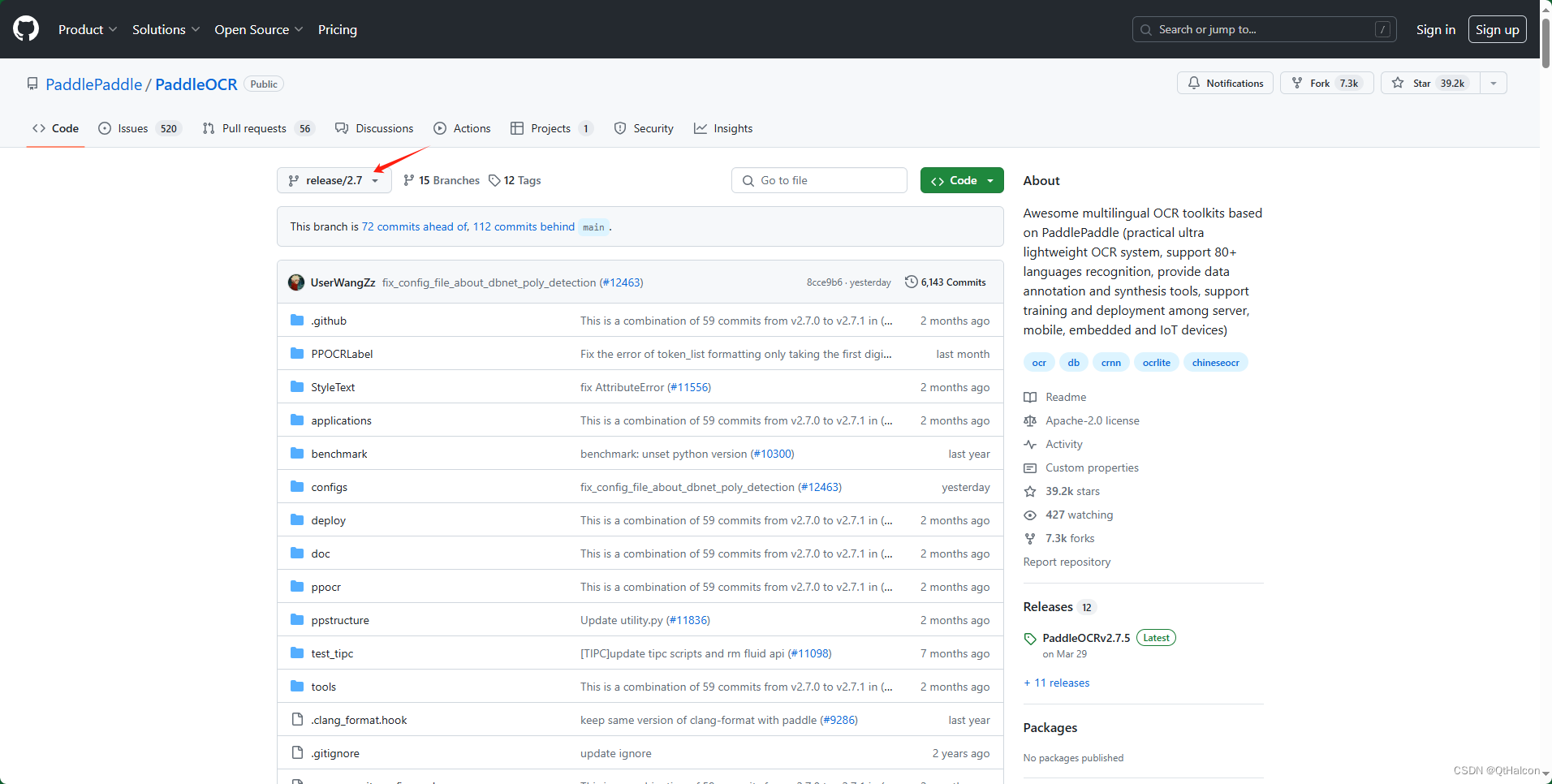

进入官网源码地址

下载release2.7

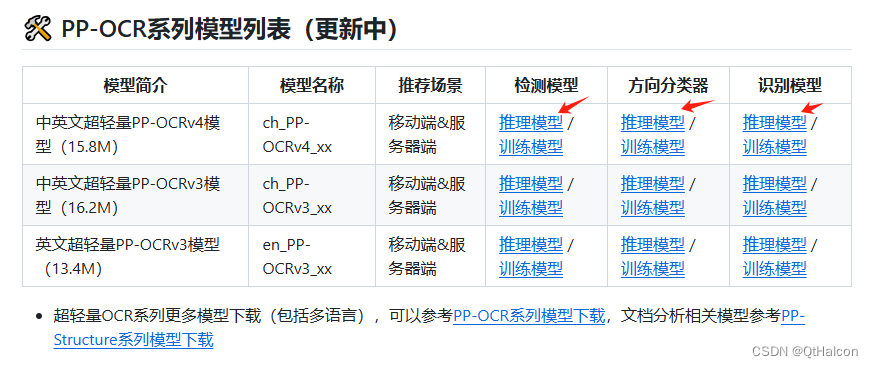

下载三个模型

下载推理预测库

官网下载地址,根据自己的情况下载CPU或对应的自己CUDA版本的GPU

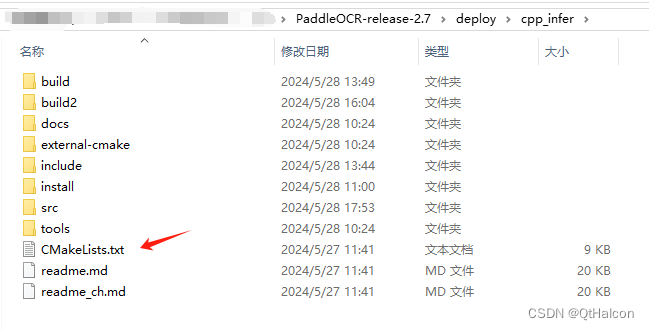

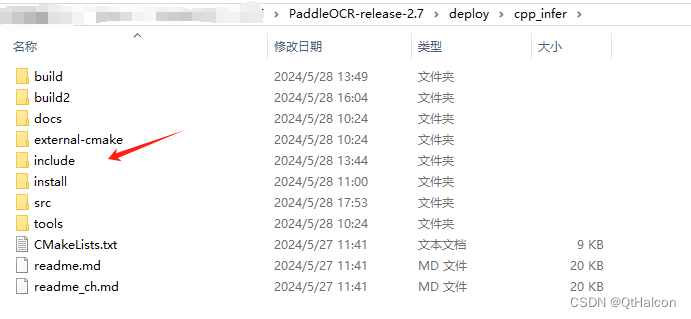

用CMAK打开如下文件

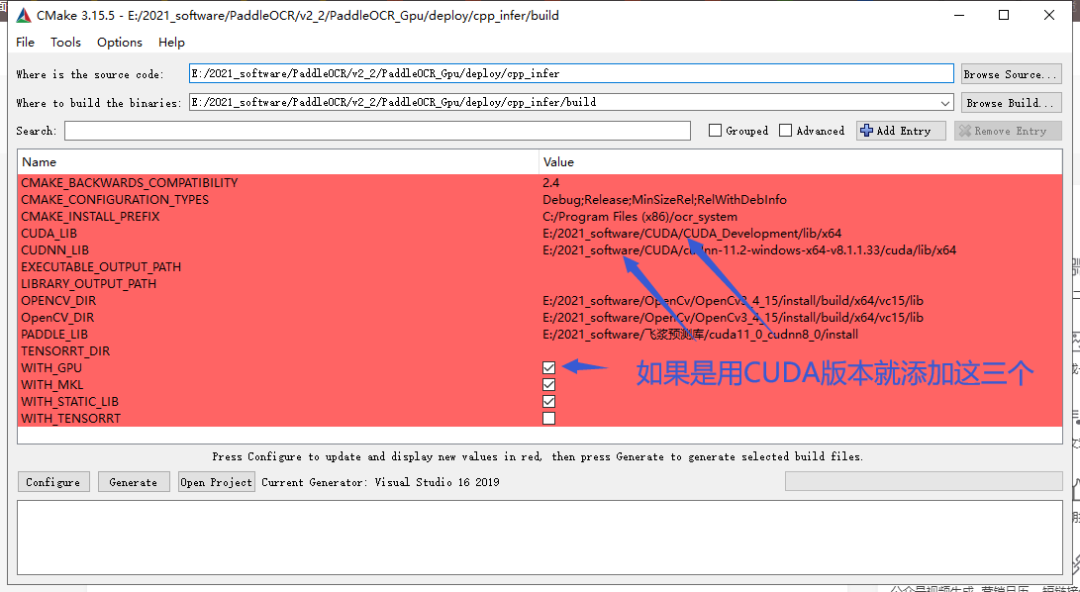

添加路径OpenCV路径和预测库路径。

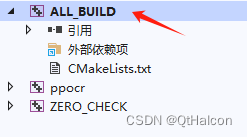

然后Configers,再Generate,如果有报错不用管他,最后在你的构建目录生成了项目,然后开始编译。

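However, the build fails:

In utility.cpp: `Cannot open include file: 'dirent.h': No such file or directory`. `<dirent.h>` is a common interface on Unix systems, but the MSVC compiler on Windows does not provide it. This complicates cross-platform projects, because building under MSVC then requires separate Windows-specific code.

Fortunately, someone has already written a Windows version of `<dirent.h>` and published it on GitHub:

https://github.com/tronkko/dirent

After downloading, put `dirent.h` into the project's `include` folder. The Qt .pro file later references it as `include/dirent.h`.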

然后重新编译,但是会报错找不到_stat,做如下修改就可以了。

//修改前

struct stat s;

_stat(dir_name, &s);

//修改后

struct _stat64 s;

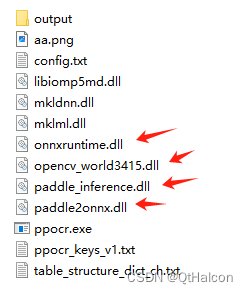

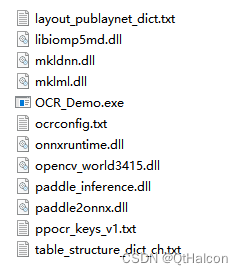

_stat64(dir_name, &s);最后就可以生成成功,生成成功要将这几个dll复制到程序目录。

The onnxruntime and paddle2onnx DLLs are located here:

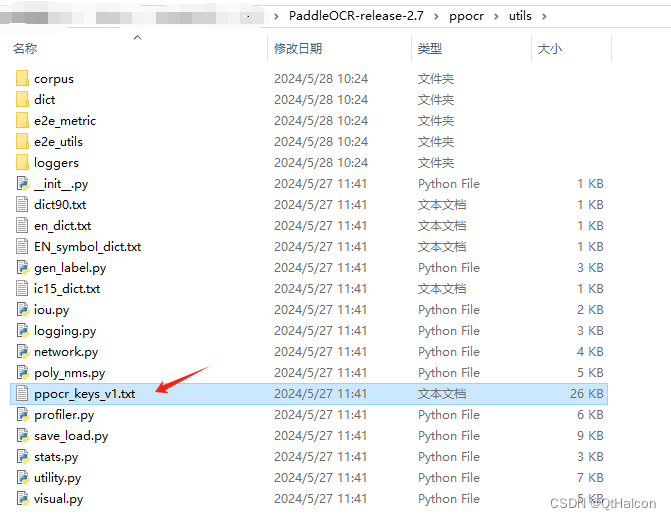

Copy this file into the program directory:

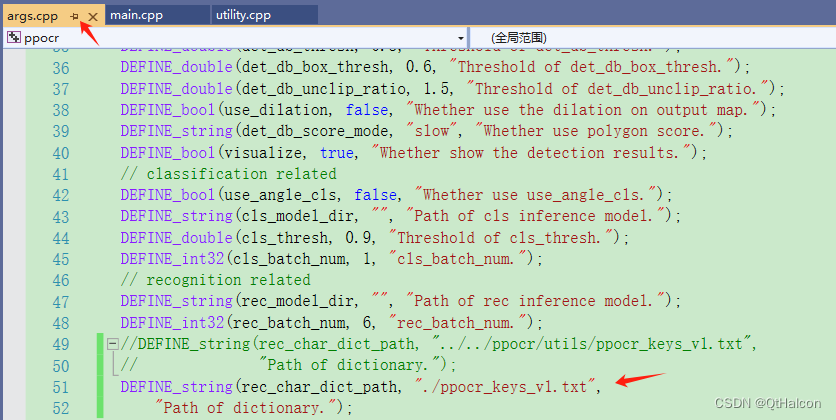

The path to this file in the source code must also be changed accordingly:

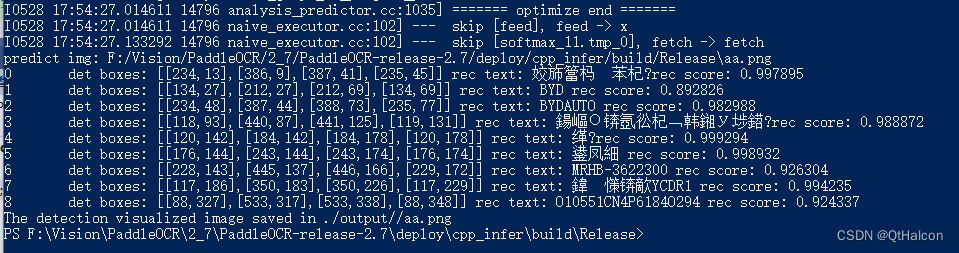

Then run the program from the command line, adjusting the paths below to your setup:

```shell
./ppocr.exe --det_model_dir=F:/Vision/PaddleOCR/2_7/model/ch_PP-OCRv4_det_infer --cls_model_dir=F:/Vision/PaddleOCR/2_7/model/ch_ppocr_mobile_v2.0_cls_infer --rec_model_dir=F:/Vision/PaddleOCR/2_7/model/ch_PP-OCRv4_rec_infer --image_dir=F:/Vision/PaddleOCR/2_7/PaddleOCR-release-2.7/deploy/cpp_infer/build/Release/aa.png
```

The result of the run is as follows.

Chapter 3: Building and running in Qt

The .pro file:

```qmake
QT += core gui
greaterThan(QT_MAJOR_VERSION, 4): QT += widgets

CONFIG += c++17

DEFINES += QT_DEPRECATED_WARNINGS

##################################################################
# Output locations for generated files
##################################################################
MOC_DIR = $$PWD/temp/moc
RCC_DIR = $$PWD/temp/rcc
UI_DIR = $$PWD/temp/ui
OBJECTS_DIR = $$PWD/temp/obj
DESTDIR = $$PWD/temp/bin

# Static MSVC runtime (/MT, /MTd)
CONFIG(debug, debug|release) {
    QMAKE_CXXFLAGS_DEBUG += /MTd
}
CONFIG(release, debug|release) {
    QMAKE_CXXFLAGS_RELEASE += /MT
}

PaddleOCR_ROOT = F:/Vision/PaddleOCR/2_7/OCR_Demo/OCR_Demo/ocr

SOURCES += \
#   $$PaddleOCR_ROOT/src/args.cpp \
    $$PaddleOCR_ROOT/src/clipper.cpp \
    $$PaddleOCR_ROOT/src/ocr_cls.cpp \
    $$PaddleOCR_ROOT/src/ocr_det.cpp \
    $$PaddleOCR_ROOT/src/ocr_rec.cpp \
#   $$PaddleOCR_ROOT/src/paddleocr.cpp \
#   $$PaddleOCR_ROOT/src/paddlestructure.cpp \
    $$PaddleOCR_ROOT/src/postprocess_op.cpp \
    $$PaddleOCR_ROOT/src/preprocess_op.cpp \
    $$PaddleOCR_ROOT/src/structure_layout.cpp \
    $$PaddleOCR_ROOT/src/structure_table.cpp \
    $$PaddleOCR_ROOT/src/utility.cpp \
    ScreenWidget/screen.cpp \
    ScreenWidget/screenwidget.cpp \
    main.cpp \
    mainwindow.cpp \
    my_config.cpp \
    my_paddleocr.cpp

HEADERS += \
    $$PaddleOCR_ROOT/include/ocr_cls.h \
    $$PaddleOCR_ROOT/include/ocr_det.h \
    $$PaddleOCR_ROOT/include/ocr_rec.h \
#   $$PaddleOCR_ROOT/include/paddleocr.h \
#   $$PaddleOCR_ROOT/include/paddlestructure.h \
    $$PaddleOCR_ROOT/include/postprocess_op.h \
    $$PaddleOCR_ROOT/include/preprocess_op.h \
    $$PaddleOCR_ROOT/include/structure_layout.h \
    $$PaddleOCR_ROOT/include/structure_table.h \
    $$PaddleOCR_ROOT/include/utility.h \
#   $$PaddleOCR_ROOT/include/args.h \
    $$PaddleOCR_ROOT/include/clipper.h \
    $$PaddleOCR_ROOT/include/dirent.h \
    ScreenWidget/screen.h \
    ScreenWidget/screenwidget.h \
    mainwindow.h \
    my_config.h \
    my_paddleocr.h

FORMS += \
    mainwindow.ui

INCLUDEPATH += $$PaddleOCR_ROOT
INCLUDEPATH += $$PaddleOCR_ROOT/include
INCLUDEPATH += $$PWD/ScreenWidget

# Paddle inference library (CUDA 11.0 build)
Inference_ROOT = F:/Vision/PaddleOCR/2_7/prelib/cuda11_0
INCLUDEPATH += $$Inference_ROOT/paddle/include
INCLUDEPATH += $$Inference_ROOT/third_party/install/protobuf/include
INCLUDEPATH += $$Inference_ROOT/third_party/install/glog/include
#INCLUDEPATH += $$Inference_ROOT/third_party/install/gflags/include
INCLUDEPATH += $$Inference_ROOT/third_party/install/xxhash/include
INCLUDEPATH += $$Inference_ROOT/third_party/install/mklml/include
INCLUDEPATH += $$Inference_ROOT/third_party/install/mkldnn/include

LIBS += -L$$Inference_ROOT/paddle/lib -lpaddle_inference
LIBS += -L$$Inference_ROOT/third_party/install/mklml/lib -lmklml
LIBS += -L$$Inference_ROOT/third_party/install/mklml/lib -llibiomp5md
LIBS += -L$$Inference_ROOT/third_party/install/mkldnn/lib -lmkldnn
LIBS += -L$$Inference_ROOT/third_party/install/glog/lib -lglog
#LIBS += -L$$Inference_ROOT/third_party/install/gflags/lib -lgflags_static
LIBS += -L$$Inference_ROOT/third_party/install/protobuf/lib -llibprotobuf
LIBS += -L$$Inference_ROOT/third_party/install/xxhash/lib -lxxhash

# OpenCV 3.4.15 (prebuilt, vc15)
OpenCV_ROOT = E:/2021_software/OpenCv/OpenCv3_4_15/install/opencv/build
INCLUDEPATH += $$OpenCV_ROOT/include
INCLUDEPATH += $$OpenCV_ROOT/include/opencv
INCLUDEPATH += $$OpenCV_ROOT/include/opencv2
LIBS += -L$$OpenCV_ROOT/x64/vc15/lib -lopencv_world3415
```

Define a custom config class.

my_config.h:

```cpp
#pragma once

#include <iomanip>
#include <iostream>
#include <map>
#include <ostream>
#include <string>
#include <vector>

#include "include/utility.h"

using namespace PaddleOCR;

class MY_OCRConfig {
public:
  explicit MY_OCRConfig(const std::string &config_file);

  // common args
  bool use_gpu = false;
  bool use_tensorrt = false;
  int gpu_id = 0;
  int gpu_mem = 4000;
  int cpu_threads = 10;
  bool enable_mkldnn = false;
  std::string precision = "fp32";
  bool benchmark = false;
  std::string output = "./output/";
  std::string image_dir = "";
  std::string type = "ocr";

  // detection related
  std::string det_model_dir = "";
  std::string limit_type = "max";
  int limit_side_len = 960;
  double det_db_thresh = 0.3;
  double det_db_box_thresh = 0.6;
  double det_db_unclip_ratio = 1.5;
  bool use_dilation = false;
  std::string det_db_score_mode = "slow";
  bool visualize = true;

  // classification related
  bool use_angle_cls = false;
  std::string cls_model_dir = "";
  double cls_thresh = 0.9;
  int cls_batch_num = 1;

  // recognition related
  std::string rec_model_dir = "";
  int rec_batch_num = 6;
  std::string rec_char_dict_path = "./ppocr_keys_v1.txt";
  int rec_img_h = 48;
  int rec_img_w = 320;

  // layout model related
  std::string layout_model_dir = "";
  std::string layout_dict_path =
      "../../ppocr/utils/dict/layout_dict/layout_publaynet_dict.txt";
  double layout_score_threshold = 0.5;
  double layout_nms_threshold = 0.5;

  // structure model related
  std::string table_model_dir = "";
  int table_max_len = 488;
  int table_batch_num = 1;
  bool merge_no_span_structure = true;
  std::string table_char_dict_path =
      "../../ppocr/utils/dict/table_structure_dict_ch.txt";

  // ocr forward related
  bool det = true;
  bool rec = true;
  bool cls = false;
  bool table = false;
  bool layout = false;

private:
  // Load configuration: one "key value" pair per line
  std::map<std::string, std::string> LoadConfig(const std::string &config_file);

  std::vector<std::string> split(const std::string &str,
                                 const std::string &delim);

  std::map<std::string, std::string> config_map_;
};
```

my_config.cpp:

```cpp
#include "my_config.h"

#include <cstring>  // std::strcpy, std::strtok
#include <string>
#include <qdebug.h>

// Split `str` on any character in `delim` (strtok semantics: consecutive
// delimiters are collapsed and empty tokens are dropped).
std::vector<std::string> MY_OCRConfig::split(const std::string &str,
                                             const std::string &delim) {
  std::vector<std::string> res;
  if ("" == str)
    return res;

  // strtok modifies its input, so work on writable copies
  int strlen = str.length() + 1;
  char *strs = new char[strlen];
  std::strcpy(strs, str.c_str());

  int delimlen = delim.length() + 1;
  char *d = new char[delimlen];
  std::strcpy(d, delim.c_str());

  char *p = std::strtok(strs, d);
  while (p) {
    std::string s = p;
    res.push_back(s);
    p = std::strtok(NULL, d);
  }

  delete[] strs;
  delete[] d;
  return res;
}

std::map<std::string, std::string>
MY_OCRConfig::LoadConfig(const std::string &config_path) {
  auto config = Utility::ReadDict(config_path);  // one string per line
  std::map<std::string, std::string> dict;
  for (size_t i = 0; i < config.size(); i++) {
    // skip empty lines and comments
    if (config[i].size() <= 1 || config[i][0] == '#') {
      continue;
    }
    // first token is the key, second token is the value
    std::vector<std::string> res = split(config[i], " ");
    if (res.empty()) {
      continue;  // line contained only spaces
    }
    if (res.size() < 2) {
      dict[res[0]] = "";  // key without a value, e.g. "image_dir"
    } else {
      dict[res[0]] = res[1];
    }
  }
  return dict;
}

// Note: std::stoi / std::stod throw std::invalid_argument if a numeric key
// is missing from the config file, so every key below must be present.
MY_OCRConfig::MY_OCRConfig(const std::string &config_file) {
  config_map_ = LoadConfig(config_file);

  // common args
  this->use_gpu = (config_map_["use_gpu"] == "true");
  this->use_tensorrt = (config_map_["use_tensorrt"] == "true");
  this->gpu_id = std::stoi(config_map_["gpu_id"]);
  this->gpu_mem = std::stoi(config_map_["gpu_mem"]);
  this->cpu_threads = std::stoi(config_map_["cpu_threads"]);
  this->enable_mkldnn = (config_map_["enable_mkldnn"] == "true");
  this->precision = config_map_["precision"];
  this->benchmark = (config_map_["benchmark"] == "true");
  this->output = config_map_["output"];
  this->image_dir = config_map_["image_dir"];
  this->type = config_map_["type"];

  // detection related
  this->det_model_dir = config_map_["det_model_dir"];
  this->limit_type = config_map_["limit_type"];
  this->limit_side_len = std::stoi(config_map_["limit_side_len"]);
  this->det_db_thresh = std::stod(config_map_["det_db_thresh"]);
  this->det_db_box_thresh = std::stod(config_map_["det_db_box_thresh"]);
  this->det_db_unclip_ratio = std::stod(config_map_["det_db_unclip_ratio"]);
  this->use_dilation = (config_map_["use_dilation"] == "true");
  this->det_db_score_mode = config_map_["det_db_score_mode"];
  this->visualize = (config_map_["visualize"] == "true");

  // classification related
  this->use_angle_cls = (config_map_["use_angle_cls"] == "true");
  this->cls_model_dir = config_map_["cls_model_dir"];
  this->cls_thresh = std::stod(config_map_["cls_thresh"]);
  this->cls_batch_num = std::stoi(config_map_["cls_batch_num"]);

  // recognition related
  this->rec_model_dir = config_map_["rec_model_dir"];
  this->rec_batch_num = std::stoi(config_map_["rec_batch_num"]);
  this->rec_char_dict_path = config_map_["rec_char_dict_path"];
  this->rec_img_h = std::stoi(config_map_["rec_img_h"]);
  this->rec_img_w = std::stoi(config_map_["rec_img_w"]);

  // layout model related
  this->layout_model_dir = config_map_["layout_model_dir"];
  this->layout_dict_path = config_map_["layout_dict_path"];
  this->layout_score_threshold = std::stod(config_map_["layout_score_threshold"]);
  this->layout_nms_threshold = std::stod(config_map_["layout_nms_threshold"]);

  // structure model related
  this->table_model_dir = config_map_["table_model_dir"];
  this->table_max_len = std::stoi(config_map_["table_max_len"]);
  this->table_batch_num = std::stoi(config_map_["table_batch_num"]);
  this->merge_no_span_structure =
      (config_map_["merge_no_span_structure"] == "true");
  this->table_char_dict_path = config_map_["table_char_dict_path"];

  // ocr forward related
  this->det = (config_map_["det"] == "true");
  this->rec = (config_map_["rec"] == "true");
  this->cls = (config_map_["cls"] == "true");
  this->table = (config_map_["table"] == "true");
  this->layout = (config_map_["layout"] == "true");

  // Debug output: print a few parsed values
  qDebug() << this->det << config_map_["det"].c_str()
           << QString(config_map_["det"].c_str()) << this->rec << this->cls
           << this->rec_model_dir.c_str();
}
```
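The loader above has two fragile spots. First, `split` breaks on every space, so a value that itself contains a space (for example a model directory under `C:\Program Files`) is cut at the first space. Second, `std::stoi`/`std::stod` throw `std::invalid_argument` when a numeric key is missing. A more forgiving variant might look like the sketch below. `ParseConfigLine`, `GetInt`, `GetDouble` and `GetBool` are hypothetical helpers, not PaddleOCR APIs:

```cpp
#include <cctype>
#include <exception>
#include <map>
#include <stdexcept>
#include <string>

using ConfigMap = std::map<std::string, std::string>;

// Split "key value" at the first run of whitespace so the value may contain
// spaces. Trailing whitespace (including a '\r' from CRLF files) is stripped.
// Returns false for blank lines and '#' comments.
bool ParseConfigLine(std::string line, std::string &key, std::string &value) {
  while (!line.empty() &&
         std::isspace(static_cast<unsigned char>(line.back()))) {
    line.pop_back();
  }
  size_t start = line.find_first_not_of(" \t");
  if (start == std::string::npos || line[start] == '#') return false;
  size_t key_end = line.find_first_of(" \t", start);
  key = line.substr(start, key_end - start);  // substr clamps when npos
  if (key_end == std::string::npos) {
    value.clear();  // key without a value, e.g. "image_dir"
  } else {
    size_t val_start = line.find_first_not_of(" \t", key_end);
    value = (val_start == std::string::npos) ? "" : line.substr(val_start);
  }
  return true;
}

// Typed lookups that keep `fallback` when the key is missing or malformed.
int GetInt(const ConfigMap &m, const std::string &key, int fallback) {
  auto it = m.find(key);
  if (it == m.end()) return fallback;
  try {
    return std::stoi(it->second);
  } catch (const std::exception &) {  // invalid_argument or out_of_range
    return fallback;
  }
}

double GetDouble(const ConfigMap &m, const std::string &key, double fallback) {
  auto it = m.find(key);
  if (it == m.end()) return fallback;
  try {
    return std::stod(it->second);
  } catch (const std::exception &) {
    return fallback;
  }
}

// As in the original loader, only the literal "true" counts as true.
bool GetBool(const ConfigMap &m, const std::string &key, bool fallback) {
  auto it = m.find(key);
  if (it == m.end() || it->second.empty()) return fallback;
  return it->second == "true";
}
```

In the constructor, a line such as `this->gpu_id = GetInt(config_map_, "gpu_id", this->gpu_id);` would keep the in-class default whenever the key is absent or not a number.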

Define a custom OCR class.

my_paddleocr.h:

```cpp
#pragma once

#include <memory>
#include <string>
#include <vector>

#include <include/ocr_cls.h>
#include <include/ocr_det.h>
#include <include/ocr_rec.h>

#include "my_config.h"

using namespace PaddleOCR;

class MY_PPOCR {
public:
  explicit MY_PPOCR();
  ~MY_PPOCR();

  // One result vector per input image
  std::vector<std::vector<OCRPredictResult>> ocr(std::vector<cv::Mat> img_list,
                                                 bool det = true,
                                                 bool rec = true,
                                                 bool cls = true);

  std::vector<OCRPredictResult> ocr(cv::Mat img, bool det = true,
                                    bool rec = true, bool cls = true);

  void reset_timer();
  void benchmark_log(int img_num);  // declared, but not implemented in this post

  MY_OCRConfig *p_config;

protected:
  // accumulated timings reported by each predictor's Run()
  std::vector<double> time_info_det = {0, 0, 0};
  std::vector<double> time_info_rec = {0, 0, 0};
  std::vector<double> time_info_cls = {0, 0, 0};

  void det(cv::Mat img, std::vector<OCRPredictResult> &ocr_results);
  void rec(std::vector<cv::Mat> img_list,
           std::vector<OCRPredictResult> &ocr_results);
  void cls(std::vector<cv::Mat> img_list,
           std::vector<OCRPredictResult> &ocr_results);

private:
  std::unique_ptr<DBDetector> detector_;
  std::unique_ptr<Classifier> classifier_;
  std::unique_ptr<CRNNRecognizer> recognizer_;
};
```

my_paddleocr.cpp:

```cpp
#include "my_paddleocr.h"

#include <qdebug.h>
//#include "auto_log/autolog.h"

MY_PPOCR::MY_PPOCR() {
  qDebug() << "aaa1";
  // Load all settings from the custom config file in the working directory
  p_config = new MY_OCRConfig("./ocrconfig.txt");
  qDebug() << "aaa2";

  // Text detector (DB)
  if (p_config->det) {
    this->detector_.reset(new DBDetector(
        p_config->det_model_dir, p_config->use_gpu, p_config->gpu_id,
        p_config->gpu_mem, p_config->cpu_threads, p_config->enable_mkldnn,
        p_config->limit_type, p_config->limit_side_len, p_config->det_db_thresh,
        p_config->det_db_box_thresh, p_config->det_db_unclip_ratio,
        p_config->det_db_score_mode, p_config->use_dilation,
        p_config->use_tensorrt, p_config->precision));
  }
  qDebug() << "aaa3";

  // Direction classifier: only built when both cls and use_angle_cls are true
  if (p_config->cls && p_config->use_angle_cls) {
    this->classifier_.reset(new Classifier(
        p_config->cls_model_dir, p_config->use_gpu, p_config->gpu_id,
        p_config->gpu_mem, p_config->cpu_threads, p_config->enable_mkldnn,
        p_config->cls_thresh, p_config->use_tensorrt, p_config->precision,
        p_config->cls_batch_num));
  }
  qDebug() << "aaa4";

  // Text recognizer (CRNN)
  if (p_config->rec) {
    this->recognizer_.reset(new CRNNRecognizer(
        p_config->rec_model_dir, p_config->use_gpu, p_config->gpu_id,
        p_config->gpu_mem, p_config->cpu_threads, p_config->enable_mkldnn,
        p_config->rec_char_dict_path, p_config->use_tensorrt,
        p_config->precision, p_config->rec_batch_num, p_config->rec_img_h,
        p_config->rec_img_w));
  }
  qDebug() << "aaa5";
}

MY_PPOCR::~MY_PPOCR() { delete p_config; }

std::vector<std::vector<OCRPredictResult>>
MY_PPOCR::ocr(std::vector<cv::Mat> img_list, bool det, bool rec, bool cls) {
  std::vector<std::vector<OCRPredictResult>> ocr_results;

  if (!det) {
    // No detection: treat each input image as one already-cropped text line
    std::vector<OCRPredictResult> ocr_result;
    ocr_result.resize(img_list.size());
    if (cls && this->classifier_) {
      this->cls(img_list, ocr_result);
      for (size_t i = 0; i < img_list.size(); i++) {
        // odd label (180 degrees) with a confident score: flip the crop
        if (ocr_result[i].cls_label % 2 == 1 &&
            ocr_result[i].cls_score > this->classifier_->cls_thresh) {
          cv::rotate(img_list[i], img_list[i], cv::ROTATE_180);
        }
      }
    }
    if (rec) {
      this->rec(img_list, ocr_result);
    }
    // wrap each single result in its own per-image vector
    for (size_t i = 0; i < ocr_result.size(); ++i) {
      std::vector<OCRPredictResult> ocr_result_tmp;
      ocr_result_tmp.push_back(ocr_result[i]);
      ocr_results.push_back(ocr_result_tmp);
    }
  } else {
    for (size_t i = 0; i < img_list.size(); ++i) {
      std::vector<OCRPredictResult> ocr_result =
          this->ocr(img_list[i], true, rec, cls);
      ocr_results.push_back(ocr_result);
    }
  }
  return ocr_results;
}

// Note: `det` is not checked here; detection always runs for a single image.
std::vector<OCRPredictResult> MY_PPOCR::ocr(cv::Mat img, bool det, bool rec,
                                            bool cls) {
  std::vector<OCRPredictResult> ocr_result;

  // det
  this->det(img, ocr_result);

  // crop each detected box out of the image
  std::vector<cv::Mat> img_list;
  for (size_t j = 0; j < ocr_result.size(); j++) {
    cv::Mat crop_img;
    crop_img = Utility::GetRotateCropImage(img, ocr_result[j].box);
    img_list.push_back(crop_img);
  }

  // cls
  if (cls && this->classifier_) {
    this->cls(img_list, ocr_result);
    for (size_t i = 0; i < img_list.size(); i++) {
      if (ocr_result[i].cls_label % 2 == 1 &&
          ocr_result[i].cls_score > this->classifier_->cls_thresh) {
        cv::rotate(img_list[i], img_list[i], cv::ROTATE_180);
      }
    }
  }

  // rec
  if (rec) {
    this->rec(img_list, ocr_result);
  }
  return ocr_result;
}

void MY_PPOCR::det(cv::Mat img, std::vector<OCRPredictResult> &ocr_results) {
  std::vector<std::vector<std::vector<int>>> boxes;
  std::vector<double> det_times;

  this->detector_->Run(img, boxes, det_times);

  for (size_t i = 0; i < boxes.size(); i++) {
    OCRPredictResult res;
    res.box = boxes[i];
    ocr_results.push_back(res);
  }
  // sort boxes from top to bottom, from left to right
  Utility::sorted_boxes(ocr_results);
  this->time_info_det[0] += det_times[0];
  this->time_info_det[1] += det_times[1];
  this->time_info_det[2] += det_times[2];
}

void MY_PPOCR::rec(std::vector<cv::Mat> img_list,
                   std::vector<OCRPredictResult> &ocr_results) {
  std::vector<std::string> rec_texts(img_list.size(), "");
  std::vector<float> rec_text_scores(img_list.size(), 0);
  std::vector<double> rec_times;

  this->recognizer_->Run(img_list, rec_texts, rec_text_scores, rec_times);

  // output rec results
  for (size_t i = 0; i < rec_texts.size(); i++) {
    ocr_results[i].text = rec_texts[i];
    ocr_results[i].score = rec_text_scores[i];
  }
  this->time_info_rec[0] += rec_times[0];
  this->time_info_rec[1] += rec_times[1];
  this->time_info_rec[2] += rec_times[2];
}

void MY_PPOCR::cls(std::vector<cv::Mat> img_list,
                   std::vector<OCRPredictResult> &ocr_results) {
  std::vector<int> cls_labels(img_list.size(), 0);
  std::vector<float> cls_scores(img_list.size(), 0);
  std::vector<double> cls_times;

  this->classifier_->Run(img_list, cls_labels, cls_scores, cls_times);

  // output cls results
  for (size_t i = 0; i < cls_labels.size(); i++) {
    ocr_results[i].cls_label = cls_labels[i];
    ocr_results[i].cls_score = cls_scores[i];
  }
  this->time_info_cls[0] += cls_times[0];
  this->time_info_cls[1] += cls_times[1];
  this->time_info_cls[2] += cls_times[2];
}

void MY_PPOCR::reset_timer() {
  this->time_info_det = {0, 0, 0};
  this->time_info_rec = {0, 0, 0};
  this->time_info_cls = {0, 0, 0};
}
```
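`det()` calls `Utility::sorted_boxes` to put the detected boxes in reading order ("from top to bottom, from left to right" per its comment). The sketch below illustrates that kind of ordering on plain coordinate vectors, so it runs without OpenCV or PaddleOCR. The 10-pixel row tolerance and the name `SortBoxesReadingOrder` are assumptions, not PaddleOCR's exact implementation:

```cpp
#include <algorithm>
#include <cstdlib>
#include <utility>
#include <vector>

// A quadrilateral: four [x, y] corner points with the top-left corner first
// (assumed to match the layout of OCRPredictResult::box).
using Box = std::vector<std::vector<int>>;

// Hypothetical helper: order boxes top-to-bottom, and left-to-right among
// boxes whose top-left y values differ by less than `row_tol` pixels.
void SortBoxesReadingOrder(std::vector<Box> &boxes, int row_tol = 10) {
  // Pass 1: sort by top-left y, breaking ties by x.
  std::sort(boxes.begin(), boxes.end(), [](const Box &a, const Box &b) {
    if (a[0][1] != b[0][1]) return a[0][1] < b[0][1];
    return a[0][0] < b[0][0];
  });
  // Pass 2: insertion-style fix-up so boxes on the same visual row
  // (y within row_tol) end up ordered by x.
  for (size_t i = 1; i < boxes.size(); ++i) {
    for (size_t j = i; j > 0; --j) {
      bool same_row = std::abs(boxes[j][0][1] - boxes[j - 1][0][1]) < row_tol;
      if (same_row && boxes[j][0][0] < boxes[j - 1][0][0]) {
        std::swap(boxes[j - 1], boxes[j]);
      } else {
        break;
      }
    }
  }
}
```

A pure y-sort would put a box that sits 3 px higher but further right ahead of its left neighbour. The tolerance pass fixes that for text lines whose boxes are slightly uneven.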

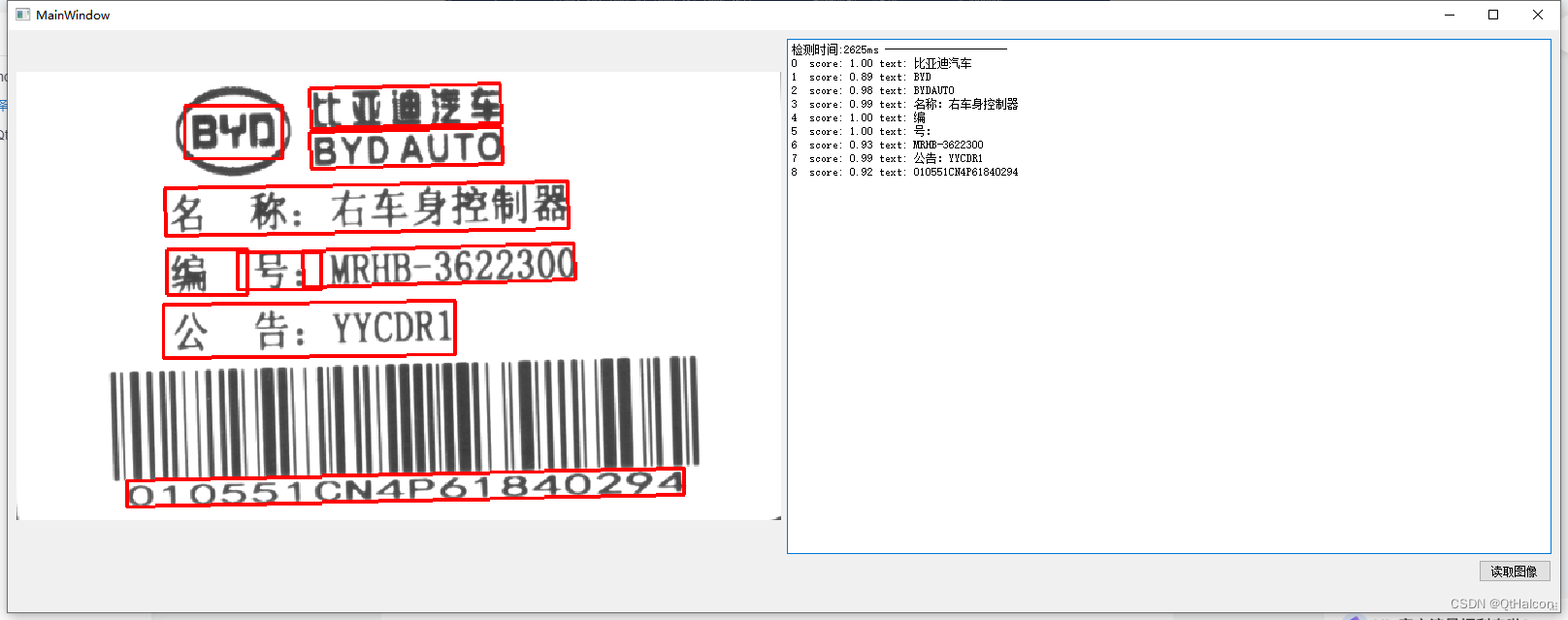
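Each `OCRPredictResult` carries a box, the recognized text and its score, and the classifier label and score. The display loop in the calling code below prints the recognition and classification parts only when they were set, which it detects by comparing against -1. The same formatting, reduced to standard C++ so it can be tested on its own, could look like this. `ResultLite` is a hypothetical stand-in for `OCRPredictResult`, and treating -1 as "not set" is inferred from those checks:

```cpp
#include <iomanip>
#include <sstream>
#include <string>

// Hypothetical stand-in for OCRPredictResult with only the displayed fields;
// -1 marks a stage that did not run (assumed, mirroring the -1 checks).
struct ResultLite {
  std::string text;
  float score = -1.0f;
  int cls_label = -1;
  float cls_score = 0.0f;
};

// Build one display line: index, then rec and cls info when available.
// Scores use two decimals, like QString::number(x, 'f', 2).
std::string FormatResultLine(int index, const ResultLite &r) {
  std::ostringstream os;
  os << index << std::fixed << std::setprecision(2);
  if (r.score != -1.0f) {
    os << " score: " << r.score << " text: " << r.text;
  }
  if (r.cls_label != -1) {
    os << " cls label: " << r.cls_label << " cls score: " << r.cls_score;
  }
  return os.str();
}
```

Formatting the classifier score explicitly as a number avoids the pitfall of concatenating a raw `float` onto a string.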

Core calling code:

```cpp
// Fragment from the author's MainWindow: p_ocr is a MY_PPOCR*, fileName is the
// selected image path; qstr2str() and cvMat2QImage() are helpers not shown here.
std::vector<cv::Mat> img_list;
cv::Mat srcimg = cv::imread(qstr2str(fileName).data(), cv::IMREAD_COLOR);
img_list.push_back(srcimg);
p_ocr->reset_timer();

// Run OCR and time it
QElapsedTimer RunTimer;
RunTimer.start();
std::vector<std::vector<OCRPredictResult>> ocr_results =
    p_ocr->ocr(img_list, p_ocr->p_config->det, p_ocr->p_config->rec,
               p_ocr->p_config->cls);
ui->textBrowser->append(
    QString("Detection time: %1 ms ---------------------").arg(RunTimer.elapsed()));

for (size_t i = 0; i < img_list.size(); ++i) {
  std::vector<OCRPredictResult> &ocr_result = ocr_results[i];

  // Print one line per detected text region (j, so the outer i is not shadowed)
  for (size_t j = 0; j < ocr_result.size(); j++) {
    QString oustr;
    oustr += QString::number(j) + " ";
    // det
    // std::vector<std::vector<int>> boxes = ocr_result[j].box;
    // if (boxes.size() > 0) {
    //   oustr += "det boxes: [";
    //   for (int n = 0; n < boxes.size(); n++) {
    //     oustr += "[" + QString::number(boxes[n][0]) + "," + QString::number(boxes[n][1]) + "]";
    //     if (n != boxes.size() - 1) {
    //       oustr += ",";
    //     }
    //   }
    //   oustr += "]";
    // }
    // rec
    if (ocr_result[j].score != -1.0) {
      oustr += " score: " + QString::number(ocr_result[j].score, 'f', 2) +
               " text: " + QString::fromUtf8(ocr_result[j].text.c_str()) + " ";
    }
    // cls: format the float score explicitly instead of appending a raw float
    if (ocr_result[j].cls_label != -1) {
      oustr += "cls label: " + QString::number(ocr_result[j].cls_label) +
               " cls score: " + QString::number(ocr_result[j].cls_score, 'f', 2);
    }
    ui->textBrowser->append(oustr);
  }

  // Draw each detected quadrilateral in red
  for (size_t n = 0; n < ocr_result.size(); n++) {
    cv::Point rook_points[4];
    for (size_t m = 0; m < ocr_result[n].box.size() && m < 4; m++) {
      rook_points[m] = cv::Point(int(ocr_result[n].box[m][0]),
                                 int(ocr_result[n].box[m][1]));
    }
    const cv::Point *ppt[1] = {rook_points};
    int npt[] = {4};
    cv::polylines(img_list[i], ppt, npt, 1, true, CV_RGB(255, 0, 0), 2, 8, 0);
  }

  // Show the annotated image, scaled to the label with the aspect ratio kept
  QImage outimage;
  cvMat2QImage(img_list[i], outimage);
  ui->label_image->setPixmap(QPixmap::fromImage(
      outimage.scaled(ui->label_image->width(), ui->label_image->height(),
                      Qt::KeepAspectRatio)));
}
```

The program directory contains the files below. If you are missing any of them, search for them in the source tree and in the inference library directory.

`ocrconfig.txt` is the custom configuration file read by `MY_OCRConfig`:

```text
# common args
use_gpu false
use_tensorrt false
gpu_id 0
gpu_mem 4000
cpu_threads 10
enable_mkldnn false
precision fp32
benchmark false
output ./output/
image_dir
type ocr

# detection related
det_model_dir F:\Vision\PaddleOCR\2_7\model\ch_PP-OCRv4_det_infer
limit_type max
limit_side_len 960
det_db_thresh 0.3
det_db_box_thresh 0.6
det_db_unclip_ratio 1.5
use_dilation false
det_db_score_mode slow
visualize true

# classification related
use_angle_cls false
cls_model_dir F:\Vision\PaddleOCR\2_7\model\ch_ppocr_mobile_v2.0_cls_infer
cls_thresh 0.9
cls_batch_num 1

# recognition related
rec_model_dir F:\Vision\PaddleOCR\2_7\model\ch_PP-OCRv4_rec_infer
rec_batch_num 6
rec_char_dict_path ./ppocr_keys_v1.txt
rec_img_h 48
rec_img_w 320

# layout model related
layout_model_dir
layout_dict_path ./layout_publaynet_dict.txt
layout_score_threshold 0.5
layout_nms_threshold 0.5

# structure model related
table_model_dir
table_max_len 488
table_batch_num 1
merge_no_span_structure true
table_char_dict_path ./table_structure_dict_ch.txt

# ocr forward related
det true
rec true
cls false
table false
layout false
```

The final run result is as follows.

If you want the source code for testing, here is the source link.
