Deploying an Elasticsearch (ES) cluster on k8s (1.28.2).

1. Install Helm

# Download
wget https://get.helm.sh/helm-v3.13.0-linux-amd64.tar.gz

# Extract
tar xvf helm-v3.13.0-linux-amd64.tar.gz

# Move the binary onto the PATH
sudo mv linux-amd64/helm /usr/local/bin/helm

# Check the version
helm version
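The four steps above can be wrapped in a small function so the version is stated once. This is only a sketch: `helm_tarball_url` and `install_helm` are hypothetical helper names, and the OS/architecture pair is assumed to be linux/amd64 as in the commands above.

```shell
# Hypothetical helper: build the Helm release tarball URL.
# $1 = version (e.g. v3.13.0), $2 = OS, $3 = architecture
helm_tarball_url() {
  printf 'https://get.helm.sh/helm-%s-%s-%s.tar.gz\n' "$1" "$2" "$3"
}

# Hypothetical helper: download, unpack, and install Helm for linux/amd64.
# Needs wget, tar, and sudo; stops at the first failing step.
install_helm() {
  url=$(helm_tarball_url "$1" linux amd64)
  wget "$url" &&
    tar xvf "$(basename "$url")" &&
    sudo mv linux-amd64/helm /usr/local/bin/helm &&
    helm version
}
```

Usage: `install_helm v3.13.0`.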

2. Create a dynamic-provisioning StorageClass (available cluster-wide)

2.1 Build the image and push it to the image registry

# Pull a usable nfs-subdir-external-provisioner:v4.0.2 image
docker pull dyrnq/nfs-subdir-external-provisioner:v4.0.2

# Tag it; 192.168.255.132 is the address of a self-hosted image registry
docker tag dyrnq/nfs-subdir-external-provisioner:v4.0.2 192.168.255.132/library/nfs-subdir-external-provisioner:v4.0.2

# Push
docker push 192.168.255.132/library/nfs-subdir-external-provisioner:v4.0.2

2.2 Install the provisioner; first confirm that an NFS service is available

# First download nfs-subdir-external-provisioner-4.0.2.tgz manually
https://github.com/kubernetes-sigs/nfs-subdir-external-provisioner/releases/download/nfs-subdir-external-provisioner-4.0.2/nfs-subdir-external-provisioner-4.0.2.tgz
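One way to confirm the NFS service before installing is to list the server's exports with `showmount -e` and look for the path you intend to use. The sketch below assumes `showmount` (from the NFS client utilities) is installed; `has_export` is a hypothetical helper that only parses the export list.

```shell
# Hypothetical helper: succeed if the export list on stdin contains path $1.
# The first line of `showmount -e` output is a header, so it is skipped.
has_export() {
  awk -v p="$1" 'NR > 1 && $1 == p { found = 1 } END { exit !found }'
}

# Usage sketch (needs network access to the NFS server):
#   showmount -e 192.168.255.142 | has_export /data/es && echo "export found"
```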

# Run helm install
# nfs-provisioner-es: release name, can be changed
# --namespace es-ns: target namespace (it must already exist, or add --create-namespace)
# nfs.server: NFS server address
# nfs.path: NFS export path
# storageClass.name: name of the StorageClass to create
# image.repository, image.tag: image registry path and image version
helm install nfs-provisioner-es nfs-subdir-external-provisioner-4.0.2.tgz \
  --namespace es-ns \
  --set nfs.server=192.168.255.142 \
  --set nfs.path=/data/es \
  --set storageClass.name=nfs-client-es \
  --set image.repository=192.168.255.132/library/nfs-subdir-external-provisioner \
  --set image.tag=v4.0.2

2.3 Verify

# Run helm list -n es-ns; it should show output like the following
NAME                NAMESPACE  REVISION  UPDATED                                  STATUS    CHART                                  APP VERSION
nfs-provisioner-es  es-ns      1         2023-12-12 19:32:23.296102681 +0800 CST  deployed  nfs-subdir-external-provisioner-4.0.2  4.0.0

# Run kubectl get storageclasses.storage.k8s.io; output like the following means the StorageClass has been created
NAME           PROVISIONER                                                       RECLAIMPOLICY  VOLUMEBINDINGMODE  ALLOWVOLUMEEXPANSION  AGE
nfs-client-es  cluster.local/nfs-provisioner-es-nfs-subdir-external-provisioner  Delete         Immediate          true                  3m2s
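Seeing the StorageClass listed does not yet prove that volumes get provisioned. A throwaway PVC is a cheap extra check. The sketch below is an assumption-laden example: the claim name `test-claim` and the 1Mi size are illustrative, and `render_test_pvc` is a hypothetical helper that only prints the manifest.

```shell
# Hypothetical helper: print a throwaway PVC manifest for StorageClass $1.
# The claim name "test-claim" and the 1Mi request are illustrative choices.
render_test_pvc() {
  cat <<EOF
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: test-claim
  namespace: es-ns
spec:
  accessModes: ["ReadWriteOnce"]
  storageClassName: $1
  resources:
    requests:
      storage: 1Mi
EOF
}

# Usage sketch:
#   render_test_pvc nfs-client-es | kubectl apply -f -
#   kubectl -n es-ns get pvc test-claim    # STATUS should become Bound
#   render_test_pvc nfs-client-es | kubectl delete -f -
```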

3. Deploy ES

3.1 Build an ES image that includes the IK analysis (tokenizer) plugin

wget https://github.com/medcl/elasticsearch-analysis-ik/releases/download/v7.12.1/elasticsearch-analysis-ik-7.12.1.zip

# Extract the plugin into ./ik/
unzip elasticsearch-analysis-ik-7.12.1.zip -d ./ik/

# Dockerfile
# Use the official Elasticsearch base image
FROM elasticsearch:7.12.1
# Copy the IK analysis plugin directory into the image
COPY ik /usr/share/elasticsearch/plugins/ik

# Build the image and push it to the registry
docker build -f Dockerfile -t es-with-ik:v7.12.1 .
docker tag es-with-ik:v7.12.1 192.168.255.132/library/es-with-ik:v7.12.1
docker push 192.168.255.132/library/es-with-ik:v7.12.1
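Before relying on the image, it may be worth checking locally that the plugin actually loads. A rough sketch: start a single-node container and call the `_analyze` API with one of the IK analyzers (`ik_max_word` or `ik_smart`). The container name `es-ik-test` and the helper `ik_analyze_body` are hypothetical, and the helper does no JSON escaping, so it assumes the text contains no double quotes or backslashes.

```shell
# Hypothetical helper: build an _analyze request body.
# $1 = analyzer name, $2 = text (assumed free of double quotes and backslashes)
ik_analyze_body() {
  printf '{"analyzer":"%s","text":"%s"}\n' "$1" "$2"
}

# Usage sketch (needs Docker and a free local port 9200):
#   docker run -d --name es-ik-test -p 9200:9200 \
#     -e discovery.type=single-node es-with-ik:v7.12.1
#   curl -s -X POST 'http://localhost:9200/_analyze?pretty' \
#     -H 'Content-Type: application/json' \
#     -d "$(ik_analyze_body ik_max_word '中华人民共和国')"
#   docker rm -f es-ik-test
```

If the plugin is missing, the request should fail with an error about an unknown analyzer rather than return tokens.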

3.2 Write the YAML files, then apply them

kubectl apply -f es-cluster.yaml

kubectl apply -f es-service.yaml

kubectl apply -f es-ingress.yaml

kubectl apply -f kibana.yaml
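`kubectl apply` returns as soon as the objects are accepted, not when the pods are ready. A possible wrapper, sketched below, applies the four files and then waits with `kubectl rollout status`. The names `run` and `deploy_es`, the `DRY_RUN` switch, and the timeout values are all assumptions for illustration.

```shell
# Hypothetical helper: execute a command, or only print it when DRY_RUN is set.
run() {
  if [ -n "${DRY_RUN:-}" ]; then echo "$*"; else "$@"; fi
}

# Hypothetical wrapper: apply the manifests, then wait for both workloads.
deploy_es() {
  for f in es-cluster.yaml es-service.yaml es-ingress.yaml kibana.yaml; do
    run kubectl apply -f "$f" || return 1
  done
  run kubectl -n es-ns rollout status statefulset/es-cluster --timeout=10m &&
    run kubectl -n es-ns rollout status deployment/kibana --timeout=5m
}
```

Running `DRY_RUN=1 deploy_es` prints the six kubectl commands without touching the cluster.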

3.2.1 es-cluster.yaml

volumeClaimTemplates.spec.storageClassName is the name of the StorageClass created in step 2.

# es-cluster.yaml
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: es-cluster
  namespace: es-ns
spec:
  serviceName: es-cluster-svc
  replicas: 3
  selector:
    matchLabels:
      app: es-net-data
  template:
    metadata:
      labels:
        app: es-net-data
    spec:
      # Init containers
      initContainers:
        - name: increase-vm-max-map
          image: busybox:1.32
          command: ["sysctl", "-w", "vm.max_map_count=262144"]
          securityContext:
            privileged: true
        # Note: ulimit only applies to this init container's own shell process;
        # it does not carry over to es-container
        - name: increase-fd-ulimit
          image: busybox:1.32
          command: ["sh", "-c", "ulimit -n 65536"]
          securityContext:
            privileged: true
      # The containers below start only after the init containers have finished
      containers:
        - name: es-container
          image: 192.168.255.132/library/es-with-ik:v7.12.1
          imagePullPolicy: Always
          ports:
            # Port inside the container
            - name: rest
              containerPort: 9200
              protocol: TCP
          # Limit CPU usage
          resources:
            limits:
              cpu: 1000m
            requests:
              cpu: 100m
          # Mount the data directory
          volumeMounts:
            - name: es-data
              mountPath: /usr/share/elasticsearch/data
          env:
            - name: cluster.name
              value: k8s-es
            - name: node.name
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: cluster.initial_master_nodes
              value: "es-cluster-0,es-cluster-1,es-cluster-2"
            # Deprecated: ES 7.x accepts this setting but ignores it
            - name: discovery.zen.minimum_master_nodes
              value: "2"
            - name: discovery.seed_hosts
              value: "es-cluster-svc"
            # JVM heap size
            - name: ES_JAVA_OPTS
              value: "-Xms500m -Xmx500m"
            - name: network.host
              value: "0.0.0.0"
  volumeClaimTemplates:
    - metadata:
        # Matches volumeMounts.name in the container
        name: es-data
        labels:
          app: es-volume
      spec:
        # The volume can be mounted read-write by a single node
        accessModes: [ "ReadWriteOnce" ]
        # Matches storageClass.name passed to helm install in step 2.2
        storageClassName: nfs-client-es
        resources:
          requests:
            storage: 10Gi
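Each replica gets its own PVC from the volumeClaimTemplates entry, and Kubernetes names it `<template name>-<StatefulSet name>-<ordinal>`. The sketch below prints the names to expect so they can be compared with `kubectl -n es-ns get pvc`; `expected_pvcs` is a hypothetical helper, not part of kubectl.

```shell
# Hypothetical helper: list the PVC names a StatefulSet should create.
# $1 = volumeClaimTemplates name, $2 = StatefulSet name, $3 = replica count
expected_pvcs() {
  i=0
  while [ "$i" -lt "$3" ]; do
    echo "$1-$2-$i"
    i=$((i + 1))
  done
}

# Usage sketch:
#   expected_pvcs es-data es-cluster 3
#   kubectl -n es-ns get pvc    # each listed claim should be Bound
```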

3.2.2 es-service.yaml

# es-service.yaml
apiVersion: v1
kind: Service
metadata:
  name: es-cluster-svc
  namespace: es-ns
spec:
  selector:
    app: es-net-data
  # Headless service: DNS resolves directly to the pod IPs
  clusterIP: None
  type: ClusterIP
  ports:
    - name: rest
      protocol: TCP
      port: 9200
      targetPort: 9200

3.2.3 es-ingress.yaml

# es-ingress.yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: es-cluster-ingress
  namespace: es-ns
spec:
  ingressClassName: nginx
  rules:
    - host: es.demo.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: es-cluster-svc
                port:
                  number: 9200
    - host: kibana.demo.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: kibana-svc
                port:
                  number: 5601

3.2.4 kibana.yaml

# kibana.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: kibana
  namespace: es-ns
spec:
  selector:
    matchLabels:
      app: kibana
  replicas: 1
  template:
    metadata:
      labels:
        app: kibana
    spec:
      restartPolicy: Always
      containers:
        - name: kibana
          image: kibana:7.12.1
          imagePullPolicy: Always
          ports:
            - containerPort: 5601
          env:
            - name: ELASTICSEARCH_HOSTS
              value: http://es-cluster-svc:9200
            # The Kibana Docker image maps upper-case, underscore-separated
            # variables to settings, so i18n.locale is set via I18N_LOCALE
            - name: I18N_LOCALE
              value: zh-CN
          resources:
            requests:
              memory: 1024Mi
              cpu: 50m
            limits:
              memory: 1024Mi
              cpu: 1000m
---
apiVersion: v1
kind: Service
metadata:
  name: kibana-svc
  namespace: es-ns
spec:
  selector:
    app: kibana
  clusterIP: None
  type: ClusterIP
  ports:
    - name: rest
      protocol: TCP
      port: 5601
      targetPort: 5601

3.3 Verify

kubectl get ingress -A
NAMESPACE  NAME                CLASS  HOSTS                        ADDRESS          PORTS    AGE
es-ns      es-cluster-ingress  nginx  es.demo.com,kibana.demo.com  192.168.255.143  80, 443  69d

# Map the hostnames to the ingress address in the Windows hosts file
192.168.255.143 es.demo.com kibana.demo.com

# Both sites can then be opened directly in a browser
http://kibana.demo.com/
http://es.demo.com/
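If you would rather not edit the hosts file, curl's `--resolve` option can pin a hostname to the ingress address for a single request. A minimal sketch, assuming the ingress listens on port 80 at the ADDRESS shown above; `resolve_arg` and `curl_via_ingress` are hypothetical helper names.

```shell
# Hypothetical helper: build the host:port:address value for curl --resolve.
# $1 = hostname, $2 = ingress IP (port 80 is assumed)
resolve_arg() {
  echo "$1:80:$2"
}

# Hypothetical helper: GET http://<host><path> through the ingress IP.
# $1 = hostname, $2 = ingress IP, $3 = path
curl_via_ingress() {
  curl -sS --resolve "$(resolve_arg "$1" "$2")" "http://$1$3"
}

# Usage sketch:
#   curl_via_ingress es.demo.com 192.168.255.143 /
```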

Check the nodes:

http://es.demo.com/_cat/nodes?v
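The same check can be scripted: `_cat/nodes?h=name` returns one node name per line, so counting lines gives the cluster size, which should be 3 for this StatefulSet. A rough sketch with hypothetical helper names `count_nodes` and `es_node_count`:

```shell
# Hypothetical helper: count non-empty lines on stdin (one node name per line).
count_nodes() {
  grep -c .
}

# Hypothetical helper: number of nodes reported by the ES endpoint at base URL $1.
es_node_count() {
  curl -s "$1/_cat/nodes?h=name" | count_nodes
}

# Usage sketch:
#   [ "$(es_node_count http://es.demo.com)" -eq 3 ] && echo "all 3 nodes joined"
```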

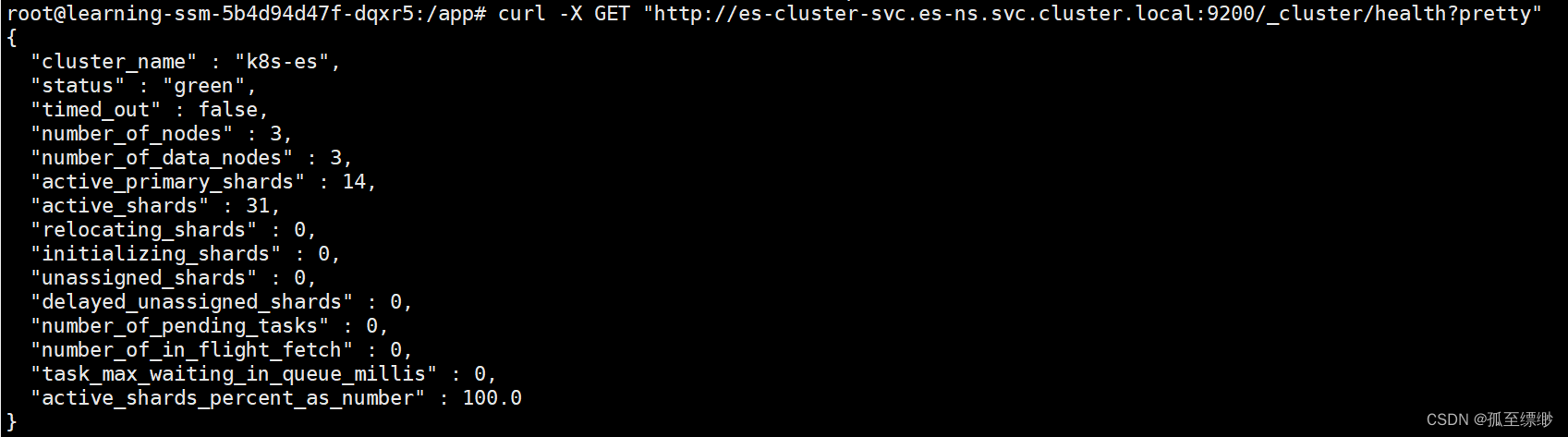

Inside the k8s cluster, ES can be reached through its internal DNS name, which has the form:

{service}.{namespace}.svc.cluster.local:port

For the ES cluster deployed here, the address is es-cluster-svc.es-ns.svc.cluster.local:9200
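As a small illustration of the naming rule, the sketch below builds the service name and, because the service is headless and set as the StatefulSet's serviceName, the per-pod names as well. It assumes the default cluster domain `cluster.local`; `svc_fqdn` and `pod_fqdn` are hypothetical helpers.

```shell
# Hypothetical helper: internal DNS name of a service.
# $1 = service, $2 = namespace (cluster domain assumed to be cluster.local)
svc_fqdn() {
  echo "$1.$2.svc.cluster.local"
}

# Hypothetical helper: DNS name of one StatefulSet pod behind a headless service.
# $1 = pod name, $2 = service, $3 = namespace
pod_fqdn() {
  echo "$1.$(svc_fqdn "$2" "$3")"
}

# Usage sketch:
#   svc_fqdn es-cluster-svc es-ns
#   pod_fqdn es-cluster-0 es-cluster-svc es-ns
```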

# From a browser (the ingress above defines no TLS, so plain HTTP is used)
http://es.demo.com/_cluster/health?pretty

# From any pod inside the k8s cluster
curl -X GET "http://es-cluster-svc.es-ns.svc.cluster.local:9200/_cluster/health?pretty"
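For scripted checks, the `status` field of the health response (green, yellow, or red) is usually what matters. The sketch below extracts it with sed and polls until the cluster is green. The helper names, the 5-second interval, and the attempt count are assumptions; a JSON tool such as jq would be more robust if it is available.

```shell
# Hypothetical helper: extract the "status" value from _cluster/health JSON on stdin.
health_status() {
  sed -n 's/.*"status"[[:space:]]*:[[:space:]]*"\([a-z]*\)".*/\1/p' | head -n 1
}

# Hypothetical helper: poll base URL $1 until status is green, at most $2 attempts.
wait_for_green() {
  n=0
  while [ "$n" -lt "$2" ]; do
    [ "$(curl -s "$1/_cluster/health" | health_status)" = "green" ] && return 0
    n=$((n + 1))
    sleep 5
  done
  return 1
}

# Usage sketch:
#   wait_for_green http://es-cluster-svc.es-ns.svc.cluster.local:9200 24
```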
