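Download link: http://archive.apache.org/dist/sqoop/1.4.6/

After downloading, upload the archive to the master node of the cluster.

Prerequisites: a JDK 1.8 environment and a Hadoop 2.7 environment.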

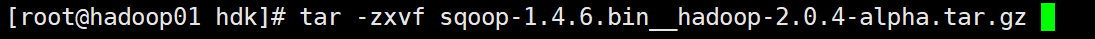

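Step 1: Extract and rename.

Extract: tar -zxvf sqoop-1.4.6.bin__hadoop-2.0.4-alpha.tar.gz

Rename: mv sqoop-1.4.6.bin__hadoop-2.0.4-alpha sqoop

Step 2: Edit the configuration file

Go to the conf directory under the Sqoop installation directory:

Rename the configuration file: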
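The original command for this step is not shown. As a minimal sketch, assuming the conf directory still contains the sqoop-env-template.sh file that ships with Sqoop 1.4.6, the rename could look like the following. The function name prepare_sqoop_env and the path /usr/hdk/sqoop/conf are illustrative assumptions, not taken from the original post.

```shell
# Hypothetical helper: create sqoop-env.sh from the bundled template.
# $1 = path to the Sqoop conf directory (assumed, e.g. /usr/hdk/sqoop/conf).
prepare_sqoop_env() {
    conf_dir="$1"
    if [ ! -f "$conf_dir/sqoop-env-template.sh" ]; then
        echo "sqoop-env-template.sh not found in $conf_dir" >&2
        return 1
    fi
    # Copy instead of move so the untouched template remains as a reference.
    cp "$conf_dir/sqoop-env-template.sh" "$conf_dir/sqoop-env.sh"
}

# Usage (path is an assumption):
#   prepare_sqoop_env /usr/hdk/sqoop/conf
```

Copying rather than renaming is a design choice here: it keeps the template around in case the edited file needs to be reset.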

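Edit the configuration file:

The Hadoop and Hadoop MapReduce environment variables (HADOOP_COMMON_HOME and HADOOP_MAPRED_HOME) are required. For ZooKeeper, you can point to the ZooKeeper we installed ourselves. Hive and HBase only need to be configured when you actually use them.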

# Licensed to the Apache Software Foundation (ASF) under one or more

# contributor license agreements. See the NOTICE file distributed with

# this work for additional information regarding copyright ownership.

# The ASF licenses this file to You under the Apache License, Version 2.0

# (the "License"); you may not use this file except in compliance with

# the License. You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

# included in all the hadoop scripts with source command

# should not be executable directly

# also should not be passed any arguments, since we need original $*

# Set Hadoop-specific environment variables here.

#Set path to where bin/hadoop is available

#Hadoop environment setting (required)

export HADOOP_COMMON_HOME=/usr/hdk/hadoop

#Set path to where hadoop-*-core.jar is available

#Hadoop MapReduce directory setting (required)

export HADOOP_MAPRED_HOME=/usr/hdk/hadoop/tmp/mapred

#set the path to where bin/hbase is available

export HBASE_HOME=/usr/hdk/hbase

#Set the path to where bin/hive is available

export HIVE_HOME=/usr/hdk/hive

#Set the path for where zookeeper config dir is

export ZOOKEEPER_HOME=/usr/hdk/zookeeper

export ZOOCFGDIR=/usr/hdk/zookeeper
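Since the two Hadoop variables are mandatory, it can help to confirm that they point at real directories before running Sqoop. The following is a rough sketch only: the function name check_sqoop_env is hypothetical, and it assumes sqoop-env.sh has already been sourced into the current shell.

```shell
# Hypothetical check: report whether the required variables from
# sqoop-env.sh are set and refer to existing directories.
check_sqoop_env() {
    status=0
    for name in HADOOP_COMMON_HOME HADOOP_MAPRED_HOME; do
        # Indirectly read the variable whose name is stored in $name.
        eval "value=\${$name:-}"
        if [ -z "$value" ] || [ ! -d "$value" ]; then
            echo "MISSING: $name='$value'"
            status=1
        else
            echo "OK: $name=$value"
        fi
    done
    return $status
}

# Usage (path is an assumption):
#   . /usr/hdk/sqoop/conf/sqoop-env.sh && check_sqoop_env
```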

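Add the JDBC driver

Whichever database you plan to extract data from, put that database's JDBC driver JAR into the lib/ directory under the Sqoop installation path.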
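The driver step can be sketched as a small shell function. This is an illustration under assumptions: the function name install_jdbc_driver is hypothetical, and the JAR path and Sqoop home in the usage line are placeholders to replace with your own.

```shell
# Hypothetical helper: copy a JDBC driver JAR into Sqoop's lib directory.
# $1 = path to the driver JAR, $2 = Sqoop installation directory.
install_jdbc_driver() {
    driver_jar="$1"
    sqoop_home="$2"
    if [ ! -f "$driver_jar" ]; then
        echo "driver JAR not found: $driver_jar" >&2
        return 1
    fi
    if [ ! -d "$sqoop_home/lib" ]; then
        echo "no lib directory under: $sqoop_home" >&2
        return 1
    fi
    cp "$driver_jar" "$sqoop_home/lib/"
}

# Usage (both paths are placeholders):
#   install_jdbc_driver /path/to/mysql-connector-java.jar /usr/hdk/sqoop
```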

Then go to the bin directory and run the sqoop help command. It prints some warnings (which can be ignored) followed by the help guide.

Available commands:

codegen Generate code to interact with database records

create-hive-table Import a table definition into Hive

eval Evaluate a SQL statement and display the results

export Export an HDFS directory to a database table

help List available commands

import Import a table from a database to HDFS

import-all-tables Import tables from a database to HDFS

import-mainframe Import datasets from a mainframe server to HDFS

job Work with saved jobs

list-databases List available databases on a server

list-tables List available tables in a database

merge Merge results of incremental imports

metastore Run a standalone Sqoop metastore

version Display version information

This output means the configuration succeeded.

Then test whether Sqoop can connect to the database

sqoop list-databases --connect jdbc:mysql://<ip-address>:3306/ --username <username> --password <password>
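Passing the password on the command line leaves it in the shell history. Sqoop also accepts -P, which prompts for the password on the console instead. The sketch below builds the connection URL and shows that variant in a comment. The function name mysql_jdbc_url, the host 192.0.2.10, and the user dbuser are placeholders, not values from the original post.

```shell
# Hypothetical helper: build a MySQL JDBC URL from a host and an optional port.
# $1 = host or IP address, $2 = port (defaults to 3306).
mysql_jdbc_url() {
    host="$1"
    port="${2:-3306}"
    printf 'jdbc:mysql://%s:%s/\n' "$host" "$port"
}

# Example invocation (not executed here; host and user are placeholders):
#   sqoop list-databases --connect "$(mysql_jdbc_url 192.0.2.10)" --username dbuser -P
```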
