DownloaderImage

Project repository: https://github.com/404SpiderMan/DownloadImage (a star would be appreciated)

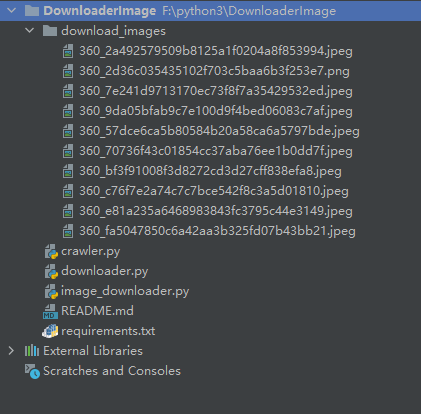

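1. Introduction

Given a set of keywords and the number of images wanted, the tool searches common image search engines, records the URL of each image, and saves the images to a specified directory.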

- Baidu Images: https://image.baidu.com/

- 360 Image Search: https://image.so.com/

- Microsoft Bing: https://cn.bing.com/images/trending?FORM=ILPTRD

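2. Features

- Supported search engines: 360, Bing, Baidu

- Configurable number of download threads and a proxy option (the proxy option is parsed but not yet applied: `image_downloader.py` hard-codes `use_proxy = False`)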

3. Installation

3.1 Install the required Python libraries

```shell
pip3 install -r requirements.txt
```

4. Usage

4.1 Command line

```
usage: image_downloader.py [-h] [--engine {baidu,bing,360}]
                           [--max-number MAX_NUMBER]
                           [--num-threads NUM_THREADS] [--timeout TIMEOUT]
                           [--output OUTPUT] [--user-proxy USER_PROXY]
                           keywords
```

Help output (`-h`):

```
usage: image_downloader.py [-h] [--engine {baidu,bing,360}] [--max-number MAX_NUMBER] [--num-threads NUM_THREADS] [--timeout TIMEOUT] [--output OUTPUT] [--user-proxy USER_PROXY]
                           keywords

Image Downloader

positional arguments:
  keywords              Search keywords

optional arguments:
  -h, --help            show this help message and exit
  --engine {baidu,bing,360}, -e {baidu,bing,360}
                        Search engine to crawl.
  --max-number MAX_NUMBER, -n MAX_NUMBER
                        Number of images to download
  --num-threads NUM_THREADS, -j NUM_THREADS
                        Number of download threads
  --timeout TIMEOUT, -t TIMEOUT
                        Download timeout (seconds)
  --output OUTPUT, -o OUTPUT
                        Output folder
  --user-proxy USER_PROXY, -p USER_PROXY
                        Whether to use a proxy (off by default)
```
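To see how the short flags and defaults above turn into parsed values, the parser can be rebuilt on its own and fed an argument list directly. This sketch copies the `add_argument` calls from `image_downloader.py` (help text omitted; `build_parser` is a hypothetical name) and never touches the crawler or downloader.

```python
import argparse


def build_parser():
    # Same arguments and defaults as image_downloader.py, help text omitted
    parser = argparse.ArgumentParser(description="Image Downloader")
    parser.add_argument("keywords", type=str)
    parser.add_argument("--engine", "-e", type=str, default="baidu",
                        choices=["baidu", "bing", "360"])
    parser.add_argument("--max-number", "-n", type=int, default=100)
    parser.add_argument("--num-threads", "-j", type=int, default=50)
    parser.add_argument("--timeout", "-t", type=int, default=20)
    parser.add_argument("--output", "-o", type=str, default="./download_images")
    parser.add_argument("--user-proxy", "-p", type=str, default=False)
    return parser


args = build_parser().parse_args(["中国地图", "-e", "360", "-n", "10"])
# Dashes in long option names become underscores on the namespace
print(args.engine, args.max_number, args.num_threads, args.timeout, args.output)
# prints: 360 10 50 20 ./download_images
```

Because `--engine` declares `choices`, a value outside `{baidu,bing,360}` makes argparse print an error and exit before any crawling starts.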

Example run (the keyword 中国地图 means "map of China"):

```
$ python image_downloader.py "中国地图" -e "360" -n 10
Target site: 360
Keyword: 中国地图
Number to crawl: 10
Search results on target site: 1500
Target: 10  Crawled: 10
##Downloaded: 360_a674c5fccbd187575dbe3c46698d841a.jpeg
##Downloaded: 360_20760e3529df9287b80066168f35eae5.png
##Downloaded: 360_cb28e97178694f3f45788eec0894bf55.jpg
##Downloaded: 360_82dcd18912bbd65572d464e3e990eb8b.png
##Downloaded: 360_dbfb153accb1e125ea40fcd5585e3e5e.jpeg
##Downloaded: 360_7f95f7893557d445c668f448b159d9e0.jpg
##Downloaded: 360_968b76513aa6a56866bc5a68c873f5cd.png
##Downloaded: 360_aabb350a736c8418f8f581a660f9fb74.png
##Downloaded: 360_06ffc47d52f056cb2848315c2e6cb2ef.jpeg
##Downloaded: 360_a99a12dd76843c48264f215c50b099d0.jpeg
Finished.
```
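The saved file names follow the scheme in `downloader.py`: the engine name as prefix, the MD5 hex digest of the downloaded bytes, and an extension taken from the detected image type, falling back to `jpg`. A minimal sketch of just that naming step (the helper `build_file_name` is hypothetical, not part of the project):

```python
import hashlib
from typing import Optional


def build_file_name(prefix: str, content: bytes, file_type: Optional[str]) -> str:
    # MD5 of the image bytes, as get_img_name() computes it
    digest = hashlib.md5(content).hexdigest()
    # Keep only the extensions downloader.py accepts; anything else becomes .jpg
    ext = file_type if file_type in ("jpg", "jpeg", "png", "bmp") else "jpg"
    return "{}_{}.{}".format(prefix, digest, ext)


print(build_file_name("360", b"abc", "png"))  # 360_900150983cd24fb0d6963f7d28e17f72.png
print(build_file_name("360", b"abc", "gif"))  # 360_900150983cd24fb0d6963f7d28e17f72.jpg
```

Since the name depends only on the content (plus prefix and detected type), the same image returned twice by a search engine is written to the same path and stored once.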

`downloader.py`: downloads the images

```python
# -*- coding: utf-8 -*-
""" Download images from the given URLs and name each file by the MD5 hash of its content. """
# author: Yabin Zheng
# Email: sczhengyabin@hotmail.com

from __future__ import print_function

import concurrent.futures
import hashlib
import imghdr  # deprecated since Python 3.11, removed in Python 3.13
import os
import shutil

import requests

headers = {
    "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,*/*;q=0.8",
    "Proxy-Connection": "keep-alive",
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) "
                  "AppleWebKit/537.36 (KHTML, like Gecko) Chrome/54.0.2840.99 Safari/537.36",
    "Accept-Encoding": "gzip, deflate, sdch",
}


def download_image(image_url, dst_dir, file_prefix, timeout=20, use_proxy=False):
    # Proxy support is disabled: use_proxy is accepted but not used yet
    proxies = None
    # if proxy_type:
    #     proxies = {
    #         "http": proxy_type + "://" + proxy,
    #         "https": proxy_type + "://" + proxy
    #     }
    response = None
    try_times = 0
    while True:
        try:
            try_times += 1
            response = requests.get(
                image_url, headers=headers, timeout=timeout, proxies=proxies)
            # Treat 4xx/5xx as failures instead of saving the error page as an image
            response.raise_for_status()
            # Name the file after the MD5 hash of the downloaded bytes
            image_name = get_img_name(response.content)
            file_name = file_prefix + "_" + image_name
            file_path = os.path.join(dst_dir, file_name)
            with open(file_path, 'wb') as f:
                f.write(response.content)
            response.close()
            # Detect the actual image type and append the matching extension
            file_type = imghdr.what(file_path)
            if file_type in ["jpg", "jpeg", "png", "bmp"]:
                new_file_name = "{}.{}".format(file_name, file_type)
            else:
                new_file_name = "{}.{}".format(file_name, 'jpg')
            new_file_path = os.path.join(dst_dir, new_file_name)
            shutil.move(file_path, new_file_path)
            print("##Downloaded: {} ".format(new_file_name))
            break
        except Exception as e:
            # Up to 3 attempts in total
            if try_times < 3:
                continue
            # A Response with an error status is falsy, so compare with None
            if response is not None:
                response.close()
            print("##Download failed: {} {}".format(e.args, image_url))
            break


def download_images(image_urls, folder_dir='./download_images', file_prefix="img",
                    max_workers=50, timeout=20, use_proxy=False):
    """
    Download images concurrently.
    :param image_urls: list of image URLs
    :param folder_dir: output folder
    :param file_prefix: file-name prefix (the source search engine)
    :param max_workers: maximum number of concurrent threads
    :param timeout: download timeout in seconds
    :param use_proxy: whether to use a proxy
    :return: None
    """
    if not os.path.exists(folder_dir):
        os.makedirs(folder_dir)
    with concurrent.futures.ThreadPoolExecutor(max_workers=max_workers) as executor:
        future_list = [
            executor.submit(download_image, image_url, folder_dir,
                            file_prefix, timeout, use_proxy)
            for image_url in image_urls
        ]
        # Wait up to 180 s; leaving the with-block still waits for every task
        concurrent.futures.wait(future_list, timeout=180)


def get_img_name(content):
    """
    Build the image name from its content.
    :param content: raw image bytes (response.content)
    :return: MD5 hex digest of the bytes
    """
    md5 = hashlib.md5()
    md5.update(content)
    return md5.hexdigest()
```
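`imghdr` was deprecated in Python 3.11 and removed in 3.13, so on 3.13+ `import imghdr` fails and `downloader.py` cannot be imported. One possible replacement is to check the leading bytes for well-known file signatures. The helper below (`sniff_image_type` is a hypothetical name) covers the formats the downloader keeps plus GIF and WebP, and returns names in the same style as `imghdr.what` (`'jpeg'`, `'png'`, ...). It is a simplified check, not a byte-for-byte port of `imghdr`.

```python
from typing import Optional


def sniff_image_type(header: bytes) -> Optional[str]:
    """Guess the image type from its first bytes, using imghdr-style names."""
    if header.startswith(b"\xff\xd8\xff"):
        return "jpeg"
    if header.startswith(b"\x89PNG\r\n\x1a\n"):
        return "png"
    if header.startswith((b"GIF87a", b"GIF89a")):
        return "gif"
    if header.startswith(b"BM"):
        return "bmp"
    # WebP: RIFF container whose form type (bytes 8-11) is "WEBP"
    if header[:4] == b"RIFF" and header[8:12] == b"WEBP":
        return "webp"
    return None


def sniff_file(path: str) -> Optional[str]:
    # Stand-in for imghdr.what(path): 12 bytes cover every signature above
    with open(path, "rb") as f:
        return sniff_image_type(f.read(12))
```

Inside `download_image`, calling `sniff_image_type(response.content[:12])` would also avoid reopening the file that was just written.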

`image_downloader.py`: entry point that parses the arguments and dispatches crawling and downloading

```python
from __future__ import print_function

import argparse
import sys

import crawler  # crawler.py from the project (not shown in this post)
import downloader


def main(argv):
    parser = argparse.ArgumentParser(description="Image Downloader")
    parser.add_argument("keywords", type=str,
                        help="Search keywords")
    parser.add_argument("--engine", "-e", type=str, default="baidu",
                        help="Search engine to crawl.", choices=["baidu", "bing", "360"])
    parser.add_argument("--max-number", "-n", type=int, default=100,
                        help="Number of images to download")
    parser.add_argument("--num-threads", "-j", type=int, default=50,
                        help="Number of download threads")
    parser.add_argument("--timeout", "-t", type=int, default=20,
                        help="Download timeout (seconds)")
    parser.add_argument("--output", "-o", type=str, default="./download_images",
                        help="Output folder")
    parser.add_argument("--user-proxy", "-p", type=str, default=False,
                        help="Whether to use a proxy (off by default)")
    args = parser.parse_args(args=argv)

    # No proxy by default: the --user-proxy value is parsed but currently ignored
    use_proxy = False

    crawled_urls = crawler.crawl_image_urls(args.keywords,
                                            engine=args.engine, max_number=args.max_number,
                                            use_proxy=use_proxy)
    downloader.download_images(image_urls=crawled_urls, folder_dir=args.output,
                               max_workers=args.num_threads, timeout=args.timeout,
                               use_proxy=use_proxy, file_prefix=args.engine)
    print("Finished.")


if __name__ == '__main__':
    main(sys.argv[1:])
```
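As written, `main` hard-codes `use_proxy = False` and `download_image` leaves `proxies = None`, so `--user-proxy` has no effect. `requests.get` accepts a `proxies` mapping from URL scheme to proxy URL, so one way to wire the flag up is to turn its value into that mapping. A minimal sketch, assuming the flag carries an address such as `127.0.0.1:8080` or `http://127.0.0.1:8080`; the helper `build_proxies` and the default `http` scheme are assumptions, not part of the project:

```python
from typing import Dict, Optional


def build_proxies(user_proxy: Optional[str],
                  proxy_type: str = "http") -> Optional[Dict[str, str]]:
    """Turn a --user-proxy value into the mapping requests expects for proxies=."""
    if not user_proxy:
        # None, "" or the parser default False: no proxy
        return None
    # Like the commented-out code: prepend "<proxy_type>://" when no scheme is given
    proxy = user_proxy if "://" in user_proxy else proxy_type + "://" + user_proxy
    # Send both plain-HTTP and HTTPS requests through the same proxy
    return {"http": proxy, "https": proxy}
```

`main` could then pass `build_proxies(args.user_proxy)` down to `download_image` in place of the `use_proxy` flag, which would forward it as `requests.get(..., proxies=proxies)`.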

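Open source takes effort, so a star would mean a lot!

console.log("WeChat official account: 虫术")

console.log("WeChat ID: spiderskill")

Follow along to discuss crawler reverse engineering and tips for getting through the daily grind!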
