Kafka ACL in Practice with Java: Dynamically Creating SCRAM Users

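Previous post: [Kafka Security Authentication and Authorization Configuration]

The previous post covered how to configure Kafka authentication and authorization. This post builds on that cluster setup and works through the Java side.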

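1. Managing SCRAM users dynamically

1.1 Calling remote scripts

We started using Kafka for our business processing last year. The latest Kafka release at the time was 2.5.1, and there was no official API yet for managing SCRAM users. So I wrote two scripts on the server to create and delete Kafka users, and invoked them from Java code to add and remove SCRAM users dynamically.

Script for creating a user: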

#! /bin/bash

# Takes two arguments: the username and the password

/mnt/datadisk/kafka/bin/kafka-configs.sh --zookeeper 192.168.13.202:2181 --alter --add-config "SCRAM-SHA-256=[password=$2],SCRAM-SHA-512=[password=$2]" --entity-type users --entity-name "$1"

Script for deleting a user:

#! /bin/bash

# Takes one argument: the username

/mnt/datadisk/kafka/bin/kafka-configs.sh --zookeeper 192.168.13.202:2181 --alter --delete-config 'SCRAM-SHA-512' --delete-config 'SCRAM-SHA-256' --entity-type users --entity-name $1

The Java code passes the arguments and invokes these two scripts remotely to manage the users:

import ch.ethz.ssh2.Connection;

import ch.ethz.ssh2.Session;

import ch.ethz.ssh2.StreamGobbler;

import org.apache.zookeeper.WatchedEvent;

import org.apache.zookeeper.Watcher;

import org.apache.zookeeper.ZooKeeper;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import java.io.*;

import java.nio.charset.Charset;

import java.util.ArrayList;

import java.util.List;

public class KafkaSaslUserUtil {

private final Logger logger = LoggerFactory.getLogger(KafkaSaslUserUtil.class);

static {

// Disable SASL on the ZooKeeper client to skip that authentication step

System.setProperty("zookeeper.sasl.client", "false");

}

private Connection conn;

private final String ip; // Kafka server IP

private final int port; // SSH port exposed by the server

private final String username; // server account

private final String password; // server password

private String charset = Charset.defaultCharset().toString();

public KafkaSaslUserUtil(String ip, int port, String username, String password, String charset) {

this.ip = ip;

this.port = port;

this.username = username;

this.password = password;

if (charset != null) {

this.charset = charset;

}

}

/**

* Logs in to the remote Linux server with a username and password.

*

* @return true if authentication succeeded

*/

private boolean login() {

boolean flag = false;

try {

conn = new Connection(ip, port);

conn.connect();

flag = conn.authenticateWithPassword(username, password);

if (flag) {

logger.info("SSH password authentication to server {} succeeded", ip);

} else {

logger.warn("SSH password authentication to server {} failed", ip);

conn.close();

}

} catch (IOException e) {

logger.warn("Error while logging in to server {}: {}", ip, e.getMessage());

}

return flag;

}

/**

* Reads the output returned by the executed script.

*

* @param in input stream of the remote command

* @param charset character encoding

* @return the output as plain text

*/

private String processStdout(InputStream in, String charset) {

InputStream stdout = new StreamGobbler(in);

StringBuilder buffer = new StringBuilder();

try {

BufferedReader br = new BufferedReader(new InputStreamReader(stdout, charset));

String line = null;

while ((line = br.readLine()) != null) {

buffer.append(line).append("\n");

}

} catch (IOException e) {

e.printStackTrace();

}

return buffer.toString();

}

/**

* Runs a shell script or command on the remote server.

*

* @param cmds the command to execute

* @return the command output; an empty string (never null) if execution fails

*/

private String exec(String cmds) {

String result = "";

try {

if (this.login()) {

Session session = conn.openSession();

session.execCommand(cmds);

result = this.processStdout(session.getStdout(), this.charset);

session.close();

conn.close();

}

} catch (IOException e1) {

e1.printStackTrace();

}

return result;

}

/**

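* Creates a Kafka SCRAM user.

*

* @param path path of the script on the server

* @param username Kafka SCRAM username

* @param password user password

* @param connectString ZooKeeper connection string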

*/

public boolean createUser(String path, String username, String password, String connectString) {

boolean flag = false;

try {

// Build the command line

String shell = path + " " + username + " " + password;

// Run the remote script

String result = exec(shell);

logger.info("shell result:{}", result);

// Check in ZooKeeper that the user node now exists

if(getZkChildren(connectString).contains(username)) {

flag = true;

}

} catch (Exception e) {

logger.warn("Failed to run the create-user script: {}", e.getMessage());

}

return flag;

}

/**

* Deletes a Kafka SCRAM user.

*

* @param path path of the script on the server

* @param username username

* @param connectString ZooKeeper connection string

*/

public boolean deleteUser(String path, String username, String connectString) {

boolean flag = false;

try {

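// Build the command line

String shell = "sh " + path + " " + username;

// Run the remote script to delete the user's credentials

String result = exec(shell);

logger.info("shell result : {}", result);

// The user node still exists in ZooKeeper after the script runs, so delete it as well

ZooKeeper zookeeper = new ZooKeeper(connectString, 300000, new Watcher() {

@Override

public void process(WatchedEvent event) {

logger.info("Deleting user node, received ZooKeeper event: {}", event.getState());

}

});

try {

zookeeper.delete("/config/users/" + username, -1);

flag = true;

} finally {

// Always release the ZooKeeper session, even if the delete fails

zookeeper.close();

}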

} catch (Exception e) {

logger.warn("Failed to delete user: {}", e.getMessage());

}

return flag;

}

/**

* Lists the user nodes stored under /config/users in ZooKeeper.

*

* @param connectString ZooKeeper connection string

*/

public List<String> getZkChildren(String connectString) {

List<String> list = new ArrayList<>();

ZooKeeper zookeeper = null;

try {

zookeeper = new ZooKeeper(connectString, 300000, new Watcher() {

@Override

public void process(WatchedEvent event) {

logger.info("Listing users, ZooKeeper connection event: {}", event.getState());

}

});

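// A one-off listing does not need a watch

list = zookeeper.getChildren("/config/users", false);

} catch (Exception e) {

logger.error("Failed to list ZooKeeper child nodes: {}", e.getMessage());

} finally {

// Release the ZooKeeper session once the listing is done

if (zookeeper != null) {

try {

zookeeper.close();

} catch (InterruptedException ie) {

Thread.currentThread().interrupt();

}

}

}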

return list;

}

}
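One thing to watch in createUser and deleteUser: the username and password are concatenated straight into the command line that runs on the server, so a value containing spaces, quotes or `;` would be interpreted by the remote shell. Below is a minimal sketch of POSIX single-quote escaping that could be applied before the command is built. ShellQuote, quote and command are hypothetical names introduced for illustration; they are not part of ganymed-ssh2 or Kafka.

```java
import java.util.Arrays;
import java.util.stream.Collectors;

// Hypothetical helper (not part of ganymed-ssh2 or Kafka): quotes values for a POSIX shell.
final class ShellQuote {

    private ShellQuote() {
    }

    // Wraps the value in single quotes; an embedded ' becomes '\'' (close quote, escaped quote, reopen).
    static String quote(String value) {
        return "'" + value.replace("'", "'\\''") + "'";
    }

    // Joins the script path and its arguments into one command line, quoting every part.
    static String command(String scriptPath, String... args) {
        String quotedArgs = Arrays.stream(args)
                .map(ShellQuote::quote)
                .collect(Collectors.joining(" "));
        return quotedArgs.isEmpty() ? quote(scriptPath) : quote(scriptPath) + " " + quotedArgs;
    }
}
```

Under these assumptions the create call could become exec(ShellQuote.command(path, username, password)), and the password would reach the script as a single argument whatever characters it contains.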

1.2 Using the official API directly

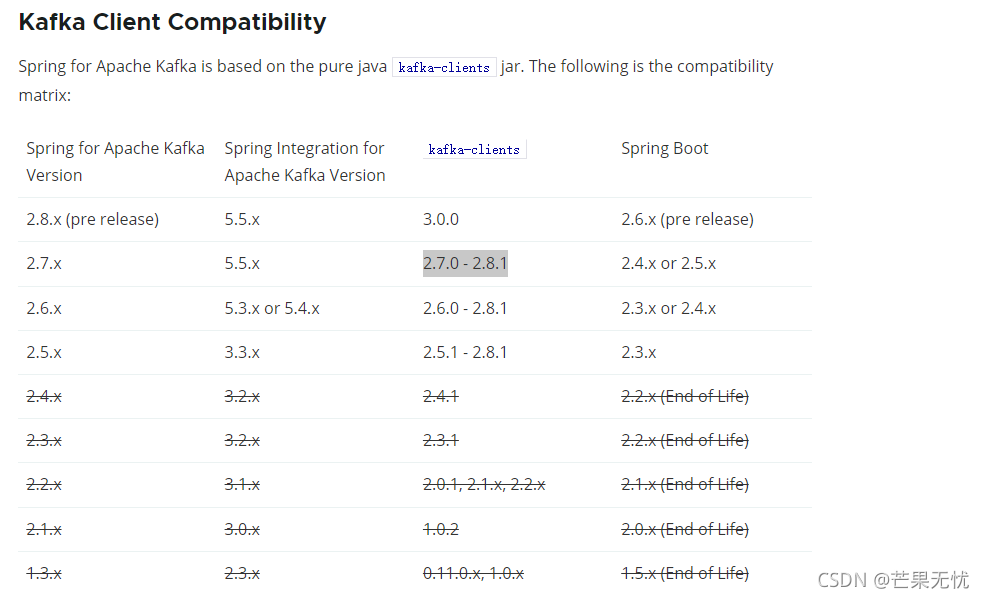

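Recently I was browsing the Kafka website and saw that it had reached 3.0.0, with the feature set getting more and more complete. I then noticed that SCRAM users can be managed through the Admin API since 2.7.0, so I downloaded it and tried it out.

Getting started involved plenty of version mismatches between Spring Boot, the Kafka broker and kafka-clients. Below are the versions I ended up using; the version requirements can also be checked on the Spring website.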

<!-- Spring Boot version -->

<parent>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-parent</artifactId>

<version>2.3.5.RELEASE</version>

<relativePath/>

</parent>

<dependencies>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-web</artifactId>

</dependency>

<dependency>

<groupId>org.apache.kafka</groupId>

<artifactId>kafka-clients</artifactId>

<version>2.7.0</version>

</dependency>

<!-- Remote script invocation over SSH -->

<dependency>

<groupId>org.jvnet.hudson</groupId>

<artifactId>ganymed-ssh2</artifactId>

<version>build210-hudson-1</version>

</dependency>

<!-- zookeeper -->

<dependency>

<groupId>org.apache.zookeeper</groupId>

<artifactId>zookeeper</artifactId>

<version>3.5.8</version>

</dependency>

</dependencies>

import org.apache.kafka.clients.admin.*;

import org.apache.kafka.common.KafkaFuture;

import org.apache.kafka.common.config.SaslConfigs;

import java.util.*;

public class KafkaCreateTest {

private final AdminClient adminClient;

/**

* Creates an AdminClient that authenticates with SASL/SCRAM.

*/

public KafkaCreateTest(String bootstrapServers) {

Map<String, Object> configs = new HashMap<>();

configs.put(AdminClientConfig.BOOTSTRAP_SERVERS_CONFIG, bootstrapServers);

configs.put(AdminClientConfig.SECURITY_PROTOCOL_CONFIG, "SASL_PLAINTEXT");

configs.put(SaslConfigs.SASL_MECHANISM, "SCRAM-SHA-256");

configs.put(SaslConfigs.SASL_JAAS_CONFIG, "org.apache.kafka.common.security.scram.ScramLoginModule required username=\"kafka_server_admin\" password=\"admin\";");

adminClient = AdminClient.create(configs);

}

public boolean createScramUser(String username, String password) {

boolean res = false;

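// Choose the SCRAM mechanism and the iteration count. iterations is the number of hashing rounds used to derive the salted password; Kafka rejects values below the mechanism's minimum (4096 for SCRAM-SHA-256), which is why a small value fails

ScramCredentialInfo scramCredentialInfo = new ScramCredentialInfo(ScramMechanism.SCRAM_SHA_256, 10000);

// Upsert the SCRAM credential; if the user does not exist yet, it is created first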

UserScramCredentialAlteration userScramCredentialUpsertion = new UserScramCredentialUpsertion(username, scramCredentialInfo, password);

AlterUserScramCredentialsResult alterUserScramCredentialsResult = adminClient.alterUserScramCredentials(Collections.singletonList(userScramCredentialUpsertion));

for (Map.Entry<String, KafkaFuture<Void>> e : alterUserScramCredentialsResult.values().entrySet()) {

KafkaFuture<Void> future = e.getValue();

try {

future.get();

} catch (Exception exc) {

System.err.println("Error: " + exc.getMessage());

}

res = !future.isCompletedExceptionally();

}

return res;

}

public boolean deleteScramUser(String username) {

boolean res = false;

// Delete the SCRAM credential. Afterwards the user can no longer operate on Kafka, but its node still exists in ZooKeeper

UserScramCredentialAlteration userScramCredentialDeletion = new UserScramCredentialDeletion(username, ScramMechanism.SCRAM_SHA_256);

AlterUserScramCredentialsResult alterUserScramCredentialsResult = adminClient.alterUserScramCredentials(Collections.singletonList(userScramCredentialDeletion));

for (Map.Entry<String, KafkaFuture<Void>> e : alterUserScramCredentialsResult.values().entrySet()) {

KafkaFuture<Void> future = e.getValue();

try {

future.get();

} catch (Exception exc) {

System.err.println("Error: " + exc.getMessage());

}

res = !future.isCompletedExceptionally();

}

return res;

}

}
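On the iterations argument of ScramCredentialInfo: in SCRAM (RFC 5802) the stored credential is derived from the password with a salted, iterated HMAC, which is PBKDF2 using the mechanism's hash function, and iterations is the number of rounds. The sketch below reproduces only that derivation step with the JDK, to show what the parameter controls. It is not Kafka's own code, and ScramSaltedPassword and derive are hypothetical names.

```java
import java.nio.charset.StandardCharsets;
import java.security.GeneralSecurityException;
import javax.crypto.SecretKeyFactory;
import javax.crypto.spec.PBEKeySpec;

// Illustrative only: derives a SCRAM-SHA-256 style salted password with the JDK.
final class ScramSaltedPassword {

    private ScramSaltedPassword() {
    }

    // SaltedPassword = PBKDF2-HMAC-SHA256(password, salt, iterations), 32 bytes of output.
    static byte[] derive(String password, byte[] salt, int iterations) {
        PBEKeySpec spec = new PBEKeySpec(password.toCharArray(), salt, iterations, 256);
        try {
            return SecretKeyFactory.getInstance("PBKDF2WithHmacSHA256")
                    .generateSecret(spec)
                    .getEncoded();
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException("PBKDF2 derivation failed", e);
        } finally {
            // Wipe the copy of the password held by the key spec
            spec.clearPassword();
        }
    }

    public static void main(String[] args) {
        byte[] salt = "example-salt".getBytes(StandardCharsets.UTF_8);
        long start = System.nanoTime();
        byte[] key = derive("secret", salt, 10000);
        long micros = (System.nanoTime() - start) / 1000;
        System.out.println("derived " + key.length + " bytes in " + micros + " us");
    }
}
```

A higher iteration count makes each password guess more expensive for an attacker who obtains the stored credential, at the cost of a slower derivation; that trade-off is the reason a lower bound is enforced on the value.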

2. Topic operations

Topic operations are fairly simple, so here are just a few basic create, read, update and delete methods.

import org.apache.kafka.clients.admin.*;

import org.apache.kafka.common.KafkaFuture;

import org.apache.kafka.common.config.ConfigResource;

import org.apache.kafka.common.config.SaslConfigs;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import java.util.Collections;

import java.util.HashMap;

import java.util.Map;

import java.util.Set;

public class KafkaTopicUtil {

private final Logger logger = LoggerFactory.getLogger(KafkaTopicUtil.class);

private final AdminClient adminClient;

/**

* Creates an AdminClient that authenticates with SASL/SCRAM.

*/

public KafkaTopicUtil(String bootstrapServers) {

Map<String, Object> configs = new HashMap<>();

configs.put(AdminClientConfig.BOOTSTRAP_SERVERS_CONFIG, bootstrapServers);

configs.put(AdminClientConfig.SECURITY_PROTOCOL_CONFIG, "SASL_PLAINTEXT");

configs.put(SaslConfigs.SASL_MECHANISM, "SCRAM-SHA-256");

configs.put(SaslConfigs.SASL_JAAS_CONFIG, "org.apache.kafka.common.security.scram.ScramLoginModule required username=\"kafka_server_admin\" password=\"admin\";");

adminClient = AdminClient.create(configs);

}

/**

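* Creates the topic for a system.

*

* @param topicName topic name

* @param partitions number of partitions (defaults to 1)

* @param replicationFactor replication factor (defaults to 1)

* @param retention retention period in days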

* @return boolean

*/

public boolean createTopic(String topicName, Integer partitions, Integer replicationFactor, Integer retention) {

boolean res = false;

Set<String> topics = getTopicList();

if (!topics.contains(topicName)) {

partitions = partitions == null ? 1 : partitions;

replicationFactor = replicationFactor == null ? 1 : replicationFactor;

NewTopic topic = new NewTopic(topicName, partitions, replicationFactor.shortValue());

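// Convert days to milliseconds; the long literal keeps the product from overflowing int at 25 days and above

long param = retention * 24L * 60 * 60 * 1000;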

Map<String, String> configs = new HashMap<>();

configs.put("retention.ms", String.valueOf(param));

topic.configs(configs);

CreateTopicsResult createTopicsResult = adminClient.createTopics(Collections.singletonList(topic));

for (Map.Entry<String, KafkaFuture<Void>> e : createTopicsResult.values().entrySet()) {

KafkaFuture<Void> future = e.getValue();

try {

future.get();

} catch (Exception exc) {

logger.warn("Failed to create topic: {}", exc.getMessage());

}

res = !future.isCompletedExceptionally();

}

} else {

res = true;

logger.warn("Topic already exists: {}", topicName);

}

return res;

}

/**

* Updates the retention period of a topic.

*

* @param topicName topic name

* @param retention retention period in days

* @return boolean

*/

public boolean updateTopic(String topicName, Integer retention) {

if (retention < 0) {

return false;

}

boolean res = false;

Map<ConfigResource, Config> alertConfigs = new HashMap<>();

ConfigResource configResource = new ConfigResource(ConfigResource.Type.TOPIC, topicName);

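// Convert days to milliseconds; the long literal keeps the product from overflowing int at 25 days and above

long param = retention * 24L * 60 * 60 * 1000;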

ConfigEntry configEntry = new ConfigEntry("retention.ms", String.valueOf(param));

Config config = new Config(Collections.singletonList(configEntry));

alertConfigs.put(configResource, config);

AlterConfigsResult alterConfigsResult = adminClient.alterConfigs(alertConfigs);

for (Map.Entry<ConfigResource, KafkaFuture<Void>> e : alterConfigsResult.values().entrySet()) {

KafkaFuture<Void> future = e.getValue();

try {

future.get();

} catch (Exception exc) {

logger.warn("Failed to update topic config: {}", exc.getMessage());

}

res = !future.isCompletedExceptionally();

}

return res;

}

/**

* Deletes a topic.

*

* @param topicName topic name

* @return boolean

*/

public boolean deleteTopic(String topicName) {

boolean res = false;

Set<String> topics = getTopicList();

if (topics.contains(topicName)) {

DeleteTopicsResult deleteTopicsResult = adminClient.deleteTopics(Collections.singletonList(topicName));

for (Map.Entry<String, KafkaFuture<Void>> e : deleteTopicsResult.values().entrySet()) {

KafkaFuture<Void> future = e.getValue();

try {

future.get();

} catch (Exception exc) {

logger.warn("Failed to delete topic: {}", exc.getMessage());

}

res = !future.isCompletedExceptionally();

}

} else {

logger.info("Topic does not exist: {}", topicName);

res = true;

}

return res;

}

/**

* Returns the list of topic names.

*

* @return Set

*/

public Set<String> getTopicList() {

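// Start from an empty set so that callers never receive null when the lookup fails

Set<String> result = Collections.emptySet();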

ListTopicsResult listTopicsResult = adminClient.listTopics();

try {

result = listTopicsResult.names().get();

} catch (Exception e) {

logger.warn("Failed to list topics: {}", e.getMessage());

e.printStackTrace();

}

return result;

}

}
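A note on the day-to-millisecond conversion used for retention.ms in createTopic and updateTopic: if the whole product is evaluated in int arithmetic, it overflows once the retention reaches 25 days, because 25 * 86,400,000 exceeds Integer.MAX_VALUE. The sketch below shows the overflow next to a conversion through TimeUnit that avoids it. RetentionMs and its methods are hypothetical names used only for this illustration.

```java
import java.util.concurrent.TimeUnit;

// Hypothetical helper illustrating the days-to-milliseconds conversion for retention.ms.
final class RetentionMs {

    private RetentionMs() {
    }

    // Every operand is an int, so the product wraps around before it is widened to long.
    static long daysToMsIntArithmetic(int days) {
        return days * 24 * 60 * 60 * 1000;
    }

    // TimeUnit performs the conversion in long arithmetic.
    static long daysToMs(int days) {
        if (days < 0) {
            throw new IllegalArgumentException("retention days must not be negative: " + days);
        }
        return TimeUnit.DAYS.toMillis(days);
    }

    public static void main(String[] args) {
        System.out.println(daysToMsIntArithmetic(30)); // prints -1702967296
        System.out.println(daysToMs(30));              // prints 2592000000
    }
}
```

A negative retention.ms other than -1 is not a meaningful retention setting, so it is worth making sure the value sent to the broker comes from the long-based conversion.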

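3. ACL operations

ACL configuration is quite flexible, and you can wrap different request entities to suit your own needs. Only the approach my current business uses is shown here; other ways of configuring it are welcome for discussion.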

import org.apache.kafka.clients.admin.AdminClient;

import org.apache.kafka.clients.admin.AdminClientConfig;

import org.apache.kafka.clients.admin.CreateAclsResult;

import org.apache.kafka.clients.admin.DeleteAclsResult;

import org.apache.kafka.common.KafkaFuture;

import org.apache.kafka.common.acl.*;

import org.apache.kafka.common.config.SaslConfigs;

import org.apache.kafka.common.resource.PatternType;

import org.apache.kafka.common.resource.ResourcePattern;

import org.apache.kafka.common.resource.ResourceType;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import java.util.Collections;

import java.util.HashMap;

import java.util.Map;

public class KafkaAclUtil {

private final Logger logger = LoggerFactory.getLogger(KafkaAclUtil.class);

private final AdminClient adminClient;

/**

* Creates an AdminClient that authenticates with SASL/SCRAM.

*/

public KafkaAclUtil(String bootstrapServers) {

Map<String, Object> configs = new HashMap<>();

configs.put(AdminClientConfig.BOOTSTRAP_SERVERS_CONFIG, bootstrapServers);

configs.put(AdminClientConfig.SECURITY_PROTOCOL_CONFIG, "SASL_PLAINTEXT");

configs.put(SaslConfigs.SASL_MECHANISM, "SCRAM-SHA-256");

configs.put(SaslConfigs.SASL_JAAS_CONFIG, "org.apache.kafka.common.security.scram.ScramLoginModule required username=\"kafka_server_admin\" password=\"admin\";");

adminClient = AdminClient.create(configs);

}

/**

* Adds an ACL.

*

* @param resourceType resource type

* @param resourceName resource name

* @param username username

* @param operation name of the operation to allow

*/

public void addACLAuth(String resourceType, String resourceName, String username, String operation) {

ResourcePattern resource = new ResourcePattern(getResourceType(resourceType), resourceName, PatternType.LITERAL);

AccessControlEntry accessControlEntry = new AccessControlEntry("User:" + username, "*", getOperation(operation), AclPermissionType.ALLOW);

AclBinding aclBinding = new AclBinding(resource, accessControlEntry);

CreateAclsResult createAclsResult = adminClient.createAcls(Collections.singletonList(aclBinding));

for (Map.Entry<AclBinding, KafkaFuture<Void>> e : createAclsResult.values().entrySet()) {

KafkaFuture<Void> future = e.getValue();

try {

future.get();

boolean success = !future.isCompletedExceptionally();

if (success) {

logger.info("ACL created");

}

} catch (Exception exc) {

logger.warn("Failed to create ACL: {}", exc.getMessage());

exc.printStackTrace();

}

}

}

/**

* Deletes an ACL.

*

* @param resourceType resource type

* @param resourceName resource name

* @param username username

* @param operation name of the allowed operation to remove

*/

public void deleteACLAuth(String resourceType, String resourceName, String username, String operation) {

ResourcePattern resource = new ResourcePattern(getResourceType(resourceType), resourceName, PatternType.LITERAL);

AccessControlEntry accessControlEntry = new AccessControlEntry("User:" + username, "*", getOperation(operation), AclPermissionType.ALLOW);

AclBinding aclBinding = new AclBinding(resource, accessControlEntry);

DeleteAclsResult deleteAclsResult = adminClient.deleteAcls(Collections.singletonList(aclBinding.toFilter()));

for (Map.Entry<AclBindingFilter, KafkaFuture<DeleteAclsResult.FilterResults>> e : deleteAclsResult.values().entrySet()) {

KafkaFuture<DeleteAclsResult.FilterResults> future = e.getValue();

try {

future.get();

boolean success = !future.isCompletedExceptionally();

if (success) {

logger.info("ACL deleted");

}

} catch (Exception exc) {

logger.warn("Failed to delete ACL: {}", exc.getMessage());

exc.printStackTrace();

}

}

}

private AclOperation getOperation(String operation) {

AclOperation aclOperation = null;

switch (operation) {

case "CREATE":

aclOperation = AclOperation.CREATE;

break;

case "WRITE":

aclOperation = AclOperation.WRITE;

break;

case "READ":

aclOperation = AclOperation.READ;

break;

default:

throw new IllegalArgumentException("Unsupported ACL operation: " + operation);

}

return aclOperation;

}

private ResourceType getResourceType(String type) {

ResourceType resourceType = null;

switch (type) {

case "Group":

resourceType = ResourceType.GROUP;

break;

case "Topic":

resourceType = ResourceType.TOPIC;

break;

default:

throw new IllegalArgumentException("Unsupported resource type: " + type);

}

return resourceType;

}

public static void main(String[] args) {

KafkaAclUtil kafkaAclUtil = new KafkaAclUtil("192.168.80.130:9092");

// Topic resource named test: grant the user writer the WRITE permission

kafkaAclUtil.addACLAuth("Topic", "test", "writer", "WRITE");

// Topic resource named test: grant the user reader the READ permission

kafkaAclUtil.addACLAuth("Topic", "test", "reader", "READ");

// Group resource named group_test: grant the user reader the READ permission

kafkaAclUtil.addACLAuth("Group", "group_test", "reader", "READ");

}

}
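getOperation and getResourceType map request strings to enum constants by hand. One possible way to make that mapping stricter is a small generic helper that ignores case and surrounding whitespace and fails fast on unknown names. The sketch uses a stand-in enum Op so that it runs without kafka-clients on the classpath. EnumNames, Op and parse are hypothetical names, and pointing the helper at AclOperation or ResourceType is an assumption about how it might be used.

```java
import java.util.Locale;

// Hypothetical helper: strict, case-insensitive lookup of an enum constant by name.
final class EnumNames {

    private EnumNames() {
    }

    // Stand-in for an enum such as AclOperation, so the sketch needs no Kafka dependency.
    enum Op { CREATE, WRITE, READ }

    static <E extends Enum<E>> E parse(Class<E> type, String name) {
        if (name == null) {
            throw new IllegalArgumentException("name must not be null");
        }
        try {
            return Enum.valueOf(type, name.trim().toUpperCase(Locale.ROOT));
        } catch (IllegalArgumentException e) {
            // Re-throw with a message that names the rejected value
            throw new IllegalArgumentException("Unsupported " + type.getSimpleName() + ": " + name, e);
        }
    }

    public static void main(String[] args) {
        System.out.println(parse(Op.class, "write"));  // prints WRITE
        System.out.println(parse(Op.class, " Read ")); // prints READ
    }
}
```

Note that such a helper accepts every constant of the target enum, so if only a few operations should be grantable, an explicit allow-list check is still needed on top of it.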
