Installing Various Programs on Linux


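rpm command: the Linux counterpart of "Add/Remove Programs" on Windows

Installs, updates, uninstalls, and queries programs (packages)

Install a local package: rpm -ivh package-file.rpm

List installed packages: rpm -qa

Uninstall a package: rpm -e --nodeps package-name

yum command: roughly an rpm command that can go online

It first downloads the program's installation package (or its update packages) from the network

and then runs the rpm installation automatically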

I. Preparation

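Installing the JDK, Tomcat, and MySQL requires some supporting packages to be downloaded from the internet before the installation can continue, so install these dependencies in advance.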

yum install glibc.i686

yum -y install libaio.so.1 libgcc_s.so.1 libstdc++.so.6

yum update libstdc++-4.4.7-4.el6.x86_64

yum install gcc-c++

II. Installing the JDK

2.1 Uninstall OpenJDK

Check which Java packages are installed:

rpm -qa | grep java

Uninstall OpenJDK:

rpm -e --nodeps java-1.6.0-openjdk-1.6.0.0-1.66.1.13.0.el6.i686

rpm -e --nodeps java-1.7.0-openjdk-1.7.0.45-2.4.3.3.el6.i686
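`rpm -qa | grep java` also matches packages that are not JDKs (on CentOS 6 the list typically includes `tzdata-java`), so removing everything it prints can delete too much. A minimal sketch, assuming the OpenJDK packages follow the `java-<version>-openjdk...` naming shown above; `find_openjdk` is a hypothetical helper name, not part of rpm:

```bash
#!/bin/bash
# Hypothetical helper: read package names (one per line, e.g. from `rpm -qa`)
# on stdin and print only the OpenJDK ones, so packages such as tzdata-java
# are not removed by accident.
find_openjdk() {
  # Matches names like java-1.7.0-openjdk-1.7.0.45-... or java-1.8.0-openjdk-headless-...
  # `|| true` keeps the exit status 0 when nothing matches.
  grep -E '^java-[0-9.]+-openjdk' || true
}
```

Usage (as root): run `rpm -qa | find_openjdk` first to review the list, then `rpm -qa | find_openjdk | xargs -r rpm -e --nodeps` to remove exactly those packages.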

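If rpm -qa | grep java shows nothing but java -version still reports a JDK, try the following way to uninstall it instead.

Find where the java binary is:

which java

Delete the JDK directory that contains it (this removes the JDK), for example:

rm -rf /usr/local/java/jdk1.8.0_202/

Edit the profile with vi/vim:

vim /etc/profile

Delete the Java environment variables and the uninstall is complete. After reloading the profile (or opening a new shell), java -version no longer finds the JDK.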
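Because `which java` may point at a symlink (for example one managed by `alternatives`), the directory to delete is not always obvious. A minimal sketch that follows the symlinks and strips the trailing `bin/java`; `jdk_home_of` is a hypothetical helper, and it assumes GNU `readlink -f`:

```bash
#!/bin/bash
# Hypothetical helper: print the JDK directory behind the `java` found on PATH.
# Assumes GNU readlink (-f follows every symlink, e.g. /usr/bin/java ->
# /etc/alternatives/java -> .../bin/java).
jdk_home_of() {
  local java_bin
  java_bin=$(command -v java) || return 1   # same lookup as `which java`
  java_bin=$(readlink -f "$java_bin")       # resolve symlinks to the real file
  java_bin=${java_bin%/bin/java}            # strip the trailing bin/java
  java_bin=${java_bin%/jre}                 # JDK 8 packages may link to jre/bin/java
  printf '%s\n' "$java_bin"
}
```

Print the directory and check it before deleting anything; only then run `rm -rf` on the printed path.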

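2.2 Install the JDK

Create the JDK installation directory, a java folder under /usr/local/:

mkdir -p /usr/local/java

Upload the downloaded JDK archive to Linux and extract it into the installation directory: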

tar -zxvf jdk-7u71-linux-i586.tar.gz -C /usr/local/java

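Configure the environment variables in **/etc/profile**:

vim /etc/profile

Add the following at the bottom of the file:

#set java environment

JAVA_HOME=/usr/local/java/jdk1.7.0_71

CLASSPATH=.:$JAVA_HOME/lib/tools.jar

PATH=$JAVA_HOME/bin:$PATH

export JAVA_HOME CLASSPATH PATH

Reload the configuration file (otherwise the new environment variables do not take effect):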

source /etc/profile
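Appending the block by hand makes it easy to add it twice. A minimal sketch of an idempotent version, assuming the profile path and the JDK directory are passed as arguments; `add_java_env` is a hypothetical helper, and it writes the `lib/tools.jar` classpath entry:

```bash
#!/bin/bash
# Hypothetical helper: append the Java environment block to a profile file
# only if no JAVA_HOME line is there yet, so running it twice is harmless.
add_java_env() {
  local profile=$1 java_home=$2
  if grep -q '^JAVA_HOME=' "$profile" 2>/dev/null; then
    echo "JAVA_HOME already set in $profile, nothing changed"
    return 0
  fi
  # \$ keeps the variable references literal in the file; $java_home is expanded now
  cat >> "$profile" <<EOF
#set java environment
JAVA_HOME=$java_home
CLASSPATH=.:\$JAVA_HOME/lib/tools.jar
PATH=\$JAVA_HOME/bin:\$PATH
export JAVA_HOME CLASSPATH PATH
EOF
}
```

Usage (as root): `add_java_env /etc/profile /usr/local/java/jdk1.7.0_71`, then `source /etc/profile` and `java -version`.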

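III. Redis overview and installation

Official site: https://redis.io/

Recommended Redis installation guide: https://blog.csdn.net/qq_41853447/article/details/102949784

Note: Redis has no password by default; you need to set one yourself.

Installing Redis:

Redis is written in C, so installing it means compiling the source downloaded from the official site, and compiling requires gcc. If gcc is not installed, install it first.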

- Step 1: install the dependency

yum install gcc-c++

Create a directory to serve as the Redis installation directory:

mkdir -p /usr/local/redis

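- Step 2: extract

Upload the Redis archive to Linux and extract it into the target directory:

tar -zxvf redis-4.0.14.tar.gz -C /usr/local/redis

- Step 3: compile Redis (compiles the .c files into .o files)

Enter the source directory: cd /usr/local/redis/redis-4.0.14

Run: make

The build is then complete.

If gcc is not installed, the build fails with an error. (If the build fails, delete the directory and extract the archive again.)

- Step 4: install

In the same directory, run this command, specifying the installation directory: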

make PREFIX=/usr/local/redis install

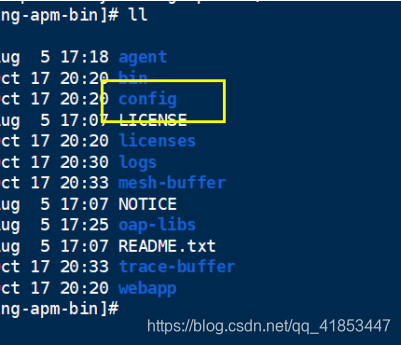

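After installation, **/usr/local/redis/bin** contains several executables:

redis-benchmark ----performance benchmarking tool

redis-check-aof ----AOF file repair tool

redis-check-rdb ----RDB file (snapshot persistence file) check tool; older Redis releases called it redis-check-dump

redis-cli ----command-line client

redis-server ----starts the Redis server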

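- Step 5: copy the configuration file

Redis needs a configuration file at startup, where the port and other settings can be changed:

cp /usr/local/redis/redis-4.0.14/redis.conf /usr/local/redis

- Step 6: edit the configuration file

Detailed configuration reference: https://www.cnblogs.com/zxtceq/p/7676911.html

#run in the background (daemon mode)

daemonize yes

#set a password

requirepass 123456

#port

port 6379

# close a client connection after it has been idle for this many seconds (0 disables this; connections are never closed)

timeout 0

#replace with the IP of the server Redis runs on

bind 192.168.209.136

If your editor cannot search for keywords, copy the configuration file into a text editor to find the line numbers, then jump to those lines directly.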
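The settings can also be changed from the shell instead of searching for lines by hand. A minimal sketch, assuming GNU `sed -i`; `set_conf` is a hypothetical helper that rewrites active `key value` lines and appends the key when it is absent or only appears commented out (for most single-valued directives Redis uses the last occurrence, so an appended line takes effect):

```bash
#!/bin/bash
# Hypothetical helper: set "key value" in a Redis-style config file.
# - If an active (uncommented) line for the key exists, rewrite it in place.
# - Otherwise (key absent or only present as a comment) append it at the end.
# Assumes GNU sed (-i) and that key/value contain no '/', '&' or '\',
# because both are inserted into a sed expression.
set_conf() {
  local file=$1 key=$2 value=$3
  if grep -qE "^${key}[[:space:]]" "$file"; then
    sed -i -E "s/^${key}[[:space:]].*/${key} ${value}/" "$file"
  else
    printf '%s %s\n' "$key" "$value" >> "$file"
  fi
}
```

Usage: `set_conf /usr/local/redis/redis.conf daemonize yes`, and likewise for `requirepass`, `port`, `timeout`, and `bind`.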

- Start

Specify the configuration file when starting:

cd /usr/local/redis/

./bin/redis-server ./redis.conf

- Check whether it started successfully

ps -ef | grep -i redis

IV. Installing Elasticsearch

Downloads: https://www.elastic.co/cn/downloads/

Direct download link:

https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-oss-7.3.2-linux-x86_64.tar.gz

Installation references:

ELK official site: https://www.elastic.co/

ELK official documentation: https://www.elastic.co/guide/index.html

ELK Chinese guide: https://www.elastic.co/guide/cn/elasticsearch/guide/current/index.html

ELK Chinese community: https://elasticsearch.cn/

ELK Java API: https://www.elastic.co/guide/en/elasticsearch/client/java-api/current/transport-client.html

4.1 Installation

- Upload Elasticsearch to Linux and extract it

- Edit the elasticsearch.yml configuration file

Detailed explanation of the configuration file: https://www.cnblogs.com/xiaochina/p/6855591.html

Location: /usr/local/elasticsearch-7.3.2/config/elasticsearch.yml

Command:

vim /usr/local/elasticsearch-7.3.2/config/elasticsearch.yml

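# cluster name

cluster.name: elasticsearch

# node name

node.name: node-1

# data directory; it does not exist yet and must be created

path.data: /usr/local/elk/data

# log directory; it does not exist yet and must be created

path.logs: /usr/local/elk/long

# address to bind to; 0.0.0.0 listens on all interfaces, so any IP can connect

network.host: 0.0.0.0

# run on a single machine

discovery.seed_hosts: ["0.0.0.0"]

# port

http.port: 9200

The following two settings are optional: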

bootstrap.memory_lock: false

bootstrap.system_call_filter: false

Complete configuration:

# ======================== Elasticsearch Configuration =========================

#

# NOTE: Elasticsearch comes with reasonable defaults for most settings.

# Before you set out to tweak and tune the configuration, make sure you

# understand what are you trying to accomplish and the consequences.

#

# The primary way of configuring a node is via this file. This template lists

# the most important settings you may want to configure for a production cluster.

#

# Please consult the documentation for further information on configuration options:

# https://www.elastic.co/guide/en/elasticsearch/reference/index.html

#

# ---------------------------------- Cluster -----------------------------------

#

# Use a descriptive name for your cluster:

# cluster name

cluster.name: elasticsearch

#

# ------------------------------------ Node ------------------------------------

#

# Use a descriptive name for the node:

# node name

node.name: node-1

#

# Add custom attributes to the node:

#

#node.attr.rack: r1

#

# ----------------------------------- Paths ------------------------------------

#

# Path to directory where to store the data (separate multiple locations by comma):

# data directory; it does not exist yet and must be created

path.data: /usr/local/elk/data

#

# Path to log files:

# log directory; it does not exist yet and must be created

path.logs: /usr/local/elk/long

#

# ----------------------------------- Memory -----------------------------------

bootstrap.memory_lock: false

bootstrap.system_call_filter: false

# Lock the memory on startup:

#

#bootstrap.memory_lock: true

#

# Make sure that the heap size is set to about half the memory available

# on the system and that the owner of the process is allowed to use this

# limit.

#

# Elasticsearch performs poorly when the system is swapping the memory.

#

# ---------------------------------- Network -----------------------------------

#

# Set the bind address to a specific IP (IPv4 or IPv6):

# address to bind to; 0.0.0.0 listens on all interfaces, so any IP can connect

network.host: 0.0.0.0

#

# Set a custom port for HTTP:

# port

http.port: 9200

#

# For more information, consult the network module documentation.

#

# --------------------------------- Discovery ----------------------------------

#

# Pass an initial list of hosts to perform discovery when new node is started:

# The default list of hosts is ["127.0.0.1", "[::1]"]

#

#discovery.zen.ping.unicast.hosts: ["host1", "host2"]

#

# Prevent the "split brain" by configuring the majority of nodes (total number of master-eligible nodes / 2 + 1):

#

#discovery.zen.minimum_master_nodes:

#

# For more information, consult the zen discovery module documentation.

#

# ---------------------------------- Gateway -----------------------------------

#

# Block initial recovery after a full cluster restart until N nodes are started:

#

#gateway.recover_after_nodes: 3

#

# For more information, consult the gateway module documentation.

#

# ---------------------------------- Various -----------------------------------

#

# Require explicit names when deleting indices:

#

#action.destructive_requires_name: true

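- Create the data and log directories

Data directory:

mkdir -p /usr/local/elk/data

Log directory:

mkdir -p /usr/local/elk/long

Configure jvm.options

It is in the same config directory; command:

vim jvm.options

# Java heap size: the default of 1 GB is a bit large for a virtual machine, so set it smaller.

# -Xms is the initial heap size, -Xmx the maximum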

-Xms512m

-Xmx512m

- Configure: vim /etc/security/limits.conf

* soft nofile 65536

* hard nofile 65536

These settings take effect in a new login session (open a new terminal window).

Check that they took effect (recommended):

ulimit -Hn

ulimit -Sn

- Configure: vim /etc/sysctl.conf

Append at the end:

vm.max_map_count=262144

Apply the file:

Command:

sysctl -p

- Configure: vi /etc/security/limits.d/90-nproc.conf

* soft nproc 4096

* hard nproc 4096
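After editing these three files, the new limits can be checked in one go. A minimal sketch, assuming bash and the thresholds used above (65536 open files, 4096 processes, vm.max_map_count 262144); `check_min` and `es_preflight` are hypothetical names. Run it in a fresh login shell of the user that will start Elasticsearch, because the limits.conf changes only apply to new sessions:

```bash
#!/bin/bash
# Hypothetical helper: compare a current limit with a required minimum.
# "unlimited" always passes.
check_min() {
  local name=$1 actual=$2 required=$3
  if [ "$actual" = unlimited ] || [ "$actual" -ge "$required" ]; then
    echo "OK   $name=$actual"
  else
    echo "LOW  $name=$actual, need at least $required"
    return 1
  fi
}

# Hypothetical preflight: check every limit configured above; returns 1 if any is too low.
es_preflight() {
  local rc=0
  check_min "nofile (hard)" "$(ulimit -Hn)" 65536 || rc=1
  check_min "nofile (soft)" "$(ulimit -Sn)" 65536 || rc=1
  check_min "nproc (soft)" "$(ulimit -Su)" 4096 || rc=1   # bash: -u = max user processes
  check_min "vm.max_map_count" "$(cat /proc/sys/vm/max_map_count)" 262144 || rc=1
  return "$rc"
}
```

Usage: switch to the user (for example `su - elk`), define the functions, and run `es_preflight`; every line should start with `OK`.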

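- Create a user and give it ownership of the data and log directories

Create the user:

useradd <username>

useradd elk

Command: chown -R <user>:<group> <file or directory> (run the two commands below from /usr/local)

chown -R elk:elk elasticsearch-7.3.2

chown -R elk:elk elk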

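4.2 Starting

Note: startup is very slow; it can take several minutes.

- Create a startup script

Create the script in the bin directory.

Syntax:

nohup <absolute path> >> <log file for startup output> 2>&1 &

As root, create the file and make it executable:

touch startup.sh

chmod a+x startup.sh

Put the following in the script:

#!/bin/bash

nohup /usr/local/elasticsearch-7.3.2/bin/elasticsearch >> /usr/local/elasticsearch-7.3.2/output.log 2>&1 &

Start it as the elk user (Elasticsearch refuses to run as root):

./startup.sh

Note: adjust the program path and the log path to match your installation.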
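The same `nohup ... >> log 2>&1 &` pattern is needed again for Kibana and Logstash, so the script can also be generated. A minimal sketch; `write_startup_script` is a hypothetical helper, and the paths in the usage line are the ones assumed in this section:

```bash
#!/bin/bash
# Hypothetical helper: generate a startup.sh following the pattern
#   nohup <absolute path> [args] >> <log file> 2>&1 &
# and make it executable. Arguments are wrapped in single quotes so paths
# with spaces survive (arguments must not themselves contain a single quote).
write_startup_script() {
  local script=$1 logfile=$2 arg
  shift 2
  {
    echo '#!/bin/bash'
    printf 'nohup'
    for arg in "$@"; do
      printf " '%s'" "$arg"
    done
    printf " >> '%s' 2>&1 &\n" "$logfile"
  } > "$script"
  chmod a+x "$script"
}
```

Usage: `write_startup_script /usr/local/elasticsearch-7.3.2/bin/startup.sh /usr/local/elasticsearch-7.3.2/output.log /usr/local/elasticsearch-7.3.2/bin/elasticsearch`.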

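- View the startup log

tail -f output.log

Visit: http://192.168.240.133:9200/

Note: remember to open the port; turning off the firewall is not recommended.

- Shutting down

Elasticsearch is written in Java, so its process can be found with jps.

Killing the process is not recommended; if you know the best way to shut it down, please leave a comment below.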
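One common alternative to `kill -9` is to send SIGTERM, which asks the JVM to shut down normally, and then wait for the process to exit. Elasticsearch can write its own PID file when started with `-d -p <file>` (for example `./bin/elasticsearch -d -p pid`); the sketch below assumes such a PID file exists. `stop_by_pidfile` is a hypothetical helper:

```bash
#!/bin/bash
# Hypothetical helper: stop a process whose PID is stored in a file by sending
# SIGTERM (a normal shutdown request, unlike kill -9) and waiting for it to exit.
# Usage: stop_by_pidfile <pid file> [timeout seconds, default 60]
stop_by_pidfile() {
  local pidfile=$1 timeout=${2:-60} pid i=0
  if [ ! -f "$pidfile" ]; then
    echo "no pid file: $pidfile"
    return 1
  fi
  pid=$(cat "$pidfile")
  if ! kill -0 "$pid" 2>/dev/null; then
    echo "not running"
    rm -f "$pidfile"
    return 0
  fi
  kill -TERM "$pid"
  while [ "$i" -lt "$timeout" ]; do
    if ! kill -0 "$pid" 2>/dev/null; then
      echo "stopped"
      rm -f "$pidfile"
      return 0
    fi
    sleep 1
    i=$((i + 1))
  done
  echo "still running after ${timeout}s"
  return 1
}
```

Usage (as elk, from the Elasticsearch directory): `stop_by_pidfile pid`.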

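4.3 Common errors

Elasticsearch cannot be run as the root user; starting it as root produces an error.

See the step above on creating a user and granting permissions.

Another error occurs when Elasticsearch is started as the elk user but that user has not been given ownership of the elasticsearch files.

Fix: as root, change the ownership.

Command: chown -R <user>:<group> <file or directory>

For example: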

chown -R elk:elk elasticsearch-7.3.2

Error: max number of threads [1024] for user [elk] is too low, increase to at least [4096]

This means the user's maximum number of threads is too low and must be raised.

Edit the /etc/security/limits.d/90-nproc.conf file:

vim /etc/security/limits.d/90-nproc.conf

* soft nproc 4096

If that does not help, try:

* soft nproc 4096

* hard nproc 4096

Error: system call filters failed to install; check the logs and fix your configuration or disable system call filters at your own risk

Solution:

CentOS 6 does not support seccomp, while Elasticsearch (as of ES 5.2.0) sets bootstrap.system_call_filter to true by default.

Disable it by setting bootstrap.system_call_filter to false in elasticsearch.yml, placed under the Memory section:

bootstrap.memory_lock: false

bootstrap.system_call_filter: false

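4.4 Locating the error directly

- Error

the default discovery settings are unsuitable for production use; at least one of [discovery.seed_hosts, discovery.seed_providers, cluster.initial_master_nodes] must be configured

Roughly, this means the default settings are not suitable for production: this setup is a single node, while Elasticsearch by default expects to be configured as a cluster.

Solution:

Edit elasticsearch.yml in the config directory:

- set network.host to 0.0.0.0

- set discovery.seed_hosts (the Elasticsearch 7 replacement for discovery.zen.ping.unicast.hosts) to ["0.0.0.0"]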

V. Prometheus: system monitoring (unfinished)

Download: https://prometheus.io/download/

GitHub: https://github.com/prometheus/prometheus

Blog: https://www.cnblogs.com/netonline/p/8289411.html

Blog: https://www.cnblogs.com/shhnwangjian/p/6878199.html

- Installation

Upload and extract:

tar -xvf prometheus-1.6.2.linux-amd64.tar.gz

- Start

nohup ./prometheus -config.file=prometheus.yml &

or

nohup /opt/prometheus-1.6.2.linux-amd64/prometheus &

Browser access: http://192.168.209.136:9090

Default port: 9090

Note: be sure to open the port

If you know how to shut it down, please leave a comment below.

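VI. Nacos

Official documentation:

Download: https://github.com/alibaba/nacos/releases

Just download, upload, extract, and start it; that is all. A cluster additionally needs MySQL and other configuration.

Start on Linux (from the bin directory):

sh startup.sh -m standalone

Stop on Linux:

sh shutdown.sh

Start on Windows:

cmd startup.cmd

Stop on Windows:

cmd shutdown.cmd

Default port: 8848

Username: nacos

Password: nacos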

VII. SkyWalking (tracing and monitoring)


Official site: http://skywalking.apache.org/

Downloads: http://skywalking.apache.org/zh/downloads/

Documentation: https://www.funtl.com/zh/spring-cloud-alibaba/为什么需要链路追踪.html#什么是-skywalking

Video: https://www.bilibili.com/video/av40796154/?spm_id_from=333.788.videocard.11

Sometimes the package cannot be downloaded from the official site; a copy is available on Baidu Netdisk.

Link: https://pan.baidu.com/s/1Klyv_dZP2kQyec9h6_QjKw

Extraction code: k907


7.1 Download the package and upload it to the installation directory on Linux

Configure the application.yml file in the config directory:

storage:
  elasticsearch:
#    nameSpace: ${SW_NAMESPACE:""}
    clusterNodes: ${SW_STORAGE_ES_CLUSTER_NODES:192.168.240.133:9200}
#    user: ${SW_ES_USER:""}
#    password: ${SW_ES_PASSWORD:""}
    indexShardsNumber: ${SW_STORAGE_ES_INDEX_SHARDS_NUMBER:2}
    indexReplicasNumber: ${SW_STORAGE_ES_INDEX_REPLICAS_NUMBER:0}
#    # Those data TTL settings will override the same settings in core module.
#    recordDataTTL: ${SW_STORAGE_ES_RECORD_DATA_TTL:7} # Unit is day
#    otherMetricsDataTTL: ${SW_STORAGE_ES_OTHER_METRIC_DATA_TTL:45} # Unit is day
#    monthMetricsDataTTL: ${SW_STORAGE_ES_MONTH_METRIC_DATA_TTL:18} # Unit is month
#    # Batch process setting, refer to https://www.elastic.co/guide/en/elasticsearch/client/java-api/5.5/java-docs-bulk-processor.html
    bulkActions: ${SW_STORAGE_ES_BULK_ACTIONS:1000} # Execute the bulk every 1000 requests
    flushInterval: ${SW_STORAGE_ES_FLUSH_INTERVAL:10} # flush the bulk every 10 seconds whatever the number of requests
    concurrentRequests: ${SW_STORAGE_ES_CONCURRENT_REQUESTS:2} # the number of concurrent requests
    metadataQueryMaxSize: ${SW_STORAGE_ES_QUERY_MAX_SIZE:5000}
#    segmentQueryMaxSize: ${SW_STORAGE_ES_QUERY_SEGMENT_SIZE:200}
#  h2:
#    driver: ${SW_STORAGE_H2_DRIVER:org.h2.jdbcx.JdbcDataSource}
#    url: ${SW_STORAGE_H2_URL:jdbc:h2:mem:skywalking-oap-db}
#    user: ${SW_STORAGE_H2_USER:sa}
#    metadataQueryMaxSize: ${SW_STORAGE_H2_QUERY_MAX_SIZE:5000}
#  mysql:
#    metadataQueryMaxSize: ${SW_STORAGE_H2_QUERY_MAX_SIZE:5000}

Note: start Elasticsearch before starting SkyWalking.
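Since Elasticsearch takes a while to come up, SkyWalking can be started only once Elasticsearch answers over HTTP. A minimal sketch, assuming `curl` is installed; `wait_for_url` is a hypothetical helper that retries every 2 seconds:

```bash
#!/bin/bash
# Hypothetical helper: poll a URL until it answers.
# Usage: wait_for_url <url> [tries]   (tries defaults to 60, 2 seconds apart)
wait_for_url() {
  local url=$1 tries=${2:-60} i=1
  while :; do
    # -s: silent; any HTTP response counts as "up", a refused connection does not
    if curl -s -o /dev/null --max-time 5 "$url"; then
      return 0
    fi
    [ "$i" -ge "$tries" ] && return 1
    i=$((i + 1))
    sleep 2
  done
}
```

Usage, from SkyWalking's bin directory: `wait_for_url http://192.168.240.133:9200 90 && ./startup.sh`.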

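7.2 Start: run the following in the bin directory

Command:

./startup.sh

Browser access: http://192.168.240.133:8080/

Default port: 8080

The end of the configuration file also contains a nacos section (SkyWalking can read its configuration from Nacos), which is worth looking into.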

VIII. Installing Kibana

Downloads: https://www.elastic.co/cn/downloads/

Documentation: https://www.elastic.co/guide/en/kibana/index.html

Note: the Kibana version must match the Elasticsearch version exactly.
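One way to see which version the running Elasticsearch is: its root URL returns JSON whose `version` object contains a `"number"` field. A minimal sketch, assuming the response contains a single `"number"` field (it works whether or not the JSON is pretty-printed); `es_version_number` is a hypothetical helper:

```bash
#!/bin/bash
# Hypothetical helper: read the JSON returned by Elasticsearch's root URL on
# stdin and print the value of its "number" field (the Elasticsearch version).
es_version_number() {
  sed -n 's/.*"number"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p' | head -n 1
}
```

Usage: `curl -s http://192.168.240.133:9200 | es_version_number`, then compare the result with the version in the Kibana package name (7.3.2 for kibana-7.3.2-linux-x86_64).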

- Configuration:

Configuration file: config/kibana.yml

# port

server.port: 5601

# which addresses Kibana listens on (0.0.0.0 allows access from any address)

server.host: "0.0.0.0"

# address of Elasticsearch

elasticsearch.hosts: ["http://192.168.240.133:9200"]

# Kibana index

#kibana.index: ".kibana"

# Elasticsearch username and password

#elasticsearch.username: "user"

#elasticsearch.password: "pass"

- The complete configuration is as follows:

# Kibana is served by a back end server. This setting specifies the port to use.

# port

server.port: 5601

# Specifies the address to which the Kibana server will bind. IP addresses and host names are both valid values.

# The default is 'localhost', which usually means remote machines will not be able to connect.

# To allow connections from remote users, set this parameter to a non-loopback address.

# which addresses Kibana listens on (0.0.0.0 allows access from any address)

server.host: "0.0.0.0"

# Enables you to specify a path to mount Kibana at if you are running behind a proxy.

# Use the `server.rewriteBasePath` setting to tell Kibana if it should remove the basePath

# from requests it receives, and to prevent a deprecation warning at startup.

# This setting cannot end in a slash.

#server.basePath: ""

# Specifies whether Kibana should rewrite requests that are prefixed with

# `server.basePath` or require that they are rewritten by your reverse proxy.

# This setting was effectively always `false` before Kibana 6.3 and will

# default to `true` starting in Kibana 7.0.

#server.rewriteBasePath: false

# The maximum payload size in bytes for incoming server requests.

#server.maxPayloadBytes: 1048576

# The Kibana server's name. This is used for display purposes.

#server.name: "your-hostname"

# The URLs of the Elasticsearch instances to use for all your queries.

# address of Elasticsearch

elasticsearch.hosts: ["http://192.168.240.133:9200"]

# When this setting's value is true Kibana uses the hostname specified in the server.host

# setting. When the value of this setting is false, Kibana uses the hostname of the host

# that connects to this Kibana instance.

#elasticsearch.preserveHost: true

# Kibana uses an index in Elasticsearch to store saved searches, visualizations and

# dashboards. Kibana creates a new index if the index doesn't already exist.

# Kibana index

#kibana.index: ".kibana"

# The default application to load.

#kibana.defaultAppId: "home"

# If your Elasticsearch is protected with basic authentication, these settings provide

# the username and password that the Kibana server uses to perform maintenance on the Kibana

# index at startup. Your Kibana users still need to authenticate with Elasticsearch, which

# is proxied through the Kibana server.

# Elasticsearch username and password

#elasticsearch.username: "user"

#elasticsearch.password: "pass"

# Enables SSL and paths to the PEM-format SSL certificate and SSL key files, respectively.

# These settings enable SSL for outgoing requests from the Kibana server to the browser.

#server.ssl.enabled: false

#server.ssl.certificate: /path/to/your/server.crt

#server.ssl.key: /path/to/your/server.key

# Optional settings that provide the paths to the PEM-format SSL certificate and key files.

# These files validate that your Elasticsearch backend uses the same key files.

#elasticsearch.ssl.certificate: /path/to/your/client.crt

#elasticsearch.ssl.key: /path/to/your/client.key

# Optional setting that enables you to specify a path to the PEM file for the certificate

# authority for your Elasticsearch instance.

#elasticsearch.ssl.certificateAuthorities: [ "/path/to/your/CA.pem" ]

# To disregard the validity of SSL certificates, change this setting's value to 'none'.

#elasticsearch.ssl.verificationMode: full

# Time in milliseconds to wait for Elasticsearch to respond to pings. Defaults to the value of

# the elasticsearch.requestTimeout setting.

#elasticsearch.pingTimeout: 1500

# Time in milliseconds to wait for responses from the back end or Elasticsearch. This value

# must be a positive integer.

#elasticsearch.requestTimeout: 30000

# List of Kibana client-side headers to send to Elasticsearch. To send *no* client-side

# headers, set this value to [] (an empty list).

#elasticsearch.requestHeadersWhitelist: [ authorization ]

# Header names and values that are sent to Elasticsearch. Any custom headers cannot be overwritten

# by client-side headers, regardless of the elasticsearch.requestHeadersWhitelist configuration.

#elasticsearch.customHeaders: {}

# Time in milliseconds for Elasticsearch to wait for responses from shards. Set to 0 to disable.

#elasticsearch.shardTimeout: 30000

# Time in milliseconds to wait for Elasticsearch at Kibana startup before retrying.

#elasticsearch.startupTimeout: 5000

# Logs queries sent to Elasticsearch. Requires logging.verbose set to true.

#elasticsearch.logQueries: false

# Specifies the path where Kibana creates the process ID file.

#pid.file: /var/run/kibana.pid

# Enables you specify a file where Kibana stores log output.

#logging.dest: stdout

# Set the value of this setting to true to suppress all logging output.

#logging.silent: false

# Set the value of this setting to true to suppress all logging output other than error messages.

#logging.quiet: false

# Set the value of this setting to true to log all events, including system usage information

# and all requests.

#logging.verbose: false

# Set the interval in milliseconds to sample system and process performance

# metrics. Minimum is 100ms. Defaults to 5000.

#ops.interval: 5000

# Specifies locale to be used for all localizable strings, dates and number formats.

#i18n.locale: "en"

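- Start Kibana

Create a startup script in Kibana's bin directory:

touch startup.sh

Write the following into it:

#!/bin/bash

nohup /usr/local/kibana-7.3.2-linux-x86_64/bin/kibana --allow-root >> /usr/local/kibana-7.3.2-linux-x86_64/output.log 2>&1 &

nohup <absolute path> >> <log file> 2>&1 &   (runs the program in the background)

--allow-root: allows Kibana to run as root

As root, make it executable:

chmod a+x startup.sh

Start it:

./startup.sh

View the startup log:

tail -f output.log

Visit: http://192.168.240.133:5601/

Note: remember to open the port in the firewall

- Shutting down

Killing the process is not recommended; if you know a proper way to shut it down, please leave a comment below.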

IX. Installing Logstash

Downloads: https://www.elastic.co/cn/downloads/

Note: the version must match Kibana and Elasticsearch exactly.

- Documentation (6.6): https://www.elastic.co/guide/en/logstash/6.6/index.html

- Documentation (current): https://www.elastic.co/guide/en/logstash/current/index.html

- Upload Logstash to Linux and extract it into /usr/local.

- Configure jvm.options

Location: /usr/local/logstash-6.6.2/config

- Copy the logstash-sample.conf configuration file and rename the copy to logstash.conf

Delete all of its original content and replace it with the following (Logstash is then run with logstash -f logstash.conf):

# Sample Logstash configuration for creating a simple

# Beats -> Logstash -> Elasticsearch pipeline.

input {

# read log entries from a file

file {

path => "/var/log/messages"

type => "system"

start_position => "beginning"

}

}

filter {

}

output {

# print to standard output

stdout {}

}

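- Start

Create a startup script:

Command:

touch startup.sh

As root, make it executable:

chmod a+x startup.sh

Startup is slow; wait a moment.

Because Logstash runs on the JVM, jps can be used to check whether it is running.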
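`jps -l` prints one JVM per line: the PID followed by the main class (or JAR path). jps only lists JVMs it has access to, so run it as the user that started the process. A minimal sketch that picks out one PID; `pid_of_main_class` is a hypothetical helper, and the class names in the usage line are assumptions to verify against your own `jps -l` output:

```bash
#!/bin/bash
# Hypothetical helper: read `jps -l` output on stdin and print the PID(s)
# whose main class equals $1.
pid_of_main_class() {
  awk -v cls="$1" '$2 == cls { print $1 }'
}
```

Usage: `jps -l | pid_of_main_class org.logstash.Logstash` (assumed Logstash 6 main class) or `jps -l | pid_of_main_class org.elasticsearch.bootstrap.Elasticsearch` (assumed Elasticsearch 7 main class).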

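Extras

1. Firewall settings

- Start the firewall

systemctl start firewalld

- Stop the firewall

systemctl stop firewalld

- Check the firewall status

systemctl status firewalld

- Enable the firewall at boot

systemctl enable firewalld

- Disable the firewall at boot

systemctl disable firewalld

- Reload the firewall rules

firewall-cmd --reload

- Open a port (takes effect only after the firewall rules are reloaded)

firewall-cmd --zone=public --add-port=8080/tcp --permanent

- List open ports

firewall-cmd --list-ports

- Close a port (also requires a reload)

firewall-cmd --zone=public --remove-port=8080/tcp --permanent

- Check which process is using a given port

netstat -ntulp | grep <port>

- Install the wget command

yum install wget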
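Several sections above end with "remember to open the port". A minimal sketch that opens a list of TCP ports permanently in the public zone and reloads once at the end; `run_cmd` and `open_ports` are hypothetical helpers, and setting `DRY_RUN=1` prints the `firewall-cmd` commands instead of running them:

```bash
#!/bin/bash
# Hypothetical helper: run a command, or only print it when DRY_RUN=1.
run_cmd() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Hypothetical helper: open each TCP port permanently, then reload the rules once.
open_ports() {
  local port
  for port in "$@"; do
    run_cmd firewall-cmd --zone=public --add-port="${port}/tcp" --permanent || return 1
  done
  run_cmd firewall-cmd --reload
}
```

Usage (as root), with the ports used in this article: `DRY_RUN=1 open_ports 6379 9200 5601 9090 8848 8080` to preview, then the same command without `DRY_RUN=1`, and finally `firewall-cmd --list-ports` to confirm.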
