Setting up ELK (Elasticsearch, Logstash, Kibana)

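Once the basic stack is running, put Kafka between the application and Logstash.

The application sends its log events to Kafka as JSON; Logstash consumes them and stores them in Elasticsearch.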
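To make the payload concrete, here is a minimal sketch of the kind of JSON document that travels through Kafka. The field names (date, level, message, threadName) mirror the SimpleLog class in this post; the `LogJsonSketch` class and its hand-written escaping are assumptions made only for illustration, since the post itself serializes with fastjson.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical helper, not part of the original project: it only illustrates
// the shape of the JSON message that is sent to Kafka.
final class LogJsonSketch {

    // Escape the characters that must not appear raw inside a JSON string.
    static String escape(String s) {
        StringBuilder sb = new StringBuilder();
        for (char c : s.toCharArray()) {
            switch (c) {
                case '"':  sb.append("\\\""); break;
                case '\\': sb.append("\\\\"); break;
                case '\n': sb.append("\\n"); break;
                case '\r': sb.append("\\r"); break;
                case '\t': sb.append("\\t"); break;
                default:
                    if (c < 0x20) sb.append(String.format("\\u%04x", (int) c));
                    else sb.append(c);
            }
        }
        return sb.toString();
    }

    // Serialize a flat map of string fields as one JSON object.
    static String toJson(Map<String, String> fields) {
        StringBuilder sb = new StringBuilder("{");
        boolean first = true;
        for (Map.Entry<String, String> e : fields.entrySet()) {
            if (!first) sb.append(',');
            first = false;
            sb.append('"').append(escape(e.getKey())).append("\":\"")
              .append(escape(e.getValue())).append('"');
        }
        return sb.append('}').toString();
    }

    public static void main(String[] args) {
        Map<String, String> log = new LinkedHashMap<>();
        log.put("date", "2021-09-26 09:13:00");
        log.put("level", "INFO");
        log.put("message", "user \"chz\" connected");
        log.put("threadName", "main");
        System.out.println(toJson(log));
    }
}
```

With the json codec on the Kafka input, Logstash parses each such message into event fields, so date, level, message and threadName end up as separate fields of the Elasticsearch document.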

Logstash configuration

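input {
  kafka {
    # Kafka broker address; separate multiple brokers with commas for a cluster
    bootstrap_servers => "XXXXXXXXX:9092"
    # Topic to subscribe to; it must match the topic that KafkaAppender sends to
    topics => ["test_log"]
    # format => json
    # Parse each Kafka message as JSON
    codec => json {
      charset => "UTF-8"
    }
    consumer_threads => 5
  }
}

filter {
}

output {
  elasticsearch {
    action => "index"
    hosts => "localhost:9200"
    index => "test_log"
  }
}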

In the project, write a custom KafkaAppender class that extends ConsoleAppender.

package chz.cloud.websocket.chat.config;

import ch.qos.logback.classic.spi.ILoggingEvent;
import ch.qos.logback.classic.spi.StackTraceElementProxy;
import ch.qos.logback.core.ConsoleAppender;
import chz.cloud.websocket.chat.entities.SimpleLog;
import com.alibaba.fastjson.JSON;
import org.springframework.kafka.core.DefaultKafkaProducerFactory;
import org.springframework.kafka.core.KafkaTemplate;

import java.text.SimpleDateFormat;
import java.util.Date;
import java.util.HashMap;
import java.util.Map;

/**
 * Logback appender that sends every log event to Kafka as JSON.
 *
 * @Author: chz
 * @Date: 2021/09/26/9:13
 */
public class KafkaAppender extends ConsoleAppender<ILoggingEvent> {

    // Kafka broker address
    private static final String bootstrapServers = "XXXXXXXXXXXXX:9092";

    // Kept as a field so the underlying producer can be closed in stop()
    private DefaultKafkaProducerFactory<String, String> producerFactory;

    // Kafka producer
    private KafkaTemplate<String, String> kafkaTemplate;

    @Override
    public void start() {
        Map<String, Object> props = new HashMap<>();
        props.put("bootstrap.servers", bootstrapServers);
        props.put("key.serializer", "org.apache.kafka.common.serialization.StringSerializer");
        props.put("value.serializer", "org.apache.kafka.common.serialization.StringSerializer");
        producerFactory = new DefaultKafkaProducerFactory<>(props);
        kafkaTemplate = new KafkaTemplate<>(producerFactory);
        // Connection test message
        kafkaTemplate.send("test", "user", "connection test");
        // Mark the appender as started only after the producer exists,
        // so append() never sees a null kafkaTemplate
        super.start();
    }

    @Override
    public void stop() {
        super.stop();
        // Close the Kafka producer created by the factory
        if (producerFactory != null) {
            producerFactory.destroy();
        }
    }

    @Override
    protected void append(ILoggingEvent eventObject) {
        // Build a fresh log object per event: a shared field is not thread-safe
        // and would carry an old stack trace over to later events
        SimpleLog simpleLog = new SimpleLog();
        // Formatted timestamp
        SimpleDateFormat sdf = new SimpleDateFormat("yyyy-MM-dd HH:mm:ss");
        String dateStr = sdf.format(new Date(eventObject.getTimeStamp()));
        // Log level
        String levStr = eventObject.getLevel().toString();
        // Log message
        String formattedMessage = eventObject.getFormattedMessage();
        if (eventObject.getThrowableProxy() != null) {
            // Stack trace of the logged exception
            StackTraceElementProxy[] stackTraceElementProxyArray =
                    eventObject.getThrowableProxy().getStackTraceElementProxyArray();
            simpleLog.setStackTraceElementProxyArray(stackTraceElementProxyArray);
        }
        // Thread name
        simpleLog.setThreadName(eventObject.getThreadName());
        simpleLog.setDate(dateStr);
        simpleLog.setLevel(levStr);
        simpleLog.setMessage(formattedMessage);
        // Send the log record as JSON
        kafkaTemplate.send("test_log", "user", JSON.toJSONString(simpleLog));
    }
}
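The appender above builds a new SimpleDateFormat for every log event. As a possible alternative, here is a small sketch that formats the timestamp with java.time, whose DateTimeFormatter is immutable and thread-safe and can therefore be shared. The `LogTimestamps` class and the explicit zone parameter are assumptions for illustration, not part of the original project.

```java
import java.time.Instant;
import java.time.ZoneId;
import java.time.ZoneOffset;
import java.time.format.DateTimeFormatter;

// Hypothetical helper, not part of the original project.
final class LogTimestamps {

    // DateTimeFormatter is immutable and thread-safe, so one shared instance is enough.
    private static final DateTimeFormatter PATTERN =
            DateTimeFormatter.ofPattern("yyyy-MM-dd HH:mm:ss");

    // Format epoch milliseconds (the unit of ILoggingEvent.getTimeStamp()) in the given zone.
    static String format(long epochMillis, ZoneId zone) {
        return PATTERN.format(Instant.ofEpochMilli(epochMillis).atZone(zone));
    }

    public static void main(String[] args) {
        System.out.println(format(System.currentTimeMillis(), ZoneId.systemDefault()));
        System.out.println(format(0L, ZoneOffset.UTC)); // prints 1970-01-01 00:00:00
    }
}
```

Inside append() this would replace the SimpleDateFormat lines with a single call such as `LogTimestamps.format(eventObject.getTimeStamp(), ZoneId.systemDefault())`.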

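Register the appender in logback.xml. The springProperty tag used here is a Spring Boot extension, for which Spring Boot recommends naming the file logback-spring.xml.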

<?xml version="1.0" encoding="UTF-8" ?>
<!-- Logs are written to the console and sent to Kafka -->
<configuration>
    <include resource="org/springframework/boot/logging/logback/defaults.xml"/>
    <springProperty scope="context" name="springAppName" source="spring.application.name"/>
    <!-- Log output location within the project -->
    <property name="LOG_FILE" value="${BUILD_FOLDER:-build}/${springAppName}"/>
    <!-- Console log output pattern -->
    <property name="CONSOLE_LOG_PATTERN" value="${CONSOLE_LOG_PATTERN:-%clr(%d{yyyy-MM-dd HH:mm:ss.SSS}){faint} %clr(${LOG_LEVEL_PATTERN:-%5p}) %clr(${PID:- }){magenta} %clr(---){faint} %clr([%15.15t]){faint} %clr(%-40.40logger{39}){cyan} %clr(:){faint} %m%n${LOG_EXCEPTION_CONVERSION_WORD:-%wEx}}"/>
    <!-- Console output -->
    <appender name="console" class="ch.qos.logback.core.ConsoleAppender">
        <filter class="ch.qos.logback.classic.filter.ThresholdFilter">
            <level>INFO</level>
        </filter>
        <!-- Log output encoding -->
        <encoder>
            <pattern>${CONSOLE_LOG_PATTERN}</pattern>
            <charset>utf8</charset>
        </encoder>
    </appender>
    <!-- Remote logging through Logstash (disabled) -->
    <!-- <appender name="logstash" class="net.logstash.logback.appender.LogstashTcpSocketAppender">-->
    <!-- <destination>localhost:4560</destination>-->
    <!-- <encoder charset="UTF-8" class="net.logstash.logback.encoder.LogstashEncoder"/>-->
    <!-- </appender>-->
    <!-- The encoder is needed because KafkaAppender extends ConsoleAppender, which does not start without one -->
    <appender name="KAFKA" class="chz.cloud.websocket.chat.config.KafkaAppender">
        <encoder>
            <pattern>${CONSOLE_LOG_PATTERN}</pattern>
            <charset>utf8</charset>
        </encoder>
    </appender>
    <!-- Log output level. There is only one root logger, so both appenders are attached in a single root element -->
    <root level="INFO">
        <appender-ref ref="console"/>
        <!--<appender-ref ref="logstash"/>-->
        <appender-ref ref="KAFKA"/>
    </root>
</configuration>
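Because the KAFKA appender is attached to the root logger, log statements issued by the Kafka client while it sends a message are routed to the same appender. One common precaution is a per-thread re-entrancy guard that drops such nested calls. The sketch below shows the idea with the JDK only: `GuardedSink` is a hypothetical class and the `Consumer<String>` stands in for the Kafka send; in the real appender the guard would wrap the body of append(). It only prevents recursion on the same thread.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

// Hypothetical illustration of a re-entrancy guard, not part of the original project.
final class GuardedSink {

    // True while the current thread is already inside deliver().
    private final ThreadLocal<Boolean> busy = ThreadLocal.withInitial(() -> Boolean.FALSE);
    private final Consumer<String> delegate;

    GuardedSink(Consumer<String> delegate) {
        this.delegate = delegate;
    }

    // Forward the message unless this thread is already delivering one.
    // Returns false when a nested call was dropped.
    boolean deliver(String message) {
        if (busy.get()) {
            return false;
        }
        busy.set(Boolean.TRUE);
        try {
            delegate.accept(message);
            return true;
        } finally {
            busy.set(Boolean.FALSE);
        }
    }

    public static void main(String[] args) {
        List<String> sent = new ArrayList<>();
        GuardedSink[] holder = new GuardedSink[1];
        holder[0] = new GuardedSink(msg -> {
            sent.add(msg);
            // Simulates the client library logging while it sends.
            holder[0].deliver("nested: " + msg);
        });
        holder[0].deliver("hello");
        System.out.println(sent); // prints [hello]
    }
}
```

Another option, not shown here, is to give the org.apache.kafka loggers their own logger element in the configuration so that their output does not reach the KAFKA appender at all.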

Custom log class SimpleLog

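package chz.cloud.websocket.chat.entities;

import ch.qos.logback.classic.spi.StackTraceElementProxy;
import lombok.Data;

/**
 * Log record that KafkaAppender serializes to JSON and sends to Kafka.
 *
 * @Author: chz
 * @Date: 2021/09/26/14:08
 */
@Data
public class SimpleLog {
    // Formatted timestamp (yyyy-MM-dd HH:mm:ss)
    private String date;
    // Log level
    private String level;
    // Formatted log message
    private String message;
    // Stack trace of the logged exception, if any
    private StackTraceElementProxy[] stackTraceElementProxyArray;
    // Name of the thread that logged the event
    private String threadName;
}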
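SimpleLog stores the raw StackTraceElementProxy[] array, so a JSON serializer writes one nested object per stack frame. If a flatter document is preferred, the frames can be stored as plain strings with an upper bound on their number. The following sketch works on a plain java.lang.Throwable rather than on logback's proxy types so that it runs with the JDK alone; the `StackTraceSketch` class, the `toLines` method and the `maxFrames` parameter are hypothetical names.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper, not part of the original project.
final class StackTraceSketch {

    // Return the exception header followed by at most maxFrames "at ..." lines.
    static List<String> toLines(Throwable t, int maxFrames) {
        List<String> lines = new ArrayList<>();
        lines.add(t.toString());
        StackTraceElement[] frames = t.getStackTrace();
        int shown = Math.min(maxFrames, frames.length);
        for (int i = 0; i < shown; i++) {
            lines.add("at " + frames[i]);
        }
        // Note how many frames were cut off, similar to a printed stack trace.
        if (frames.length > shown) {
            lines.add("... " + (frames.length - shown) + " more");
        }
        return lines;
    }

    public static void main(String[] args) {
        toLines(new IllegalStateException("demo failure"), 3).forEach(System.out::println);
    }
}
```

A field of type `List<String>` in SimpleLog would then end up as a simple array of strings in the Elasticsearch document.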

POM file

<dependencies>
    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-data-elasticsearch</artifactId>
    </dependency>
    <dependency>
        <groupId>org.springframework.kafka</groupId>
        <artifactId>spring-kafka</artifactId>
    </dependency>
    <dependency>
        <groupId>org.projectlombok</groupId>
        <artifactId>lombok</artifactId>
    </dependency>
    <dependency>
        <groupId>net.logstash.logback</groupId>
        <artifactId>logstash-logback-encoder</artifactId>
        <version>6.6</version>
    </dependency>
    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-amqp</artifactId>
    </dependency>
    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-web</artifactId>
    </dependency>
    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-websocket</artifactId>
    </dependency>
    <dependency>
        <groupId>com.alibaba</groupId>
        <artifactId>fastjson</artifactId>
        <version>1.2.31</version>
    </dependency>
    <dependency>
        <groupId>org.apache.commons</groupId>
        <artifactId>commons-lang3</artifactId>
    </dependency>
    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-test</artifactId>
        <scope>test</scope>
    </dependency>
</dependencies>

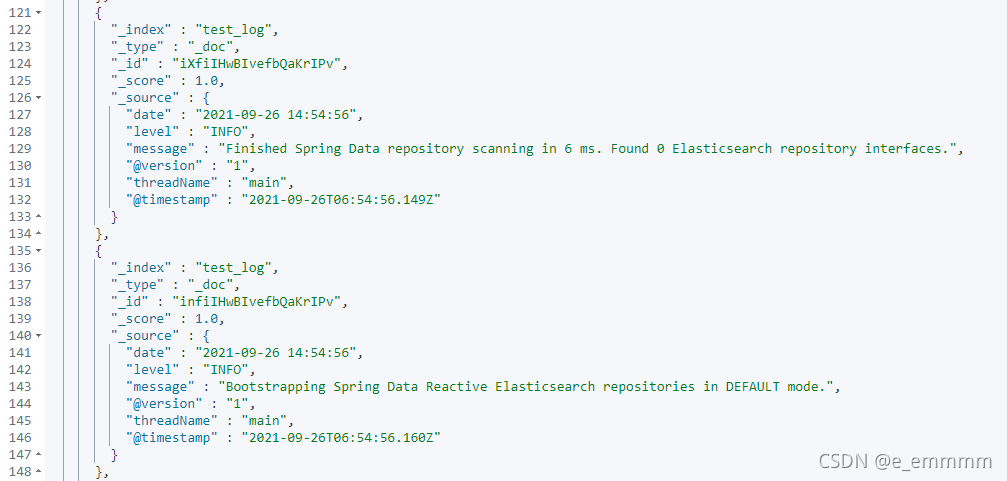

Information stored in ES

Error information
