Prerequisites

Hadoop 2.7.3 and Hive 2.3.6 are installed on Linux.

Steps

(1) Add the following configuration to Hadoop's core-site.xml:

<property>

<name>hadoop.proxyuser.hadoop.hosts</name>

<value>*</value>

</property>

<property>

<name>hadoop.proxyuser.hadoop.groups</name>

<value>*</value>

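</property>

Note:

In hadoop.proxyuser.<username>.hosts and hadoop.proxyuser.<username>.groups, replace <username> with your Linux user name (the account that runs HiveServer2). For example, if the Linux user is hadoop, replace <username> with hadoop.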
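To make the naming rule concrete, here is a minimal Java sketch that derives both keys from a Linux user name. The class name `ProxyUserKeys` and the example user `hive` are hypothetical and do not come from the setup above; the sketch only builds strings and does not call any Hadoop API.

```java
import java.util.LinkedHashMap;
import java.util.Map;

final class ProxyUserKeys {

    // Builds the two proxy-user keys for the given Linux user name,
    // each mapped to the wildcard value "*" used in this article.
    static Map<String, String> forUser(String linuxUser) {
        if (linuxUser == null || linuxUser.isEmpty()) {
            throw new IllegalArgumentException("linuxUser must not be empty");
        }
        Map<String, String> props = new LinkedHashMap<>();
        props.put("hadoop.proxyuser." + linuxUser + ".hosts", "*");
        props.put("hadoop.proxyuser." + linuxUser + ".groups", "*");
        return props;
    }

    public static void main(String[] args) {
        // "hive" is only an illustrative user name.
        forUser("hive").forEach((key, value) -> System.out.println(key + " = " + value));
        // prints:
        // hadoop.proxyuser.hive.hosts = *
        // hadoop.proxyuser.hive.groups = *
    }
}
```

The wildcard value allows impersonation from any host and for any group, which is convenient on a test cluster; a narrower value is probably preferable elsewhere.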

(2) Start Hadoop

start-all.sh

(3) Start the HiveServer2 service

hiveserver2

The current terminal now blocks; do not close it. Open another terminal and run jps; a RunJar process should be listed.

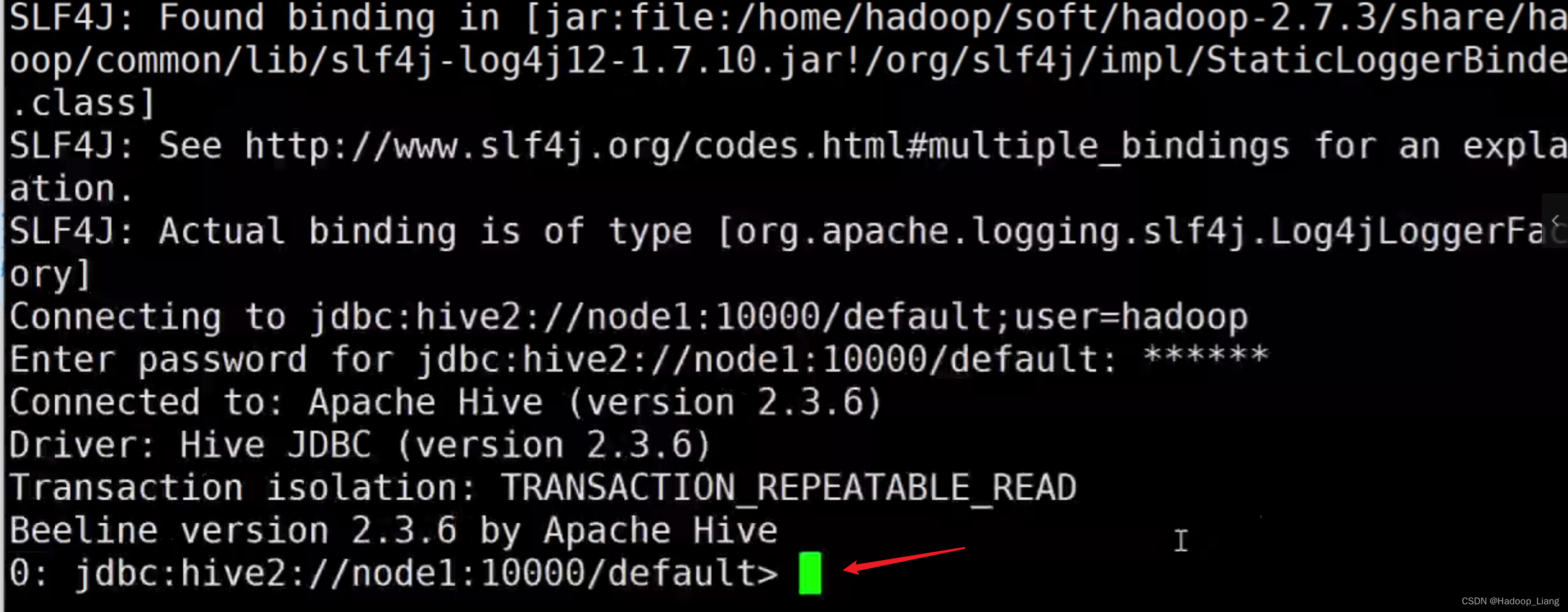

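(4) In the new terminal, use beeline to connect to HiveServer2 over JDBC

beeline -u jdbc:hive2://node1:10000/default -n hadoop -p

-u is the JDBC URL of HiveServer2

-n is the user name of the Linux account on the machine where Hive runs

-p is the login password of that Linux account

Run the command and enter the password when prompted. The prompt marked by the arrow below indicates a successful connection.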

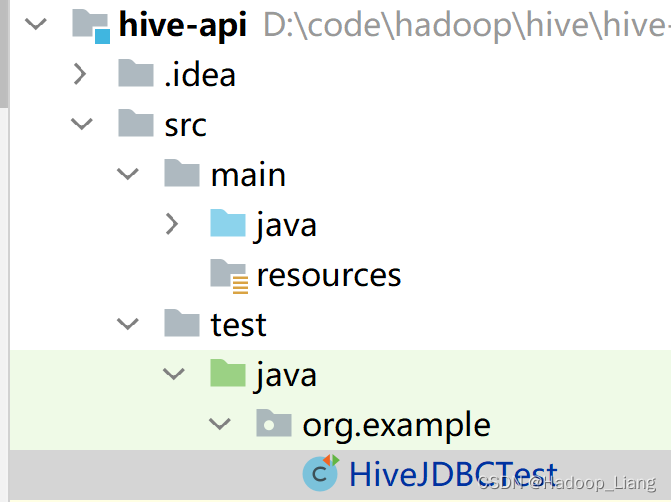
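The URL passed to -u has the shape jdbc:hive2://<host>:<port>/<database>, and the same string is reused later in the Java code. As a small sketch, the helper below assembles such a URL and rejects obviously invalid input. `HiveUrl` and `build` are illustrative names, not part of hive-jdbc.

```java
final class HiveUrl {

    // Assembles a HiveServer2 JDBC URL of the form jdbc:hive2://host:port/database.
    static String build(String host, int port, String database) {
        if (host == null || host.isEmpty()) {
            throw new IllegalArgumentException("host must not be empty");
        }
        if (port < 1 || port > 65535) {
            throw new IllegalArgumentException("port out of range: " + port);
        }
        if (database == null || database.isEmpty()) {
            throw new IllegalArgumentException("database must not be empty");
        }
        return "jdbc:hive2://" + host + ":" + port + "/" + database;
    }

    public static void main(String[] args) {
        // Host, port and database are the values used in this article.
        System.out.println(build("node1", 10000, "default"));
        // prints: jdbc:hive2://node1:10000/default
    }
}
```

Building the URL in one place makes a misspelled database name easier to spot than in a hand-typed command line.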

(5) Write the code

Create a new Maven project, for example named hive-api.

Add the following dependencies to pom.xml:

<dependencies>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-common</artifactId>

<version>2.7.3</version>

</dependency>

<dependency>

<groupId>org.apache.hive</groupId>

<artifactId>hive-jdbc</artifactId>

<version>2.3.6</version>

</dependency>

<dependency>

<groupId>junit</groupId>

<artifactId>junit</artifactId>

<version>4.13.2</version>

<scope>test</scope>

</dependency>

</dependencies>

Create HiveJDBCTest.java in the org.example package under src\test\java.

The code is as follows:

package org.example;

import org.junit.After;

import org.junit.Before;

import org.junit.Test;

import java.sql.*;

public class HiveJDBCTest {

private static String driverName="org.apache.hive.jdbc.HiveDriver";// JDBC driver class name

private static String url = "jdbc:hive2://node1:10000/default";// connect to Hive's default database

private static String user = "hadoop";// Linux user name on the Hive machine

private static String password = "hadoop";// password of that Linux user

private static Connection conn = null;// connection

private static Statement stmt = null;// statement

private static ResultSet rs = null;// result set

@Before

public void init() throws ClassNotFoundException, SQLException {

Class.forName(driverName);

conn = DriverManager.getConnection(url, user, password);

stmt = conn.createStatement();

}

/**

* Create a database

* @throws SQLException

*/

@Test

public void createDatabase() throws SQLException {

String sql = "create database testdb1912";

System.out.println("Running: " + sql);

stmt.execute(sql);

}

/**

* List all databases

* @throws SQLException

*/

@Test

public void showDatabases() throws SQLException {

String sql = "show databases";

System.out.println("Running: " + sql);

rs = stmt.executeQuery(sql);

while (rs.next()){

System.out.println(rs.getString(1));// column indexes start at 1; read the first column

// System.out.println("========");

// System.out.println(rs.getString(2));// fails with "Invalid columnIndex: 2" because there is no second column

}

}

/**

* Create a table

* @throws SQLException

*/

@Test

public void createTable() throws SQLException {

String sql = "create table dept_api1(deptno int, dname string, loc string)" +

" row format delimited fields terminated by ','";

System.out.println("Running:"+sql);

stmt.execute(sql);

}

@Test

public void showTables() throws SQLException {

String sql = "show tables";

System.out.println("Running:"+sql);

rs = stmt.executeQuery(sql);

while (rs.next()){

System.out.println(rs.getString(1));

}

}

/**

* Show the table structure

* @throws SQLException

*/

@Test

public void descTable() throws SQLException {

String sql = "desc dept_api1";

System.out.println("Running:"+sql);

rs = stmt.executeQuery(sql);

while (rs.next()){

// table structure: column name, column type

System.out.println(rs.getString(1)+"\t"+rs.getString(2));

}

}

/**

* Load data

* @throws SQLException

*/

@Test

public void loadData() throws SQLException {

// String filePath = "D:/test/inputemp/dept.csv";

// String sql = "load data local inpath 'D:/test/inputemp/dept.csv' overwrite into table dept_api";

// String sql = "LOAD DATA LOCAL INPATH 'D:\\test\\inputemp\\dept.csv' " + "OVERWRITE INTO TABLE dept_api";

// Note: this code acts as a client of HiveServer2, and HiveServer2 runs on Linux.

// The path must point to a local file on that Linux machine; a Windows path causes an error.

String sql = "LOAD DATA LOCAL INPATH '/home/hadoop/dept.csv' " + "OVERWRITE INTO TABLE dept_api1";

System.out.println("Running:"+sql);

stmt.execute(sql);

}

/**

* Count the records

* @throws SQLException

*/

@Test

public void countData() throws SQLException {

String sql = "select count(1) from dept_api1";

System.out.println("Running:"+sql);

rs = stmt.executeQuery(sql);

while (rs.next()){

System.out.println(rs.getInt(1));

}

}

/**

* Drop a database

* @throws SQLException

*/

@Test

public void dropDB() throws SQLException {

String sql = "drop database if exists testdb1";

System.out.println("Running:"+sql);

stmt.execute(sql);

}

/**

* Drop a table

* @throws SQLException

*/

@Test

public void deleteTable() throws SQLException {

String sql = "drop table if exists dept_api1";

System.out.println("Running:"+sql);

stmt.execute(sql);

}

@After

public void destroy() throws SQLException {

if(rs !=null){

rs.close();

}

if(stmt != null){

stmt.close();

}

if(conn !=null){

conn.close();

}

}

}

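Run the unit tests one at a time. Some tests depend on others: for example, loadData and countData need the table that createTable creates, so run them in that order.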

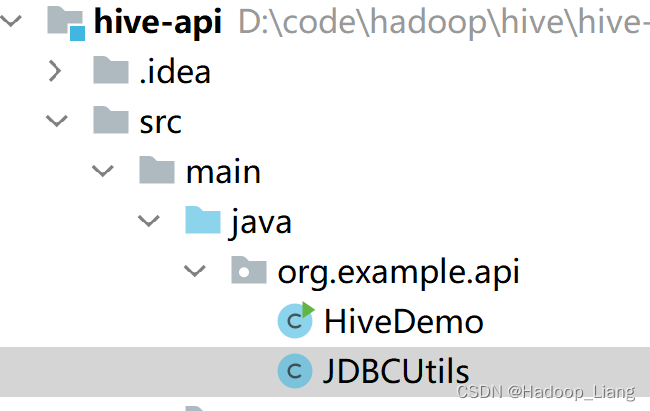
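As the comments in loadData point out, a Windows-style path fails because the file is looked up on the HiveServer2 machine. One possible guard, sketched below, is to reject such paths before the statement is sent. `LoadDataSql` and its methods are hypothetical helpers, and the path check is only a heuristic (a drive-letter prefix or any backslash).

```java
final class LoadDataSql {

    // Heuristic: a drive letter such as "D:/" or "D:\", or any backslash, suggests a Windows path.
    static boolean looksLikeWindowsPath(String path) {
        return path.matches("[A-Za-z]:[\\\\/].*") || path.contains("\\");
    }

    // Builds a LOAD DATA LOCAL statement for a file that lives on the HiveServer2 host.
    static String loadLocalOverwrite(String serverLocalPath, String table) {
        if (looksLikeWindowsPath(serverLocalPath)) {
            throw new IllegalArgumentException(
                    "expected a path on the HiveServer2 host, got: " + serverLocalPath);
        }
        if (serverLocalPath.contains("'")) {
            throw new IllegalArgumentException("path must not contain a single quote");
        }
        if (!table.matches("[A-Za-z_][A-Za-z0-9_.]*")) {
            throw new IllegalArgumentException("unexpected table name: " + table);
        }
        return "LOAD DATA LOCAL INPATH '" + serverLocalPath + "' OVERWRITE INTO TABLE " + table;
    }

    public static void main(String[] args) {
        // Path and table name are the ones used in the loadData test.
        System.out.println(loadLocalOverwrite("/home/hadoop/dept.csv", "dept_api1"));
        // prints: LOAD DATA LOCAL INPATH '/home/hadoop/dept.csv' OVERWRITE INTO TABLE dept_api1
    }
}
```

The resulting string could be passed to stmt.execute(sql) exactly as in loadData; the guard simply fails earlier and with a clearer message.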

More code:

JDBCUtils.java

package org.example.api;

import java.sql.*;

public class JDBCUtils {

// Hive JDBC driver class

private static String driver = "org.apache.hive.jdbc.HiveDriver";

// Hive JDBC URL

// private static String url = "jdbc:hive2://192.168.193.140:10000/default";

private static String url = "jdbc:hive2://node1:10000/default";

// Register the JDBC driver

static{

try {

Class.forName(driver);

} catch (ClassNotFoundException e) {

e.printStackTrace();

}

}

// Get a connection to Hive

public static Connection getConnection(){

try {

return DriverManager.getConnection(url,"hadoop","hadoop");

// return DriverManager.getConnection(url);

} catch (SQLException throwables) {

throwables.printStackTrace();

}

return null;

}

// Release resources

public static void release(Connection conn, Statement st, ResultSet rs){

if(rs != null){

try {

rs.close();

} catch (SQLException throwables) {

throwables.printStackTrace();

} finally {

rs = null;

}

}

if(st != null){

try {

st.close();

} catch (SQLException throwables) {

throwables.printStackTrace();

} finally {

st = null;

}

}

if(conn != null){

try {

conn.close();

} catch (SQLException throwables) {

throwables.printStackTrace();

} finally {

conn = null;

}

}

}

}
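The release method repeats the same null check and try/catch three times. Since Connection, Statement and ResultSet all extend AutoCloseable, one possible simplification is a small varargs helper. This is only a sketch: `Closer` and `closeQuietly` are hypothetical names, not part of the code above.

```java
final class Closer {

    // Closes each non-null resource in the order given and keeps going
    // if one of them fails. Returns the number of failed close() calls.
    static int closeQuietly(AutoCloseable... resources) {
        int failures = 0;
        for (AutoCloseable resource : resources) {
            if (resource == null) {
                continue; // nothing was opened for this slot
            }
            try {
                resource.close();
            } catch (Exception e) {
                failures++;
                System.err.println("close failed: " + e.getMessage());
            }
        }
        return failures;
    }
}
```

With such a helper, the cleanup in a finally block could be written as Closer.closeQuietly(rs, st, conn), passing the result set first so that resources are closed in the reverse order of creation.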

HiveDemo.java

package org.example.api;

import java.sql.Connection;

import java.sql.ResultSet;

import java.sql.SQLException;

import java.sql.Statement;

public class HiveDemo {

public static void main(String[] args) {

// String sql = "select * from emp1";

String sql = "create database testdb1";

Connection conn = null;

Statement st = null;

ResultSet rs = null;

try {

conn = JDBCUtils.getConnection();

st = conn.createStatement();

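// execute() handles both DDL and queries; executeQuery() fails on DDL because no result set is produced

boolean hasResultSet = st.execute(sql);

if (hasResultSet) {

rs = st.getResultSet();

while(rs.next()){

String ename = rs.getString("ename");

double sal = rs.getDouble("sal");

System.out.println(ename + "\t" + sal);

}

}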

} catch (SQLException throwables) {

throwables.printStackTrace();

} finally {

JDBCUtils.release(conn,st,rs);

}

}

}

Done! Enjoy it!
