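Game Industry Data Warehouse: User Behavior Data Warehouse

1. Basic Concepts of the Data Warehouse

1. What is a data warehouse

In Building the Data Warehouse, W. H. Inmon defines a data warehouse as follows:

A data warehouse is a

subject-oriented,

integrated,

nonvolatile,

time-variant

collection of data in support of management's decisions.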

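Subject-oriented

Data in an operational database is organized around transaction-processing tasks, and the individual business systems are isolated from one another. Data in a data warehouse is organized by subject area.

A subject is an abstract concept: it is the standard by which data is classified, and it names the aspects users care most about when they use the warehouse to make decisions. One subject usually relates to several operational information systems, and each subject roughly corresponds to one high-level area of analysis.

For example, one subject in a bank's data warehouse is the customer.

Customer data is extracted and consolidated from several databases, such as the bank's savings, credit card, and loan databases. The customer information in these sources may or may not agree, so it has to be reconciled before it gives a complete picture of the customer.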

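Integrated

Transaction-oriented operational databases are usually tied to specific applications, are independent of one another, and are often heterogeneous. Data in a data warehouse is obtained by extracting and cleaning data from these scattered source databases and then systematically processing, summarizing, and organizing it. Inconsistencies in the source data must be eliminated so that the warehouse holds consistent, global information about the whole enterprise.

Specifically:

1: After data enters the data warehouse and before it is used, it must be processed and integrated.

2: Data structures and encodings from different sources are unified. All conflicts in the raw data are resolved, such as fields with the same name but different meanings, different names but the same meaning, inconsistent units, and inconsistent field lengths.

3: The raw data structures are reorganized from application-oriented to subject-oriented.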
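The encoding unification described in point 2 can be illustrated with a small sketch. It assumes three hypothetical source systems that encode gender as "M"/"F", "male"/"female", and "1"/"0"; the class name, the mapping table, and the "-1" code for unknown values are all assumptions made for illustration.

```java
import java.util.HashMap;
import java.util.Locale;
import java.util.Map;

// Hypothetical helper: maps source-specific gender encodings to one warehouse code.
class GenderCodeUnifier {
    // Unified codes (assumed): "1" = male, "0" = female, "-1" = unknown.
    private static final Map<String, String> MAPPING = new HashMap<>();
    static {
        MAPPING.put("m", "1");
        MAPPING.put("male", "1");
        MAPPING.put("1", "1");
        MAPPING.put("f", "0");
        MAPPING.put("female", "0");
        MAPPING.put("0", "0");
    }

    // Normalizes whitespace and case, then looks up the unified code.
    static String unify(String raw) {
        if (raw == null) {
            return "-1";
        }
        String key = raw.trim().toLowerCase(Locale.ROOT);
        return MAPPING.getOrDefault(key, "-1");
    }
}
```

Values that no mapping covers fall back to the unknown code, so they can be counted and investigated rather than silently dropped.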

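Nonvolatile (relatively stable)

Data in an operational database is usually updated in real time and changes whenever the business requires it. Data in a data warehouse mainly serves decision analysis, so the dominant operation is querying. Once a piece of data enters the warehouse it is normally kept for a long time. A warehouse therefore sees many queries but very few updates or deletes, and usually only needs periodic loading and refreshing.

A data warehouse contains a large amount of historical data.

Once data has been integrated into the warehouse, it is rarely or never updated.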

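Time-variant (reflects historical change)

An operational database mainly cares about data for the current period. Data in a data warehouse usually includes history: the system records information for every stage from some point in the past (for example, when the warehouse went into use) up to the present. With this information the enterprise can quantitatively analyze its development so far and forecast future trends.

Building an enterprise data warehouse rests on the existing business systems and the large volume of business data they have accumulated. A data warehouse is not a static concept. Information is only useful and meaningful when it reaches the people who need it in time for them to make decisions that improve the business. Organizing, summarizing, and restructuring information and delivering it promptly to the relevant decision makers is the fundamental task of a data warehouse. From an industry point of view, therefore, building a data warehouse is an engineering project and an ongoing process.

Data in a warehouse is typically retained for 5 to 10 years or more, and may never be deleted. The keys of this data all contain a time element that marks the historical period of the data, which makes time-trend analysis easier.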

2. Evolution of the Data Warehouse

3. Databases Versus Data Warehouses

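4. Main Roles of the Data Warehouse

1. Supporting data extraction

Data extraction serves the data requests coming from every business department in the enterprise.

Previously, each business department sent its requests to a different business system. Now all departments send their data requests to the data warehouse.

2. Supporting the reporting system

Built on the enterprise data warehouse, the reporting layer serves the statistical reporting needs of each department and supports day-to-day operational decisions.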

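3. Supporting data analysis (BI)

BI (business intelligence) is a solution with the following steps:

Useful data is pulled from the enterprise's many business systems and cleaned to ensure it is correct. It then goes through extraction, transformation, and loading (the ETL process) and is merged into an enterprise-level data warehouse, which yields a global view of the enterprise's data.

On top of that, suitable query and analysis tools, data mining tools, OLAP tools, and so on are used to analyze and process the data. At this point information becomes knowledge that supports decisions.

Finally, the knowledge is presented to managers to support their decision making.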
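The ETL flow described above can be sketched in a few lines. This is a toy, in-memory illustration rather than a real pipeline: the class name, the "user_id,amount" row format, and the sample rows are all assumptions, and a production job would read from the source systems and write to warehouse tables.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical in-memory ETL: rows are "user_id,amount" strings.
class MiniEtl {
    // Extract: stand-in for reading rows from source systems.
    static List<String> extract() {
        List<String> rows = new ArrayList<>();
        rows.add("1001, 30.5");
        rows.add("1002,abc");    // dirty row: amount is not numeric
        rows.add(" 1001 ,19.5");
        rows.add("");            // dirty row: empty
        return rows;
    }

    // Transform: trim fields and drop rows that cannot be parsed.
    static List<String[]> transform(List<String> rows) {
        List<String[]> clean = new ArrayList<>();
        for (String row : rows) {
            String[] parts = row.split(",");
            if (parts.length != 2) {
                continue;
            }
            String userId = parts[0].trim();
            String amount = parts[1].trim();
            try {
                Integer.parseInt(userId);
                Double.parseDouble(amount);
            } catch (NumberFormatException e) {
                continue; // skip unparseable rows
            }
            clean.add(new String[] {userId, amount});
        }
        return clean;
    }

    // Load: aggregate the cleaned rows into a per-user total.
    static Map<Integer, Double> load(List<String[]> clean) {
        Map<Integer, Double> totals = new LinkedHashMap<>();
        for (String[] r : clean) {
            totals.merge(Integer.parseInt(r[0]), Double.parseDouble(r[1]), Double::sum);
        }
        return totals;
    }
}
```

Calling `MiniEtl.load(MiniEtl.transform(MiniEtl.extract()))` keeps only the two valid rows for user 1001 and sums their amounts.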

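4. Supporting data mining

Data mining, also known as Knowledge Discovery in Databases (KDD), applies advanced intelligent computing techniques to large volumes of data so that computers, with or without human guidance, discover latent, useful patterns (also called knowledge) in massive data sets.

In Data Mining: Concepts and Techniques, Jiawei Han defines data mining as the process of discovering interesting patterns and knowledge from large amounts of data. The data sources include databases, data warehouses, the Web, other information repositories, and data that streams into the system dynamically.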

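5. Supporting data applications

Telecom: tourist flow analysis and crowd profiling based on location data

Telecom: crowd monitoring and early warning based on location data

Banking: financial profiling based on user transaction data

E-commerce: user tagging systems and recommender systems based on browsing and purchase behavior

Credit bureaus: credit assessment based on users' credit records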

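5. Data Warehouse Architecture Design

After many years of development, data warehouse technology and architecture are mature and stable across the industry, both in the overall warehouse architecture and in the choice of technology stack. The architecture below is one such stable data warehouse design.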

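2. Background on the Game Industry

2.1 Types of game companies

Game companies on the market today fall mainly into two categories:

game development and game operation.

Development companies build games themselves. They have their own game designers and an art team (concept artists and art designers who design scenes and characters, 3D modelers, and rendering artists), plus programmers, that is, game development engineers.

For example, 《泡泡堂》 (Crazy Arcade) was developed by the Korean game company NEXON and published in China by Shanda (盛大). Shanda later funded the 启航工作室 studio, which developed 《龙之谷手游》 (Dragon Nest Mobile). Another example is 天美工作室 (TiMi Studio), which developed 《QQ飞车》 and 《逆战》, both operated by Tencent.

Teams like these usually exist as studios rather than as limited liability companies. They have no operations or customer service teams of their own, so they typically sell their games to companies that specialize in operations. The operating company handles running the game, customer service, player services, and in-game event planning. The two sides then share revenue based on the gross receipts (流水), meaning the money players pay to top up.

These receipts are not split only between the developer and the operator. Third-party channels also take a share. If a game is downloaded through Apple's App Store, Apple takes a cut; if it comes through Tencent's 应用宝 (MyApp) store, Tencent takes a cut.

For this reason, the channel is a very important dimension in data analysis and data warehouse design for the game industry.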
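To make the three-way split concrete, here is a minimal sketch of how gross receipts might be divided among channel, developer, and operator. The class name and both rates are illustrative assumptions, not figures from any real contract or from the source.

```java
import java.math.BigDecimal;
import java.math.RoundingMode;

// Hypothetical revenue split; all rates are assumptions for illustration.
class RevenueShare {
    // Assumed share taken by the distribution channel.
    static final BigDecimal CHANNEL_RATE = new BigDecimal("0.30");
    // Assumed share of the remainder that goes to the developer.
    static final BigDecimal DEVELOPER_RATE_OF_REST = new BigDecimal("0.50");

    // Returns {channel, developer, operator} amounts for a gross top-up amount.
    static BigDecimal[] split(BigDecimal gross) {
        BigDecimal channel = gross.multiply(CHANNEL_RATE).setScale(2, RoundingMode.HALF_UP);
        BigDecimal rest = gross.subtract(channel);
        BigDecimal developer = rest.multiply(DEVELOPER_RATE_OF_REST).setScale(2, RoundingMode.HALF_UP);
        // The operator receives the remainder, so the three shares always sum to gross.
        BigDecimal operator = rest.subtract(developer);
        return new BigDecimal[] {channel, developer, operator};
    }
}
```

Because the channel determines the first deduction, the same top-up amount yields different net revenue per channel, which is why channel ends up as a dimension in the warehouse model.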

2.2 Architecture diagram of the system for logs generated by the running website

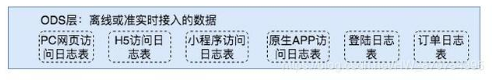

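Here we mainly collect user event-tracking logs together with data from the business databases, and build a unified data warehouse from both.

3. Generating Business Database Data

To simulate realistic data, we use a Java program to generate data and store it in the database. The business tables involved are introduced below.

1. Preparing the database tables

1 Create the MySQL database

CREATE DATABASE /*!32312 IF NOT EXISTS*/ jiuyou_game /*!40100 DEFAULT CHARACTER SET utf8 */;

USE jiuyou_game;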

2 Article detail table

DROP TABLE IF EXISTS article_detail;
CREATE TABLE article_detail (
  article_id int(64) NOT NULL AUTO_INCREMENT,
  article_name varchar(256) DEFAULT NULL COMMENT 'Article title',
  article_little_content text COMMENT 'Short summary',
  article_content text COMMENT 'Article body',
  article_time datetime DEFAULT NULL COMMENT 'Article publish time',
  jiuyou_show_time datetime DEFAULT NULL COMMENT 'Time shown on Jiuyou',
  article_author_name varchar(128) DEFAULT NULL COMMENT 'Author name',
  article_user_id int(32) DEFAULT NULL COMMENT 'Author user id',
  article_status varchar(16) DEFAULT NULL COMMENT 'Article status 1: displayed 2: taken down 3: deleted',
  article_create_time datetime DEFAULT NULL COMMENT 'Row creation time',
  article_update_time datetime DEFAULT NULL COMMENT 'Row update time',
  first_class varchar(256) DEFAULT NULL COMMENT 'Level-1 category',
  first_class_url varchar(256) DEFAULT NULL COMMENT 'Level-1 category URL',
  second_class varchar(256) DEFAULT NULL COMMENT 'Level-2 category',
  second_class_url varchar(256) DEFAULT NULL COMMENT 'Level-2 category URL',
  third_class varchar(256) DEFAULT NULL COMMENT 'Level-3 category',
  third_class_url varchar(256) DEFAULT NULL COMMENT 'Level-3 category URL',
  fourth_class varchar(256) DEFAULT NULL COMMENT 'Level-4 category',
  fourth_class_url varchar(256) DEFAULT NULL COMMENT 'Level-4 category URL',
  article_url varchar(256) DEFAULT NULL COMMENT 'Article request URL',
  PRIMARY KEY (article_id)
) ENGINE=InnoDB AUTO_INCREMENT=2538691 DEFAULT CHARSET=utf8;

3 Common dictionary table

DROP TABLE IF EXISTS common_base;
CREATE TABLE common_base (
  id int(16) NOT NULL AUTO_INCREMENT COMMENT 'Primary key id',
  type_name varchar(32) DEFAULT NULL COMMENT 'Category name',
  type_value varchar(32) DEFAULT NULL COMMENT 'Value for the category',
  type_createtime datetime DEFAULT CURRENT_TIMESTAMP COMMENT 'Creation time',
  type_status varchar(8) DEFAULT NULL COMMENT 'Category status 0: disabled 1: displayed 2: deleted',
  type_updatetime timestamp NULL DEFAULT CURRENT_TIMESTAMP ON UPDATE CURRENT_TIMESTAMP COMMENT 'Update time',
  type_flag varchar(16) DEFAULT NULL,
  PRIMARY KEY (id),
  KEY type_flag (type_flag),
  FULLTEXT KEY type_name (type_name)
) ENGINE=InnoDB AUTO_INCREMENT=35006 DEFAULT CHARSET=utf8;

4 Game circle table

DROP TABLE IF EXISTS game_cycle;
CREATE TABLE game_cycle (
  cycle_id int(32) NOT NULL AUTO_INCREMENT,
  cycle_name varchar(128) DEFAULT NULL COMMENT 'Circle name',
  cycle_person_num varchar(64) DEFAULT NULL COMMENT 'Number of members in the circle',
  cycle_type varchar(16) DEFAULT NULL COMMENT 'Join or leave the circle 0: leave 1: join',
  cycle_comment varchar(32) DEFAULT NULL COMMENT 'Number of comments in the circle',
  PRIMARY KEY (cycle_id)
) ENGINE=InnoDB AUTO_INCREMENT=284 DEFAULT CHARSET=utf8;

5 Game product order table

DROP TABLE IF EXISTS game_order;
CREATE TABLE game_order (
  order_id int(32) NOT NULL AUTO_INCREMENT COMMENT 'Primary key id',
  order_num varchar(64) DEFAULT NULL COMMENT 'Order number',
  buyer_user_id int(32) DEFAULT NULL COMMENT 'User id of the buyer',
  order_status varchar(16) DEFAULT NULL COMMENT 'Order status 0: added to cart 1: order submitted 2: paid 3: receipt confirmed, transaction complete 4: order disputed, appeal filed',
  pay_type varchar(16) DEFAULT NULL COMMENT 'Payment method 0: WeChat Pay 1: Alipay 2: UnionPay bank card',
  pay_money decimal(8,2) DEFAULT NULL COMMENT 'Amount paid',
  order_time datetime DEFAULT NULL COMMENT 'Order time',
  pay_time datetime DEFAULT NULL COMMENT 'Payment time',
  tax_percent varchar(32) DEFAULT NULL COMMENT 'Platform commission rate',
  order_deleted varchar(16) DEFAULT NULL COMMENT 'Whether the order is deleted 0: deleted 1: not deleted',
  PRIMARY KEY (order_id)
) ENGINE=InnoDB AUTO_INCREMENT=4001 DEFAULT CHARSET=utf8;

6 Order-product link table

DROP TABLE IF EXISTS game_order_product;
CREATE TABLE game_order_product (
  game_product_id int(32) NOT NULL AUTO_INCREMENT COMMENT 'Primary key of the order-product link table',
  game_order_id varchar(128) DEFAULT NULL COMMENT 'References the order primary key',
  product_id int(32) DEFAULT NULL COMMENT 'References the product id',
  product_num int(8) DEFAULT NULL COMMENT 'Quantity purchased',
  product_name varchar(512) DEFAULT NULL COMMENT 'Product name',
  product_url varchar(128) DEFAULT NULL COMMENT 'URL of the purchased product',
  seller_id int(32) DEFAULT NULL COMMENT 'Seller id',
  product_price decimal(8,2) DEFAULT NULL COMMENT 'Product price',
  PRIMARY KEY (game_product_id)
) ENGINE=InnoDB AUTO_INCREMENT=9894 DEFAULT CHARSET=utf8;

7 Game product table

DROP TABLE IF EXISTS game_product;
CREATE TABLE game_product (
  game_id int(32) NOT NULL AUTO_INCREMENT COMMENT 'Primary key id',
  game_title varchar(128) DEFAULT NULL COMMENT 'Product name',
  game_pic varchar(128) DEFAULT NULL COMMENT 'Product image',
  game_detail_url varchar(256) DEFAULT NULL COMMENT 'Product detail URL',
  game_title_second varchar(128) DEFAULT NULL COMMENT 'Product subtitle',
  game_qufu varchar(128) DEFAULT NULL COMMENT 'Game region and server',
  game_credit varchar(128) DEFAULT NULL COMMENT 'Credit rating',
  game_price varchar(64) DEFAULT NULL COMMENT 'Product price',
  game_stock varchar(16) DEFAULT NULL COMMENT 'Product stock',
  game_protection varchar(256) DEFAULT NULL COMMENT 'Service guarantee',
  game_user int(64) DEFAULT NULL COMMENT 'Associated user',
  game_type varchar(512) DEFAULT NULL COMMENT 'Product type: account, game currency, materials, equipment, etc.',
  game_create_date datetime DEFAULT NULL COMMENT 'Listing date',
  game_check_date datetime DEFAULT NULL COMMENT 'Review date',
  game_check_user int(32) DEFAULT NULL COMMENT 'Reviewer',
  game_check_reson varchar(64) DEFAULT NULL COMMENT 'Review result notes',
  game_check_status varchar(16) DEFAULT NULL COMMENT 'Review result 0: rejected 1: approved',
  PRIMARY KEY (game_id)
) ENGINE=InnoDB AUTO_INCREMENT=31863 DEFAULT CHARSET=utf8;

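8 Province/city/district dictionary table

DROP TABLE IF EXISTS province_code;
CREATE TABLE province_code (
  Id int(11),
  Name varchar(120),
  Pid int(11)
);

Open the accompanying text file, then insert the province, city, and district code data it contains.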
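The Id/Name/Pid columns form a parent-pointer tree: each row's Pid refers to the Id of its parent region. As a rough sketch of how such rows resolve to a full province, city, district path, consider the following. The class name, the sample ids, and the convention that a top-level row has a Pid matching no other row are assumptions, since the source does not show the data file.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical in-memory view of province_code rows (Id, Name, Pid).
class RegionTree {
    private final Map<Integer, String> names = new HashMap<>();
    private final Map<Integer, Integer> parents = new HashMap<>();

    void add(int id, String name, int pid) {
        names.put(id, name);
        parents.put(id, pid);
    }

    // Walks Pid links upward until the parent is not a known row (root reached).
    // The size check guards against cycles in malformed data.
    List<String> pathOf(int id) {
        List<String> path = new ArrayList<>();
        Integer current = id;
        while (current != null && names.containsKey(current) && path.size() <= names.size()) {
            path.add(names.get(current));
            current = parents.get(current);
        }
        Collections.reverse(path); // root first, leaf last
        return path;
    }
}
```

With rows such as (1, "Guangdong", 0), (2, "Shenzhen", 1), and (3, "Nanshan", 2), `pathOf(3)` walks from the district up to the province and returns the names root first.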

9 User base information table

DROP TABLE IF EXISTS user_base;
CREATE TABLE user_base (
  user_id int(32) NOT NULL AUTO_INCREMENT COMMENT 'Primary key id',
  user_account varchar(64) DEFAULT NULL COMMENT 'User account',
  user_email varchar(64) DEFAULT NULL COMMENT 'User email',
  user_name varchar(64) DEFAULT NULL COMMENT 'User name',
  user_phone varchar(64) DEFAULT NULL COMMENT 'User mobile number',
  user_origin_password varchar(64) DEFAULT NULL COMMENT 'Original user password as stored',
  user_password varchar(64) DEFAULT NULL COMMENT 'User password, MD5 hashed',
  user_sex char(2) DEFAULT NULL COMMENT '0: female 1: male',
  user_card varchar(64) DEFAULT NULL COMMENT 'ID card number',
  user_registertime datetime DEFAULT CURRENT_TIMESTAMP COMMENT 'Creation time',
  user_updatetime datetime DEFAULT CURRENT_TIMESTAMP ON UPDATE CURRENT_TIMESTAMP COMMENT 'Update time',
  is_deleted varchar(16) DEFAULT NULL COMMENT 'Whether the account is cancelled (deleted)',
  user_province int(16) DEFAULT NULL COMMENT 'Province code',
  user_city int(16) DEFAULT NULL COMMENT 'City code',
  user_area int(16) DEFAULT NULL COMMENT 'District code',
  user_address_detail text,
  register_ip varbinary(32) DEFAULT NULL COMMENT 'Registration IP',
  user_pic varchar(128) DEFAULT NULL COMMENT 'URL of the user avatar',
  PRIMARY KEY (user_id)
) ENGINE=InnoDB AUTO_INCREMENT=10639 DEFAULT CHARSET=utf8;
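The user_password column holds an MD5 digest of the password. A minimal sketch of producing such a value with the JDK alone is shown below; the class and method names are hypothetical, and the source does not show how the original generator computes the digest (the commons-codec dependency used later in this project also offers `DigestUtils.md5Hex`). MD5 is a hash rather than encryption and is acceptable here only because the data is simulated.

```java
import java.math.BigInteger;
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;

// Hypothetical helper for filling user_password in generated test data.
class Md5Util {
    // Returns the MD5 digest of the input as 32 lowercase hex characters.
    static String md5Hex(String plain) {
        try {
            MessageDigest md = MessageDigest.getInstance("MD5");
            byte[] digest = md.digest(plain.getBytes(StandardCharsets.UTF_8));
            // %032x left-pads with zeros so the result is always 32 characters long.
            return String.format("%032x", new BigInteger(1, digest));
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException("MD5 not available", e);
        }
    }
}
```

The 32-character result fits comfortably in the varchar(64) column.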

10 User login record table

DROP TABLE IF EXISTS user_login_record;
CREATE TABLE user_login_record (
  login_id int(32) NOT NULL AUTO_INCREMENT,
  login_user_id int(32) DEFAULT NULL COMMENT 'Id of the user logging in',
  login_time datetime DEFAULT NULL COMMENT 'Login time',
  login_mac varchar(32) DEFAULT NULL COMMENT 'Login MAC address',
  login_ip varchar(32) DEFAULT NULL COMMENT 'Login IP address',
  login_message varchar(128) DEFAULT NULL COMMENT 'Message returned to the client at login',
  login_status varchar(8) DEFAULT NULL COMMENT 'Login status 0: login failed 1: login succeeded',
  login_fail_reson varchar(8) DEFAULT NULL COMMENT 'Login failure reason 0: username does not exist 1: wrong password 2: login succeeded',
  PRIMARY KEY (login_id)
) ENGINE=InnoDB AUTO_INCREMENT=31727 DEFAULT CHARSET=utf8;

11 Video detail table

DROP TABLE IF EXISTS video_detail;
CREATE TABLE video_detail (
  video_id int(16) NOT NULL AUTO_INCREMENT COMMENT 'Primary key id',
  video_name varchar(128) DEFAULT NULL COMMENT 'Video name',
  video_detail text COMMENT 'Video description',
  video_type varchar(16) DEFAULT NULL COMMENT 'Video category',
  video_url varchar(256) DEFAULT NULL COMMENT 'Video playback URL',
  video_time_long int(16) DEFAULT NULL COMMENT 'Video duration',
  video_comment_total int(16) DEFAULT NULL COMMENT 'Number of comments',
  video_like_total int(16) DEFAULT NULL COMMENT 'Number of likes',
  video_upload_time datetime DEFAULT CURRENT_TIMESTAMP COMMENT 'Creation time',
  video_check_time datetime DEFAULT NULL COMMENT 'Review time',
  video_update_time timestamp NULL DEFAULT CURRENT_TIMESTAMP ON UPDATE CURRENT_TIMESTAMP COMMENT 'Update time',
  video_upload_user int(11) DEFAULT NULL COMMENT 'Id of the uploading user',
  video_status varchar(8) DEFAULT NULL COMMENT 'Video status 0: uploaded, pending review 1: approved and displayed 2: rejected, needs revision 3: deleted 4: banned for violation',
  video_comment text COMMENT 'Reason the review passed or failed',
  video_watch_time int(8) DEFAULT NULL COMMENT 'View count',
  video_age int(8) DEFAULT NULL COMMENT 'Video age: days elapsed since upload',
  PRIMARY KEY (video_id)
) ENGINE=InnoDB AUTO_INCREMENT=8334 DEFAULT CHARSET=utf8;
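The video_age column is derived from video_upload_time: it is the number of days that have passed since the upload. One possible way to compute it is sketched below, assuming whole calendar days are wanted; the class and method names are hypothetical and the source does not show the generator's own calculation.

```java
import java.time.LocalDate;
import java.time.LocalDateTime;
import java.time.temporal.ChronoUnit;

// Hypothetical helper for deriving video_age from video_upload_time.
class VideoAge {
    // Whole calendar days between the upload date and the given "today".
    static int daysSinceUpload(LocalDateTime uploadTime, LocalDate today) {
        return (int) ChronoUnit.DAYS.between(uploadTime.toLocalDate(), today);
    }
}
```

Passing "today" in as a parameter, rather than calling `LocalDate.now()` inside the method, keeps generated data reproducible.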

2. Generating business data in the database with Java code

1 Create the Maven project data_generate

2 Add the dependency coordinates

<properties>
    <slf4j.version>1.7.20</slf4j.version>
    <logback.version>1.2.3</logback.version>
</properties>

<dependencies>
    <dependency>
        <groupId>org.apache.commons</groupId>
        <artifactId>commons-lang3</artifactId>
        <version>3.10</version>
    </dependency>
    <dependency>
        <groupId>commons-lang</groupId>
        <artifactId>commons-lang</artifactId>
        <version>2.6</version>
    </dependency>
    <dependency>
        <groupId>commons-io</groupId>
        <artifactId>commons-io</artifactId>
        <version>2.6</version>
    </dependency>
    <dependency>
        <groupId>mysql</groupId>
        <artifactId>mysql-connector-java</artifactId>
        <version>5.1.47</version>
    </dependency>
    <dependency>
        <groupId>org.mybatis</groupId>
        <artifactId>mybatis</artifactId>
        <version>3.5.5</version>
    </dependency>
    <dependency>
        <groupId>commons-codec</groupId>
        <artifactId>commons-codec</artifactId>
        <version>1.15</version>
    </dependency>
    <dependency>
        <groupId>org.jsoup</groupId>
        <artifactId>jsoup</artifactId>
        <version>1.10.3</version>
    </dependency>
</dependencies>

3 Define the JavaBeans for the database tables

In the Maven project, create the package com.datas.beans.dbbeans. This package holds the JavaBean classes.

1 JavaBean for the article detail table

import java.util.Date;

public class ArticleDetail {

private int article_id ;

private String article_name ;

private String article_little_content;

private String article_content ;

private Date article_time ;

private Date jiuyou_show_time ;

private String article_author_name ;

private int article_user_id ;

private String article_status ;

private Date article_create_time ;

private Date article_update_time ;

private String first_class;

private String first_class_url;

private String second_class;

private String second_class_url;

private String third_class;

private String third_class_url;

private String fourth_class;

private String fourth_class_url;

private String article_url;

public String getArticle_url() {

return article_url;

}

public void setArticle_url(String article_url) {

this.article_url = article_url;

}

public String getFirst_class_url() {

return first_class_url;

}

public void setFirst_class_url(String first_class_url) {

this.first_class_url = first_class_url;

}

public String getSecond_class_url() {

return second_class_url;

}

public void setSecond_class_url(String second_class_url) {

this.second_class_url = second_class_url;

}

public String getThird_class_url() {

return third_class_url;

}

public void setThird_class_url(String third_class_url) {

this.third_class_url = third_class_url;

}

public String getFourth_class_url() {

return fourth_class_url;

}

public void setFourth_class_url(String fourth_class_url) {

this.fourth_class_url = fourth_class_url;

}

public String getFirst_class() {

return first_class;

}

public void setFirst_class(String first_class) {

this.first_class = first_class;

}

public String getSecond_class() {

return second_class;

}

public void setSecond_class(String second_class) {

this.second_class = second_class;

}

public String getThird_class() {

return third_class;

}

public void setThird_class(String third_class) {

this.third_class = third_class;

}

public String getFourth_class() {

return fourth_class;

}

public void setFourth_class(String fourth_class) {

this.fourth_class = fourth_class;

}

public String getArticle_little_content() {

return article_little_content;

}

public void setArticle_little_content(String article_little_content) {

this.article_little_content = article_little_content;

}

public int getArticle_id() {

return article_id;

}

public void setArticle_id(int article_id) {

this.article_id = article_id;

}

public String getArticle_name() {

return article_name;

}

public void setArticle_name(String article_name) {

this.article_name = article_name;

}

public String getArticle_content() {

return article_content;

}

public void setArticle_content(String article_content) {

this.article_content = article_content;

}

public Date getArticle_time() {

return article_time;

}

public void setArticle_time(Date article_time) {

this.article_time = article_time;

}

public Date getJiuyou_show_time() {

return jiuyou_show_time;

}

public void setJiuyou_show_time(Date jiuyou_show_time) {

this.jiuyou_show_time = jiuyou_show_time;

}

public String getArticle_author_name() {

return article_author_name;

}

public void setArticle_author_name(String article_author_name) {

this.article_author_name = article_author_name;

}

public int getArticle_user_id() {

return article_user_id;

}

public void setArticle_user_id(int article_user_id) {

this.article_user_id = article_user_id;

}

public String getArticle_status() {

return article_status;

}

public void setArticle_status(String article_status) {

this.article_status = article_status;

}

public Date getArticle_create_time() {

return article_create_time;

}

public void setArticle_create_time(Date article_create_time) {

this.article_create_time = article_create_time;

}

public Date getArticle_update_time() {

return article_update_time;

}

public void setArticle_update_time(Date article_update_time) {

this.article_update_time = article_update_time;

}

}

2 JavaBean for the common dictionary table

import java.util.Date;

public class CommonBase {

private int id ;

private String type_name ;

private String type_value;

private Date type_createtime ;

private String type_status ;

private Date type_updatetime ;

private String type_flag ;

public String getType_value() {

return type_value;

}

public void setType_value(String type_value) {

this.type_value = type_value;

}

public int getId() {

return id;

}

public void setId(int id) {

this.id = id;

}

public String getType_name() {

return type_name;

}

public void setType_name(String type_name) {

this.type_name = type_name;

}

public Date getType_createtime() {

return type_createtime;

}

public void setType_createtime(Date type_createtime) {

this.type_createtime = type_createtime;

}

public String getType_status() {

return type_status;

}

public void setType_status(String type_status) {

this.type_status = type_status;

}

public Date getType_updatetime() {

return type_updatetime;

}

public void setType_updatetime(Date type_updatetime) {

this.type_updatetime = type_updatetime;

}

public String getType_flag() {

return type_flag;

}

public void setType_flag(String type_flag) {

this.type_flag = type_flag;

}

}

3 JavaBean for the game product order table

import java.util.Date;

public class GameOrder {

private int order_id ;

private String order_num ;

private int buyer_user_id ;

private String order_status ;

private String pay_type ;

private Double pay_money ;

private Date order_time ;

private Date pay_time ;

private String tax_percent ;

private String order_deleted ;

public int getOrder_id() {

return order_id;

}

public void setOrder_id(int order_id) {

this.order_id = order_id;

}

public String getOrder_num() {

return order_num;

}

public void setOrder_num(String order_num) {

this.order_num = order_num;

}

public int getBuyer_user_id() {

return buyer_user_id;

}

public void setBuyer_user_id(int buyer_user_id) {

this.buyer_user_id = buyer_user_id;

}

public String getOrder_status() {

return order_status;

}

public void setOrder_status(String order_status) {

this.order_status = order_status;

}

public String getPay_type() {

return pay_type;

}

public void setPay_type(String pay_type) {

this.pay_type = pay_type;

}

public Double getPay_money() {

return pay_money;

}

public void setPay_money(Double pay_money) {

this.pay_money = pay_money;

}

public Date getOrder_time() {

return order_time;

}

public void setOrder_time(Date order_time) {

this.order_time = order_time;

}

public Date getPay_time() {

return pay_time;

}

public void setPay_time(Date pay_time) {

this.pay_time = pay_time;

}

public String getTax_percent() {

return tax_percent;

}

public void setTax_percent(String tax_percent) {

this.tax_percent = tax_percent;

}

public String getOrder_deleted() {

return order_deleted;

}

public void setOrder_deleted(String order_deleted) {

this.order_deleted = order_deleted;

}

}
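The bean above holds pay_money as a Double, while the column is decimal(8,2). Binary floating point cannot represent most decimal fractions exactly, so money arithmetic is usually done with BigDecimal. The sketch below computes a platform fee that way; the class and method names are hypothetical, and it assumes tax_percent stores a fraction such as "0.05", which the source does not specify.

```java
import java.math.BigDecimal;
import java.math.RoundingMode;

// Hypothetical helper; assumes tax_percent holds a fraction like "0.05".
class OrderMoney {
    // Fee = pay_money * tax_percent, rounded half-up to 2 decimals to match decimal(8,2).
    static BigDecimal platformFee(BigDecimal payMoney, String taxPercent) {
        return payMoney.multiply(new BigDecimal(taxPercent)).setScale(2, RoundingMode.HALF_UP);
    }
}
```

If pay_money were mapped as BigDecimal in the bean, MyBatis would map it to the decimal column without further conversion, and values read back would keep their two-digit scale.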

4 JavaBean for the order-product link table

public class GameOrderProduct {

private int game_product_id ;

private String game_order_id ;

private int product_id ;

private int product_num ;

private String product_name ;

private String product_url;

private String seller_id;

private Double product_price;

public Double getProduct_price() {

return product_price;

}

public void setProduct_price(Double product_price) {

this.product_price = product_price;

}

public String getSeller_id() {

return seller_id;

}

public void setSeller_id(String seller_id) {

this.seller_id = seller_id;

}

public int getGame_product_id() {

return game_product_id;

}

public void setGame_product_id(int game_product_id) {

this.game_product_id = game_product_id;

}

public String getGame_order_id() {

return game_order_id;

}

public void setGame_order_id(String game_order_id) {

this.game_order_id = game_order_id;

}

public int getProduct_id() {

return product_id;

}

public void setProduct_id(int product_id) {

this.product_id = product_id;

}

public int getProduct_num() {

return product_num;

}

public void setProduct_num(int product_num) {

this.product_num = product_num;

}

public String getProduct_name() {

return product_name;

}

public void setProduct_name(String product_name) {

this.product_name = product_name;

}

public String getProduct_url() {

return product_url;

}

public void setProduct_url(String product_url) {

this.product_url = product_url;

}

}

5 JavaBean for the game product table

import java.util.Date;

public class GameProduct {

private int game_id ;

private String game_title ;

private String game_pic ;

private String game_detail_url ;

private String game_title_second ;

private String game_qufu ;

private String game_credit ;

private Double game_price ;

private String game_stock ;

private String game_protection ;

private int game_user ;

private String game_type ;

private Date game_create_date ;

private Date game_check_date ;

private int game_check_user ;

private String game_check_reson ;

private String game_check_status ;

public int getGame_id() {

return game_id;

}

public void setGame_id(int game_id) {

this.game_id = game_id;

}

public String getGame_title() {

return game_title;

}

public void setGame_title(String game_title) {

this.game_title = game_title;

}

public String getGame_pic() {

return game_pic;

}

public void setGame_pic(String game_pic) {

this.game_pic = game_pic;

}

public String getGame_detail_url() {

return game_detail_url;

}

public void setGame_detail_url(String game_detail_url) {

this.game_detail_url = game_detail_url;

}

public String getGame_title_second() {

return game_title_second;

}

public void setGame_title_second(String game_title_second) {

this.game_title_second = game_title_second;

}

public String getGame_qufu() {

return game_qufu;

}

public void setGame_qufu(String game_qufu) {

this.game_qufu = game_qufu;

}

public String getGame_credit() {

return game_credit;

}

public void setGame_credit(String game_credit) {

this.game_credit = game_credit;

}

public Double getGame_price() {

return game_price;

}

public void setGame_price(Double game_price) {

this.game_price = game_price;

}

public String getGame_stock() {

return game_stock;

}

public void setGame_stock(String game_stock) {

this.game_stock = game_stock;

}

public String getGame_protection() {

return game_protection;

}

public void setGame_protection(String game_protection) {

this.game_protection = game_protection;

}

public int getGame_user() {

return game_user;

}

public void setGame_user(int game_user) {

this.game_user = game_user;

}

public String getGame_type() {

return game_type;

}

public void setGame_type(String game_type) {

this.game_type = game_type;

}

public Date getGame_create_date() {

return game_create_date;

}

public void setGame_create_date(Date game_create_date) {

this.game_create_date = game_create_date;

}

public Date getGame_check_date() {

return game_check_date;

}

public void setGame_check_date(Date game_check_date) {

this.game_check_date = game_check_date;

}

public int getGame_check_user() {

return game_check_user;

}

public void setGame_check_user(int game_check_user) {

this.game_check_user = game_check_user;

}

public String getGame_check_reson() {

return game_check_reson;

}

public void setGame_check_reson(String game_check_reson) {

this.game_check_reson = game_check_reson;

}

public String getGame_check_status() {

return game_check_status;

}

public void setGame_check_status(String game_check_status) {

this.game_check_status = game_check_status;

}

}

6 JavaBean for the province/city/district dictionary table

public class ProvinceCode {

private int id ;

private String name ;

private int pid ;

public int getId() {

return id;

}

public void setId(int id) {

this.id = id;

}

public String getName() {

return name;

}

public void setName(String name) {

this.name = name;

}

public int getPid() {

return pid;

}

public void setPid(int pid) {

this.pid = pid;

}

}

7 JavaBean for the user base information table

import java.util.Date;

public class UserBase {

private int user_id ;

private String user_account ;

private String user_email ;

private String user_name ;

private String user_phone ;

private String user_origin_password;

private String user_password ;

private String user_sex ;

private String user_card ;

private Date user_registertime ;

private Date user_updatetime ;

private String is_deleted ;

private int user_province ;

private int user_city ;

private int user_area ;

private String user_address_detail;

private String register_ip;

private String user_pic ;

public String getUser_address_detail() {

return user_address_detail;

}

public void setUser_address_detail(String user_address_detail) {

this.user_address_detail = user_address_detail;

}

public String getRegister_ip() {

return register_ip;

}

public void setRegister_ip(String register_ip) {

this.register_ip = register_ip;

}

public String getUser_origin_password() {

return user_origin_password;

}

public void setUser_origin_password(String user_origin_password) {

this.user_origin_password = user_origin_password;

}

public int getUser_id() {

return user_id;

}

public void setUser_id(int user_id) {

this.user_id = user_id;

}

public String getUser_account() {

return user_account;

}

public void setUser_account(String user_account) {

this.user_account = user_account;

}

public String getUser_email() {

return user_email;

}

public void setUser_email(String user_email) {

this.user_email = user_email;

}

public String getUser_name() {

return user_name;

}

public void setUser_name(String user_name) {

this.user_name = user_name;

}

public String getUser_phone() {

return user_phone;

}

public void setUser_phone(String user_phone) {

this.user_phone = user_phone;

}

public String getUser_password() {

return user_password;

}

public void setUser_password(String user_password) {

this.user_password = user_password;

}

public String getUser_sex() {

return user_sex;

}

public void setUser_sex(String user_sex) {

this.user_sex = user_sex;

}

public String getUser_card() {

return user_card;

}

public void setUser_card(String user_card) {

this.user_card = user_card;

}

public Date getUser_registertime() {

return user_registertime;

}

public void setUser_registertime(Date user_registertime) {

this.user_registertime = user_registertime;

}

public Date getUser_updatetime() {

return user_updatetime;

}

public void setUser_updatetime(Date user_updatetime) {

this.user_updatetime = user_updatetime;

}

public String getIs_deleted() {

return is_deleted;

}

public void setIs_deleted(String is_deleted) {

this.is_deleted = is_deleted;

}

public int getUser_province() {

return user_province;

}

public void setUser_province(int user_province) {

this.user_province = user_province;

}

public int getUser_city() {

return user_city;

}

public void setUser_city(int user_city) {

this.user_city = user_city;

}

public int getUser_area() {

return user_area;

}

public void setUser_area(int user_area) {

this.user_area = user_area;

}

public String getUser_pic() {

return user_pic;

}

public void setUser_pic(String user_pic) {

this.user_pic = user_pic;

}

}

8 JavaBean for the user login record table

import java.util.Date;

public class UserLoginRecord {

private int login_id ;

private int login_user_id ;

private Date login_time ;

private String login_mac ;

private String login_ip ;

private String login_message ;

private String login_status ;

private String login_fail_reson ;

public int getLogin_id() {

return login_id;

}

public void setLogin_id(int login_id) {

this.login_id = login_id;

}

public int getLogin_user_id() {

return login_user_id;

}

public void setLogin_user_id(int login_user_id) {

this.login_user_id = login_user_id;

}

public Date getLogin_time() {

return login_time;

}

public void setLogin_time(Date login_time) {

this.login_time = login_time;

}

public String getLogin_mac() {

return login_mac;

}

public void setLogin_mac(String login_mac) {

this.login_mac = login_mac;

}

public String getLogin_ip() {

return login_ip;

}

public void setLogin_ip(String login_ip) {

this.login_ip = login_ip;

}

public String getLogin_message() {

return login_message;

}

public void setLogin_message(String login_message) {

this.login_message = login_message;

}

public String getLogin_status() {

return login_status;

}

public void setLogin_status(String login_status) {

this.login_status = login_status;

}

public String getLogin_fail_reson() {

return login_fail_reson;

}

public void setLogin_fail_reson(String login_fail_reson) {

this.login_fail_reson = login_fail_reson;

}

}

9 JavaBean for the video detail table

import java.util.Date;

public class VideoDetail {

private int video_id ;

private String video_name ;

private String article_little_content;

private String video_detail ;

private String video_type ;

private String video_url ;

private int video_time_long ;

private int video_comment_total ;

private int video_like_total ;

private Date video_upload_time ;

private Date video_check_time ;

private Date video_update_time ;

private int video_upload_user ;

private String video_status ;

private String video_comment ;

private int video_watch_time ;

private int video_age ;

public String getArticle_little_content() {

return article_little_content;

}

public void setArticle_little_content(String article_little_content) {

this.article_little_content = article_little_content;

}

public int getVideo_id() {

return video_id;

}

public void setVideo_id(int video_id) {

this.video_id = video_id;

}

public String getVideo_name() {

return video_name;

}

public void setVideo_name(String video_name) {

this.video_name = video_name;

}

public String getVideo_detail() {

return video_detail;

}

public void setVideo_detail(String video_detail) {

this.video_detail = video_detail;

}

public String getVideo_type() {

return video_type;

}

public void setVideo_type(String video_type) {

this.video_type = video_type;

}

public String getVideo_url() {

return video_url;

}

public void setVideo_url(String video_url) {

this.video_url = video_url;

}

public int getVideo_time_long() {

return video_time_long;

}

public void setVideo_time_long(int video_time_long) {

this.video_time_long = video_time_long;

}

public int getVideo_comment_total() {

return video_comment_total;

}

public void setVideo_comment_total(int video_comment_total) {

this.video_comment_total = video_comment_total;

}

public int getVideo_like_total() {

return video_like_total;

}

public void setVideo_like_total(int video_like_total) {

this.video_like_total = video_like_total;

}

public Date getVideo_upload_time() {

return video_upload_time;

}

public void setVideo_upload_time(Date video_upload_time) {

this.video_upload_time = video_upload_time;

}

public Date getVideo_check_time() {

return video_check_time;

}

public void setVideo_check_time(Date video_check_time) {

this.video_check_time = video_check_time;

}

public Date getVideo_update_time() {

return video_update_time;

}

public void setVideo_update_time(Date video_update_time) {

this.video_update_time = video_update_time;

}

public int getVideo_upload_user() {

return video_upload_user;

}

public void setVideo_upload_user(int video_upload_user) {

this.video_upload_user = video_upload_user;

}

public String getVideo_status() {

return video_status;

}

public void setVideo_status(String video_status) {

this.video_status = video_status;

}

public String getVideo_comment() {

return video_comment;

}

public void setVideo_comment(String video_comment) {

this.video_comment = video_comment;

}

public int getVideo_watch_time() {

return video_watch_time;

}

public void setVideo_watch_time(int video_watch_time) {

this.video_watch_time = video_watch_time;

}

public int getVideo_age() {

return video_age;

}

public void setVideo_age(int video_age) {

this.video_age = video_age;

}

}

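4 Define the MyBatis configuration file SqlMapConfig.xml and the XML mapper files for the JavaBeans

1 Define the XML mapper files for the JavaBeans

Create a mappers folder under the project's resource path, then define one XML mapper file in it for each JavaBean.

1 Define the ArticleDetail.xml configuration file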

<?xml version="1.0" encoding="UTF-8" ?> insert into article_detail(article_id,article_name,article_little_content,article_content,article_time ,jiuyou_show_time,article_author_name,article_user_id,article_status,article_create_time,article_update_time ,first_class,first_class_url,second_class,second_class_url,third_class,third_class_url ,fourth_class,fourth_class_url,article_url ) values (#{articleDetail.article_id},#{articleDetail.article_name},#{articleDetail.article_little_content},#{articleDetail.article_content},#{articleDetail.article_time},#{articleDetail.jiuyou_show_time},#{articleDetail.article_author_name},#{articleDetail.article_user_id},#{articleDetail.article_status},#{articleDetail.article_create_time},#{articleDetail.article_update_time}, #{articleDetail.first_class},#{articleDetail.first_class_url},#{articleDetail.second_class} ,#{articleDetail.second_class_url},#{articleDetail.third_class},#{articleDetail.third_class_url} ,#{articleDetail.fourth_class},#{articleDetail.fourth_class_url},#{articleDetail.article_url} )</insert>

<insert id="addOne" parameterType="com.datas.beans.dbbeans.VideoDetail">

insert into video_detail(video_id,video_name,video_detail,video_type,video_url,video_time_long,video_comment_total,video_like_total,video_upload_time,video_check_time,video_update_time,video_upload_user,video_status,video_comment,video_watch_time,video_age)

values (#{video_id},#{video_name},#{video_detail},#{video_type},#{video_url},#{video_time_long},#{video_comment_total},#{video_like_total},#{video_upload_time},#{video_check_time},#{video_update_time},#{video_upload_user},#{video_status},#{video_comment},#{video_watch_time},#{video_age})

</insert>

<select id="getAllVideoDetail" resultType="com.datas.beans.dbbeans.VideoDetail">

select * from video_detail;

</select>

<select id="getArticleListByUserId" resultType="com.datas.beans.dbbeans.ArticleDetail" parameterType="int">

select * from article_detail where article_user_id = #{article_user_id}

</select>

2 Define DBCommonBase.xml

<?xml version="1.0" encoding="UTF-8" ?>

insert into common_base(type_name,type_value,type_createtime,type_status,type_updatetime,type_flag) values (#{commonBase.type_name},#{commonBase.type_value},#{commonBase.type_createtime},#{commonBase.type_status},#{commonBase.type_updatetime},#{commonBase.type_flag})

select id,type_name,type_value,type_createtime,type_status,type_updatetime,type_flag from user_base where user_id = #{user_id}

select id,type_name,type_value,type_createtime,type_status,type_updatetime,type_flag from common_base where type_flag = '4' and type_name = #{type_name}

select id,type_name,type_value,type_createtime,type_status,type_updatetime,type_flag from common_base where type_flag = '5' and type_name = #{type_name}

select id,type_name,type_value,type_createtime,type_status,type_updatetime,type_flag from common_base;

select id,type_name,type_value,type_createtime,type_status,type_updatetime,type_flag from common_base where type_flag = '1';</select>

<select id="getSearchKey" resultType="com.datas.beans.dbbeans.DBCommonBase">

select id,type_name,type_value,type_createtime,type_status,type_updatetime,type_flag from common_base where type_flag = '6';

</select>

3 Define the GameOrder.xml configuration file

<?xml version="1.0" encoding="UTF-8" ?>

insert into game_order(order_id,order_num,buyer_user_id,order_status,pay_type,pay_money,order_time,pay_time,tax_percent,order_deleted) values(#{order_id},#{order_num},#{buyer_user_id},#{order_status},#{pay_type},#{pay_money},#{order_time},#{pay_time},#{tax_percent},#{order_deleted})

select * from game_order where buyer_user_id = #{buyer_user_id}

4 Define the GameOrderProduct.xml configuration file

<?xml version="1.0" encoding="UTF-8" ?><insert id="addGameOrderProductList" parameterType="java.util.List" >

insert into game_order_product(game_product_id,game_order_id,product_id,product_num,product_name,product_url,seller_id,product_price)

values

<foreach collection ="list" item="gameOrderProduct" index="index" separator =",">

(#{gameOrderProduct.game_product_id},#{gameOrderProduct.game_order_id},#{gameOrderProduct.product_id},#{gameOrderProduct.product_num},#{gameOrderProduct.product_name},#{gameOrderProduct.product_url},#{gameOrderProduct.seller_id},#{gameOrderProduct.product_price})

</foreach >

</insert>

5 Define the GameProduct.xml configuration file

<?xml version="1.0" encoding="UTF-8" ?>

insert into game_product(game_id,game_title,game_pic,game_detail_url,game_title_second,game_qufu,game_credit,game_price,game_stock,game_protection,game_user,game_type,game_create_date,game_check_date,game_check_user,game_check_reson,game_check_status) values ( #{gameProduct.game_id},#{gameProduct.game_title},#{gameProduct.game_pic},#{gameProduct.game_detail_url},#{gameProduct.game_title_second},#{gameProduct.game_qufu},#{gameProduct.game_credit},#{gameProduct.game_price},#{gameProduct.game_stock},#{gameProduct.game_protection},#{gameProduct.game_user},#{gameProduct.game_type},#{gameProduct.game_create_date},#{gameProduct.game_check_date},#{gameProduct.game_check_user},#{gameProduct.game_check_reson},#{gameProduct.game_check_status} )

select * from game_product;

select * from game_product where game_id = #{game_id}

6 Define the ProvinceCode.xml configuration file

<?xml version="1.0" encoding="UTF-8" ?>

select * from province_code WHERE pid = '0' and id != '0'

select * from province_code WHERE pid = #{pid}

select * from province_code WHERE pid = #{pid} AND NAME != '市辖区'

7 Define the UserBase.xml configuration file

<?xml version="1.0" encoding="UTF-8" ?>

insert into user_base(user_account,user_email,user_name,user_phone,user_origin_password,user_password,user_sex,user_card,user_registertime,user_updatetime,is_deleted,user_province,user_city,user_area,user_address_detail,register_ip,user_pic) values (#{userBase.user_account},#{userBase.user_email},#{userBase.user_name},#{userBase.user_phone},#{userBase.user_origin_password},#{userBase.user_password},#{userBase.user_sex},#{userBase.user_card},#{userBase.user_registertime},#{userBase.user_updatetime},#{userBase.is_deleted},#{userBase.user_province},#{userBase.user_city},#{userBase.user_area},#{userBase.user_address_detail},#{userBase.register_ip},#{userBase.user_pic})

select * from user_base where user_id = #{user_id}

insert into user_base(user_id,user_account,user_email,user_name,user_phone,user_origin_password,user_password,user_sex,user_card,user_registertime,user_updatetime,is_deleted,user_province,user_city,user_area,user_address_detail,register_ip,user_pic) values (null,#{user_account},#{user_email},#{user_name},#{user_phone},#{user_origin_password},#{user_password},#{user_sex},#{user_card},#{user_registertime},#{user_updatetime},#{is_deleted},#{user_province},#{user_city},#{user_area},#{user_address_detail},#{register_ip},#{user_pic})

select * from user_base;

select * from user_base where user_registertime &lt;= #{user_registertime};

8 Define the UserLoginRecord.xml configuration file

<?xml version="1.0" encoding="UTF-8" ?>

insert into user_login_record(login_id ,login_user_id ,login_time,login_mac ,login_ip,login_message ,login_status,login_fail_reson) values (#{userLogin.login_id },#{userLogin.login_user_id },#{userLogin.login_time},#{userLogin.login_mac },#{userLogin.login_ip},#{userLogin.login_message },#{userLogin.login_status},#{userLogin.login_fail_reson})

9 Define the VideoDetail.xml configuration file

<?xml version="1.0" encoding="UTF-8" ?> insert into video_detail(video_id,video_name,video_detail,video_type,video_url,video_time_long,video_comment_total,video_like_total,video_upload_time,video_check_time,video_update_time,video_upload_user,video_status,video_comment,video_watch_time,video_age) values (#{videoDetail.video_id},#{videoDetail.video_name},#{videoDetail.video_detail},#{videoDetail.video_type},#{videoDetail.video_url},#{videoDetail.video_time_long},#{videoDetail.video_comment_total},#{videoDetail.video_like_total},#{videoDetail.video_upload_time},#{videoDetail.video_check_time},#{videoDetail.video_update_time},#{videoDetail.video_upload_user},#{videoDetail.video_status},#{videoDetail.video_comment},#{videoDetail.video_watch_time},#{videoDetail.video_age})</insert>

<insert id="addOne" parameterType="com.datas.beans.dbbeans.VideoDetail">

insert into video_detail(video_id,video_name,video_detail,video_type,video_url,video_time_long,video_comment_total,video_like_total,video_upload_time,video_check_time,video_update_time,video_upload_user,video_status,video_comment,video_watch_time,video_age)

values (#{video_id},#{video_name},#{video_detail},#{video_type},#{video_url},#{video_time_long},#{video_comment_total},#{video_like_total},#{video_upload_time},#{video_check_time},#{video_update_time},#{video_upload_user},#{video_status},#{video_comment},#{video_watch_time},#{video_age})

</insert>

<select id="getAllVideoDetail" resultType="com.datas.beans.dbbeans.VideoDetail">

select * from video_detail;

</select>

<select id="getVideoByUserId" parameterType="int" resultType="com.datas.beans.dbbeans.VideoDetail">

select * from video_detail where video_upload_user = #{video_upload_user}

</select>

2 Define the MyBatis core configuration file SqlMapConfig.xml

Under the project's resource path, define SqlMapConfig.xml as the MyBatis core configuration file.

<!-- JDBC driver class. MySQL Connector/J 8.x uses com.mysql.cj.jdbc.Driver (note the extra "cj");

older 5.x connectors use com.mysql.jdbc.Driver, as configured here. Pick the class that matches your connector version. -->

<property name="driver" value="com.mysql.jdbc.Driver" />

<!-- JDBC connection URL. With MySQL 8.x you may need to add the serverTimezone=UTC parameter to resolve time zone errors;

leave it out if no such error occurs. jiuyou_game is the database name used here; replace it with your own. -->

<property name="url" value="jdbc:mysql://localhost:3306/jiuyou_game?useSSL=false" />

<!-- Database user -->

<property name="username" value="root" />

<!-- MySQL password; replace it with your own -->

<property name="password" value="123456" />

</dataSource>

</environment>

</environments>

<mappers>

<!-- The main configuration file (SqlMapConfig.xml) references the mapper files (for example user.xml) as follows -->

<!-- <mapper resource="com/kkb/log/bean/video/mappers/ProvinceCode.xml"/>-->

<mapper resource="mappers/ProvinceCode.xml"></mapper>

<mapper resource="mappers/UserBase.xml"></mapper>

<mapper resource="mappers/VideoDetail.xml"></mapper>

<mapper resource="mappers/DBCommonBase.xml"></mapper>

<mapper resource="mappers/GameProduct.xml"></mapper>

<mapper resource="mappers/GameOrder.xml"></mapper>

<mapper resource="mappers/GameOrderProduct.xml"></mapper>

<mapper resource="mappers/UserLoginRecord.xml"></mapper>

<mapper resource="mappers/ArticleDetail.xml"></mapper>

</mappers>

5 Copy the basic utility classes

Copy the basic utility classes that the project needs into the com.datas.utils package.
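The generators that follow call RandomValue.getRandomInt(min, max) throughout, but the utility class itself is not listed in this section. The block below is only a minimal sketch of what such a helper might look like, assuming both bounds are inclusive (calls such as getRandomInt(0, list.size() - 1) for list indexes suggest this). The class name RandomIntSketch is hypothetical and is not the project's actual code.

```java
import java.util.concurrent.ThreadLocalRandom;

// Hypothetical stand-in for RandomValue.getRandomInt; not the project's actual utility.
class RandomIntSketch {

    // Returns a random int in [min, max]; treating both bounds as inclusive is an assumption.
    static int getRandomInt(int min, int max) {
        if (min > max) {
            throw new IllegalArgumentException("min must not exceed max");
        }
        // The upper bound of nextLong is exclusive, so add 1 in long arithmetic
        // to stay safe even when max is Integer.MAX_VALUE.
        return (int) ThreadLocalRandom.current().nextLong(min, (long) max + 1);
    }

    public static void main(String[] args) {
        // Pick a random valid index into a 5-element list, as the generators do.
        int index = getRandomInt(0, 4);
        System.out.println("index = " + index);
    }
}
```

If the real helper treats the upper bound as exclusive instead, calls such as getRandomInt(0, 1) would always return 0, so it is worth checking the copied utility before relying on the generated distributions.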

6 Develop the user generation code

In the com.datas.dbgenerate package, define the UserGenerate class, which generates the user base information table.

package com.datas.dbgenerate;

import com.datas.beans.dbbeans.ProvinceCode;

import com.datas.beans.dbbeans.UserBase;

import com.datas.utils.DBOperateUtils;

import com.datas.utils.DateUtil;

import com.datas.utils.RandomIdCardMaker;

import com.datas.utils.RandomValue;

import org.apache.commons.codec.digest.DigestUtils;

import java.io.IOException;

import java.text.ParseException;

import java.util.ArrayList;

import java.util.Date;

import java.util.List;

/**
 * Generates the user base table.
 */
public class UserGenerate {

    public static void main(String[] args) throws IOException, ParseException {
        String[] myArray = {"2021-03-15","2021-03-16","2021-03-17","2021-03-18","2021-03-19","2021-03-20","2021-03-21","2021-03-22","2021-03-23","2021-03-24","2021-03-25","2021-03-26","2021-03-27","2021-03-28","2021-03-29","2021-03-30","2021-03-31"};
        for (String eachDate : myArray) {
            // Generate a random number of users (300 to 800) for each date
            List<UserBase> userBase = getUserBase(RandomValue.getRandomInt(300,800),eachDate);
            DBOperateUtils.insertUserBase(userBase);
        }
    }

    public static List<UserBase> getUserBase(int totalUser,String formateDate) throws IOException, ParseException {
        // The registration time and update time are generated from the given date
        ArrayList<UserBase> userBaseList = new ArrayList<>();
        List<ProvinceCode> provinceCodeList = DBOperateUtils.getProvince();
        for (int i = 0; i < totalUser; i++) {
            UserBase userBase = new UserBase();
            userBase.setUser_account(RandomValue.getRandomUserName(8));
            userBase.setUser_email(RandomValue.getEmail(8,12));
            userBase.setUser_name(RandomValue.getRandomUserName(10));
            userBase.setUser_phone(RandomValue.getUserPhone());
            String passwords = RandomValue.getPasswords(10);
            userBase.setUser_origin_password(passwords);
            userBase.setUser_password(DigestUtils.md5Hex(passwords));
            userBase.setUser_sex(RandomValue.getRandomInt(0,1)+"");
            userBase.setUser_card(RandomIdCardMaker.getUserCard(1));
            Date date = DateUtil.generateRandomDate(formateDate);
            userBase.setUser_registertime(date);
            userBase.setUser_updatetime(date);
            userBase.setIs_deleted(RandomValue.getRandomInt(0,1)+"");
            // Pick a random province code
            int randomInt = RandomValue.getRandomInt(0, provinceCodeList.size() - 1);
            ProvinceCode provinceCode = provinceCodeList.get(randomInt);
            int provinceId = provinceCode.getId();
            // Pick a random city code under that province
            ProvinceCode city = DBOperateUtils.getCityByCode(provinceId);
            int cityId = city.getId();
            // Get a district or county code under that city
            int regionId = DBOperateUtils.getRegionByCode(cityId);
            userBase.setUser_province(provinceId);
            userBase.setUser_city(cityId);
            userBase.setUser_area(regionId);
            userBase.setUser_address_detail(RandomValue.getRoad());
            userBase.setRegister_ip(RandomValue.getRandomIp());
            userBaseList.add(userBase);
        }
        return userBaseList;
    }
}
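UserGenerate passes each date string to DateUtil.generateRandomDate to obtain a registration time, but DateUtil is not listed here. As a rough illustration only, the sketch below assumes the helper picks a random moment within the given calendar day and returns a java.util.Date. The class and method names are hypothetical.

```java
import java.time.LocalDate;
import java.time.LocalDateTime;
import java.time.ZoneId;
import java.util.Date;
import java.util.concurrent.ThreadLocalRandom;

// Hypothetical stand-in for DateUtil.generateRandomDate; the real utility is not shown in this section.
class RandomDateSketch {

    private static final int SECONDS_PER_DAY = 24 * 60 * 60;

    // Parses a yyyy-MM-dd string and returns a random time on that calendar day (system time zone).
    static Date randomDateWithinDay(String day) {
        LocalDate date = LocalDate.parse(day); // ISO format, e.g. "2021-03-15"
        int secondOfDay = ThreadLocalRandom.current().nextInt(SECONDS_PER_DAY);
        LocalDateTime dateTime = date.atStartOfDay().plusSeconds(secondOfDay);
        return Date.from(dateTime.atZone(ZoneId.systemDefault()).toInstant());
    }

    public static void main(String[] args) {
        System.out.println(randomDateWithinDay("2021-03-15"));
    }
}
```

Generating the time per day, rather than one random timestamp over the whole range, keeps the number of registrations per date under the caller's control, which is what the loop over myArray relies on.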

7 Develop the video detail table data generator

package com.datas.dbgenerate;

import com.datas.beans.dbbeans.VideoDetail;

import com.datas.utils.DBOperateUtils;

import com.datas.utils.JsoupGetAllVideo;

import java.io.IOException;

import java.util.List;

/**
 * Generates the table of videos that users browse and watch.
 */
public class VideoGenerate {

    public static void main(String[] args) throws IOException {
        List<VideoDetail> videoListByJsoup = JsoupGetAllVideo.getVideoListByJsoup();
        DBOperateUtils.insertVideoBase(videoListByJsoup);
    }
}

8 Develop the article data generator

package com.datas.dbgenerate;

import com.datas.utils.JSoupGetAllArticle;

import java.io.IOException;

import java.text.ParseException;

public class ArticleDetailGenerate {

public static void main(String[] args) throws IOException, ParseException {

JSoupGetAllArticle jSoupGetAllArticle = new JSoupGetAllArticle();

jSoupGetAllArticle.getVideoListByJsoup("2021-03-18");

}

}

9 Develop the basic dictionary table data generator

package com.datas.dbgenerate;

import com.datas.beans.dbbeans.CommonBase;

import com.datas.beans.dbbeans.UserBase;

import com.datas.utils.DBOperateUtils;

import com.datas.utils.RandomValue;

import java.util.ArrayList;

import java.util.Date;

import java.util.List;

/**
 * Generates the dictionary entries and the MAC and IP addresses of users.
 */
public class CommonBaseGenerateMacAndIp {

    public static void main(String[] args) {
        String[] type_dic = {"热门","古风江湖梦","日系宅世界","端游最经典","模拟","回合","竞技","卡牌","仙侠","魔幻","生存","角色","射击","魔性","赛车","中国风","动作","放置","休闲","经营","策略","塔防","消除","足球","传奇","体育","音乐"};
        ArrayList<CommonBase> type_dicList = new ArrayList<>();
        for (String s : type_dic) {
            CommonBase commonBase = new CommonBase();
            commonBase.setType_name(s);
            // commonBase.setType_value(s);
            commonBase.setType_createtime(new Date());
            commonBase.setType_status("1");
            commonBase.setType_updatetime(new Date());
            commonBase.setType_flag("1");
            type_dicList.add(commonBase);
        }
        DBOperateUtils.insertCommonBaseList(type_dicList);

        String[] down_dic = {"应用宝","应用汇","华为商城","小米商城","苹果商城","魅族商城","三星商城","豌豆荚"};
        ArrayList<CommonBase> down_dicList = new ArrayList<>();
        for (String s : down_dic) {
            CommonBase commonBase = new CommonBase();
            commonBase.setType_name(s);
            // commonBase.setType_value(s);
            commonBase.setType_createtime(new Date());
            commonBase.setType_status("1");
            commonBase.setType_updatetime(new Date());
            commonBase.setType_flag("2");
            down_dicList.add(commonBase);
        }
        DBOperateUtils.insertCommonBaseList(down_dicList);

        String[] operate_dic = {"android3.5","android3.6","android3.8","android4.2","android4.6","ios8.5","ios8.6","ios8.7","ios9.2","ios9.5","ios10","ios11"};
        ArrayList<CommonBase> operate_dicList = new ArrayList<>();
        for (String s : operate_dic) {
            CommonBase commonBase = new CommonBase();
            commonBase.setType_name(s);
            // commonBase.setType_value(s);
            commonBase.setType_createtime(new Date());
            commonBase.setType_status("1");
            commonBase.setType_updatetime(new Date());
            commonBase.setType_flag("3");
            operate_dicList.add(commonBase);
        }
        DBOperateUtils.insertCommonBaseList(operate_dicList);

        // Generate the search keywords
        String[] searchKey = {"九游国风版本","英雄联盟手游","天涯明月刀","荒野乱斗","地下城与勇士","最强蜗牛","江南百景图","妖精的尾巴","梦幻西游","率师之滨","王者荣耀","和平精英","吃鸡","初始化","金币","钻石、宝石","力量","智力","运气","敏捷","体质、体力","创造","毒性","生命","蓝、魔法","魔法值、技能值","精神","恢复","攻击","魔法攻击","防御","魔法防御","护甲","物理","魔法","暴击","闪避","命中","魅力","冷却","范围","速度、频率","速率、射速","成功率","精品成功率","攻击成功率","获得","设置","支付","成功","失败","取消","分数","名声","因果值","死亡","英雄、玩家","怪物、敌人","药水","回血道具","过关攻略","CF如何卡bug","地下城与勇士bug攻略","秒杀大boss","体力回升药水","火力覆盖","如何屏蔽SB","如何防止被杀","怎么提高速度","怎么拿三杀","青铜如何快速升级","怎么避免小学生","小学生打我怎么办","草丛如何隐身","逃跑攻略","王者荣耀青铜到黄金","王者荣耀快速升级","钻石","黄金","白银","青铜"};
        ArrayList<CommonBase> search_keyList = new ArrayList<>();
        for (String s : searchKey) {
            CommonBase commonBase = new CommonBase();
            commonBase.setType_name("searchKey");
            commonBase.setType_value(s);
            commonBase.setType_createtime(new Date());
            commonBase.setType_status("1");
            commonBase.setType_updatetime(new Date());
            commonBase.setType_flag("4");
            search_keyList.add(commonBase);
        }
        DBOperateUtils.insertCommonBaseList(search_keyList);

        // Generate the MAC addresses and IP addresses for each user
        List<UserBase> allUserBase = DBOperateUtils.getAllUserBase();
        for (UserBase userBase : allUserBase) {
            List<CommonBase> commonBaseMacList = getCommonBaseMacList(RandomValue.getRandomInt(1, 5), userBase.getUser_id());
            DBOperateUtils.insertCommonBaseList(commonBaseMacList);
            List<CommonBase> commonBaseList = generateUserIp(RandomValue.getRandomInt(1, 5), userBase.getUser_id());
            DBOperateUtils.insertCommonBaseList(commonBaseList);
        }
    }

    /**
     * Generates the bindings between a user and MAC addresses.
     */
    public static List<CommonBase> getCommonBaseMacList(int totalResult, int userId){
        ArrayList<CommonBase> commonBaseList = new ArrayList<>();
        for(int i =1;i<= totalResult;i++){
            CommonBase commonBase = new CommonBase();
            commonBase.setType_name(userId+"");
            // Generate one MAC address for the user
            commonBase.setType_value(RandomValue.randomMac4Qemu());
            commonBase.setType_createtime(new Date());
            commonBase.setType_status("1");
            commonBase.setType_updatetime(new Date());
            commonBase.setType_flag("5");
            commonBaseList.add(commonBase);
            // When i is a multiple of 9, generate a second MAC address for the user
            if(i % 9 ==0){
                CommonBase commonBase1 = new CommonBase();
                // Bind to the user id (the loop index was used here before) and use the MAC flag "5"
                commonBase1.setType_name(userId+"");
                commonBase1.setType_value(RandomValue.randomMac4Qemu());
                commonBase1.setType_createtime(new Date());
                commonBase1.setType_status("1");
                commonBase1.setType_updatetime(new Date());
                commonBase1.setType_flag("5");
                commonBaseList.add(commonBase1);
            }
            // When i is a multiple of both 9 and 4, generate one more MAC address
            if(i % 9 ==0 && i % 4 == 0){
                CommonBase commonBase2 = new CommonBase();
                commonBase2.setType_name(userId+"");
                commonBase2.setType_value(RandomValue.randomMac4Qemu());
                commonBase2.setType_createtime(new Date());
                commonBase2.setType_status("1");
                commonBase2.setType_updatetime(new Date());
                commonBase2.setType_flag("5");
                commonBaseList.add(commonBase2);
            }
        }
        return commonBaseList;
    }

    /**
     * Generates the bindings between a user and IP addresses.
     */
    public static List<CommonBase> generateUserIp(int totalResult,int userId){
        ArrayList<CommonBase> commonBaseList = new ArrayList<>();
        for(int i =1;i<= totalResult;i++){
            CommonBase commonBase = new CommonBase();
            commonBase.setType_name(userId+"");
            // Generate one IP address for the user
            String randomIp = RandomValue.getRandomIp();
            commonBase.setType_value(randomIp);
            commonBase.setType_createtime(new Date());
            commonBase.setType_status("1");
            commonBase.setType_updatetime(new Date());
            commonBase.setType_flag("6");
            commonBaseList.add(commonBase);

            // Derive several nearby IP addresses that share the first two octets
            String[] split = randomIp.split("\\.");
            for(int j = 2; j<= RandomValue.getRandomInt(5,10); j++){
                int third = RandomValue.getRandomInt(Integer.parseInt(split[2]),Integer.parseInt(split[2])+ 50);
                if(third > 255){
                    third = 255;
                }
                int fourth = RandomValue.getRandomInt(Integer.parseInt(split[3]),Integer.parseInt(split[3])+ 50);
                if(fourth > 255){
                    fourth = 255;
                }
                String randomnewIP = split[0] + "." + split[1] + "." + third + "." + fourth;
                CommonBase commonBaseJ = new CommonBase();
                // Bind the extra IP to the user id (the loop index i was used here before)
                commonBaseJ.setType_name(userId+"");
                commonBaseJ.setType_value(randomnewIP);
                commonBaseJ.setType_createtime(new Date());
                commonBaseJ.setType_status("1");
                commonBaseJ.setType_updatetime(new Date());
                commonBaseJ.setType_flag("6");
                commonBaseList.add(commonBaseJ);
            }
        }
        return commonBaseList;
    }

}
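The nearby-IP step in generateUserIp keeps the first two octets of a base address and shifts the last two upward by a random amount, capping each at 255. The sketch below isolates that step so it can be run without the database utilities. It assumes the random offset range is 0 to 50 inclusive, and the class and method names are illustrative rather than part of the project.

```java
import java.util.Random;

// Sketch of the "nearby IP" derivation used in generateUserIp; names here are illustrative.
class NearbyIpSketch {

    // Adds a random offset in [0, maxOffset] to an octet and caps the result at 255.
    static int jitterOctet(int octet, int maxOffset, Random random) {
        return Math.min(255, octet + random.nextInt(maxOffset + 1));
    }

    // Keeps the first two octets of baseIp and jitters the last two.
    static String nearbyIp(String baseIp, Random random) {
        String[] parts = baseIp.split("\\.");
        if (parts.length != 4) {
            throw new IllegalArgumentException("not an IPv4 address: " + baseIp);
        }
        int third = jitterOctet(Integer.parseInt(parts[2]), 50, random);
        int fourth = jitterOctet(Integer.parseInt(parts[3]), 50, random);
        return parts[0] + "." + parts[1] + "." + third + "." + fourth;
    }

    public static void main(String[] args) {
        Random random = new Random();
        // Prints an address in the 36.58.x.y range near the base address.
        System.out.println(nearbyIp("36.58.146.211", random));
    }
}
```

Because the cap is applied after the offset, base octets above 205 produce 255 more often than other values, which slightly skews the simulated data.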


10 Develop the product table data generator

package com.datas.dbgenerate;

import com.datas.beans.dbbeans.GameProduct;

import com.datas.beans.dbbeans.UserBase;

import com.datas.utils.DBOperateUtils;

import com.datas.utils.DateUtil;

import com.datas.utils.RandomValue;

import org.jsoup.Jsoup;

import org.jsoup.nodes.Document;

import org.jsoup.nodes.Element;

import org.jsoup.select.Elements;

import java.io.IOException;

import java.net.URL;

import java.util.ArrayList;

import java.util.Date;

import java.util.List;

/**
 * Generates the product table.
 */
public class GameProductGenerate {

    public static void main(String[] args) throws IOException {
        List<UserBase> userList = DBOperateUtils.getAllUserBase();
        String urlFirst = "https://www.dd373.com/s-rbg22w-0-0-0-0-0-0-0-0-0-0-0-";
        String urlSecond = "-0-0-0.html";
        ArrayList<GameProduct> gameProducts = new ArrayList<>();
        for(int i =1000;i<=2096;i++){
            // Fetch and parse one listing page, with a 60-second timeout
            String finalUrl = urlFirst + i + urlSecond;
            Document parse = Jsoup.parse(new URL(finalUrl), 60000);
            Elements select = parse.select("div.goods-list-item");
            for (Element element : select) {
                String gameTitle = element.select("div.game-account-flag").text();
                String game_detail_url = element.select("div.h1-box > h2 > a ").attr("href");
                String game_title_second = element.select("span.label-bg").text();
                Elements select1 = element.select("span.font12.color666.game-qufu-value.max-width506");
                String game_qufu= "";
                for (Element element1 : select1) {
                    game_qufu += element1.text()+"\001";
                }
                String game_type = select1.last().text();
                int game_credit = element.select("p.game-reputation").select("i").size();
                String game_price = element.select("div.goods-price > span").text();
                String game_stock = element.select("div.kucun > span").text();
                String game_protection = element.select("div.server-protection > a > span").text();
                GameProduct gameProduct = new GameProduct();
                gameProduct.setGame_title(gameTitle);
                gameProduct.setGame_pic(null);
                gameProduct.setGame_title_second(game_title_second);
                gameProduct.setGame_qufu(game_qufu);
                gameProduct.setGame_credit(game_credit+"");
                gameProduct.setGame_price(Double.valueOf(game_price.replace("¥","")));
                gameProduct.setGame_stock(game_stock+"");
                gameProduct.setGame_protection(game_protection);
                gameProduct.setGame_type(game_type);
                gameProduct.setGame_detail_url(game_detail_url);
                // Assign the product to a random existing user
                UserBase userBase = userList.get(RandomValue.getRandomInt(1, userList.size() - 1));
                gameProduct.setGame_user(userBase.getUser_id());
                Date game_create_date = DateUtil.generatePutOffTime(userBase.getUser_registertime());
                Date game_check_date = DateUtil.generatePutOffTime(game_create_date);
                gameProduct.setGame_create_date(game_create_date);
                gameProduct.setGame_check_date(game_check_date);
                gameProduct.setGame_check_user(Integer.MAX_VALUE);
                gameProduct.setGame_check_reson("");
                gameProduct.setGame_check_status(RandomValue.getRandomInt(0,1) + "");
                gameProducts.add(gameProduct);
                if(gameProducts.size()>= 200){
                    // Insert a full batch into the database
                    DBOperateUtils.insertGameProducts(gameProducts);
                    gameProducts.clear();
                }
            }
        }
        // Insert the remaining products into the MySQL database
        DBOperateUtils.insertGameProducts(gameProducts);
    }
}
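GameProductGenerate buffers scraped products and writes them to the database in batches of 200, with one final write for whatever is left. The same pattern can be sketched in isolation as follows. BatchBuffer is a hypothetical helper introduced only for illustration, and the println call stands in for DBOperateUtils.insertGameProducts.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

// Illustrative batching helper; BatchBuffer is a hypothetical name, not part of the project.
class BatchBuffer<T> {

    private final int batchSize;
    private final Consumer<List<T>> sink;
    private final List<T> buffer = new ArrayList<>();

    BatchBuffer(int batchSize, Consumer<List<T>> sink) {
        if (batchSize <= 0) {
            throw new IllegalArgumentException("batchSize must be positive");
        }
        this.batchSize = batchSize;
        this.sink = sink;
    }

    // Buffers one item and flushes automatically once batchSize items have accumulated.
    void add(T item) {
        buffer.add(item);
        if (buffer.size() >= batchSize) {
            flush();
        }
    }

    // Sends a copy of the buffered items to the sink; does nothing when the buffer is empty.
    void flush() {
        if (buffer.isEmpty()) {
            return;
        }
        sink.accept(new ArrayList<>(buffer));
        buffer.clear();
    }

    public static void main(String[] args) {
        BatchBuffer<Integer> batches =
                new BatchBuffer<>(200, batch -> System.out.println("insert " + batch.size() + " rows"));
        for (int i = 0; i < 450; i++) {
            batches.add(i);
        }
        batches.flush(); // write the remaining 50 rows
    }
}
```

One difference from the generator above is that this sketch skips the final write when nothing is buffered. That may matter in practice, since a multi-row insert built from an empty list would likely produce invalid SQL.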

11 Develop the user login record table data generator

package com.datas.dbgenerate;

import com.datas.utils.UserLoginRecordUtils;

import java.text.ParseException;

import java.util.concurrent.ExecutorService;

import java.util.concurrent.Executors;

/**
 * Generates the login log table produced by user logins.
 */
public class UserLoginRecordGenerate {

    public static void main(String[] args) throws ParseException {
        // new UserLoginRecordUtils2().generateUserLogin();
        ExecutorService es = Executors.newFixedThreadPool(8);
        es.submit(new UserLoginRecordUtils());
        es.submit(new UserLoginRecordUtils());
        es.submit(new UserLoginRecordUtils());
        es.submit(new UserLoginRecordUtils());
        // Stop accepting new tasks so the JVM can exit once the submitted tasks finish
        es.shutdown();
    }
}
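The main method above hands four UserLoginRecordUtils tasks to a fixed thread pool. The worker threads of such a pool keep the JVM alive until the pool is shut down, so a generator that should exit when its work is done needs to shut the pool down and, if it wants to report completion, wait for termination. The sketch below shows that lifecycle with a placeholder task. LoginWorkerPoolSketch and runWorkers are hypothetical names, and the counter merely stands in for the real login record generation.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

// Sketch only: the Runnable passed in stands in for UserLoginRecordUtils, whose code is not shown here.
class LoginWorkerPoolSketch {

    // Runs `workers` copies of the task in parallel and waits up to one minute for all of them.
    static boolean runWorkers(int workers, Runnable task) throws InterruptedException {
        ExecutorService pool = Executors.newFixedThreadPool(workers);
        for (int i = 0; i < workers; i++) {
            pool.submit(task);
        }
        pool.shutdown(); // no new tasks are accepted; tasks already submitted still run
        return pool.awaitTermination(1, TimeUnit.MINUTES);
    }

    public static void main(String[] args) throws InterruptedException {
        AtomicInteger generated = new AtomicInteger();
        boolean finished = runWorkers(4, generated::incrementAndGet);
        System.out.println("finished=" + finished + ", runs=" + generated.get());
    }
}
```

Sizing the pool to the number of submitted tasks, as done here, avoids allocating threads that never receive work.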

12 Generate the order and order-product table data

package com.datas.dbgenerate;

import com.datas.beans.dbbeans.GameOrder;

import com.datas.beans.dbbeans.GameOrderProduct;

import com.datas.beans.dbbeans.GameProduct;

import com.datas.beans.dbbeans.UserBase;

import com.datas.utils.DBOperateUtils;

import com.datas.utils.DateUtil;

import com.datas.utils.RandomValue;

import java.util.*;

/**
 * Generates the order table and the order-product association table.
 */
public class GameOrderAndProductGenerate {

    public static void main(String[] args) {
        for(int i =0;i<1000;i++){
            Map<GameOrder, List<GameOrderProduct>> gameOrderAndProduct = getGameOrderAndProduct();
            Set<GameOrder> gameOrders = gameOrderAndProduct.keySet();
            for (GameOrder gameOrder : gameOrders) {
                List<GameOrderProduct> gameOrderProductList = gameOrderAndProduct.get(gameOrder);
                DBOperateUtils.insertGameOrder(gameOrder);
                DBOperateUtils.insertGameOrderProductList(gameOrderProductList);
            }
        }
    }

    /**
     * Generates one order together with the list of products that belong to it.
     *
     * @return a map from the order to its products
     */
    public static Map<GameOrder, List<GameOrderProduct>> getGameOrderAndProduct(){
        Map<GameOrder, List<GameOrderProduct>> gameOrderAndProductMap = new HashMap<>();
        List<GameProduct> gameProductList = DBOperateUtils.GetAllGameProduct();
        List<UserBase> allUserBase = DBOperateUtils.getAllUserBase();
        // Pick a random buyer
        UserBase buyUser = allUserBase.get(RandomValue.getRandomInt(0, allUserBase.size() - 1));
        List<GameOrderProduct> gameOrderProductList = new ArrayList<>();
        GameOrder gameOrder = new GameOrder();
        String orderNum = RandomValue.getOrderNum(Long.valueOf(buyUser.getUser_id()));
        gameOrder.setOrder_num(orderNum);
        gameOrder.setBuyer_user_id(buyUser.getUser_id());
        gameOrder.setOrder_status(RandomValue.getRandomInt(0,4)+"");
        gameOrder.setPay_type(RandomValue.getRandomInt(0,2)+"");
        double totalPrice = 0.0;
        // Each order contains a random number of products
        int randomInt = RandomValue.getRandomInt(1, 4);
        for(int i=0;i< randomInt;i++) {
            GameProduct gameProduct = gameProductList.get(RandomValue.getRandomInt(0, gameProductList.size() - 1));
            GameOrderProduct gameOrderProduct = new GameOrderProduct();
            gameOrderProduct.setGame_order_id(orderNum);
            gameOrderProduct.setProduct_id(gameProduct.getGame_id());
            gameOrderProduct.setProduct_num(RandomValue.getRandomInt(1,3));
            gameOrderProduct.setProduct_name(gameProduct.getGame_title());
            gameOrderProduct.setProduct_url(gameProduct.getGame_detail_url());
            gameOrderProduct.setSeller_id(gameProduct.getGame_user()+"");
            gameOrderProduct.setProduct_price(gameProduct.getGame_price());
            totalPrice = totalPrice +gameProduct.getGame_price();
            gameOrderProductList.add(gameOrderProduct);
        }
        Date getOrderTime = DateUtil.getMaxProductDate(gameOrderProductList);
        gameOrder.setPay_money(totalPrice);
        Date orderTime = DateUtil.generatePutOffTime(getOrderTime);
        gameOrder.setOrder_time(orderTime);
        gameOrder.setPay_time(DateUtil.generatePayTime(orderTime));
        gameOrder.setTax_percent("0.1");
        gameOrder.setOrder_deleted("1");
        gameOrderAndProductMap.put(gameOrder,gameOrderProductList);
        return gameOrderAndProductMap;
    }
}
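getGameOrderAndProduct adds each product's unit price to pay_money and does not multiply by product_num. If the order total is meant to reflect quantities as well (an assumption; the source only sums unit prices), the calculation could look like the sketch below, which also switches to BigDecimal to avoid floating-point rounding in monetary sums. OrderTotalSketch and OrderLine are hypothetical names used only for this illustration.

```java
import java.math.BigDecimal;
import java.util.Arrays;
import java.util.List;

// Hypothetical variation on the pay_money calculation; not the project's actual code.
class OrderTotalSketch {

    // Minimal stand-in for GameOrderProduct: unit price and quantity only.
    static final class OrderLine {
        final BigDecimal unitPrice;
        final int quantity;

        OrderLine(BigDecimal unitPrice, int quantity) {
            this.unitPrice = unitPrice;
            this.quantity = quantity;
        }
    }

    // Sums unitPrice * quantity over all lines of the order.
    static BigDecimal total(List<OrderLine> lines) {
        BigDecimal sum = BigDecimal.ZERO;
        for (OrderLine line : lines) {
            sum = sum.add(line.unitPrice.multiply(BigDecimal.valueOf(line.quantity)));
        }
        return sum;
    }

    public static void main(String[] args) {
        List<OrderLine> lines = Arrays.asList(
                new OrderLine(new BigDecimal("19.90"), 2),
                new OrderLine(new BigDecimal("5.00"), 1));
        System.out.println(total(lines)); // prints 44.80
    }
}
```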


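4. User tracking data generation module

4.1 Basic format of tracking data

Common fields: fields that essentially every Android phone reports

Business fields: fields reported by tracking points, each tied to a specific business type

The following example shows an upload that carries business fields.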

{
    "log_type":"app",
    "event_array":[
        {
            "event_name":"search",
            "event_json":{
                "search_code":"1",
                "search_time":"1615800500564"
            },
            "event_time":1615800496748
        },
        {
            "event_name":"subscribe",
            "event_json":{
                "sub_user_name":"41lpzLV325",
                "sub_type":"1",
                "sub_goods_comment":"33",
                "sub_goods_like":"80",
                "sub_user_discuss":"54",
                "sub_user_id":"505"
            },
            "event_time":1615800488946
        },
        {
            "event_name":"cycleAdd",
            "event_json":{
                "cycle_id":"41",
                "cycleOperateTime":1615800511002,
                "cycle_operate_type":"1"
            },
            "event_time":1615800509870
        },
        {
            "event_name":"sendVideo",
            "event_json":{
                "video_time":"1615800505380",
                "video_name":"《穿行三国》郡城争夺-活动预览",
                "video_user_id":"505",
                "video_long":106,
                "video_success_time":"1615800519237",
                "video_type":"13"
            },
            "event_time":1615800508498
        },
        {
            "event_name":"articleLike",
            "event_json":{
                "target_id":285831,
                "type":"1",
                "add_time":"1615800511556",
                "userid":505
            },
            "event_time":1615800485912
        },
        {
            "event_name":"articleComment",
            "event_json":{
                "p_comment_id":916,
                "commentType":"1",
                "commentId":"619779",
                "praise_count":53,
                "commentContent":"妈程汇岔饵裸看驳孤绞别织伎柔痛媒合叶昼悟兄",
                "reply_count":181
            },
            "event_time":1615800511794
        },
        {
            "event_name":"articleShare",
            "event_json":{
                "shareCycle":"1",
                "shareTime":"1615800510091",
                "shareArticleId":"3457",
                "shareType":"2",
                "shareUserId":505
            },
            "event_time":1615800505943
        }
    ],
    "common_base":{
        "channel_num":"0",
        "lat":"-6.0",
        "lng":"-93.0",
        "log_time":"1615803420052",
        "mobile_brand":"htc",
        "mobile_type":"htc10",
        "net_type":"4G",
        "operate_version":"android3.8",
        "screen_size":"640*960",
        "sdk_version":"sdk1.6.6",
        "sys_lag":"简体中文",
        "user_id":"505",
        "user_ip":"36.58.146.211",
        "version_name":"第一个版本2017-02-08",
        "version_num":"1"
    }
}

Sample log (server timestamp | log): each log line consists of the server timestamp, a | separator, and then the same JSON object as in the example above. For instance, a line starts with 1615797260652| and is followed by that JSON.
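Each log line therefore pairs a server timestamp with the JSON payload, separated by a | character. Before the JSON can be handed to a parser such as fastjson, the two parts have to be split apart. The following is a minimal sketch of that step using only the standard library. The class name LogLineSketch and its fields are illustrative and do not appear in the project.

```java
// Sketch of splitting one "serverTimestamp|json" log line; class and field names are illustrative.
class LogLineSketch {

    final long serverTime;
    final String json;

    LogLineSketch(long serverTime, String json) {
        this.serverTime = serverTime;
        this.json = json;
    }

    // Splits at the first '|' only, because the JSON payload may itself contain '|' characters.
    static LogLineSketch parse(String line) {
        int separator = line.indexOf('|');
        if (separator < 0) {
            throw new IllegalArgumentException("missing '|' separator");
        }
        long serverTime = Long.parseLong(line.substring(0, separator).trim());
        String json = line.substring(separator + 1).trim();
        return new LogLineSketch(serverTime, json);
    }

    public static void main(String[] args) {
        LogLineSketch parsed = parse("1615797260652| {\"log_type\":\"app\"}");
        System.out.println(parsed.serverTime + " -> " + parsed.json);
    }
}
```

Using String.split("\\|") instead would break payloads that contain the separator, for example inside a comment text, which is why the sketch looks only for the first occurrence.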

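The detailed field formats of each tracking log are listed below.

4.2 Event log data

4.2.1 Swipe operation log

Field name    Meaning

move_direct    Swipe direction on the client: 1 = swipe down, 2 = swipe up

start_offset    Starting offset of the swipe

end_offset    Ending offset of the swipe

move_menu    Menu screen where the swipe happened: 0 = Home, 1 = Circles, 2 = Novelty

4.2.2 Click operation log

Field name    Meaning

click_type    Level of the clicked category: 1 = first level, 2 = second level, 3 = third level, 4 = fourth level

type_name    Name of the first-, second-, third-, or fourth-level category

click_request_url    URL requested after the click; first-level categories have no request URL, second-level categories do

click_time    Click time

click_entry    Page entry source: 1 = entered by browsing the home page, 2 = entered from a push message, 3 = entered from the Novelty screen, 4 = entered from a home page recommendation

4.2.3 Search operation log

Field name    Meaning

search_key    Search keyword

search_time    Search start time

search_code    Search result: 0 = search failed, 1 = search succeeded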

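4.2.4 Subscription operation log

Field name    Meaning

sub_type    Subscription type: 0 = unsubscribe, 1 = subscribe to a user

sub_user_id    ID of the user being subscribed to or unsubscribed from

sub_user_name    Name of the subscribed user

sub_user_discuss    Number of users discussing in the subscribed channel

sub_goods_comment    Number of comments on the subscribed videos

sub_goods_like    Number of likes on the subscribed products

4.2.5 Join-circle operation log

Field name    Meaning

cycle_operate_type    Circle operation type: 0 = cancel joining the circle, 1 = join the circle

cycle_id    Circle ID

cycleOperateTime    Time of joining the circle

4.2.6 Video posting operation log

Field name    Meaning

video_id    Video ID

video_name    Video name

video_long    Video duration

video_time    Time the video started sending

video_type    Video sending status: 0 = log record for the start of sending, 1 = log record for a successful send

video_success_time    Time the successful send was recorded

video_user_id    ID of the user associated with the video

4.2.7 Post publishing operation log

Field name    Meaning

article_id    Post ID

article_type    Post operation type: 0 = posting, 1 = posted successfully, 2 = posting failed

article_cycle    Circle the post belongs to

article_pic_num    Number of images in the post

article_time    Post operation time

article_user_id    ID of the user associated with the post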

4.2.8 Like operation log

Field name    Meaning

userid    ID of the user who gave the like

target_id    ID of the liked target

type    Like type

add_time    Time the like was added

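4.2.9 Comment operation log

| Field | Meaning |
| --- | --- |
| commentType | 1 = article comment, 2 = video comment |
| commentId | ID of the video or article being commented on |
| p_comment_id | Parent comment ID (0 means a top-level comment; any other value means a reply) |
| commentContent | Comment text |
| commentTime | Comment time |
| praise_count | Number of likes |
| reply_count | Number of replies |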
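The `p_comment_id` rule above (0 for a top-level comment, any other value for a reply) can be sketched as follows. `CommentKind` and `classify` are hypothetical names, and taking the ID as a string is an assumption based on the sample log, where most IDs are quoted.

```java
class CommentKind {
    enum Kind { TOP_LEVEL, REPLY }

    /** A p_comment_id of 0 marks a top-level comment; any other value is the parent comment's ID. */
    static Kind classify(String pCommentId) {
        long parent = Long.parseLong(pCommentId.trim());
        return parent == 0 ? Kind.TOP_LEVEL : Kind.REPLY;
    }

    public static void main(String[] args) {
        System.out.println(classify("0"));      // TOP_LEVEL
        System.out.println(classify("619779")); // REPLY
    }
}
```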

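4.2.10 Share operation log

| Field | Meaning |
| --- | --- |
| shareUserId | ID of the user who shared |
| shareType | Share type: 1 = article share, 2 = video share |
| shareArticleId | ID of the shared video or article |
| shareTime | Share time |
| shareCycle | Share channel: 0 = WeChat friend, 1 = WeChat Moments, 2 = QQ friend, 3 = Weibo |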

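4.3 App startup log

4.3.1 App startup log

| Field | Meaning |
| --- | --- |
| entry | Entry point: push = 1, widget = 2, icon = 3, notification = 4, lockscreen_widget = 5 |
| open_ad_type | Splash ad type: native splash ad = 1, interstitial splash ad = 2 |
| action | Status: success = 1, failure = 2 |
| loading_time | Load duration, measured from the start of the pull-down until the API returns data (report 0 when loading starts; report the duration only once loading has succeeded or failed) |
| detail | Failure code (report empty if there is none) |
| extend1 | Failure message (report empty if there is none) |
| en | Marker for the startup log type |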
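The numeric `entry` codes in the table above can be decoded with a small enum. This is a sketch only: `StartEntry` and `fromCode` are hypothetical names and do not come from the project's `AppStart` bean.

```java
import java.util.Optional;

enum StartEntry {
    PUSH(1), WIDGET(2), ICON(3), NOTIFICATION(4), LOCKSCREEN_WIDGET(5);

    final int code;

    StartEntry(int code) {
        this.code = code;
    }

    /** Returns the entry for a logged code, or empty when the code is not in the table. */
    static Optional<StartEntry> fromCode(int code) {
        for (StartEntry e : values()) {
            if (e.code == code) {
                return Optional.of(e);
            }
        }
        return Optional.empty();
    }

    public static void main(String[] args) {
        System.out.println(fromCode(3)); // Optional[ICON]
        System.out.println(fromCode(9)); // Optional.empty
    }
}
```

Returning `Optional` rather than throwing keeps one unexpected code in a log line from aborting a whole batch.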

4.4 Generating user log data

1. Copy the base classes used for generating user log data.

2. Define the generator for user operation logs.

    package com.datas.loggenerate;

    import com.alibaba.fastjson.JSON;
    import com.alibaba.fastjson.JSONArray;
    import com.alibaba.fastjson.JSONObject;
    import com.datas.beans.dbbeans.*;
    import com.datas.beans.logbeans.AppStart;
    import com.datas.utils.DBOperateUtils;
    import com.datas.utils.GenerateLogUtils;
    import com.datas.utils.RandomValue;
    import org.slf4j.Logger;
    import org.slf4j.LoggerFactory;

    import java.io.IOException;
    import java.text.ParseException;
    import java.util.List;
    import java.util.Random;

    /**
     * Main program that simulates user operation logs.
     * Operation logs are generated only for users that already exist.
     */
    public class OperateGenerate {

        private static final Logger logger = LoggerFactory.getLogger(OperateGenerate.class);
        private static final Random rand = new Random();

        public static void main(String[] args) throws IOException, ParseException {
            String[] myArray = {
                "2021-03-15", "2021-03-16", "2021-03-17", "2021-03-18", "2021-03-19", "2021-03-20",
                "2021-03-21", "2021-03-22", "2021-03-23", "2021-03-24", "2021-03-25", "2021-03-26",
                "2021-03-27", "2021-03-28", "2021-03-29", "2021-03-30", "2021-03-31"};

            // These three lists are only passed through to GenerateLogUtils, so they stay raw Lists.
            List videoDetailList = DBOperateUtils.getAllVideoDetail();
            List gameProductList = DBOperateUtils.GetAllGameProduct();
            List commonBaseList = DBOperateUtils.getTypeNameBase();

            for (String dateArray : myArray) {
                List<UserBase> userBaseList = DBOperateUtils.getUserBaseByDate(dateArray);
                if (userBaseList.isEmpty()) {
                    continue; // no users for this date, so there is nothing to simulate
                }

                // Pick between 30% and 70% of the day's users to perform operations.
                int lowerNum = (int) (userBaseList.size() * 0.3);
                int higherNum = (int) (userBaseList.size() * 0.7);
                int finalNumUser = RandomValue.getRandomInt(lowerNum, higherNum);

                for (int i = 0; i < finalNumUser; i++) {
                    // Users are drawn independently, so the same user may be picked more than once.
                    UserBase userBase = userBaseList.get(RandomValue.getRandomInt(0, userBaseList.size() - 1));
                    List<VideoDetail> videoDetailListByUserId = DBOperateUtils.getVideoListByUserId(userBase.getUser_id());
                    List<ArticleDetail> articleDetailList = DBOperateUtils.getArticleDetailListByUserId(userBase.getUser_id());

                    // Each selected user gets 1 to 5 app-start logs; every start is followed by
                    // between startTimes * 10 and startTimes * 40 operation logs.
                    int startTimes = RandomValue.getRandomInt(1, 5);
                    for (int j = 0; j < startTimes; j++) {
                        AppStart appStart = GenerateLogUtils.getAppStart(userBase, dateArray);
                        logger.info(JSON.toJSONString(appStart));

                        int operateTimes = RandomValue.getRandomInt(startTimes * 10, startTimes * 40);
                        // Operation types: 1 swipe, 2 click, 3 search, 4 subscribe, 5 join circle,
                        // 6 post video, 7 post article, 9 like, 10 comment, 11 share.
                        // 0 (login) is covered by the start log; 8 (purchase/payment) is not generated here.
                        String startTime = appStart.getLog_time(); // app start time
                        for (int p = 0; p < operateTimes; p++) {
                            JSONObject json = new JSONObject();
                            json.put("log_type", "app");
                            json.put("common_base", GenerateLogUtils.getCommonBase(userBase, dateArray));
                            JSONArray eventsArray = new JSONArray();

                            // Each event type is included independently with probability 1/2.
                            if (rand.nextBoolean()) { // 1 swipe
                                eventsArray.add(GenerateLogUtils.getMoveDetail(userBase, dateArray, startTime));
                            }
                            if (rand.nextBoolean()) { // 2 click
                                eventsArray.add(GenerateLogUtils.getClickOperate(startTime, videoDetailList, gameProductList, commonBaseList));
                            }
                            if (rand.nextBoolean()) { // 3 search
                                eventsArray.add(GenerateLogUtils.getSearchDetail(startTime, commonBaseList));
                            }
                            if (rand.nextBoolean()) { // 4 subscribe
                                eventsArray.add(GenerateLogUtils.getSubscribeDetail(userBase, startTime));
                            }
                            if (rand.nextBoolean()) { // 5 join circle
                                eventsArray.add(GenerateLogUtils.getCycleAddDetail(userBase, startTime));
                            }
                            if (rand.nextBoolean() && !videoDetailListByUserId.isEmpty()) { // 6 post video
                                VideoDetail videoDetail = videoDetailListByUserId.get(
                                        RandomValue.getRandomInt(0, videoDetailListByUserId.size() - 1));
                                eventsArray.add(GenerateLogUtils.getSendVideo(userBase, startTime, videoDetail));
                            }
                            if (rand.nextBoolean() && !articleDetailList.isEmpty()) { // 7 post article
                                ArticleDetail articleDetail = articleDetailList.get(
                                        RandomValue.getRandomInt(0, articleDetailList.size() - 1));
                                eventsArray.add(GenerateLogUtils.getSendArticle(userBase, startTime, articleDetail));
                            }
                            if (rand.nextBoolean()) { // 9 like
                                eventsArray.add(GenerateLogUtils.getArticleLike(userBase, startTime));
                            }
                            if (rand.nextBoolean()) { // 10 comment
                                eventsArray.add(GenerateLogUtils.getArticleComment(userBase, startTime));
                            }
                            if (rand.nextBoolean()) { // 11 share
                                eventsArray.add(GenerateLogUtils.getArticleShare(userBase, startTime));
                            }

                            // event_array is set only when at least one event was generated.
                            if (!eventsArray.isEmpty()) {
                                json.put("event_array", eventsArray);
                            }

                            // Prefix each record with the current write time: <millis>|<json>
                            String line = System.currentTimeMillis() + "|" + json.toJSONString();
                            System.out.println(line); // print to the console
                            logger.info(line);
                        }
                    }
                }
            }
        }
    }
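The generator prints each operation record as the current time in milliseconds, a `|` separator, and the JSON payload. A downstream consumer has to split that prefix off before parsing the JSON. The following is a minimal sketch of that step, assuming the line is exactly what `System.out.println` emits (a logback pattern may add its own prefix to the logged copy). `LogLine` and its members are hypothetical names. App-start records are logged as bare JSON without the prefix, so this applies to operation records only.

```java
class LogLine {
    final long writeMillis; // time the generator wrote the record
    final String json;      // raw JSON payload, left unparsed here

    LogLine(long writeMillis, String json) {
        this.writeMillis = writeMillis;
        this.json = json;
    }

    /** Splits at the first '|' only, because the JSON payload may itself contain '|'. */
    static LogLine parse(String line) {
        int sep = line.indexOf('|');
        if (sep < 0) {
            throw new IllegalArgumentException("missing '|' separator: " + line);
        }
        return new LogLine(Long.parseLong(line.substring(0, sep)), line.substring(sep + 1));
    }

    public static void main(String[] args) {
        LogLine record = parse("1615803420052|{\"log_type\":\"app\"}");
        System.out.println(record.writeMillis);
        System.out.println(record.json);
    }
}
```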

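5. Packaging and running the log generation code

Modify the properties in the logback.xml configuration file.

Modify pom.xml to specify the main class path.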

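    <plugin>
        <artifactId>maven-assembly-plugin</artifactId>
        <configuration>
            <descriptorRefs>
                <descriptorRef>jar-with-dependencies</descriptorRef>
            </descriptorRefs>
            <archive>
                <manifest>
                    <mainClass>com.datas.loggenerate.OperateGenerate</mainClass>
                </manifest>
            </archive>
        </configuration>
        <executions>
            <execution>
                <id>make-assembly</id>
                <phase>package</phase>
                <goals>
                    <goal>single</goal>
                </goals>
            </execution>
        </executions>
    </plugin>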

Build the jar by running the Maven package phase.

6. Uploading the database file and the jar file

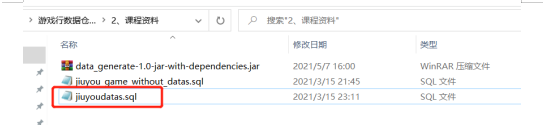

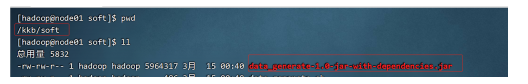

1. Upload the database file to node03

Upload the database file jiuyoudatas.sql to the /kkb/soft directory on node03, then open the mysql client and execute the SQL file.

[hadoop@node03 ~]$ cd /kkb/soft/

[hadoop@node03 soft]$ mysql -uroot -p

mysql> source /kkb/soft/jiuyoudatas.sql

2. Upload the jar file to node01