I. Laying the Groundwork

1. Fetching HTML with Python

- The requests library

First, install requests with pip from the command line:

$ pip3 install requests

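(Depending on how Python was installed, you may need to use pip instead of pip3.)

A problem you may run into:

pip is configured with locations that require TLS/SSL, however the ssl module in Python is not available.

The full error output looks like this: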

pip is configured with locations that require TLS/SSL, however the ssl

module in Python is not available. Collecting scrapy Retrying

(Retry(total=4, connect=None, read=None, redirect=None, status=None))

after connection broken by ‘SSLError(“Can’t connect to HTTPS URL

because the SSL module is not available.”)’: /simple/scrapy/ Retrying

(Retry(total=3, connect=None, read=None, redirect=None, status=None))

after connection broken by ‘SSLError(“Can’t connect to HTTPS URL

because the SSL module is not available.”)’: /simple/scrapy/ Retrying

(Retry(total=2, connect=None, read=None, redirect=None, status=None))

after connection broken by ‘SSLError(“Can’t connect to HTTPS URL

because the SSL module is not available.”)’: /simple/scrapy/ Retrying

(Retry(total=1, connect=None, read=None, redirect=None, status=None))

after connection broken by ‘SSLError(“Can’t connect to HTTPS URL

because the SSL module is not available.”)’: /simple/scrapy/ Retrying

(Retry(total=0, connect=None, read=None, redirect=None, status=None))

after connection broken by ‘SSLError(“Can’t connect to HTTPS URL

because the SSL module is not available.”)’: /simple/scrapy/ Could not

fetch URL https://pypi.org/simple/scrapy/: There was a problem

confirming the ssl certificate: HTTPSConnectionPool(host=‘pypi.org’,

port=443): Max retries exceeded with url: /simple/scrapy/ (Caused by

SSLError(“Can’t connect to HTTPS URL because the SSL module is not

available.”)) - skipping Could not find a version that satisfies the

requirement scrapy (from versions: ) No matching distribution found

for scrapy
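Before changing any settings, it can help to confirm that the ssl module really is the missing piece. The following is a minimal sketch; the helper name ssl_available is made up for illustration and is not part of pip or Python.

```python
import importlib


def ssl_available():
    """Return True if Python's ssl module can be imported."""
    try:
        importlib.import_module("ssl")
    except ImportError:
        # On a broken install the import fails, typically because the
        # OpenSSL libraries cannot be found.
        return False
    return True


print("ssl module available:", ssl_available())
```

If this prints False, pip cannot reach https://pypi.org, which matches the error shown above.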

解决办法: 在系统环境变量paths中添加如下(针对win10):

D:\Anaconda3;

D:\Anaconda3\Scripts;

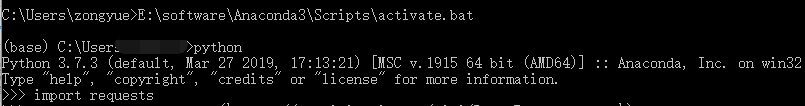

D:\Anaconda3\Library\bin - 打开交互式python解释器测试一些请求代码

(base) C:\Users>python

>>> import requests

>>> response = requests.get('the URL of the web page you want to visit')

>>> print(response.text)

>>> print(type(response.text))

A problem you may run into:

Warning: This Python interpreter is in a conda environment, but the environment has not been activated. Libraries may fail to load. To activate this environment please see https://conda.io/activation

Solution:

C:\Users>E:\software\Anaconda3\Scripts\activate.bat

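2. Parsing HTML (with Beautiful Soup)

- Install Beautiful Soup

$ pip3 install beautifulsoup4

- Parse HTML with Beautiful Soup

    from bs4 import BeautifulSoup  # import the BeautifulSoup class
    soup = BeautifulSoup(html, 'html.parser')  # build the soup from an HTML string
    soup.title  # the <title> tag
    soup.div  # the first <div> tag
    soup.div.a  # the first <a> tag inside the first <div>

A complete example: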

(base) C:\Users>python

Python 3.7.3 (default, Mar 27 2019, 17:13:21) [MSC v.1915 64 bit (AMD64)] :: Anaconda, Inc. on win32

Type "help", "copyright", "credits" or "license" for more information.

>>> from bs4 import BeautifulSoup

>>> import requests

>>> response = requests.get('https://classroom.udacity.com/courses/ud1110/lessons/1be3e033-6aae-4e6f-8806-bca80a652222/concepts/a99c5929-38de-43e8-8072-556f776677e8')

>>> html = response.text

>>> print(html)

<!DOCTYPE html><html><head><meta http-equiv="Content-type" content="text/html; charset=utf-8"><meta name="viewport" content="width=device-width,initial-scale=1,maximum-scale=1,user-scalable=no"><title>Udacity</title><link rel="stylesheet" href="/css/fonts.min.css"><link rel="stylesheet" href="https://cdnjs.cloudflare.com/ajax/libs/KaTeX/0.8.3/katex.min.css" crossorigin="anonymous"><meta name="Baiduspider" content="nofollow"><meta name="Baiduspider" content="noarchive"><link rel="apple-touch-icon" href="/touch-icon.png"><link rel="manifest" href="/manifest.json"><script>window.NREUM||(NREUM={}),__nr_require=function(t,e,n){function r(n){if(!e[n]){var o=e[n]={exports:{}};t[n][0].call(o.exports,function(e){var o=t[n][1][e];return r(o||e)},o,o.exports)}return e[n].exports}if("function"==typeof __nr_require)return __nr_require;for(var o=0;o<n.length;o++)r(n[o]);return r}({1:[function(t,e,n){function r(t){try{s.console&&console.log(t)}catch(e){}}var o,i=t("ee"),a=t(21),s={};try{o=localStorage.getItem("__nr_flags").split(","),console&&"function"==typeof console.log&&(s.console=!0,o.indexOf("dev")!==-1&&(s.dev=!0),o.indexOf("nr_dev")!==-1&&(s.nrDev=!0))}catch(c){}s.nrDev&&i.on("internal-error",function(t){r(t.stack)}),s.dev&&i.on("fn-err",function(t,e,n){r(n.stack)}),s.dev&&(r("NR AGENT IN DEVELOPMENT MODE"),r("flags: "+a(s,function(t,e){return t}).join(", ")))},{}],2:[function(t,e,n){function r(t,e,n,r,s){try{l?l-=1:o(s||new UncaughtException(t,e,n),!0)}catch(f){try{i("ierr",[f,c.now(),!0])}catch(d){}}return"function"==typeof u&&u.apply(this,a(arguments))}function UncaughtException(t,e,n){this.message=t||"Uncaught error with no additional information",this.sourceURL=e,this.line=n}function o(t,e){var n=e?null:c.now();i("err",[t,n])}var i=t("handle"),a=t(22),s=t("ee"),c=t("loader"),f=t("gos"),u=window.onerror,d=!1,p="nr@seenError",l=0;c.features.err=!0,t(1),window.onerror=r;try{throw new Error}catch(h){"stack"in h&&(t(13),t(12),"addEventListener"in 
window&&t(6),c.xhrWrappable&&t(14),d=!0)}s.on("fn-start",function(t,e,n){d&&(l+=1)}),s.on("fn-err",function(t,e,n){d&&!n[p]&&(f(n,p,function(){return!0}),this.thrown=!0,o(n))}),s.on("fn-end",function(){d&&!this.thrown&&l>0&&(l-=1)}),s.on("internal-error",function(t){i("ierr",[t,c.now(),!0])})},{}],3:[function(t,e,n){t("loader").features.ins=!0},{}],4:[function(t,e,n){function r(){M++,N=y.hash,this[u]=g.now()}function o(){M--,y.hash!==N&&i(0,!0);var t=g.now();this[h]=~~this[h]+t-this[u],this[d]=t}function i(t,e){E.emit("newURL",[""+y,e])}function a(t,e){t.on(e,function(){this[e]=g.now()})}var s="-start",c="-end",f="-body",u="fn"+s,d="fn"+c,p="cb"+s,l="cb"+c,h="jsTime",m="fetch",v="addEventListener",w=window,y=w.location,g=t("loader");if(w[v]&&g.xhrWrappable){var b=t(10),x=t(11),E=t(8),O=t(6),P=t(13),R=t(7),T=t(14),L=t(9),j=t("ee"),S=j.get("tracer");t(15),g.features.spa=!0;var N,M=0;j.on(u,r),j.on(p,r),j.on(d,o),j.on(l,o),j.buffer([u,d,"xhr-done","xhr-resolved"]),O.buffer([u]),P.buffer(["setTimeout"+c,"clearTimeout"+s,u]),T.buffer([u,"new-xhr","send-xhr"+s]),R.buffer([m+s,m+"-done",m+f+s,m+f+c]),E.buffer(["newURL"]),b.buffer([u]),x.buffer(["propagate",p,l,"executor-err","resolve"+s]),S.buffer([u,"no-"+u]),L.buffer(["new-jsonp","cb-start","jsonp-error","jsonp-end"]),a(T,"send-xhr"+s),a(j,"xhr-resolved"),a(j,"xhr-done"),a(R,m+s),a(R,m+"-done"),a(L,"new-jsonp"),a(L,"jsonp-end"),a(L,"cb-start"),E.on("pushState-end",i),E.on("replaceState-end",i),w[v]("hashchange",i,!0),w[v]("load",i,!0),w[v]("popstate",function(){i(0,M>1)},!0)}},{}],5:[function(t,e,n){function r(t){}if(window.performance&&window.performance.timing&&window.performance.getEntriesByType){var o=t("ee"),i=t("handle"),a=t(13),s=t(12),c="learResourceTimings",f="addEventListener",u="resourcetimingbufferfull",d="bstResource",p="resource",l="-start",h="-end",m="fn"+l,v="fn"+h,w="bstTimer",y="pushState",g=t("loader");g.features.stn=!0,t(8);var b=NREUM.o.EV;o.on(m,function(t,e){var n=t[0];n instanceof 
b&&(this.bstStart=g.now())}),o.on(v,function(t,e){var n=t[0];n instanceof b&&i("bst",[n,e,this.bstStart,g.now()])}),a.on(m,function(t,e,n){this.bstStart=g.now(),this.bstType=n}),a.on(v,function(t,e){i(w,[e,this.bstStart,g.now(),this.bstType])}),s.on(m,function(){this.bstStart=g.now()}),s.on(v,function(t,e){i(w,[e,this.bstStart,g.now(),"requestAnimationFrame"])}),o.on(y+l,function(t){this.time=g.now(),this.startPath=location.pathname+location.hash}),o.on(y+h,function(t){i("bstHist",[location.pathname+location.hash,this.startPath,this.time])}),f in window.performance&&(window.performance["c"+c]?window.performance[f](u,function(t){i(d,[window.performance.getEntriesByType(p)]),window.performance["c"+c]()},!1):window.performance[f]("webkit"+u,function(t){i(d,[window.performance.getEntriesByType(p)]),window.performance["webkitC"+c]()},!1)),document[f]("scroll",r,{passive:!0}),document[f]("keypress",r,!1),document[f]("click",r,!1)}},{}],6:[function(t,e,n){function r(t){for(var e=t;e&&!e.hasOwnProperty(u);)e=Object.getPrototypeOf(e);e&&o(e)}function o(t){s.inPlace(t,[u,d],"-",i)}function i(t,e){return t[1]}var a=t("ee").get("events"),s=t(24)(a,!0),c=t("gos"),f=XMLHttpRequest,u="addEventListener",d="removeEventListener";e.exports=a,"getPrototypeOf"in Object?(r(document),r(window),r(f.prototype)):f.prototype.hasOwnProperty(u)&&(o(window),o(f.prototype)),a.on(u+"-start",function(t,e){var n=t[1],r=c(n,"nr@wrapped",function(){function t(){if("function"==typeof n.handleEvent)return n.handleEvent.apply(n,arguments)}var e={object:t,"function":n}[typeof n];return e?s(e,"fn-",null,e.name||"anonymous"):n});this.wrapped=t[1]=r}),a.on(d+"-start",function(t){t[1]=this.wrapped||t[1]})},{}],7:[function(t,e,n){function r(t,e,n){var r=t[e];"function"==typeof r&&(t[e]=function(){var t=r.apply(this,arguments);return o.emit(n+"start",arguments,t),t.then(function(e){return o.emit(n+"end",[null,e],t),e},function(e){throw o.emit(n+"end",[e],t),e})})}var 
o=t("ee").get("fetch"),i=t(21);e.exports=o;var a=window,s="fetch-",c=s+"body-",f=["arrayBuffer","blob","json","text","formData"],u=a.Request,d=a.Response,p=a.fetch,l="prototype";u&&d&&p&&(i(f,function(t,e){r(u[l],e,c),r(d[l],e,c)}),r(a,"fetch",s),o.on(s+"end",function(t,e){var n=this;if(e){var r=e.headers.get("content-length");null!==r&&(n.rxSize=r),o.emit(s+"done",[null,e],n)}else o.emit(s+"done",[t],n)}))},{}],8:[function(t,e,n){var r=t("ee").get("history"),o=t(24)(r);e.exports=r,o.inPlace(window.history,["pushState","replaceState"],"-")},{}],9:[function(t,e,n){function r(t){function e(){c.emit("jsonp-end",[],p),t.removeEventListener("load",e,!1),t.removeEventListener("error",n,!1)}function n(){c.emit("jsonp-error",[],p),c.emit("jsonp-end",[],p),t.removeEventListener("load",e,!1),t.removeEventListener("error",n,!1)}var r=t&&"string"==typeof t.nodeName&&"script"===t.nodeName.toLowerCase();if(r){var o="function"==typeof t.addEventListener;if(o){var a=i(t.src);if(a){var u=s(a),d="function"==typeof u.parent[u.key];if(d){var p={};f.inPlace(u.parent,[u.key],"cb-",p),t.addEventListener("load",e,!1),t.addEventListener("error",n,!1),c.emit("new-jsonp",[t.src],p)}}}}}function o(){return"addEventListener"in window}function i(t){var e=t.match(u);return e?e[1]:null}function a(t,e){var n=t.match(p),r=n[1],o=n[3];return o?a(o,e[r]):e[r]}function s(t){var e=t.match(d);return e&&e.length>=3?{key:e[2],parent:a(e[1],window)}:{key:t,parent:window}}var c=t("ee").get("jsonp"),f=t(24)(c);if(e.exports=c,o()){var u=/[?&](?:callback|cb)=([^&#]+)/,d=/(.*)\.([^.]+)/,p=/^(\w+)(\.|$)(.*)$/,l=["appendChild","insertBefore","replaceChild"];f.inPlace(HTMLElement.prototype,l,"dom-"),f.inPlace(HTMLHeadElement.prototype,l,"dom-"),f.inPlace(HTMLBodyElement.prototype,l,"dom-"),c.on("dom-start",function(t){r(t[0])})}},{}],10:[function(t,e,n){var r=t("ee").get("mutation"),o=t(24)(r),i=NREUM.o.MO;e.exports=r,i&&(window.MutationObserver=function(t){return this instanceof i?new 
i(o(t,"fn-")):i.apply(this,arguments)},MutationObserver.prototype=i.prototype)},{}],11:[function(t,e,n){function r(t){var e=a.context(),n=s(t,"executor-",e),r=new f(n);return a.context(r).getCtx=function(){return e},a.emit("new-promise",[r,e],e),r}function o(t,e){return e}var i=t(24),a=t("ee").get("promise"),s=i(a),c=t(21),f=NREUM.o.PR;e.exports=a,f&&(window.Promise=r,["all","race"].forEach(function(t){var e=f[t];f[t]=function(n){function r(t){return function(){a.emit("propagate",[null,!o],i),o=o||!t}}var o=!1;c(n,function(e,n){Promise.resolve(n).then(r("all"===t),r(!1))});var i=e.apply(f,arguments),s=f.resolve(i);return s}}),["resolve","reject"].forEach(function(t){var e=f[t];f[t]=function(t){var n=e.apply(f,arguments);return t!==n&&a.emit("propagate",[t,!0],n),n}}),f.prototype["catch"]=function(t){return this.then(null,t)},f.prototype=Object.create(f.prototype,{constructor:{value:r}}),c(Object.getOwnPropertyNames(f),function(t,e){try{r[e]=f[e]}catch(n){}}),a.on("executor-start",function(t){t[0]=s(t[0],"resolve-",this),t[1]=s(t[1],"resolve-",this)}),a.on("executor-err",function(t,e,n){t[1](n)}),s.inPlace(f.prototype,["then"],"then-",o),a.on("then-start",function(t,e){this.promise=e,t[0]=s(t[0],"cb-",this),t[1]=s(t[1],"cb-",this)}),a.on("then-end",function(t,e,n){this.nextPromise=n;var r=this.promise;a.emit("propagate",[r,!0],n)}),a.on("cb-end",function(t,e,n){a.emit("propagate",[n,!0],this.nextPromise)}),a.on("propagate",function(t,e,n){this.getCtx&&!e||(this.getCtx=fu>

>>> soup = BeautifulSoup(html, 'html.parser')

>>> soup.title

<title>Udacity</title>

>>> soup.div

II. Designing the Program

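Goal: open any encyclopedia article and keep clicking links until we land on the "Philosophy" page.

The manual process we follow is:

- Open an article

- Find the first link in the article

- Click the link

- Repeat this process until we reach the "Philosophy" article or get caught in a cycle of articles.

The key word in this process is "repeat". The four-step process is essentially a loop! If our program mimics the manual process, it will consist of one big loop. That raises a question: should we use a for loop or a while loop?

A while loop is recommended, since we don't know in advance how many articles we will have to visit.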

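While the loop runs, we need to keep track of the articles we visit so that we can output the path the crawler found. I'll add that to our process:

- Open an article

- Find the first link in the article

- Click the link

- Record the link in the article_chain data structure

- Repeat this process until we reach the "Philosophy" article or get caught in a cycle of articles.

article_chain will be our program's output. It also tells us which article to explore next: in step 1 of the loop, the program can simply open the last article in the chain.

A list is recommended.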
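The design above can be sketched without touching the network. In this rough illustration, the dictionary FIRST_LINKS and the function follow_first_links are hypothetical stand-ins for real pages and real link extraction; the article names are made up.

```python
# Hypothetical link graph: each article maps to the first link found on it.
FIRST_LINKS = {
    "Coffee": "Drink",
    "Drink": "Liquid",
    "Liquid": "Matter",
    "Matter": "Philosophy",
}


def follow_first_links(start, target, first_links, max_steps=25):
    """Follow first links from start, recording every article visited."""
    article_chain = [start]  # a list keeps the visited articles in order
    # A while loop fits because the number of steps is unknown up front.
    while article_chain[-1] != target and len(article_chain) <= max_steps:
        next_article = first_links.get(article_chain[-1])
        if next_article is None or next_article in article_chain:
            break  # dead end, or we are about to enter a cycle
        article_chain.append(next_article)
    return article_chain


print(follow_first_links("Coffee", "Philosophy", FIRST_LINKS))
# → ['Coffee', 'Drink', 'Liquid', 'Matter', 'Philosophy']
```

Because the last element of the list is always the article to open next, the list serves as both the program's output and its working state.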

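When we find and click links by hand, our speed is naturally limited by how fast we can read and click. A Python program has no such limit: its loop runs as fast as pages can be downloaded. That saves time, but hammering a web server with rapid, repeated requests is rude. If we don't slow the loop down, the server may decide we are an attacker trying to overload it and block us. And the server may be right! If our code has a bug, we could end up in an infinite loop and flood the server with requests. To avoid this, we should pause for a few seconds on each pass through the main loop.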
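A plain time.sleep call inside the loop is enough for this project. As a variation, the sketch below enforces a minimum gap between requests regardless of how long each iteration takes. The class name Throttle is an assumption made for illustration, and the interval is shortened so the demo finishes quickly.

```python
import time


class Throttle:
    """Enforce a minimum delay between successive calls to wait()."""

    def __init__(self, min_interval):
        self.min_interval = min_interval  # seconds between requests
        self._last_call = None

    def wait(self):
        now = time.monotonic()
        if self._last_call is not None:
            remaining = self.min_interval - (now - self._last_call)
            if remaining > 0:
                time.sleep(remaining)  # pause only as long as needed
        self._last_call = time.monotonic()


throttle = Throttle(min_interval=0.2)  # a real crawler might use about 2 seconds
for page in ["page-1", "page-2", "page-3"]:
    throttle.wait()
    print("requesting", page)
```

The first call returns immediately; every later call sleeps only for whatever part of the interval has not already elapsed.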

III. Implementing the Program

1. The loop

The continue_crawl function decides whether the crawl should keep going and, when the program should stop requesting articles, reports why:

    def continue_crawl(search_history, target_url, max_steps=25):
        if search_history[-1] == target_url:
            print("We've found the target article!")
            return False
        elif len(search_history) > max_steps:
            print("The search has gone on suspiciously long, aborting search!")
            return False
        elif search_history[-1] in search_history[:-1]:
            print("We've arrived at an article we've already seen, aborting search!")
            return False
        else:
            return True
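To see how each branch behaves, the function can be called with small hand-made histories. In the sketch below the function is repeated so the snippet runs on its own; the URLs page_a and page_b are hypothetical and nothing is downloaded.

```python
def continue_crawl(search_history, target_url, max_steps=25):
    if search_history[-1] == target_url:
        print("We've found the target article!")
        return False
    elif len(search_history) > max_steps:
        print("The search has gone on suspiciously long, aborting search!")
        return False
    elif search_history[-1] in search_history[:-1]:
        print("We've arrived at an article we've already seen, aborting search!")
        return False
    else:
        return True


target = "https://en.wikipedia.org/wiki/Philosophy"
page_a = "https://en.wikipedia.org/wiki/A"  # hypothetical article URL
page_b = "https://en.wikipedia.org/wiki/B"  # hypothetical article URL

print(continue_crawl([page_a, page_b], target))               # → True
print(continue_crawl([page_a, target], target))               # → False (target found)
print(continue_crawl([page_a, page_b, page_a], target))       # → False (cycle)
print(continue_crawl([page_a, page_b], target, max_steps=1))  # → False (too long)
```

Note that the length check uses a strict comparison, so a history of exactly max_steps entries is still allowed to continue.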

The main loop, as a skeleton to fill in:

    def web_crawl():
        while continue_crawl(article_chain, target_url):
            # download html of last article in article_chain
            # find the first link in that html
            first_link = find_first_link(article_chain[-1])
            # add the first link to article chain
            article_chain.append(first_link)
            # delay for about two seconds
            # TODO: YOUR CODE HERE!

2. Finding the first link

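Attempt 1 (also picks up links from sidebars and similar elements):

soup.find(id='mw-content-text').find(class_="mw-parser-output").p.a.get('href')

# The second .find(class_="mw-parser-output") descends into the div element whose class is "mw-parser-output".

# Note that we must use the argument class_, because class is a reserved keyword in Python.

Attempt 2 (still picks up footnote links in the article body):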

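    content_div = soup.find(id="mw-content-text").find(class_="mw-parser-output")
    for element in content_div.find_all("p", recursive=False):
        if element.a:
            first_relative_link = element.a.get('href')
            break

The first line finds the div that contains the article body.

The next line loops over every p tag in the div, but only those that are direct children of the div. From the documentation we learn that "If you only want Beautiful Soup to consider direct children, you can pass in recursive=False".

The loop body checks whether the paragraph contains an a tag. If it does, it takes the URL from the link, stores it in first_relative_link, and ends the loop.

Note: I could also have written this using the children attribute, but the loop body would look different.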

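Attempt 3:

    content_div = soup.find(id="mw-content-text").find(class_="mw-parser-output")
    for element in content_div.find_all("p", recursive=False):
        if element.find("a", recursive=False):
            first_relative_link = element.find("a", recursive=False).get('href')
            break

This works because the "special links" (such as footnotes and pronunciation keys) all seem to be wrapped in additional div tags. Since these special links are not direct children of the paragraph tag, we can skip them with the same technique as before. This time I use the find method rather than find_all, because find returns the first tag it finds instead of a list of matching tags.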
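The difference between attempt 2 and attempt 3 can be checked on a small hand-written fragment. The HTML below is invented for illustration and only imitates the structure described above; here a span plays the role of the wrapper tag, and real article markup will differ.

```python
from bs4 import BeautifulSoup

# Hypothetical fragment: the first link is nested inside a wrapper tag,
# the second one is a direct child of the paragraph.
html = """
<div id="mw-content-text"><div class="mw-parser-output">
<p><span><a href="/wiki/Help:IPA">pronunciation</a></span>
<a href="/wiki/Greek_language">Greek</a></p>
</div></div>
"""

soup = BeautifulSoup(html, "html.parser")
content_div = soup.find(id="mw-content-text").find(class_="mw-parser-output")
paragraph = content_div.find("p", recursive=False)

# Attempt 2: .a searches all descendants, so it returns the nested link.
print(paragraph.a.get("href"))  # → /wiki/Help:IPA

# Attempt 3: recursive=False looks at direct children only.
print(paragraph.find("a", recursive=False).get("href"))  # → /wiki/Greek_language
```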

IV. Putting It All Together

    import time
    import urllib.parse  # import the submodule explicitly; urljoin lives here

    import bs4
    import requests

    start_url = "https://en.wikipedia.org/wiki/Special:Random"
    target_url = "https://en.wikipedia.org/wiki/Philosophy"


    def find_first_link(url):
        response = requests.get(url)
        html = response.text
        soup = bs4.BeautifulSoup(html, "html.parser")

        # This div contains the article's body
        # (June 2017 Note: Body nested in two div tags)
        content_div = soup.find(id="mw-content-text").find(class_="mw-parser-output")

        # stores the first link found in the article, if the article contains no
        # links this value will remain None
        article_link = None

        # Find all the direct children of content_div that are paragraphs
        for element in content_div.find_all("p", recursive=False):
            # Find the first anchor tag that's a direct child of a paragraph.
            # It's important to only look at direct children, because other types
            # of link, e.g. footnotes and pronunciation, could come before the
            # first link to an article. Those other link types aren't direct
            # children though, they're in divs of various classes.
            if element.find("a", recursive=False):
                article_link = element.find("a", recursive=False).get('href')
                break

        if not article_link:
            return None

        # Build a full url from the relative article_link url
        first_link = urllib.parse.urljoin('https://en.wikipedia.org/', article_link)
        return first_link


    def continue_crawl(search_history, target_url, max_steps=25):
        if search_history[-1] == target_url:
            print("We've found the target article!")
            return False
        elif len(search_history) > max_steps:
            print("The search has gone on suspiciously long, aborting search!")
            return False
        elif search_history[-1] in search_history[:-1]:
            print("We've arrived at an article we've already seen, aborting search!")
            return False
        else:
            return True


    article_chain = [start_url]

    while continue_crawl(article_chain, target_url):
        print(article_chain[-1])

        first_link = find_first_link(article_chain[-1])
        if not first_link:
            print("We've arrived at an article with no links, aborting search!")
            break

        article_chain.append(first_link)

        time.sleep(2)  # Slow things down so as to not hammer Wikipedia's servers
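The urljoin call in find_first_link is what turns the relative href values found in the page into full URLs. A short sketch of its behavior, using example paths chosen for illustration:

```python
from urllib.parse import urljoin

base = "https://en.wikipedia.org/"

# A relative path is resolved against the base URL.
print(urljoin(base, "/wiki/Philosophy"))
# → https://en.wikipedia.org/wiki/Philosophy

# A protocol-relative link inherits the scheme of the base URL.
print(urljoin(base, "//en.wikipedia.org/wiki/Logic"))
# → https://en.wikipedia.org/wiki/Logic

# An absolute URL is returned unchanged.
print(urljoin(base, "https://example.org/page"))
# → https://example.org/page
```

Because absolute URLs pass through unchanged, the crawler would also follow a first link that points outside Wikipedia, should one appear.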
