| Version | Author | Updated | Notes |
|---|---|---|---|
| v1.0 | 各人有各人的隐晦与皎洁 | 2023-12 | Initial version |
| v1.1 | 各人有各人的隐晦与皎洁 | | Expanded the verification section |

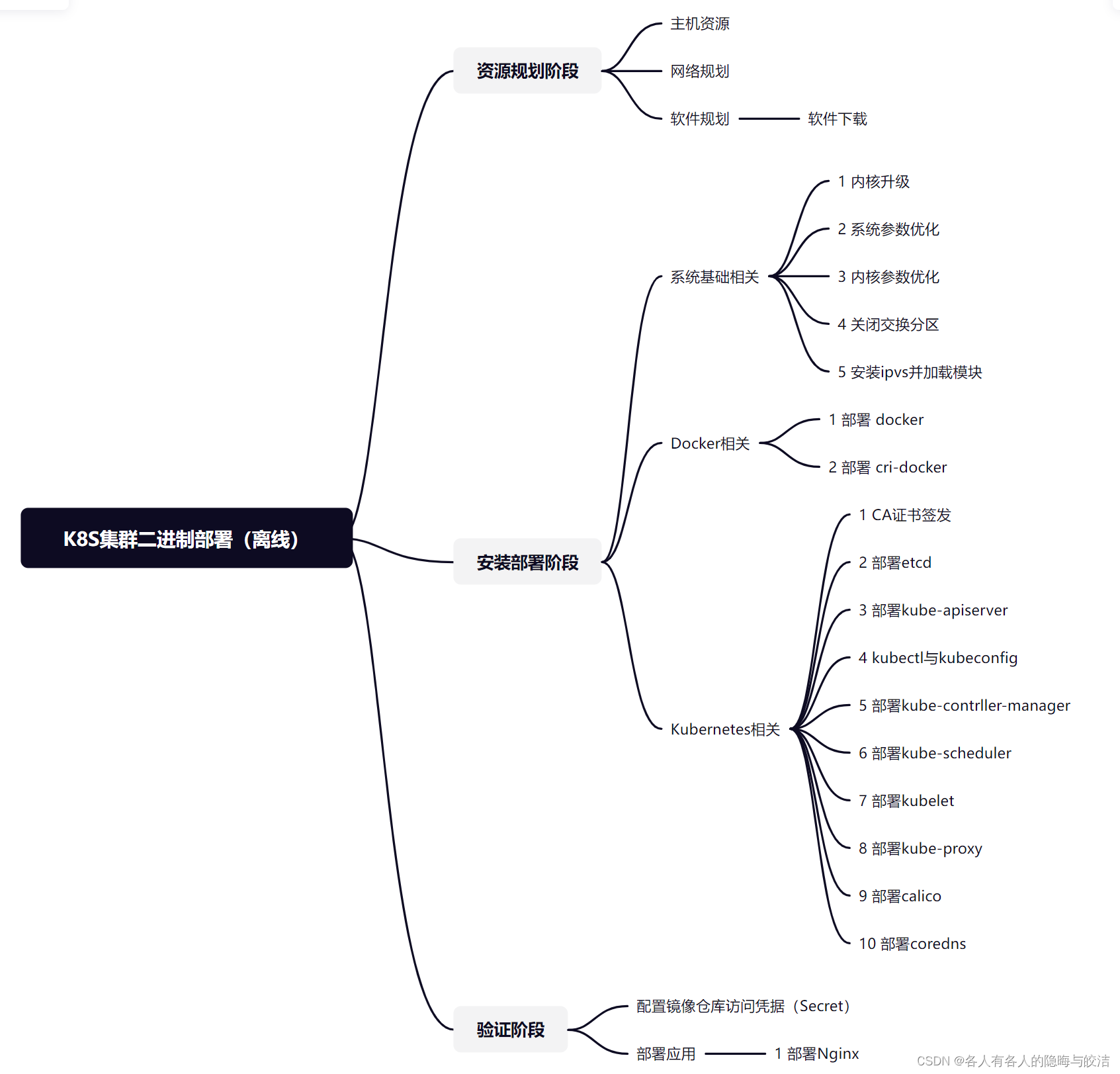

Mind map of the document outline

Resource planning

Hosts

| Hostname | IP | Spec | OS | Role |
|---|---|---|---|---|
| k8s-cluster-master1 | 192.168.99.167 | 8 CPU cores / 16 GB RAM | CentOS 7.9 | Master node |
| k8s-cluster-node1 | 192.168.99.93 | 4 CPU cores / 8 GB RAM | CentOS 7.9 | Node (worker) |
| k8s-cluster-node2 | 192.168.99.130 | 4 CPU cores / 8 GB RAM | CentOS 7.9 | Node (worker) |

Network planning

| Network | CIDR | Notes |
|---|---|---|
| Node network | 192.168.99.0/24 | Server IP addresses |
| Service network | 10.96.0.0/16 | Kubernetes Service CIDR |
| Pod network | 10.244.0.0/16 | Kubernetes Pod CIDR |

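Software planning

| Software | Version | Notes |
|---|---|---|
| kernel-lt | 5.4 | LT is the long-term support branch; ML is the mainline (latest stable) branch |
| cfssl | 1.6.4 | Latest version |
| docker | 24.0 | Latest version |
| cri-docker | 0.3.9 | Latest version |
| kubernetes | 1.28 | Latest version |
| pause | 3.6 | Latest version published by Rancher |
| etcd | 3.5.11 | Latest version |
| calico | 3.25 | Stable version |
| coredns | 1.9.4 | Latest version |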

Software downloads

Dependency packages:

https://mirror.centos.org/centos/7/os/x86_64/Packages/

Kernel:

https://elrepo.org/linux/kernel/el7/x86_64/RPMS/

Docker:

https://download.docker.com/linux/centos/

K8s:

https://dl.k8s.io/v1.28.0/kubernetes-server-linux-amd64.tar.gz

Github:

Installation and deployment

Base system configuration

- Note: the OS configuration and tuning below must be performed on every server.

1 Kernel upgrade

Download

Download URL: https://elrepo.org/linux/kernel/el7/x86_64/RPMS/

Packages: kernel-lt-5.4.265-1.el7.elrepo.x86_64.rpm / kernel-lt-devel-5.4.265-1.el7.elrepo.x86_64.rpm

Procedure

```bash
rpm -ivh kernel-lt-devel-5.4.265-1.el7.elrepo.x86_64.rpm
rpm -ivh kernel-lt-5.4.265-1.el7.elrepo.x86_64.rpm
# Show the current default boot kernel
grubby --default-kernel
# Boot the first GRUB menu entry (the newest installed kernel) by default
sed -i 's/GRUB_DEFAULT.*/GRUB_DEFAULT=0/' /etc/default/grub
# Regenerate the GRUB configuration file
grub2-mkconfig -o /boot/grub2/grub.cfg
# Reboot
reboot
```
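After the reboot, confirm that the machine actually came up on the 5.4 LT kernel. The sketch below assumes GNU `sort -V`, which is available on CentOS 7. `version_ge` and `check_kernel` are hypothetical helper names and are not part of any package:

```bash
#!/usr/bin/env bash
# Hypothetical helpers: confirm the running kernel is at least the target LT version.

# version_ge A B: succeed when version A >= version B (GNU sort -V ordering)
version_ge() {
  [ "$(printf '%s\n%s\n' "$2" "$1" | sort -V | head -n 1)" = "$2" ]
}

# check_kernel [RELEASE] [MIN]: RELEASE defaults to `uname -r`, MIN to 5.4
check_kernel() {
  local release="${1:-$(uname -r)}" min="${2:-5.4}"
  # Keep only the upstream part, e.g. 5.4.265-1.el7.elrepo.x86_64 -> 5.4.265
  if version_ge "${release%%-*}" "$min"; then
    echo "OK: kernel $release >= $min"
  else
    echo "WARN: kernel $release < $min (check the GRUB default entry)" >&2
    return 1
  fi
}
```

Run `check_kernel` with no arguments to check the running kernel.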

2 System resource limits

Procedure

```bash
cat << EOF >> /etc/security/limits.conf
* hard nofile 1024000
* soft nofile 1024000
* hard nproc 1024000
* soft nproc 1024000
* hard memlock unlimited
* soft memlock unlimited
EOF
echo "ulimit -SHn 65535" >> /etc/profile
source /etc/profile
```

3 Kernel parameter tuning

Procedure

```bash
# net.ipv4.tcp_tw_recycle is omitted: it was removed in Linux 4.12, so sysctl -p would report an error for it on the 5.4 kernel
cat << EOF >> /etc/sysctl.conf
net.ipv4.tcp_timestamps = 0
net.ipv4.tcp_syncookies = 1
net.ipv4.tcp_tw_reuse = 1
net.core.somaxconn = 65535
net.ipv4.tcp_fin_timeout = 25
fs.inotify.max_user_watches = 1048576
fs.inotify.max_user_instances = 256
net.ipv4.tcp_keepalive_time = 30
net.ipv4.ip_local_port_range = 1000 65000
net.ipv4.tcp_max_syn_backlog = 262144
net.ipv4.tcp_max_tw_buckets = 256000
vm.max_map_count = 262144
net.core.netdev_max_backlog = 262144
net.ipv4.tcp_max_orphans = 262144
net.ipv4.tcp_synack_retries = 1
net.ipv4.tcp_syn_retries = 1
EOF
sysctl -p
```
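`sysctl -p` reports errors but does not show what actually took effect. A small check can compare each `key = value` line in a sysctl file with the live value under `/proc/sys`. It also catches keys that do not exist on the running kernel, such as `net.ipv4.tcp_tw_recycle`, which is gone on 5.4 kernels. This is a sketch: `trim`, `sysctl_path`, `check_sysctl_file` and the `PROC_SYS` override (used only to make it testable) are hypothetical names:

```bash
#!/usr/bin/env bash
# Hypothetical helper: compare "key = value" lines of a sysctl file with live kernel values.

# trim: strip leading and trailing whitespace
trim() {
  local s="$1"
  s="${s#"${s%%[![:space:]]*}"}"
  s="${s%"${s##*[![:space:]]}"}"
  printf '%s' "$s"
}

# sysctl_path KEY: net.ipv4.ip_forward -> /proc/sys/net/ipv4/ip_forward
sysctl_path() {
  printf '%s/%s\n' "${PROC_SYS:-/proc/sys}" "$(printf '%s' "$1" | tr . /)"
}

# check_sysctl_file FILE: print OK/DIFF/MISSING per key; return 1 if any key is off
check_sysctl_file() {
  local key val path actual rc=0
  while IFS='=' read -r key val; do
    key="$(trim "$key")"
    case "$key" in ''|\#*|\;*) continue ;; esac
    path="$(sysctl_path "$key")"
    if [ ! -r "$path" ]; then
      echo "MISSING $key"
      rc=1
      continue
    fi
    # /proc separates multi-value settings with tabs; collapse all whitespace to single spaces
    actual="$(trim "$(tr -s '[:space:]' ' ' < "$path")")"
    val="$(trim "$(printf '%s' "$val" | tr -s '[:space:]' ' ')")"
    if [ "$actual" = "$val" ]; then
      echo "OK $key = $actual"
    else
      echo "DIFF $key: want '$val', got '$actual'"
      rc=1
    fi
  done < "$1"
  return "$rc"
}
```

For example, `check_sysctl_file /etc/sysctl.conf` and `check_sysctl_file /etc/sysctl.d/k8s.conf` list every setting with its status.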

4 Disable swap

Procedure

```bash
# Comment out swap entries in /etc/fstab so swap stays off permanently
sed -i '/swap/ s/^/#/g' /etc/fstab
# Turn swap off immediately
swapoff -a
```
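To confirm swap is really off, both now and after the next reboot, use the sketch below. It defines two hypothetical helpers, which accept alternative file paths only so they can be exercised against sample files:

```bash
#!/usr/bin/env bash
# Hypothetical helpers: verify swap is disabled at runtime and in /etc/fstab.

# swap_is_off [SWAPS_FILE]: /proc/swaps has one header line; any further line is an active swap device
swap_is_off() {
  local swaps="${1:-/proc/swaps}"
  [ "$(wc -l < "$swaps")" -le 1 ]
}

# fstab_has_swap [FSTAB]: succeed if an uncommented fstab entry still has filesystem type "swap"
fstab_has_swap() {
  local fstab="${1:-/etc/fstab}"
  awk '$1 !~ /^#/ && $3 == "swap" { found = 1 } END { exit !found }' "$fstab"
}
```

Usage: `swap_is_off && ! fstab_has_swap && echo "swap disabled"`.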

5 Install ipvsadm and load the IPVS modules

Download

Download URL: https://github.com/

Package: ipvsadm-1.28.tar.gz

Dependencies: libnl-devel / popt-devel

Procedure

```bash
rpm -ivh libnl-devel-1.1.4-3.el7.x86_64.rpm
rpm -ivh popt-devel-1.13-16.el7.x86_64.rpm
# Build and install ipvsadm from source
tar -zxvf ipvsadm-1.28.tar.gz -C /data/
cd /data/ipvsadm-1.28
make && make install
whereis ipvsadm
# Load the IPVS kernel modules
cat << EOF > /etc/sysconfig/modules/ipvs.modules
#!/bin/bash
modprobe -- ip_vs
modprobe -- ip_vs_rr
modprobe -- ip_vs_wrr
modprobe -- ip_vs_sh
modprobe -- nf_conntrack
EOF
chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep -e ip_vs -e nf_conntrack
# net.bridge.bridge-nf-call-ip6tables = 1 and net.bridge.bridge-nf-call-iptables = 1 make traffic on bridge devices
# pass through the Netfilter ip6tables (IPv6) and iptables (IPv4) tables
cat << EOF > /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
vm.swappiness = 0
EOF
# Load the br_netfilter kernel module
modprobe br_netfilter
# Apply the settings
sysctl -p /etc/sysctl.d/k8s.conf
```
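The `lsmod | grep` above shows what is loaded but not what is missing. The sketch below reports the required modules that are absent. `REQUIRED_MODULES` mirrors the modules loaded in this step, and `missing_modules` is a hypothetical helper:

```bash
#!/usr/bin/env bash
# Hypothetical helper: list the kernel modules this guide needs that are not loaded yet.
REQUIRED_MODULES=(ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack br_netfilter)

# missing_modules: reads `lsmod` output on stdin, prints each required module that is absent
missing_modules() {
  local loaded m
  # Skip the "Module Size Used by" header and keep only the module names
  loaded="$(awk 'NR > 1 { print $1 }')"
  for m in "${REQUIRED_MODULES[@]}"; do
    grep -qxF "$m" <<< "$loaded" || echo "$m"
  done
}
```

`lsmod | missing_modules` prints nothing when every module is loaded.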

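Docker deployment

1 Deploy Docker

Download

Download URL: https://download.docker.com/linux/centos/

Packages: docker-ce-24.0.7-1.el7.x86_64.rpm / docker-ce-cli-24.0.7-1.el7.x86_64.rpm

Dependencies: docker-ce-rootless-extras / docker-buildx-plugin / docker-compose-plugin / fuse3-libs / fuse-overlayfs / slirp4netns

Procedure

```bash
# Create the Docker configuration file, setting Docker's cgroup driver to systemd
mkdir -p /etc/docker
cat << EOF > /etc/docker/daemon.json
{
  "exec-opts": ["native.cgroupdriver=systemd"]
}
EOF
# Install the dependencies
rpm -ivh fuse3-libs-*.rpm
rpm -ivh fuse-overlayfs-*.rpm
rpm -ivh slirp4netns-*.rpm
# Install Docker. docker-ce also requires containerd.io and container-selinux (listed in the cri-docker step);
# if rpm reports them as missing dependencies, install those two packages first.
rpm -ivh docker-buildx-plugin-*.rpm
rpm -ivh docker-compose-plugin-*.rpm
rpm -ivh docker-ce-cli-24.0.7-1.el7.x86_64.rpm
rpm -ivh docker-ce-rootless-extras-*.rpm --nodeps
rpm -ivh docker-ce-24.0.7-1.el7.x86_64.rpm
# Start Docker and enable it at boot
systemctl enable --now docker
```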
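kubelet is configured later with `"cgroupDriver": "systemd"` in `/etc/kubernetes/kubelet.json`. That value has to match Docker's cgroup driver, and a mismatch is a common cause of kubelet and Pod startup problems. The sketch below checks the two for consistency. `kubelet_cgroup_driver` and `check_cgroup_drivers` are hypothetical helpers; the Docker value is taken from `docker info --format '{{.CgroupDriver}}'`:

```bash
#!/usr/bin/env bash
# Hypothetical helpers: make sure Docker and kubelet agree on the cgroup driver.

# kubelet_cgroup_driver FILE: print the "cgroupDriver" value from a kubelet JSON config
kubelet_cgroup_driver() {
  sed -n 's/.*"cgroupDriver"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p' "$1"
}

# check_cgroup_drivers DOCKER_DRIVER KUBELET_JSON
check_cgroup_drivers() {
  local docker_driver="$1" kubelet_driver
  kubelet_driver="$(kubelet_cgroup_driver "$2")"
  if [ -n "$docker_driver" ] && [ "$docker_driver" = "$kubelet_driver" ]; then
    echo "OK: docker and kubelet both use $docker_driver"
  else
    echo "MISMATCH: docker=$docker_driver kubelet=$kubelet_driver" >&2
    return 1
  fi
}
```

Once kubelet.json exists on a Node, run: `check_cgroup_drivers "$(docker info --format '{{.CgroupDriver}}')" /etc/kubernetes/kubelet.json`.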

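2 Deploy cri-docker

Download

Download URL: https://download.docker.com/linux/centos/ (containerd.io); cri-dockerd releases: https://github.com/Mirantis/cri-dockerd/releases

Package: cri-dockerd 0.3.9 (el7 RPM)

Dependencies: container-selinux / containerd.io

Procedure

```bash
# Install the container dependencies container-selinux and containerd.io
rpm -ivh container-selinux-*.rpm
rpm -ivh containerd.io-*.rpm
# Install cri-dockerd, the Container Runtime Interface (CRI) adapter that lets kubelet use Docker
rpm -ivh cri-dockerd-*.rpm
# Edit the cri-docker systemd unit to pin the pause (infrastructure) container image.
# In an offline environment, make sure your image registry already contains this image.
vim /usr/lib/systemd/system/cri-docker.service
# Set the ExecStart line to:
ExecStart=/usr/bin/cri-dockerd --pod-infra-container-image=rancher/pause:3.6 --container-runtime-endpoint fd://
# The pause container is the first container started in every Pod. It holds the Pod's shared Linux
# namespaces (notably the network namespace), which the Pod's other containers join.
# Reload systemd and start the service
systemctl daemon-reload
systemctl enable --now cri-docker.service
```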

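Kubernetes deployment

Notes:

- Certificates only need to be issued on one Master; perform all certificate-issuing steps on that Master.
- kube-apiserver, kube-controller-manager and kube-scheduler are deployed on the Master node.
- kubelet and kube-proxy are deployed on the Node nodes.
- kubectl is installed on all nodes.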

1 Issue the CA certificate

Download

Download URL: https://github.com/

Packages: cfssl_1.6.4_linux_amd64 / cfssl-certinfo_1.6.4_linux_amd64 / cfssljson_1.6.4_linux_amd64

Procedure

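```bash
# Install cfssl, which is used to issue the certificates
mv cfssl_1.6.4_linux_amd64 /usr/local/bin/cfssl
mv cfssl-certinfo_1.6.4_linux_amd64 /usr/local/bin/cfssl-certinfo
mv cfssljson_1.6.4_linux_amd64 /usr/local/bin/cfssljson
chmod +x /usr/local/bin/cfssl*

# Create a working directory for the certificates
mkdir -p /data/certificate
cd /data/certificate

# CA certificate signing request. JSON does not allow comments, so the fields are explained here:
#   CN:    Common Name (usually identifies the server or the CA)
#   key:   key algorithm (RSA) and key length (2048 bits)
#   names: subject Distinguished Name fields: C country, ST province, L city, O organization, OU organizational unit
cat << EOF > ca-csr.json
{
  "CN": "kubernetes",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "Beijing",
      "L": "Beijing",
      "O": "kubemsb",
      "OU": "CN"
    }
  ]
}
EOF

# Generate a self-signed root certificate authority (ca.pem) and its key (ca-key.pem)
cfssl gencert -initca ca-csr.json | cfssljson -bare ca

# Optional: print cfssl's default signing policy for reference (overwritten below)
cfssl print-defaults config > ca-config.json

# CA signing policy: "default.expiry" is the default certificate validity (87600h = 10 years);
# the "kubernetes" profile issues 87600h certificates usable for signing, key encipherment, server auth and client auth
cat << EOF > ca-config.json
{
  "signing": {
    "default": {
      "expiry": "87600h"
    },
    "profiles": {
      "kubernetes": {
        "usages": [
          "signing",
          "key encipherment",
          "server auth",
          "client auth"
        ],
        "expiry": "87600h"
      }
    }
  }
}
EOF

# Inspect the CA certificate
openssl x509 -in ca.pem -noout -text
```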
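ca-config.json issues certificates valid for 87600h (10 years). The CA created by `cfssl gencert -initca` has its own validity period, set separately from this policy. To see how long any generated certificate remains valid, use the sketch below, which relies on `openssl x509 -enddate` and GNU `date -d`. `cert_days_left` is a hypothetical helper:

```bash
#!/usr/bin/env bash
# Hypothetical helper: whole days until a PEM certificate's notAfter date.
cert_days_left() {
  local end
  # openssl prints e.g. "notAfter=Dec 10 08:00:00 2033 GMT"; keep the date part
  end="$(openssl x509 -in "$1" -noout -enddate | cut -d= -f2)"
  echo $(( ( $(date -d "$end" +%s) - $(date +%s) ) / 86400 ))
}
```

Examples: `cert_days_left ca.pem`, or later `cert_days_left kube-apiserver.pem`.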

2 Deploy etcd

Download

Download URL: https://github.com/

Package: etcd-v3.5.11-linux-amd64.tar.gz

Issue certificates

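```bash
# etcd certificate signing request
# "hosts" lists the IP addresses (SANs) the certificate is valid for. IP SANs are matched exactly, so list every
# address clients use to reach etcd; a network's first IP does not cover the whole subnet, and an empty list
# produces a certificate without SANs.
cat << EOF > etcd-csr.json
{
  "CN": "etcd",
  "hosts": [
    "127.0.0.1",
    "192.168.99.167",
    "192.168.99.93",
    "192.168.99.130"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "Beijing",
      "L": "Beijing",
      "O": "kubemsb",
      "OU": "CN"
    }
  ]
}
EOF

# Generate the etcd certificate (signed by the CA)
cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes etcd-csr.json | cfssljson -bare etcd
```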

Procedure

Notes:

- Adjust the IP addresses in the configuration file for each etcd node.
- The author deployed etcd on all 3 servers to form a cluster.

```bash
# Deploy etcd
mkdir -p /etc/etcd/ssl
mkdir -p /var/lib/etcd/default.etcd
tar -zxvf etcd-v3.5.11-linux-amd64.tar.gz -C /data/
cp -p /data/etcd-v3.5.11-linux-amd64/etcd* /usr/local/bin/
scp -p /data/etcd-v3.5.11-linux-amd64/etcd* k8s-cluster-node1.novalocal:/usr/local/bin/
scp -p /data/etcd-v3.5.11-linux-amd64/etcd* k8s-cluster-node2.novalocal:/usr/local/bin/

# etcd configuration file (shown for etcd1 on 192.168.99.167; on the other nodes change ETCD_NAME and the IPs)
cat << EOF > /etc/etcd/etcd.conf
#[Member]
ETCD_NAME="etcd1"
# etcd data directory
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
# Address etcd listens on for peer (cluster-internal) traffic
ETCD_LISTEN_PEER_URLS="https://192.168.99.167:2380"
# Addresses etcd listens on for client requests
ETCD_LISTEN_CLIENT_URLS="https://192.168.99.167:2379,http://127.0.0.1:2379"
#[Clustering]
# Peer URL advertised to the other members, which use it to reach this node
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.99.167:2380"
# Client URL advertised to clients, which use it to reach this node
ETCD_ADVERTISE_CLIENT_URLS="https://192.168.99.167:2379"
# All members of the initial cluster
ETCD_INITIAL_CLUSTER="etcd1=https://192.168.99.167:2380,etcd2=https://192.168.99.93:2380,etcd3=https://192.168.99.130:2380"
# Cluster token used to identify the members of this cluster
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
# Initial cluster state; "new" means a newly created cluster
ETCD_INITIAL_CLUSTER_STATE="new"
EOF
```

```bash
# Copy the certificates
cp -p ca*.pem etcd*.pem /etc/etcd/ssl
scp -p ca*.pem etcd*.pem k8s-cluster-node1.novalocal:/etc/etcd/ssl
scp -p ca*.pem etcd*.pem k8s-cluster-node2.novalocal:/etc/etcd/ssl

# systemd unit for etcd
cat << EOF > /etc/systemd/system/etcd.service
[Unit]
Description=Etcd Server
After=network.target
After=network-online.target
Wants=network-online.target

[Service]
Type=notify
EnvironmentFile=-/etc/etcd/etcd.conf
WorkingDirectory=/var/lib/etcd/
ExecStart=/usr/local/bin/etcd \
  --cert-file=/etc/etcd/ssl/etcd.pem \
  --key-file=/etc/etcd/ssl/etcd-key.pem \
  --trusted-ca-file=/etc/etcd/ssl/ca.pem \
  --peer-cert-file=/etc/etcd/ssl/etcd.pem \
  --peer-key-file=/etc/etcd/ssl/etcd-key.pem \
  --peer-trusted-ca-file=/etc/etcd/ssl/ca.pem \
  --peer-client-cert-auth \
  --client-cert-auth
Restart=on-failure
RestartSec=5
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF
systemctl daemon-reload
systemctl enable --now etcd.service

# Check the etcd cluster state
ETCDCTL_API=3 /usr/local/bin/etcdctl --write-out=table --cacert=/etc/etcd/ssl/ca.pem --cert=/etc/etcd/ssl/etcd.pem --key=/etc/etcd/ssl/etcd-key.pem --endpoints=https://192.168.99.167:2379,https://192.168.99.93:2379,https://192.168.99.130:2379 endpoint health
ETCDCTL_API=3 /usr/local/bin/etcdctl --write-out=table --cacert=/etc/etcd/ssl/ca.pem --cert=/etc/etcd/ssl/etcd.pem --key=/etc/etcd/ssl/etcd-key.pem --endpoints=https://192.168.99.167:2379,https://192.168.99.93:2379,https://192.168.99.130:2379 endpoint status
```
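The three members' etcd.conf files differ only in the member name and IP address, so each node's file can be generated rather than edited by hand. The sketch below assumes the member-to-IP mapping used in this guide (etcd1 to etcd3). `ETCD_NODES`, `initial_cluster`, `client_endpoints` and `render_etcd_conf` are hypothetical names:

```bash
#!/usr/bin/env bash
# Hypothetical helpers: generate per-member etcd settings from one name=IP list.
ETCD_NODES=(etcd1=192.168.99.167 etcd2=192.168.99.93 etcd3=192.168.99.130)

# initial_cluster: value for ETCD_INITIAL_CLUSTER (name=https://IP:2380,...)
initial_cluster() {
  local out="" n
  for n in "${ETCD_NODES[@]}"; do
    out+="${out:+,}${n%%=*}=https://${n#*=}:2380"
  done
  echo "$out"
}

# client_endpoints: value for etcdctl --endpoints and kube-apiserver --etcd-servers
client_endpoints() {
  local out="" n
  for n in "${ETCD_NODES[@]}"; do
    out+="${out:+,}https://${n#*=}:2379"
  done
  echo "$out"
}

# render_etcd_conf NAME: print /etc/etcd/etcd.conf for member NAME
render_etcd_conf() {
  local name="$1" ip="" n
  for n in "${ETCD_NODES[@]}"; do
    if [ "${n%%=*}" = "$name" ]; then ip="${n#*=}"; fi
  done
  if [ -z "$ip" ]; then
    echo "unknown etcd member: $name" >&2
    return 1
  fi
  cat <<EOF
#[Member]
ETCD_NAME="$name"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://$ip:2380"
ETCD_LISTEN_CLIENT_URLS="https://$ip:2379,http://127.0.0.1:2379"
#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://$ip:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://$ip:2379"
ETCD_INITIAL_CLUSTER="$(initial_cluster)"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
EOF
}
```

Usage:

- On k8s-cluster-node1, run `render_etcd_conf etcd2 > /etc/etcd/etcd.conf`.
- For etcdctl, `--endpoints="$(client_endpoints)"` expands to the same list used above.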

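3 Deploy kube-apiserver

Note: with multiple kube-apiserver nodes, use keepalived for high availability and configure the kube-apiserver address as the VIP.

Download

Download URL: https://dl.k8s.io/v1.28.0/kubernetes-server-linux-amd64.tar.gz (contains all Kubernetes components)

Issue certificates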

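```bash
# kube-apiserver certificate signing request
# "hosts": IP SANs are matched exactly (a network's first IP does not cover the whole subnet), so list every address
# used to reach the apiserver. Every component in the cluster talks to the apiserver; include all node addresses
# and leave room for future expansion. 10.96.0.1 is the first address of the Service CIDR and becomes the ClusterIP
# of the built-in "kubernetes" Service; the kubernetes.default.* names are that Service's DNS names.
cat << EOF > kube-apiserver-csr.json
{
  "CN": "kubernetes",
  "hosts": [
    "127.0.0.1",
    "192.168.99.167",
    "192.168.99.93",
    "192.168.99.130",
    "192.168.99.1",
    "10.96.0.1",
    "kubernetes",
    "kubernetes.default",
    "kubernetes.default.svc",
    "kubernetes.default.svc.cluster",
    "kubernetes.default.svc.cluster.local"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "Beijing",
      "L": "Beijing",
      "O": "kubemsb",
      "OU": "CN"
    }
  ]
}
EOF

# Generate the kube-apiserver certificate (signed by the CA)
cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-apiserver-csr.json | cfssljson -bare kube-apiserver
```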
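IP SANs are matched exactly, so check that kube-apiserver.pem really contains every address clients will use. That includes the `kubernetes` Service IP 10.96.0.1. The sketch below is built on `openssl x509 -text` and handles IPv4 addresses only. `cert_has_ip` and `check_cert_ips` are hypothetical helpers:

```bash
#!/usr/bin/env bash
# Hypothetical helpers: check that a PEM certificate lists given IPv4 addresses as SANs.

# cert_has_ip CERT IP: succeed if CERT contains "IP Address:IP" in its SAN extension
cert_has_ip() {
  openssl x509 -in "$1" -noout -text \
    | grep -o 'IP Address:[0-9.]*' \
    | cut -d: -f2 \
    | grep -xF "$2" > /dev/null
}

# check_cert_ips CERT IP...: report each address, return 1 if any is missing
check_cert_ips() {
  local cert="$1" ip rc=0
  shift
  for ip in "$@"; do
    if cert_has_ip "$cert" "$ip"; then
      echo "OK $ip"
    else
      echo "MISSING $ip"
      rc=1
    fi
  done
  return "$rc"
}
```

Usage: `check_cert_ips kube-apiserver.pem 127.0.0.1 192.168.99.167 192.168.99.93 192.168.99.130 10.96.0.1`.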

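Create the token required for TLS bootstrapping. Once kube-apiserver on the Master enables TLS authentication, the kubelet and kube-proxy on each Node must present a valid CA-signed certificate to communicate with kube-apiserver. With many Nodes, issuing these client certificates by hand is a lot of work and makes scaling the cluster more complex. To simplify this, Kubernetes provides TLS bootstrapping, which issues client certificates automatically. The kubelet authenticates as a low-privilege user and requests a certificate from kube-apiserver. The request is then signed dynamically by the control plane (kube-controller-manager, using the cluster signing CA configured below). This approach is strongly recommended for Nodes. At present it is used mainly for the kubelet; kube-proxy still uses a single certificate that we issue ourselves.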

```bash
# token.csv format: token,user,uid,"group"
cat << EOF > token.csv
$(head -c 16 /dev/urandom | od -An -t x | tr -d ' '),kubelet-bootstrap,10001,"system:kubelet-bootstrap"
EOF
```
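The sketch below checks that token.csv has the expected shape: a 32-character hex token followed by user, uid and quoted group. `new_bootstrap_token` repeats the pipeline used above. Both function names are hypothetical:

```bash
#!/usr/bin/env bash
# Hypothetical helpers for the static token file passed to --token-auth-file.

# new_bootstrap_token: 16 random bytes as 32 lowercase hex characters
new_bootstrap_token() {
  head -c 16 /dev/urandom | od -An -t x | tr -d ' \n'
}

# valid_token_line LINE: token,user,uid,"group" with a 32-hex-character token
valid_token_line() {
  local re='^[0-9a-f]{32},[^,]+,[0-9]+,"[^"]+"$'
  [[ $1 =~ $re ]]
}
```

Usage: `valid_token_line "$(head -n 1 token.csv)" && echo "token.csv looks valid"`.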

Procedure

```bash
# Deploy the Kubernetes binaries
tar -zxvf kubernetes-server-linux-amd64.tar.gz -C /data/
cd /data/kubernetes/server/bin/
cp -p kube-apiserver kube-controller-manager kube-scheduler kubectl /usr/local/bin/
scp -p kubelet kube-proxy kubectl k8s-cluster-node1.novalocal:/usr/local/bin/
scp -p kubelet kube-proxy kubectl k8s-cluster-node2.novalocal:/usr/local/bin/
mkdir -p /etc/kubernetes/ssl
mkdir -p /var/log/kubernetes

# kube-apiserver configuration file
cat > /etc/kubernetes/kube-apiserver.conf << EOF
KUBE_APISERVER_OPTS="--enable-admission-plugins=NamespaceLifecycle,NodeRestriction,LimitRanger,ServiceAccount,DefaultStorageClass,ResourceQuota \
  --anonymous-auth=false \
  --bind-address=192.168.99.167 \
  --advertise-address=192.168.99.167 \
  --secure-port=6443 \
  --authorization-mode=Node,RBAC \
  --runtime-config=api/all=true \
  --enable-bootstrap-token-auth \
  --service-cluster-ip-range=10.96.0.0/16 \
  --token-auth-file=/etc/kubernetes/token.csv \
  --service-node-port-range=30000-32767 \
  --tls-cert-file=/etc/kubernetes/ssl/kube-apiserver.pem \
  --tls-private-key-file=/etc/kubernetes/ssl/kube-apiserver-key.pem \
  --client-ca-file=/etc/kubernetes/ssl/ca.pem \
  --kubelet-client-certificate=/etc/kubernetes/ssl/kube-apiserver.pem \
  --kubelet-client-key=/etc/kubernetes/ssl/kube-apiserver-key.pem \
  --service-account-key-file=/etc/kubernetes/ssl/ca-key.pem \
  --service-account-signing-key-file=/etc/kubernetes/ssl/ca-key.pem \
  --service-account-issuer=api \
  --etcd-cafile=/etc/etcd/ssl/ca.pem \
  --etcd-certfile=/etc/etcd/ssl/etcd.pem \
  --etcd-keyfile=/etc/etcd/ssl/etcd-key.pem \
  --etcd-servers=https://192.168.99.167:2379,https://192.168.99.93:2379,https://192.168.99.130:2379 \
  --allow-privileged=true \
  --apiserver-count=3 \
  --audit-log-maxage=30 \
  --audit-log-maxbackup=3 \
  --audit-log-maxsize=100 \
  --audit-log-path=/var/log/kube-apiserver-audit.log \
  --event-ttl=1h \
  --v=4"
EOF
```

```bash
# systemd unit for kube-apiserver
cat << EOF > /etc/systemd/system/kube-apiserver.service
[Unit]
Description=Kubernetes API Server
Documentation=https://github.com/kubernetes/kubernetes
# Start kube-apiserver after etcd
After=etcd.service
Wants=etcd.service

[Service]
Type=notify
EnvironmentFile=-/etc/kubernetes/kube-apiserver.conf
ExecStart=/usr/local/bin/kube-apiserver \$KUBE_APISERVER_OPTS
Restart=on-failure
RestartSec=5
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF

# On the master: install the certificates and the token file (from the certificate directory)
cd /data/certificate
cp -p ca*.pem /etc/kubernetes/ssl/
cp -p kube-apiserver*.pem /etc/kubernetes/ssl/
cp -p token.csv /etc/kubernetes/
systemctl daemon-reload
systemctl enable --now kube-apiserver.service

# Test the apiserver port
curl --insecure https://192.168.99.167:6443
```

The expected response is 401 Unauthorized, because anonymous access is disabled (--anonymous-auth=false):

```json
{
  "kind": "Status",
  "apiVersion": "v1",
  "metadata": {},
  "status": "Failure",
  "message": "Unauthorized",
  "reason": "Unauthorized",
  "code": 401
}
```

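4 kubectl and kubeconfig

Issue certificates

kube-apiserver uses RBAC to authorize requests from clients such as kubelet, kube-proxy and Pods. It predefines a number of RBAC ClusterRoleBindings. For example, the cluster-admin binding binds the group system:masters to the ClusterRole cluster-admin, which grants permission to call every kube-apiserver API. The admin certificate below sets O (organization) to system:masters, so its holder is a member of that group.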

```bash
# admin certificate signing request
# "hosts" is empty because this is a client certificate, so no SANs are needed.
cat << EOF > admin-csr.json
{
  "CN": "kubernetes",
  "hosts": [],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "Beijing",
      "L": "Beijing",
      "O": "system:masters",
      "OU": "system"
    }
  ]
}
EOF

# Generate the admin certificate (signed by the CA)
cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes admin-csr.json | cfssljson -bare admin
cp -p admin*.pem /etc/kubernetes/ssl/

# Create the kubeconfig kubectl uses to access kube-apiserver
# Set the cluster entry (CA certificate and apiserver address) and write kube.config
kubectl config set-cluster kubernetes --certificate-authority=ca.pem --embed-certs=true --server=https://192.168.99.167:6443 --kubeconfig=kube.config
# Add the admin user's certificate credentials to kube.config
kubectl config set-credentials admin --client-certificate=admin.pem --client-key=admin-key.pem --embed-certs=true --kubeconfig=kube.config
# Define the context: cluster "kubernetes", user "admin"
kubectl config set-context kubernetes --cluster=kubernetes --user=admin --kubeconfig=kube.config
# Switch to the kubernetes context
kubectl config use-context kubernetes --kubeconfig=kube.config
mkdir -p ~/.kube
cp -p kube.config ~/.kube/config

# Check the cluster and component status (ComponentStatus, shown by "get cs", is deprecated since v1.19 but still works)
kubectl cluster-info
kubectl get cs
```

5 Deploy kube-controller-manager

Issue certificates

```bash
# kube-controller-manager certificate signing request
# "hosts" lists the IPs of the servers running kube-controller-manager; all nodes are included to ease future expansion.
cat << EOF > kube-controller-manager-csr.json
{
  "CN": "system:kube-controller-manager",
  "hosts": [
    "127.0.0.1",
    "192.168.99.167",
    "192.168.99.93",
    "192.168.99.130"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "Beijing",
      "L": "Beijing",
      "O": "system:kube-controller-manager",
      "OU": "system"
    }
  ]
}
EOF

# Generate the kube-controller-manager certificate (signed by the CA)
cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-controller-manager-csr.json | cfssljson -bare kube-controller-manager

# Create the kubeconfig kube-controller-manager uses to access kube-apiserver
# Set the cluster entry and write kube-controller-manager.kubeconfig
kubectl config set-cluster kubernetes --certificate-authority=ca.pem --embed-certs=true --server=https://192.168.99.167:6443 --kubeconfig=kube-controller-manager.kubeconfig
# Add the system:kube-controller-manager user's certificate credentials
kubectl config set-credentials system:kube-controller-manager --client-certificate=kube-controller-manager.pem --client-key=kube-controller-manager-key.pem --embed-certs=true --kubeconfig=kube-controller-manager.kubeconfig
# Define the context: cluster "kubernetes", user system:kube-controller-manager
kubectl config set-context system:kube-controller-manager --cluster=kubernetes --user=system:kube-controller-manager --kubeconfig=kube-controller-manager.kubeconfig
# Switch to that context
kubectl config use-context system:kube-controller-manager --kubeconfig=kube-controller-manager.kubeconfig
```
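The same four `kubectl config` commands are repeated for every component: admin, kube-controller-manager, kube-scheduler and kube-proxy. The sketch below wraps them in one function. `make_kubeconfig`, `APISERVER` and the `KUBECTL` override (which lets the commands be printed with `echo` instead of run) are hypothetical. The kubectl subcommands and flags are exactly those used in this guide:

```bash
#!/usr/bin/env bash
# Hypothetical wrapper around the kubectl config steps repeated for each component.
APISERVER="${APISERVER:-https://192.168.99.167:6443}"
KUBECTL="${KUBECTL:-kubectl}"

# make_kubeconfig OUT USER CONTEXT CERT KEY   (run in the directory holding ca.pem)
make_kubeconfig() {
  local out="$1" user="$2" context="$3" cert="$4" key="$5"
  "$KUBECTL" config set-cluster kubernetes --certificate-authority=ca.pem \
    --embed-certs=true --server="$APISERVER" --kubeconfig="$out" &&
  "$KUBECTL" config set-credentials "$user" --client-certificate="$cert" \
    --client-key="$key" --embed-certs=true --kubeconfig="$out" &&
  "$KUBECTL" config set-context "$context" --cluster=kubernetes \
    --user="$user" --kubeconfig="$out" &&
  "$KUBECTL" config use-context "$context" --kubeconfig="$out"
}
```

For example: `make_kubeconfig kube-scheduler.kubeconfig system:kube-scheduler system:kube-scheduler kube-scheduler.pem kube-scheduler-key.pem`. Prefix the call with `KUBECTL=echo` to preview the commands without running them.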

Procedure

```bash
# Deploy kube-controller-manager
# kube-controller-manager configuration file
cat << EOF > /etc/kubernetes/kube-controller-manager.conf
KUBE_CONTROLLER_MANAGER_OPTS=" \
  --secure-port=10257 \
  --bind-address=127.0.0.1 \
  --kubeconfig=/etc/kubernetes/kube-controller-manager.kubeconfig \
  --service-cluster-ip-range=10.96.0.0/16 \
  --cluster-name=kubernetes \
  --cluster-signing-cert-file=/etc/kubernetes/ssl/ca.pem \
  --cluster-signing-key-file=/etc/kubernetes/ssl/ca-key.pem \
  --allocate-node-cidrs=true \
  --cluster-cidr=10.244.0.0/16 \
  --root-ca-file=/etc/kubernetes/ssl/ca.pem \
  --service-account-private-key-file=/etc/kubernetes/ssl/ca-key.pem \
  --leader-elect=true \
  --feature-gates=RotateKubeletServerCertificate=true \
  --controllers=*,bootstrapsigner,tokencleaner \
  --tls-cert-file=/etc/kubernetes/ssl/kube-controller-manager.pem \
  --tls-private-key-file=/etc/kubernetes/ssl/kube-controller-manager-key.pem \
  --use-service-account-credentials=true \
  --v=2"
EOF

# systemd unit for kube-controller-manager
cat << EOF > /etc/systemd/system/kube-controller-manager.service
[Unit]
Description=Kubernetes Controller Manager
Documentation=https://github.com/kubernetes/kubernetes

[Service]
Type=notify
EnvironmentFile=-/etc/kubernetes/kube-controller-manager.conf
ExecStart=/usr/local/bin/kube-controller-manager \$KUBE_CONTROLLER_MANAGER_OPTS
Restart=on-failure
RestartSec=5

[Install]
WantedBy=multi-user.target
EOF

# Install the certificate and kubeconfig
cp -p kube-controller-manager*.pem /etc/kubernetes/ssl/
cp -p kube-controller-manager.kubeconfig /etc/kubernetes/
systemctl daemon-reload
systemctl enable --now kube-controller-manager.service

# Check the kube-controller-manager component status
kubectl get cs
# "systemctl status kube-controller-manager.service" may show errors caused by apiserver rate limiting;
# as long as kubectl get cs reports the component as Healthy, the deployment is not affected.
```

6 Deploy kube-scheduler

Issue certificates

```bash
# kube-scheduler certificate signing request
# "hosts" lists the IPs of the servers that may run kube-scheduler. IP SANs are matched exactly, so a network's
# first IP does not cover the whole subnet; all nodes are listed to ease future expansion.
cat << EOF > kube-scheduler-csr.json
{
  "CN": "system:kube-scheduler",
  "hosts": [
    "127.0.0.1",
    "192.168.99.167",
    "192.168.99.93",
    "192.168.99.130"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "Beijing",
      "L": "Beijing",
      "O": "system:kube-scheduler",
      "OU": "system"
    }
  ]
}
EOF

# Generate the kube-scheduler certificate (signed by the CA)
cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-scheduler-csr.json | cfssljson -bare kube-scheduler

# Set the cluster entry and write kube-scheduler.kubeconfig
kubectl config set-cluster kubernetes --certificate-authority=ca.pem --embed-certs=true --server=https://192.168.99.167:6443 --kubeconfig=kube-scheduler.kubeconfig
# Add the system:kube-scheduler user's certificate credentials
kubectl config set-credentials system:kube-scheduler --client-certificate=kube-scheduler.pem --client-key=kube-scheduler-key.pem --embed-certs=true --kubeconfig=kube-scheduler.kubeconfig
# Define the context: cluster "kubernetes", user system:kube-scheduler
kubectl config set-context system:kube-scheduler --cluster=kubernetes --user=system:kube-scheduler --kubeconfig=kube-scheduler.kubeconfig
# Switch to that context
kubectl config use-context system:kube-scheduler --kubeconfig=kube-scheduler.kubeconfig
```

Procedure

```bash
# Deploy kube-scheduler
# kube-scheduler configuration file
cat << EOF > /etc/kubernetes/kube-scheduler.conf
KUBE_SCHEDULER_OPTS=" \
  --kubeconfig=/etc/kubernetes/kube-scheduler.kubeconfig \
  --leader-elect=true \
  --v=2"
EOF

# systemd unit for kube-scheduler
cat << EOF > /etc/systemd/system/kube-scheduler.service
[Unit]
Description=Kubernetes Scheduler
Documentation=https://github.com/kubernetes/kubernetes

[Service]
Type=notify
EnvironmentFile=-/etc/kubernetes/kube-scheduler.conf
ExecStart=/usr/local/bin/kube-scheduler \$KUBE_SCHEDULER_OPTS
Restart=on-failure
RestartSec=5

[Install]
WantedBy=multi-user.target
EOF

# Install the certificate and kubeconfig on the Master node
cp -p kube-scheduler*.pem /etc/kubernetes/ssl/
cp -p kube-scheduler.kubeconfig /etc/kubernetes/
systemctl daemon-reload
systemctl enable --now kube-scheduler.service

# Check the kube-scheduler component status
kubectl get cs
# "systemctl status kube-scheduler.service" may show errors caused by apiserver rate limiting;
# as long as kubectl get cs reports the component as Healthy, the deployment is not affected.
```
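`kubectl get cs` still works in 1.28, although ComponentStatus has been deprecated since v1.19. To flag unhealthy components from a script, and wait briefly after a restart, use the sketch below. It filters the command's output. `unhealthy_components` and `wait_healthy` are hypothetical helpers; the optional `KUBECTL` variable exists only so the logic can be exercised without a cluster:

```bash
#!/usr/bin/env bash
# Hypothetical helpers around `kubectl get cs`.

# unhealthy_components: stdin is `kubectl get cs --no-headers`; print NAME of rows whose STATUS is not Healthy
unhealthy_components() {
  awk '$2 != "Healthy" { print $1 }'
}

# wait_healthy [TRIES] [DELAY]: poll until every listed component is Healthy
wait_healthy() {
  local tries="${1:-10}" delay="${2:-3}" out bad="(no output from kubectl)" i
  for ((i = 1; i <= tries; i++)); do
    out="$("${KUBECTL:-kubectl}" get cs --no-headers 2>/dev/null)" || out=""
    if [ -n "$out" ]; then
      bad="$(printf '%s\n' "$out" | unhealthy_components)"
      if [ -z "$bad" ]; then
        echo "all components Healthy"
        return 0
      fi
    fi
    sleep "$delay"
  done
  echo "still unhealthy: $bad" >&2
  return 1
}
```

For example, `wait_healthy 20 3` polls for about a minute.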

7 Deploy kubelet

Issue certificates

```bash
# Generate the kubeconfig used by kubelet
# On the master, create the kubelet-bootstrap kubeconfig
BOOTSTRAP_TOKEN=$(awk -F "," '{print $1}' /etc/kubernetes/token.csv)
kubectl config set-cluster kubernetes --certificate-authority=ca.pem --embed-certs=true --server=https://192.168.99.167:6443 --kubeconfig=kubelet-bootstrap.kubeconfig
kubectl config set-credentials kubelet-bootstrap --token=${BOOTSTRAP_TOKEN} --kubeconfig=kubelet-bootstrap.kubeconfig
kubectl config set-context default --cluster=kubernetes --user=kubelet-bootstrap --kubeconfig=kubelet-bootstrap.kubeconfig
kubectl config use-context default --kubeconfig=kubelet-bootstrap.kubeconfig

# Create the cluster role bindings
kubectl create clusterrolebinding cluster-system-anonymous --clusterrole=cluster-admin --user=kubelet-bootstrap
kubectl create clusterrolebinding kubelet-bootstrap --clusterrole=system:node-bootstrapper --user=kubelet-bootstrap --kubeconfig=kubelet-bootstrap.kubeconfig
kubectl describe clusterrolebinding cluster-system-anonymous
kubectl describe clusterrolebinding kubelet-bootstrap

# Copy the bootstrap kubeconfig and the CA certificate to the Node nodes
scp -p kubelet-bootstrap.kubeconfig 192.168.99.93:/etc/kubernetes/
scp -p ca.pem 192.168.99.93:/etc/kubernetes/ssl/
scp -p kubelet-bootstrap.kubeconfig 192.168.99.130:/etc/kubernetes/
scp -p ca.pem 192.168.99.130:/etc/kubernetes/ssl/
```

Procedure (on the Node nodes)

```bash
# Create the kubelet configuration file on each Node.
# Set "address" to this Node's own IP address (192.168.99.130 is node2); JSON does not allow comments.
cat << EOF > /etc/kubernetes/kubelet.json
{
  "kind": "KubeletConfiguration",
  "apiVersion": "kubelet.config.k8s.io/v1beta1",
  "authentication": {
    "x509": {
      "clientCAFile": "/etc/kubernetes/ssl/ca.pem"
    },
    "webhook": {
      "enabled": true,
      "cacheTTL": "2m0s"
    },
    "anonymous": {
      "enabled": false
    }
  },
  "authorization": {
    "mode": "Webhook",
    "webhook": {
      "cacheAuthorizedTTL": "5m0s",
      "cacheUnauthorizedTTL": "30s"
    }
  },
  "address": "192.168.99.130",
  "port": 10250,
  "readOnlyPort": 10255,
  "cgroupDriver": "systemd",
  "hairpinMode": "promiscuous-bridge",
  "serializeImagePulls": false,
  "clusterDomain": "cluster.local.",
  "clusterDNS": ["10.96.0.2"]
}
EOF

# systemd unit for kubelet
# (--pod-infra-container-image sets the infrastructure (pause) container image)
cat << EOF > /usr/lib/systemd/system/kubelet.service
[Unit]
Description=Kubernetes Kubelet
Documentation=https://github.com/kubernetes/kubernetes
After=docker.service
Requires=docker.service

[Service]
WorkingDirectory=/var/lib/kubelet
ExecStart=/usr/local/bin/kubelet \
  --bootstrap-kubeconfig=/etc/kubernetes/kubelet-bootstrap.kubeconfig \
  --cert-dir=/etc/kubernetes/ssl \
  --kubeconfig=/etc/kubernetes/kubelet.kubeconfig \
  --config=/etc/kubernetes/kubelet.json \
  --container-runtime-endpoint=unix:///run/cri-dockerd.sock \
  --pod-infra-container-image=rancher/pause:3.6 \
  --v=2
Restart=on-failure
RestartSec=5

[Install]
WantedBy=multi-user.target
EOF
mkdir -p /var/lib/kubelet
systemctl daemon-reload
systemctl enable --now kubelet.service

# On the Master: the nodes are listed as NotReady; they become Ready once calico is deployed
kubectl get nodes
# View the cluster's certificate signing requests
kubectl get csr
```
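If `kubectl get csr` shows requests stuck in `Pending`, approve them with `kubectl certificate approve`. The sketch below picks out the pending requests. `pending_csrs` and `approve_pending` are hypothetical helpers. They assume CONDITION is the last column of `kubectl get csr --no-headers`, as it is in recent kubectl versions. `KUBECTL` is an optional override used only for testing:

```bash
#!/usr/bin/env bash
# Hypothetical helpers: find and approve CertificateSigningRequests that are still Pending.

# pending_csrs: stdin is `kubectl get csr --no-headers`; CONDITION is assumed to be the last column
pending_csrs() {
  awk '$NF == "Pending" { print $1 }'
}

# approve_pending: approve every pending CSR in one kubectl call
approve_pending() {
  local names
  names="$("${KUBECTL:-kubectl}" get csr --no-headers | pending_csrs)"
  if [ -z "$names" ]; then
    echo "no pending CSRs"
    return 0
  fi
  # Word splitting of $names is intended: one argument per CSR name
  # shellcheck disable=SC2086
  "${KUBECTL:-kubectl}" certificate approve $names
}
```

Run `approve_pending` on the Master, then check `kubectl get csr` again.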

8 Deploy kube-proxy

Issue certificates

```bash
# kube-proxy certificate signing request
cat << EOF > kube-proxy-csr.json
{
  "CN": "system:kube-proxy",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "Beijing",
      "L": "Beijing",
      "O": "kubemsb",
      "OU": "CN"
    }
  ]
}
EOF

# Generate the kube-proxy certificate (signed by the CA)
cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy

# Set the cluster entry and write kube-proxy.kubeconfig
kubectl config set-cluster kubernetes --certificate-authority=ca.pem --embed-certs=true --server=https://192.168.99.167:6443 --kubeconfig=kube-proxy.kubeconfig
# Add the certificate credentials (kube-apiserver sees the certificate's CN, system:kube-proxy, as the user)
kubectl config set-credentials kube-proxy --client-certificate=kube-proxy.pem --client-key=kube-proxy-key.pem --embed-certs=true --kubeconfig=kube-proxy.kubeconfig
# Define the context: cluster "kubernetes", user kube-proxy
kubectl config set-context default --cluster=kubernetes --user=kube-proxy --kubeconfig=kube-proxy.kubeconfig
# Switch to that context
kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig
```

Procedure (on the Node nodes)

```bash
# Deploy kube-proxy
# kube-proxy configuration file
cat << EOF > /etc/kubernetes/kube-proxy.yaml
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
bindAddress: 192.168.99.130  # change to this Node's own IP address
clientConnection:
  kubeconfig: /etc/kubernetes/kube-proxy.kubeconfig
clusterCIDR: 10.244.0.0/16
healthzBindAddress: 192.168.99.130:10256  # change to this Node's own IP address
metricsBindAddress: 192.168.99.130:10249  # change to this Node's own IP address
mode: "ipvs"
EOF
```

The version strings in Kubernetes component configuration files (v1, v1alpha1, v1beta1) mean the following:

- v1: the stable version. It has been thoroughly tested and is recommended for production. Its API does not change lightly, apart from necessary compatible improvements or the removal of deprecated features.
- v1alpha1 and v1beta1: pre-release versions of the Kubernetes API. They usually contain new and experimental features that are still evolving. v1alpha1 marks an early experimental stage that may be unstable or incomplete. v1beta1 means the feature is relatively mature but may still need further testing and adjustment before it is promoted to stable (v1).

```bash
# systemd unit for kube-proxy
cat << EOF > /etc/systemd/system/kube-proxy.service
[Unit]
Description=Kubernetes Kube-Proxy Server
Documentation=https://github.com/kubernetes/kubernetes
After=network.target

[Service]
WorkingDirectory=/var/lib/kube-proxy
ExecStart=/usr/local/bin/kube-proxy \
  --config=/etc/kubernetes/kube-proxy.yaml \
  --v=2
Restart=on-failure
RestartSec=5
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF
mkdir -p /var/lib/kube-proxy
# Copy the kube-proxy kubeconfig and certificates from the master (where they were generated) to the Node nodes
scp -p kube-proxy.kubeconfig kube-proxy*.pem 192.168.99.93:/etc/kubernetes/
scp -p kube-proxy.kubeconfig kube-proxy*.pem 192.168.99.130:/etc/kubernetes/
systemctl daemon-reload
systemctl enable --now kube-proxy.service
```
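The three addresses marked "change to this Node's own IP address" can be filled in from a single argument. The sketch below prints kube-proxy.yaml for a given node IP. `render_kube_proxy_conf` is a hypothetical helper, and the rendered settings are the same as in the file above:

```bash
#!/usr/bin/env bash
# Hypothetical helper: print /etc/kubernetes/kube-proxy.yaml for one node IP.
render_kube_proxy_conf() {
  local ip="$1"
  # Reject anything that is not a dotted-quad IPv4 address
  if ! [[ $ip =~ ^[0-9]+\.[0-9]+\.[0-9]+\.[0-9]+$ ]]; then
    echo "invalid IP: $ip" >&2
    return 1
  fi
  cat <<EOF
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
bindAddress: $ip
clientConnection:
  kubeconfig: /etc/kubernetes/kube-proxy.kubeconfig
clusterCIDR: 10.244.0.0/16
healthzBindAddress: $ip:10256
metricsBindAddress: $ip:10249
mode: "ipvs"
EOF
}
```

Usage:

- On node1, run `render_kube_proxy_conf 192.168.99.93 > /etc/kubernetes/kube-proxy.yaml`.
- After the service starts, `curl http://192.168.99.93:10249/proxyMode` on the metrics address should print `ipvs`.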

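9 Deploy calico

YAML download

Download URL: https://docs.projectcalico.org/archive/v3.25/manifests/calico.yaml

Image download

Download URL: https://hub.docker.com

Images: docker.io/calico/cni:v3.25.0 / docker.io/calico/node:v3.25.0 / docker.io/calico/kube-controllers:v3.25.0 / docker.io/rancher/pause:3.6 / docker.io/coredns/coredns:1.9.4

- Note: pull the images above on a server with Internet access, then import them into all 3 servers.

```bash
# If the servers have Internet access, just pull the images and skip the save/load steps
docker pull docker.io/calico/cni:v3.25.0
docker pull docker.io/calico/node:v3.25.0
docker pull docker.io/calico/kube-controllers:v3.25.0
docker pull docker.io/rancher/pause:3.6
docker pull docker.io/coredns/coredns:1.9.4
# Save the images to tar archives
docker save -o calico-cni-v3.25.0.tar docker.io/calico/cni:v3.25.0
docker save -o calico-node-v3.25.0.tar docker.io/calico/node:v3.25.0
docker save -o calico-kube-controllers-v3.25.0.tar docker.io/calico/kube-controllers:v3.25.0
docker save -o rancher-pause-3.6.tar docker.io/rancher/pause:3.6
docker save -o coredns-1.9.4.tar docker.io/coredns/coredns:1.9.4
# Copy the tar archives to each server, then load the images
docker load -i calico-cni-v3.25.0.tar
docker load -i calico-node-v3.25.0.tar
docker load -i calico-kube-controllers-v3.25.0.tar
docker load -i rancher-pause-3.6.tar
docker load -i coredns-1.9.4.tar
```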

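Procedure (on the Master)

Set the Pod CIDR in calico.yaml to the network given by `--cluster-cidr=10.244.0.0/16`. In `vim calico.yaml`, find the commented-out default:

```yaml
# no effect. This should fall within `--cluster-cidr`.
# - name: CALICO_IPV4POOL_CIDR
#   value: "192.168.1.0/16"
```

and change it to:

```yaml
# no effect. This should fall within `--cluster-cidr`.
- name: CALICO_IPV4POOL_CIDR
  value: "10.244.0.0/16"
```

```bash
# Search for "image" (type /image in vim) and point each image at the imported calico images
vim calico.yaml
# Deploy calico; this uses the calico and pause images (make sure they are imported on every node)
kubectl apply -f calico.yaml
# Check the calico containers
kubectl get all -A
# Once calico is deployed, the nodes become Ready
kubectl get nodes
```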
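Two things in calico.yaml are easy to get wrong offline: the pool CIDR, and the image names that must already exist on every node. The sketch below checks both. `calico_pool_cidr` and `manifest_images` are hypothetical helpers. They rely on the manifest's usual layout, where an uncommented `- name: CALICO_IPV4POOL_CIDR` line is followed by its `value:` line:

```bash
#!/usr/bin/env bash
# Hypothetical helpers for checking calico.yaml before `kubectl apply`.

# calico_pool_cidr FILE: print the value of the uncommented CALICO_IPV4POOL_CIDR env var
calico_pool_cidr() {
  awk '/^[[:space:]]*- name: CALICO_IPV4POOL_CIDR/ {
         getline                                   # the following "value:" line
         gsub(/.*value:[[:space:]]*"|".*/, "")      # keep only the quoted value
         print
         exit
       }' "$1"
}

# manifest_images FILE: list the distinct images referenced by uncommented "image:" lines
manifest_images() {
  sed -n 's/^[[:space:]]*image:[[:space:]]*//p' "$1" | tr -d '"' | sort -u
}
```

Usage:

- `calico_pool_cidr calico.yaml` should print `10.244.0.0/16`.
- `manifest_images calico.yaml` lists the images that must be loaded on each node.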

10 Deploy coredns

Procedure

```bash
# Deploy CoreDNS
cat << EOF > coredns.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: coredns
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
  name: system:coredns
rules:
- apiGroups:
  - ""
  resources:
  - endpoints
  - services
  - pods
  - namespaces
  verbs:
  - list
  - watch
- apiGroups:
  - discovery.k8s.io
  resources:
  - endpointslices
  verbs:
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
  name: system:coredns
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:coredns
subjects:
- kind: ServiceAccount
  name: coredns
  namespace: kube-system
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: coredns
  namespace: kube-system
data:
  Corefile: |
    .:53 {
        errors
        health {
          lameduck 5s
        }
        ready
        kubernetes cluster.local in-addr.arpa ip6.arpa {
          fallthrough in-addr.arpa ip6.arpa
        }
        prometheus :9153
        forward . /etc/resolv.conf {
          max_concurrent 1000
        }
        cache 30
        loop
        reload
        loadbalance
    }
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: coredns
  namespace: kube-system
  labels:
    k8s-app: kube-dns
    kubernetes.io/name: "CoreDNS"
    app.kubernetes.io/name: coredns
spec:
  # replicas: not specified here:
  # 1. Default is 1.
  # 2. Will be tuned in real time if DNS horizontal auto-scaling is turned on.
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
  selector:
    matchLabels:
      k8s-app: kube-dns
      app.kubernetes.io/name: coredns
  template:
    metadata:
      labels:
        k8s-app: kube-dns
        app.kubernetes.io/name: coredns
    spec:
      priorityClassName: system-cluster-critical
      serviceAccountName: coredns
      tolerations:
      - key: "CriticalAddonsOnly"
        operator: "Exists"
      nodeSelector:
        kubernetes.io/os: linux
      affinity:
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchExpressions:
              - key: k8s-app
                operator: In
                values: ["kube-dns"]
            topologyKey: kubernetes.io/hostname
      containers:
      - name: coredns
        image: docker.io/coredns/coredns:1.9.4
        imagePullPolicy: IfNotPresent
        resources:
          limits:
            memory: 170Mi
          requests:
            cpu: 100m
            memory: 70Mi
        args: [ "-conf", "/etc/coredns/Corefile" ]
        volumeMounts:
        - name: config-volume
          mountPath: /etc/coredns
          readOnly: true
        ports:
        - containerPort: 53
          name: dns
          protocol: UDP
        - containerPort: 53
          name: dns-tcp
          protocol: TCP
        - containerPort: 9153
          name: metrics
          protocol: TCP
        securityContext:
          allowPrivilegeEscalation: false
          capabilities:
            add:
            - NET_BIND_SERVICE
            drop:
            - all
          readOnlyRootFilesystem: true
        livenessProbe:
          httpGet:
            path: /health
            port: 8080
            scheme: HTTP
          initialDelaySeconds: 60
          timeoutSeconds: 5
          successThreshold: 1
          failureThreshold: 5
        readinessProbe:
          httpGet:
            path: /ready
            port: 8181
            scheme: HTTP
      dnsPolicy: Default
      volumes:
      - name: config-volume
        configMap:
          name: coredns
          items:
          - key: Corefile
            path: Corefile
---
apiVersion: v1
kind: Service
metadata:
  name: kube-dns
  namespace: kube-system
  annotations:
    prometheus.io/port: "9153"
    prometheus.io/scrape: "true"
  labels:
    k8s-app: kube-dns
    kubernetes.io/cluster-service: "true"
    kubernetes.io/name: "CoreDNS"
    app.kubernetes.io/name: coredns
spec:
  selector:
    k8s-app: kube-dns
    app.kubernetes.io/name: coredns
  clusterIP: 10.96.0.2
  ports:
  - name: dns
    port: 53
    protocol: UDP
  - name: dns-tcp
    port: 53
    protocol: TCP
  - name: metrics
    port: 9153
    protocol: TCP
EOF

# The YAML above already has the placeholders filled in; on an unmodified template the same substitutions are:
# sed -i -e "s/CLUSTER_DNS_IP/10.96.0.2/g" -e "s/CLUSTER_DOMAIN/cluster.local/g" -e "s/REVERSE_CIDRS/in-addr.arpa\ ip6.arpa/g" -e "s/STUBDOMAINS//g" -e "s#UPSTREAMNAMESERVER#/etc/resolv.conf#g" coredns.yaml

# Deploy
kubectl apply -f coredns.yaml
# Check the coredns containers
kubectl get all -A
# Test name resolution
dig -t a <--a domain name this host can resolve--> @10.96.0.2
```
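The kube-dns Service IP 10.96.0.2 must lie inside the Service CIDR passed to kube-apiserver (`--service-cluster-ip-range=10.96.0.0/16`). An address outside that range is rejected when the Service is created. It must also equal `clusterDNS` in kubelet.json, otherwise Pods are pointed at the wrong resolver. The pure-bash sketch below performs the range check. `ip_to_int` and `ip_in_cidr` are hypothetical helpers and handle IPv4 only:

```bash
#!/usr/bin/env bash
# Hypothetical IPv4 helpers: is an address inside a CIDR block?

# ip_to_int A.B.C.D: print the address as a 32-bit integer
ip_to_int() {
  local IFS=. a b c d
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# ip_in_cidr IP NET/BITS: succeed when IP falls inside NET/BITS
ip_in_cidr() {
  local ip="$1" net="${2%/*}" bits="${2#*/}" mask
  mask=$(( bits == 0 ? 0 : (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  (( ($(ip_to_int "$ip") & mask) == ($(ip_to_int "$net") & mask) ))
}
```

Usage: `ip_in_cidr 10.96.0.2 10.96.0.0/16 && echo "kube-dns IP is inside the Service CIDR"`.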

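Verification

Note: set up an image registry in advance.

Configure image registry credentials (Secret)

YAML file (default-secret.yaml)

```yaml
kind: Secret
apiVersion: v1
metadata:
  name: default-secret
  namespace: default
data:
  .dockerconfigjson: <--Base64-encoded content of ~/.docker/config.json-->
type: kubernetes.io/dockerconfigjson
```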

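Procedure

```bash
# Log in to the image registry
docker login <--image registry address-->
# Base64-encode the credentials file (base64 is an encoding, not encryption;
# -w 0 keeps the output on a single line so it can be pasted into the YAML)
base64 -w 0 ~/.docker/config.json
# Create the Secret
kubectl apply -f default-secret.yaml
# View the Secret
kubectl get secret
# When deploying application containers later, reference it in spec.template.spec.imagePullSecrets:
#   - name: default-secret
```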
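Pasting the base64 text by hand is error-prone, so the Secret manifest can instead be rendered directly from the Docker credentials file. The sketch below assumes GNU `base64` (for `-w 0`). `render_pull_secret` is a hypothetical helper. The built-in `kubectl create secret docker-registry` command is an alternative that builds the same type of Secret from a registry address, username and password:

```bash
#!/usr/bin/env bash
# Hypothetical helper: print a kubernetes.io/dockerconfigjson Secret built from a Docker config file.
# usage: render_pull_secret [NAME] [NAMESPACE] [CONFIG_JSON]
render_pull_secret() {
  local name="${1:-default-secret}" ns="${2:-default}" cfg="${3:-$HOME/.docker/config.json}"
  if [ ! -r "$cfg" ]; then
    echo "cannot read $cfg" >&2
    return 1
  fi
  cat <<EOF
kind: Secret
apiVersion: v1
metadata:
  name: $name
  namespace: $ns
data:
  .dockerconfigjson: $(base64 -w 0 < "$cfg")
type: kubernetes.io/dockerconfigjson
EOF
}
```

Usage: `render_pull_secret > default-secret.yaml && kubectl apply -f default-secret.yaml`.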

Deploying applications

1 Deploy Nginx

YAML file (nginx-deployment.yaml)

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:latest
        ports:
        - containerPort: 80
      imagePullSecrets:
      - name: default-secret
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-service
spec:
  selector:
    app: nginx
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
    nodePort: 30001
  type: NodePort
```

Procedure

```bash
# Deploy nginx
kubectl apply -f nginx-deployment.yaml
# Check the Pods
kubectl get pod -o wide
```
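To confirm the NodePort Service answers on every node, the sketch below requests port 30001 from each node with curl and reports the HTTP status. `NODES` and `probe_nodeport` are hypothetical, and the node list assumes the two Node servers in this guide:

```bash
#!/usr/bin/env bash
# Hypothetical check: request a NodePort on each node and report the HTTP status code.
NODES=(192.168.99.93 192.168.99.130)

# probe_nodeport PORT [PATH]: return 1 unless every node answers 200
probe_nodeport() {
  local port="$1" path="${2:-/}" node code rc=0
  for node in "${NODES[@]}"; do
    # curl prints 000 when the connection fails; "|| true" keeps the loop going
    code="$(curl -s -o /dev/null -m 5 -w '%{http_code}' "http://$node:$port$path" || true)"
    echo "$node:$port -> $code"
    if [ "$code" != "200" ]; then rc=1; fi
  done
  return "$rc"
}
```

Usage: `probe_nodeport 30001` should report `200` for each node once the nginx Pods are Running.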
