filebeat -> logstash -> elasticsearch -> kibana

Official documentation:

https://www.elastic.co/guide/en/kibana/current/settings.html

https://www.elastic.co/guide/en/logstash/current/docker-config.html

https://www.elastic.co/guide/en/elasticsearch/reference/8.6/docker.html

GeoIP database download (MaxMind): https://www.maxmind.com/en/accounts/936663/geoip/downloads?show_all_dates=1

I. CentOS 7 yum repository

yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

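II. Docker deployment

Combining ELK with Filebeat gives a more capable log collection and processing pipeline.

1. Create the Docker network (optional)

The ELK and Filebeat containers communicate over a shared network, so we create a Docker network first.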

docker network create -d overlay --attachable elastic-net

Deploying Elasticsearch as a 3-replica docker service (personal notes; can be skipped)

docker service create --name es --network elastic-net --replicas 3 -p 9200:9200 -p 9300:9300 -e "discovery.type=single-node" elasticsearch:8.6.2

docker service logs -f es

curl -X GET "localhost:9200/_cat/nodes?v&pretty"

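2. Deploy the Elasticsearch and Kibana containers

We deploy Elasticsearch in Docker containers.

In its simplest form, a single docker run command creates and starts a container named elasticsearch: the -p option maps the Elasticsearch container's ports to the host, the --network option attaches the container to the network created earlier (elastic-net), and discovery.type is set to single-node to mark the node as standalone. The steps below use Docker Compose instead, to bring up a three-node cluster with TLS together with Kibana.

a. Create the required directories and YAML files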

mkdir -p /u01/elk/elasticsearch/es0{1,2,3}/data

mkdir -p /u01/elk/elasticsearch/es0{1,2,3}/logs

mkdir -p /u01/elk/kibana/{data,config}

mkdir -p /u01/elk/logstash/{data,config}

chmod -R 777 /u01/elk/*

a1. Write docker-compose.yml

cd /u01/elk/elasticsearch/

vim docker-compose.yml

version: "2.2"

services:
  setup:
    image: elasticsearch:8.6.2
    volumes:
      - ./certs:/usr/share/elasticsearch/config/certs
    user: "0"
    command: >
      bash -c '
        if [ x${ELASTIC_PASSWORD} == x ]; then
          echo "Set the ELASTIC_PASSWORD environment variable in the .env file";
          exit 1;
        elif [ x${KIBANA_PASSWORD} == x ]; then
          echo "Set the KIBANA_PASSWORD environment variable in the .env file";
          exit 1;
        fi;
        if [ ! -f config/certs/ca.zip ]; then
          echo "Creating CA";
          bin/elasticsearch-certutil ca --silent --pem -out config/certs/ca.zip;
          unzip config/certs/ca.zip -d config/certs;
        fi;
        if [ ! -f config/certs/certs.zip ]; then
          echo "Creating certs";
          echo -ne \
          "instances:\n"\
          "  - name: es01\n"\
          "    dns:\n"\
          "      - es01\n"\
          "      - localhost\n"\
          "    ip:\n"\
          "      - 127.0.0.1\n"\
          "  - name: es02\n"\
          "    dns:\n"\
          "      - es02\n"\
          "      - localhost\n"\
          "    ip:\n"\
          "      - 127.0.0.1\n"\
          "  - name: es03\n"\
          "    dns:\n"\
          "      - es03\n"\
          "      - localhost\n"\
          "    ip:\n"\
          "      - 127.0.0.1\n"\
          > config/certs/instances.yml;
          bin/elasticsearch-certutil cert --silent --pem -out config/certs/certs.zip --in config/certs/instances.yml --ca-cert config/certs/ca/ca.crt --ca-key config/certs/ca/ca.key;
          unzip config/certs/certs.zip -d config/certs;
        fi;
        echo "Setting file permissions"
        chown -R root:root config/certs;
        find . -type d -exec chmod 750 \{\} \;;
        find . -type f -exec chmod 640 \{\} \;;
        echo "Waiting for Elasticsearch availability";
        until curl -s --cacert config/certs/ca/ca.crt https://es01:9200 | grep -q "missing authentication credentials"; do sleep 30; done;
        echo "Setting kibana_system password";
        until curl -s -X POST --cacert config/certs/ca/ca.crt -u "elastic:${ELASTIC_PASSWORD}" -H "Content-Type: application/json" https://es01:9200/_security/user/kibana_system/_password -d "{\"password\":\"${KIBANA_PASSWORD}\"}" | grep -q "^{}"; do sleep 10; done;
        echo "All done!";
      '
    healthcheck:
      test: ["CMD-SHELL", "[ -f config/certs/es01/es01.crt ]"]
      interval: 1s
      timeout: 5s
      retries: 120

  es01:
    container_name: es01
    depends_on:
      setup:
        condition: service_healthy
    image: elasticsearch:8.6.2
    volumes:
      - ./certs:/usr/share/elasticsearch/config/certs
      - ./es01/data:/usr/share/elasticsearch/data
      - ./es01/logs:/usr/share/elasticsearch/logs
    ports:
      - 9200:9200
      - 9300:9300
    environment:
      - node.name=es01
      - cluster.name=${CLUSTER_NAME}
      - cluster.initial_master_nodes=es01,es02,es03
      - discovery.seed_hosts=es02,es03
      - ELASTIC_PASSWORD=${ELASTIC_PASSWORD}
      - bootstrap.memory_lock=true
      - xpack.security.enabled=true
      - xpack.security.http.ssl.enabled=true
      - xpack.security.http.ssl.key=certs/es01/es01.key
      - xpack.security.http.ssl.certificate=certs/es01/es01.crt
      - xpack.security.http.ssl.certificate_authorities=certs/ca/ca.crt
      - xpack.security.transport.ssl.enabled=true
      - xpack.security.transport.ssl.key=certs/es01/es01.key
      - xpack.security.transport.ssl.certificate=certs/es01/es01.crt
      - xpack.security.transport.ssl.certificate_authorities=certs/ca/ca.crt
      - xpack.security.transport.ssl.verification_mode=certificate
      - xpack.license.self_generated.type=${LICENSE}
    mem_limit: ${MEM_LIMIT}
    ulimits:
      memlock:
        soft: -1
        hard: -1
    deploy:
      resources:
        limits:
          cpus: '0.5'
          memory: 1g
        reservations:
          cpus: '0.25'
          memory: 512m
    healthcheck:
      test:
        [
          "CMD-SHELL",
          "curl -s --cacert config/certs/ca/ca.crt https://localhost:9200 | grep -q 'missing authentication credentials'",
        ]
      interval: 10s
      timeout: 10s
      retries: 120

  es02:
    container_name: es02
    depends_on:
      - es01
    image: elasticsearch:8.6.2
    volumes:
      - ./certs:/usr/share/elasticsearch/config/certs
      - ./es02/data:/usr/share/elasticsearch/data
      - ./es02/logs:/usr/share/elasticsearch/logs
    environment:
      - node.name=es02
      - cluster.name=${CLUSTER_NAME}
      - cluster.initial_master_nodes=es01,es02,es03
      - discovery.seed_hosts=es01,es03
      - bootstrap.memory_lock=true
      - xpack.security.enabled=true
      - xpack.security.http.ssl.enabled=true
      - xpack.security.http.ssl.key=certs/es02/es02.key
      - xpack.security.http.ssl.certificate=certs/es02/es02.crt
      - xpack.security.http.ssl.certificate_authorities=certs/ca/ca.crt
      - xpack.security.transport.ssl.enabled=true
      - xpack.security.transport.ssl.key=certs/es02/es02.key
      - xpack.security.transport.ssl.certificate=certs/es02/es02.crt
      - xpack.security.transport.ssl.certificate_authorities=certs/ca/ca.crt
      - xpack.security.transport.ssl.verification_mode=certificate
      - xpack.license.self_generated.type=${LICENSE}
    mem_limit: ${MEM_LIMIT}
    ulimits:
      memlock:
        soft: -1
        hard: -1
    deploy:
      resources:
        limits:
          cpus: '0.5'
          memory: 1g
        reservations:
          cpus: '0.25'
          memory: 512m
    healthcheck:
      test:
        [
          "CMD-SHELL",
          "curl -s --cacert config/certs/ca/ca.crt https://localhost:9200 | grep -q 'missing authentication credentials'",
        ]
      interval: 10s
      timeout: 10s
      retries: 120

  es03:
    container_name: es03
    depends_on:
      - es02
    image: elasticsearch:8.6.2
    volumes:
      - ./certs:/usr/share/elasticsearch/config/certs
      - ./es03/logs:/usr/share/elasticsearch/logs
      - ./es03/data:/usr/share/elasticsearch/data
    environment:
      - node.name=es03
      - cluster.name=${CLUSTER_NAME}
      - cluster.initial_master_nodes=es01,es02,es03
      - discovery.seed_hosts=es01,es02
      - bootstrap.memory_lock=true
      - xpack.security.enabled=true
      - xpack.security.http.ssl.enabled=true
      - xpack.security.http.ssl.key=certs/es03/es03.key
      - xpack.security.http.ssl.certificate=certs/es03/es03.crt
      - xpack.security.http.ssl.certificate_authorities=certs/ca/ca.crt
      - xpack.security.transport.ssl.enabled=true
      - xpack.security.transport.ssl.key=certs/es03/es03.key
      - xpack.security.transport.ssl.certificate=certs/es03/es03.crt
      - xpack.security.transport.ssl.certificate_authorities=certs/ca/ca.crt
      - xpack.security.transport.ssl.verification_mode=certificate
      - xpack.license.self_generated.type=${LICENSE}
    mem_limit: ${MEM_LIMIT}
    ulimits:
      memlock:
        soft: -1
        hard: -1
    deploy:
      resources:
        limits:
          cpus: '0.5'
          memory: 1g
        reservations:
          cpus: '0.25'
          memory: 512m
    healthcheck:
      test:
        [
          "CMD-SHELL",
          "curl -s --cacert config/certs/ca/ca.crt https://localhost:9200 | grep -q 'missing authentication credentials'",
        ]
      interval: 10s
      timeout: 10s
      retries: 120

  kibana:
    container_name: kibana
    depends_on:
      es01:
        condition: service_healthy
      es02:
        condition: service_healthy
      es03:
        condition: service_healthy
    image: kibana:8.6.2
    volumes:
      - ./certs:/usr/share/kibana/config/certs
      - /u01/elk/kibana/data:/usr/share/kibana/data
    ports:
      - ${KIBANA_PORT}:5601
    environment:
      - SERVERNAME=kibana
      - ELASTICSEARCH_HOSTS=https://es01:9200
      - ELASTICSEARCH_USERNAME=kibana_system
      - ELASTICSEARCH_PASSWORD=${KIBANA_PASSWORD}
      - SERVER_PUBLICBASEURL=http://192.168.174.130:5601
      - ELASTICSEARCH_SSL_CERTIFICATEAUTHORITIES=config/certs/ca/ca.crt
    mem_limit: ${MEM_LIMIT}
    healthcheck:
      test:
        [
          "CMD-SHELL",
          "curl -s -I http://localhost:5601 | grep -q 'HTTP/1.1 302 Found'",
        ]
      interval: 10s
      timeout: 10s
      retries: 120

#volumes:
#  certs:
#    driver: local
#  esdata01:
#    driver: local
#  esdata02:
#    driver: local
#  esdata03:
#    driver: local
#  kibanadata:
#    driver: local

a2. Create a .env file in the same directory

# Password for the 'elastic' user (at least 6 characters)

ELASTIC_PASSWORD=P@ssword

# Password for the 'kibana_system' user (at least 6 characters)

KIBANA_PASSWORD=P@ssword

# Version of Elastic products

STACK_VERSION=8.6.2

# Set the cluster name

CLUSTER_NAME=es_cluster

# Set to 'basic' or 'trial' to automatically start the 30-day trial

LICENSE=basic

#LICENSE=trial

# Port to expose Elasticsearch HTTP API to the host

ES_PORT=9200

#ES_PORT=127.0.0.1:9200

# Port to expose Kibana to the host

KIBANA_PORT=5601

#KIBANA_PORT=80

# Increase or decrease based on the available host memory (in bytes)

MEM_LIMIT=1073741824

# Project namespace (defaults to the current folder name if not set)

#COMPOSE_PROJECT_NAME=myproject
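
The setup service refuses to run when ELASTIC_PASSWORD or KIBANA_PASSWORD is empty, and the comments above ask for at least 6 characters. As a rough pre-flight check, the following Python sketch parses .env-style text and reports the same kind of problems before `docker compose up` is run. The helper names `parse_env` and `check_env` are made up for this illustration and are not part of Docker or Elasticsearch.

```python
def parse_env(text):
    """Parse KEY=VALUE lines, skipping blank lines and '#' comments."""
    values = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip()
    return values


def check_env(values, required=("ELASTIC_PASSWORD", "KIBANA_PASSWORD"), min_len=6):
    """Return a list of problems; an empty list means the file looks usable."""
    problems = []
    for name in required:
        value = values.get(name, "")
        if not value:
            problems.append(f"{name} is not set")
        elif len(value) < min_len:
            problems.append(f"{name} is shorter than {min_len} characters")
    return problems


# Hypothetical file content; the second password is deliberately too short.
sample = """
# comment line
ELASTIC_PASSWORD=P@ssword
KIBANA_PASSWORD=abc
MEM_LIMIT=1073741824
"""
env = parse_env(sample)
print(check_env(env))
```

In practice the text would come from reading the .env file next to docker-compose.yml.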

a3. Start in the background

docker compose up -d ## run in the background

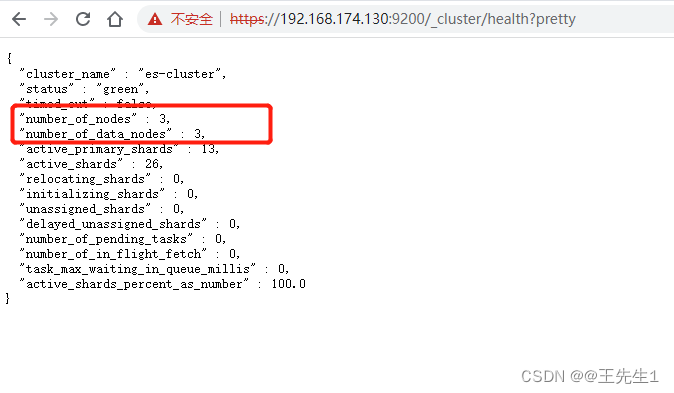

a4. Check cluster health

https://192.168.174.130:9200/_cluster/health?pretty
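
Because security is enabled, this endpoint has to be queried with the elastic user's credentials. The Python sketch below shows one way to interpret the JSON it returns, assuming the three-node cluster from the Compose file. The function name `summarize_health` and the sample payload are illustrative only; `cluster_name`, `status`, and `number_of_nodes` are fields of the cluster health response.

```python
import json


def summarize_health(payload, expected_nodes=3):
    """Return (ok, summary) for a parsed _cluster/health response."""
    status = payload.get("status")
    nodes = payload.get("number_of_nodes", 0)
    # Treat the cluster as fine only when it is green and every node has joined.
    ok = status == "green" and nodes >= expected_nodes
    summary = f"cluster={payload.get('cluster_name')} status={status} nodes={nodes}"
    return ok, summary


# Hypothetical response body, trimmed to the fields used above.
sample = json.loads(
    '{"cluster_name": "es_cluster", "status": "green", "number_of_nodes": 3}'
)
print(summarize_health(sample))
```

A yellow status or a node count below three would make the first element False, which is a hint to look at `docker compose logs` for the node that has not joined.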

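b. Notes on the shell script in docker-compose.yml

It checks whether the ELASTIC_PASSWORD and KIBANA_PASSWORD environment variables are set; if either is missing, it prints a hint and exits.

It checks whether the CA and the node certificates already exist. If not, it generates them with the elasticsearch-certutil tool and saves them under config/certs.

It sets the owner to root:root and the permissions to 750 for directories and 640 for files, to protect the certificate files.

It waits for Elasticsearch to become available. The script polls https://es01:9200 with curl; a response containing "missing authentication credentials" means the node is up and security is enabled, because the unauthenticated request was rejected. The check is retried every 30 seconds until it succeeds.

It sets the password of the kibana_system user. The curl request is repeated every 10 seconds until the response starts with an empty JSON object {}.

It prints "All done!" to signal that the script has finished.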
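
The two `until ... do sleep N; done` loops in the script are plain polling loops. A minimal Python sketch of the same idea follows; unlike the shell version it gives up after a maximum number of attempts, which is an addition made here for illustration. `wait_until` and `looks_ready` are hypothetical helpers, and the canned responses stand in for real curl output.

```python
import time


def wait_until(check, interval_s, max_attempts, sleep=time.sleep):
    """Call check() until it returns True; return the number of attempts used.

    Raises TimeoutError once max_attempts checks have failed.
    """
    for attempt in range(1, max_attempts + 1):
        if check():
            return attempt
        if attempt < max_attempts:
            sleep(interval_s)
    raise TimeoutError(f"condition not met after {max_attempts} attempts")


def looks_ready(body):
    """Same test as the script's grep: the node answered and demands credentials."""
    return "missing authentication credentials" in body


# Simulated responses: two empty replies while the node boots, then the 401 body.
responses = iter([
    "",
    "",
    '{"error":{"reason":"missing authentication credentials for REST request [/]"}}',
])
attempts = wait_until(
    lambda: looks_ready(next(responses)),
    interval_s=30,
    max_attempts=5,
    sleep=lambda seconds: None,  # skip real sleeping in this demonstration
)
print(attempts)
```

Passing the sleep function as a parameter keeps the loop testable without waiting 30 seconds per attempt.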

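c. Notes on healthcheck

test: the command or script that performs the health check. The CMD-SHELL form runs the command through a shell inside the container.

interval: the time between health checks; the default is 30s.

timeout: the maximum time a single check may take; the default is 30s.

retries: the number of consecutive failures after which the container is considered unhealthy; the default is 3.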
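
To make the role of retries concrete, here is a simplified Python model of how a sequence of check results maps to a container's health state. It is only a sketch of the documented rule (a container turns unhealthy after `retries` consecutive failures) and ignores details such as start_period; the function name `health_states` is invented for this example.

```python
def health_states(results, retries=3):
    """Map a sequence of check results (True = pass) to health states."""
    states = []
    state = "starting"
    failures = 0
    for passed in results:
        if passed:
            # Any successful check resets the failure streak.
            failures = 0
            state = "healthy"
        else:
            failures += 1
            if failures >= retries:
                state = "unhealthy"
        states.append(state)
    return states


print(health_states([False, False, True, False, False, False]))
```

With interval: 10s and retries: 120 as in the Compose file, a node therefore has roughly 120 x 10 s = 20 minutes to start answering before it is marked unhealthy.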

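d. Notes on depends_on

depends_on declares dependencies between services: it lists the services that this service depends on, and condition specifies the state the dependency must reach.

In this configuration, es01 depends on the setup service with condition: service_healthy, so es01 is started only after the health check of setup has passed.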
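
The start order implied by the depends_on entries in the Compose file can be worked out with a topological sort. The Python sketch below copies the dependency map from the file and uses the standard-library `graphlib` module (Python 3.9+); it shows ordering only and does not model the service_healthy waiting that Compose performs.

```python
from graphlib import TopologicalSorter

# Service -> services it depends on, as declared in docker-compose.yml.
depends_on = {
    "setup": set(),
    "es01": {"setup"},
    "es02": {"es01"},
    "es03": {"es02"},
    "kibana": {"es01", "es02", "es03"},
}

# static_order() yields every service after all of its dependencies.
start_order = list(TopologicalSorter(depends_on).static_order())
print(start_order)
```

Because the dependencies form a single chain, there is only one valid order here: setup, es01, es02, es03, kibana.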

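e. mem_limit and resource limits

mem_limit: the maximum amount of memory the container may use. It is set through the ${MEM_LIMIT} variable, whose value must be defined (in the .env file) before deployment.

ulimits: the container's ulimit settings, which control its use of system resources. Here memlock is set to -1 for both soft and hard, which removes the limit on locked memory; bootstrap.memory_lock=true relies on this.

deploy: defines the deployment policy and resource configuration of the service, including:

resources: the upper and lower bounds on the CPU and memory the service may use. In this example the limit is 0.5 CPU and 1G of memory, and the reservation is 0.25 CPU and 512M of memory.

Note that limits and reservations are two different levels: limits is a hard cap, while reservations is the amount guaranteed to the service, and the difference affects how Docker schedules the container.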
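
The .env file gives MEM_LIMIT in bytes (1073741824) while the deploy section uses suffixed values such as 1g and 512m. The small Python sketch below converts both notations to bytes so they can be compared, assuming binary units (1g = 1024^3 bytes) as Docker uses for memory. `to_bytes` is a hypothetical helper that handles whole numbers only.

```python
UNITS = {"b": 1, "k": 1024, "m": 1024**2, "g": 1024**3}


def to_bytes(size):
    """Convert '512m', '1g', or a plain byte count to an integer number of bytes."""
    text = str(size).strip().lower()
    if text.isdigit():
        return int(text)
    number, unit = text[:-1], text[-1]
    if unit not in UNITS or not number.isdigit():
        raise ValueError(f"unsupported size: {size!r}")
    return int(number) * UNITS[unit]


# MEM_LIMIT from .env and the deploy limit describe the same amount of memory.
print(to_bytes("1g") == to_bytes("1073741824"))
print(to_bytes("512m"))
```

So mem_limit and the deploy limit agree at 1 GiB, and the reservation is half of that.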

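3. Deploy the Logstash container

We use Logstash to collect, process, and transform data and send it to Elasticsearch. Here Logstash is deployed with its configuration files mounted from the host.

Prepare the Logstash configuration files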

cat logstash.yml

http.host: "0.0.0.0"

xpack.monitoring.elasticsearch.hosts: [ "https://es01:9200" ]

xpack.monitoring.elasticsearch.username: "elastic"

xpack.monitoring.elasticsearch.password: "P@ssword"

xpack.monitoring.elasticsearch.ssl.certificate_authority: "/usr/share/logstash/config/ca.crt"

cat logstash.conf

input {
  beats {
    port => 5044
  }
}

filter {
  grok {
    match => { "message" => "%{COMBINEDAPACHELOG}" }
  }
}

output {
  elasticsearch {
    hosts => "https://es01:9200"
    user => "elastic"
    password => "P@ssword"
    ssl => true
    cacert => "/usr/share/logstash/config/ca.crt"
    index => "httpd_logstash-%{+YYYY.MM.dd}"
  }
}

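This pipeline configuration defines how Logstash handles the log data it receives:

input listens on port 5044 for data sent over the Beats protocol.

filter parses and transforms the raw log lines; here the grok plugin matches the Apache combined log format.

output sends the processed events to Elasticsearch.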
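
The index setting `httpd_logstash-%{+YYYY.MM.dd}` creates one index per day, and Logstash evaluates the date part against the event's @timestamp in UTC. The Python sketch below reproduces that naming rule to show which index an event lands in; `daily_index` is a name chosen for this illustration.

```python
from datetime import datetime, timedelta, timezone


def daily_index(prefix, event_time):
    """Build '<prefix>-YYYY.MM.dd' from the event timestamp, evaluated in UTC."""
    utc_time = event_time.astimezone(timezone.utc)
    return f"{prefix}-{utc_time:%Y.%m.%d}"


# An event logged at 02:00 on 8 November in UTC+8 is still 7 November in UTC.
cst = timezone(timedelta(hours=8))
print(daily_index("httpd_logstash", datetime(2023, 11, 8, 2, 0, tzinfo=cst)))
```

This is why events from the early morning in a UTC+8 time zone show up in the previous day's index.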

Run Logstash

docker run -d \
  -v /u01/elk/logstash/config/logstash.conf:/usr/share/logstash/config/logstash.conf \
  -v /u01/elk/logstash/config/logstash.yml:/usr/share/logstash/config/logstash.yml \
  -v /u01/elk/logstash/config/ca.crt:/usr/share/logstash/config/ca.crt \
  --name logstash --network elasticsearch_default \
  logstash:8.6.2 ./bin/logstash -f /usr/share/logstash/config/your_config_path

4. Deploy the Filebeat container

4.1 Prepare the Filebeat configuration file

We create a configuration file named filebeat.yml on the host and mount it into the container.

filebeat.inputs:
  - type: log
    paths:
      - /u01/elk/filebeat/logs/*.log

output.logstash:
  hosts: ["logstash:5044"]

This configuration file defines how Filebeat collects and ships log data:

filebeat.inputs lists the log file paths that Filebeat watches.

output.logstash defines how the log data is sent to Logstash.
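
The path `/u01/elk/filebeat/logs/*.log` is a glob: the `*` matches within a single directory level and does not descend into subdirectories. The Python sketch below approximates that behaviour with `pathlib` to show which files such a pattern would cover. It is an illustration of glob semantics, not Filebeat's own matcher, and `is_watched` is a hypothetical helper.

```python
from pathlib import PurePosixPath

PATTERN = "/u01/elk/filebeat/logs/*.log"


def is_watched(path, pattern=PATTERN):
    """Return True when the path matches the glob; '*' does not cross '/'."""
    return PurePosixPath(path).match(pattern)


for candidate in (
    "/u01/elk/filebeat/logs/app.log",      # direct child ending in .log
    "/u01/elk/filebeat/logs/sub/app.log",  # one directory deeper
    "/u01/elk/filebeat/logs/app.log.1",    # rotated file with a different suffix
):
    print(candidate, is_watched(candidate))
```

Only the first candidate matches, so rotated files or logs in subdirectories would need their own entries under paths.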

4.2 Start the Filebeat container

docker run -d --name filebeat \
  --network elasticsearch_default \
  -v /u01/elk/to/filebeat.yml:/usr/share/filebeat/filebeat.yml \
  -v /var/lib/docker/containers:/var/lib/docker/containers:ro \
  docker.elastic.co/beats/filebeat:8.6.2

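This docker run command creates and starts a container named filebeat. The --network option attaches the container to the elasticsearch_default network created earlier by Docker Compose. The first -v option mounts the Filebeat configuration file from the host into the container, and the second mounts the Docker container log directory read-only so that container logs can be collected and processed. Note that the filebeat.yml above reads /u01/elk/filebeat/logs/*.log, so that directory must also be mounted into the container for those files to be picked up.

5. Start the Kibana container

Finally, we start Kibana in a Docker container to visualize and analyze the log data in Elasticsearch. Skip this step if Kibana is already running from the Compose file above, because the container name kibana would conflict.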

docker run -d --name kibana \
  -p 5601:5601 \
  --network elasticsearch_default \
  kibana:8.6.2

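This docker run command creates and starts a container named kibana. The -p option maps the Kibana container's port 5601 to the host, and the --network option attaches the container to the elasticsearch_default network.

III. Configuration files

logstash:

Configuration reference: https://www.elastic.co/guide/en/logstash/current/index.html

examples -> options

In the conf file, match takes a grok pattern; the bundled patterns are in: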

[root@hh patterns]# pwd

/usr/share/logstash/vendor/bundle/jruby/2.5.0/gems/logstash-patterns-core-4.3.4/patterns

logstash.conf

input {
  beats {
    port => 5044
  }
  file {
    path => [ "/usr/local/nginx/logs/access.log", "/usr/local/nginx/logs/error.log" ]
    sincedb_path => "/var/log/logstash/since.db"
    start_position => "beginning"
    type => "nginxlog"
  }
  tcp {
    mode => "server"
    host => "0.0.0.0"
    port => 8888
    type => "tcplog"
  }
  udp {
    port => 8888
    type => "udplog"
  }
}

filter {
  grok {
    # match => { "message" => "%{IP:client} %{WORD:method} %{URIPATHPARAM:request} %{NUMBER:bytes} %{NUMBER:duration}" }
    match => { "message" => "(?<ip>[0-9\.]+).*\[(?<time>.+)\] \"(?<method>[A-Z]+) (?<url>\S+) (?<ver>[^\"]+)\" (?<rc>\d+) (?<size>\d+) \".*\" \"(?<agent>.*)\""}
  }
}

output {
  #stdout{ codec => "json"}
  stdout{ codec => "rubydebug"}
}
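
To see what the custom grok expression above extracts, the same regular expression can be tried outside Logstash. The Python sketch below is a direct transcription (Python writes named groups as `(?P<name>...)`), applied to a made-up nginx access log line; the sample line and its values are assumptions for illustration.

```python
import re

# Same structure as the grok match above, with Python-style named groups.
ACCESS_LINE = re.compile(
    r'(?P<ip>[0-9.]+).*\[(?P<time>.+)\] '
    r'"(?P<method>[A-Z]+) (?P<url>\S+) (?P<ver>[^"]+)" '
    r'(?P<rc>\d+) (?P<size>\d+) ".*" "(?P<agent>.*)"'
)

# Hypothetical access log line in the default nginx "combined" layout.
line = (
    '192.168.174.1 - - [07/Nov/2023:10:15:32 +0800] '
    '"GET /index.html HTTP/1.1" 200 612 "-" "curl/7.29.0"'
)

match = ACCESS_LINE.match(line)
fields = match.groupdict() if match else {}
for name, value in fields.items():
    print(f"{name}: {value}")
```

Each named group becomes a field on the event, which is what the rubydebug output prints when the pipeline runs.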

elasticsearch

elasticsearch.yml

[root@hh elasticsearch]# grep -Pv "^(#|$)" elasticsearch.yml

node.name: es1 ## hostname as listed in the hosts file

path.data: /var/lib/elasticsearch

path.logs: /var/log/elasticsearch

network.host: 192.168.174.130 ### listen address (or 0.0.0.0)

discovery.seed_hosts: ["es1"] ### list every node here for a multi-node cluster

cluster.initial_master_nodes: ["es1"]

http.cors.enabled: true

http.cors.allow-origin: "*"

kibana

#server.port: 5601

server.host: '0.0.0.0'

#server.basePath: ""

#server.maxPayloadBytes: 1048576

#server.name: "your-hostname"

elasticsearch.url: 'http://192.168.174.130:9201'

#elasticsearch.preserveHost: true

#kibana.index: ".kibana"

#kibana.defaultAppId: "discover"

#elasticsearch.username: "user"

#elasticsearch.password: "pass"

#server.ssl.enabled: false

#server.ssl.certificate: /path/to/your/server.crt

#server.ssl.key: /path/to/your/server.key

#elasticsearch.ssl.certificate: /path/to/your/client.crt

#elasticsearch.ssl.key: /path/to/your/client.key

#elasticsearch.ssl.certificateAuthorities: [ "/path/to/your/CA.pem" ]

#elasticsearch.ssl.verificationMode: full

#elasticsearch.pingTimeout: 1500

#elasticsearch.requestTimeout: 30000

#elasticsearch.requestHeadersWhitelist: [ authorization ]

#elasticsearch.customHeaders: {}

#elasticsearch.shardTimeout: 0

#elasticsearch.startupTimeout: 5000

#pid.file: /var/run/kibana.pid

#logging.dest: stdout

#logging.silent: false

#logging.quiet: false

#logging.verbose: false

#ops.interval: 5000

i18n.defaultLocale: "zh_CN"

Added later:

./elasticsearch-setup-passwords interactive

## The next two commands enable SSL

cd /usr/share/elasticsearch/bin/

./elasticsearch-certutil ca

./elasticsearch-certutil cert --ca elastic-stack-ca.p12

chmod 640 /etc/elasticsearch/elastic-stack-ca.p12

chown elasticsearch:elasticsearch /etc/elasticsearch/elastic-stack-ca.p12

elasticsearch.yml

root@cygx-elk:/etc/elasticsearch# egrep -v "^#|^$" elasticsearch.yml

cluster.name: elk

node.name: cygx-es

path.data: /var/lib/elasticsearch

path.logs: /var/log/elasticsearch

bootstrap.memory_lock: false

network.host: 0.0.0.0

network.publish_host: 192.168.0.153

http.port: 9200

discovery.seed_hosts: ["192.168.0.153"]

cluster.initial_master_nodes: ["192.168.0.153"]

action.destructive_requires_name: true

xpack.security.enabled: true

xpack.security.transport.ssl.enabled: true

xpack.security.transport.ssl.verification_mode: certificate

xpack.security.transport.ssl.keystore.path: elastic-certificates.p12

xpack.security.transport.ssl.truststore.path: elastic-certificates.p12

xpack.security.transport.ssl.keystore.password: 123456

xpack.security.transport.ssl.truststore.password: 123456

kibana.yml

root@cygx-elk:/etc/kibana# egrep -v "^#|^$" kibana.yml

server.port: 5601

server.host: "192.168.0.153"

elasticsearch.hosts: ["http://192.168.0.153:9200"]

elasticsearch.username: "kibana"

elasticsearch.password: "123456"

i18n.locale: "zh-CN"

logstash.yml

root@cygx-elk:/etc/logstash# egrep -v "^#|^$" logstash.yml

path.data: /var/lib/logstash

path.logs: /var/log/logstash

logstash-to-es.conf

##logstash-to-es.conf

input {
  redis {
    data_type => "list"
    key => "cygx-log"
    host => "192.168.0.153"
    port => "6379"
    db => "0"
    password => "1111111"
  }
}

filter {
  if [fields][project] == "cygx-nginx-accesslog" {
    geoip {
      source => "clientip"
      target => "geoip"
      database => "/etc/logstash/GeoLite2-City_20231107/GeoLite2-City.mmdb"
      add_field => [ "[geoip][coordinates]", "%{[geoip][longitude]}" ]
      add_field => [ "[geoip][coordinates]", "%{[geoip][latitude]}" ]
    }
    mutate {
      convert => [ "[geoip][coordinates]", "float" ]
    }
  }
}

output {
  if [fields][project] == "cygx-nginx-accesslog" {
    elasticsearch {
      hosts => ["http://192.168.0.153:9200"]
      user => "elastic"
      password => "123456"
      index => "cygx-nginx-accesslog-%{+YYYY.MM.dd}"
    }
  }
}
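
The filter section builds a [longitude, latitude] array: the two add_field entries append the values as strings, and mutate/convert then turns them into floats. The Python sketch below mimics that sequence on a dictionary shaped like a Logstash event. It is an approximation for illustration only; `add_coordinates` and the sample values are hypothetical.

```python
def add_coordinates(event):
    """Mimic the geoip add_field + mutate convert steps for the nginx project."""
    if event.get("fields", {}).get("project") != "cygx-nginx-accesslog":
        return event  # other projects pass through untouched
    geoip = event.get("geoip", {})
    if "longitude" in geoip and "latitude" in geoip:
        # add_field appends strings in order: longitude first, then latitude.
        as_strings = [str(geoip["longitude"]), str(geoip["latitude"])]
        # mutate/convert turns every element of the array into a float.
        geoip["coordinates"] = [float(value) for value in as_strings]
    return event


# Hypothetical event after the geoip lookup has filled in the location.
event = {
    "fields": {"project": "cygx-nginx-accesslog"},
    "geoip": {"longitude": 116.3883, "latitude": 39.9289},
}
print(add_coordinates(event)["geoip"]["coordinates"])
```

The longitude-first order matters when the array is mapped as a geo_point in Elasticsearch, since the array form of a geo_point is [lon, lat].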

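This article walks through deploying ELK (Elasticsearch, Logstash, Kibana) and Filebeat with Docker on CentOS 7. It first adds the Docker repository with yum, then sets up the Docker network and the Elasticsearch service. Next it configures the Elasticsearch, Kibana, and Logstash containers, covering certificate generation, security settings, health checks, and resource limits. Finally it shows how to configure and start Filebeat to collect and ship log data. The emphasis throughout is on security and an automated log-processing pipeline.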
