1. Installing and configuring Docker

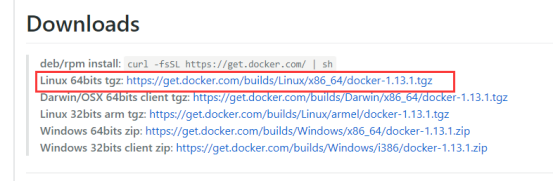

1.1. Download the required Docker binaries

https://github.com/moby/moby/releases

1.2. Create a docker directory, then upload and unpack the binary package

*cd /usr*

*mkdir docker*

*cd docker*

*rz* (select the prepared Docker binary package to upload)

*tar -zxvf docker-1.13.1.tgz*

1.3. Copy all docker* files from the extracted directory to /usr/bin

*cp /usr/docker/docker/docker\* /usr/bin*
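Steps 1.2 and 1.3 can also be scripted. This is a minimal sketch, assuming the tarball unpacks into a top-level docker/ directory, as the cp command above implies; the helper name `install_docker_tgz` is hypothetical, and the work and target directories are parameters so it can be tried on scratch directories first.

```shell
#!/usr/bin/env bash
# Hypothetical helper covering steps 1.2 and 1.3: unpack a Docker
# static-binary tarball and install every docker* file into BIN_DIR.
# Usage: install_docker_tgz TARBALL WORK_DIR BIN_DIR
install_docker_tgz() {
  local tarball="$1" work_dir="$2" bin_dir="$3"
  local files=() restore_glob
  mkdir -p "$work_dir" "$bin_dir" || return 1

  # Same as "cd /usr/docker && tar -zxvf docker-1.13.1.tgz", minus -v.
  tar -zxf "$tarball" -C "$work_dir" || return 1

  # With nullglob, a pattern that matches nothing expands to nothing
  # instead of staying as the literal string "docker*".
  restore_glob="$(shopt -p nullglob)"
  shopt -s nullglob
  files=("$work_dir"/docker/docker*)
  eval "$restore_glob"   # put the caller's nullglob setting back

  if [ "${#files[@]}" -eq 0 ]; then
    echo "no docker* files found in $work_dir/docker" >&2
    return 1
  fi
  # install(1) copies the files and marks them executable in one step.
  install -m 0755 "${files[@]}" "$bin_dir"/
}
```

For the layout used here, the call would be `install_docker_tgz /usr/docker/docker-1.13.1.tgz /usr/docker /usr/bin`, run as root.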

1.4. Create the Docker systemd unit file

*vi /usr/lib/systemd/system/docker.service*

[Unit]

Description=Docker Application Container Engine

Documentation=http://docs.docker.io

[Service]

Environment="PATH=/root/local/bin:/bin:/sbin:/usr/bin:/usr/sbin"

EnvironmentFile=-/etc/sysconfig/docker

ExecStart=/usr/bin/dockerd --log-level=error $DOCKER_NETWORK_OPTIONS --insecure-registry 172.16.3.30:5000

ExecReload=/bin/kill -s HUP $MAINPID

Restart=on-failure

RestartSec=5

LimitNOFILE=infinity

LimitNPROC=infinity

LimitCORE=infinity

Delegate=yes

KillMode=process

[Install]

WantedBy=multi-user.target

1.5. Stop and disable the firewall

*systemctl stop firewalld*

*systemctl disable firewalld*

1.6. Start Docker and enable it at boot

*systemctl daemon-reload*

*systemctl enable docker*

*systemctl start docker*

1.7. Test

*docker version*

*docker run hello-world*

2. Installing and configuring Kubernetes

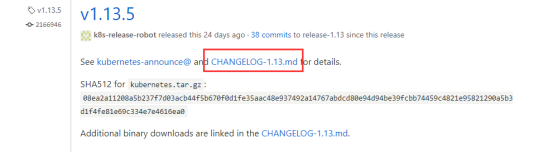

2.1. Download the Kubernetes binaries for the required version (from mainland China, the download requires a proxy)

https://github.com/kubernetes/kubernetes/releases

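The kubernetes-server-linux-amd64.tar.gz file listed under Server Binaries already contains every component Kubernetes needs, so the client and other packages do not have to be downloaded separately.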

2.2. This deployment uses only two nodes: one master and one worker

**master:** etcd, kube-apiserver, kube-controller-manager, kube-scheduler, docker

**worker:** kubelet, kube-proxy, flanneld, docker

2.3. Deploying the master node

2.3.1. Installing the etcd database

*etcd is the primary datastore of the Kubernetes cluster, so install and start it before any other Kubernetes service.*

*2.3.1.1. Download the required version of the etcd binaries*

https://github.com/coreos/etcd/releases/

*2.3.1.2. Create a k8s directory, then upload and unpack the binary package*

*cd /usr*

*mkdir k8s*

*cd k8s*

*rz* (select the prepared etcd binary package to upload)

*tar -zxvf etcd-v3.3.11-linux-amd64.tar.gz*

*2.3.1.3. Copy etcd and etcdctl from the extracted directory to /usr/bin*

*cp etcd-v3.3.11-linux-amd64/etcd etcd-v3.3.11-linux-amd64/etcdctl /usr/bin/*

*2.3.1.4. Create the etcd systemd unit file*

*vi /usr/lib/systemd/system/etcd.service*

[Unit]

Description=Etcd Server

After=network.target

[Service]

Type=simple

WorkingDirectory=/var/lib/etcd/

EnvironmentFile=-/etc/etcd/etcd.conf

ExecStart=/usr/bin/etcd

[Install]

WantedBy=multi-user.target

(WorkingDirectory is the directory where etcd stores its data; create /var/lib/etcd before starting the etcd service.)

**2.3.1.5. Create the configuration file /etc/etcd/etcd.conf** (172.16.3.30 is the master node's IP; replace it with yours)

*vi /etc/etcd/etcd.conf*

ETCD_NAME=default

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_CLIENT_URLS="http://172.16.3.30:2379,http://172.16.3.30:4001,http://127.0.0.1:2379,http://127.0.0.1:4001"

ETCD_ADVERTISE_CLIENT_URLS="http://172.16.3.30:2379,http://172.16.3.30:4001,http://127.0.0.1:2379,http://127.0.0.1:4001"
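The four etcd.conf entries follow one pattern: each client URL pairs an address with port 2379 or 4001. A minimal sketch that renders the file from the master IP and also creates the data directory used as `WorkingDirectory` in etcd.service; the helper names and the `ROOT` prefix (empty for a real install, a scratch directory for testing) are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build "http://IP:PORT,..." for every IP and the
# etcd client ports 2379 and 4001, in the same order as the config above.
etcd_client_urls() {
  local urls=() ip port
  for ip in "$@"; do
    for port in 2379 4001; do
      urls+=("http://${ip}:${port}")
    done
  done
  local IFS=,          # "${urls[*]}" joins elements with the first IFS char
  echo "${urls[*]}"
}

# Hypothetical helper: write ROOT/etc/etcd/etcd.conf and create the data
# directory (WorkingDirectory=/var/lib/etcd/) that etcd.service expects.
# Usage: write_etcd_conf ROOT MASTER_IP
write_etcd_conf() {
  local root="$1" master_ip="$2" urls
  urls="$(etcd_client_urls "$master_ip" 127.0.0.1)"
  mkdir -p "$root/etc/etcd" "$root/var/lib/etcd" || return 1
  cat > "$root/etc/etcd/etcd.conf" <<EOF
ETCD_NAME=default
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_CLIENT_URLS="${urls}"
ETCD_ADVERTISE_CLIENT_URLS="${urls}"
EOF
}
```

On the master itself, the call would be `write_etcd_conf "" 172.16.3.30`, run as root.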

*2.3.1.6. Start etcd and enable it at boot*

*systemctl daemon-reload*

*systemctl enable etcd.service*

*systemctl start etcd.service*

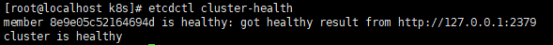

*2.3.1.7. Verify*

*etcdctl cluster-health*
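Right after `systemctl start`, etcd may need a moment before `etcdctl cluster-health` succeeds. A small retry loop avoids failing on the first attempt; this is a sketch, and the `retry` helper with its attempt count and delay in seconds is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: run a command until it succeeds or the attempts
# run out. Usage: retry ATTEMPTS DELAY_SECONDS COMMAND [ARGS...]
retry() {
  local attempts="$1" delay="$2" i
  shift 2
  for ((i = 1; i <= attempts; i++)); do
    if "$@"; then
      return 0
    fi
    # Do not sleep after the final failed attempt.
    if [ "$i" -lt "$attempts" ]; then
      sleep "$delay"
    fi
  done
  echo "command failed after $attempts attempts: $*" >&2
  return 1
}
```

For example, `retry 10 3 etcdctl cluster-health` checks up to ten times, three seconds apart.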

*2.3.2. The kube-apiserver service*

*2.3.2.1. Upload the prepared Kubernetes binary package and unpack it into /usr/k8s*

*cd /usr/k8s*

*rz* (select the prepared Kubernetes binary package to upload)

*tar -zxvf kubernetes-server-linux-amd64.tar.gz*

*2.3.2.2. Copy the required binaries to /usr/bin*

*cp -r /usr/k8s/kubernetes/server/bin/{kube-apiserver,kube-controller-manager,kube-scheduler,kubectl} /usr/bin/*
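Section 2.2 assigns different binaries to each role, and the brace expansion in the cp command above expands to one full path per name. A sketch that keeps that mapping in one place and checks that every binary exists before copying anything; the function names and the `master`/`worker` role labels are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical mapping from node role (section 2.2) to the binaries copied
# from kubernetes/server/bin.
k8s_binaries_for_role() {
  case "$1" in
    master) echo kube-apiserver kube-controller-manager kube-scheduler kubectl ;;
    worker) echo kube-proxy kubelet ;;
    *) echo "unknown role: $1" >&2; return 1 ;;
  esac
}

# Usage: install_k8s_binaries ROLE SRC_BIN_DIR DEST_DIR
install_k8s_binaries() {
  local role="$1" src="$2" dest="$3" names name
  names="$(k8s_binaries_for_role "$role")" || return 1
  # Check every file first so a partial copy never happens.
  for name in $names; do
    if [ ! -f "$src/$name" ]; then
      echo "missing $src/$name" >&2
      return 1
    fi
  done
  mkdir -p "$dest" || return 1
  for name in $names; do
    install -m 0755 "$src/$name" "$dest/$name" || return 1
  done
}
```

On the master this would be `install_k8s_binaries master /usr/k8s/kubernetes/server/bin /usr/bin`; the worker step in 2.4.1.2 corresponds to the `worker` role.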

*2.3.2.3. Create the kube-apiserver systemd unit file*

*vi /usr/lib/systemd/system/kube-apiserver.service*

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

After=network.target

After=etcd.service

[Service]

EnvironmentFile=-/etc/kubernetes/apiserver

ExecStart=/usr/bin/kube-apiserver \

$KUBE_LOGTOSTDERR \

$KUBE_LOG_LEVEL \

$KUBE_ETCD_SERVERS \

$KUBE_API_ADDRESS \

$KUBE_API_PORT \

$KUBELET_PORT \

$KUBE_ALLOW_PRIV \

$KUBE_SERVICE_ADDRESSES \

$KUBE_ADMISSION_CONTROL \

$KUBE_API_ARGS

Restart=on-failure

Type=notify

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

**2.3.2.4. Create the apiserver configuration file** (172.16.3.30 is the master node's IP; replace it with yours)

*vi /etc/kubernetes/apiserver*

KUBE_API_ADDRESS="--address=0.0.0.0"

KUBE_API_PORT="--port=8080"

KUBELET_PORT="--kubelet-port=10250"

KUBE_ETCD_SERVERS="--etcd-servers=http://172.16.3.30:2379"

KUBE_SERVICE_ADDRESSES="--service-cluster-ip-range=10.254.0.0/16"

KUBE_ADMISSION_CONTROL="--admission-control=NamespaceLifecycle,NamespaceExists,LimitRanger,SecurityContextDeny,ResourceQuota"

KUBE_API_ARGS=""
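systemd loads /etc/kubernetes/apiserver as an `EnvironmentFile` and substitutes each bare `$VAR` word in `ExecStart`, splitting the value at whitespace; a variable that is unset or empty contributes no argument at all. That is why `$KUBE_LOGTOSTDERR`, `$KUBE_LOG_LEVEL` and `$KUBE_ALLOW_PRIV`, which this file does not define, simply drop out. The sketch below previews the resulting command line with bash; it assumes the file holds only simple `KEY="value"` lines (more elaborate quoting may be parsed differently by bash and systemd), and `preview_apiserver_cmd` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the kube-apiserver command line that the
# ExecStart above would produce from an EnvironmentFile.
# Usage: preview_apiserver_cmd /path/to/apiserver
preview_apiserver_cmd() {
  (
    # Subshell: start from a clean slate so variables exported by the
    # caller cannot leak in, and nothing sourced leaks back out.
    unset KUBE_LOGTOSTDERR KUBE_LOG_LEVEL KUBE_ETCD_SERVERS KUBE_API_ADDRESS \
      KUBE_API_PORT KUBELET_PORT KUBE_ALLOW_PRIV KUBE_SERVICE_ADDRESSES \
      KUBE_ADMISSION_CONTROL KUBE_API_ARGS
    . "$1" || exit 1
    set -f   # no filename globbing while expanding the unquoted values
    # Unquoted $VAR splits on whitespace and vanishes when empty, like a
    # bare $VAR word in a systemd ExecStart line.
    set -- /usr/bin/kube-apiserver \
      $KUBE_LOGTOSTDERR $KUBE_LOG_LEVEL $KUBE_ETCD_SERVERS \
      $KUBE_API_ADDRESS $KUBE_API_PORT $KUBELET_PORT \
      $KUBE_ALLOW_PRIV $KUBE_SERVICE_ADDRESSES \
      $KUBE_ADMISSION_CONTROL $KUBE_API_ARGS
    printf '%s\n' "$*"
  )
}
```

Running `preview_apiserver_cmd /etc/kubernetes/apiserver` before `systemctl start` shows exactly which flags the API server will receive.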

*2.3.3. The kube-controller-manager service*

*2.3.3.1. Create the kube-controller-manager systemd unit file*

*vi /usr/lib/systemd/system/kube-controller-manager.service*

[Unit]

Description=Kubernetes Controller Manager

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

After=kube-apiserver.service

Requires=kube-apiserver.service

[Service]

EnvironmentFile=-/etc/kubernetes/controller-manager

ExecStart=/usr/bin/kube-controller-manager $KUBE_CONTROLLER_MANAGER_ARGS

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

**2.3.3.2. Create the controller-manager configuration file** (172.16.3.30 is the master node's IP)

*vi /etc/kubernetes/controller-manager*

KUBE_CONTROLLER_MANAGER_ARGS="--master=http://172.16.3.30:8080 --logtostderr=true --log-dir=/var/lib/kubernetes --v=0"

*2.3.4. The kube-scheduler service*

*2.3.4.1. Create the kube-scheduler systemd unit file*

*vi /usr/lib/systemd/system/kube-scheduler.service*

[Unit]

Description=Kubernetes Scheduler Plugin

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

After=kube-apiserver.service

Requires=kube-apiserver.service

[Service]

EnvironmentFile=-/etc/kubernetes/scheduler

ExecStart=/usr/bin/kube-scheduler $KUBE_SCHEDULER_ARGS

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

**2.3.4.2. Create the scheduler configuration file** (172.16.3.30 is the master node's IP)

*vi /etc/kubernetes/scheduler*

KUBE_SCHEDULER_ARGS="--master=http://172.16.3.30:8080 --logtostderr=true --log-dir=/var/log/kubernetes --v=0"
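The controller-manager and scheduler files differ only in the variable name and log directory, and both point at the API server's insecure port 8080 on the master. A sketch that writes both from a single master IP so the two cannot drift apart; `write_master_env_files` is hypothetical, the output directory is a parameter, and both log directories are kept exactly as given above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write the controller-manager and scheduler
# EnvironmentFiles (2.3.3.2 and 2.3.4.2) for one master IP.
# Usage: write_master_env_files OUT_DIR MASTER_IP
write_master_env_files() {
  local out="$1" master="http://$2:8080"
  mkdir -p "$out" || return 1
  printf 'KUBE_CONTROLLER_MANAGER_ARGS="--master=%s --logtostderr=true --log-dir=/var/lib/kubernetes --v=0"\n' \
    "$master" > "$out/controller-manager" || return 1
  printf 'KUBE_SCHEDULER_ARGS="--master=%s --logtostderr=true --log-dir=/var/log/kubernetes --v=0"\n' \
    "$master" > "$out/scheduler"
}
```

On the master: `write_master_env_files /etc/kubernetes 172.16.3.30`.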

*2.3.5. Start each component and enable it at boot*

*systemctl daemon-reload*

*systemctl enable kube-apiserver.service*

*systemctl start kube-apiserver.service*

*systemctl enable kube-controller-manager.service*

*systemctl start kube-controller-manager.service*

*systemctl enable kube-scheduler.service*

*systemctl start kube-scheduler.service*
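The enable/start pairs repeat for every component, and the order matters: kube-apiserver is ordered after etcd, and the controller manager and scheduler both have `Requires=kube-apiserver.service`. A sketch that enables and starts units strictly in the order given and stops at the first failure; `enable_and_start` is hypothetical, and the `SYSTEMCTL` variable is an assumption added so that `echo` can be substituted for a dry run.

```shell
#!/usr/bin/env bash
# Hypothetical helper: daemon-reload once, then enable and start each unit
# in the order given, stopping at the first failure.
# Set SYSTEMCTL=echo for a dry run that only prints the commands.
enable_and_start() {
  local sc="${SYSTEMCTL:-systemctl}" unit
  $sc daemon-reload || return 1
  for unit in "$@"; do
    $sc enable "$unit" || return 1
    $sc start "$unit" || { echo "failed to start $unit" >&2; return 1; }
  done
}
```

For the master: `enable_and_start etcd.service kube-apiserver.service kube-controller-manager.service kube-scheduler.service`; prefixing the call with `SYSTEMCTL=echo` only prints the commands.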

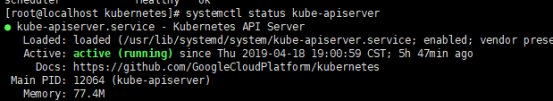

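*2.3.6. Verify the master node and check its status (kubectl should report every component as Healthy, and systemctl status should show active (running) for each service)*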

*kubectl get componentstatuses*

*systemctl status etcd*

*systemctl status kube-apiserver*

*systemctl status kube-controller-manager*

*systemctl status kube-scheduler*
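The `kubectl get componentstatuses` check can also be scripted by reading its STATUS column. This sketch assumes the default table layout (a header line, then NAME, STATUS, MESSAGE and ERROR columns), and `all_components_healthy` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl get componentstatuses` output on stdin
# and succeed only if there is at least one component row and every row
# reports Healthy. Unhealthy component names are printed.
all_components_healthy() {
  awk '
    NR == 1 { next }            # skip the header line
    NF == 0 { next }            # ignore blank lines
    { rows++ }
    $2 != "Healthy" { print "unhealthy: " $1; bad = 1 }
    END { if (bad || rows == 0) exit 1; exit 0 }
  '
}
```

Usage: `kubectl get componentstatuses | all_components_healthy && echo "master OK"`.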

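*2.4. Deploying the worker node*

2.4.0. Re-apply IP forwarding and the iptables settings automatically after a reboot

Append the following lines to ~/.bashrc (they run every time root starts an interactive shell, so they are re-applied at the first root login after a reboot):

vi ~/.bashrc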

echo 1 > /proc/sys/net/ipv4/ip_forward

iptables -P INPUT ACCEPT

iptables -P FORWARD ACCEPT

iptables -F

Then load the settings into the current shell:

source ~/.bashrc
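For the ip_forward part, a sysctl drop-in file is an alternative that systemd-sysctl applies during boot, without waiting for a root login. A minimal sketch, assuming a drop-in directory such as /etc/sysctl.d; the file name 99-k8s-ip-forward.conf and the helper name are hypothetical, and the iptables policy lines are not covered by it.

```shell
#!/usr/bin/env bash
# Hypothetical helper: persist IPv4 forwarding as a sysctl drop-in file.
# Usage: write_ip_forward_conf DIR   (DIR is /etc/sysctl.d on a real host)
write_ip_forward_conf() {
  local dir="$1"
  mkdir -p "$dir" || return 1
  printf 'net.ipv4.ip_forward = 1\n' > "$dir/99-k8s-ip-forward.conf"
}
```

Running `write_ip_forward_conf /etc/sysctl.d` followed by `sysctl --system` also applies the setting immediately.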

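*2.4.1. Installing and configuring kubelet*

*2.4.1.1. Upload the prepared Kubernetes binary package and unpack it into /usr/k8s (create the directory with mkdir -p /usr/k8s first if this node does not have it yet)*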

*cd /usr/k8s*

*rz* (select the prepared Kubernetes binary package to upload)

*tar -zxvf kubernetes-server-linux-amd64.tar.gz*

*2.4.1.2. Copy the required binaries to /usr/bin*

*cp -r /usr/k8s/kubernetes/server/bin/{kube-proxy,kubelet} /usr/bin/*

*2.4.1.3. Create the kubelet systemd unit file*

*vi /usr/lib/systemd/system/kubelet.service*

[Unit]

Description=Kubernetes Kubelet Server

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

After=docker.service

Requires=docker.service

[Service]

WorkingDirectory=/var/lib/kubelet/

EnvironmentFile=-/etc/kubernetes/config

EnvironmentFile=-/etc/kubernetes/kubelet

ExecStart=/usr/bin/kubelet \

$KUBE_LOGTOSTDERR \

$KUBE_LOG_LEVEL \

$KUBELET_ADDRESS \

$KUBELET_PORT \

$KUBELET_HOSTNAME \

$KUBELET_API_SERVER \

$KUBELET_POD_INFRA_CONTAINER \

$KUBELET_ARGS

Restart=on-failure

KillMode=process

[Install]

WantedBy=multi-user.target

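(WorkingDirectory is the directory where kubelet stores its data; create /var/lib/kubelet before starting the kubelet service.)

**2.4.1.4. Create the kubelet configuration file** (172.16.3.30 is the master node's IP, 172.16.3.37 is this node's IP, and 172.16.3.30:5000 is the private registry; replace them with your own)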

*vi /etc/kubernetes/kubelet*

KUBELET_ADDRESS="--address=0.0.0.0"

KUBELET_PORT="--port=10250"

KUBELET_HOSTNAME="--hostname-override=172.16.3.37"

KUBELET_API_SERVER="--api-servers=http://172.16.3.30:8080"

KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=172.16.3.30:5000/pod-infrastructure:latest"

KUBELET_ARGS=""
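The kubelet file needs three addresses: the master (API server), this node, and the private registry that serves the pod infrastructure image. A sketch that writes it from those three values and also creates /var/lib/kubelet, the `WorkingDirectory` of kubelet.service; the helper name and the `ROOT` prefix (empty on a real node) are hypothetical, and the flags are copied unchanged from above, so they only apply to the older Kubernetes releases that still accept `--api-servers`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write ROOT/etc/kubernetes/kubelet and create the
# kubelet working directory.
# Usage: write_kubelet_conf ROOT MASTER_IP NODE_IP REGISTRY_HOST_PORT
write_kubelet_conf() {
  local root="$1" master_ip="$2" node_ip="$3" registry="$4"
  mkdir -p "$root/etc/kubernetes" "$root/var/lib/kubelet" || return 1
  cat > "$root/etc/kubernetes/kubelet" <<EOF
KUBELET_ADDRESS="--address=0.0.0.0"
KUBELET_PORT="--port=10250"
KUBELET_HOSTNAME="--hostname-override=${node_ip}"
KUBELET_API_SERVER="--api-servers=http://${master_ip}:8080"
KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=${registry}/pod-infrastructure:latest"
KUBELET_ARGS=""
EOF
}
```

On the worker used here: `write_kubelet_conf "" 172.16.3.30 172.16.3.37 172.16.3.30:5000`, run as root.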

Also create the shared configuration file /etc/kubernetes/config, which kube-proxy reads as well:

*vi /etc/kubernetes/config*

KUBE_LOGTOSTDERR="--logtostderr=true"

# journal message level, 0 is debug

KUBE_LOG_LEVEL="--v=0"

# Should this cluster be allowed to run privileged docker containers

KUBE_ALLOW_PRIV="--allow-privileged=false"

# How the controller-manager, scheduler, and proxy find the apiserver

KUBE_MASTER="--master=http://172.16.3.30:8080"

KUBELET_API_SERVER="--api-servers=http://172.16.3.30:8080"

*2.4.1.5. Start kubelet and enable it at boot*

*systemctl daemon-reload*

*systemctl enable kubelet.service*

*systemctl start kubelet.service*

*2.4.1.6. Check its status*

*systemctl status kubelet.service*

*2.4.2. Installing and configuring kube-proxy*

*2.4.2.1. Create the kube-proxy systemd unit file*

*vi /usr/lib/systemd/system/kube-proxy.service*

[Unit]

Description=Kubernetes Kube-Proxy Server

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

After=network.target

[Service]

EnvironmentFile=-/etc/kubernetes/config

EnvironmentFile=-/etc/kubernetes/proxy

ExecStart=/usr/bin/kube-proxy \

$KUBE_LOGTOSTDERR \

$KUBE_LOG_LEVEL \

$KUBE_MASTER \

$KUBE_PROXY_ARGS

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

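**2.4.2.2. Create the kube-proxy configuration file** (the master node's IP, 172.16.3.30, reaches kube-proxy through KUBE_MASTER in /etc/kubernetes/config)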

*vi /etc/kubernetes/proxy*

KUBE_PROXY_ARGS=""

kube-proxy also reads /etc/kubernetes/config (the first EnvironmentFile line in its unit file). That is the same file already created in 2.4.1.4, so it does not need to be written again; kube-proxy takes the API server address from its KUBE_MASTER entry.

*2.4.2.3. Start kube-proxy and enable it at boot*

*systemctl daemon-reload*

*systemctl enable kube-proxy*

*systemctl start kube-proxy*

*2.4.2.4. Check the status*

*systemctl status kube-proxy*

*2.4.3. Check the node status (run on the master node)*

*kubectl get nodes*
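`kubectl get nodes` should list the worker under its `--hostname-override` value (172.16.3.37 here) with STATUS `Ready`. A sketch that checks this from the command output, assuming STATUS is the second column as in the default table; `node_is_ready` is a hypothetical name, and it accepts only the exact value `Ready`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl get nodes` output on stdin and
# succeed if the named node's STATUS column is exactly "Ready".
# Usage: kubectl get nodes | node_is_ready 172.16.3.37
node_is_ready() {
  awk -v node="$1" '
    NR > 1 && $1 == node { found = 1; ready = ($2 == "Ready") }
    END { if (found && ready) exit 0; exit 1 }
  '
}
```

It combines naturally with a retry loop while the kubelet registers, e.g. `kubectl get nodes | node_is_ready 172.16.3.37 && echo "worker Ready"`.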

*2.4.4. Deploying the Flannel network*

*2.4.4.1. Installation method: RPM package*

*2.4.4.2. Download the RPM package*

http://rpmfind.net/linux/rpm2html/search.php?query=flannel

*2.4.4.3. Upload the flannel RPM package to /usr/k8s and install it*

*cd /usr/k8s*

*rpm -ivh flannel-0.7.1-4.el7.x86_64.rpm*

**2.4.4.4. Configure the flannel network** (172.16.3.30 is the master node's IP; ens33 and 172.16.3.37 are this node's network interface and IP)

*vi /etc/sysconfig/flanneld*

# Flanneld configuration options

# etcd url location. Point this to the server where etcd runs

FLANNEL_ETCD_ENDPOINTS="http://172.16.3.30:2379"

# etcd config key. This is the configuration key that flannel queries

# For address range assignment

FLANNEL_ETCD_PREFIX="/atomic.io/network"

FLANNEL_ETCD="http://172.16.3.30:2379"

FLANNEL_ETCD_KEY="/atomic.io/network"

# Any additional options that you want to pass

FLANNEL_OPTIONS="-iface=ens33 -public-ip=172.16.3.37 -ip-masq=true"

**2.4.4.5. Create the flannel key in etcd** (run on the master node, before starting flanneld)

*etcdctl mk /atomic.io/network/config '{"Network":"172.19.0.0/16", "SubnetLen":24, "Backend":{"Type":"vxlan"}}'*
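The JSON stored under /atomic.io/network/config tells flannel which overlay network to carve up (172.19.0.0/16), the prefix length of each node's subnet (/24) and the backend (vxlan). `etcdctl mk` is a v2-API command, which is the default API of the etcdctl 3.3 used here. A sketch that builds the JSON from those three values with a basic sanity check; the helper name is hypothetical, and the check is an assumption of this sketch, not flannel's own validation.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the flannel network config stored under
# /atomic.io/network/config.
# Usage: flannel_config_json NETWORK_CIDR SUBNET_LEN BACKEND_TYPE
flannel_config_json() {
  local network="$1" subnet_len="$2" backend="$3" prefix
  prefix="${network#*/}"   # "172.19.0.0/16" -> "16"
  # Both numbers must be plain digits (this also rejects a CIDR without "/").
  case "$prefix" in ''|*[!0-9]*) echo "bad network: $network" >&2; return 1 ;; esac
  case "$subnet_len" in ''|*[!0-9]*) echo "bad SubnetLen: $subnet_len" >&2; return 1 ;; esac
  # Basic IPv4 sanity check: each node subnet must be smaller than the network.
  if [ "$subnet_len" -le "$prefix" ] || [ "$subnet_len" -gt 32 ]; then
    echo "SubnetLen /$subnet_len does not fit inside $network" >&2
    return 1
  fi
  printf '{"Network":"%s", "SubnetLen":%s, "Backend":{"Type":"%s"}}\n' \
    "$network" "$subnet_len" "$backend"
}
```

Usage on the master: `etcdctl mk /atomic.io/network/config "$(flannel_config_json 172.19.0.0/16 24 vxlan)"`.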

*2.4.4.6. Start flannel and enable it at boot*

*systemctl daemon-reload*

*systemctl enable flanneld.service*

*systemctl start flanneld.service*

*2.4.4.7. Check the status*

*systemctl status flanneld.service*
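Once flanneld is running, it records the subnet leased to this node in /run/flannel/subnet.env (FLANNEL_SUBNET, FLANNEL_MTU and FLANNEL_IPMASQ, among others). Docker only places containers on that subnet if dockerd starts with matching `--bip` and `--mtu` options, which is what `$DOCKER_NETWORK_OPTIONS` in the docker.service unit from 1.4 is for; flannel's packages ship a helper, mk-docker-opts.sh, that generates it. The function below is a simplified, hypothetical stand-in written only to show what those options amount to; it is not that script.

```shell
#!/usr/bin/env bash
# Hypothetical, simplified stand-in for flannel's mk-docker-opts.sh:
# turn a subnet.env file into a DOCKER_NETWORK_OPTIONS line.
# Usage: docker_opts_from_subnet_env /run/flannel/subnet.env
docker_opts_from_subnet_env() {
  (
    unset FLANNEL_SUBNET FLANNEL_MTU FLANNEL_IPMASQ
    . "$1" || exit 1
    if [ -z "$FLANNEL_SUBNET" ] || [ -z "$FLANNEL_MTU" ]; then
      echo "FLANNEL_SUBNET or FLANNEL_MTU missing in $1" >&2
      exit 1
    fi
    opts="--bip=${FLANNEL_SUBNET} --mtu=${FLANNEL_MTU}"
    # flanneld already masquerades (-ip-masq=true above), so Docker should not.
    if [ "$FLANNEL_IPMASQ" = "true" ]; then
      opts="$opts --ip-masq=false"
    fi
    printf 'DOCKER_NETWORK_OPTIONS="%s"\n' "$opts"
  )
}
```

Under these assumptions, one way to wire it up is `docker_opts_from_subnet_env /run/flannel/subnet.env > /etc/sysconfig/docker` (the EnvironmentFile that docker.service reads) followed by `systemctl restart docker`; check first whether the installed flannel package already does this.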

*2.5. Binary packages and reference material*

**File link:** https://pan.baidu.com/s/1wZtoEgpQd9kWShbxbOzjlg

*Extraction code:* f2n8
