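I originally just wanted to read the select source code, but it turned out I needed to understand wait queues first. Along the way I found some good blog posts.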

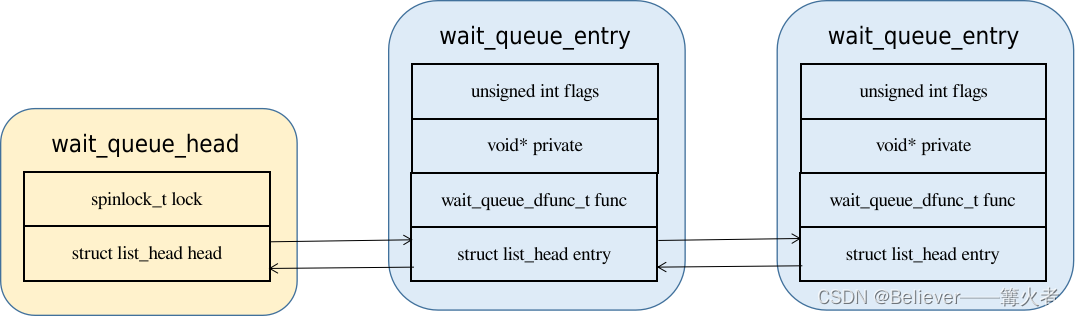

1. Wait queue data structures

struct list_head {

struct list_head *next, *prev;

};

typedef int (*wait_queue_func_t)(struct wait_queue_entry *wq_entry, unsigned mode, int flags, void *key);

struct wait_queue_entry {

unsigned int flags;

void *private; // points to the waiting task_struct

wait_queue_func_t func; // wake-up callback

struct list_head entry; // list node linking this entry into the queue

};

struct wait_queue_head {

spinlock_t lock;

struct list_head head;

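};

A wait queue is a circular doubly linked list: one queue head (wait_queue_head) plus zero or more queue entries (wait_queue_entry) chained through their entry members.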

2. Initialization

Initialization macros for the three structures above:

// wait_queue_entry

#define DECLARE_WAITQUEUE(name, tsk) \

struct wait_queue_entry name = __WAITQUEUE_INITIALIZER(name, tsk)

#define __WAITQUEUE_INITIALIZER(name, tsk) { \

.private = tsk, \

.func = default_wake_function, \

.entry = { NULL, NULL } }

// wait_queue_head

#define DECLARE_WAIT_QUEUE_HEAD(name) \

struct wait_queue_head name = __WAIT_QUEUE_HEAD_INITIALIZER(name)

#define __WAIT_QUEUE_HEAD_INITIALIZER(name) { \

.lock = __SPIN_LOCK_UNLOCKED(name.lock), \

.head = LIST_HEAD_INIT(name.head) }

// list_head

#define LIST_HEAD_INIT(name) { &(name), &(name) }

#define LIST_HEAD(name) \

struct list_head name = LIST_HEAD_INIT(name)
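To see what these initializers actually produce, here is a minimal user-space sketch. The struct and the two macros are copied from above; demo_queue, list_is_empty, and demo_queue_starts_empty are hypothetical names I made up for illustration (the kernel's own helper is list_empty).

```c
#include <assert.h>
#include <stdbool.h>

struct list_head {
    struct list_head *next, *prev;
};

#define LIST_HEAD_INIT(name) { &(name), &(name) }
#define LIST_HEAD(name) struct list_head name = LIST_HEAD_INIT(name)

/* An empty circular list is a head whose next pointer refers to itself. */
static bool list_is_empty(const struct list_head *head)
{
    return head->next == head;
}

/* File-scope list, initialized at compile time the way the DECLARE_* macros do. */
static LIST_HEAD(demo_queue);

/* True if the freshly declared head points to itself in both directions. */
static bool demo_queue_starts_empty(void)
{
    return list_is_empty(&demo_queue) && demo_queue.prev == &demo_queue;
}
```

The point is that an "empty" queue is not a pair of NULL pointers but a head that points to itself, which is what lets the insert and delete code later avoid special cases.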

3. Adding to the queue: add_wait_queue

void add_wait_queue(struct wait_queue_head *wq_head, struct wait_queue_entry *wq_entry)

{

unsigned long flags;

wq_entry->flags &= ~WQ_FLAG_EXCLUSIVE;

spin_lock_irqsave(&wq_head->lock, flags);

__add_wait_queue(wq_head, wq_entry);

spin_unlock_irqrestore(&wq_head->lock, flags);

}

EXPORT_SYMBOL(add_wait_queue);

static inline void __add_wait_queue(struct wait_queue_head *wq_head, struct wait_queue_entry *wq_entry)

{

struct list_head *head = &wq_head->head;

struct wait_queue_entry *wq;

list_for_each_entry(wq, &wq_head->head, entry) {

if (!(wq->flags & WQ_FLAG_PRIORITY))

break;

head = &wq->entry;

}

list_add(&wq_entry->entry, head);

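}

The overall flow: clear the WQ_FLAG_EXCLUSIVE bit so the entry is treated as a non-exclusive waiter, take wq_head->lock with interrupts disabled, insert the entry into the queue, then release the lock.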

#define list_for_each_entry(pos, head, member) \

for (pos = list_first_entry(head, typeof(*pos), member); \

!list_entry_is_head(pos, head, member); \

pos = list_next_entry(pos, member))

#define list_first_entry(ptr, type, member) \

list_entry((ptr)->next, type, member)

#define list_entry(ptr, type, member) \

container_of(ptr, type, member)

#define list_entry_is_head(pos, head, member) \

list_is_head(&pos->member, (head))

static inline int list_is_head(const struct list_head *list, const struct list_head *head)

{

return list == head;

}

#define list_next_entry(pos, member) \

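list_entry((pos)->member.next, typeof(*(pos)), member)

Honestly, this pile of nested macros is fairly complicated, and I haven't found a shortcut: you just have to read through them one at a time. In short, list_for_each_entry starts at head->next, uses container_of to turn each list_head back into the structure that contains it, and stops once the cursor is back at the head.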
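The macro chain is easier to follow when written out by hand. Below is a small user-space sketch of the same walk. struct waiter is a hypothetical stand-in for wait_queue_entry, container_of is a simplified version without the kernel's type checks, and I use explicit types instead of typeof.

```c
#include <assert.h>
#include <stddef.h>

struct list_head {
    struct list_head *next, *prev;
};

/* Simplified container_of: step back from a member to its enclosing struct. */
#define container_of(ptr, type, member) \
    ((type *)((char *)(ptr) - offsetof(type, member)))

struct waiter {                 /* hypothetical stand-in for wait_queue_entry */
    int id;
    struct list_head entry;
};

/* Walk the circular list and sum the ids; stop when we are back at head. */
static int sum_waiter_ids(struct list_head *head)
{
    int sum = 0;

    for (struct list_head *pos = head->next; pos != head; pos = pos->next)
        sum += container_of(pos, struct waiter, entry)->id;
    return sum;
}

/* Build head <-> a <-> b <-> head by hand and iterate over it. */
static int demo_iterate(void)
{
    struct list_head head;
    struct waiter a = { .id = 1 }, b = { .id = 2 };

    head.next = &a.entry;    a.entry.prev = &head;
    a.entry.next = &b.entry; b.entry.prev = &a.entry;
    b.entry.next = &head;    head.prev = &b.entry;

    return sum_waiter_ids(&head);
}
```

The loop condition pos != head plays the role of list_entry_is_head, and the container_of call is what list_entry expands to.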

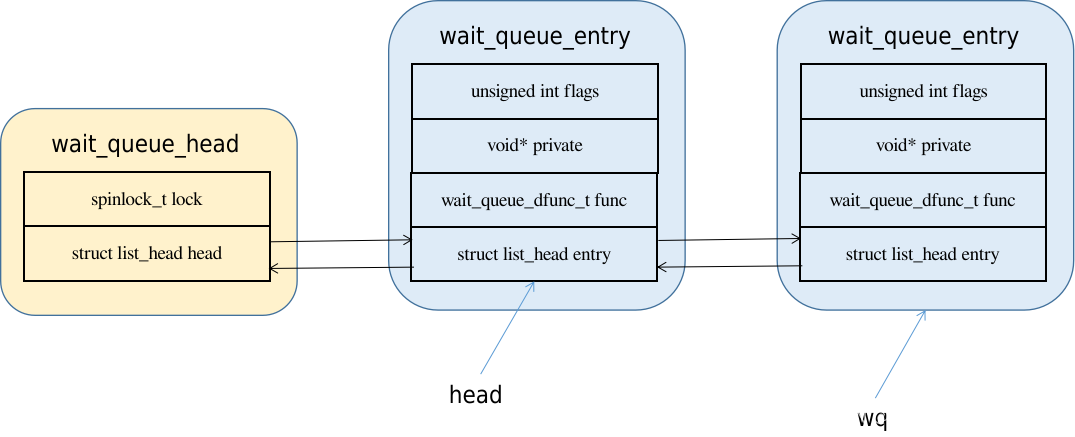

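This is roughly the overall layout of a wait queue. The list is circular: the entry member of the last wait_queue_entry has no further element after it, so its next pointer refers back to the queue head (and in an empty queue the head points to itself). That is why list_entry_is_head ends the loop by checking whether the current node's address equals the head's address. Note that add_wait_queue does not walk to the tail. It skips the leading entries that have WQ_FLAG_PRIORITY set and stops at the first entry without it. Because the break comes before the head = &wq->entry; statement, head is left pointing at the last priority entry (or at the queue head if there is none), and list_add then inserts the new entry right after it, that is, in front of the first non-priority entry.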
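To check where entries really end up, here is a user-space model of the insertion logic. struct entry_model, to_entry, add_entry, and demo_order are hypothetical names for this sketch, and the numeric value of WQ_FLAG_PRIORITY is assumed for illustration only; the loop itself follows __add_wait_queue as shown above.

```c
#include <assert.h>
#include <stddef.h>

#define WQ_FLAG_PRIORITY 0x20   /* flag value assumed for this sketch */

struct list_head { struct list_head *next, *prev; };

struct entry_model {            /* hypothetical stand-in for wait_queue_entry */
    unsigned int flags;
    char name;
    struct list_head entry;
};

#define to_entry(ptr) \
    ((struct entry_model *)((char *)(ptr) - offsetof(struct entry_model, entry)))

/* Same pointer updates as list_add(): insert item right after head. */
static void list_add(struct list_head *item, struct list_head *head)
{
    item->next = head->next;
    item->prev = head;
    head->next->prev = item;
    head->next = item;
}

/* Mirrors __add_wait_queue(): skip leading priority entries, insert after them. */
static void add_entry(struct list_head *queue, struct entry_model *e)
{
    struct list_head *head = queue;

    for (struct list_head *pos = queue->next; pos != queue; pos = pos->next) {
        if (!(to_entry(pos)->flags & WQ_FLAG_PRIORITY))
            break;
        head = pos;
    }
    list_add(&e->entry, head);
}

/* Add P, a, b, Q in that order (P and Q are priority) and record the queue order. */
static void demo_order(char out[5])
{
    struct list_head queue = { &queue, &queue };
    struct entry_model p = { WQ_FLAG_PRIORITY, 'P' }, a = { 0, 'a' },
                       b = { 0, 'b' }, q = { WQ_FLAG_PRIORITY, 'Q' };
    size_t i = 0;

    add_entry(&queue, &p);
    add_entry(&queue, &a);
    add_entry(&queue, &b);
    add_entry(&queue, &q);

    for (struct list_head *pos = queue.next; pos != &queue; pos = pos->next)
        out[i++] = to_entry(pos)->name;
    out[i] = '\0';
}
```

In this model the final order is P, Q, b, a: priority entries stay at the front in arrival order, while ordinary entries land right behind them with the newest first.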

static inline void list_add(struct list_head *new, struct list_head *head)

{

__list_add(new, head, head->next);

}

static inline void __list_add(struct list_head *new,

struct list_head *prev,

struct list_head *next)

{

if (!__list_add_valid(new, prev, next))

return;

next->prev = new;

new->next = next;

new->prev = prev;

WRITE_ONCE(prev->next, new);

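}

For the WRITE_ONCE macro, see the article "READ_ONCE and WRITE_ONCE macros in the Linux kernel". In short, it makes the compiler emit the store to prev->next as one plain access that it may not split, merge, or optimize away, so a concurrent reader using READ_ONCE sees either the old pointer or the new one.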

4. Removing from the queue: remove_wait_queue

void remove_wait_queue(struct wait_queue_head *wq_head, struct wait_queue_entry *wq_entry)

{

unsigned long flags;

spin_lock_irqsave(&wq_head->lock, flags);

__remove_wait_queue(wq_head, wq_entry);

spin_unlock_irqrestore(&wq_head->lock, flags);

}

EXPORT_SYMBOL(remove_wait_queue);

static inline void

__remove_wait_queue(struct wait_queue_head *wq_head, struct wait_queue_entry *wq_entry)

{

list_del(&wq_entry->entry);

}

static inline void list_del(struct list_head *entry)

{

__list_del_entry(entry);

entry->next = LIST_POISON1;

entry->prev = LIST_POISON2;

}

static inline void __list_del_entry(struct list_head *entry)

{

__list_del(entry->prev, entry->next);

}

static inline void __list_del(struct list_head *prev, struct list_head *next)

{

next->prev = prev;

prev->next = next;

}

#define LIST_POISON1 ((void *) 0x100)

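#define LIST_POISON2 ((void *) 0x122)

Deletion is simple: unlink the entry from its neighbors, then overwrite its own pointers. I wasn't sure at first why 0x100 and 0x122 were chosen. The exact values seem to matter less than their properties: they are non-NULL addresses that normally fault when dereferenced, so code that keeps using a deleted entry crashes at a recognizable address instead of silently corrupting the list.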
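A quick user-space sketch of the delete path makes the effect of the poison values visible. The list functions follow the kernel code above without the validity checks; demo_delete is a hypothetical helper, and the poison macros are cast to struct list_head * here so they can be compared directly.

```c
#include <assert.h>
#include <stdbool.h>

struct list_head { struct list_head *next, *prev; };

#define LIST_POISON1 ((struct list_head *)0x100)
#define LIST_POISON2 ((struct list_head *)0x122)

/* Insert item right after head. */
static void list_add(struct list_head *item, struct list_head *head)
{
    item->next = head->next;
    item->prev = head;
    head->next->prev = item;
    head->next = item;
}

/* Unlink entry from its neighbors, then poison its own pointers. */
static void list_del(struct list_head *entry)
{
    entry->prev->next = entry->next;
    entry->next->prev = entry->prev;
    entry->next = LIST_POISON1;
    entry->prev = LIST_POISON2;
}

/* Build head -> b -> a, delete b, and verify both the list and the poison. */
static bool demo_delete(void)
{
    struct list_head head = { &head, &head };
    struct list_head a, b;

    list_add(&a, &head);
    list_add(&b, &head);
    list_del(&b);

    return head.next == &a && a.prev == &head &&
           a.next == &head && head.prev == &a &&
           b.next == LIST_POISON1 && b.prev == LIST_POISON2;
}
```

After list_del the remaining list is intact, and the removed node no longer points into it, so a stale b.next dereference would hit address 0x100 rather than a live node.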

5. Sleeping: wait_event

#define wait_event(wq_head, condition) \

do { \

might_sleep(); \

if (condition) \

break; \

__wait_event(wq_head, condition); \

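} while (0)

might_sleep() marks the current function as one that may sleep. If it is called from atomic context (for example while holding a spinlock or inside an IRQ handler), it prints a stack backtrace. It is mainly a debugging aid: add it wherever you want to verify that code which may sleep is never reached from a context that must not sleep. As the macro shows, wait_event returns immediately if condition already holds and only goes to sleep when it does not.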

#define __wait_event(wq_head, condition) \

(void)___wait_event(wq_head, condition, TASK_UNINTERRUPTIBLE, 0, 0, \

schedule())

#define ___wait_event(wq_head, condition, state, exclusive, ret, cmd) \

({ \

__label__ __out; \

struct wait_queue_entry __wq_entry; \

long __ret = ret; /* explicit shadow */ \

\

init_wait_entry(&__wq_entry, exclusive ? WQ_FLAG_EXCLUSIVE : 0); \

for (;;) { \

long __int = prepare_to_wait_event(&wq_head, &__wq_entry, state);\

\

if (condition) \

break; \

\

if (___wait_is_interruptible(state) && __int) { \

__ret = __int; \

goto __out; \

} \

\

cmd; \

} \

finish_wait(&wq_head, &__wq_entry); \

__out: __ret; \

})

Expanding the macro with the arguments that wait_event passes gives:

({

__label__ __out; // GNU C local label, used as the goto target

struct wait_queue_entry __wq_entry;

long __ret = 0; /* explicit shadow */

init_wait_entry(&__wq_entry, 0 ? WQ_FLAG_EXCLUSIVE : 0);

for (;;) {

long __int = prepare_to_wait_event(&wq_head, &__wq_entry, TASK_UNINTERRUPTIBLE);

if (condition)

break;

if (___wait_is_interruptible(TASK_UNINTERRUPTIBLE) && __int) {

__ret = __int;

goto __out;

}

schedule();

}

finish_wait(&wq_head, &__wq_entry);

__out: __ret;

})

void init_wait_entry(struct wait_queue_entry *wq_entry, int flags)

{

wq_entry->flags = flags;

wq_entry->private = current;

wq_entry->func = autoremove_wake_function;

INIT_LIST_HEAD(&wq_entry->entry);

}

EXPORT_SYMBOL(init_wait_entry);

int autoremove_wake_function(struct wait_queue_entry *wq_entry, unsigned mode, int sync, void *key)

{

int ret = default_wake_function(wq_entry, mode, sync, key);

if (ret)

list_del_init_careful(&wq_entry->entry);

return ret;

}

EXPORT_SYMBOL(autoremove_wake_function);

long prepare_to_wait_event(struct wait_queue_head *wq_head, struct wait_queue_entry *wq_entry, int state)

{

unsigned long flags;

long ret = 0;

spin_lock_irqsave(&wq_head->lock, flags);

if (signal_pending_state(state, current)) {

/*

* Exclusive waiter must not fail if it was selected by wakeup,

* it should "consume" the condition we were waiting for.

*

* The caller will recheck the condition and return success if

* we were already woken up, we can not miss the event because

* wakeup locks/unlocks the same wq_head->lock.

*

* But we need to ensure that set-condition + wakeup after that

* can't see us, it should wake up another exclusive waiter if

* we fail.

*/

list_del_init(&wq_entry->entry);

ret = -ERESTARTSYS;

} else {

if (list_empty(&wq_entry->entry)) {

if (wq_entry->flags & WQ_FLAG_EXCLUSIVE)

__add_wait_queue_entry_tail(wq_head, wq_entry);

else

__add_wait_queue(wq_head, wq_entry);

}

set_current_state(state);

}

spin_unlock_irqrestore(&wq_head->lock, flags);

return ret;

}

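EXPORT_SYMBOL(prepare_to_wait_event);

prepare_to_wait_event works as follows. It first takes wq_head->lock, the spinlock embedded in the queue head that protects the list (the wake-up path takes the same lock). It then checks whether a signal is pending for the given state; for TASK_UNINTERRUPTIBLE this check never fires. If a signal is pending, it removes the entry from the queue and returns -ERESTARTSYS so the caller can go handle the signal. Otherwise, if the entry is not yet on the queue, it is added in one of two ways: exclusive entries go to the tail via __add_wait_queue_entry_tail, and non-exclusive entries go toward the front via __add_wait_queue. Finally the task state is set with set_current_state.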

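Quoting the blog:

wait_event(wq, condition): sleeps until condition becomes true; while waiting, the process is in the TASK_UNINTERRUPTIBLE state. The matching wake-up call is wake_up(). Each time a waiter on wq is woken, it performs the following two checks: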

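- Check whether condition is true; if it is, leave the loop.

- Check whether TIF_SIGPENDING is set in the task's thread_info->flags; if it is, a signal is pending, so leave the loop. (Judging by the macro above, this check only takes effect in the interruptible variants; plain wait_event sleeps in TASK_UNINTERRUPTIBLE and ignores signals.)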

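wait_event_xxx is a family of sleep macros. The variants differ in whether the sleep is interruptible (TASK_INTERRUPTIBLE instead of TASK_UNINTERRUPTIBLE) and whether there is a timeout, and the name suffix says which: interruptible, timeout, and so on. wait_event_interruptible() is one example; I won't list them all here. sleep_on() also puts the caller to sleep but takes no condition, which makes it prone to races, so it was removed in kernel 3.15 in favor of wait_event.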
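The core idea of wait_event, recheck the condition after every wake-up and sleep again if it still does not hold, can be shown with a single-threaded toy model. Everything here is hypothetical scaffolding: struct wait_model, fake_schedule, and wait_event_model are my own names, and fake_schedule merely pretends that the task slept and was woken once.

```c
#include <assert.h>
#include <stdbool.h>

struct wait_model {
    int wakeups_until_ready;  /* wake-ups that arrive before the condition holds */
    int sleeps;               /* how many times the waiter went to sleep */
};

static bool condition(const struct wait_model *m)
{
    return m->wakeups_until_ready == 0;
}

/* Stand-in for schedule(): the task sleeps and is woken once by someone else. */
static void fake_schedule(struct wait_model *m)
{
    m->sleeps++;
    m->wakeups_until_ready--;
}

/* Same control flow as wait_event() + ___wait_event(), minus the real queueing. */
static int wait_event_model(struct wait_model *m)
{
    if (condition(m))         /* fast path: never touch the queue */
        return 0;

    for (;;) {
        /* prepare_to_wait_event() would queue the entry and set the state here */
        if (condition(m))
            break;
        fake_schedule(m);
    }
    /* finish_wait() would set TASK_RUNNING and dequeue the entry here */
    return m->sleeps;
}
```

A wake-up is only a hint to look again. With wakeups_until_ready set to 3 the model sleeps three times before it returns, which is exactly why the real macro loops instead of calling schedule() once.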

6. Waking up: wake_up

#define wake_up(x) __wake_up(x, TASK_NORMAL, 1, NULL)

int __wake_up(struct wait_queue_head *wq_head, unsigned int mode,

int nr_exclusive, void *key)

{

return __wake_up_common_lock(wq_head, mode, nr_exclusive, 0, key);

}

EXPORT_SYMBOL(__wake_up);

static int __wake_up_common_lock(struct wait_queue_head *wq_head, unsigned int mode,

int nr_exclusive, int wake_flags, void *key)

{

unsigned long flags;

int remaining;

spin_lock_irqsave(&wq_head->lock, flags);

remaining = __wake_up_common(wq_head, mode, nr_exclusive, wake_flags,

key);

spin_unlock_irqrestore(&wq_head->lock, flags);

return nr_exclusive - remaining;

}

static int __wake_up_common(struct wait_queue_head *wq_head, unsigned int mode,

int nr_exclusive, int wake_flags, void *key)

{

wait_queue_entry_t *curr, *next;

lockdep_assert_held(&wq_head->lock);

curr = list_first_entry(&wq_head->head, wait_queue_entry_t, entry);

if (&curr->entry == &wq_head->head)

return nr_exclusive;

list_for_each_entry_safe_from(curr, next, &wq_head->head, entry) {

unsigned flags = curr->flags;

int ret;

ret = curr->func(curr, mode, wake_flags, key);

if (ret < 0)

break;

if (ret && (flags & WQ_FLAG_EXCLUSIVE) && !--nr_exclusive)

break;

}

return nr_exclusive;

}

#define list_for_each_entry_safe_from(pos, n, head, member) \

for (n = list_next_entry(pos, member); \

!list_entry_is_head(pos, head, member); \

pos = n, n = list_next_entry(n, member))

#define list_next_entry(pos, member) \

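list_entry((pos)->member.next, typeof(*(pos)), member)

__wake_up_common walks the wait queue starting from the head and calls each entry's callback function.

In other words, wake_up(wq) iterates over the wait_queue_entry items (wait_queue_t in older kernels) on wq and calls each one's wake function in turn. Every non-exclusive waiter it reaches is woken, and the walk stops once nr_exclusive exclusive waiters have been woken successfully. Which wake function runs depends on how the entry was created: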

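- An entry created with the DECLARE_WAITQUEUE(name, tsk) macro and then added with add_wait_queue(wq, wait) uses default_wake_function.

- An entry added through wait_event(wq, condition) goes through init_wait_entry() and prepare_to_wait_event() and uses autoremove_wake_function. As shown earlier, that function still relies on default_wake_function for the actual wake-up and additionally removes the entry from the queue on success.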
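The stopping rule in __wake_up_common is compact enough to misread, so here is a user-space model of just that loop. It uses an array instead of a linked list and assumes every wake function returns 1 (success); struct waiter_model, wake_up_common_model, and demo_wake_up are hypothetical names for this sketch.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

#define WQ_FLAG_EXCLUSIVE 0x01

struct waiter_model {           /* hypothetical stand-in for wait_queue_entry */
    unsigned int flags;
    bool woken;
};

/* Mirrors the loop in __wake_up_common(); returns the unused exclusive budget. */
static int wake_up_common_model(struct waiter_model *q, size_t n, int nr_exclusive)
{
    for (size_t i = 0; i < n; i++) {
        unsigned int flags = q[i].flags;

        q[i].woken = true;      /* stands in for curr->func() returning 1 */
        if ((flags & WQ_FLAG_EXCLUSIVE) && !--nr_exclusive)
            break;
    }
    return nr_exclusive;
}

/* Two ordinary waiters at the front, two exclusive ones at the tail. */
static int demo_wake_up(int nr_exclusive)
{
    struct waiter_model q[] = {
        { 0, false }, { 0, false },
        { WQ_FLAG_EXCLUSIVE, false }, { WQ_FLAG_EXCLUSIVE, false },
    };
    int woken = 0;

    wake_up_common_model(q, 4, nr_exclusive);
    for (size_t i = 0; i < 4; i++)
        woken += q[i].woken;
    return woken;
}
```

With nr_exclusive = 1, as wake_up() passes, both ordinary waiters and exactly one exclusive waiter are woken. Passing 0 never hits the break because the counter goes negative, so every waiter is woken.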

wake_up_xxx is the matching family of wake-up macros, used in pairs with wait_event: wait_event() with wake_up(), wait_event_interruptible() with wake_up_interruptible(), and so on.
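Why the pairing matters comes down to the mode argument: the wake-up only succeeds if the sleeper's state is covered by the mask the waker passes. The sketch below models that check in user space. struct task_model, state_matches, and try_wake_model are hypothetical names, and the model ignores saved_state entirely; the state values match the kernel's definitions as far as I know.

```c
#include <assert.h>
#include <stdbool.h>

#define TASK_RUNNING          0x0
#define TASK_INTERRUPTIBLE    0x1
#define TASK_UNINTERRUPTIBLE  0x2
#define TASK_NORMAL           (TASK_INTERRUPTIBLE | TASK_UNINTERRUPTIBLE)

struct task_model {             /* hypothetical stand-in for task_struct */
    unsigned int state;
};

/* Simplified state check: does the sleeper's state fall inside the wake mask? */
static bool state_matches(const struct task_model *p, unsigned int wake_mask)
{
    return (p->state & wake_mask) != 0;
}

/* Simplified wake-up: mark the task runnable only if the mask covers its state. */
static bool try_wake_model(struct task_model *p, unsigned int wake_mask)
{
    if (!state_matches(p, wake_mask))
        return false;
    p->state = TASK_RUNNING;
    return true;
}
```

In this model a task sleeping in TASK_UNINTERRUPTIBLE (what wait_event uses) is not woken by a TASK_INTERRUPTIBLE mask, but is woken by TASK_NORMAL, the mask that wake_up passes.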

7. The wake function: default_wake_function

int default_wake_function(wait_queue_entry_t *curr, unsigned mode, int wake_flags,

void *key)

{

WARN_ON_ONCE(IS_ENABLED(CONFIG_SCHED_DEBUG) && wake_flags & ~(WF_SYNC|WF_CURRENT_CPU));

return try_to_wake_up(curr->private, mode, wake_flags);

}

EXPORT_SYMBOL(default_wake_function);

WARN_ON_ONCE is a bit involved, so I'll skip it for now. The real work is done by try_to_wake_up:

int try_to_wake_up(struct task_struct *p, unsigned int state, int wake_flags)

{

guard(preempt)();

int cpu, success = 0;

if (p == current) {

/*

* We're waking current, this means 'p->on_rq' and 'task_cpu(p)

* == smp_processor_id()'. Together this means we can special

* case the whole 'p->on_rq && ttwu_runnable()' case below

* without taking any locks.

*

* In particular:

* - we rely on Program-Order guarantees for all the ordering,

* - we're serialized against set_special_state() by virtue of

* it disabling IRQs (this allows not taking ->pi_lock).

*/

if (!ttwu_state_match(p, state, &success))

goto out;

trace_sched_waking(p);

ttwu_do_wakeup(p);

goto out;

}

/*

* If we are going to wake up a thread waiting for CONDITION we

* need to ensure that CONDITION=1 done by the caller can not be

* reordered with p->state check below. This pairs with smp_store_mb()

* in set_current_state() that the waiting thread does.

*/

scoped_guard (raw_spinlock_irqsave, &p->pi_lock) {

smp_mb__after_spinlock();

if (!ttwu_state_match(p, state, &success))

break;

trace_sched_waking(p);

/*

* Ensure we load p->on_rq _after_ p->state, otherwise it would

* be possible to, falsely, observe p->on_rq == 0 and get stuck

* in smp_cond_load_acquire() below.

*

* sched_ttwu_pending() try_to_wake_up()

* STORE p->on_rq = 1 LOAD p->state

* UNLOCK rq->lock

*

* __schedule() (switch to task 'p')

* LOCK rq->lock smp_rmb();

* smp_mb__after_spinlock();

* UNLOCK rq->lock

*

* [task p]

* STORE p->state = UNINTERRUPTIBLE LOAD p->on_rq

*

* Pairs with the LOCK+smp_mb__after_spinlock() on rq->lock in

* __schedule(). See the comment for smp_mb__after_spinlock().

*

* A similar smp_rmb() lives in __task_needs_rq_lock().

*/

smp_rmb();

if (READ_ONCE(p->on_rq) && ttwu_runnable(p, wake_flags))

break;

#ifdef CONFIG_SMP

/*

* Ensure we load p->on_cpu _after_ p->on_rq, otherwise it would be

* possible to, falsely, observe p->on_cpu == 0.

*

* One must be running (->on_cpu == 1) in order to remove oneself

* from the runqueue.

*

* __schedule() (switch to task 'p') try_to_wake_up()

* STORE p->on_cpu = 1 LOAD p->on_rq

* UNLOCK rq->lock

*

* __schedule() (put 'p' to sleep)

* LOCK rq->lock smp_rmb();

* smp_mb__after_spinlock();

* STORE p->on_rq = 0 LOAD p->on_cpu

*

* Pairs with the LOCK+smp_mb__after_spinlock() on rq->lock in

* __schedule(). See the comment for smp_mb__after_spinlock().

*

* Form a control-dep-acquire with p->on_rq == 0 above, to ensure

* schedule()'s deactivate_task() has 'happened' and p will no longer

* care about it's own p->state. See the comment in __schedule().

*/

smp_acquire__after_ctrl_dep();

/*

* We're doing the wakeup (@success == 1), they did a dequeue (p->on_rq

* == 0), which means we need to do an enqueue, change p->state to

* TASK_WAKING such that we can unlock p->pi_lock before doing the

* enqueue, such as ttwu_queue_wakelist().

*/

WRITE_ONCE(p->__state, TASK_WAKING);

/*

* If the owning (remote) CPU is still in the middle of schedule() with

* this task as prev, considering queueing p on the remote CPUs wake_list

* which potentially sends an IPI instead of spinning on p->on_cpu to

* let the waker make forward progress. This is safe because IRQs are

* disabled and the IPI will deliver after on_cpu is cleared.

*

* Ensure we load task_cpu(p) after p->on_cpu:

*

* set_task_cpu(p, cpu);

* STORE p->cpu = @cpu

* __schedule() (switch to task 'p')

* LOCK rq->lock

* smp_mb__after_spin_lock() smp_cond_load_acquire(&p->on_cpu)

* STORE p->on_cpu = 1 LOAD p->cpu

*

* to ensure we observe the correct CPU on which the task is currently

* scheduling.

*/

if (smp_load_acquire(&p->on_cpu) &&

ttwu_queue_wakelist(p, task_cpu(p), wake_flags))

break;

/*

* If the owning (remote) CPU is still in the middle of schedule() with

* this task as prev, wait until it's done referencing the task.

*

* Pairs with the smp_store_release() in finish_task().

*

* This ensures that tasks getting woken will be fully ordered against

* their previous state and preserve Program Order.

*/

smp_cond_load_acquire(&p->on_cpu, !VAL);

cpu = select_task_rq(p, p->wake_cpu, wake_flags | WF_TTWU);

if (task_cpu(p) != cpu) {

if (p->in_iowait) {

delayacct_blkio_end(p);

atomic_dec(&task_rq(p)->nr_iowait);

}

wake_flags |= WF_MIGRATED;

psi_ttwu_dequeue(p);

set_task_cpu(p, cpu);

}

#else

cpu = task_cpu(p);

#endif /* CONFIG_SMP */

ttwu_queue(p, cpu, wake_flags);

}

out:

if (success)

ttwu_stat(p, task_cpu(p), wake_flags);

return success;

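}

This is one of the kernel's core functions, and it is complicated. Following that blog, it can be simplified roughly as below (mainly because I can't follow every line above, so I only aim for the outline the blog gives).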

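The first branch handles p == current, that is, a task waking itself. In that case p->on_rq holds and task_cpu(p) (the CPU that task p is on) equals smp_processor_id() (the ID of the current CPU). I don't fully see the benefit, but according to the comment this case needs no locks. Either way, the first thing called is ttwu_state_match: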

static __always_inline

bool ttwu_state_match(struct task_struct *p, unsigned int state, int *success)

{

int match;

if (IS_ENABLED(CONFIG_DEBUG_PREEMPT)) {

WARN_ON_ONCE((state & TASK_RTLOCK_WAIT) &&

state != TASK_RTLOCK_WAIT);

}

// Check whether the task's current (or saved) state matches the wake-up mask

*success = !!(match = __task_state_match(p, state));

/*

* Saved state preserves the task state across blocking on

* an RT lock or TASK_FREEZABLE tasks. If the state matches,

* set p::saved_state to TASK_RUNNING, but do not wake the task

* because it waits for a lock wakeup or __thaw_task(). Also

* indicate success because from the regular waker's point of

* view this has succeeded.

*

* After acquiring the lock the task will restore p::__state

* from p::saved_state which ensures that the regular

* wakeup is not lost. The restore will also set

* p::saved_state to TASK_RUNNING so any further tests will

* not result in false positives vs. @success

*/

if (match < 0)

p->saved_state = TASK_RUNNING;

return match > 0;

}

static __always_inline

int __task_state_match(struct task_struct *p, unsigned int state)

{

if (READ_ONCE(p->__state) & state)

return 1;

if (READ_ONCE(p->saved_state) & state)

return -1;

return 0;

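}

Honestly, I'm starting to get lost here. Going by that blog, ttwu_queue is what makes the task runnable again: it either hands the task to the target CPU's wake list (ttwu_queue_wakelist) or locks that CPU's runqueue and enqueues the task through ttwu_do_activate.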

static void ttwu_queue(struct task_struct *p, int cpu, int wake_flags)

{

struct rq *rq = cpu_rq(cpu);

struct rq_flags rf;

if (ttwu_queue_wakelist(p, cpu, wake_flags))

return;

rq_lock(rq, &rf);

update_rq_clock(rq);

ttwu_do_activate(rq, p, wake_flags, &rf);

rq_unlock(rq, &rf);

}

static void

ttwu_do_activate(struct rq *rq, struct task_struct *p, int wake_flags,

struct rq_flags *rf)

{

int en_flags = ENQUEUE_WAKEUP | ENQUEUE_NOCLOCK;

lockdep_assert_rq_held(rq);

if (p->sched_contributes_to_load)

rq->nr_uninterruptible--;

#ifdef CONFIG_SMP

if (wake_flags & WF_MIGRATED)

en_flags |= ENQUEUE_MIGRATED;

else

#endif

if (p->in_iowait) {

delayacct_blkio_end(p);

atomic_dec(&task_rq(p)->nr_iowait);

}

activate_task(rq, p, en_flags);

wakeup_preempt(rq, p, wake_flags);

ttwu_do_wakeup(p);

#ifdef CONFIG_SMP

if (p->sched_class->task_woken) {

/*

* Our task @p is fully woken up and running; so it's safe to

* drop the rq->lock, hereafter rq is only used for statistics.

*/

rq_unpin_lock(rq, rf);

p->sched_class->task_woken(rq, p);

rq_repin_lock(rq, rf);

}

if (rq->idle_stamp) {

u64 delta = rq_clock(rq) - rq->idle_stamp;

u64 max = 2*rq->max_idle_balance_cost;

update_avg(&rq->avg_idle, delta);

if (rq->avg_idle > max)

rq->avg_idle = max;

rq->idle_stamp = 0;

}

#endif

p->dl_server = NULL;

}

void activate_task(struct rq *rq, struct task_struct *p, int flags)

{

if (task_on_rq_migrating(p))

flags |= ENQUEUE_MIGRATED;

if (flags & ENQUEUE_MIGRATED)

sched_mm_cid_migrate_to(rq, p);

enqueue_task(rq, p, flags);

WRITE_ONCE(p->on_rq, TASK_ON_RQ_QUEUED);

ASSERT_EXCLUSIVE_WRITER(p->on_rq);

}

static inline void ttwu_do_wakeup(struct task_struct *p)

{

WRITE_ONCE(p->__state, TASK_RUNNING);

trace_sched_wakeup(p);

}

I'd suggest reading that blog directly for this part. I can't fully follow the code, and the blog itself is fairly brief.

8. Summary

This part is taken from that blog.

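DECLARE_WAIT_QUEUE_HEAD(name) initializes a wait_queue_head_t, and DECLARE_WAITQUEUE initializes a wait_queue_t (struct wait_queue_entry in the code above). A queue head (wait_queue_head_t) and its queue entries (wait_queue_t) together form a doubly linked list. add_wait_queue and remove_wait_queue add entries to and remove entries from that list.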

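Sleep and wake-up flow:

- Process A calls wait_event(wq, condition), which adds a wait_queue_t entry to the queue head. The entry's private member records the current process and its func member records the wake-up callback. Process A then calls schedule() and goes to sleep.

- Process B calls wake_up(wq), which walks the wait_queue_t entries on wq and calls each entry's wake function in turn, which ends up in try_to_wake_up(). This puts the process recorded in private back on the rq runqueue and sets its state to TASK_RUNNING.

- After being woken, process A performs the following checks:

  - Check whether condition is true; if so, leave the loop and remove the wait_queue_t from wq.

  - Check whether TIF_SIGPENDING is set in the task's thread_info->flags; if so, a signal is pending, so leave the loop and remove the wait_queue_t from wq.

  - Otherwise, call schedule() again and go back to sleep; if the wait_queue_t is no longer on wq, it is added to wq again first.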

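Honestly, this was my first time reading Linux source code and it really is complex. Many details of wait queues are still unclear to me, and I'll come back and fill in the gaps over time.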
