Tmall Crawler Working Notes

This crawler scrapes all of the products in a Tmall shop.

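I. Workflow Analysis

- Enter a shop name and scrape the search results
- Collect the product URLs on each page of the shop
- Fetch product detail data
- Fetch product comments
- Fetch the shop rating

II. Code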

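1. Shop scraping

1.1 Target URL analysis

```python
search_url = 'https://list.tmall.com/search_product.htm?q=三只松鼠&type=p&spm=a220m.8599659.a2227oh.d100&from=mallfp..m_1_searchbutton&searchType=default&style=w'
```

Analysis: the value of the `q` parameter (三只松鼠 in this example, highlighted in red in the original post) is the shop name we need to enter.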
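Because the shop name goes into the `q` query parameter, a name containing Chinese characters has to be percent-encoded. `requests` usually does this for you, but building the URL explicitly makes the encoding visible. A minimal sketch using only the standard library; `build_search_url` is a hypothetical helper, and it keeps only the `q`, `type` and `style` parameters from the URLs above (whether Tmall still answers without `spm` and the other tracking parameters is an assumption):

```python
from urllib.parse import urlencode


def build_search_url(shop_name):
    """Build a Tmall search URL for shop_name (hypothetical helper)."""
    params = {'q': shop_name, 'type': 'p', 'style': 'w'}
    # urlencode percent-encodes non-ASCII text as UTF-8, e.g. 三 -> %E4%B8%89
    return 'https://list.tmall.com/search_product.htm?' + urlencode(params)


print(build_search_url('三只松鼠'))
```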

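1.2 Scraping process

(1) In Google Chrome, log in to your Tmall/Taobao account.

(2) Open Tmall's shop search page (https://list.tmall.com/search_product.htm?q=kindle&type=p&style=w&spm=a220m.1000858.a2227oh.d100&xl=kindle_2&from=.list.pc_2_suggest).

(3) Open a packet-capture tool and analyze the traffic yourself (I won't go into detail here; the result follows).

(4) The shop search results are contained in the response to this URL itself, so this is the URL we crawl.

(5) Build the request module

This step lays the groundwork for crawling other pages later, so the request module deserves careful design.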

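```python
class request_url(object):
    """Request a web page and handle anti-scraping measures."""

    def __init__(self, url):
        self.url = url
```

We write a class that handles the various problems that come up while requesting pages. Next we build the request headers. (I made a mistake here: I analyzed the desktop web version of Tmall but used mobile request headers. With desktop (PC) headers, the extraction code below finds nothing.)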

```python
import random


class request_url(object):
    """Request a web page and handle anti-scraping measures."""

    def __init__(self, url):
        self.url = url

    def construct_headers(self):
        agent = ['Dalvik/2.1.0 (Linux; U; Android 10; Redmi K30 5G MIUI/V11.0.11.0.QGICNXM)',
                 'TBAndroid/Native',
                 'Mozilla/5.0 (Linux; Android 7.1.1; MI 6 Build/NMF26X; wv) AppleWebKit/537.36 (KHTML, like Gecko) Version/4.0 Chrome/57.0.2987.132 MQQBrowser/6.2 TBS/043807 Mobile Safari/537.36 MicroMessenger/6.6.1.1220(0x26060135) NetType/WIFI Language/zh_CN',
                 ]
        with open('cookie/search_cookie.txt', 'r') as f:
            # strip() drops a trailing newline, which requests rejects in header values
            cookie = f.readline().strip()
        self.headers = {
            'user-agent': random.choice(agent),
            'cookie': cookie,
            'Connection': 'close',
            # 'referer': 'https://detail.tmall.com/item.htm?spm=a220m.1000858.1000725.1.48c37111IiH0Ml&id=578004651332&skuId=4180113216736&areaId=610100&user_id=2099020602&cat_id=50094904&is_b=1&rn=246062bfaa1943ec6b72afcd1ff3ded8',
        }
```

Compared with the previous step, we added a construct_headers method that builds the request headers.

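| Name | Description |
| --- | --- |
| agent | Holds our user-agent strings; search online for more and add them |
| cookie | I created a cookie folder in the project directory to store search_cookie.txt |

Next, write the request method.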

```python
import random

import requests
from lxml import etree


class request_url(object):
    """Request a web page and handle anti-scraping measures."""

    def __init__(self, url):
        self.url = url

    def construct_headers(self):
        agent = ['Dalvik/2.1.0 (Linux; U; Android 10; Redmi K30 5G MIUI/V11.0.11.0.QGICNXM)',
                 'TBAndroid/Native',
                 'Mozilla/5.0 (Linux; Android 7.1.1; MI 6 Build/NMF26X; wv) AppleWebKit/537.36 (KHTML, like Gecko) Version/4.0 Chrome/57.0.2987.132 MQQBrowser/6.2 TBS/043807 Mobile Safari/537.36 MicroMessenger/6.6.1.1220(0x26060135) NetType/WIFI Language/zh_CN',
                 ]
        with open('cookie/search_cookie.txt', 'r') as f:
            cookie = f.readline().strip()  # drop the trailing newline
        self.headers = {
            'user-agent': random.choice(agent),  # pick a random user agent
            'cookie': cookie,
            'Connection': 'close',
            # 'referer': 'https://detail.tmall.com/item.htm?spm=a220m.1000858.1000725.1.48c37111IiH0Ml&id=578004651332&skuId=4180113216736&areaId=610100&user_id=2099020602&cat_id=50094904&is_b=1&rn=246062bfaa1943ec6b72afcd1ff3ded8',
        }

    def request(self):
        self.construct_headers()
        response = requests.get(self.url, headers=self.headers)
        xml = etree.HTML(response.text)  # parse into an element tree so XPath can be used
        return response.text, xml


def mainRquest(url):
    RU = request_url(url)
    response, xml = RU.request()
    return response, xml
```

This completes the first version of the request module; save this Python file as Elements.py.

(6) Scraping the shop search results

First, create a file named Tmall.py.

In this file we again build a class to crawl Tmall.

```python
from Elements import mainRquest


class Tmall():
    def __init__(self):
        self.search = input('请输入店铺名:')
        self.url = 'https://list.tmall.com/search_product.htm?q=%s&type=p&style=w&spm=a220m.1000858.a2227oh.d100&xl=kindle_2&from=.list.pc_2_suggest' % (self.search)

    # 1.0 Search for shops
    def shop_search(self):
        response, xml = mainRquest(self.url)
        print(response)


def main():
    tmall = Tmall()
    tmall.shop_search()


if __name__ == '__main__':
    main()
```

Output:

Next, we filter the content with XPath or regular expressions.

```python
from Elements import mainRquest


class Tmall():
    def __init__(self):
        self.search = input('请输入店铺名:')
        self.url = 'https://list.tmall.com/search_product.htm?q=%s&type=p&style=w&spm=a220m.1000858.a2227oh.d100&xl=kindle_2&from=.list.pc_2_suggest' % (self.search)

    # 1.0 Search for shops
    def shop_search(self):
        response, xml = mainRquest(self.url)
        title = xml.xpath('//div[@class="shop-title"]/label/text()')
        print(title)


def main():
    tmall = Tmall()
    tmall.shop_search()


if __name__ == '__main__':
    main()
```

Output:

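['kindle官方旗舰店', '天猫国际进口超市', '锦读数码专营店', '苏宁易购官方旗舰店', '天佑润泽数码专营店', 'boox曼尼金专卖店', 'kindle海江通专卖店', '洋桃海外旗舰店', '志赟数码专营店', '天猫国际小酒馆']

(7) Scraping basic product information of a shop

After some analysis, you'll find that the link to a shop's products is here:

Clicking this link takes you to:

Scroll down to the page numbers and click one to turn the page, then watch how the URL changes:

Analyzing the URL shows that we can crawl all of a shop's products by constructing the URL ourselves. Let's write the code!

First, we need to extract the user_id from the shop search results obtained in the previous step.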

```python
    # 1.0 Search for shops (requires `import re` at the top of Tmall.py)
    def shop_search(self):
        response, xml = mainRquest(self.url)
        title = xml.xpath('//div[@class="shop-title"]/label/text()')
        user_id = re.findall(r'user_id=(\d+)', response)
        print(user_id)
```

Output:

['2099020602', '2549841410', '3424411379', '2616970884', '3322458767', '3173040572', '2041560994', '2206736426581', '2838273504', '2200657974488']

Next, we store the scraped data in a variable. (To avoid typing the name on every run, q=kindle is hard-coded in this example.)

```python
from Elements import mainRquest
import re


class Tmall():
    def __init__(self):
        # self.search = input('请输入店铺名:')
        # self.url = 'https://list.tmall.com/search_product.htm?q=%s&type=p&style=w&spm=a220m.1000858.a2227oh.d100&xl=kindle_2&from=.list.pc_2_suggest'%(self.search)
        self.url = 'https://list.tmall.com/search_product.htm?q=kindle&type=p&style=w&spm=a220m.1000858.a2227oh.d100&xl=kindle_2&from=.list.pc_2_suggest'
        self.shop_dict = {}  # stores the shop information

    # 1.0 Search for shops
    def shop_search(self):
        response, xml = mainRquest(self.url)
        title = xml.xpath('//div[@class="shop-title"]/label/text()')
        user_id = re.findall(r'user_id=(\d+)', response)
        # Make sure data was extracted (and that names and ids pair up); otherwise raise
        assert len(title) == len(user_id) and len(title) != 0
        self.shop_dict['shopTitle'] = title
        self.shop_dict['userId'] = user_id
        print('\r1.0店铺搜索结果处理完成!', end='')


def main():
    tmall = Tmall()
    tmall.shop_search()


if __name__ == '__main__':
    main()
```

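Note: my crawling approach is as follows. shop_search yields one user_id at a time (a generator); the next method, which scrapes the products in a shop, takes that user_id, builds the shop's URL, and yields each product link it finds to the method that scrapes product details.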
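The yield-based chain described above can be illustrated without any network access. In this sketch the three stages are stand-ins with made-up data and hypothetical function names; each stage consumes the previous generator lazily, so the first product's details are processed before the second shop is even touched:

```python
def shop_ids():
    # Stage 1: stands in for shop_search(), yielding one user_id at a time
    for user_id in ['1001', '1002']:
        yield user_id


def product_urls(user_id):
    # Stage 2: stands in for all_product(), yielding product links for one shop
    for n in range(2):
        yield 'https://detail.tmall.com/item.htm?id=%s%d' % (user_id, n)


def product_details(url):
    # Stage 3: stands in for get_product_imformation(), yielding a detail dict
    yield {'url': url, 'itemid': url.rsplit('=', 1)[1]}


def crawl():
    # The nested loops drive the pipeline; nothing runs until a value is requested
    for user_id in shop_ids():
        for url in product_urls(user_id):
            for detail in product_details(url):
                yield user_id, detail


results = list(crawl())
print(len(results))
```

Because every stage is a generator, simply calling `all_product(user_id)` does nothing; the caller has to iterate over it.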

Here is the revised shop_search():

```python
import re

from Elements import mainRquest


class Tmall():
    def __init__(self):
        self.search = input('请输入店铺名:')
        self.url = 'https://list.tmall.com/search_product.htm?q=%s&type=p&style=w&spm=a220m.1000858.a2227oh.d100&xl=kindle_2&from=.list.pc_2_suggest' % (
            self.search)
        self.shop_dict = {}  # stores the shop information
        self.product_list = []

    # 1.0 Search for shops
    def shop_search(self):
        response, xml = mainRquest(self.url)
        title = xml.xpath('//div[@class="shop-title"]/label/text()')  # shop names
        user_id = re.findall(r'user_id=(\d+)', response)  # shop owners' ids
        self.shop_dict['shop_data'] = {}  # dict holding the data of every shop
        for i in range(len(user_id)):  # hand the shops to the next stage one at a time
            self.shop_dict['shop_data'][user_id[i]] = {'shop_title': title[i]}
            self.title = title[i]
            print(user_id[i], self.title)
            yield user_id[i]
```

self.shop_dict above is simply a dictionary that stores the data; its structure is:

```
{'shop_data': {'<user_id>': {'shop_title': '', 'product': [{product 1}, {product 2}]}, '<user_id>': {...}}}
```
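A small sketch of filling that structure, using `dict.setdefault` so a shop entry is created the first time one of its products is added. The helper `add_product` is hypothetical, the user_id and shop name come from the sample output above, and the product fields are made up for illustration:

```python
shop_dict = {'shop_data': {}}


def add_product(shop_dict, user_id, shop_title, product):
    # Create the shop entry on first use, then append the product to its list
    shop = shop_dict['shop_data'].setdefault(
        user_id, {'shop_title': shop_title, 'product': []})
    shop['product'].append(product)


add_product(shop_dict, '2099020602', 'kindle官方旗舰店', {'product_title': 'A', 'price': '558.00'})
add_product(shop_dict, '2099020602', 'kindle官方旗舰店', {'product_title': 'B', 'price': '958.00'})
print(shop_dict)
```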

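2. Scraping the products in a shop

Preparation:

After many experiments I found that requesting the list of all products in a shop easily triggers anti-scraping measures, and the cookie changes on every visit. So I use pyautogui to open each all-products page in a real browser, let Fiddler capture the response and save it locally, and then extract the data from the saved file.

Fiddler download: https://pan.baidu.com/s/1PqAOVO4Vwujf8loT37z4Tg

Extraction code: x0zm

Tutorials on configuring Fiddler for packet capture are easy to find online; if you really get stuck, send me a private message.

Create a response.txt file in Fiddler's directory (it must be the installation directory, as shown in the figure).

Screenshot:

Next, open Fiddler, open FiddlerScript, go to OnBeforeResponse, and add the following code.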

```javascript
if (oSession.fullUrl.Contains("tmall.com/search_shopitem"))
{
    oSession.utilDecodeResponse();
    var fso;
    var file;
    fso = new ActiveXObject("Scripting.FileSystemObject");
    // File save path; customize as needed.
    // 8 = open for appending; the first true creates the file if it does not exist
    file = fso.OpenTextFile("response.txt", 8, true, true);
    file.writeLine(oSession.GetResponseBodyAsString());
    file.close();
}
```

Remember to save the script.

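Open a page to test it (turn off your antivirus software first): https://list.tmall.com/search_shopitem.htm?spm=a220m.1000862.0.0.48813ec6huCom4&s=0&style=sg&sort=s&user_id=2099020602&from=_1_&stype=search#grid-column

Remember to log in to your Tmall account.

After the test, your response.txt will contain:

Earlier we obtained each shop's user_id and name. Next we use the user_id to scrape the products in the shop.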

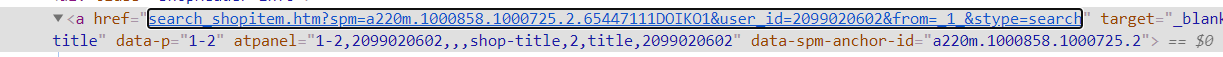

First, let's analyze the URL:

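| Parameter | Meaning |
| --- | --- |
| spm | Purpose unclear; optional |
| s | Position, within the shop's product list, of the first product shown on the page; used for pagination, in steps of 60 |
| sort | Sort order; `s` is the default order |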
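Given that `s` is the offset of the first product on a page and each page holds 60 products, the listing URL for page *n* (counting from 0) just sets `s = 60 * n`. A sketch under that reading of the table; `shop_page_urls` is a hypothetical helper, the number of pages is an input you would have to read from the first page (not covered here), and the other parameters are copied from the URL used in this post:

```python
def shop_page_urls(user_id, pages, page_size=60):
    """Yield the listing URL of each page of a shop (s = offset of the first item)."""
    base = ('https://list.tmall.com/search_shopitem.htm?'
            'spm=a220m.1000862.0.0.48813ec6huCom4'
            '&s={s}&style=sg&sort=s&user_id={uid}&from=_1_&stype=search')
    for page in range(pages):
        yield base.format(s=page * page_size, uid=user_id)


for url in shop_page_urls('2099020602', 3):
    print(url)
```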

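The other parameters can wait until we need them. Next, we construct the URL of the shop's product list (continuing from the last code in the previous section).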

```python
    # 2.0 Scrape basic information of all products in the shop
    def all_product(self, userid):
        print('2.0抓取店铺所有产品基本信息开始')
        self.product = []  # stores the information of every product in the shop
        url = 'https://list.tmall.com/search_shopitem.htm?' \
              'spm=a220m.1000862.0.0.48813ec6huCom4' \
              '&s=0&style=sg&sort=s&user_id=%s&from=_1_&stype=search' % (userid)
```

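That URL is all we need to fetch the shop's full product list. Next comes the extraction:

(1) Create functions.py to hold our own helper functions.

(2) In functions.py, create GetAllProduct(), which automatically opens the Tmall shop's all-products page in the browser.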

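```python
def GetAllProduct(url):
    import pyautogui
    import time
    # Start the (already configured) Fiddler before running this program
    time.sleep(2)  # the program needs time to start; wait 2 seconds to avoid errors
    x, y = 710, 1070  # position of my Chrome browser on the screen
    pyautogui.moveTo(x, y)
    time.sleep(1)
    pyautogui.click()  # left click
    time.sleep(1)
    x, y = 700, 70  # position of Chrome's address bar; differs per computer, test it yourself
    pyautogui.moveTo(x + 100, y)
    pyautogui.click()
    time.sleep(1)
    pyautogui.write(url, interval=0.01)  # type the URL into the address bar, 0.01 s per keystroke
    pyautogui.press('enter')  # press Enter
    time.sleep(5)  # adjust to your connection speed
    # Switch back to the window running the program
    pyautogui.keyDown('alt')
    pyautogui.press('tab')
    pyautogui.keyUp('alt')
```

(3) Read and parse response.txt: create the function GetResponseHtml().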

```python
# Read the saved data and process it
def GetResponseHtml():
    from lxml import etree

    def check_charset(file_path):
        # Detect the file's encoding to prevent decoding errors (this one cost me several hours)
        import chardet
        with open(file_path, "rb") as f:
            data = f.read(4)
            charset = chardet.detect(data)['encoding']
        return charset

    your_path = r'D:\编程工具大全\独家汉化Fiddler5.0.20182\Fiddler5.0.20182\response.txt'  # path to response.txt
    with open(your_path, 'r+', encoding=check_charset(your_path)) as f:
        data = f.read()
        html = etree.HTML(data)  # parse as HTML
        # Optional backup of the captured response.txt; utf-8 avoids errors with the Windows default codec
        with open('data/response.txt', 'a+', encoding='utf-8') as q:
            q.write(data)
        f.truncate(0)  # clear response.txt for the next capture
    return data, html
```
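check_charset() only gives chardet the first 4 bytes, which in practice amounts to recognizing a byte-order mark (BOM). Assuming the file Fiddler writes starts with one, the same check can be done with the standard library alone. A sketch; `detect_bom` is a hypothetical name, and files without a BOM fall back to UTF-8:

```python
import codecs


def detect_bom(path, default='utf-8'):
    """Guess a file's encoding from its byte-order mark (BOM), if it has one."""
    with open(path, 'rb') as f:
        head = f.read(4)
    # Check the 4-byte UTF-32 BOMs first: UTF-32-LE starts with the UTF-16-LE BOM
    boms = [(codecs.BOM_UTF32_LE, 'utf-32'), (codecs.BOM_UTF32_BE, 'utf-32'),
            (codecs.BOM_UTF8, 'utf-8-sig'),
            (codecs.BOM_UTF16_LE, 'utf-16'), (codecs.BOM_UTF16_BE, 'utf-16')]
    for bom, encoding in boms:
        if head.startswith(bom):
            return encoding  # these codecs consume the BOM while decoding
    return default
```

Usage would be `open(your_path, 'r+', encoding=detect_bom(your_path))` in place of check_charset().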

(4) Open Tmall.py and add the method self.all_product(), which extracts the data with regular expressions and XPath.

```python
from Elements import mainRquest
import re
import time
from functions import GetResponseHtml, GetAllProduct, mkdir
# Optional: my own module for passing the slider CAPTCHA. Its success rate isn't high;
# it's here to lower the chance of the code breaking.
from slider import all_product_slider
import json


class Tmall():
    def __init__(self):
        self.search = input('请输入店铺名:')
        self.url = 'https://list.tmall.com/search_product.htm?q=%s&type=p&style=w&spm=a220m.1000858.a2227oh.d100&xl=kindle_2&from=.list.pc_2_suggest' % (
            self.search)
        self.shop_dict = {}  # stores the shop information

    # 1.0 Search for shops
    def shop_search(self):
        response, xml = mainRquest(self.url)
        title = xml.xpath('//div[@class="shop-title"]/label/text()')  # shop names
        user_id = re.findall(r'user_id=(\d+)', response)  # shop owners' ids
        self.shop_dict['shop_data'] = {}  # dict holding the data of every shop
        for i in range(len(user_id)):  # explained in detail in the text
            self.shop_dict['shop_data'][user_id[i]] = {'shop_title': title[i]}
            self.title = title[i]
            print(user_id[i], self.title)
            yield user_id[i]  # while testing I used time.sleep(10); crawling shop products needs yield

    # 2.0 Scrape basic information of all products in the shop
    def all_product(self, userid):
        print('2.0抓取店铺所有产品基本信息开始')
        self.product = []  # stores the information of every product in the shop
        url = 'https://list.tmall.com/search_shopitem.htm?' \
              'spm=a220m.1000862.0.0.48813ec6huCom4' \
              '&s=0&style=sg&sort=s&user_id=%s&from=_1_&stype=search' % (userid)
        GetAllProduct(url)  # pyautogui opens the constructed URL in the browser
        response, html = GetResponseHtml()  # read the response captured by Fiddler
        # The fields below are the ones I need; write your own extraction code for other data
        self.price = re.findall('<em><b>¥</b>(.*?)</em>', response)  # price
        self.Msold = re.findall('<span>月成交<em>(.*?)笔</em></span>', response)  # monthly sales
        # Product names. An error here usually means the connection was too slow: the page
        # had not finished loading when the program got here, so Fiddler captured nothing.
        self.product_title = html.xpath('//p[@class="productTitle"]/a/@title')
        self.product_url = html.xpath('//p[@class="productTitle"]/a/@href')  # product links
        if self.price == []:  # no prices: a slider CAPTCHA appeared
            # Tries to pass the slider CAPTCHA. You can replace it with pass and drag the
            # slider in the browser yourself; it appears rarely.
            all_product_slider()
        else:
            assert (len(self.product_url) == len(self.Msold))
            for i in range(len(self.product_url)):
                self.product_dict = {}
                self.product_dict['product_title'] = self.product_title[i]
                self.product_dict['price'] = self.price[i]
                self.product_dict['url'] = 'https:' + self.product_url[i]
                if self.Msold != []:
                    self.product_dict['Msold'] = self.Msold[i]
                yield self.product_dict  # for testing, to keep it from running too fast; removed later
            # else:
            #     self.shop_dict['shop_data'][userid]['product'] = self.product
            #     grade_list = self.Grade()
            #     self.shop_dict['shop_data'][userid]['grade'] = grade_list
            #     mkdir('shop/%s' % self.title)
            #     with open('shop/%s/%s.json' % (self.title, self.title), 'w') as f:
            #         json.dump(self.shop_dict['shop_data'][userid], f)


def main():
    tmall = Tmall()
    for user_id in tmall.shop_search():
        # all_product() is a generator: it only runs when iterated
        for product in tmall.all_product(user_id):
            print(product)


if __name__ == '__main__':
    main()
```
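To see what the price and monthly-sales regular expressions in all_product() capture, it helps to run them on a small snippet. The HTML below is a made-up fragment shaped the way the patterns expect, not a copy of Tmall's real markup:

```python
import re

# Made-up fragment matching the shape the patterns expect (not real Tmall HTML)
sample = '''
<p class="productPrice"><em><b>¥</b>558.00</em></p>
<p class="productStatus"><span>月成交<em>120笔</em></span></p>
<p class="productPrice"><em><b>¥</b>99.90</em></p>
<p class="productStatus"><span>月成交<em>45笔</em></span></p>
'''

# Same patterns as in all_product(); (.*?) is non-greedy, so each match stops
# at the first closing tag instead of running on to the end of the page
price = re.findall('<em><b>¥</b>(.*?)</em>', sample)
msold = re.findall('<span>月成交<em>(.*?)笔</em></span>', sample)
print(price, msold)
```

Both lists hold strings in page order, which is why all_product() can pair them up by index; convert them with float() or int() if you need numbers.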

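mkdir is the helper that creates folders:

```python
def mkdir(path):
    # Create the folder (and any missing parent folders)
    import os
    if not os.path.exists(path):  # only create it if it doesn't exist yet
        os.makedirs(path)
```

Above, I extracted each product's name, price, monthly sales, and detail-page URL.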

Output:

```
请输入店铺名:文具
2590283672 pilot百乐凌派专卖店
1708415073 潮乐办公专营店
743905254 联新办公专营店
3862696800 晨光优乐诚品专卖店
2920855757 胜晋办公专营店
407910984 得力官方旗舰店
1134235533 慕诺办公专营店
1063119794 趣梦办公专营店
3243160094 sakura樱花才峻专卖店
2138614417 协创优致办公专营店
```

3. Scraping product detail pages

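Analysis

We already collected this URL in the previous step.

Analyze for yourself which information you need to extract.

I scraped the product detail data as well as the review keyword tags (the "大家都写到", "what everyone wrote", section).

Writing the code

(1) Create product_cookie.txt in the cookie folder;

(2) Define a new method get_product_imformation();

(3) Scrape the data on the product detail page.

This part is simple: log in to Tmall, find your cookie, and paste it into product_cookie.txt. The Elements.py written earlier needs some changes, of course.

I added random proxy IPs and a different cookie for each kind of URL (whether this is necessary still needs to be verified).

(1) Getting proxy IPs

Create an ip folder containing getip.py.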

```python
import requests
from lxml import etree
import json


def Ip():
    # Fetch the latest free proxy IPs
    url = 'https://www.kuaidaili.com/free/inha/1/'
    headers = {"User-Agent": "Mozilla/5.0"}
    res = requests.get(url, headers=headers).text
    html = etree.HTML(res)
    ip = html.xpath('//td[@data-title="IP" ]/text()')
    port = html.xpath('//td[@data-title="PORT"]/text()')
    return ip, port


def testIp(ipList, portList):
    ipDict = {}
    url = 'https://www.taobao.com/'
    headers = {"User-Agent": "Mozilla/5.0"}  # request headers
    for ip, port in zip(ipList, portList):
        # Note: requests picks proxies by URL scheme, so an 'http'-only entry
        # is not applied to this https:// URL
        proxies = {
            'http': f"http://{ip}:{port}"
        }
        try:
            res = requests.get(url, proxies=proxies, headers=headers, timeout=10)  # send the request
        except requests.RequestException:
            continue  # request failed: skip this proxy
        print(res.status_code)  # print the status code
        if res.status_code == 200:
            ipDict[ip] = port
    # After every proxy has been checked, save the working ones
    with open('ip.json', 'w') as f:
        json.dump(ipDict, f)
    print('ip写入完成!')


if __name__ == '__main__':
    ipList, portList = Ip()
    testIp(ipList, portList)
```

(2) Elements.py

```python
import requests
import random
from lxml import etree
import time
import logging
import json

logging.captureWarnings(True)  # suppress SSL warnings


class request_url(object):
    """Request a web page and handle anti-scraping measures."""

    def __init__(self, url):
        self.url = url

    def ip(self):
        with open('ip/ip.json', 'r') as f:
            ip_dict = json.load(f)  # load the tested proxy IPs
        ip = random.choice(list(ip_dict.keys()))
        # Note: requests picks proxies by URL scheme; this 'http' entry is not
        # used for https:// URLs unless an 'https' key is added as well
        self.proxy = {
            'http': f"http://{ip}:{ip_dict[ip]}", }

    def construct_headers(self):
        # Different URLs use different cookies; my earlier mistake (mobile vs. PC headers) makes this messy.
        if 'https://detail.tmall.com/' in self.url:
            with open('cookie/product_cookie.txt', 'r') as f:
                cookie = f.readline().strip()
            agent = [
                'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/86.0.4240.111 '
                'Safari/537.36', ]
        elif 'rate.tmall.com' in self.url:
            with open('cookie/comment_cookie.txt', 'r') as f:
                cookie = f.readline().strip()
            agent = [
                'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/86.0.4240.111 '
                'Safari/537.36', ]
        else:
            with open('cookie/search_cookie.txt', 'r') as f:
                cookie = f.readline().strip()
            # Mobile user agents; plenty can be found online.
            agent = [
                'Mozilla/5.0 (iPhone; CPU iPhone OS 13_2_3 like Mac OS X) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/13.0.3 Mobile/15E148 Safari/604.1', ]
        self.headers = {
            'user-agent': random.choice(agent),  # pick a random user agent
            'cookie': cookie,
            'referer': 'https://detail.tmall.com/item.htm?spm=a220m.1000858.1000725.1.48c37111IiH0Ml&id=578004651332&skuId=4180113216736&areaId=610100&user_id=2099020602&cat_id=50094904&is_b=1&rn=246062bfaa1943ec6b72afcd1ff3ded8',
        }

    def request(self):
        self.ip()
        self.construct_headers()
        response = requests.get(self.url, headers=self.headers, verify=False, proxies=self.proxy)
        xml = etree.HTML(response.text)  # parse into an element tree so XPath can be used
        return response.text, xml


def punish():
    # In many of my test versions this handled the slider CAPTCHA, but it turned out to be unnecessary.
    pass


def mainRquest(url):
    for i in range(3):  # up to three attempts, like reconnecting after a dropped connection
        try:
            RU = request_url(url)
            response, xml = RU.request()
        except Exception:
            time.sleep(60)
        else:
            return response, xml
    # All three attempts failed: raise instead of returning undefined variables
    raise RuntimeError('request failed three times: %s' % url)
```
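mainRquest() retries up to three times with a fixed 60-second pause. A common variant is exponential backoff, where the wait doubles after each failure and the last error is re-raised once the attempts run out. A generic sketch, not the post's code; `retry`, `attempts` and `base_delay` are made-up names, and the sleep function is a parameter so the logic can be exercised without waiting:

```python
import time


def retry(func, attempts=3, base_delay=1.0, sleep=time.sleep):
    """Call func() until it succeeds, doubling the delay after each failure."""
    for attempt in range(attempts):
        try:
            return func()
        except Exception:
            if attempt == attempts - 1:
                raise  # out of attempts: let the caller see the last error
            sleep(base_delay * 2 ** attempt)  # base_delay, 2x, 4x, ...
```

With the request class above it could be called as `response, xml = retry(lambda: request_url(url).request(), base_delay=60)`.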

Product detail code:

```python
from Elements import mainRquest
import re
import time
from functions import GetResponseHtml, GetAllProduct, mkdir, Compound
from slider import all_product_slider
import json


class Tmall():
    def __init__(self):
        self.search = input('请输入店铺名:')
        self.url = 'https://list.tmall.com/search_product.htm?q=%s&type=p&style=w&spm=a220m.1000858.a2227oh.d100&xl=kindle_2&from=.list.pc_2_suggest' % (
            self.search)
        self.shop_dict = {}  # stores the shop information

    # 1.0 Search for shops
    def shop_search(self):
        response, xml = mainRquest(self.url)
        title = xml.xpath('//div[@class="shop-title"]/label/text()')  # shop names
        user_id = re.findall(r'user_id=(\d+)', response)  # shop owners' ids
        self.shop_dict['shop_data'] = {}  # dict holding the data of every shop
        for i in range(len(user_id)):  # explained in detail in the text
            self.shop_dict['shop_data'][user_id[i]] = {'shop_title': title[i]}
            self.title = title[i]
            print(user_id[i], self.title)
            yield user_id[i]  # while testing I used time.sleep(10); crawling shop products needs yield

    # 2.0 Scrape basic information of all products in the shop
    def all_product(self, userid):
        print('2.0抓取店铺所有产品基本信息开始')
        self.product = []  # stores the information of every product in the shop
        url = 'https://list.tmall.com/search_shopitem.htm?' \
              'spm=a220m.1000862.0.0.48813ec6huCom4' \
              '&s=0&style=sg&sort=s&user_id=%s&from=_1_&stype=search' % (userid)
        GetAllProduct(url)  # pyautogui opens the constructed URL in the browser
        response, html = GetResponseHtml()  # read the response captured by Fiddler
        # The fields below are the ones I need; write your own extraction code for other data
        self.price = re.findall('<em><b>¥</b>(.*?)</em>', response)  # price
        self.Msold = re.findall('<span>月成交<em>(.*?)笔</em></span>', response)  # monthly sales
        # Product names. An error here usually means the connection was too slow: the page
        # had not finished loading when the program got here, so Fiddler captured nothing.
        self.product_title = html.xpath('//p[@class="productTitle"]/a/@title')
        self.product_url = html.xpath('//p[@class="productTitle"]/a/@href')  # product links
        if self.price == []:  # no prices: a slider CAPTCHA appeared
            all_product_slider()  # mainly for passing the slider CAPTCHA
        else:
            assert (len(self.product_url) == len(self.Msold))
            for i in range(len(self.product_url)):
                self.product_dict = {}
                self.product_dict['product_title'] = self.product_title[i]
                self.product_dict['price'] = self.price[i]
                self.product_dict['url'] = 'https:' + self.product_url[i]
                if self.Msold != []:
                    self.product_dict['Msold'] = self.Msold[i]
                yield self.product_dict  # for testing, to keep it from running too fast; removed later
            # else:
            #     self.shop_dict['shop_data'][userid]['product'] = self.product
            #     grade_list = self.Grade()
            #     self.shop_dict['shop_data'][userid]['grade'] = grade_list
            #     mkdir('shop/%s' % self.title)
            #     with open('shop/%s/%s.json' % (self.title, self.title), 'w') as f:
            #         json.dump(self.shop_dict['shop_data'][userid], f)

    # 3.0 Scrape product details
    def get_product_imformation(self, userid):
        print('\r3.0产品开始', end='')
        # Build the URL
        url = self.product_dict['url']
        itemid = re.findall(r'id=(\d+)', url)[0]
        # Request the page; everything below just extracts data, so write this part your own way
        response, html = mainRquest(url)
        self.shop_grade_url = 'https:' + html.xpath('//input[@id="dsr-ratelink"]/@value')[0]  # shop rating link
        brand = re.findall('li id="J_attrBrandName" title=" (.*?)">', response)  # brand
        para = html.xpath('//ul[@id="J_AttrUL"]/li/text()')  # product attributes
        # Put the data into the dict
        self.product_dict['userid'] = userid
        self.product_dict['itemid'] = itemid
        self.product_dict['shop_grade_url'] = self.shop_grade_url
        if brand != []:
            self.product_dict['brand'] = brand
        if para != []:
            self.product_dict['para'] = para
        specification = html.xpath('//table[@class="tm-tableAttr"]/tbody/tr/th/text()')
        stitle = html.xpath('//table[@class="tm-tableAttr"]/tbody/tr[@class="tm-tableAttrSub"]/th/text()')
        sanswer = html.xpath('//table[@class="tm-tableAttr"]/tbody/tr/td/text()')
        # self.shop_grade_url_list.append(shop_grade_url)
        if specification != []:
            self.sparadict = Compound(specification, sanswer, stitle)
            self.product_dict['specification'] = specification
        # Review keywords
        curl = 'https://rate.tmall.com/listTagClouds.htm?itemId=%s' % itemid  # API endpoint
        response, html = mainRquest(curl)
        # eval() needs JSON's true/false/null defined as Python names;
        # note that it executes whatever text the server returns
        true = True
        false = False
        null = None
        comment_keyword = eval(response)
        self.product_dict['comment_keyword'] = comment_keyword
        yield self.product_dict


def main():
    tmall = Tmall()
    for user_id in tmall.shop_search():
        for product in tmall.all_product(user_id):
            # get_product_imformation() is also a generator and takes the shop's user_id
            for detail in tmall.get_product_imformation(user_id):
                print(detail)


if __name__ == '__main__':
    main()
```

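4. Scraping comment data

The comment API is actually easy to find: capture the traffic yourself and it shows up right away. I'd rather not publish it yet (my own data collection isn't finished; I'll add it here once it is).

This is the most time-consuming part of the crawl, so I used multiple threads here. Here's the idea: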

```python
import time
from threading import Thread


def foo(start=1):  # stands in for scraping comments
    # I plan to use three threads, so the step is 3
    for i in range(start, 10, 3):
        print(i)
        time.sleep(3)
    return [start]  # the result


class Add(Thread):
    def __init__(self, func, x):
        Thread.__init__(self)
        self.func = func
        self.x = x

    def run(self):
        self.result = self.func(self.x)

    def get_result(self):
        return self.result


def main():
    t1 = Add(foo, 1)
    t2 = Add(foo, 2)
    t3 = Add(foo, 3)
    t1.start()
    t2.start()
    t3.start()
    t1.join()
    t2.join()
    t3.join()
    comment_list = t1.get_result() + t2.get_result() + t3.get_result()  # concatenate the three results
    return comment_list


if __name__ == '__main__':
    print(main())
```

OK, the simplified code above shows my approach to scraping comments.
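The same split (thread k handles pages k, k+3, k+6, ...) can also be written with `concurrent.futures`, which takes care of starting, joining and collecting the results. A sketch with a stand-in `fetch_pages` function (hypothetical; in the crawler it would be get_comment). `pool.map` returns results in the order of its inputs, so the final list is grouped by thread, just like `t1 + t2 + t3` above:

```python
from concurrent.futures import ThreadPoolExecutor


def fetch_pages(start, last_page=10, step=3):
    # Stand-in for get_comment(): "fetch" pages start, start + step, ... up to last_page
    return ['page %d' % p for p in range(start, last_page + 1, step)]


with ThreadPoolExecutor(max_workers=3) as pool:
    # One task per starting page; map() yields results in input order
    results = list(pool.map(fetch_pages, [1, 2, 3]))

comment_list = [page for chunk in results for page in chunk]
print(comment_list)
```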

```python
from Elements import mainRquest
import re
import time
from functions import GetResponseHtml, GetAllProduct, mkdir, Compound, Combine, HandleJson
from slider import all_product_slider
import json


class Tmall():
    def __init__(self):
        self.search = input('请输入店铺名:')
        self.url = 'https://list.tmall.com/search_product.htm?q=%s&type=p&style=w&spm=a220m.1000858.a2227oh.d100&xl=kindle_2&from=.list.pc_2_suggest' % (
            self.search)
        self.shop_dict = {}  # stores the shop information

    # 1.0 Search for shops
    def shop_search(self):
        response, xml = mainRquest(self.url)
        title = xml.xpath('//div[@class="shop-title"]/label/text()')  # shop names
        user_id = re.findall(r'user_id=(\d+)', response)  # shop owners' ids
        self.shop_dict['shop_data'] = {}  # dict holding the data of every shop
        for i in range(len(user_id)):  # explained in detail in the text
            self.shop_dict['shop_data'][user_id[i]] = {'shop_title': title[i]}
            self.title = title[i]
            print(user_id[i], self.title)
            yield user_id[i]  # while testing I used time.sleep(10); crawling shop products needs yield

    # 2.0 Scrape basic information of all products in the shop
    def all_product(self, userid):
        print('2.0抓取店铺所有产品基本信息开始')
        self.product = []  # stores the information of every product in the shop
        url = 'https://list.tmall.com/search_shopitem.htm?' \
              'spm=a220m.1000862.0.0.48813ec6huCom4' \
              '&s=0&style=sg&sort=s&user_id=%s&from=_1_&stype=search' % (userid)
        GetAllProduct(url)  # pyautogui opens the constructed URL in the browser
        response, html = GetResponseHtml()  # read the response captured by Fiddler
        # The fields below are the ones I need; write your own extraction code for other data
        self.price = re.findall('<em><b>¥</b>(.*?)</em>', response)  # price
        self.Msold = re.findall('<span>月成交<em>(.*?)笔</em></span>', response)  # monthly sales
        # Product names. An error here usually means the connection was too slow: the page
        # had not finished loading when the program got here, so Fiddler captured nothing.
        self.product_title = html.xpath('//p[@class="productTitle"]/a/@title')
        self.product_url = html.xpath('//p[@class="productTitle"]/a/@href')  # product links
        if self.price == []:  # no prices: a slider CAPTCHA appeared
            all_product_slider()  # mainly for passing the slider CAPTCHA
        else:
            assert (len(self.product_url) == len(self.Msold))
            for i in range(len(self.product_url)):
                self.product_dict = {}
                self.product_dict['product_title'] = self.product_title[i]
                self.product_dict['price'] = self.price[i]
                self.product_dict['url'] = 'https:' + self.product_url[i]
                if self.Msold != []:
                    self.product_dict['Msold'] = self.Msold[i]
                yield self.product_dict  # for testing, to keep it from running too fast; removed later
            # else:
            #     self.shop_dict['shop_data'][userid]['product'] = self.product
            #     grade_list = self.Grade()
            #     self.shop_dict['shop_data'][userid]['grade'] = grade_list
            #     mkdir('shop/%s' % self.title)
            #     with open('shop/%s/%s.json' % (self.title, self.title), 'w') as f:
            #         json.dump(self.shop_dict['shop_data'][userid], f)

    # 3.0 Scrape product details
    def get_product_imformation(self, userid):
        print('\r3.0产品开始', end='')
        # Build the URL
        url = self.product_dict['url']
        itemid = re.findall(r'id=(\d+)', url)[0]
        # Request the page; everything below just extracts data, so write this part your own way
        response, html = mainRquest(url)
        self.shop_grade_url = 'https:' + html.xpath('//input[@id="dsr-ratelink"]/@value')[0]  # shop rating link
        brand = re.findall('li id="J_attrBrandName" title=" (.*?)">', response)  # brand
        para = html.xpath('//ul[@id="J_AttrUL"]/li/text()')  # product attributes
        # Put the data into the dict
        self.product_dict['userid'] = userid
        self.product_dict['itemid'] = itemid
        self.product_dict['shop_grade_url'] = self.shop_grade_url
        if brand != []:
            self.product_dict['brand'] = brand
        if para != []:
            self.product_dict['para'] = para
        specification = html.xpath('//table[@class="tm-tableAttr"]/tbody/tr/th/text()')
        stitle = html.xpath('//table[@class="tm-tableAttr"]/tbody/tr[@class="tm-tableAttrSub"]/th/text()')
        sanswer = html.xpath('//table[@class="tm-tableAttr"]/tbody/tr/td/text()')
        # self.shop_grade_url_list.append(shop_grade_url)
        if specification != []:
            self.sparadict = Compound(specification, sanswer, stitle)
            self.product_dict['specification'] = specification
        # Review keywords
        curl = 'https://rate.tmall.com/listTagClouds.htm?itemId=%s' % itemid  # API endpoint
        response, html = mainRquest(curl)
        # eval() needs JSON's true/false/null defined as Python names
        true = True
        false = False
        null = None
        comment_keyword = eval(response)
        self.product_dict['comment_keyword'] = comment_keyword
        yield self.product_dict

    def get_comment(self, start=1):
        comment_list = []
        itemid = self.product_dict['itemid']
        userid = self.product_dict['userid']
        for i in range(start, 100, 3):  # why 100? there are at most 99 pages of comments
            print('\r正在爬取第%s页' % (i), end='')
            url = 'https://rate.tmall.com/list_detail_rate.htm?itemId=%s&sellerId=%s&currentPage=%s' % (
                itemid, userid, i)
            response, html = mainRquest(url)
            comment_dict = HandleJson(response)  # turns the JSONP response into a dict
            last_page = comment_dict['rateDetail']['paginator']['lastPage']
            # Stop if there are no comments or this thread has passed the last page
            # (with a step of 3 a thread can jump over last_page itself)
            if last_page == 0 or i > last_page:
                break
            comment_list.append(comment_dict)
        return comment_list

    def save_comment(self, comment_list):
        itemid = self.product_dict['itemid']
        mkdir('shop/%s/评论信息' % self.title)  # create the comments folder
        if comment_list != []:
            with open('shop/%s/评论信息/%s.json' % (self.title, itemid), 'w') as f:
                json.dump(comment_list, f)
        self.product.append(self.product_dict)


def main():
    tmall = Tmall()
    for user_id in tmall.shop_search():
        for i in tmall.all_product(user_id):
            try:
                for e in tmall.get_product_imformation(user_id):
                    # Three threads: pages 1, 4, 7, ...; 2, 5, 8, ...; 3, 6, 9, ...
                    t1 = Combine(tmall.get_comment, 1)
                    t2 = Combine(tmall.get_comment, 2)
                    t3 = Combine(tmall.get_comment, 3)
                    t1.start()
                    t2.start()
                    t3.start()
                    t1.join()
                    t2.join()
                    t3.join()
                    # comment_list holds the comments
                    comment_list = t1.get_result() + t2.get_result() + t3.get_result()
                    tmall.save_comment(comment_list)
            except Exception:
                continue  # skip products that fail


if __name__ == '__main__':
    main()
```

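Combine code:

```python
from threading import Thread


class Combine(Thread):
    """A thread that runs func(x) and keeps its return value."""

    def __init__(self, func, x):
        Thread.__init__(self)
        self.func = func
        self.x = x
        self.comment_list = []  # default, so get_result() still works if func raises

    def run(self):
        self.comment_list = self.func(self.x)

    def get_result(self):
        return self.comment_list
```

HandleJson code:

```python
def HandleJson(data):
    # eval() needs JSON's true/false/null defined as Python names.
    # Removing "jsonp128" leaves "({...})", which eval reads as a dict.
    false = False
    null = None
    true = True
    result = eval(data.replace('\n', '').replace('jsonp128', ''))
    return result
```

5. Scraping the shop rating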

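So far we have only saved the comments; the product information is saved once all 60 products on the shop's page have been crawled. Here's a simplified example of what that means:

```python
for i in range(60):
    print(f'product {i}')
    print('after a series of operations, store the data')
else:
    print('save')  # runs once, after the loop has finished
```

Saving the data happens back in all_product(): look at the commented-out code at the end of that method. It's simple once you read it closely.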
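The `else` on a `for` loop runs only when the loop finishes without hitting `break`, which is why the save step at the end of all_product() runs once, after the last product. A quick check of that behavior with made-up values (`process` is a hypothetical helper):

```python
def process(items, stop_at=None):
    log = []
    for item in items:
        if item == stop_at:
            break  # leaving through break skips the else block
        log.append('processed %s' % item)
    else:
        log.append('saved')  # runs only if the loop was not broken
    return log


print(process([1, 2, 3]))
print(process([1, 2, 3], stop_at=2))
```

One consequence for the generator version: if the caller stops iterating all_product() early, neither the remaining products nor the `else` block run, so nothing is saved for that shop.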

self.Grade() is the method that scrapes the shop rating. Also, remember to create the shop folder.

from Elements import mainRquest

import re

import time

from functions import GetResponseHtml, GetAllProduct, mkdir,Compound,Combine,HandleJson

from slider import all_product_slider

import json

class Tmall():

def __init__(self):

self.search = input('请输入店铺名:')

self.url = 'https://list.tmall.com/search_product.htm?q=%s&type=p&style=w&spm=a220m.1000858.a2227oh.d100&xl=kindle_2&from=.list.pc_2_suggest' % (

self.search)

self.shop_dict = {} # 用于存储店铺的信息

# 1.0搜索店铺

def shop_search(self):

response, xml = mainRquest(self.url)

title = xml.xpath('//div[@class="shop-title"]/label/text()') # 店铺的名称

user_id = re.findall('user_id=(\d+)', response) # 店主的id

self.shop_dict['shop_data'] = {} # 创建一个字典用于存储店铺数据

for i in range(len(user_id)): # 这部分我在外面做个详细的说明

self.shop_dict['shop_data'][user_id[i]] = {'shop_title': title[i]}

self.title = title[i]

print(user_id[i], self.title)

yield user_id[i] # 测试时使用time.sleep(10),我们爬取店铺商品时,需要换成yield

# 2.0抓取店铺所有产品基本信息。

def all_product(self, userid):

print('2.0抓取店铺所有产品基本信息开始')

self.product = [] # 用于存储店铺内所有产品的信息

url = 'https://list.tmall.com/search_shopitem.htm?' \

'spm=a220m.1000862.0.0.48813ec6huCom4' \

'&s=0&style=sg&sort=s&user_id=%s&from=_1_&stype=search' % (userid)

GetAllProduct(url) # pyautogui自动打开构造的地址,

response, html = GetResponseHtml() # 数据读取

# 下面这些数据是根据我的需要提取的,如需其他数据自行写提取代码

self.price = re.findall('<em><b>¥</b>(.*?)</em>', response) # 价格

self.Msold = re.findall('<span>月成交<em>(.*?)笔</em></span>', response) # 月销量

self.product_title = html.xpath(

'//p[@class="productTitle"]/a/@title') # 产品名称,这报错一般是你的网速太慢,运行到这时网页还没加载好,导致fiddler没捕捉到,

self.product_url = html.xpath('//p[@class="productTitle"]/a/@href') # 产品链接

if self.price == []: # 说明出现了滑块验证

all_product_slider() # 这个主要用于过滑块验证,在获取一个店铺的

else:

assert (len(self.product_url) == len(self.Msold))

for i in range(len(self.product_url)):

self.product_dict = {}

self.product_dict['product_title'] = self.product_title[i]

self.product_dict['price'] = self.price[i]

self.product_dict['url'] = 'https:' + self.product_url[i]

if self.Msold != []:

self.product_dict['Msold'] = self.Msold[i]

yield self.product_dict # 用于测试,防止运行过快,后面会删掉。

else:

self.shop_dict['shop_data'][userid]['product'] = self.product

grade_list = self.Grade()

self.shop_dict['shop_data'][userid]['grade'] = grade_list

mkdir('shop/%s' % self.title)

with open('shop/%s/%s.json' % (self.title, self.title), 'w') as f:

json.dump(self.shop_dict['shop_data'][userid], f)

# 3.0 产品详情数据抓取

def get_product_imformation(self, userid):

print('\r3.0产品开始', end='')

# 构造url

url = self.product_dict['url']

itemid = re.findall('id=(\d+)', url)[0]

# 请求,下面的各种方法都是为了提取数据,这部分你完全可以自己写。

response, html = mainRquest(url)

self.shop_grade_url = 'https:' + html.xpath('//input[@id="dsr-ratelink"]/@value')[0] # 店铺评分链接

brand = re.findall('li id="J_attrBrandName" title=" (.*?)">', response) # 品牌

para = html.xpath('//ul[@id="J_AttrUL"]/li/text()') # 商品详情

# 将数据装入字典

self.product_dict['userid'] = userid

self.product_dict['itemid'] = itemid

self.product_dict['shop_grade_url'] = self.shop_grade_url

if brand != []:

self.product_dict['brand'] = brand

if para != []:

self.product_dict['para'] = para

specification = html.xpath('//table[@class="tm-tableAttr"]/tbody/tr/th/text()')

stitle = html.xpath('//table[@class="tm-tableAttr"]/tbody/tr[@class="tm-tableAttrSub"]/th/text()')

        sanswer = html.xpath('//table[@class="tm-tableAttr"]/tbody/tr/td/text()')
        # self.shop_grade_url_list.append(shop_grade_url)
        if specification != []:
            # Group the spec rows under their sub-headings
            self.sparadict = Compound(specification, sanswer, stitle)
            self.product_dict['specification'] = specification
        # Review keywords (tag cloud)
        curl = 'https://rate.tmall.com/listTagClouds.htm?itemId=%s' % itemid  # API endpoint
        response, html = mainRquest(curl)
        # eval() needs the JSON literals true/false/null to exist as Python names.
        # It also executes whatever the server returns; json.loads() is safer if the body is plain JSON.
        true = True
        false = False
        null = None
        comment_keyword = eval(response)
        self.product_dict['comment_keyword'] = comment_keyword
        yield self.product_dict

    def get_comment(self, start=1):
        # Fetch review pages start, start+3, start+6, ... (main() runs three of these in parallel)
        comment_list = []
        itemid = self.product_dict['itemid']
        userid = self.product_dict['userid']
        for i in range(start, 100, 3):  # why 100? Reviews are capped at 99 pages
            print('\rCrawling page %s' % (i), end='')
            url = 'https://rate.tmall.com/list_detail_rate.htm?itemId=%s&sellerId=%s&currentPage=%s' % (
                itemid, userid, i)
            response, html = mainRquest(url)
            comment_dict = HandleJson(response)  # parses the JSONP response into a dict
            last_page = comment_dict['rateDetail']['paginator']['lastPage']
            # Stop when there are no reviews or this thread has stepped past the last page;
            # the last page itself is kept
            if last_page == 0 or i > last_page:
                break
            comment_list.append(comment_dict)
        return comment_list

    def save_comment(self, comment_list):
        itemid = self.product_dict['itemid']
        mkdir('shop/%s/评论信息' % self.title)  # create the review folder (评论信息 = "review info")
        if comment_list != []:
            with open('shop/%s/评论信息/%s.json' % (self.title, itemid), 'w') as f:
                json.dump(comment_list, f)
        self.product.append(self.product_dict)

    def Grade(self):
        # Shop rating page: the three DSR scores and how they compare with the industry
        response, html = mainRquest(self.shop_grade_url)
        grade = re.findall(r'<em title="(.*?)分" class="count">.*?</em>分', response)
        # Comparison with the industry average
        compare = html.xpath(
            '//strong[contains(@class,"percent over") or contains(@class,"percent lower") or contains(@class,"percent normal")]/text()')
        comment_people = re.findall(r'共<span>(\d+)</span>人', response)  # number of people who rated each of the three services
        rate = re.findall(r'<em class="h">(.*?)%</em>', response)  # percentage for each star level
        d = re.findall(r'<strong class="(.*?)">.*?</strong>', response)  # whether compare is above, below, or level with the industry
        shopid = re.findall(r'"shopID": "(\d+)",', response)[0]  # needed below for the shop's last-30-days service stats
        userNumid = re.findall(r'user-rate-(.*?)\.htm', self.shop_grade_url)[0]  # likewise
        grade_list = []
        for i in range(3):
            Dict = {}
            Dict['grade'] = grade[i]
            Dict['total'] = comment_people[i]
            Dict['compare'] = compare[i] + ',' + d[i]
            Dict['star'] = rate[i * 5:(i + 1) * 5]  # five star-level percentages per service
            grade_list.append(Dict)
        print(grade_list)
        # Shop service stats for the last 30 days (JSONP response)
        url = 'https://rate.taobao.com/refund/refundIndex.htm?userNumId=%s&shopId=%s&businessType=0&callback=jsonp120' % (
            userNumid, shopid)
        response, html = mainRquest(url)
        true = True
        null = None
        false = False
        month_service = eval(re.findall(r'.*?&& jsonp120(.*?);', response)[0])
        grade_list.append(month_service)
        return grade_list


def main():
    tmall = Tmall()
    for user_id in tmall.shop_search():
        for i in tmall.all_product(user_id):
            try:
                for e in tmall.get_product_imformation(user_id):
                    # Three threads fetch interleaved review pages: 1,4,7,... / 2,5,8,... / 3,6,9,...
                    threads = [Combine(tmall.get_comment, start) for start in (1, 2, 3)]
                    for t in threads:
                        t.start()
                    for t in threads:
                        t.join()
                    # comment_list holds all of this product's reviews
                    comment_list = threads[0].get_result() + threads[1].get_result() + threads[2].get_result()
                    tmall.save_comment(comment_list)
            except Exception:
                continue  # skip a product whose pages could not be fetched or parsed


if __name__ == '__main__':
    main()
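main() spreads a product's reviews over three Combine threads that call get_comment with start = 1, 2 and 3, so each thread takes every third page. The sketch below shows the same interleaving with the standard library's ThreadPoolExecutor instead of a Thread subclass. It is only an illustration: pages_for, fetch_page and crawl_comments are hypothetical names, fetch_page returns the page number instead of calling mainRquest, and last_page is passed in up front, whereas the real crawler only learns it from paginator.lastPage in each response.

```python
from concurrent.futures import ThreadPoolExecutor


def pages_for(start, last_page, step=3, cap=99):
    """Pages the worker beginning at `start` requests: start, start+step, ... up to min(last_page, cap)."""
    return list(range(start, min(last_page, cap) + 1, step))


def fetch_page(page):
    # Hypothetical stand-in for mainRquest + HandleJson on one review page
    return {'page': page}


def crawl_comments(last_page, workers=3):
    """Fetch pages 1..last_page with `workers` threads, each taking every `workers`-th page."""
    def worker(start):
        return [fetch_page(p) for p in pages_for(start, last_page, step=workers)]

    with ThreadPoolExecutor(max_workers=workers) as pool:
        chunks = list(pool.map(worker, range(1, workers + 1)))
    # Each chunk is interleaved (1,4,7 / 2,5,8 / ...), so sort to restore page order
    return sorted((item for chunk in chunks for item in chunk), key=lambda d: d['page'])


print(pages_for(1, 10))  # [1, 4, 7, 10]
print(pages_for(2, 10))  # [2, 5, 8]
print([d['page'] for d in crawl_comments(10)])  # [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
```

Two differences from the Combine version are worth noting: pool.map re-raises an exception from a worker when its result is collected, so a failed page is not silently dropped, and the final sort puts the pages back in order, whereas t1 + t2 + t3 concatenates them thread by thread.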

Code for each module:

Elements.py

import json
import logging
import random
import time

import requests
from lxml import etree

logging.captureWarnings(True)  # route warnings to logging so the SSL warnings are not printed


class request_url(object):
    """Request a page and deal with anti-scraping measures."""

    def __init__(self, url):
        self.url = url

    def ip(self):
        # ip/ip.json maps each proxy IP to its port
        with open('ip/ip.json', 'r') as f:
            ip_dict = json.load(f)  # load the proxy pool
        ip = random.choice(list(ip_dict.keys()))
        proxy = f"http://{ip}:{ip_dict[ip]}"
        # requests picks the proxy by the URL's scheme; every URL here is https://,
        # so without an 'https' entry the proxy would never actually be used
        self.proxy = {'http': proxy, 'https': proxy}

    def construct_headers(self):
        # Different URLs use different cookies; my earlier mix-up of mobile and PC headers is why this is so messy.
        if 'https://detail.tmall.com/' in self.url:
            with open('cookie/product_cookie.txt', 'r') as f:
                cookie = f.readline()
            agent = [
                'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/86.0.4240.111 '
                'Safari/537.36', ]
        elif 'rate.tmall.com' in self.url:
            with open('cookie/comment_cookie.txt', 'r') as f:
                cookie = f.readline()
            agent = [
                'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/86.0.4240.111 '
                'Safari/537.36', ]
        else:
            with open('cookie/search_cookie.txt', 'r') as f:
                cookie = f.readline()
            # Mobile user agents; you can find plenty of them online.
            agent = [
                'Mozilla/5.0 (iPhone; CPU iPhone OS 13_2_3 like Mac OS X) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/13.0.3 Mobile/15E148 Safari/604.1', ]
        self.headers = {
            'user-agent': random.choice(agent),  # pick a user agent at random
            'cookie': cookie.strip(),  # a trailing newline would make the header invalid
            'referer': 'https://detail.tmall.com/item.htm?spm=a220m.1000858.1000725.1.48c37111IiH0Ml&id=578004651332&skuId=4180113216736&areaId=610100&user_id=2099020602&cat_id=50094904&is_b=1&rn=246062bfaa1943ec6b72afcd1ff3ded8',
        }

    def request(self):
        self.ip()
        self.construct_headers()
        # verify=False skips certificate checks; the timeout keeps a dead proxy from hanging the crawler
        response = requests.get(self.url, headers=self.headers, verify=False,
                                proxies=self.proxy, timeout=10)
        xml = etree.HTML(response.text)  # parse into an element tree so XPath can be used
        return response.text, xml

    def punish(self):
        # In many of my test versions this handled the slider CAPTCHA, but it later turned out to be unnecessary.
        pass


def mainRquest(url):
    # Try up to three times, like reconnecting after the network drops
    for i in range(3):
        try:
            RU = request_url(url)
            response, xml = RU.request()
        except Exception:
            if i == 2:
                raise  # every attempt failed: re-raise instead of returning unbound variables
            time.sleep(60)
        else:
            break
    return response, xml
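mainRquest retries a failed request up to three times with a 60-second pause. The same pattern can be written once as a generic helper that works for any zero-argument callable and makes the failure path explicit: after the last attempt it re-raises the last exception. with_retries is a hypothetical name, not part of the project; the default of 3 attempts and 60 s mirrors mainRquest, and the sleep function is a parameter only so the behaviour can be checked without actually waiting.

```python
import time


def with_retries(func, attempts=3, delay=60.0, sleep=time.sleep):
    """Call func() up to `attempts` times (attempts >= 1), waiting `delay` seconds between failures.

    Hypothetical generic version of mainRquest's retry loop. Returns the
    first successful result; if every attempt fails, re-raises the last
    exception instead of falling through with nothing to return.
    """
    last_error = None
    for attempt in range(1, attempts + 1):
        try:
            return func()
        except Exception as exc:  # broad catch, as in mainRquest
            last_error = exc
            if attempt < attempts:
                sleep(delay)  # no pointless wait after the final attempt
    raise last_error


# Hypothetical usage with Elements.py:
#     response, xml = with_retries(lambda: request_url(url).request())
```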

functions.py

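# url = 'https://list.tmall.com/search_shopitem.htm?spm=a220m.1000862.0.0.48813ec6huCom4&s=0&style=sg&sort=s&user_id=2099020602&from=_1_&stype=search#grid-column'

from threading import Thread


def GetAllProduct(url):
    # Open `url` in Chrome through simulated mouse/keyboard input so Fiddler captures the response
    import time

    import pyautogui

    # Start (an already configured) Fiddler before running this program
    time.sleep(2)  # the program needs a moment to start; wait 2 seconds to avoid errors
    x, y = 710, 1070  # where my Chrome icon sits on screen
    pyautogui.moveTo(x, y)
    time.sleep(1)
    pyautogui.click()  # left click
    time.sleep(1)
    x, y = 700, 70  # Chrome's address bar; this differs slightly per computer, so test it yourself
    pyautogui.moveTo(x + 100, y)
    pyautogui.click()
    time.sleep(1)
    pyautogui.write(url, interval=0.01)  # type the URL into the address bar, 0.01 s per character
    pyautogui.press('enter')  # press Enter
    time.sleep(5)  # adjust to your network speed
    # Switch back to the window running this program
    pyautogui.keyDown('alt')
    pyautogui.press('tab')
    pyautogui.keyUp('alt')


def GetResponseHtml():
    # Read and process the response that Fiddler saved
    from lxml import etree

    def check_charset(file_path):
        # Detect the file's encoding to avoid decoding errors (I lost several hours to this)
        import chardet
        with open(file_path, "rb") as f:
            data = f.read(4)
        charset = chardet.detect(data)['encoding']
        return charset

    your_path = r'D:\编程工具大全\独家汉化Fiddler5.0.20182\Fiddler5.0.20182\response.txt'  # path to response.txt
    charset = check_charset(your_path)
    with open(your_path, 'r+', encoding=charset) as f:
        response = f.read()
        html = etree.HTML(response)  # parse into an HTML tree
        # Back up the captured response.txt (optional)
        with open('response/response.txt', 'a+', encoding=charset) as q:
            q.write(response)
        f.truncate(0)  # empty response.txt so it does not interfere with the next capture
    return response, html


def slider():
    import time

    import pyautogui

    # Start (an already configured) Fiddler before running this program
    time.sleep(2)  # the program needs a moment to start; wait 2 seconds to avoid errors
    x, y = 710, 1070  # where my Chrome icon sits on screen
    pyautogui.moveTo(x, y)
    time.sleep(1)
    pyautogui.click()  # left click
    time.sleep(1)
    x, y = 1000, 540
    pyautogui.moveTo(x, y)
    pyautogui.scroll(-180)  # scroll down so the slider is visible
    time.sleep(1)
    x, y = 750, 650  # slider handle
    pyautogui.moveTo(x, y)
    pyautogui.dragTo(x + 450, y, 2, button='left')  # drag 450 px to the right over 2 s


def Replace(l, rd='', new=''):
    # Replace `rd` with `new` in every string of list `l` (in place) and return the list
    for i in range(len(l)):
        l[i] = l[i].replace(rd, new)
    return l


def Compound(specification, sanswer, stitle):
    """Group spec-table rows under their sub-headings: {sub-heading: {parameter: value}}.

    specification: every <th> text, sub-headings included (modified in place)
    sanswer: the <td> values of the ordinary rows
    stitle: the sub-heading <th> texts
    """
    para_dict = {}
    sanswer = Replace(sanswer, '\xa0')  # remove non-breaking spaces
    Last, Last_index = None, 0
    for q in stitle:
        index = specification.index(q)
        if Last is not None:
            para_dict[Last] = dict(zip(specification[Last_index:index], sanswer[Last_index:index]))
        specification.remove(q)  # sub-headings have no <td>, so drop them to keep both lists aligned
        Last = q
        Last_index = index
    if Last is not None:
        # The last group runs to the end of the table
        para_dict[Last] = dict(zip(specification[Last_index:], sanswer[Last_index:]))
    return para_dict


def ReadHeaders(CookieName):
    # Save the newest cookie from Fiddler's headers.txt dump to cookie/<CookieName>.txt
    with open(r'D:\编程工具大全\独家汉化Fiddler5.0.20182\Fiddler5.0.20182\headers.txt', 'r', encoding='utf16') as f:
        headers = f.readlines()
    headers.reverse()  # search from the newest entry backwards
    for i in headers:
        if 'cookie' in i:
            i = i.replace('\n', '').replace('cookie: ', '').replace('Cookie: ', '')
            print(i)
            with open(r'cookie\%s.txt' % CookieName, 'w') as f:
                f.write(i)
            print('%s cookie written!' % (CookieName))
            break


def HandleJson(data):
    # Turn the JSONP text 'jsonp128({...})' into a dict. eval() needs the JSON literals
    # true/false/null defined as Python names (and it runs whatever the server sends).
    false = False
    null = None
    true = True
    data_dict = eval(data.replace('\n', '').replace('jsonp128', ''))
    return data_dict


def mkdir(path):
    # Create the folder (and any missing parents) if it does not exist yet
    import os
    os.makedirs(path, exist_ok=True)


class Combine(Thread):
    """Thread that runs func(x) and keeps its return value."""

    def __init__(self, func, x):
        Thread.__init__(self)
        self.func = func
        self.x = x
        self.comment_list = []  # stays empty if func raises

    def run(self):
        self.comment_list = self.func(self.x)

    def get_result(self):
        return self.comment_list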
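HandleJson, and the eval() calls in get_product_imformation and Grade, turn the responses into Python objects by evaluating them as code, with true/false/null aliased so the literals resolve. That means eval() will execute anything the server sends back. Assuming the payload inside the callback is ordinary JSON (an assumption I have not checked against every endpoint), a hypothetical parse_jsonp helper can cut the payload out of the `callback(...)` wrapper and hand it to json.loads, which maps true/false/null to True/False/None by itself.

```python
import json


def parse_jsonp(text, callback=None):
    """Hypothetical replacement for HandleJson: parse 'jsonp128({...})' (or plain JSON) with json.loads.

    callback: the wrapper's function name, e.g. 'jsonp128'. Without it, the
    payload is assumed to start right after the first '(' in the text.
    """
    body = text.strip()
    if not body.startswith(('{', '[')):  # wrapped: keep only what is inside the parentheses
        marker = callback + '(' if callback else '('
        start = body.index(marker) + len(marker)
        end = body.rindex(')')
        body = body[start:end]
    # strict=False tolerates raw newlines inside strings, which HandleJson removes by hand
    return json.loads(body, strict=False)


sample = 'jsonp128({"rateDetail": {"paginator": {"lastPage": 5}}, "ok": true, "tip": null})'
data = parse_jsonp(sample, 'jsonp128')
print(data['rateDetail']['paginator']['lastPage'], data['ok'], data['tip'])  # 5 True None
```

Passing the callback name matters for responses like the refund endpoint, where the text before the call may itself mention the callback; searching for `jsonp120(` skips over a bare `jsonp120` reference.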

slider.py

"""
I never figured out Taobao's slider CAPTCHA: even dragging it by hand
sometimes fails, so dragging it from code will not succeed 100% of the time.
I do have workarounds, though:
1. We do not crawl the all-product page very often, and since we visit it
   through pyautogui-simulated clicks, the slider CAPTCHA almost never appears.
2. The slider code only lowers the failure rate; if this part is too hard,
   you can skip it.
"""
import time

import pyautogui


def open_chrome():
    # Open my Chrome browser, which is pinned to the taskbar
    time.sleep(2)
    x, y = 700, 1070
    pyautogui.moveTo(x, y)
    time.sleep(1)
    pyautogui.click()


def open_url(url):
    x, y = 700, 70  # Chrome's address bar
    pyautogui.moveTo(x + 100, y)
    pyautogui.click()
    time.sleep(1)
    pyautogui.write(url, interval=0.01)  # type the URL into Chrome's address bar
    pyautogui.press('enter')
    time.sleep(4)


def scroll2():
    """Scrolling for the review page."""
    time.sleep(2)
    pyautogui.moveTo(1000, 500)
    # Scroll down
    pyautogui.scroll(-2000)
    time.sleep(1)
    # Click the reviews tab
    x, y = 600, 150
    pyautogui.moveTo(x, y)
    pyautogui.click()
    time.sleep(1)
    x, y = 650, 150
    pyautogui.moveTo(x, y)
    pyautogui.click()


def slider3():
    # Pass the review-page slider CAPTCHA
    time.sleep(3)
    x, y = 1000, 540
    pyautogui.moveTo(x, y)
    pyautogui.scroll(-180)
    time.sleep(1)
    # pyautogui.moveTo(750, 680)
    # pyautogui.dragTo(x + 450, y,2, button='left')
    # time.sleep(1)
    x, y = 770, 630  # slider handle
    pyautogui.moveTo(x, y)
    pyautogui.dragTo(x + 450, y, 1, button='left')  # drag 450 px to the right over 1 s


def switch_window():
    # Alt+Tab back to the window running this program
    pyautogui.keyDown('alt')
    pyautogui.press('tab')
    pyautogui.keyUp('alt')


def read_heaeders(Type):
    # Fiddler header-dump file and cookie file for each kind of page
    files = {'comment': ['comment_headers', 'comment_cookie'],
             'product': ['product_headers', 'product_cookie'],
             'all_product': ['all_product_headers', 'all_product_cookie'],
             'search': ['search_headers', 'search_cookie']}
    with open(r'D:\编程工具大全\独家汉化Fiddler5.0.20182\Fiddler5.0.20182\%s.txt' % (files[Type][0]), 'r', encoding='utf16') as f:
        headers = f.readlines()
    headers.reverse()  # newest entries first
    for i in headers:
        if 'cookie' in i:
            i = i.replace('\n', '').replace('cookie: ', '').replace('Cookie: ', '')
            print(i)
            with open(r'cookie\%s.txt' % (files[Type][1]), 'w') as f:
                f.write(i)
            print('%s cookie written!' % (Type))
            break


def CommentSlider():
    # Open a product page, scroll to the reviews, drag the slider, then refresh the comment cookie
    print('CommentSlider!!!')
    open_chrome()
    url = 'https://detail.tmall.com/item.htm?spm=a1z10.11404-b-s.0.0.c9634d8cQ8HAvV&id=604446170894'
    open_url(url)
    scroll2()
    slider3()
    switch_window()
    read_heaeders('comment')


def grade_slider():
    pass


def slider1():
    # Pass the review-page slider CAPTCHA
    time.sleep(3)
    # pyautogui.moveTo(750, 680)
    # pyautogui.dragTo(x + 450, y,2, button='left')
    # time.sleep(1)
    x, y = 1100, 630
    pyautogui.moveTo(x, y)
    pyautogui.dragTo(x + 450, y, 1, button='left')


def slider2():
    # Pass the slider CAPTCHA: drag, reload the page, and drag again
    time.sleep(3)
    x, y = 1100, 610
    pyautogui.moveTo(x, y)
    pyautogui.dragTo(x + 450, y, 1, button='left')
    flush()
    time.sleep(3)
    x, y = 1100, 610
    pyautogui.moveTo(x, y)
    pyautogui.dragTo(x + 450, y, 1, button='left')


def flush():
    # Reload the page with Ctrl+R
    time.sleep(1)
    pyautogui.keyDown('ctrl')
    pyautogui.press('r')
    pyautogui.keyUp('ctrl')
    time.sleep(4)


def all_product_slider():
    # Think about it: when the slider CAPTCHA appears, the packets Fiddler captures
    # do not contain the data you want, so you can use that to trigger the slider
    # handling. I'll leave it at that.
    open_chrome()
    # url = 'https://list.tmall.com/search_shopitem.htm?spm=a220m.1000862.0.0.48813ec6huCom4&s=0&style=sg&sort=s&user_id=666279096&from=_1_&stype=search'
    # open_url(url)
    slider2()
    flush()
    slider2()
    switch_window()
    read_heaeders('all_product')
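ReadHeaders in functions.py and read_heaeders above both pull the newest cookie out of the header dump that Fiddler writes, by reversing the lines and taking the first one containing 'cookie'. The sketch below does the same job by matching the header name exactly and case-insensitively, so a Set-Cookie line or a URL that happens to contain the word is not picked up. It assumes the dump is UTF-16 text with one `Name: value` header per line, which is what the encoding='utf16' in the original suggests; latest_cookie and save_cookie are hypothetical names.

```python
from pathlib import Path


def latest_cookie(header_lines):
    """Hypothetical helper: value of the last 'Cookie:' header in `header_lines`, or None.

    Header names are case-insensitive ('Cookie:' over HTTP/1.1, 'cookie:' in
    HTTP/2 tools), so the name is compared in lower case.
    """
    for line in reversed(header_lines):  # the newest capture is at the end of the dump
        name, sep, value = line.partition(':')
        if sep and name.strip().lower() == 'cookie':
            return value.strip()
    return None


def save_cookie(dump_path, cookie_name, cookie_dir='cookie', encoding='utf-16'):
    """Hypothetical helper: copy the newest cookie from a header dump into cookie_dir/<cookie_name>.txt."""
    lines = Path(dump_path).read_text(encoding=encoding).splitlines()
    cookie = latest_cookie(lines)
    if cookie is None:
        raise ValueError('no Cookie header found in %s' % dump_path)
    out = Path(cookie_dir) / ('%s.txt' % cookie_name)
    out.parent.mkdir(parents=True, exist_ok=True)
    out.write_text(cookie)  # one line, no trailing newline, as construct_headers reads it
    return out
```

Only the first ':' splits the line, so cookie values that contain colons survive intact.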

Tmall.py

import json
import re

from Elements import mainRquest
from functions import GetResponseHtml, GetAllProduct, mkdir, Compound, Combine, HandleJson
from slider import all_product_slider


class Tmall():

    def __init__(self):
        self.search = input('Enter the shop name: ')
        self.url = 'https://list.tmall.com/search_product.htm?q=%s&type=p&style=w&spm=a220m.1000858.a2227oh.d100&xl=kindle_2&from=.list.pc_2_suggest' % (
            self.search)
        self.shop_dict = {}  # stores the shop information

    # 1.0 Search for the shop
    def shop_search(self):
        response, xml = mainRquest(self.url)
        title = xml.xpath('//div[@class="shop-title"]/label/text()')  # shop names
        user_id = re.findall(r'user_id=(\d+)', response)  # shop owners' ids
        self.shop_dict['shop_data'] = {}  # dict for the shop data
        for i in range(len(user_id)):  # I explain this part in detail in the text
            self.shop_dict['shop_data'][user_id[i]] = {'shop_title': title[i]}
            self.title = title[i]
            print(user_id[i], self.title)
            yield user_id[i]  # I used time.sleep(10) here while testing; crawling the shop's products needs yield

    # 2.0 Scrape the basic info of every product in the shop
    def all_product(self, userid):
        print('2.0 Scraping basic info for all products in the shop')
        self.product = []  # info for every product in the shop
        url = 'https://list.tmall.com/search_shopitem.htm?' \
              'spm=a220m.1000862.0.0.48813ec6huCom4' \
              '&s=0&style=sg&sort=s&user_id=%s&from=_1_&stype=search' % (userid)
        GetAllProduct(url)  # pyautogui opens the constructed URL in Chrome
        response, html = GetResponseHtml()  # read the response Fiddler captured
        # These fields are the ones I needed; write your own extraction code for anything else
        self.price = re.findall('<em><b>¥</b>(.*?)</em>', response)  # price
        self.Msold = re.findall('<span>月成交<em>(.*?)笔</em></span>', response)  # monthly sales
        # Product names; an error here usually means the network was slow and the page
        # had not finished loading when Fiddler captured it
        self.product_title = html.xpath('//p[@class="productTitle"]/a/@title')
        self.product_url = html.xpath('//p[@class="productTitle"]/a/@href')  # product links
        if self.price == []:  # no prices means the slider CAPTCHA appeared
            all_product_slider()  # mainly for getting past the slider CAPTCHA
        else:
            assert (len(self.product_url) == len(self.Msold))
            for i in range(len(self.product_url)):
                self.product_dict = {}
                self.product_dict['product_title'] = self.product_title[i]
                self.product_dict['price'] = self.price[i]
                self.product_dict['url'] = 'https:' + self.product_url[i]
                if self.Msold != []:
                    self.product_dict['Msold'] = self.Msold[i]
                yield self.product_dict  # for testing, to keep things from running too fast; will be removed later
            else:
                # The loop finished: save the shop's products, ratings and service stats
                self.shop_dict['shop_data'][userid]['product'] = self.product
                grade_list = self.Grade()
                self.shop_dict['shop_data'][userid]['grade'] = grade_list
                mkdir('shop/%s' % self.title)
                with open('shop/%s/%s.json' % (self.title, self.title), 'w') as f:
                    json.dump(self.shop_dict['shop_data'][userid], f)

    # 3.0 Scrape product details
    def get_product_imformation(self, userid):
        print('\r3.0 Product details started', end='')
        # Build the URL
        url = self.product_dict['url']
        itemid = re.findall(r'id=(\d+)', url)[0]
        # Request the page; everything below just extracts data, so you can write this part your own way
        response, html = mainRquest(url)
        self.shop_grade_url = 'https:' + html.xpath('//input[@id="dsr-ratelink"]/@value')[0]  # shop rating link
        brand = re.findall('li id="J_attrBrandName" title=" (.*?)">', response)  # brand
        para = html.xpath('//ul[@id="J_AttrUL"]/li/text()')  # product details
        # Put the data into the dict
        self.product_dict['userid'] = userid
        self.product_dict['itemid'] = itemid
        self.product_dict['shop_grade_url'] = self.shop_grade_url
        if brand != []:
            self.product_dict['brand'] = brand
        if para != []:
            self.product_dict['para'] = para
        # Spec table: every <th>, the sub-heading <th>s, and the <td> values
        specification = html.xpath('//table[@class="tm-tableAttr"]/tbody/tr/th/text()')
        stitle = html.xpath('//table[@class="tm-tableAttr"]/tbody/tr[@class="tm-tableAttrSub"]/th/text()')
        sanswer = html.xpath('//table[@class="tm-tableAttr"]/tbody/tr/td/text()')
        # self.shop_grade_url_list.append(shop_grade_url)
        if specification != []:
            # Group the spec rows under their sub-headings
            self.sparadict = Compound(specification, sanswer, stitle)
            self.product_dict['specification'] = specification
        # Review keywords (tag cloud)
        curl = 'https://rate.tmall.com/listTagClouds.htm?itemId=%s' % itemid  # API endpoint
        response, html = mainRquest(curl)
        # eval() needs the JSON literals true/false/null to exist as Python names.
        # It also executes whatever the server returns; json.loads() is safer if the body is plain JSON.
        true = True
        false = False
        null = None
        comment_keyword = eval(response)
        self.product_dict['comment_keyword'] = comment_keyword
        yield self.product_dict

    def get_comment(self, start=1):
        # Fetch review pages start, start+3, start+6, ... (main() runs three of these in parallel)
        comment_list = []
        itemid = self.product_dict['itemid']
        userid = self.product_dict['userid']
        for i in range(start, 100, 3):  # why 100? Reviews are capped at 99 pages
            print('\rCrawling page %s' % (i), end='')
            url = 'https://rate.tmall.com/list_detail_rate.htm?itemId=%s&sellerId=%s&currentPage=%s' % (
                itemid, userid, i)
            response, html = mainRquest(url)
            comment_dict = HandleJson(response)  # parses the JSONP response into a dict
            last_page = comment_dict['rateDetail']['paginator']['lastPage']
            # Stop when there are no reviews or this thread has stepped past the last page;
            # the last page itself is kept
            if last_page == 0 or i > last_page:
                break
            comment_list.append(comment_dict)
        return comment_list

    def save_comment(self, comment_list):
        itemid = self.product_dict['itemid']
        mkdir('shop/%s/评论信息' % self.title)  # create the review folder (评论信息 = "review info")
        if comment_list != []:
            with open('shop/%s/评论信息/%s.json' % (self.title, itemid), 'w') as f:
                json.dump(comment_list, f)
        self.product.append(self.product_dict)

    def Grade(self):
        # Shop rating page: the three DSR scores and how they compare with the industry
        response, html = mainRquest(self.shop_grade_url)
        grade = re.findall(r'<em title="(.*?)分" class="count">.*?</em>分', response)
        # Comparison with the industry average
        compare = html.xpath(
            '//strong[contains(@class,"percent over") or contains(@class,"percent lower") or contains(@class,"percent normal")]/text()')
        comment_people = re.findall(r'共<span>(\d+)</span>人', response)  # number of people who rated each of the three services
        rate = re.findall(r'<em class="h">(.*?)%</em>', response)  # percentage for each star level
        d = re.findall(r'<strong class="(.*?)">.*?</strong>', response)  # whether compare is above, below, or level with the industry
        shopid = re.findall(r'"shopID": "(\d+)",', response)[0]  # needed below for the shop's last-30-days service stats
        userNumid = re.findall(r'user-rate-(.*?)\.htm', self.shop_grade_url)[0]  # likewise
        grade_list = []
        for i in range(3):
            Dict = {}
            Dict['grade'] = grade[i]
            Dict['total'] = comment_people[i]
            Dict['compare'] = compare[i] + ',' + d[i]
            Dict['star'] = rate[i * 5:(i + 1) * 5]  # five star-level percentages per service
            grade_list.append(Dict)
        print(grade_list)
        # Shop service stats for the last 30 days (JSONP response)
        url = 'https://rate.taobao.com/refund/refundIndex.htm?userNumId=%s&shopId=%s&businessType=0&callback=jsonp120' % (
            userNumid, shopid)
        response, html = mainRquest(url)
        true = True
        null = None
        false = False
        month_service = eval(re.findall(r'.*?&& jsonp120(.*?);', response)[0])
        grade_list.append(month_service)
        return grade_list


def main():
    tmall = Tmall()
    for user_id in tmall.shop_search():
        for i in tmall.all_product(user_id):
            try:
                for e in tmall.get_product_imformation(user_id):
                    # Three threads fetch interleaved review pages: 1,4,7,... / 2,5,8,... / 3,6,9,...
                    threads = [Combine(tmall.get_comment, start) for start in (1, 2, 3)]
                    for t in threads:
                        t.start()
                    for t in threads:
                        t.join()
                    # comment_list holds all of this product's reviews
                    comment_list = threads[0].get_result() + threads[1].get_result() + threads[2].get_result()
                    tmall.save_comment(comment_list)
            except Exception:
                continue  # skip a product whose pages could not be fetched or parsed


if __name__ == '__main__':
    main()
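all_product ends with a for ... else: the else block, which stores the product list, calls Grade and writes the shop JSON, runs only when the loop finishes without a break. Because all_product is a generator, that means it runs only after main() has pulled the last product out of it; if the outer loop stops early, the shop file is never written. The toy generator below (products_then_save is hypothetical, with a list standing in for the JSON file) shows that ordering.

```python
def products_then_save(items, saved):
    """Hypothetical stand-in for all_product: yield each item, then 'save' once the loop is done."""
    for item in items:
        yield item
    else:
        # Runs only after the loop ends without `break`, i.e. after the consumer takes the last item
        saved.append('shop saved')


saved = []
gen = products_then_save(['a', 'b'], saved)
print(next(gen), saved)  # a []
print(next(gen), saved)  # b []
print(list(gen), saved)  # [] ['shop saved']
```

Since the loop in all_product has no break, putting the save code directly after the loop would behave the same; the else mostly signals that saving belongs to a completed pass over the products.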
