Configuring a Redis Cluster in Docker

First, an overview of several ways to partition data across nodes

1. Hash-modulo partitioning

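200 million records means 200 million key-value pairs. A single machine cannot hold that, so the data has to be distributed across several machines. Suppose 3 machines form a cluster; every read or write is routed with the formula

hash(key) % N (N = number of machines), and the result decides which node the data maps to.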

Pros

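Simple and effective: plan the number of nodes from the expected data volume (for example 3, 8, or 10 machines) and the cluster can support the data for some time. The hash sends a fixed subset of requests to the same server, so each server handles (and keeps the state for) a fixed share of the requests, which gives both load balancing and divide-and-conquer.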

Cons

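Once the nodes are planned, scaling out or in is painful. Any change in the number of nodes changes the mapping, which then has to be recomputed. That is fine while the server count stays fixed, but with elastic scaling or a machine failure the modulo formula itself changes:

hash(key) % 3 becomes hash(key) % ?. The remainders change dramatically, so which server a key lands on becomes unpredictable. If a single Redis machine goes down, the change in the machine count forces a reshuffle of all the data.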
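To make the reshuffle concrete, here is a minimal Python sketch (not part of the original setup) that counts how many keys change nodes when N changes. It assumes MD5 as the stable hash and uses made-up key names. A key stays put only when hash % 3 equals hash % 4, i.e. when hash % 12 is 0, 1, or 2, so roughly three quarters of the keys should move when going from 3 to 4 nodes, and about two thirds when dropping from 3 to 2.

```python
import hashlib


def node_for(key: str, n: int) -> int:
    """Hash-modulo placement: node index = hash(key) % n."""
    # MD5 is only an assumed stand-in for "a stable hash"; Python's built-in
    # hash() is randomized per process for strings, so it is avoided here.
    digest = hashlib.md5(key.encode()).digest()
    return int.from_bytes(digest[:8], "big") % n


def moved_fraction(num_keys: int, old_n: int, new_n: int) -> float:
    """Fraction of keys that map to a different node after old_n -> new_n."""
    keys = [f"key:{i}" for i in range(num_keys)]  # hypothetical key names
    moved = sum(node_for(k, old_n) != node_for(k, new_n) for k in keys)
    return moved / num_keys


if __name__ == "__main__":
    print(f"3 -> 4 nodes: {moved_fraction(10_000, 3, 4):.1%} of keys move")
    print(f"3 -> 2 nodes: {moved_fraction(10_000, 3, 2):.1%} of keys move")
```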

2. Consistent-hashing partitioning

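Goal: migrate as little data as possible when the number of nodes changes.

All storage nodes are placed on a hash ring whose two ends are joined; each key, after hashing, walks clockwise to the nearest storage node and is stored there.

When a node joins or leaves, only the node that follows it clockwise on the ring is affected.

What it is for: when the number of servers changes, keep the impact on the client-to-server mapping as small as possible.

How it works: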

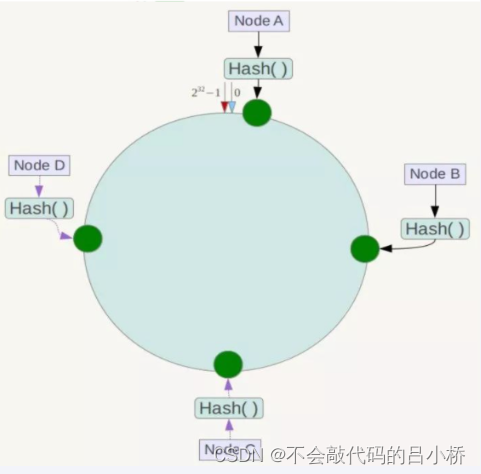

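Consistent hash ring: consistent hashing relies on a hash function, and all the values it can produce form a complete set called the hash space, [0, 2^32-1]. This space is linear, but the algorithm joins its two ends with suitable logic (0 = 2^32), so it logically forms a ring.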

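Node mapping: map each node in the cluster to a position on the ring. Hash every server, using its IP address or host name as the key, so that each machine gets a fixed position on the ring. Suppose there are 4 nodes, Node A, B, C, and D; after hashing their IP addresses (hash(ip)), their positions on the ring are as follows: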

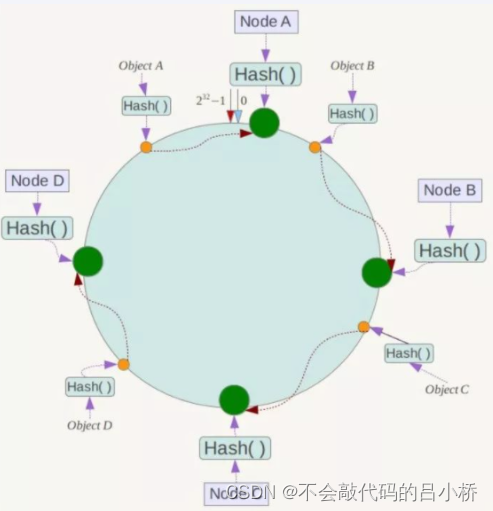

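Rule for placing a key on a server

To store a key-value pair, first compute hash(key) with the same hash function to find where the data sits on the ring, then "walk" clockwise from that position. The first server encountered is the one the key belongs to, and the pair is stored on that node.

For example, suppose there are four data objects, Object A, B, C, and D. After hashing, their positions on the ring are such that, by consistent hashing, A is located on Node A, B on Node B, C on Node C, and D on Node D.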

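Pros

Adding or removing a node only affects its clockwise neighbor on the ring; other nodes are not affected.

Fault tolerance: suppose Node C goes down. Objects A, B, and D are unaffected; only object C is relocated, to Node D. In general, if a server becomes unavailable, only the data between it and the previous server on the ring (the first server reached walking counterclockwise) is affected; nothing else changes. Simply put, when C fails, only the data between B and C is affected, and it moves to D.

Scalability: as data grows, a new node, Node X, is added between A and B. Only the data between A and X is affected (it previously belonged to B); moving it onto X is enough, and there is no full reshuffle as with hash modulo.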
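A minimal Python sketch of the ring described above, assuming MD5 truncated to 32 bits as the hash (so positions fall in [0, 2^32-1]) and made-up node names, so the positions will not match the figure. It implements the clockwise lookup and shows both properties: removing a node only moves the keys that lived on it, and adding a node only moves keys onto the new node.

```python
import bisect
import hashlib
from typing import Dict, List


def ring_hash(s: str) -> int:
    """Position on the ring in [0, 2**32 - 1] (assumed: first 4 bytes of MD5)."""
    return int.from_bytes(hashlib.md5(s.encode()).digest()[:4], "big")


class ConsistentHashRing:
    def __init__(self, nodes=()):
        self._positions: List[int] = []   # sorted node positions on the ring
        self._owner: Dict[int, str] = {}  # position -> node name
        for node in nodes:
            self.add(node)

    def add(self, node: str) -> None:
        pos = ring_hash(node)
        bisect.insort(self._positions, pos)
        self._owner[pos] = node

    def remove(self, node: str) -> None:
        pos = ring_hash(node)
        self._positions.remove(pos)
        del self._owner[pos]

    def locate(self, key: str) -> str:
        """Walk clockwise from hash(key); the first node reached stores the key."""
        i = bisect.bisect_left(self._positions, ring_hash(key))
        if i == len(self._positions):  # past the last node: wrap around, 2**32 == 0
            i = 0
        return self._owner[self._positions[i]]


if __name__ == "__main__":
    ring = ConsistentHashRing(["NodeA", "NodeB", "NodeC", "NodeD"])
    keys = [f"obj:{i}" for i in range(1000)]
    before = {k: ring.locate(k) for k in keys}

    ring.remove("NodeC")  # simulate NodeC going down
    moved = [k for k in keys if ring.locate(k) != before[k]]
    print(len(moved), "keys moved; all were on NodeC:",
          all(before[k] == "NodeC" for k in moved))

    ring.add("NodeC")
    ring.add("NodeX")     # scale out with a new node
    moved = [k for k in keys if ring.locate(k) != before[k]]
    print(len(moved), "keys moved; all went to NodeX:",
          all(ring.locate(k) == "NodeX" for k in moved))
```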

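Cons

Data distribution depends on where the nodes land. Because the nodes are not spread evenly around the ring, the data is not distributed evenly either.

Data skew on the hash ring

With too few nodes, consistent hashing easily suffers from data skew caused by uneven node placement: most of the cached objects end up on a single server.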
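To see the skew, a minimal Python sketch can measure how much of the ring each node owns; with uniformly hashed keys, that share is roughly the share of the data the node receives. It assumes MD5-based positions and hypothetical IP addresses; the exact percentages depend entirely on those assumptions, but with only 3 nodes they are usually far from one third each.

```python
import hashlib
from typing import Dict, Iterable

RING_SIZE = 2**32


def ring_hash(s: str) -> int:
    """Assumed hash: first 4 bytes of MD5, giving a position in [0, 2**32 - 1]."""
    return int.from_bytes(hashlib.md5(s.encode()).digest()[:4], "big")


def ownership(nodes: Iterable[str]) -> Dict[str, float]:
    """Fraction of the ring each node owns.

    A node owns the arc from its counter-clockwise neighbour up to itself.
    """
    pos = sorted((ring_hash(n), n) for n in nodes)
    if len(pos) == 1:
        return {pos[0][1]: 1.0}
    shares = {}
    for i, (p, node) in enumerate(pos):
        prev = pos[i - 1][0]  # for i == 0 this wraps to the last node
        shares[node] = ((p - prev) % RING_SIZE) / RING_SIZE
    return shares


if __name__ == "__main__":
    for node, share in ownership(["10.0.0.1", "10.0.0.2", "10.0.0.3"]).items():
        print(f"{node}: {share:.1%}")
```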

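3. Hash-slot partitioning

A Redis cluster has 16384 hash slots, and every key in the database belongs to one of them. The cluster uses the formula CRC16(key) % 16384 to decide which slot a key belongs to, where CRC16(key) is the CRC16 checksum of the key. Each node in the cluster handles a subset of the slots. For example, if a cluster has three master nodes:

- Node A handles slots 0 through 5460.

- Node B handles slots 5461 through 10922.

- Node C handles slots 10923 through 16383.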

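A cluster has exactly 16384 slots, numbered 0-16383 (0 to 2^14-1). The slots are assigned to the cluster's master nodes; there is no required strategy, and you can choose which slots go to which master. The cluster records the mapping between nodes and slots. With that in place, each key is hashed and the result is taken modulo 16384; the remainder is the slot the key falls into: slot = CRC16(key) % 16384. Data is moved in units of slots, and because the number of slots is fixed, this is easy to manage, which solves the data-migration problem.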
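A minimal Python sketch of slot = CRC16(key) % 16384, using the CRC16 variant Redis Cluster uses (polynomial 0x1021, initial value 0, no reflection, often called XMODEM), plus a lookup over the example A/B/C ranges. Two assumptions: a real cluster also applies the hash-tag rule for keys containing {...}, which this sketch skips, and the node names A/B/C are only the example above. For k1 to k6 it should print the same slots that appear in the redirects of the cluster-mode login session later in this post (for example k1 -> 12706, k2 -> 449).

```python
import bisect

TOTAL_SLOTS = 16384  # 2**14


def crc16_xmodem(data: bytes) -> int:
    """CRC16, polynomial 0x1021, initial value 0, no reflection (XMODEM variant)."""
    crc = 0
    for byte in data:
        crc ^= byte << 8
        for _ in range(8):
            if crc & 0x8000:
                crc = ((crc << 1) ^ 0x1021) & 0xFFFF
            else:
                crc = (crc << 1) & 0xFFFF
    return crc


def key_slot(key: str) -> int:
    """slot = CRC16(key) % 16384 (hash tags such as {user} are not handled here)."""
    return crc16_xmodem(key.encode()) % TOTAL_SLOTS


# Example layout from the text: first slot of each range -> node
RANGES = [(0, "A"), (5461, "B"), (10923, "C")]


def node_for_slot(slot: int) -> str:
    """Return the node whose range contains slot (last range starting at or before it)."""
    if not 0 <= slot < TOTAL_SLOTS:
        raise ValueError(f"slot must be in [0, {TOTAL_SLOTS - 1}]")
    starts = [start for start, _ in RANGES]
    return RANGES[bisect.bisect_right(starts, slot) - 1][1]


if __name__ == "__main__":
    for key in ["k1", "k2", "k3", "k4", "k5", "k6"]:
        slot = key_slot(key)
        print(key, slot, node_for_slot(slot))
```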

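4. Building a Redis cluster with 3 masters and 3 replicas

1. Start the containers

Port 6381 was already in use on my VM, so I used 6377 instead (the nodes therefore listen on 6377 and 6382-6386). If a container fails to start, check the error with docker logs <container id>.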

docker run -d --name redis-node-1 --net host --privileged=true -v /data/redis/share/redis-node-1:/data redis:6.0.8 --cluster-enabled yes --appendonly yes --port 6377

docker run -d --name redis-node-2 --net host --privileged=true -v /data/redis/share/redis-node-2:/data redis:6.0.8 --cluster-enabled yes --appendonly yes --port 6382

docker run -d --name redis-node-3 --net host --privileged=true -v /data/redis/share/redis-node-3:/data redis:6.0.8 --cluster-enabled yes --appendonly yes --port 6383

docker run -d --name redis-node-4 --net host --privileged=true -v /data/redis/share/redis-node-4:/data redis:6.0.8 --cluster-enabled yes --appendonly yes --port 6384

docker run -d --name redis-node-5 --net host --privileged=true -v /data/redis/share/redis-node-5:/data redis:6.0.8 --cluster-enabled yes --appendonly yes --port 6385

docker run -d --name redis-node-6 --net host --privileged=true -v /data/redis/share/redis-node-6:/data redis:6.0.8 --cluster-enabled yes --appendonly yes --port 6386

[root@192 ljq]# docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

ae23e22e7fd9 redis:6.0.8 "docker-entrypoint.s…" 2 minutes ago Up 2 minutes redis-node-1

566d8f61575e redis:6.0.8 "docker-entrypoint.s…" 5 minutes ago Up 5 minutes redis-node-6

8a23d9259ae6 redis:6.0.8 "docker-entrypoint.s…" 5 minutes ago Up 5 minutes redis-node-5

4f1c557491dd redis:6.0.8 "docker-entrypoint.s…" 5 minutes ago Up 5 minutes redis-node-4

9f1b98a89f30 redis:6.0.8 "docker-entrypoint.s…" 5 minutes ago Up 5 minutes redis-node-3

d6f99ad29285 redis:6.0.8 "docker-entrypoint.s…" 5 minutes ago Up 5 minutes redis-node-2

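Explanation of the docker run options

| Option | Meaning |
|---|---|
| docker run | create and start a container |
| --name redis-node-1 | container name |
| --net host | use the host's IP and ports (host networking) |
| --privileged=true | give the container root privileges on the host |
| -v /data/redis/share/redis-node-1:/data | mount a volume, host path:container path |
| redis:6.0.8 | Redis image and version |
| --cluster-enabled yes | enable Redis cluster mode |
| --appendonly yes | enable AOF persistence |
| --port 6386 | set the port |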
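The six docker run commands differ only in node number and port, so a small Python sketch can generate them and avoid copy-paste mistakes. The PORTS mapping reflects this post's setup (6377 instead of 6381); the script only prints the commands, and running them (for example via subprocess.run) is left to you.

```python
import shlex
from typing import List

IMAGE = "redis:6.0.8"
# Ports used in this post: 6381 was taken on the VM, so node 1 listens on 6377.
PORTS = {1: 6377, 2: 6382, 3: 6383, 4: 6384, 5: 6385, 6: 6386}


def docker_run_argv(node: int, port: int) -> List[str]:
    """Argument list for one cluster node, with the same flags as the commands above."""
    name = f"redis-node-{node}"
    return [
        "docker", "run", "-d",
        "--name", name,
        "--net", "host",
        "--privileged=true",
        "-v", f"/data/redis/share/{name}:/data",
        IMAGE,
        "--cluster-enabled", "yes",  # start redis-server in cluster mode
        "--appendonly", "yes",       # enable AOF persistence
        "--port", str(port),
    ]


if __name__ == "__main__":
    for node, port in PORTS.items():
        # To execute instead of printing: subprocess.run(docker_run_argv(node, port), check=True)
        print(shlex.join(docker_run_argv(node, port)))
```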

2. Build the master-replica relationships

docker exec -it redis-node-1 /bin/bash

redis-cli --cluster create 192.168.76.128:6377 192.168.76.128:6382 192.168.76.128:6383 192.168.76.128:6384 192.168.76.128:6385 192.168.76.128:6386 --cluster-replicas 1

[root@192 ljq]# docker exec -it redis-node-1 /bin/bash

root@192:/data# redis-cli --cluster create 192.168.76.128:6377 192.168.76.128:6382 192.168.76.128:6383 192.168.76.128:6384 192.168.76.128:6385 192.168.76.128:6386 --cluster-replicas 1

>>> Performing hash slots allocation on 6 nodes...

Master[0] -> Slots 0 - 5460

Master[1] -> Slots 5461 - 10922

Master[2] -> Slots 10923 - 16383

Adding replica 192.168.76.128:6385 to 192.168.76.128:6377

Adding replica 192.168.76.128:6386 to 192.168.76.128:6382

Adding replica 192.168.76.128:6384 to 192.168.76.128:6383

>>> Trying to optimize slaves allocation for anti-affinity

[WARNING] Some slaves are in the same host as their master

M: cb374d152deac1e5c3fee9bb6dbfe284ac1b51e2 192.168.76.128:6377

slots:[0-5460] (5461 slots) master

M: 99c8874b6fa1f72e949d6753554a532962e9371f 192.168.76.128:6382

slots:[5461-10922] (5462 slots) master

M: 9933b8ebf6cd8038df9be855d44e2a6930263070 192.168.76.128:6383

slots:[10923-16383] (5461 slots) master

S: 8cfc4ce1766a0b214cd334100937320a14bf1a47 192.168.76.128:6384

replicates 9933b8ebf6cd8038df9be855d44e2a6930263070

S: fd1c36788ae8cbe0091c9514875928d421c86462 192.168.76.128:6385

replicates cb374d152deac1e5c3fee9bb6dbfe284ac1b51e2

S: 553fd59f59b521ac42ad82bebfe08d5130881c12 192.168.76.128:6386

replicates 99c8874b6fa1f72e949d6753554a532962e9371f

Can I set the above configuration? (type 'yes' to accept): yes

>>> Nodes configuration updated

>>> Assign a different config epoch to each node

>>> Sending CLUSTER MEET messages to join the cluster

Waiting for the cluster to join

.

>>> Performing Cluster Check (using node 192.168.76.128:6377)

M: cb374d152deac1e5c3fee9bb6dbfe284ac1b51e2 192.168.76.128:6377

slots:[0-5460] (5461 slots) master

1 additional replica(s)

M: 99c8874b6fa1f72e949d6753554a532962e9371f 192.168.76.128:6382

slots:[5461-10922] (5462 slots) master

1 additional replica(s)

S: fd1c36788ae8cbe0091c9514875928d421c86462 192.168.76.128:6385

slots: (0 slots) slave

replicates cb374d152deac1e5c3fee9bb6dbfe284ac1b51e2

M: 9933b8ebf6cd8038df9be855d44e2a6930263070 192.168.76.128:6383

slots:[10923-16383] (5461 slots) master

1 additional replica(s)

S: 8cfc4ce1766a0b214cd334100937320a14bf1a47 192.168.76.128:6384

slots: (0 slots) slave

replicates 9933b8ebf6cd8038df9be855d44e2a6930263070

S: 553fd59f59b521ac42ad82bebfe08d5130881c12 192.168.76.128:6386

slots: (0 slots) slave

replicates 99c8874b6fa1f72e949d6753554a532962e9371f

[OK] All nodes agree about slots configuration. # note

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered. # note

If you see both [OK] lines above, the cluster was created successfully.
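The Master[i] -> Slots lines in the output split the 16384 slots into near-equal contiguous ranges (5461, 5462, and 5461 slots). The Python sketch below reproduces that split; it is my approximation of what redis-cli does (round each boundary to the nearest slot, give the last master everything that remains), not its actual source.

```python
import math
from typing import List, Tuple

TOTAL_SLOTS = 16384


def split_slots(num_masters: int) -> List[Tuple[int, int]]:
    """Split slots 0..16383 into one contiguous (first, last) range per master."""
    per_node = TOTAL_SLOTS / num_masters
    ranges: List[Tuple[int, int]] = []
    first, cursor = 0, 0.0
    for i in range(num_masters):
        last = math.floor(cursor + per_node - 1 + 0.5)  # round to nearest (half up)
        if i == num_masters - 1:
            last = TOTAL_SLOTS - 1                        # last master takes the rest
        ranges.append((first, last))
        first = last + 1
        cursor += per_node
    return ranges


if __name__ == "__main__":
    for first, last in split_slots(3):
        print(f"Slots {first} - {last} ({last - first + 1} slots)")
```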

3. Check node information

Log in to node 6377 and run cluster info to see the cluster state, then cluster nodes to see the master/replica relationships.

root@192:/data# redis-cli -p 6377

127.0.0.1:6377> cluster info

cluster_state:ok

cluster_slots_assigned:16384

cluster_slots_ok:16384

cluster_slots_pfail:0

cluster_slots_fail:0

cluster_known_nodes:6 # number of nodes

cluster_size:3

cluster_current_epoch:6

cluster_my_epoch:1

cluster_stats_messages_ping_sent:809

cluster_stats_messages_pong_sent:736

cluster_stats_messages_sent:1545

cluster_stats_messages_ping_received:731

cluster_stats_messages_pong_received:809

cluster_stats_messages_meet_received:5

cluster_stats_messages_received:1545

127.0.0.1:6377> cluster nodes

cb374d152deac1e5c3fee9bb6dbfe284ac1b51e2 192.168.76.128:6377@16377 myself,master - 0 1663505889000 1 connected 0-5460

99c8874b6fa1f72e949d6753554a532962e9371f 192.168.76.128:6382@16382 master - 0 1663505888000 2 connected 5461-10922

fd1c36788ae8cbe0091c9514875928d421c86462 192.168.76.128:6385@16385 slave cb374d152deac1e5c3fee9bb6dbfe284ac1b51e2 0 1663505887000 1 connected

9933b8ebf6cd8038df9be855d44e2a6930263070 192.168.76.128:6383@16383 master - 0 1663505888000 3 connected 10923-16383

8cfc4ce1766a0b214cd334100937320a14bf1a47 192.168.76.128:6384@16384 slave 9933b8ebf6cd8038df9be855d44e2a6930263070 0 1663505888667 3 connected

553fd59f59b521ac42ad82bebfe08d5130881c12 192.168.76.128:6386@16386 slave 99c8874b6fa1f72e949d6753554a532962e9371f 0 1663505889671 2 connected

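The output shows 3 masters and 3 replicas. The exact master/replica pairing may differ on your machine; the point here is how to read it.

To find a replica's master, take node 1 and node 5 as an example: node 5's entry is followed by node 1's ID.

# node 1

cb374d152deac1e5c3fee9bb6dbfe284ac1b51e2 192.168.76.128:6377@16377 myself,master - 0 1663505889000 1 connected 0-5460

# node 5

fd1c36788ae8cbe0091c9514875928d421c86462 192.168.76.128:6385@16385 slave cb374d152deac1e5c3fee9bb6dbfe284ac1b51e2 0 1663505887000 1 connected

myself marks the node the current redis-cli session is connected to (6377 here). The node IDs will be needed later when scaling the cluster out and in.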

| Node | Port | Node ID | Role | Master ID |
|---|---|---|---|---|
| 1 | 6377 | cb374d152deac1e5c3fee9bb6dbfe284ac1b51e2 | master (myself) | - |
| 2 | 6382 | 99c8874b6fa1f72e949d6753554a532962e9371f | master | - |
| 3 | 6383 | 9933b8ebf6cd8038df9be855d44e2a6930263070 | master | - |
| 4 | 6384 | 8cfc4ce1766a0b214cd334100937320a14bf1a47 | slave | 9933b8ebf6cd8038df9be855d44e2a6930263070 |
| 5 | 6385 | fd1c36788ae8cbe0091c9514875928d421c86462 | slave | cb374d152deac1e5c3fee9bb6dbfe284ac1b51e2 |
| 6 | 6386 | 553fd59f59b521ac42ad82bebfe08d5130881c12 | slave | 99c8874b6fa1f72e949d6753554a532962e9371f |
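Reading the replica relationships by eye gets tedious, so a minimal Python sketch can parse cluster nodes output instead. It assumes the documented CLUSTER NODES field order (id, ip:port@cport, flags, master id or -, ping-sent, pong-recv, config-epoch, link-state, slots...); SAMPLE is the output from this step.

```python
from typing import Dict, List

SAMPLE = """\
cb374d152deac1e5c3fee9bb6dbfe284ac1b51e2 192.168.76.128:6377@16377 myself,master - 0 1663505889000 1 connected 0-5460
99c8874b6fa1f72e949d6753554a532962e9371f 192.168.76.128:6382@16382 master - 0 1663505888000 2 connected 5461-10922
fd1c36788ae8cbe0091c9514875928d421c86462 192.168.76.128:6385@16385 slave cb374d152deac1e5c3fee9bb6dbfe284ac1b51e2 0 1663505887000 1 connected
9933b8ebf6cd8038df9be855d44e2a6930263070 192.168.76.128:6383@16383 master - 0 1663505888000 3 connected 10923-16383
8cfc4ce1766a0b214cd334100937320a14bf1a47 192.168.76.128:6384@16384 slave 9933b8ebf6cd8038df9be855d44e2a6930263070 0 1663505888667 3 connected
553fd59f59b521ac42ad82bebfe08d5130881c12 192.168.76.128:6386@16386 slave 99c8874b6fa1f72e949d6753554a532962e9371f 0 1663505889671 2 connected
"""


def parse_cluster_nodes(text: str) -> List[dict]:
    """Turn each CLUSTER NODES line into a dict of the fields used below."""
    nodes = []
    for line in text.strip().splitlines():
        f = line.split()
        nodes.append({
            "id": f[0],
            "addr": f[1].split("@")[0],  # drop the cluster-bus port
            "flags": f[2].split(","),
            "master_id": None if f[3] == "-" else f[3],
            "slots": f[8:],
        })
    return nodes


def replicas_by_master(nodes: List[dict]) -> Dict[str, List[str]]:
    """Map each master address to the addresses of the replicas that follow it."""
    addr_of = {n["id"]: n["addr"] for n in nodes}
    result: Dict[str, List[str]] = {}
    for n in nodes:
        if n["master_id"] is not None:
            master = addr_of.get(n["master_id"], n["master_id"])
            result.setdefault(master, []).append(n["addr"])
    return result


if __name__ == "__main__":
    nodes = parse_cluster_nodes(SAMPLE)
    print("connected to:", next(n["addr"] for n in nodes if "myself" in n["flags"]))
    for master, replicas in replicas_by_master(nodes).items():
        print(master, "->", ", ".join(replicas))
```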

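4. Log in in cluster mode

- The command for logging in in cluster mode is redis-cli -c -p <port>.

- When you set key-value pairs, the client jumps between nodes, because each key is hashed and stored on the node that owns its slot.

- Likewise, when you get a key, the client hashes it and jumps to the corresponding Redis instance.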

root@192:/data# redis-cli -c -p 6377

127.0.0.1:6377> set k1 v1

-> Redirected to slot [12706] located at 192.168.76.128:6383

OK

192.168.76.128:6383> set k2 v2

-> Redirected to slot [449] located at 192.168.76.128:6377

OK

192.168.76.128:6377> set k3 v3

OK

192.168.76.128:6377> set k4 v4

-> Redirected to slot [8455] located at 192.168.76.128:6382

OK

192.168.76.128:6382> set k5 v5

-> Redirected to slot [12582] located at 192.168.76.128:6383

OK

192.168.76.128:6383> set k6 v7

-> Redirected to slot [325] located at 192.168.76.128:6377

OK

192.168.76.128:6377> get k5

-> Redirected to slot [12582] located at 192.168.76.128:6383

"v5"

192.168.76.128:6383>

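If you log in the normal way (without -c), writing a key whose slot belongs to another node fails with the error below.

192.168.76.128:6383> FLUSHALL # only flushes the node you are connected to (6383), not the whole cluster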

OK

192.168.76.128:6383> get k1

(nil)

192.168.76.128:6383> get k5

(nil)

192.168.76.128:6383> exit

root@192:/data# redis-cli -p 6377

127.0.0.1:6377> set k5 v5

(error) MOVED 12582 192.168.76.128:6383
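The MOVED error carries the slot and the address of the node that owns it; redis-cli -c follows it and retries there. The Python sketch below imitates that with hypothetical in-memory nodes (FakeNode and cluster_set are illustrative names, not a real client API), using the slot layout of this cluster and slot 12582 from the session above. The slot is passed in directly rather than computed from the key.

```python
from typing import Dict, List, Tuple

# Slot ranges of the three masters in this post: address -> (first, last)
LAYOUT = {
    "192.168.76.128:6377": (0, 5460),
    "192.168.76.128:6382": (5461, 10922),
    "192.168.76.128:6383": (10923, 16383),
}


class FakeNode:
    """Hypothetical stand-in for one master: it only stores keys in its own slots."""

    def __init__(self, addr: str):
        self.addr = addr
        self.data: Dict[str, str] = {}

    def set(self, slot: int, key: str, value: str) -> str:
        first, last = LAYOUT[self.addr]
        if first <= slot <= last:
            self.data[key] = value
            return "OK"
        owner = next(a for a, (lo, hi) in LAYOUT.items() if lo <= slot <= hi)
        return f"MOVED {slot} {owner}"  # same shape as the error shown above


def parse_moved(reply: str) -> Tuple[int, str]:
    """'MOVED <slot> <host>:<port>' -> (slot, 'host:port')."""
    kind, slot, addr = reply.split()
    if kind != "MOVED":
        raise ValueError(f"not a MOVED reply: {reply!r}")
    return int(slot), addr


def cluster_set(nodes: Dict[str, FakeNode], start: str, slot: int,
                key: str, value: str, max_hops: int = 5) -> Tuple[str, List[str]]:
    """Follow MOVED replies like redis-cli -c; return the final reply and the hops."""
    addr, hops = start, []
    for _ in range(max_hops):
        reply = nodes[addr].set(slot, key, value)
        if not reply.startswith("MOVED"):
            return reply, hops
        _, addr = parse_moved(reply)
        hops.append(addr)
    raise RuntimeError("too many redirects")


if __name__ == "__main__":
    nodes = {addr: FakeNode(addr) for addr in LAYOUT}
    print(nodes["192.168.76.128:6377"].set(12582, "k5", "v5"))          # plain redis-cli
    print(cluster_set(nodes, "192.168.76.128:6377", 12582, "k5", "v5"))  # redis-cli -c
```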

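5. Master-replica failover

This was covered in my Redis notes, which you can refer to.

Stop 6377 and check the cluster state: after node 1 goes down, node 5 is promoted to master.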

[root@192 ~]# docker stop ae23e22e7fd9

ae23e22e7fd9

[root@192 ~]# docker exec -it redis-node-2 bash

root@192:/data# redis-cli -p 6382

127.0.0.1:6382> cluster nodes

fd1c36788ae8cbe0091c9514875928d421c86462 192.168.76.128:6385@16385 master - 0 1663507192443 7 connected 0-5460

cb374d152deac1e5c3fee9bb6dbfe284ac1b51e2 192.168.76.128:6377@16377 master,fail - 1663507163594 1663507156000 1 disconnected # note

553fd59f59b521ac42ad82bebfe08d5130881c12 192.168.76.128:6386@16386 slave 99c8874b6fa1f72e949d6753554a532962e9371f 0 1663507194449 2 connected

8cfc4ce1766a0b214cd334100937320a14bf1a47 192.168.76.128:6384@16384 slave 9933b8ebf6cd8038df9be855d44e2a6930263070 0 1663507193446 3 connected

9933b8ebf6cd8038df9be855d44e2a6930263070 192.168.76.128:6383@16383 master - 0 1663507195453 3 connected 10923-16383

99c8874b6fa1f72e949d6753554a532962e9371f 192.168.76.128:6382@16382 myself,master - 0 1663507193000 2 connected 5461-10922
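After the failover, the failed node is flagged master,fail and its former replica now serves 0-5460. A minimal Python sketch that pulls both facts out of cluster nodes output; SAMPLE is the output above, and only the fail flag is checked, not fail?, which marks a node that is merely suspected to be down.

```python
from typing import Dict, List, Tuple

SAMPLE = """\
fd1c36788ae8cbe0091c9514875928d421c86462 192.168.76.128:6385@16385 master - 0 1663507192443 7 connected 0-5460
cb374d152deac1e5c3fee9bb6dbfe284ac1b51e2 192.168.76.128:6377@16377 master,fail - 1663507163594 1663507156000 1 disconnected
553fd59f59b521ac42ad82bebfe08d5130881c12 192.168.76.128:6386@16386 slave 99c8874b6fa1f72e949d6753554a532962e9371f 0 1663507194449 2 connected
8cfc4ce1766a0b214cd334100937320a14bf1a47 192.168.76.128:6384@16384 slave 9933b8ebf6cd8038df9be855d44e2a6930263070 0 1663507193446 3 connected
9933b8ebf6cd8038df9be855d44e2a6930263070 192.168.76.128:6383@16383 master - 0 1663507195453 3 connected 10923-16383
99c8874b6fa1f72e949d6753554a532962e9371f 192.168.76.128:6382@16382 myself,master - 0 1663507193000 2 connected 5461-10922
"""


def failover_summary(text: str) -> Tuple[List[str], Dict[str, List[str]]]:
    """Return (addresses flagged 'fail', {serving master address: slot ranges})."""
    failed: List[str] = []
    serving: Dict[str, List[str]] = {}
    for line in text.strip().splitlines():
        fields = line.split()
        addr, flags, slots = fields[1].split("@")[0], fields[2].split(","), fields[8:]
        if "fail" in flags:  # exact flag match: 'fail?' (suspected) does not count
            failed.append(addr)
        elif "master" in flags and slots:
            serving[addr] = slots
    return failed, serving


if __name__ == "__main__":
    failed, serving = failover_summary(SAMPLE)
    print("failed:", failed)
    for addr, slots in sorted(serving.items()):
        print(addr, "serves", " ".join(slots))
```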

What happens if node 1 is restarted?

Node 1 comes back as a replica of node 5.

[root@192 ljq]# docker start redis-node-1

127.0.0.1:6382> cluster nodes

fd1c36788ae8cbe0091c9514875928d421c86462 192.168.76.128:6385@16385 master - 0 1663507326117 7 connected 0-5460

cb374d152deac1e5c3fee9bb6dbfe284ac1b51e2 192.168.76.128:6377@16377 slave fd1c36788ae8cbe0091c9514875928d421c86462 0 1663507326000 7 connected # note

553fd59f59b521ac42ad82bebfe08d5130881c12 192.168.76.128:6386@16386 slave 99c8874b6fa1f72e949d6753554a532962e9371f 0 1663507329126 2 connected

8cfc4ce1766a0b214cd334100937320a14bf1a47 192.168.76.128:6384@16384 slave 9933b8ebf6cd8038df9be855d44e2a6930263070 0 1663507327121 3 connected

9933b8ebf6cd8038df9be855d44e2a6930263070 192.168.76.128:6383@16383 master - 0 1663507328123 3 connected 10923-16383

99c8874b6fa1f72e949d6753554a532962e9371f 192.168.76.128:6382@16382 myself,master - 0 1663507328000 2 connected 5461-10922

6. Scaling out

Add nodes 6387 and 6388

docker run -d --name redis-node-7 --net host --privileged=true -v /data/redis/share/redis-node-7:/data redis:6.0.8 --cluster-enabled yes --appendonly yes --port 6387

docker run -d --name redis-node-8 --net host --privileged=true -v /data/redis/share/redis-node-8:/data redis:6.0.8 --cluster-enabled yes --appendonly yes --port 6388

[root@192 ljq]# docker run -d --name redis-node-7 --net host --privileged=true -v /data/redis/share/redis-node-7:/data redis:6.0.8 --cluster-enabled yes --appendonly yes --port 6387

a5265ba8d7a0190053759f9ddb1bb5587400bc0ea10bfb537f07d2382f9b4491

[root@192 ljq]#

[root@192 ljq]# docker run -d --name redis-node-8 --net host --privileged=true -v /data/redis/share/redis-node-8:/data redis:6.0.8 --cluster-enabled yes --appendonly yes --port 6388

8674b98c395dedab85badbb406b87de8e1e2f4774471616f0fc577cb8f414ba4

[root@192 ljq]# docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

8674b98c395d redis:6.0.8 "docker-entrypoint.s…" 7 seconds ago Up 6 seconds redis-node-8

a5265ba8d7a0 redis:6.0.8 "docker-entrypoint.s…" 12 seconds ago Up 11 seconds redis-node-7

ae23e22e7fd9 redis:6.0.8 "docker-entrypoint.s…" About an hour ago Up 22 minutes redis-node-1

566d8f61575e redis:6.0.8 "docker-entrypoint.s…" About an hour ago Up About an hour redis-node-6

8a23d9259ae6 redis:6.0.8 "docker-entrypoint.s…" About an hour ago Up 16 minutes redis-node-5

4f1c557491dd redis:6.0.8 "docker-entrypoint.s…" About an hour ago Up About an hour redis-node-4

9f1b98a89f30 redis:6.0.8 "docker-entrypoint.s…" About an hour ago Up About an hour redis-node-3

d6f99ad29285 redis:6.0.8 "docker-entrypoint.s…" About an hour ago Up About an hour redis-node-2

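Add the new node 6387 to the cluster as a master: 6387 is the new node that will become a master, and 6382 is an existing cluster node that serves as the entry point into the cluster.

Adjust the addresses to your own setup.

# redis-cli --cluster add-node <your IP>:6387 <your IP>:6381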

[root@192 ljq]# docker exec -it redis-node-7 bash

root@192:/data# redis-cli --cluster add-node 192.168.76.128:6387 192.168.76.128:6382

>>> Adding node 192.168.76.128:6387 to cluster 192.168.76.128:6382

>>> Performing Cluster Check (using node 192.168.76.128:6382)

M: 99c8874b6fa1f72e949d6753554a532962e9371f 192.168.76.128:6382

slots:[5461-10922] (5462 slots) master

1 additional replica(s)

S: fd1c36788ae8cbe0091c9514875928d421c86462 192.168.76.128:6385

slots: (0 slots) slave

replicates cb374d152deac1e5c3fee9bb6dbfe284ac1b51e2

M: cb374d152deac1e5c3fee9bb6dbfe284ac1b51e2 192.168.76.128:6377

slots:[0-5460] (5461 slots) master

1 additional replica(s)

S: 553fd59f59b521ac42ad82bebfe08d5130881c12 192.168.76.128:6386

slots: (0 slots) slave

replicates 99c8874b6fa1f72e949d6753554a532962e9371f

S: 8cfc4ce1766a0b214cd334100937320a14bf1a47 192.168.76.128:6384

slots: (0 slots) slave

replicates 9933b8ebf6cd8038df9be855d44e2a6930263070

M: 9933b8ebf6cd8038df9be855d44e2a6930263070 192.168.76.128:6383

slots:[10923-16383] (5461 slots) master

1 additional replica(s)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

>>> Send CLUSTER MEET to node 192.168.76.128:6387 to make it join the cluster.

[OK] New node added correctly.

Check the cluster

# redis-cli --cluster check <your IP>:6381

root@192:/data# redis-cli --cluster check 192.168.76.128:6382

192.168.76.128:6382 (99c8874b...) -> 1 keys | 5462 slots | 1 slaves.

192.168.76.128:6387 (651716ab...) -> 0 keys | 0 slots | 0 slaves.

192.168.76.128:6377 (cb374d15...) -> 3 keys | 5461 slots | 1 slaves.

192.168.76.128:6383 (9933b8eb...) -> 0 keys | 5461 slots | 1 slaves.

[OK] 4 keys in 4 masters.

0.00 keys per slot on average.

>>> Performing Cluster Check (using node 192.168.76.128:6382)

M: 99c8874b6fa1f72e949d6753554a532962e9371f 192.168.76.128:6382

slots:[5461-10922] (5462 slots) master

1 additional replica(s)

S: fd1c36788ae8cbe0091c9514875928d421c86462 192.168.76.128:6385

slots: (0 slots) slave

replicates cb374d152deac1e5c3fee9bb6dbfe284ac1b51e2

M: 651716ab88e1f5f0336d6ca4eeda7911e94fda78 192.168.76.128:6387

slots: (0 slots) master

M: cb374d152deac1e5c3fee9bb6dbfe284ac1b51e2 192.168.76.128:6377

slots:[0-5460] (5461 slots) master

1 additional replica(s)

S: 553fd59f59b521ac42ad82bebfe08d5130881c12 192.168.76.128:6386

slots: (0 slots) slave

replicates 99c8874b6fa1f72e949d6753554a532962e9371f

S: 8cfc4ce1766a0b214cd334100937320a14bf1a47 192.168.76.128:6384

slots: (0 slots) slave

replicates 9933b8ebf6cd8038df9be855d44e2a6930263070

M: 9933b8ebf6cd8038df9be855d44e2a6930263070 192.168.76.128:6383

slots:[10923-16383] (5461 slots) master

1 additional replica(s)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

The output above shows 192.168.76.128:6387 (651716ab...) -> 0 keys | 0 slots | 0 slaves: node 6387 has joined the cluster but owns no slots yet.

Reassign slots

# Command: redis-cli --cluster reshard <IP>:<port>

redis-cli --cluster reshard 192.168.76.128:6382

root@192:/data# redis-cli --cluster reshard 192.168.76.128:6382

How many slots do you want to move (from 1 to 16384)? # number of slots to move: 16384/4 = 4096

What is the receiving node ID? # ID of the receiving master (6387)

Source node #1: # enter all to take slots from every existing master

Check the cluster again

root@192:/data# redis-cli --cluster check 192.168.76.128:6382

192.168.76.128:6382 (99c8874b...) -> 1 keys | 4096 slots | 1 slaves.

192.168.76.128:6387 (651716ab...) -> 2 keys | 4096 slots | 0 slaves.

192.168.76.128:6377 (cb374d15...) -> 1 keys | 4096 slots | 1 slaves.

192.168.76.128:6383 (9933b8eb...) -> 0 keys | 4096 slots | 1 slaves.

[OK] 4 keys in 4 masters.

0.00 keys per slot on average.

>>> Performing Cluster Check (using node 192.168.76.128:6382)

M: 99c8874b6fa1f72e949d6753554a532962e9371f 192.168.76.128:6382

slots:[6827-10922] (4096 slots) master

1 additional replica(s)

S: fd1c36788ae8cbe0091c9514875928d421c86462 192.168.76.128:6385

slots: (0 slots) slave

replicates cb374d152deac1e5c3fee9bb6dbfe284ac1b51e2

M: 651716ab88e1f5f0336d6ca4eeda7911e94fda78 192.168.76.128:6387

slots:[0-1364],[5461-6826],[10923-12287] (4096 slots) master

M: cb374d152deac1e5c3fee9bb6dbfe284ac1b51e2 192.168.76.128:6377

slots:[1365-5460] (4096 slots) master

1 additional replica(s)

S: 553fd59f59b521ac42ad82bebfe08d5130881c12 192.168.76.128:6386

slots: (0 slots) slave

replicates 99c8874b6fa1f72e949d6753554a532962e9371f

S: 8cfc4ce1766a0b214cd334100937320a14bf1a47 192.168.76.128:6384

slots: (0 slots) slave

replicates 9933b8ebf6cd8038df9be855d44e2a6930263070

M: 9933b8ebf6cd8038df9be855d44e2a6930263070 192.168.76.128:6383

slots:[12288-16383] (4096 slots) master

1 additional replica(s)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

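The 6387 entry shows that resharding does not recompute the whole slot layout; instead, each existing master hands over one contiguous range taken from the low end of its own range: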

M: 651716ab88e1f5f0336d6ca4eeda7911e94fda78 192.168.76.128:6387 slots:[0-1364],[5461-6826],[10923-12287] (4096 slots) master
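The numbers add up: 1365 + 1366 + 1365 = 4096. A Python sketch of a proportional split that reproduces them, assuming (my reading of redis-cli's behaviour, consistent with this output) that with all as the source each master gives up slots in proportion to how many it owns, the largest source rounds up while the others round down, and each chunk comes from the low end of the source's range.

```python
import math
from typing import Dict, Tuple

# Masters before resharding in this post: port -> (first slot, last slot)
BEFORE = {
    "6377": (0, 5460),
    "6382": (5461, 10922),
    "6383": (10923, 16383),
}


def plan_reshard(num_slots: int,
                 sources: Dict[str, Tuple[int, int]]) -> Dict[str, Tuple[int, int]]:
    """Range each source gives away: a proportional share taken from its low end."""
    sizes = {name: last - first + 1 for name, (first, last) in sources.items()}
    total = sum(sizes.values())
    # Largest source first; it rounds up, the others round down (assumed behaviour).
    order = sorted(sources, key=lambda name: sizes[name], reverse=True)
    plan = {}
    for i, name in enumerate(order):
        share = num_slots / total * sizes[name]
        count = math.ceil(share) if i == 0 else math.floor(share)
        first = sources[name][0]
        plan[name] = (first, first + count - 1)
    return plan


if __name__ == "__main__":
    plan = plan_reshard(16384 // 4, BEFORE)
    for name, (first, last) in sorted(plan.items()):
        print(f"{name} gives [{first}-{last}] ({last - first + 1} slots)")
```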

Add 6388 as a replica of 6387

redis-cli --cluster add-node 192.168.76.128:6388 192.168.76.128:6387 --cluster-slave --cluster-master-id 651716ab88e1f5f0336d6ca4eeda7911e94fda78

--cluster-master-id is the node ID of 6387; use the one from your own cluster.
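Pasting a truncated ID (such as the shortened 651716ab... form printed by --cluster check) is an easy mistake at this step. A minimal Python sketch that builds the command and checks that the master ID looks like a full node ID, assuming node IDs are 40 lowercase hex characters as in the cluster nodes output above.

```python
import re
import shlex
from typing import List

NODE_ID = re.compile(r"[0-9a-f]{40}")  # full node IDs in cluster nodes are 40 hex chars


def add_replica_argv(new_node: str, existing_node: str, master_id: str) -> List[str]:
    """redis-cli --cluster add-node <new> <existing> --cluster-slave --cluster-master-id <id>."""
    if not NODE_ID.fullmatch(master_id):
        raise ValueError(f"expected a full 40-character node ID, got {master_id!r}")
    return [
        "redis-cli", "--cluster", "add-node", new_node, existing_node,
        "--cluster-slave", "--cluster-master-id", master_id,
    ]


if __name__ == "__main__":
    argv = add_replica_argv("192.168.76.128:6388", "192.168.76.128:6387",
                            "651716ab88e1f5f0336d6ca4eeda7911e94fda78")
    print(shlex.join(argv))
```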

root@192:/data# redis-cli --cluster add-node 192.168.76.128:6388 192.168.76.128:6387 --cluster-slave --cluster-master-id 651716ab88e1f5f0336d6ca4eeda7911e94fda78

>>> Adding node 192.168.76.128:6388 to cluster 192.168.76.128:6387

>>> Performing Cluster Check (using node 192.168.76.128:6387)

M: 651716ab88e1f5f0336d6ca4eeda7911e94fda78 192.168.76.128:6387

slots:[0-1364],[5461-6826],[10923-12287] (4096 slots) master

S: fd1c36788ae8cbe0091c9514875928d421c86462 192.168.76.128:6385

slots: (0 slots) slave

replicates cb374d152deac1e5c3fee9bb6dbfe284ac1b51e2

S: 8cfc4ce1766a0b214cd334100937320a14bf1a47 192.168.76.128:6384

slots: (0 slots) slave

replicates 9933b8ebf6cd8038df9be855d44e2a6930263070

M: 99c8874b6fa1f72e949d6753554a532962e9371f 192.168.76.128:6382

slots:[6827-10922] (4096 slots) master

1 additional replica(s)

S: 553fd59f59b521ac42ad82bebfe08d5130881c12 192.168.76.128:6386

slots: (0 slots) slave

replicates 99c8874b6fa1f72e949d6753554a532962e9371f

M: cb374d152deac1e5c3fee9bb6dbfe284ac1b51e2 192.168.76.128:6377

slots:[1365-5460] (4096 slots) master

1 additional replica(s)

M: 9933b8ebf6cd8038df9be855d44e2a6930263070 192.168.76.128:6383

slots:[12288-16383] (4096 slots) master

1 additional replica(s)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

>>> Send CLUSTER MEET to node 192.168.76.128:6388 to make it join the cluster. # note

Waiting for the cluster to join

>>> Configure node as replica of 192.168.76.128:6387. # note

[OK] New node added correctly.

7. Scaling in

Goal: go from 4 masters and 4 replicas back to 3 masters and 3 replicas.

Look up the node ID of 6388

# redis-cli --cluster check 192.168.76.128:6382 (adjust the IP to your own)

root@192:/data# redis-cli --cluster check 192.168.76.128:6382

Remove node 6388

# Command: redis-cli --cluster del-node <IP>:<replica port> <node ID of replica 6388>

redis-cli --cluster del-node 192.168.76.128:6388 8e47e663be4dcfb4362aa20e1c145c76ef7fe39f

# Check the result

redis-cli --cluster check 192.168.76.128:6382

Take back the slots assigned to 6387 and give them all to 6377

root@192:/data# redis-cli --cluster reshard 192.168.76.128:6382

... # output omitted

[OK] All 16384 slots covered.

How many slots do you want to move (from 1 to 16384)? 4096

What is the receiving node ID? cb374d152deac1e5c3fee9bb6dbfe284ac1b51e2 # note: the ID of the node that should receive the slots (6377 here)

Please enter all the source node IDs.

Type 'all' to use all the nodes as source nodes for the hash slots.

Type 'done' once you entered all the source nodes IDs.

Source node #1: 651716ab88e1f5f0336d6ca4eeda7911e94fda78 # ID of the node being removed (6387)

Source node #2: done

... # output omitted

Do you want to proceed with the proposed reshard plan (yes/no)? yes

Check the cluster again

root@192:/data# redis-cli --cluster check 192.168.76.128:6382

192.168.76.128:6382 (99c8874b...) -> 1 keys | 4096 slots | 1 slaves.

192.168.76.128:6387 (651716ab...) -> 0 keys | 0 slots | 0 slaves.

192.168.76.128:6377 (cb374d15...) -> 3 keys | 8192 slots | 1 slaves.

192.168.76.128:6383 (9933b8eb...) -> 0 keys | 4096 slots | 1 slaves.

[OK] 4 keys in 4 masters.

0.00 keys per slot on average.

>>> Performing Cluster Check (using node 192.168.76.128:6382)

M: 99c8874b6fa1f72e949d6753554a532962e9371f 192.168.76.128:6382

slots:[6827-10922] (4096 slots) master

1 additional replica(s)

S: fd1c36788ae8cbe0091c9514875928d421c86462 192.168.76.128:6385

slots: (0 slots) slave

replicates cb374d152deac1e5c3fee9bb6dbfe284ac1b51e2

M: 651716ab88e1f5f0336d6ca4eeda7911e94fda78 192.168.76.128:6387

slots: (0 slots) master # note

M: cb374d152deac1e5c3fee9bb6dbfe284ac1b51e2 192.168.76.128:6377

slots:[0-6826],[10923-12287] (8192 slots) master # note

1 additional replica(s)

S: 553fd59f59b521ac42ad82bebfe08d5130881c12 192.168.76.128:6386

slots: (0 slots) slave

replicates 99c8874b6fa1f72e949d6753554a532962e9371f

S: 8cfc4ce1766a0b214cd334100937320a14bf1a47 192.168.76.128:6384

slots: (0 slots) slave

replicates 9933b8ebf6cd8038df9be855d44e2a6930263070

M: 9933b8ebf6cd8038df9be855d44e2a6930263070 192.168.76.128:6383

slots:[12288-16383] (4096 slots) master

1 additional replica(s)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.
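Node 6377 kept [1365-5460] and received 6387's [0-1364], [5461-6826], and [10923-12287]; adjacent ranges merge, which is why it now shows [0-6826],[10923-12287] and 8192 slots. A small Python sketch of that bookkeeping:

```python
from typing import List, Tuple

Range = Tuple[int, int]


def merge_ranges(ranges: List[Range]) -> List[Range]:
    """Merge overlapping or adjacent slot ranges, e.g. (0, 1364) + (1365, 5460) -> (0, 5460)."""
    merged: List[Range] = []
    for first, last in sorted(ranges):
        if merged and first <= merged[-1][1] + 1:
            merged[-1] = (merged[-1][0], max(merged[-1][1], last))
        else:
            merged.append((first, last))
    return merged


def count_slots(ranges: List[Range]) -> int:
    """Total number of slots covered by non-overlapping inclusive ranges."""
    return sum(last - first + 1 for first, last in ranges)


def fmt(ranges: List[Range]) -> str:
    """Format like redis-cli --cluster check: [a-b],[c-d]."""
    return ",".join(f"[{a}-{b}]" for a, b in ranges)


if __name__ == "__main__":
    kept = [(1365, 5460)]
    received = [(0, 1364), (5461, 6826), (10923, 12287)]
    merged = merge_ranges(kept + received)
    print(fmt(merged), count_slots(merged), "slots")
```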

Remove 6387

Command: redis-cli --cluster del-node <IP>:<port> <node ID of 6387>

redis-cli --cluster del-node 192.168.76.128:6387 651716ab88e1f5f0336d6ca4eeda7911e94fda78

Check the cluster again: 6387 has been removed.
