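Prerequisites

- Docker with docker-compose installed. For installation, see my article "Docker-compose Container Orchestration".

- MySQL 5.7 installed in Docker; see the installation article.

- Nacos installed in Docker; see the installation article.

All of the above are single-node setups. For cluster setups, see the other articles on my homepage.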

Setting Up Seata

-

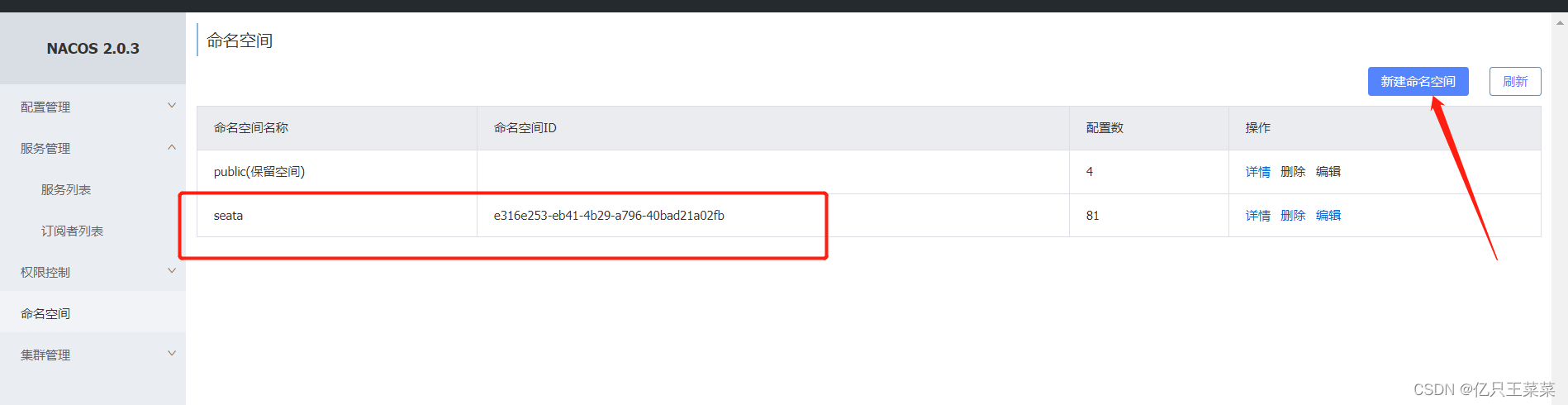

在Nacos中创建一个

seata命名空间

-

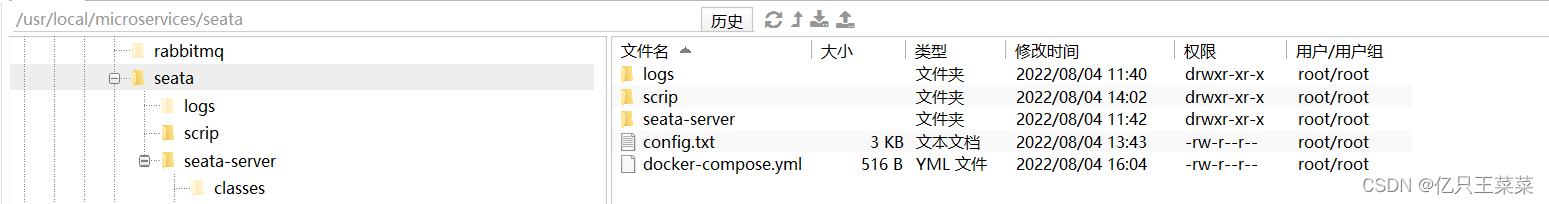

- Create the following directories on the Docker host:

```shell
mkdir -p /usr/local/microservices/seata/{logs,scrip}
```

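- Final directory layout: once all the steps below are done, `/usr/local/microservices/seata` contains `logs/`, `scrip/` (holding `nacos-config.sh`), `seata-server/` (copied from the container), `config.txt`, and `docker-compose.yml`.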

- Pull the Seata image:

```shell
docker pull seataio/seata-server:1.3.0
```

- Run a container:

```shell
docker run --name seata-server -p 8091:8091 -d seataio/seata-server:1.3.0
```

- Copy the server files from the container into `/usr/local/microservices/seata`:

```shell
docker cp seata-server:/seata-server /usr/local/microservices/seata
```

- Stop the container:

```shell
docker stop seata-server
```

- Remove the container:

```shell
docker rm seata-server
```

- 进入拷贝的文件目录

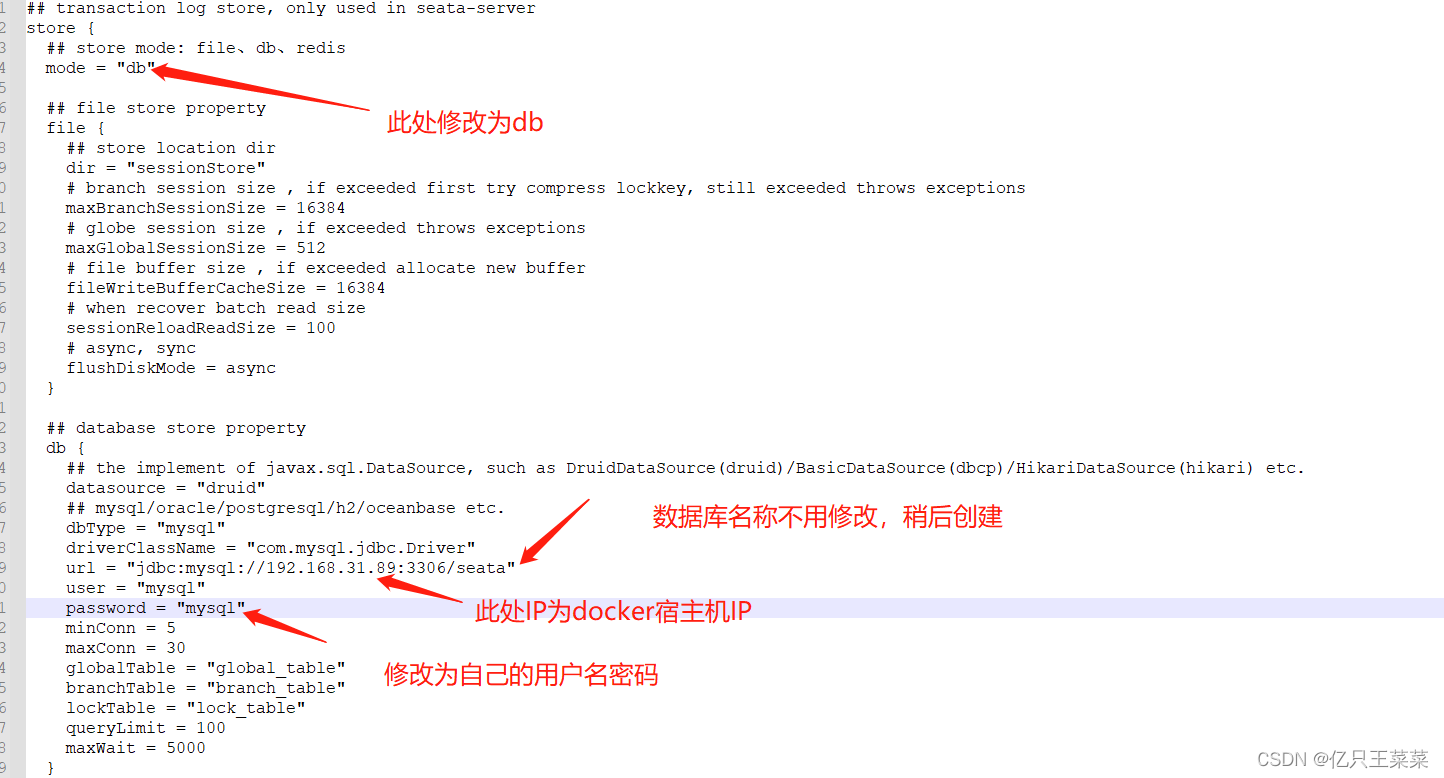

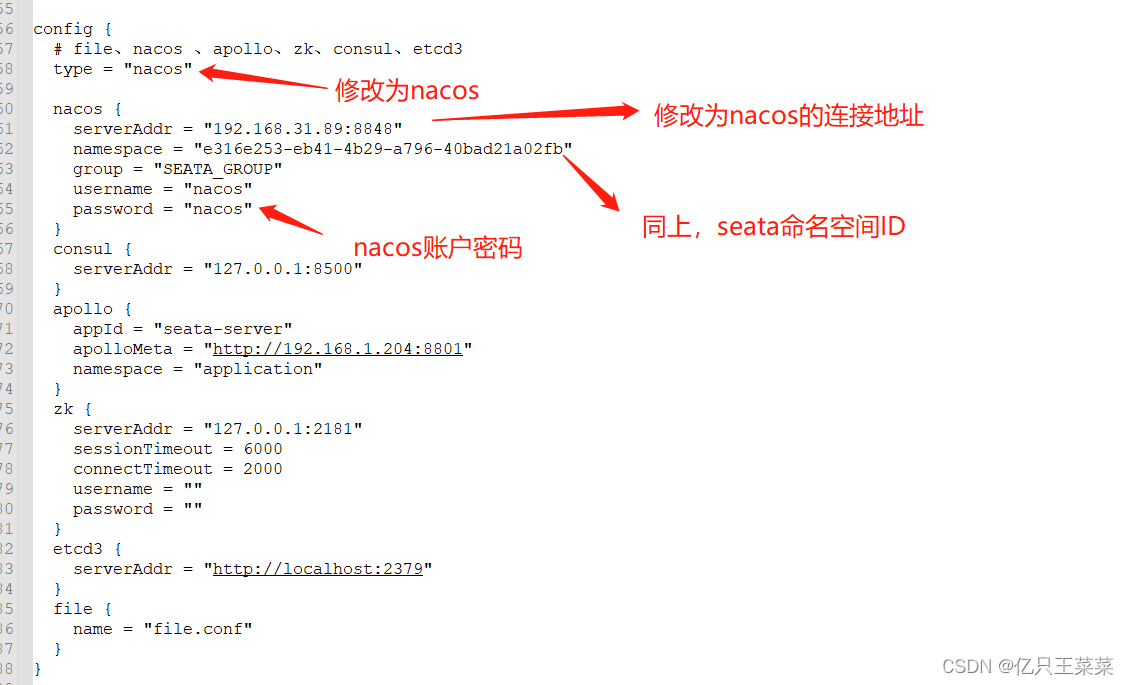

/usr/local/microservices/seata/seata-server/resources,改写配置文件file.conf和registry.conf

The modified `file.conf`:

```
## transaction log store, only used in seata-server
store {
  ## store mode: file、db、redis
  mode = "db"

  ## file store property
  file {
    ## store location dir
    dir = "sessionStore"
    # branch session size , if exceeded first try compress lockkey, still exceeded throws exceptions
    maxBranchSessionSize = 16384
    # globe session size , if exceeded throws exceptions
    maxGlobalSessionSize = 512
    # file buffer size , if exceeded allocate new buffer
    fileWriteBufferCacheSize = 16384
    # when recover batch read size
    sessionReloadReadSize = 100
    # async, sync
    flushDiskMode = async
  }

  ## database store property
  db {
    ## the implement of javax.sql.DataSource, such as DruidDataSource(druid)/BasicDataSource(dbcp)/HikariDataSource(hikari) etc.
    datasource = "druid"
    ## mysql/oracle/postgresql/h2/oceanbase etc.
    dbType = "mysql"
    driverClassName = "com.mysql.jdbc.Driver"
    url = "jdbc:mysql://192.168.31.89:3306/seata"
    user = "mysql"
    password = "mysql"
    minConn = 5
    maxConn = 30
    globalTable = "global_table"
    branchTable = "branch_table"
    lockTable = "lock_table"
    queryLimit = 100
    maxWait = 5000
  }

  ## redis store property
  redis {
    host = "127.0.0.1"
    port = "6379"
    password = ""
    database = "0"
    minConn = 1
    maxConn = 10
    queryLimit = 100
  }
}
```

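What to change: set `mode` to `"db"`, and point `url`, `user` and `password` in the `db` block at the `seata` database in the MySQL container.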

The modified `registry.conf`:

```
registry {
  # file 、nacos 、eureka、redis、zk、consul、etcd3、sofa
  type = "nacos"

  nacos {
    application = "seata-server"
    serverAddr = "192.168.31.89:8848"
    group = "SEATA_GROUP"
    namespace = "e316e253-eb41-4b29-a796-40bad21a02fb"
    cluster = "default"
    username = "nacos"
    password = "nacos"
  }
  eureka {
    serviceUrl = "http://localhost:8761/eureka"
    application = "default"
    weight = "1"
  }
  redis {
    serverAddr = "localhost:6379"
    db = 0
    password = ""
    cluster = "default"
    timeout = 0
  }
  zk {
    cluster = "default"
    serverAddr = "127.0.0.1:2181"
    sessionTimeout = 6000
    connectTimeout = 2000
    username = ""
    password = ""
  }
  consul {
    cluster = "default"
    serverAddr = "127.0.0.1:8500"
  }
  etcd3 {
    cluster = "default"
    serverAddr = "http://localhost:2379"
  }
  sofa {
    serverAddr = "127.0.0.1:9603"
    application = "default"
    region = "DEFAULT_ZONE"
    datacenter = "DefaultDataCenter"
    cluster = "default"
    group = "SEATA_GROUP"
    addressWaitTime = "3000"
  }
  file {
    name = "file.conf"
  }
}

config {
  # file、nacos 、apollo、zk、consul、etcd3
  type = "nacos"

  nacos {
    serverAddr = "192.168.31.89:8848"
    namespace = "e316e253-eb41-4b29-a796-40bad21a02fb"
    group = "SEATA_GROUP"
    username = "nacos"
    password = "nacos"
  }
  consul {
    serverAddr = "127.0.0.1:8500"
  }
  apollo {
    appId = "seata-server"
    apolloMeta = "http://192.168.1.204:8801"
    namespace = "application"
  }
  zk {
    serverAddr = "127.0.0.1:2181"
    sessionTimeout = 6000
    connectTimeout = 2000
    username = ""
    password = ""
  }
  etcd3 {
    serverAddr = "http://localhost:2379"
  }
  file {
    name = "file.conf"
  }
}
```

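What to change:

1. Registry: in `registry`, set `type = "nacos"` and fill in its `nacos` block (`serverAddr` is the Nacos address, `namespace` is the ID of the `seata` namespace created earlier, plus the Nacos username and password).

2. Config center: make the same changes in `config`.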

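- In `/usr/local/microservices/seata/`, create the file `config.txt` and adjust the settings for your environment. Change `store.mode` from `file` to `db`, and point the `store.db.*` entries at the MySQL instance in Docker, the same database as in `file.conf` (the sample uses `mysql`/`mysql` in `file.conf` but `root`/`root` here; use your real MySQL account in both). Keep comments out of this file: nacos-config.sh strips all whitespace and splits each line at the first `=`, so a trailing `## ...` note would be pushed to Nacos as part of the value.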

```properties
transport.type=TCP
transport.server=NIO
transport.heartbeat=true
transport.enableClientBatchSendRequest=false
transport.threadFactory.bossThreadPrefix=NettyBoss
transport.threadFactory.workerThreadPrefix=NettyServerNIOWorker
transport.threadFactory.serverExecutorThreadPrefix=NettyServerBizHandler
transport.threadFactory.shareBossWorker=false
transport.threadFactory.clientSelectorThreadPrefix=NettyClientSelector
transport.threadFactory.clientSelectorThreadSize=1
transport.threadFactory.clientWorkerThreadPrefix=NettyClientWorkerThread
transport.threadFactory.bossThreadSize=1
transport.threadFactory.workerThreadSize=default
transport.shutdown.wait=3
service.vgroupMapping.my_test_tx_group=default
service.default.grouplist=127.0.0.1:8091
service.enableDegrade=false
service.disableGlobalTransaction=false
client.rm.asyncCommitBufferLimit=10000
client.rm.lock.retryInterval=10
client.rm.lock.retryTimes=30
client.rm.lock.retryPolicyBranchRollbackOnConflict=true
client.rm.reportRetryCount=5
client.rm.tableMetaCheckEnable=false
client.rm.sqlParserType=druid
client.rm.reportSuccessEnable=false
client.rm.sagaBranchRegisterEnable=false
client.tm.commitRetryCount=5
client.tm.rollbackRetryCount=5
client.tm.defaultGlobalTransactionTimeout=60000
client.tm.degradeCheck=false
client.tm.degradeCheckAllowTimes=10
client.tm.degradeCheckPeriod=2000
store.mode=db
store.file.dir=file_store/data
store.file.maxBranchSessionSize=16384
store.file.maxGlobalSessionSize=512
store.file.fileWriteBufferCacheSize=16384
store.file.flushDiskMode=async
store.file.sessionReloadReadSize=100
store.db.datasource=druid
store.db.dbType=mysql
store.db.driverClassName=com.mysql.jdbc.Driver
store.db.url=jdbc:mysql://192.168.31.89:3306/seata?useUnicode=true
store.db.user=root
store.db.password=root
store.db.minConn=5
store.db.maxConn=30
store.db.globalTable=global_table
store.db.branchTable=branch_table
store.db.queryLimit=100
store.db.lockTable=lock_table
store.db.maxWait=5000
store.redis.host=127.0.0.1
store.redis.port=6379
store.redis.maxConn=10
store.redis.minConn=1
store.redis.database=0
store.redis.password=null
store.redis.queryLimit=100
server.recovery.committingRetryPeriod=1000
server.recovery.asynCommittingRetryPeriod=1000
server.recovery.rollbackingRetryPeriod=1000
server.recovery.timeoutRetryPeriod=1000
server.maxCommitRetryTimeout=-1
server.maxRollbackRetryTimeout=-1
server.rollbackRetryTimeoutUnlockEnable=false
client.undo.dataValidation=true
client.undo.logSerialization=jackson
client.undo.onlyCareUpdateColumns=true
server.undo.logSaveDays=7
server.undo.logDeletePeriod=86400000
client.undo.logTable=undo_log
client.log.exceptionRate=100
transport.serialization=seata
transport.compressor=none
metrics.enabled=false
metrics.registryType=compact
metrics.exporterList=prometheus
metrics.exporterPrometheusPort=9898
service.vgroup-mapping.sub-tx-group=default
service.vgroup-mapping.admin-tx-group=
```
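To see why comments do not belong in config.txt, here is a minimal Java 9+ sketch that mimics how nacos-config.sh turns each line into a key/value pair: it removes all whitespace, takes the text before the first `=` as the key and the text after it as the value. The class `ConfigTxtParser` is hypothetical and only models the shell logic; it is not part of Seata.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/**
 * Hypothetical helper that mimics how nacos-config.sh reads config.txt.
 * Not part of Seata; for illustration only.
 */
public class ConfigTxtParser {

    /** Returns key/value pairs in file order, computed the way the shell script does. */
    public static Map<String, String> parse(List<String> lines) {
        Map<String, String> result = new LinkedHashMap<>();
        for (String raw : lines) {
            // Roughly `sed s/[[:space:]]//g`: remove every whitespace character
            String line = raw.replaceAll("\\s", "");
            // `for line in $(...)` never sees empty lines, so skip them
            if (line.isEmpty()) {
                continue;
            }
            int eq = line.indexOf('=');
            // ${line%%=*}: text before the first '=' (the whole line if there is none)
            String key = eq >= 0 ? line.substring(0, eq) : line;
            // ${line#*=}: text after the first '=' (the whole line if there is none)
            String value = eq >= 0 ? line.substring(eq + 1) : line;
            result.put(key, value);
        }
        return result;
    }

    public static void main(String[] args) {
        List<String> sample = List.of(
                "store.mode=db",
                "store.db.url=jdbc:mysql://192.168.31.89:3306/seata?useUnicode=true",
                "store.db.user=root ## note",
                "");
        // Prints each pair; the last one shows the comment glued onto the value
        parse(sample).forEach((k, v) -> System.out.println(k + " -> " + v));
    }
}
```

Running `main` prints `store.db.user -> root##note` for the third line: the note is not ignored but becomes part of the value. The `?useUnicode=true` in the URL survives because only the first `=` is used as the separator.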

- Go to `/usr/local/microservices/seata/scrip` and create the script `nacos-config.sh`, the Nacos config-import script published by the Seata project:

nacos-config.sh:

```shell
#!/usr/bin/env bash
# Copyright 1999-2019 Seata.io Group.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
# http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.

# Options: -h Nacos host, -p Nacos port, -g config group, -t tenant (namespace ID)
while getopts ":h:p:g:t:" opt
do
  case $opt in
  h)
    host=$OPTARG
    ;;
  p)
    port=$OPTARG
    ;;
  g)
    group=$OPTARG
    ;;
  t)
    tenant=$OPTARG
    ;;
  ?)
    echo " USAGE OPTION: $0 [-h host] [-p port] [-g group] [-t tenant] "
    exit 1
    ;;
  esac
done

# Defaults for options that were not given
if [[ -z ${host} ]]; then
  host=localhost
fi
if [[ -z ${port} ]]; then
  port=8848
fi
if [[ -z ${group} ]]; then
  group="SEATA_GROUP"
fi
if [[ -z ${tenant} ]]; then
  tenant=""
fi

nacosAddr=$host:$port
contentType="content-type:application/json;charset=UTF-8"

echo "set nacosAddr=$nacosAddr"
echo "set group=$group"

failCount=0
tempLog=$(mktemp -u)

# Publish one key/value pair to Nacos; Nacos answers "true" on success
function addConfig() {
  curl -X POST -H "${1}" "http://$2/nacos/v1/cs/configs?dataId=$3&group=$group&content=$4&tenant=$tenant" >"${tempLog}" 2>/dev/null
  if [[ -z $(cat "${tempLog}") ]]; then
    echo " Please check the cluster status. "
    exit 1
  fi
  if [[ $(cat "${tempLog}") =~ "true" ]]; then
    echo "Set $3=$4 successfully "
  else
    echo "Set $3=$4 failure "
    (( failCount++ ))
  fi
}

count=0
# config.txt is read from the PARENT of the current directory; all whitespace is stripped
for line in $(cat $(dirname "$PWD")/config.txt | sed s/[[:space:]]//g); do
  (( count++ ))
  key=${line%%=*}    # text before the first '='
  value=${line#*=}   # text after the first '='
  addConfig "${contentType}" "${nacosAddr}" "${key}" "${value}"
done

echo "========================================================================="
echo " Complete initialization parameters, total-count:$count , failure-count:$failCount "
echo "========================================================================="

if [[ ${failCount} -eq 0 ]]; then
  echo " Init nacos config finished, please start seata-server. "
else
  echo " init nacos config fail. "
fi
```

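- In `/usr/local/microservices/seata/scrip`, run the following commands. Run them from this directory, because the script reads `config.txt` from the parent directory (`$(dirname "$PWD")/config.txt`).

Make the script executable:

```shell
chmod +x nacos-config.sh
```

Push the configuration to Nacos:

```shell
bash nacos-config.sh -h 192.168.31.89 -p 8848 -g SEATA_GROUP -t e316e253-eb41-4b29-a796-40bad21a02fb
```

Note: replace the values in the command above following this format:

```shell
bash nacos-config.sh -h <Nacos address> -p <Nacos port> -g SEATA_GROUP -t <ID of the seata namespace created in Nacos>
```

`-g` is the group the configs are stored under; `SEATA_GROUP` can be left unchanged. The script uses bash-only syntax (`[[ ]]`, `(( ))`, `function`), so run it with `bash` rather than a POSIX `sh` such as dash.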
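Each `addConfig` call in the script sends one request to the Nacos config Open API, `POST /nacos/v1/cs/configs`, with `dataId` (the key), `group` (`-g`), `content` (the value) and `tenant` (`-t`, the namespace ID) to `host:port` from `-h`/`-p`. The Java 11+ sketch below builds the same URL but URL-encodes every parameter, which the shell script does not do; without encoding, a value containing `&`, `#` or `+` would be mangled on the way to Nacos. The class and method names are hypothetical, and actually sending the request is left out.

```java
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;

/**
 * Hypothetical sketch of the publish request nacos-config.sh sends per key/value,
 * with every query parameter URL-encoded.
 */
public class NacosPublishUrl {

    private static String enc(String s) {
        // Encodes reserved characters such as '&', '#', '=', '?' and '+'
        return URLEncoder.encode(s, StandardCharsets.UTF_8);
    }

    /** nacosAddr is "host:port" (-h/-p), group is -g, tenant is the namespace ID (-t). */
    public static String publishUrl(String nacosAddr, String dataId, String group,
                                    String content, String tenant) {
        return "http://" + nacosAddr + "/nacos/v1/cs/configs"
                + "?dataId=" + enc(dataId)
                + "&group=" + enc(group)
                + "&content=" + enc(content)
                + "&tenant=" + enc(tenant);
    }

    public static void main(String[] args) {
        // The request the script would make for the store.db.url line (values from this article)
        System.out.println(publishUrl("192.168.31.89:8848", "store.db.url", "SEATA_GROUP",
                "jdbc:mysql://192.168.31.89:3306/seata?useUnicode=true",
                "e316e253-eb41-4b29-a796-40bad21a02fb"));
    }
}
```

The printed URL could then be sent as a POST with `java.net.http.HttpClient` or curl; a response body of `true` is what the script treats as success.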

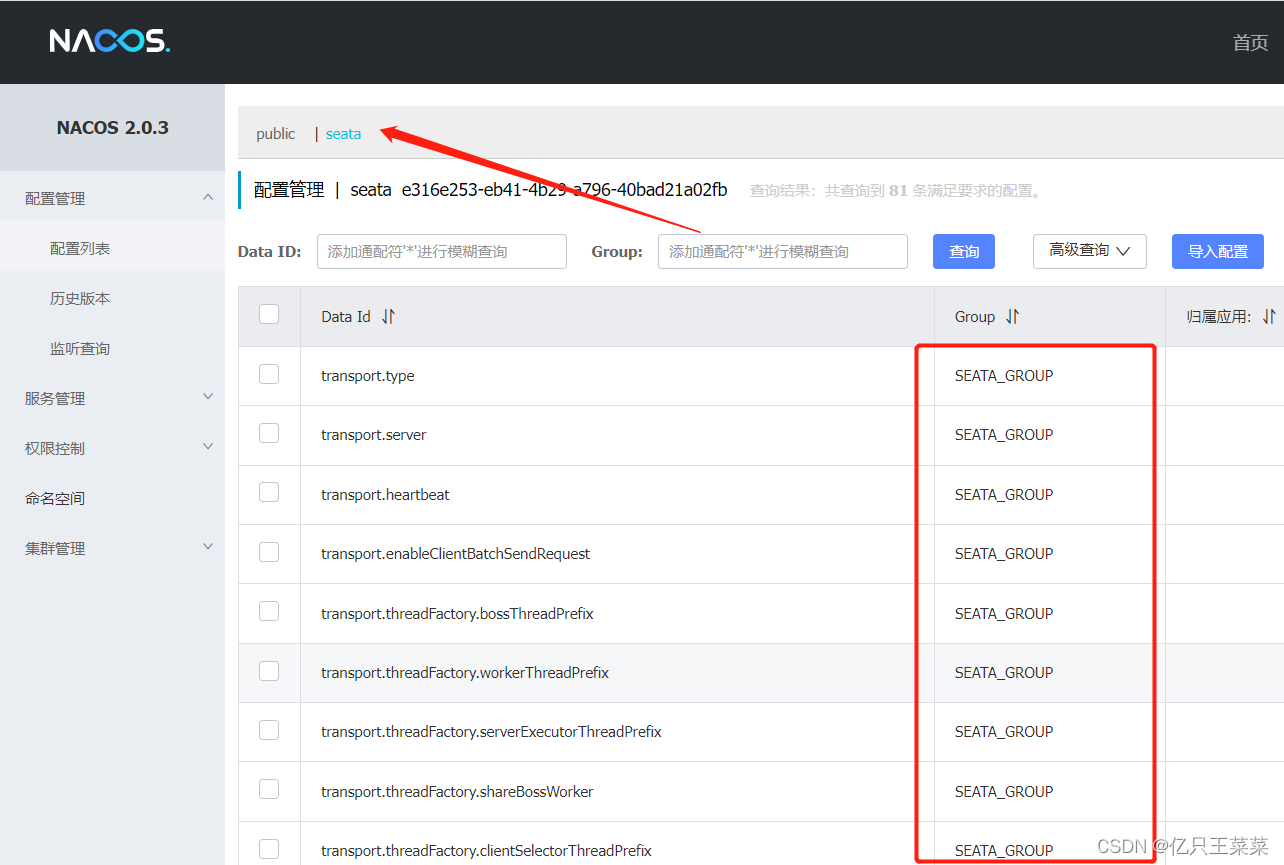

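- After the script succeeds, log in to Nacos and check: if the `SEATA_GROUP` group and the config entries from config.txt appear in the `seata` namespace, the push succeeded.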

- Log in to the MySQL instance running in Docker and create a database named `seata`.

- In the `seata` database, create the following tables.

SQL script:

```sql
SET NAMES utf8mb4;
SET FOREIGN_KEY_CHECKS = 0;

-- ----------------------------
-- Table structure for branch_table
-- ----------------------------
DROP TABLE IF EXISTS `branch_table`;
CREATE TABLE `branch_table` (
  `branch_id` bigint(20) NOT NULL,
  `xid` varchar(128) CHARACTER SET utf8 COLLATE utf8_general_ci NOT NULL,
  `transaction_id` bigint(20) NULL DEFAULT NULL,
  `resource_group_id` varchar(32) CHARACTER SET utf8 COLLATE utf8_general_ci NULL DEFAULT NULL,
  `resource_id` varchar(256) CHARACTER SET utf8 COLLATE utf8_general_ci NULL DEFAULT NULL,
  `branch_type` varchar(8) CHARACTER SET utf8 COLLATE utf8_general_ci NULL DEFAULT NULL,
  `status` tinyint(4) NULL DEFAULT NULL,
  `client_id` varchar(64) CHARACTER SET utf8 COLLATE utf8_general_ci NULL DEFAULT NULL,
  `application_data` varchar(2000) CHARACTER SET utf8 COLLATE utf8_general_ci NULL DEFAULT NULL,
  `gmt_create` datetime(6) NULL DEFAULT NULL,
  `gmt_modified` datetime(6) NULL DEFAULT NULL,
  PRIMARY KEY (`branch_id`) USING BTREE,
  INDEX `idx_xid`(`xid`) USING BTREE
) ENGINE = InnoDB CHARACTER SET = utf8 COLLATE = utf8_general_ci ROW_FORMAT = Dynamic;

-- ----------------------------
-- Records of branch_table
-- ----------------------------

-- ----------------------------
-- Table structure for global_table
-- ----------------------------
DROP TABLE IF EXISTS `global_table`;
CREATE TABLE `global_table` (
  `xid` varchar(128) CHARACTER SET utf8 COLLATE utf8_general_ci NOT NULL,
  `transaction_id` bigint(20) NULL DEFAULT NULL,
  `status` tinyint(4) NOT NULL,
  `application_id` varchar(32) CHARACTER SET utf8 COLLATE utf8_general_ci NULL DEFAULT NULL,
  `transaction_service_group` varchar(32) CHARACTER SET utf8 COLLATE utf8_general_ci NULL DEFAULT NULL,
  `transaction_name` varchar(128) CHARACTER SET utf8 COLLATE utf8_general_ci NULL DEFAULT NULL,
  `timeout` int(11) NULL DEFAULT NULL,
  `begin_time` bigint(20) NULL DEFAULT NULL,
  `application_data` varchar(2000) CHARACTER SET utf8 COLLATE utf8_general_ci NULL DEFAULT NULL,
  `gmt_create` datetime(0) NULL DEFAULT NULL,
  `gmt_modified` datetime(0) NULL DEFAULT NULL,
  PRIMARY KEY (`xid`) USING BTREE,
  INDEX `idx_gmt_modified_status`(`gmt_modified`, `status`) USING BTREE,
  INDEX `idx_transaction_id`(`transaction_id`) USING BTREE
) ENGINE = InnoDB CHARACTER SET = utf8 COLLATE = utf8_general_ci ROW_FORMAT = Dynamic;

-- ----------------------------
-- Records of global_table
-- ----------------------------

-- ----------------------------
-- Table structure for lock_table
-- ----------------------------
DROP TABLE IF EXISTS `lock_table`;
CREATE TABLE `lock_table` (
  `row_key` varchar(128) CHARACTER SET utf8 COLLATE utf8_general_ci NOT NULL,
  `xid` varchar(96) CHARACTER SET utf8 COLLATE utf8_general_ci NULL DEFAULT NULL,
  `transaction_id` bigint(20) NULL DEFAULT NULL,
  `branch_id` bigint(20) NOT NULL,
  `resource_id` varchar(256) CHARACTER SET utf8 COLLATE utf8_general_ci NULL DEFAULT NULL,
  `table_name` varchar(32) CHARACTER SET utf8 COLLATE utf8_general_ci NULL DEFAULT NULL,
  `pk` varchar(36) CHARACTER SET utf8 COLLATE utf8_general_ci NULL DEFAULT NULL,
  `gmt_create` datetime(0) NULL DEFAULT NULL,
  `gmt_modified` datetime(0) NULL DEFAULT NULL,
  PRIMARY KEY (`row_key`) USING BTREE,
  INDEX `idx_branch_id`(`branch_id`) USING BTREE
) ENGINE = InnoDB CHARACTER SET = utf8 COLLATE = utf8_general_ci ROW_FORMAT = Dynamic;

-- ----------------------------
-- Records of lock_table
-- ----------------------------

SET FOREIGN_KEY_CHECKS = 1;
```
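Before starting the server, it can help to confirm that the three tables exist. The sketch below lists the tables of the `seata` database through standard JDBC metadata and reports which required ones are missing. It assumes a MySQL JDBC driver (for example mysql-connector-java) on the classpath at run time, and it reuses this article's sample URL and the `root`/`root` account from config.txt; replace them with your own. The class name `SeataTableCheck` is hypothetical.

```java
import java.sql.Connection;
import java.sql.DatabaseMetaData;
import java.sql.DriverManager;
import java.sql.ResultSet;
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

/** Hypothetical check that the db-mode tables exist in the seata database. */
public class SeataTableCheck {

    static final List<String> REQUIRED = List.of("global_table", "branch_table", "lock_table");

    /** Returns the required tables absent from {@code existing} (compared case-insensitively). */
    public static List<String> missingTables(Set<String> existing) {
        Set<String> lower = new HashSet<>();
        for (String t : existing) {
            lower.add(t.toLowerCase());
        }
        List<String> missing = new ArrayList<>();
        for (String t : REQUIRED) {
            if (!lower.contains(t)) {
                missing.add(t);
            }
        }
        return missing;
    }

    public static void main(String[] args) throws Exception {
        // Sample values from this article (assumptions): adjust host, database and account
        String url = "jdbc:mysql://192.168.31.89:3306/seata";
        try (Connection conn = DriverManager.getConnection(url, "root", "root")) {
            DatabaseMetaData meta = conn.getMetaData();
            Set<String> found = new HashSet<>();
            // MySQL Connector/J exposes the database as the catalog by default
            try (ResultSet rs = meta.getTables("seata", null, "%", new String[] {"TABLE"})) {
                while (rs.next()) {
                    found.add(rs.getString("TABLE_NAME"));
                }
            }
            System.out.println("missing tables: " + missingTables(found));
        }
    }
}
```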

- In `/usr/local/microservices/seata`, create a `docker-compose.yml` file:

docker-compose.yml:

```yaml
version: "3"
services:
  seata-server:
    image: seataio/seata-server:1.3.0
    container_name: seata-server
    hostname: seata-server
    ports:
      - "8091:8091"
    environment:
      - SEATA_PORT=8091
      - STORE_MODE=db
      # Replace this with the Docker host's IP. Seata registers this address in Nacos,
      # so clients must be able to reach it (a container-internal IP would not be reachable).
      - SEATA_IP=192.168.31.89
    volumes:
      - ./seata-server/resources/registry.conf:/seata-server/resources/registry.conf
      - ./logs:/data/logs/seata
    restart: always
```

- In `/usr/local/microservices/seata`, start Seata:

```shell
docker-compose up -d
```

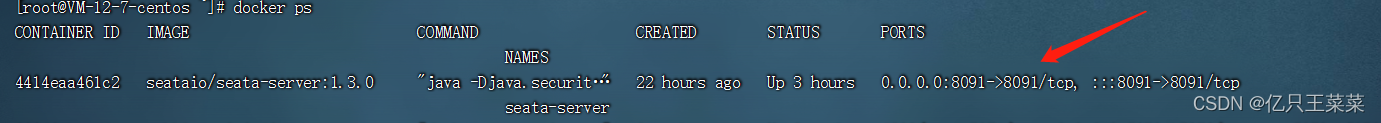

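- Check that the container started:

```shell
docker ps
```

- Check in Nacos that `seata-server` is registered in the `seata` namespace.

- The setup is now complete.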

Integrating with Spring Boot 2.3.12.RELEASE

Dependencies I used:

| Dependency | Version |
|---|---|
| Spring Cloud | Hoxton.SR12 |
| Spring Boot | 2.3.12.RELEASE |
| Spring Cloud Alibaba | 2.2.7.RELEASE |
| Sentinel | 1.8.1 |
| Nacos | 2.0.3 |
| Seata | 1.3.0 |

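- Suppose the following scenario: order service A creates an order, then calls stock service B to reduce the stock.

- Both service A and service B need the following dependencies: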

```xml
<!-- seata -->
<dependency>
    <groupId>com.alibaba.cloud</groupId>
    <artifactId>spring-cloud-starter-alibaba-seata</artifactId>
    <exclusions>
        <!-- Exclude the bundled seata-all -->
        <exclusion>
            <artifactId>seata-all</artifactId>
            <groupId>io.seata</groupId>
        </exclusion>
        <!-- Exclude the bundled seata-spring-boot-starter, otherwise a SeataDataSourceBeanPostProcessor exception is thrown -->
        <exclusion>
            <groupId>io.seata</groupId>
            <artifactId>seata-spring-boot-starter</artifactId>
        </exclusion>
    </exclusions>
</dependency>
<!-- Use seata-spring-boot-starter at the same version as the server -->
<dependency>
    <groupId>io.seata</groupId>
    <artifactId>seata-spring-boot-starter</artifactId>
    <version>1.3.0</version>
</dependency>
```

- Both service A and service B also need the following configuration (application.yml):

```yaml
server:
  port: 9003

spring:
  application:
    name: service-A
# The settings above are only an example; adjust them for service A and service B

#------------------------- Seata settings -------------------------
seata:
  application-id: ${spring.application.name}
  enabled: true
  tx-service-group: my_test_tx_group # from service.vgroupMapping.my_test_tx_group=default in config.txt
  registry:
    type: nacos
    nacos:
      server-addr: 192.168.31.89:8848 # Nacos address
      namespace: e316e253-eb41-4b29-a796-40bad21a02fb # ID of the seata namespace created in Nacos
      group: SEATA_GROUP # group the Seata configs were pushed to
      cluster: default
      username: nacos
      password: nacos
  config:
    type: nacos
    nacos:
      server-addr: 192.168.31.89:8848 # Nacos address
      namespace: e316e253-eb41-4b29-a796-40bad21a02fb # ID of the seata namespace created in Nacos
      group: SEATA_GROUP # group the Seata configs were pushed to
      username: nacos
      password: nacos
  service:
    vgroup-mapping:
      my_test_tx_group: default # from service.vgroupMapping.my_test_tx_group=default in config.txt
# Note: my_test_tx_group here must match seata.tx-service-group above and the entry in config.txt
```
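The names in this configuration have to line up with the server side. As I understand it, the client maps `tx-service-group` to a cluster name through `service.vgroupMapping`, then asks Nacos for instances of the server's application (`seata-server` by default) in that group and cluster. The Java 9+ sketch below is a simplified model of that lookup chain, not Seata's actual code; the class and method names are hypothetical and the namespace is left out for brevity. It shows how one mismatched name leaves the client with no TC address.

```java
import java.util.List;
import java.util.Map;

/**
 * Simplified, hypothetical model of how a Seata client locates the TC:
 *   tx-service-group --(vgroupMapping)--> cluster
 *   (application, group, cluster) --(registry)--> TC addresses
 */
public class TcLookupModel {

    /** Registry key built from the three values that must match what the server registered. */
    public static String key(String application, String group, String cluster) {
        return application + "|" + group + "|" + cluster;
    }

    /** Returns TC addresses, or an empty list if any link in the chain does not match. */
    public static List<String> resolve(String txServiceGroup,
                                       Map<String, String> vgroupMapping,
                                       String application,
                                       String group,
                                       Map<String, List<String>> registry) {
        String cluster = vgroupMapping.get(txServiceGroup);
        if (cluster == null) {
            return List.of(); // tx-service-group has no vgroupMapping entry
        }
        return registry.getOrDefault(key(application, group, cluster), List.of());
    }

    public static void main(String[] args) {
        // What the server registers (registry.conf + SEATA_IP): seata-server / SEATA_GROUP / default
        Map<String, List<String>> registry = Map.of(
                key("seata-server", "SEATA_GROUP", "default"), List.of("192.168.31.89:8091"));
        Map<String, String> mapping = Map.of("my_test_tx_group", "default");

        System.out.println(resolve("my_test_tx_group", mapping, "seata-server", "SEATA_GROUP", registry)); // [192.168.31.89:8091]
        // A different application name finds nothing for cluster "default"
        System.out.println(resolve("my_test_tx_group", mapping, "service-seata", "SEATA_GROUP", registry)); // []
    }
}
```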

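- Usage: add the `@GlobalTransactional(rollbackFor = Exception.class)` annotation to the method in service A that calls service B.

`rollbackFor` specifies which exceptions thrown from the annotated method cause the global transaction to roll back. `Exception.class` means that any `Exception`, including all of its subclasses, triggers a rollback.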
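The matching rule behind `rollbackFor` can be pictured as an `isInstance` check against each listed class. The sketch below is a simplified, hypothetical model, not Seata's implementation (the real annotation also has attributes such as `noRollbackFor`). It shows that an `ArithmeticException` like the one from `10 / 0` in service B is covered by `Exception.class`, while an `Error` is not. Note that what service A actually sees from a failed Feign call is typically a `FeignException`, which is also a `RuntimeException` subclass.

```java
import java.util.List;

/** Simplified, hypothetical model of a rollbackFor check; not Seata's implementation. */
public class RollbackForModel {

    /** True if the thrown exception is an instance of any class listed in rollbackFor. */
    public static boolean shouldRollback(Throwable thrown, List<Class<? extends Throwable>> rollbackFor) {
        for (Class<? extends Throwable> type : rollbackFor) {
            // isInstance also matches subclasses, e.g. ArithmeticException for Exception.class
            if (type.isInstance(thrown)) {
                return true;
            }
        }
        return false;
    }

    public static void main(String[] args) {
        List<Class<? extends Throwable>> rollbackFor = List.of(Exception.class);
        System.out.println(shouldRollback(new ArithmeticException("/ by zero"), rollbackFor)); // true
        System.out.println(shouldRollback(new Error("not an Exception"), rollbackFor));        // false
    }
}
```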

Service A (creates the order and calls service B):

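```java
import io.seata.spring.annotation.GlobalTransactional;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.stereotype.Service;

import javax.annotation.Resource;

// Class name is illustrative; OrderMapper and StockFeignService are the project's own types
@Service
public class OrderService {

    /**
     * Mapper that inserts orders
     */
    @Autowired
    private OrderMapper mapper;

    /**
     * Feign client for remote calls to the stock microservice
     */
    @Resource
    private StockFeignService service;

    // Starts a global transaction: any Exception rolls back the order insert and the remote stock update
    @GlobalTransactional(rollbackFor = Exception.class)
    public void create() {
        // Simulate inserting an order record into the order service's database
        if (mapper.insert("order creation parameters") > 0) {
            // Simulate calling service B to reduce stock
            service.reduceStock("stock reduction parameters");
        }
    }
}
```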

Service B (handles the call and reduces stock):

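```java
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.stereotype.Service;

// Class name is illustrative; StockMapper is the project's own type
@Service
public class StockService {

    /**
     * Mapper that reduces stock
     */
    @Autowired
    private StockMapper mapper;

    public void reduceStock(String params) {
        // Simulate reducing the product's stock in the stock microservice
        if (mapper.update(params) > 0) {
            // Simulate a business error after both records have been written:
            // 10 / 0 throws ArithmeticException, so A and B should both roll back
            int a = 10 / 0;
        }
    }
}
```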

- Result: when order service A calls stock service B and B throws an exception, the transactions of both A and B should be rolled back.

Problems Encountered

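- Seata was registered in Nacos and the configuration had been pushed successfully, but at runtime the service call failed with this error:

```text
no available service 'default' found, please make sure registry config correct
```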

The following article may help:

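In my case the cause was the following extra setting; removing it fixed the error:

```yaml
seata:
  registry:
    nacos:
      application: service-seata
```

`seata.registry.nacos.application` is the service name the client looks up in Nacos. The server registers itself as `seata-server` (the `application` value in registry.conf), and the client uses `seata-server` by default, so overriding it with `service-seata` meant the lookup found no instance for the `default` cluster.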
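To see directly what the client would find, you can query the Nacos naming Open API, `GET /nacos/v1/ns/instance/list`, with the service name, group, namespace ID and cluster the client uses. The Java 11+ sketch below builds that URL and prints the raw JSON response; an empty `hosts` list means no `seata-server` instance is visible under those values. The address and namespace ID are this article's sample values, the class name is hypothetical, and Nacos authentication (an `accessToken`, needed only if auth is enabled on the server) is not handled.

```java
import java.net.URI;
import java.net.URLEncoder;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;

/** Hypothetical diagnostic: list the seata-server instances Nacos knows about. */
public class SeataInstanceCheck {

    private static String enc(String s) {
        return URLEncoder.encode(s, StandardCharsets.UTF_8);
    }

    /** Builds the Nacos v1 instance-list URL for one service, group, namespace and cluster. */
    public static String instanceListUrl(String nacosAddr, String serviceName, String groupName,
                                         String namespaceId, String cluster) {
        return "http://" + nacosAddr + "/nacos/v1/ns/instance/list"
                + "?serviceName=" + enc(serviceName)
                + "&groupName=" + enc(groupName)
                + "&namespaceId=" + enc(namespaceId)
                + "&clusters=" + enc(cluster);
    }

    public static void main(String[] args) throws Exception {
        // Values from this article's registry.conf; use the ones your client is configured with
        String url = instanceListUrl("192.168.31.89:8848", "seata-server", "SEATA_GROUP",
                "e316e253-eb41-4b29-a796-40bad21a02fb", "default");
        HttpResponse<String> resp = HttpClient.newHttpClient().send(
                HttpRequest.newBuilder(URI.create(url)).GET().build(),
                HttpResponse.BodyHandlers.ofString());
        // Look at the "hosts" array: empty means the client would find no TC either
        System.out.println(resp.statusCode() + " " + resp.body());
    }
}
```

Running it once with `seata-server` and once with the misconfigured name (`service-seata`) should make the difference visible.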
