PyTorch Toolbox

Loading data

Dataset

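Provides a way to fetch the data and its labels. It answers two questions:

How do we get each sample and its label? (`__getitem__`)

How many samples are there in total? (`__len__`)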

```python
import os

from PIL import Image
from torch.utils.data import Dataset


class Mydataset(Dataset):
    # __init__ stores the list of image file names (img_path);
    # __getitem__ then uses it to open one image at a time
    def __init__(self, root_dir, label_dir):
        self.root_dir = root_dir
        self.label_dir = label_dir
        self.path = os.path.join(self.root_dir, self.label_dir)
        self.img_path = os.listdir(self.path)

    def __getitem__(self, idx):
        img_name = self.img_path[idx]
        img_item_path = os.path.join(self.root_dir, self.label_dir, img_name)
        img = Image.open(img_item_path)
        label = self.label_dir  # the folder name ("ants" or "bees") serves as the label
        return img, label

    def __len__(self):
        return len(self.img_path)


root_dir = 'E:\\ml\\hymenoptera_data\\train'
ants_dir = 'ants'
bees_dir = 'bees'
ants_dataset = Mydataset(root_dir, ants_dir)
bees_dataset = Mydataset(root_dir, bees_dir)

img, label = bees_dataset[1]
```
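To see `__getitem__` and `__len__` at work without the hymenoptera images on disk, here is a minimal sketch built on a hypothetical in-memory `ToyDataset` (not part of the original notes). It also shows that adding two datasets with `+` concatenates them into a `torch.utils.data.ConcatDataset`, which is one way to merge an ants set and a bees set into a single training set.

```python
import torch
from torch.utils.data import Dataset


class ToyDataset(Dataset):
    """Hypothetical in-memory dataset: every sample shares one label,
    just as Mydataset uses the folder name as the label."""

    def __init__(self, n, label):
        # n fake 3x2x2 "images", each filled with its own index
        self.items = [torch.full((3, 2, 2), float(i)) for i in range(n)]
        self.label = label

    def __getitem__(self, idx):
        return self.items[idx], self.label

    def __len__(self):
        return len(self.items)


ants = ToyDataset(3, "ants")
bees = ToyDataset(2, "bees")

train = ants + bees   # Dataset.__add__ returns a ConcatDataset
print(len(train))     # 5
print(train[3][1])    # bees (index 3 is the first bee sample)
```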

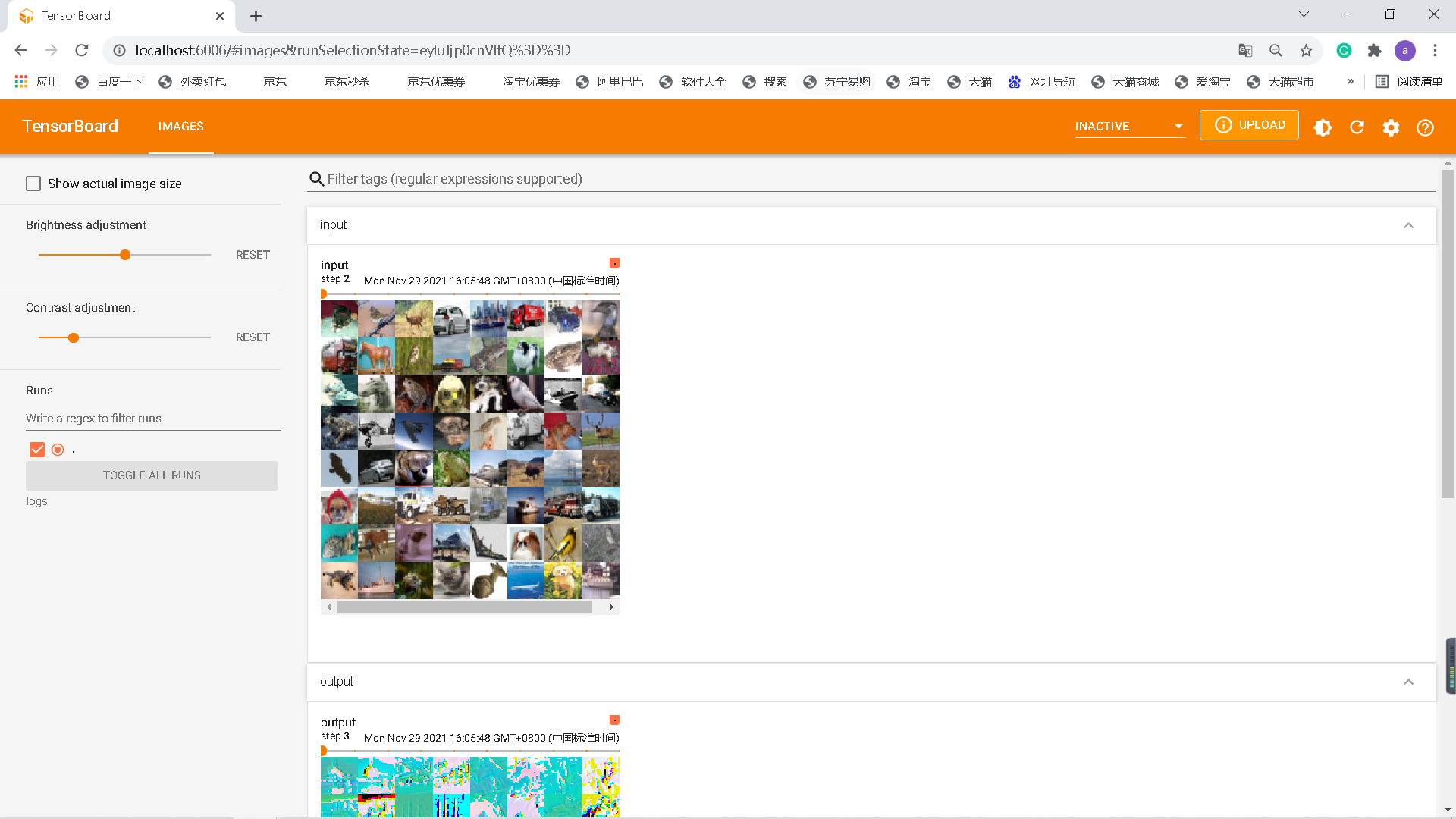

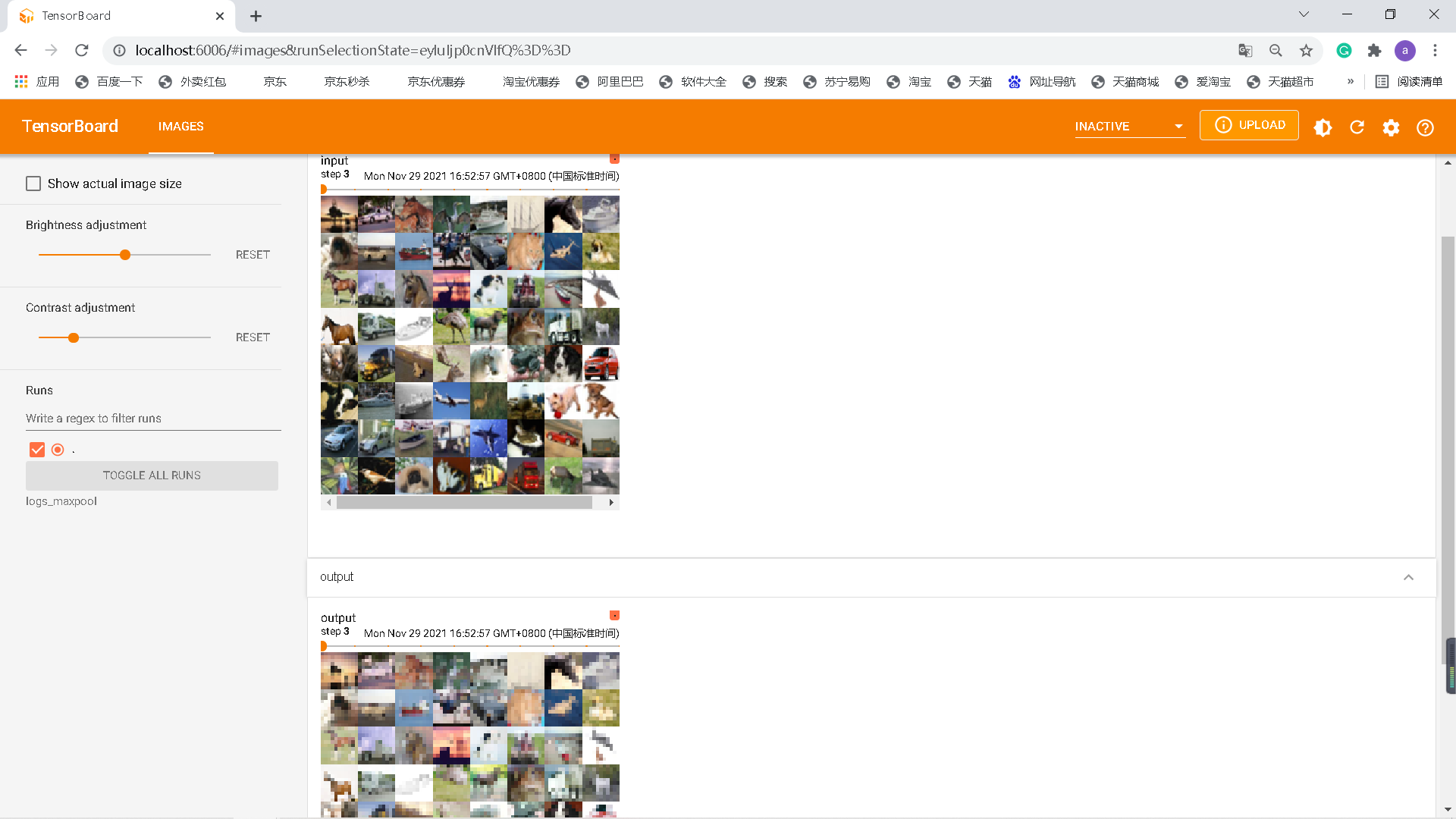

TensorBoard

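```python
from tensorboardX import SummaryWriter

writer = SummaryWriter("../logs")
# imgs / output: image batches shaped [N, C, H, W]; step: the global step (x-axis)
writer.add_images("input", imgs, step)
writer.add_images("output", output, step)
writer.close()
```

To open it, run this from the directory that contains `logs`:

```shell
tensorboard --logdir=logs
```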

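Image transforms with torchvision.transforms

ToTensor: converts a PIL Image (or a numpy.ndarray) into a tensor. An 8-bit HxWxC image with values 0 to 255 becomes a CxHxW float tensor with values in [0, 1].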

```python
import torchvision.transforms as transforms
from PIL import Image

# How to use transforms: converting an image to the tensor data type
img_path = 'E:\\ml\\hymenoptera_data\\train\\ants\\0013035.jpg'
img = Image.open(img_path)
print(img)

# Create a concrete tool, then call it on the input: result = tool(input)
tensor_trans = transforms.ToTensor()
tensor_img = tensor_trans(img)
print(tensor_img)
```

Normalize

```python
# Normalize takes two arguments: the per-channel 'mean' and 'std'
trans_norm = transforms.Normalize([0.5, 0.5, 0.5], [0.5, 0.5, 0.5])
img_norm = trans_norm(tensor_img)
print(img_norm)
```
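Normalize applies `output[c] = (input[c] - mean[c]) / std[c]` to each channel `c`. A plain-tensor sketch of that formula (the small input tensor is made up for illustration) shows why mean = std = 0.5 maps ToTensor's [0, 1] range onto [-1, 1]:

```python
import torch

# A made-up [3, 1, 2] "image" with values in [0, 1], as produced by ToTensor
x = torch.tensor([[[0.0, 0.25]], [[0.5, 0.75]], [[1.0, 1.0]]])

# Per-channel mean and std, reshaped to [3, 1, 1] so they broadcast over H and W
mean = torch.tensor([0.5, 0.5, 0.5]).view(3, 1, 1)
std = torch.tensor([0.5, 0.5, 0.5]).view(3, 1, 1)

manual = (x - mean) / std
print(manual.flatten().tolist())   # [-1.0, -0.5, 0.0, 0.5, 1.0, 1.0]
```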

Resize

```python
trans_resize = transforms.Resize((128, 128))
# tensor_img -> Normalize -> Resize -> img_resize (recent torchvision versions accept tensors here)
img_resize = trans_resize(img_norm)
print(img_resize)
```

Compose

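DataLoader

```python
from torch.utils.data import DataLoader

# dataset: any Dataset whose samples are tensors, e.g. the CIFAR10 set used below
dataloader = DataLoader(dataset, batch_size=64)

for data in dataloader:
    imgs, targets = data  # imgs: [64, C, H, W], targets: [64]
```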

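DataLoader packs the samples of a Dataset into batches (here 64 per batch), in the form the network that follows expects.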
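A small sketch of the batching behaviour, using a hypothetical `TensorDataset` of 10 random 3x4x4 samples instead of CIFAR10: with `batch_size=4` the last batch holds only the 2 leftover samples, unless `drop_last=True` discards it.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

# 10 hypothetical samples: random 3x4x4 "images" with labels 0..9
images = torch.randn(10, 3, 4, 4)
labels = torch.arange(10)
dataset = TensorDataset(images, labels)

dataloader = DataLoader(dataset, batch_size=4)   # shuffle=False by default
for imgs, targets in dataloader:
    print(imgs.shape, targets.tolist())
# torch.Size([4, 3, 4, 4]) [0, 1, 2, 3]
# torch.Size([4, 3, 4, 4]) [4, 5, 6, 7]
# torch.Size([2, 3, 4, 4]) [8, 9]

print(len(DataLoader(dataset, batch_size=4, drop_last=True)))   # 2
```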

Neural networks: nn.Module

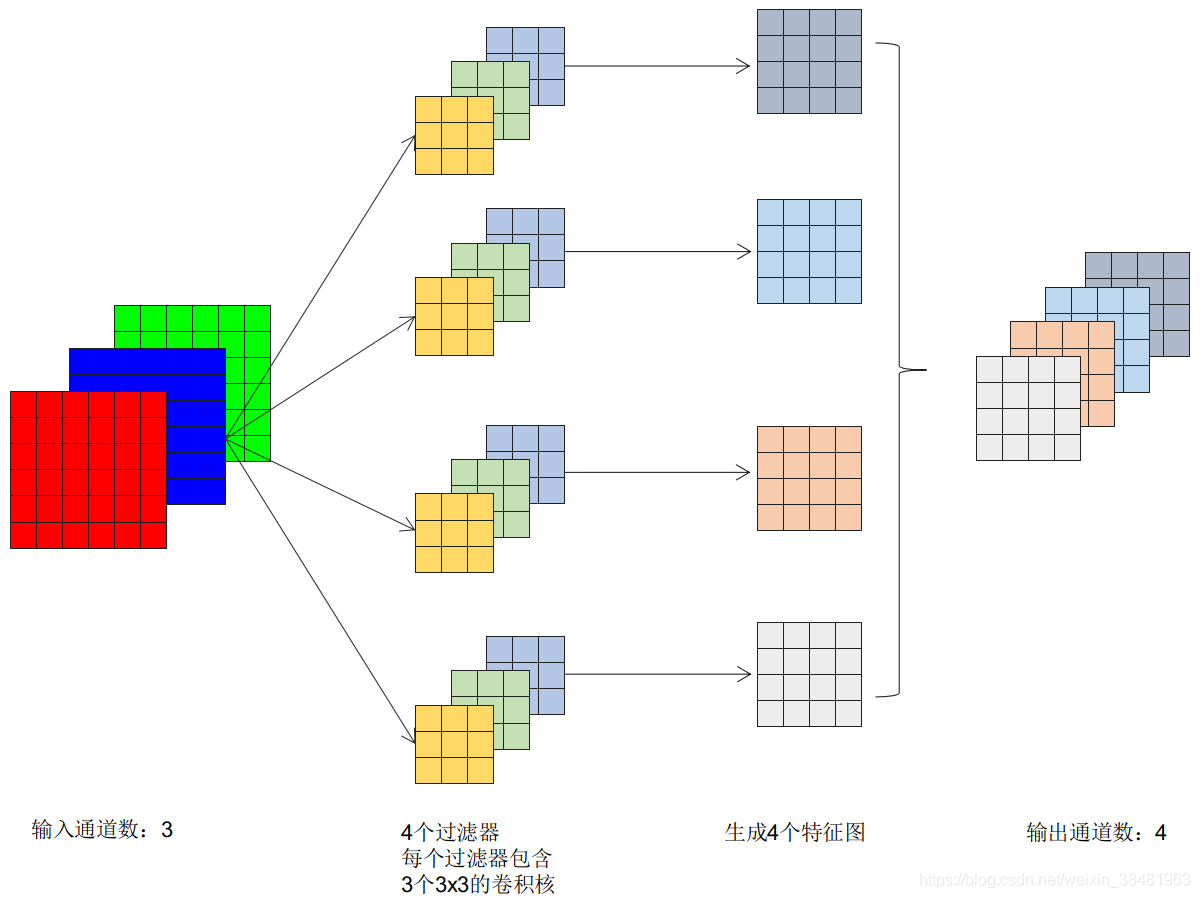

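Understanding channels

- For the input image itself: in_channels depends on the image type. A color (RGB) image has 3 channels; a grayscale image has 1.
- After a convolution: out_channels is the number of filters. Setting out_channels means setting how many filters the layer has.
- For the second and later convolution layers: in_channels is the previous layer's out_channels, and out_channels is again the number of filters.

In point 2 I write "filter" rather than "kernel", which differs slightly from the original author's wording, because I think "filter" is the more accurate term here. For the difference between the two, see my article "The difference between a convolution kernel and a filter".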

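For point 1, consider the following figure:

Here the input has 3 channels. Each channel is convolved with its own kernel, and the three results are summed into one output feature map. There are 4 filters, so there are 4 output feature maps, i.e. 4 output channels.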
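The 3-in, 4-out picture can be checked numerically. In this sketch the 8x8 input size and 3x3 kernel are arbitrary choices: the layer's weight has shape `[out_channels, in_channels, kH, kW]`, i.e. 4 filters each holding one kernel per input channel, and output channel 0 equals the sum of the three per-channel convolutions with filter 0.

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)
x = torch.randn(1, 3, 8, 8)   # one input with 3 channels
conv = torch.nn.Conv2d(in_channels=3, out_channels=4, kernel_size=3, bias=False)

# 4 filters, each made of 3 kernels (one per input channel), each kernel 3x3
print(conv.weight.shape)      # torch.Size([4, 3, 3, 3])

y = conv(x)
print(y.shape)                # torch.Size([1, 4, 6, 6])

# Rebuild output channel 0 by hand: convolve each input channel
# with the matching kernel of filter 0, then add the results
manual = sum(
    F.conv2d(x[:, c:c + 1], conv.weight[0:1, c:c + 1])
    for c in range(3)
)
print(torch.allclose(manual, y[:, 0:1], atol=1e-5))   # True
```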

```python
import torch
from torch import nn


class Myren(nn.Module):
    def __init__(self):
        super().__init__()

    def forward(self, input):
        output = input + 1
        return output


# Our own network Myren: its forward method simply adds 1 to the input
myren = Myren()
x = torch.tensor(1.0)
output = myren(x)  # calling the module runs forward()
print(output)
```

Output:

```text
tensor(2.)
```

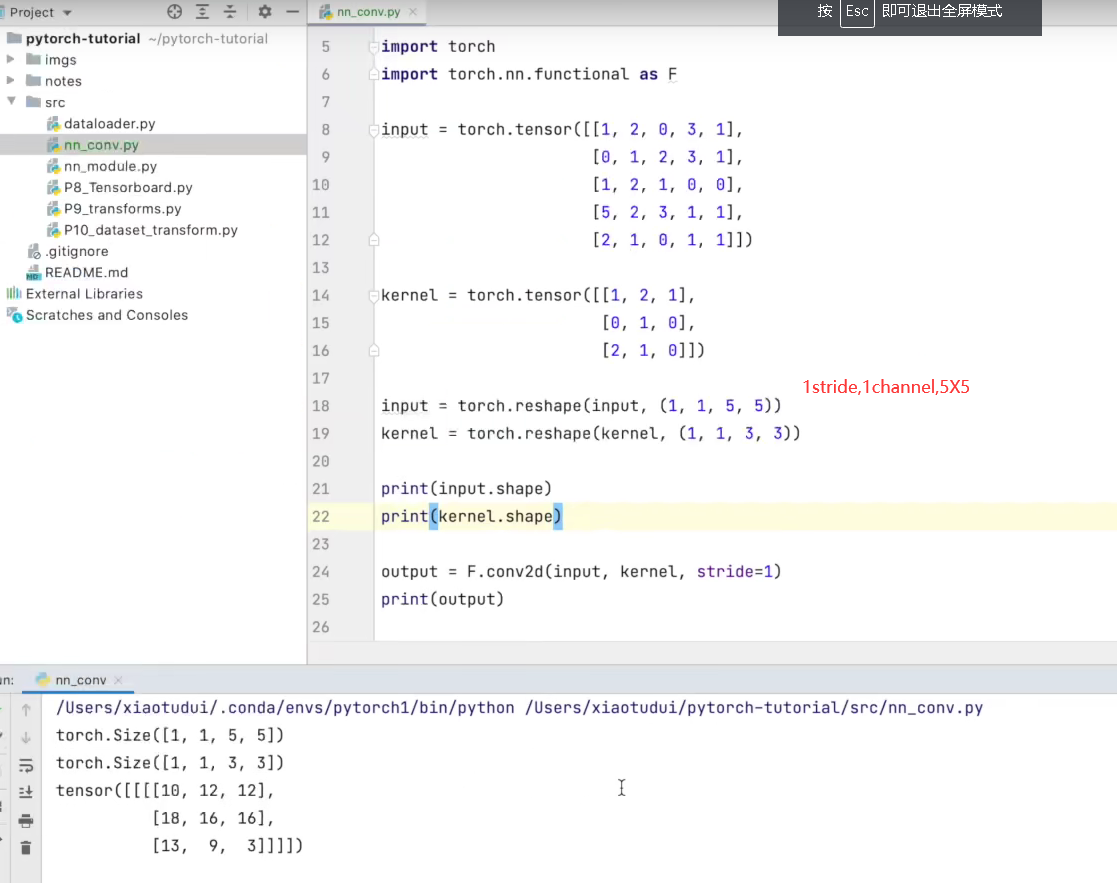

Conv2d

Parameters

- in_channels (int) – Number of channels in the input image
- out_channels (int) – Number of channels produced by the convolution
- kernel_size (int or tuple) – Size of the convolving kernel
- stride (int or tuple, optional) – Stride of the convolution. Default: 1
- padding (int, tuple or str, optional) – Padding added to all four sides of the input. Default: 0
- padding_mode (str, optional) – 'zeros', 'reflect', 'replicate' or 'circular'. Default: 'zeros'
- dilation (int or tuple, optional) – Spacing between kernel elements. Default: 1
- groups (int, optional) – Number of blocked connections from input channels to output channels. Default: 1
- bias (bool, optional) – If True, adds a learnable bias to the output. Default: True

```python
import torch

x = torch.randn(1, 1, 28, 28)
# Input: [batch_size, channels, height_1, width_1]
#   batch_size: number of samples in the batch -> 1
#   channels:   number of channels, i.e. the depth of the current layer -> 1 (a grayscale image)
#   height_1:   image height -> 28
#   width_1:    image width -> 28

conv = torch.nn.Conv2d(1, 25, (3, 3), 1)
# in_channels:  must match the input's channels -> 1
# out_channels: depth of the output -> 25, i.e. 25 filters
# height_2:     kernel height -> 3
# width_2:      kernel width -> 3
# stride:       the kernel moves 1 pixel per step

ans = conv(x)
print(len(ans))
print(len(ans[0]))
print(len(ans[0][0]))
print(len(ans[0][0][0]))
```

Output:

```text
1
25
26
26
```
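The 26 comes from the output-size formula in the PyTorch Conv2d documentation, `H_out = floor((H_in + 2*padding - dilation*(kernel_size - 1) - 1) / stride + 1)`; here (28 + 0 - 2 - 1) / 1 + 1 = 26. A small sketch with a hypothetical helper `conv2d_out_size`, cross-checked against a real layer:

```python
import torch


def conv2d_out_size(size, kernel_size, stride=1, padding=0, dilation=1):
    """Hypothetical helper: Conv2d output length along one spatial dimension."""
    return (size + 2 * padding - dilation * (kernel_size - 1) - 1) // stride + 1


# The example above: 28x28 input, 3x3 kernel, stride 1, no padding -> 26
print(conv2d_out_size(28, 3))              # 26

# A 5x5 kernel with padding=2 (used in the CIFAR10 networks below) keeps 32 -> 32
print(conv2d_out_size(32, 5, padding=2))   # 32

# Cross-check against an actual layer with stride 2 and padding 1
conv = torch.nn.Conv2d(1, 25, 3, stride=2, padding=1)
out = conv(torch.randn(1, 1, 28, 28))
print(out.shape[-1], conv2d_out_size(28, 3, stride=2, padding=1))   # 14 14
```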

Test

```python
import torch
import torchvision
from torch import nn
from torch.nn import Conv2d
from torch.utils.data import DataLoader
from tensorboardX import SummaryWriter

dataset = torchvision.datasets.CIFAR10('../data', train=False,
                                       transform=torchvision.transforms.ToTensor(), download=True)
dataloader = DataLoader(dataset, batch_size=64)


class Myren(nn.Module):
    def __init__(self):
        super(Myren, self).__init__()
        # Color image: 3 input channels, 6 filters, 3x3 kernel, stride 1, padding 0
        self.conv1 = Conv2d(3, 6, 3, 1, 0)

    def forward(self, x):
        x = self.conv1(x)
        return x


myren = Myren()
print(myren)

step = 0
writer = SummaryWriter("../logs")
for data in dataloader:
    imgs, targets = data
    output = myren(imgs)
    print(imgs.shape)     # torch.Size([64, 3, 32, 32])
    print(output.shape)   # torch.Size([64, 6, 30, 30])
    writer.add_images("input", imgs, step)
    # add_images cannot display 6-channel images, so fold the 6 channels into
    # twice as many 3-channel images: [64, 6, 30, 30] -> [128, 3, 30, 30]
    output = torch.reshape(output, (-1, 3, 30, 30))
    writer.add_images("output", output, step)
    step = step + 1

writer.close()
# Open with: tensorboard --logdir=logs
```

Max Pooling

Parameters

- kernel_size – the size of the window to take a max over (the pooling window size)
- stride – the stride of the window. Default value is kernel_size
- padding – implicit negative-infinity padding to be added on both sides
- dilation – a parameter that controls the stride of elements in the window
- return_indices – if True, will return the max indices along with the outputs. Useful for torch.nn.MaxUnpool2d later
- ceil_mode – when True, will use ceil instead of floor to compute the output shape, i.e. a window that runs past the border still produces an output

```python
import torch
from torch import nn
from torch.nn import MaxPool2d

input = torch.tensor([[1, 2, 0, 3, 1],
                      [0, 1, 2, 3, 1],
                      [1, 2, 1, 0, 0],
                      [5, 2, 3, 1, 1],
                      [2, 1, 0, 1, 1]], dtype=torch.float32)
input = torch.reshape(input, (-1, 1, 5, 5))
print(input.shape)


class Myren(nn.Module):
    def __init__(self):
        super().__init__()
        self.maxpool1 = MaxPool2d(kernel_size=3, ceil_mode=True)

    def forward(self, x):
        output = self.maxpool1(x)
        return output


myren = Myren()
output = myren(input)
print(output)
```
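What `ceil_mode` changes on the 5x5 input above: stride defaults to kernel_size = 3, so windows start at rows/columns 0 and 3. The second window only partly fits, so with `ceil_mode=False` it is dropped and a single output remains, while `ceil_mode=True` keeps it and takes the max over the part that exists. A sketch comparing both settings:

```python
import torch
from torch import nn

x = torch.tensor([[1, 2, 0, 3, 1],
                  [0, 1, 2, 3, 1],
                  [1, 2, 1, 0, 0],
                  [5, 2, 3, 1, 1],
                  [2, 1, 0, 1, 1]], dtype=torch.float32).reshape(1, 1, 5, 5)

ceil_out = nn.MaxPool2d(kernel_size=3, ceil_mode=True)(x)
floor_out = nn.MaxPool2d(kernel_size=3, ceil_mode=False)(x)

print(ceil_out.squeeze().tolist())   # [[2.0, 3.0], [5.0, 1.0]]
print(floor_out.shape)               # torch.Size([1, 1, 1, 1])
print(floor_out.item())              # 2.0
```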

Test

```python
import torchvision
from torch import nn
from torch.nn import MaxPool2d
from torch.utils.data import DataLoader
from tensorboardX import SummaryWriter

dataset = torchvision.datasets.CIFAR10('../data', train=False,
                                       transform=torchvision.transforms.ToTensor(), download=True)
dataloader = DataLoader(dataset, batch_size=64)


class Myren(nn.Module):
    def __init__(self):
        super().__init__()
        self.maxpool1 = MaxPool2d(kernel_size=3, ceil_mode=True)

    def forward(self, x):
        output = self.maxpool1(x)
        return output


myren = Myren()
writer = SummaryWriter('../logs_maxpool')
step = 0
for data in dataloader:
    imgs, targets = data
    output = myren(imgs)
    # Pooling keeps the 3 color channels ([64, 3, 32, 32] -> [64, 3, 11, 11]),
    # so the output can be displayed directly, no reshape needed
    writer.add_images('input', imgs, step)
    writer.add_images('output', output, step)
    step = step + 1

writer.close()
```

Non-linear activations

ReLU

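ReLU(inplace=False)

inplace: if True, the result overwrites the input tensor instead of being written to a new one.

Suppose input = -1:

After ReLU(inplace=True)(input), input itself becomes 0.

With output = ReLU(inplace=False)(input), output = 0 and the original input keeps its value -1.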

```python
import torch
from torch import nn
from torch.nn import ReLU

input = torch.tensor([[1, -0.5],
                      [-1, 3]])
input = torch.reshape(input, (-1, 1, 2, 2))


class Myren(nn.Module):
    def __init__(self):
        super().__init__()
        self.relu1 = ReLU()  # inplace=False by default

    def forward(self, input):
        output = self.relu1(input)
        return output


myren = Myren()
print(myren(input))
print(input)  # unchanged, because inplace=False
```

Output:

```text
tensor([[[[1., 0.],
          [0., 3.]]]])
tensor([[[[ 1.0000, -0.5000],
          [-1.0000,  3.0000]]]])
```
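The `inplace` flag described above can be seen directly on two small made-up tensors: the non-in-place call leaves its input alone, while the in-place call overwrites it.

```python
import torch
from torch import nn

x = torch.tensor([-1.0, 2.0])
out = nn.ReLU(inplace=False)(x)
print(out)   # negative value clipped to 0
print(x)     # still -1 and 2: the input is untouched

y = torch.tensor([-1.0, 2.0])
out2 = nn.ReLU(inplace=True)(y)
print(y)     # now 0 and 2: the input itself was overwritten
```

In-place activation saves a little memory, at the cost of losing the original values.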

sigmoid

```python
import torchvision
from torch import nn
from torch.nn import ReLU, Sigmoid
from torch.utils.data import DataLoader
from tensorboardX import SummaryWriter

dataset = torchvision.datasets.CIFAR10('../data', train=False, download=True,
                                       transform=torchvision.transforms.ToTensor())
dataloader = DataLoader(dataset, batch_size=64)
writer = SummaryWriter('../logs_relu')


class Myren(nn.Module):
    def __init__(self):
        super().__init__()
        self.relu1 = ReLU()
        self.sigmoid1 = Sigmoid()

    def forward(self, input):
        output = self.sigmoid1(input)  # swap in self.relu1 to view the ReLU result instead
        return output


myren = Myren()
step = 0
for data in dataloader:
    imgs, target = data
    writer.add_images("input", imgs, step)
    output = myren(imgs)
    writer.add_images('output', output, step)
    step = step + 1

writer.close()
```
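Sigmoid maps every value into (0, 1) through `sigmoid(x) = 1 / (1 + exp(-x))`, which is why the images above come out washed toward mid-gray. A short check of that formula against `nn.Sigmoid` on a few arbitrary values:

```python
import torch
from torch import nn

x = torch.tensor([-2.0, 0.0, 2.0])
sigmoid = nn.Sigmoid()

# Element-wise formula: 1 / (1 + e^(-x))
manual = 1 / (1 + torch.exp(-x))
print(torch.allclose(sigmoid(x), manual))   # True
print(sigmoid(torch.tensor(0.0)))           # tensor(0.5000)
```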

Linear layer

Linear

```python
import torch
import torchvision
from torch import nn
from torch.nn import Linear
from torch.utils.data import DataLoader

dataset = torchvision.datasets.CIFAR10('../data', train=False, download=True,
                                       transform=torchvision.transforms.ToTensor())
# drop_last=True: the 10000 test images leave a final batch of 16, whose flattened
# size would no longer be 196608 = 64 * 3 * 32 * 32
dataloader = DataLoader(dataset, batch_size=64, drop_last=True)


class Myren(nn.Module):
    def __init__(self):
        super().__init__()
        self.linear1 = Linear(196608, 10)

    def forward(self, input):
        output = self.linear1(input)
        return output


myren = Myren()
for data in dataloader:
    imgs, targets = data
    print(imgs.shape)                             # torch.Size([64, 3, 32, 32])
    output = torch.reshape(imgs, (1, 1, 1, -1))   # torch.Size([1, 1, 1, 196608])
    # Alternative: output = torch.flatten(imgs)   -> torch.Size([196608])
    print(output.shape)
    output = myren(output)
    print(output.shape)                           # torch.Size([1, 1, 1, 10])
```
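The example above flattens the whole batch into one vector, so the Linear layer is tied to exactly 64 images. A sketch contrasting `torch.flatten` with `nn.Flatten`, which keeps dimension 0 (the batch) by default, on dummy all-ones batches:

```python
import torch
from torch import nn

imgs = torch.ones(64, 3, 32, 32)

print(torch.flatten(imgs).shape)   # torch.Size([196608]): batch dimension included
print(nn.Flatten()(imgs).shape)    # torch.Size([64, 3072]): one row per image

# Flattening per image lets one Linear layer handle any batch size,
# including a smaller final batch
linear = nn.Linear(3 * 32 * 32, 10)
print(linear(nn.Flatten()(imgs)).shape)                        # torch.Size([64, 10])
print(linear(nn.Flatten()(torch.ones(16, 3, 32, 32))).shape)   # torch.Size([16, 10])
```

This per-image form is what the Sequential networks below use.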

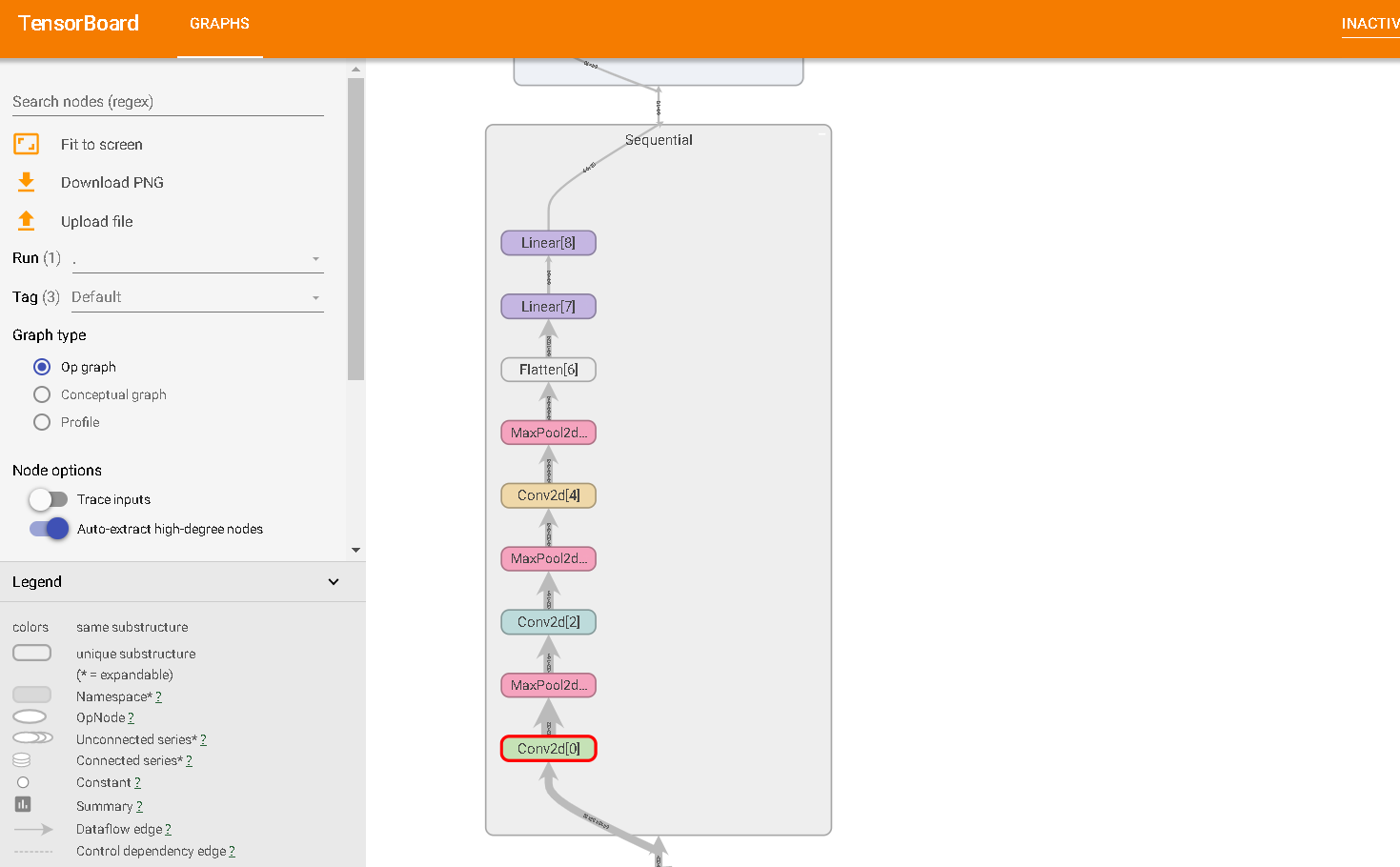

Sequential

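Sequential chains a series of layers into one module and runs them in order. First, the network written out layer by layer without it: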

```python
import torch
from torch import nn


class Myren(nn.Module):
    def __init__(self):
        super().__init__()
        self.conv1 = nn.Conv2d(3, 32, (5, 5), padding=2)
        self.maxpool1 = nn.MaxPool2d(kernel_size=2)
        self.conv2 = nn.Conv2d(32, 32, (5, 5), padding=2)
        self.maxpool2 = nn.MaxPool2d(kernel_size=2)
        self.conv3 = nn.Conv2d(32, 64, (5, 5), padding=2)
        self.maxpool3 = nn.MaxPool2d(kernel_size=2)
        self.flatten = nn.Flatten()
        self.linear1 = nn.Linear(1024, 64)   # 64 channels * 4 * 4 after three poolings
        # The last layer outputs scores for the 10 classes
        self.linear2 = nn.Linear(64, 10)

    def forward(self, input):
        input = self.conv1(input)
        input = self.maxpool1(input)
        input = self.conv2(input)
        input = self.maxpool2(input)
        input = self.conv3(input)
        input = self.maxpool3(input)
        input = self.flatten(input)
        input = self.linear1(input)
        output = self.linear2(input)
        return output


myren = Myren()
input = torch.ones((64, 3, 32, 32))
output = myren(input)
print(output.shape)
```

Output:

```text
torch.Size([64, 10])
```

Using Sequential

```python
import torch
from torch import nn


class Myren(nn.Module):
    def __init__(self):
        super().__init__()
        self.model1 = nn.Sequential(
            nn.Conv2d(3, 32, (5, 5), padding=2),
            nn.MaxPool2d(kernel_size=2),
            nn.Conv2d(32, 32, (5, 5), padding=2),
            nn.MaxPool2d(kernel_size=2),
            nn.Conv2d(32, 64, (5, 5), padding=2),
            nn.MaxPool2d(kernel_size=2),
            nn.Flatten(),
            nn.Linear(1024, 64),
            nn.Linear(64, 10))  # in_features must match the previous layer's 64 outputs

    def forward(self, input):
        output = self.model1(input)
        return output


myren = Myren()
input = torch.ones((64, 3, 32, 32))
output = myren(input)
print(output.shape)
```

Output:

```text
torch.Size([64, 10])
```
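Where does `nn.Linear(1024, 64)` get its 1024? Because `nn.Sequential` is iterable, one can push a dummy batch through it layer by layer and print each intermediate shape. A sketch using the same layers as above:

```python
import torch
from torch import nn

model = nn.Sequential(
    nn.Conv2d(3, 32, 5, padding=2), nn.MaxPool2d(2),
    nn.Conv2d(32, 32, 5, padding=2), nn.MaxPool2d(2),
    nn.Conv2d(32, 64, 5, padding=2), nn.MaxPool2d(2),
    nn.Flatten(),
    nn.Linear(1024, 64),
    nn.Linear(64, 10),
)

x = torch.ones(64, 3, 32, 32)
for layer in model:   # the layers run in the order they were listed
    x = layer(x)
    print(type(layer).__name__, tuple(x.shape))
# The 5x5 convolutions with padding=2 keep the size; each pooling halves it:
# 32 -> 16 -> 8 -> 4, so Flatten yields 64 * 4 * 4 = 1024 features
```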

Visualizing the model in TensorBoard

```python
import torch
from torch import nn
from tensorboardX import SummaryWriter


class Myren(nn.Module):
    def __init__(self):
        super().__init__()
        self.model1 = nn.Sequential(
            nn.Conv2d(3, 32, (5, 5), padding=2),
            nn.MaxPool2d(kernel_size=2),
            nn.Conv2d(32, 32, (5, 5), padding=2),
            nn.MaxPool2d(kernel_size=2),
            nn.Conv2d(32, 64, (5, 5), padding=2),
            nn.MaxPool2d(kernel_size=2),
            nn.Flatten(),
            nn.Linear(1024, 64),
            nn.Linear(64, 10))

    def forward(self, input):
        output = self.model1(input)
        return output


myren = Myren()
input = torch.ones((64, 3, 32, 32))
output = myren(input)
print(output.shape)

# add_graph runs the model on the sample input and records its computation graph
writer = SummaryWriter("../logs_seq")
writer.add_graph(myren.model1, input)
writer.close()
```

Loss Function

nn.CrossEntropyLoss()

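It applies (log-)softmax to the raw scores internally and then computes the cross-entropy, so the network's output should be passed in without a softmax of its own.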

```python
import torch
import torch.nn as nn
from torch.nn import L1Loss

inputs = torch.tensor([1, 2, 3], dtype=torch.float32)
targets = torch.tensor([1, 2, 5], dtype=torch.float32)
inputs = torch.reshape(inputs, (1, 1, 1, 3))
targets = torch.reshape(targets, (1, 1, 1, 3))

# The default reduction='mean' averages the absolute errors; 'sum' adds them up: 0 + 0 + 2
loss = L1Loss(reduction='sum')
result = loss(inputs, targets)
print(result)

x = torch.tensor([0.1, 0.2, 0.3])
y = torch.tensor([1])  # target class index 1: the second score (0.2) belongs to the true class
# Batch size 1: CrossEntropyLoss expects scores shaped [N, C] = [1, 3]
x = torch.reshape(x, (1, 3))
loss_cross = nn.CrossEntropyLoss()
result_cross = loss_cross(x, y)
print(result_cross)
```

Output:

```text
tensor(2.)
tensor(1.1019)
```
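Both results can be reproduced by hand. For one sample with scores `x` and true class `c`, cross-entropy is `-x[c] + log(sum_j exp(x[j]))`; with `x = [0.1, 0.2, 0.3]` and `c = 1` that is `-0.2 + log(e^0.1 + e^0.2 + e^0.3)`. The sketch also shows L1Loss's default `'mean'` reduction on the same numbers:

```python
import math

import torch
import torch.nn as nn

# L1Loss reductions on the example above (absolute errors 0, 0, 2)
a = torch.tensor([1.0, 2.0, 3.0])
b = torch.tensor([1.0, 2.0, 5.0])
print(nn.L1Loss(reduction='sum')(a, b))   # tensor(2.)
print(nn.L1Loss()(a, b))                  # tensor(0.6667), the default 'mean'

# Cross-entropy computed from the formula, then by CrossEntropyLoss
x = torch.tensor([[0.1, 0.2, 0.3]])
y = torch.tensor([1])
manual = -0.2 + math.log(math.exp(0.1) + math.exp(0.2) + math.exp(0.3))
print(round(manual, 4))                   # 1.1019
print(nn.CrossEntropyLoss()(x, y))        # tensor(1.1019)
```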

Optimizer (torch.optim)

torch.optim.SGD: stochastic gradient descent

```python
import torch
import torch.nn as nn
import torchvision
from torch.utils.data import DataLoader

dataset = torchvision.datasets.CIFAR10('../data', train=False,
                                       transform=torchvision.transforms.ToTensor(), download=True)
dataloader = DataLoader(dataset, batch_size=64)


class Myren(nn.Module):
    def __init__(self):
        super().__init__()
        self.mode = nn.Sequential(
            nn.Conv2d(3, 32, (5, 5), padding=2),
            nn.MaxPool2d(kernel_size=2),
            nn.Conv2d(32, 32, (5, 5), padding=2),
            nn.MaxPool2d(kernel_size=2),
            nn.Conv2d(32, 64, (5, 5), padding=2),
            nn.MaxPool2d(kernel_size=2),
            nn.Flatten(),
            nn.Linear(1024, 64),
            nn.Linear(64, 10))

    def forward(self, input):
        output = self.mode(input)
        return output


loss = nn.CrossEntropyLoss()
myren = Myren()
# Stochastic gradient descent
optim = torch.optim.SGD(myren.parameters(), lr=0.01)

for epoch in range(20):
    running_loss = 0.0
    for data in dataloader:
        imgs, targets = data
        outputs = myren(imgs)
        result_loss = loss(outputs, targets)
        optim.zero_grad()        # clear the gradients left over from the previous batch
        result_loss.backward()   # backpropagation: compute the gradients
        optim.step()             # update the parameters
        # .item() turns the loss into a Python float, so the autograd graph is not kept alive
        running_loss = running_loss + result_loss.item()
    print(running_loss)
```

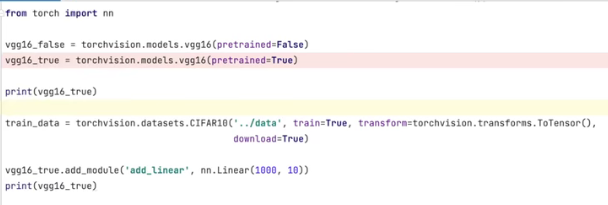

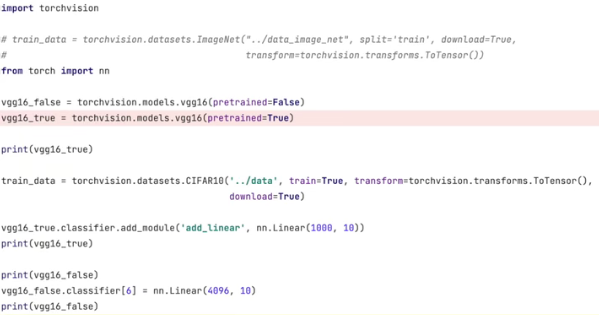
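The same `zero_grad()` / `backward()` / `step()` cycle can be watched on a problem small enough to check by eye. This sketch swaps CIFAR10 for a made-up regression task, fitting y = 2x + 1 with a single `nn.Linear(1, 1)` and plain SGD; the learning rate and number of steps are arbitrary choices.

```python
import torch
from torch import nn

torch.manual_seed(0)
# Made-up data for y = 2x + 1
x = torch.linspace(-1, 1, 32).unsqueeze(1)   # shape [32, 1]
y = 2 * x + 1

model = nn.Linear(1, 1)
loss_fn = nn.MSELoss()
optim = torch.optim.SGD(model.parameters(), lr=0.1)

losses = []
for step in range(200):
    optim.zero_grad()              # clear gradients from the previous step
    loss = loss_fn(model(x), y)
    loss.backward()                # compute d(loss)/d(parameters)
    optim.step()                   # plain SGD: parameter -= lr * gradient
    losses.append(loss.item())

print(losses[0] > losses[-1])                    # True
print(model.weight.item(), model.bias.item())   # close to 2 and 1
```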

Using and modifying existing models

Add a layer: model.add_module('name', nn.Linear(...))

Replace a layer: model.classifier[x] = nn.xxx()
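A runnable sketch of both operations. Instead of downloading a real pretrained network, it uses a hypothetical `make_model()` that only mimics VGG's layout: a `features` part and a `classifier` whose last layer predicts 1000 classes. The goal in both variants is a 10-class output.

```python
import torch
from torch import nn


def make_model():
    # Hypothetical stand-in for a pretrained model such as VGG16
    model = nn.Sequential()
    model.add_module("features", nn.Sequential(nn.Flatten(), nn.Linear(12, 8), nn.ReLU()))
    model.add_module("classifier", nn.Sequential(nn.Linear(8, 1000)))
    return model


x = torch.randn(2, 3, 2, 2)   # a batch of 2 tiny 3x2x2 "images"

# Option 1, add a layer: map the 1000 outputs down to 10 classes
added = make_model()
added.classifier.add_module("add_linear", nn.Linear(1000, 10))
print(added(x).shape)         # torch.Size([2, 10])

# Option 2, replace a layer by index: swap the 1000-class head for a 10-class one
replaced = make_model()
replaced.classifier[0] = nn.Linear(8, 10)
print(replaced(x).shape)      # torch.Size([2, 10])
```

Replacing the head keeps the model smaller; appending a layer preserves the original 1000-way layer.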

Saving and loading models

Two ways to save, and the matching ways to load

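```python
import torch
import torchvision

# weights=None: a randomly initialized VGG16 (older torchvision versions use pretrained=False)
vgg16 = torchvision.models.vgg16(weights=None)

# Method 1: saves the model structure together with its parameters
torch.save(vgg16, "vgg16_metod1.pth")

# Method 2: saves only the parameters (the state_dict); this is the generally recommended way
torch.save(vgg16.state_dict(), "vgg16_metod2.pth")
```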

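```python
import torch
import torchvision

# Load method 1: the whole pickled model comes back.
# Since PyTorch 2.6, torch.load defaults to weights_only=True, which rejects full models,
# so a trusted file has to be loaded with weights_only=False
model = torch.load("vgg16_metod1.pth", weights_only=False)
print(model)

# Load method 2: rebuild the structure in code, then copy the saved parameters into it
vgg16 = torchvision.models.vgg16(weights=None)
vgg16.load_state_dict(torch.load("vgg16_metod2.pth"))
print(vgg16)
```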
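Method 2 in miniature: a sketch that saves a state_dict into an in-memory buffer (`io.BytesIO` stands in for the .pth file) and loads it into a freshly built copy of the same architecture. The small model from the hypothetical `make_model()` replaces VGG16 so it runs instantly.

```python
import io

import torch
from torch import nn


def make_model():
    # Hypothetical small architecture used instead of VGG16
    return nn.Sequential(nn.Linear(4, 3), nn.ReLU(), nn.Linear(3, 2))


torch.manual_seed(0)
src = make_model()

buffer = io.BytesIO()                  # in-memory stand-in for a .pth file
torch.save(src.state_dict(), buffer)   # method 2: parameters only
buffer.seek(0)

dst = make_model()                     # the structure must be rebuilt in code first
dst.load_state_dict(torch.load(buffer))

x = torch.randn(5, 4)
print(torch.equal(src(x), dst(x)))     # True
print(list(dst.state_dict().keys()))   # ['0.weight', '0.bias', '2.weight', '2.bias']
```

The keys are simply layer indices plus parameter names, which is why loading a state_dict requires an identically structured model.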

Complete model training

```python
import torch
import torch.nn as nn
import torchvision
from torch.utils.data import DataLoader
from tensorboardX import SummaryWriter

# Prepare the datasets
train_data = torchvision.datasets.CIFAR10(root="../data", train=True,
                                          transform=torchvision.transforms.ToTensor(),
                                          download=True)
test_data = torchvision.datasets.CIFAR10(root="../data", train=False,
                                         transform=torchvision.transforms.ToTensor(),
                                         download=True)
train_data_size = len(train_data)
test_data_size = len(test_data)
print(train_data_size)
print(test_data_size)

# Wrap the datasets in DataLoaders
train_dataloader = DataLoader(train_data, batch_size=64)
test_dataloader = DataLoader(test_data, batch_size=64)


# Build the network
class Myren(nn.Module):
    def __init__(self):
        super().__init__()
        self.model = nn.Sequential(
            nn.Conv2d(3, 32, 5, 1, 2),
            nn.MaxPool2d(2),
            nn.Conv2d(32, 32, 5, 1, 2),
            nn.MaxPool2d(2),
            nn.Conv2d(32, 64, 5, 1, 2),
            nn.MaxPool2d(2),
            nn.Flatten(),
            nn.Linear(1024, 64),
            nn.Linear(64, 10)
        )

    def forward(self, x):
        x = self.model(x)
        return x


myren = Myren()

# Loss function
loss_fn = nn.CrossEntropyLoss()

# Optimizer
optimizer = torch.optim.SGD(myren.parameters(), lr=0.01)

# Step counters and number of epochs
total_train_step = 0
total_test_step = 0
epoch = 10

# TensorBoard
writer = SummaryWriter("../logs_train")

for i in range(epoch):
    print("-------- Epoch {} --------".format(i + 1))

    # Training
    myren.train()
    for data in train_dataloader:
        imgs, targets = data
        outputs = myren(imgs)
        loss = loss_fn(outputs, targets)

        # Optimization step
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

        total_train_step += 1
        if total_train_step % 100 == 0:
            print("Training step: {}, loss: {}".format(total_train_step, loss.item()))
            writer.add_scalar("train_loss", loss.item(), total_train_step)

    # Evaluation on the test set
    myren.eval()
    total_test_loss = 0
    total_accuracy = 0
    total_test_step += 1
    with torch.no_grad():
        for data in test_dataloader:
            imgs, targets = data
            outputs = myren(imgs)
            loss = loss_fn(outputs, targets)
            accuracy = (outputs.argmax(1) == targets).sum()
            total_accuracy += accuracy.item()
            total_test_loss += loss.item()

    print("Total loss on the test set: {}".format(total_test_loss))
    print("Accuracy on the test set: {}".format(total_accuracy / test_data_size))
    # Accuracy = correct predictions / number of test images
    writer.add_scalar("test_accuracy", total_accuracy / test_data_size, total_test_step)
    writer.add_scalar("test_loss", total_test_loss, total_test_step)

writer.close()
```
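How `(outputs.argmax(1) == targets).sum()` counts correct predictions, on a made-up batch of 2 samples with 2 classes: `argmax(1)` picks the highest-scoring class in each row, the comparison gives a boolean per sample, and `sum()` counts the `True` values.

```python
import torch

# Made-up scores: 2 samples (rows) x 2 classes (columns)
outputs = torch.tensor([[0.1, 0.2],
                        [0.3, 0.4]])
targets = torch.tensor([0, 1])

preds = outputs.argmax(1)            # predicted class per sample
correct = (preds == targets).sum()   # number of correct predictions
print(preds, correct.item() / len(targets))   # tensor([1, 1]) 0.5
```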
