1. Environment

| hostname | IP | Components |
|---|---|---|
| m1 | 192.168.150.140 | keepalived, haproxy, etcd, apiserver, docker, controller-manager, scheduler |
| m2 | 192.168.150.141 | keepalived, haproxy, etcd, apiserver, docker, controller-manager, scheduler |
| n1 | 192.168.150.142 | kubelet, kube-proxy, docker |
| n2 | 192.168.150.144 | kubelet, kube-proxy, docker |
| VIP (virtual IP) | 192.168.150.143 | floats between m1 and m2 (managed by keepalived) |

2. System initialization (run on all nodes)

2.1 Disable the firewall

$ systemctl stop firewalld

$ systemctl disable firewalld

2.2 Disable SELinux

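$ sed -i 's/enforcing/disabled/' /etc/selinux/config

$ setenforce 0

The sed edit takes effect after a reboot; setenforce 0 switches SELinux to permissive mode for the current boot.

2.3 Disable swap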

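$ swapoff -a

$ sed -ri 's/.*swap.*/#&/' /etc/fstab

swapoff turns swap off immediately; commenting out the swap entry in /etc/fstab keeps it off after a reboot.

2.4 Set the hostname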

$ hostnamectl set-hostname <hostname>

Use m1, m2, n1 or n2 as listed in the table above.

2.5 Add hosts entries

$ cat >> /etc/hosts << EOF
192.168.150.143 master.k8s.io k8s-vip
192.168.150.140 master01.k8s.io master1
192.168.150.141 master02.k8s.io master2
192.168.150.142 node01.k8s.io node1
192.168.150.144 node02.k8s.io node2
EOF

2.6 Pass bridged IPv4 traffic to the iptables chains

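The net.bridge.* keys only exist once the br_netfilter kernel module is loaded, so load it first:

$ modprobe br_netfilter

$ cat > /etc/sysctl.d/k8s.conf << EOF
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF

$ sysctl --system

2.7 Synchronize the time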

$ yum install ntpdate -y

$ ntpdate time.windows.com

3. Deploy keepalived on all master nodes

3.1 Install keepalived and the related packages

$ yum install -y conntrack-tools libseccomp libtool-ltdl

$ yum install -y keepalived

3.2 Configure the master nodes

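Use the following configuration on both master1 and master2, replacing the interface value with your VM's NIC name. Both nodes use the same priority (250), so when the haproxy check fails on one of them, the weight -2 penalty lowers that node's priority below its peer's and the VIP moves to the other master.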

$ cat > /etc/keepalived/keepalived.conf << EOF
! Configuration File for keepalived
global_defs {
    router_id k8s
}
vrrp_script check_haproxy {
    script "killall -0 haproxy"
    interval 3
    weight -2
    fall 10
    rise 2
}
vrrp_instance VI_1 {
    state MASTER
    interface <your VM's NIC name, e.g. ens33>
    virtual_router_id 51
    priority 250
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass ceb1b3ec013d66163d6ab
    }
    virtual_ipaddress {
        192.168.150.143
    }
    track_script {
        check_haproxy
    }
}
EOF

3.3 Start keepalived, enable it at boot, and check its status

$ systemctl start keepalived

$ systemctl enable keepalived

$ systemctl status keepalived

3.4 Check the NIC on master1

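$ ip a s ens33

Replace ens33 with your NIC name. On whichever master currently holds the VIP, 192.168.150.143 is listed as an additional inet address on this interface.

4. Deploy haproxy on all master nodes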

4.1 Install

$ yum install -y haproxy

4.2 Configure

$ cat > /etc/haproxy/haproxy.cfg << EOF
#---------------------------------------------------------------------
# Global settings
#---------------------------------------------------------------------
global
    # to have these messages end up in /var/log/haproxy.log you will
    # need to:
    # 1) configure syslog to accept network log events. This is done
    #    by adding the '-r' option to the SYSLOGD_OPTIONS in
    #    /etc/sysconfig/syslog
    # 2) configure local2 events to go to the /var/log/haproxy.log
    #    file. A line like the following can be added to
    #    /etc/sysconfig/syslog
    #
    #    local2.*                       /var/log/haproxy.log
    #
    log         127.0.0.1 local2
    chroot      /var/lib/haproxy
    pidfile     /var/run/haproxy.pid
    maxconn     4000
    user        haproxy
    group       haproxy
    daemon
    # turn on stats unix socket
    stats socket /var/lib/haproxy/stats
#---------------------------------------------------------------------
# common defaults that all the 'listen' and 'backend' sections will
# use if not designated in their block
#---------------------------------------------------------------------
defaults
    mode                    http
    log                     global
    option                  httplog
    option                  dontlognull
    option                  http-server-close
    option                  forwardfor except 127.0.0.0/8
    option                  redispatch
    retries                 3
    timeout http-request    10s
    timeout queue           1m
    timeout connect         10s
    timeout client          1m
    timeout server          1m
    timeout http-keep-alive 10s
    timeout check           10s
    maxconn                 3000
#---------------------------------------------------------------------
# kubernetes apiserver frontend which proxies to the backends
#---------------------------------------------------------------------
frontend kubernetes-apiserver
    mode                tcp
    bind                *:16443
    option              tcplog
    default_backend     kubernetes-apiserver
#---------------------------------------------------------------------
# round robin balancing between the various backends
#---------------------------------------------------------------------
backend kubernetes-apiserver
    mode        tcp
    balance     roundrobin
    server      master01.k8s.io   192.168.150.140:6443 check
    server      master02.k8s.io   192.168.150.141:6443 check
#---------------------------------------------------------------------
# collection haproxy statistics message
#---------------------------------------------------------------------
listen stats
    bind                *:1080
    stats auth          admin:awesomePassword
    stats refresh       5s
    stats realm         HAProxy\ Statistics
    stats uri           /admin?stats

EOF

4.3 Start haproxy, enable it at boot, and check its status

$ systemctl enable haproxy

$ systemctl start haproxy

$ systemctl status haproxy

The status output below was captured from systemctl status keepalived (step 3.3), not from haproxy; for haproxy.service the Active line should likewise read active (running).

● keepalived.service - LVS and VRRP High Availability Monitor
   Loaded: loaded (/usr/lib/systemd/system/keepalived.service; enabled; vendor preset: disabled)
   Active: active (running) since Fri 2022-10-28 06:50:12 EDT; 47s ago
  Process: 9700 ExecStart=/usr/sbin/keepalived $KEEPALIVED_OPTIONS (code=exited, status=0/SUCCESS)
 Main PID: 9701 (keepalived)
   CGroup: /system.slice/keepalived.service
           ├─9701 /usr/sbin/keepalived -D
           ├─9702 /usr/sbin/keepalived -D
           └─9703 /usr/sbin/keepalived -D

4.4 Check the listening ports

$ netstat -lntup | grep haproxy
tcp 0 0 0.0.0.0:1080 0.0.0.0:* LISTEN 9772/haproxy
tcp 0 0 0.0.0.0:16443 0.0.0.0:* LISTEN 9772/haproxy
udp 0 0 0.0.0.0:34419 0.0.0.0:* 9771/haproxy

Port 16443 is the API server frontend and port 1080 serves the statistics page.

5. Install Docker/kubeadm/kubelet/kubectl on all nodes

5.1 Install Docker

$ wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -O /etc/yum.repos.d/docker-ce.repo

$ yum -y install docker-ce-18.06.1.ce-3.el7

Start Docker and enable it at boot:

$ systemctl enable docker && systemctl start docker

Configure a Docker registry mirror:

$ cat > /etc/docker/daemon.json << EOF
{
  "registry-mirrors": ["<your Aliyun registry mirror URL>"]
}
EOF

Restart Docker:

$ systemctl daemon-reload

$ systemctl restart docker

Check that the mirror is configured:

$ docker info

Experimental: false
Insecure Registries:
 127.0.0.0/8
Registry Mirrors:
 https://fxt824bw.mirror.aliyuncs.com/
Live Restore Enabled: false

The mirror is listed under Registry Mirrors, so the configuration has taken effect.
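In a provisioning script it is easier to extract just the mirror list than to read the whole docker info output. A minimal sketch, assuming the layout shown above (a "Registry Mirrors:" line followed by one indented URL per line); registry_mirrors is a hypothetical helper name.

```shell
# Hypothetical helper: read `docker info` output on stdin and print each URL
# listed under "Registry Mirrors:" (one per line, leading spaces removed).
registry_mirrors() {
  awk '
    /^[[:space:]]*Registry Mirrors:/ { in_block = 1; next }   # start of the list
    in_block && /^[[:space:]]*https?:\/\// {                   # a mirror URL line
      gsub(/^[[:space:]]+/, "")
      print
      next
    }
    in_block { in_block = 0 }                                  # any other line ends the list
  '
}
```

docker info 2>/dev/null | registry_mirrors should print the URL written to /etc/docker/daemon.json; empty output suggests Docker did not pick up the file.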

5.2 Add the Aliyun Kubernetes YUM repository

$ cat > /etc/yum.repos.d/kubernetes.repo << EOF
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF

5.3 Install kubeadm, kubelet and kubectl

$ yum install -y kubelet-1.18.0 kubeadm-1.18.0 kubectl-1.18.0

Enable kubelet at boot:

$ systemctl enable kubelet

6. Deploy the Kubernetes master

6.1 Create the kubeadm configuration file

Note: run these steps on the master that currently holds the VIP (m2 in this setup).

$ mkdir /usr/local/kubernetes/manifests -p

$ cd /usr/local/kubernetes/manifests/

$ vi kubeadm-config.yaml

The file contents are as follows:

apiServer:
  certSANs:
    - master1
    - master2
    - master.k8s.io
    - 192.168.150.143
    - 192.168.150.140
    - 192.168.150.141
    - 127.0.0.1
  extraArgs:
    authorization-mode: Node,RBAC
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta2
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controlPlaneEndpoint: "master.k8s.io:16443"
controllerManager: {}
dns:
  type: CoreDNS
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: registry.aliyuncs.com/google_containers
kind: ClusterConfiguration
kubernetesVersion: v1.18.0
networking:
  dnsDomain: cluster.local
  podSubnet: 10.244.0.0/16
  serviceSubnet: 10.1.0.0/16
scheduler: {}

6.2 Run kubeadm init with this configuration on m2

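$ kubeadm init --config kubeadm-config.yaml

On success, kubeadm init prints the kubeadm join commands used later for m1 and the worker nodes; keep a copy of them.

6.3 Set up kubectl as instructed in the output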

$ mkdir -p $HOME/.kube

$ sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

$ sudo chown $(id -u):$(id -g) $HOME/.kube/config

6.4 Check the nodes and pods

$ kubectl get nodes
NAME STATUS ROLES AGE VERSION
m2 NotReady master 4m43s v1.18.0

$ kubectl get pods -n kube-system
NAME READY STATUS RESTARTS AGE
coredns-7ff77c879f-2vl5p 0/1 Pending 0 4m16s
coredns-7ff77c879f-vmrkc 0/1 Pending 0 4m16s
etcd-m2 1/1 Running 0 4m34s
kube-apiserver-m2 1/1 Running 0 4m34s
kube-controller-manager-m2 1/1 Running 0 4m34s
kube-proxy-nsdmf 1/1 Running 0 4m17s
kube-scheduler-m2 1/1 Running 0 4m34s

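m2 is NotReady and the coredns pods are Pending because no pod network (CNI plugin) has been installed yet, so the next step installs one.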
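While waiting for nodes to turn Ready, polling for not-ready nodes saves rerunning kubectl get nodes by hand. A minimal sketch that parses the default kubectl get nodes table; both function names are hypothetical, and a node in a compound state such as Ready,SchedulingDisabled is also reported as not ready.

```shell
# Hypothetical helper: print the NAME of every node whose STATUS column is not
# exactly "Ready". Expects `kubectl get nodes` output (header included) on stdin.
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Poll until every node is Ready. Assumes kubectl is configured as in step 6.3;
# the optional argument is the poll interval in seconds (default 10).
wait_for_ready_nodes() {
  local out pending
  while true; do
    if out=$(kubectl get nodes 2>/dev/null); then
      pending=$(printf '%s\n' "$out" | not_ready_nodes)
      if [ -z "$pending" ]; then
        return 0
      fi
      echo "still waiting for: $pending"
    fi
    sleep "${1:-10}"
  done
}
```

After applying the network manifest, wait_for_ready_nodes 5 returns once m2 reports Ready.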

7. Install the Calico pod network

7.1 Download calico.yaml

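$ mkdir calico

$ cd calico

$ curl https://docs.projectcalico.org/v3.18/manifests/calico.yaml -O

Note: the Calico manifest version must support your Kubernetes version (here Calico v3.18 is used with Kubernetes v1.18.0), otherwise the installation fails.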

7.2 Install the Calico network

$ kubectl apply -f calico.yaml

7.3 Check the pod and node status

$ kubectl get pods -n kube-system
NAME READY STATUS RESTARTS AGE
calico-kube-controllers-7b5bcff94c-x46rj 1/1 Running 0 9m25s
calico-node-5cwbc 1/1 Running 0 9m25s
calico-node-kvnmc 1/1 Running 0 3m15s
coredns-7ff77c879f-2vl5p 1/1 Running 0 11m
coredns-7ff77c879f-vmrkc 1/1 Running 0 11m
etcd-m1 1/1 Running 0 3m5s
etcd-m2 1/1 Running 0 11m
kube-apiserver-m1 1/1 Running 0 3m14s
kube-apiserver-m2 1/1 Running 0 11m
kube-controller-manager-m1 1/1 Running 0 3m13s
kube-controller-manager-m2 1/1 Running 1 11m
kube-proxy-nsdmf 1/1 Running 0 11m
kube-proxy-wcrxk 1/1 Running 0 3m15s
kube-scheduler-m1 1/1 Running 0 3m5s
kube-scheduler-m2 1/1 Running 1 11m

$ kubectl get nodes
NAME STATUS ROLES AGE VERSION
m2 Ready master 2m39s v1.18.0

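m2 is now Ready, so the Calico network is installed and working. The pod listing above was captured after m1 joined in step 8, which is why m1's control-plane pods and a second calico-node and kube-proxy pod also appear.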
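The kube-system pod listing can also be screened automatically for pods that are not fully up. A minimal sketch, assuming the default column order NAME READY STATUS RESTARTS AGE; unhealthy_pods is a hypothetical helper name, and pods in other legitimate states (for example Completed) would be listed too.

```shell
# Hypothetical helper: read `kubectl get pods` output on stdin and print
# "NAME READY STATUS" for every pod that is not Running with all containers ready.
unhealthy_pods() {
  awk 'NR > 1 {
    split($2, r, "/")                      # READY column, e.g. "0/1"
    if ($3 != "Running" || r[1] != r[2])   # wrong phase or some container not ready
      print $1 " " $2 " " $3
  }'
}
```

kubectl get pods -n kube-system | unhealthy_pods prints nothing once Calico, CoreDNS and the control-plane pods are all up.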

8. Join m1 to the cluster

8.1 Copy the certificates and related files

Copy the keys and related files from m2 to m1:

$ ssh root@192.168.150.140 mkdir -p /etc/kubernetes/pki/etcd

$ scp /etc/kubernetes/admin.conf root@192.168.150.140:/etc/kubernetes

$ scp /etc/kubernetes/pki/{ca.*,sa.*,front-proxy-ca.*} root@192.168.150.140:/etc/kubernetes/pki

$ scp /etc/kubernetes/pki/etcd/ca.* root@192.168.150.140:/etc/kubernetes/pki/etcd

8.2 Join m1 to the cluster

$ kubeadm join master.k8s.io:16443 --token ckf7bs.30576l0okocepg8b --discovery-token-ca-cert-hash sha256:19afac8b11182f61073e254fb57b9f19ab4d798b70501036fc69ebef46094aba --control-plane

Note: run this on m1. The command is printed by kubeadm init, so use the token and hash from your own output.
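Bootstrap tokens created by kubeadm init expire after 24 hours by default, so the command above may stop working if m1 joins later. On m2, kubeadm token create --print-join-command prints a fresh worker join command (append --control-plane for a master), and the --discovery-token-ca-cert-hash value can be recomputed from the cluster CA with the openssl pipeline given in the kubeadm documentation. The sketch below wraps that pipeline; ca_cert_hash is a hypothetical helper name, and it assumes an RSA CA key, which is what kubeadm generates by default.

```shell
# Hypothetical helper: print the value for --discovery-token-ca-cert-hash, i.e.
# "sha256:" followed by the SHA-256 of the CA's DER-encoded public key.
# Defaults to the kubeadm CA path on a control-plane node.
ca_cert_hash() {
  local ca="${1:-/etc/kubernetes/pki/ca.crt}"
  local hex
  hex=$(openssl x509 -pubkey -in "$ca" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //')   # keep only the hex digest after the last space
  printf 'sha256:%s\n' "$hex"
}
```

Running ca_cert_hash on m2 should print the same hash that appears in the join command from kubeadm init.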

8.3 Verify that m1 has joined

$ kubectl get nodes
NAME STATUS ROLES AGE VERSION
m1 Ready master 2m39s v1.18.0
m2 Ready master 11m v1.18.0

9. Join the Kubernetes worker nodes

9.1 Run the following command on every worker node (n1 and n2)

$ kubeadm join master.k8s.io:16443 --token ckf7bs.30576l0okocepg8b --discovery-token-ca-cert-hash sha256:19afac8b11182f61073e254fb57b9f19ab4d798b70501036fc69ebef46094aba

Note: this command is also printed by kubeadm init; it is the m1 join command without --control-plane.
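Because the worker and control-plane join commands differ only by --control-plane, both can be assembled from the same endpoint, token and hash. This is a sketch: build_join_cmd is a hypothetical helper that also rejects values not matching the bootstrap-token format ([a-z0-9]{6}.[a-z0-9]{16}) or the sha256 plus 64 hex digits hash format, which catches copy-paste truncation before it reaches kubeadm.

```shell
# Hypothetical helper: build a kubeadm join command.
# Usage: build_join_cmd ENDPOINT TOKEN HASH [control-plane]
build_join_cmd() {
  local endpoint=$1 token=$2 hash=$3 role=${4:-worker}
  # Bootstrap tokens: 6 then 16 lowercase letters or digits, separated by a dot.
  if ! printf '%s\n' "$token" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'; then
    echo "invalid token: $token" >&2
    return 1
  fi
  # CA hash: "sha256:" followed by 64 hex digits.
  if ! printf '%s\n' "$hash" | grep -Eq '^sha256:[0-9a-f]{64}$'; then
    echo "invalid CA cert hash: $hash" >&2
    return 1
  fi
  local cmd="kubeadm join $endpoint --token $token --discovery-token-ca-cert-hash $hash"
  if [ "$role" = "control-plane" ]; then
    cmd="$cmd --control-plane"   # masters additionally join the control plane
  fi
  printf '%s\n' "$cmd"
}
```

Printing the command rather than executing it keeps a chance to review it before running it on n1 and n2.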

9.2 Verify that the worker nodes have joined

$ kubectl get nodes
NAME STATUS ROLES AGE VERSION
m1 Ready master 8m3s v1.18.0
m2 Ready master 16m v1.18.0
n1 Ready <none> 105s v1.18.0
n2 Ready <none> 105s v1.18.0

n1 and n2 have joined the cluster.

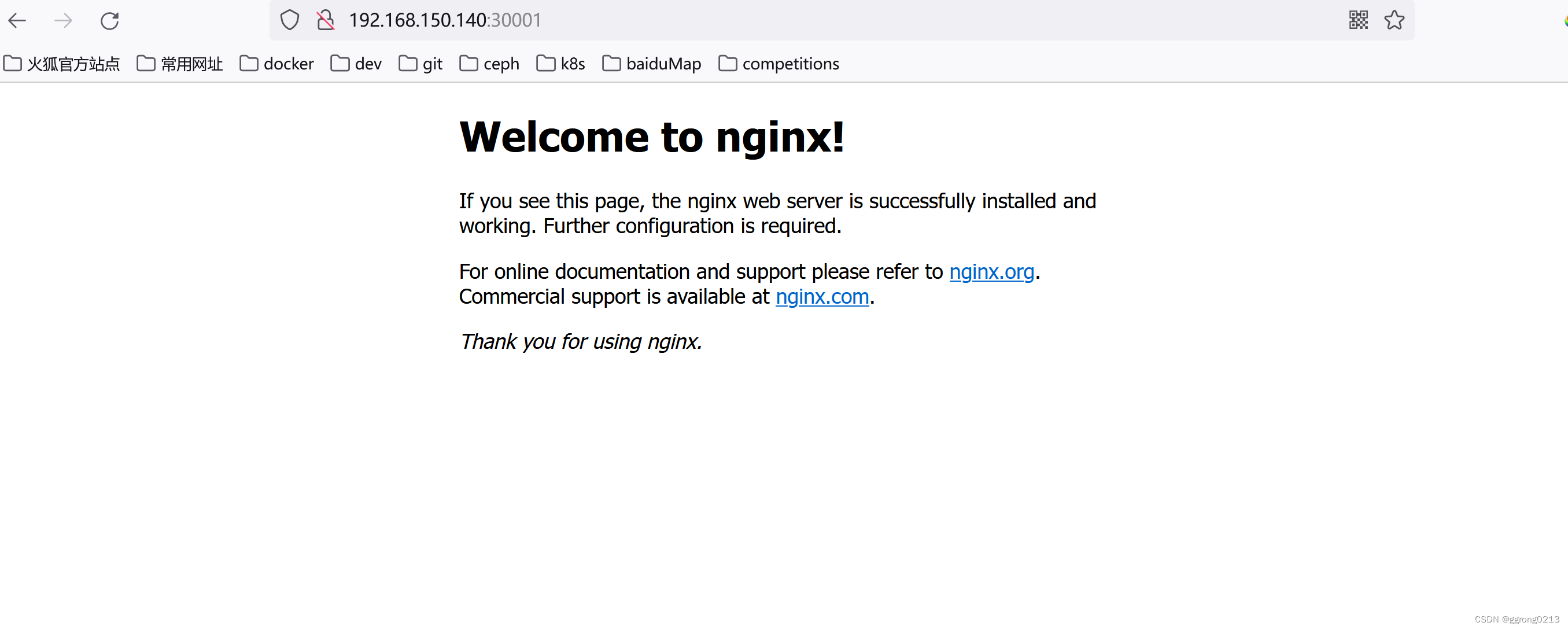

10. Test the Kubernetes cluster

10.1 Create a pod application (an nginx Deployment)

$ kubectl create deployment nginx --image=nginx

10.2 Expose the application externally

$ kubectl expose deployment nginx --name nginx --port 80 --target-port 80 --type NodePort

10.3 Check the Service

$ kubectl get svc
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
kubernetes ClusterIP 10.1.0.1 <none> 443/TCP 18m
nginx NodePort 10.1.218.137 <none> 80:30241/TCP 13s

The node port (30241 here) was assigned at random from the NodePort range.

10.4 Change the Service's external node port

$ kubectl edit service nginx

In the editor, set nodePort under spec.ports to 30001 and save the file.

10.5 Check the Service again

$ kubectl get svc
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
kubernetes ClusterIP 10.1.0.1 <none> 443/TCP 20m
nginx NodePort 10.1.218.137 <none> 80:30001/TCP 2m17s
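kubectl edit is interactive; the same change can be scripted with kubectl patch, whose default patch type is a strategic merge patch. Service ports are merged by their port value, so a patch naming port 80 only touches that entry. A minimal sketch: nodeport_patch is a hypothetical helper, and its range check assumes the API server's default --service-node-port-range of 30000-32767.

```shell
# Hypothetical helper: print a strategic-merge patch that pins Service port PORT
# to NODE_PORT. Rejects node ports outside the default 30000-32767 range.
nodeport_patch() {
  local port=$1 node_port=$2
  if [ "$node_port" -lt 30000 ] || [ "$node_port" -gt 32767 ]; then
    echo "nodePort $node_port is outside the default range 30000-32767" >&2
    return 1
  fi
  printf '{"spec":{"ports":[{"port":%d,"nodePort":%d}]}}\n' "$port" "$node_port"
}
```

Usage: kubectl patch service nginx -p "$(nodeport_patch 80 30001)".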

11. Access the application

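Open http://<node IP>:30001 in a browser. A NodePort Service listens on that port on every node, so the IP of any node (or the VIP) works.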
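Since every node exposes the NodePort, a quick loop over the node IPs from the table confirms the Service is reachable everywhere. This is a sketch: probe_nodeport is a hypothetical helper that relies on bash's /dev/tcp redirection and coreutils' timeout, so it only shows that a TCP connection can be opened, not that nginx returned a page (curl http://<node IP>:30001 checks that).

```shell
# Hypothetical helper: try a TCP connection to PORT on each given IP (3 s limit)
# and print "IP:PORT UP" or "IP:PORT DOWN".
probe_nodeport() {
  local port=$1 ip
  shift
  for ip in "$@"; do
    if timeout 3 bash -c "exec 3<>/dev/tcp/$ip/$port" 2>/dev/null; then
      echo "$ip:$port UP"
    else
      echo "$ip:$port DOWN"
    fi
  done
}
```

Usage: probe_nodeport 30001 192.168.150.140 192.168.150.141 192.168.150.142 192.168.150.144.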

12. Verify high availability

Power off m2 and access the application again; it should still respond.
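To watch the failover itself, check which master holds the VIP before and after powering off m2: keepalived should move 192.168.150.143 to m1. A minimal sketch that inspects ip -o -4 addr show output, where the fourth field is the address with its prefix length; holds_vip is a hypothetical helper name.

```shell
# Hypothetical helper: exit 0 if the VIP given as $1 appears among the IPv4
# addresses in `ip -o -4 addr show` output read from stdin, else exit 1.
holds_vip() {
  local vip=$1
  awk -v vip="$vip" '
    { split($4, a, "/"); if (a[1] == vip) found = 1 }   # $4 is e.g. 192.168.150.143/32
    END { exit (found ? 0 : 1) }
  '
}
```

On m1 after m2 is down: ip -o -4 addr show | holds_vip 192.168.150.143 && echo "$(hostname) holds the VIP".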

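This completes the highly available Kubernetes cluster. Note that with only two masters the stacked etcd cluster has two members and needs both for quorum, so while m2 is down the API server on m1 cannot serve requests (kubectl fails) even though running applications keep working; tolerating the loss of one master requires at least three.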
