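A project I've been practicing on recently needs FastDFS and Nginx, so this post records how I installed and configured them. I ran into plenty of pitfalls while deploying everything myself, so I'm writing the steps down before I forget them.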

First, buy (or start a free trial of) an Alibaba Cloud server running CentOS 7.9 64-bit (SCC edition), then open a remote session on it.

The project is fairly old, so I installed older versions. The component versions still have to match each other, though.

- FastDFS 5.11 pairs with fastdfs-nginx-module 1.20

- FastDFS 5.10 pairs with fastdfs-nginx-module 1.19

Using a matching pair avoids errors caused by mismatched versions.

I installed FastDFS 5.11 and fastdfs-nginx-module 1.20.
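The pairing rule above can be written as a tiny lookup so that a script fails early on a mismatch. This is a minimal sketch: `fdfs_nginx_module_for` is a hypothetical helper, not a FastDFS tool, and it only knows the two pairs listed in this post.

```bash
# Hypothetical helper (not part of FastDFS): print the fastdfs-nginx-module
# version that pairs with a FastDFS version, using only the pairs listed above.
fdfs_nginx_module_for() {
  case "$1" in
    5.11) echo "1.20" ;;
    5.10) echo "1.19" ;;
    *)
      echo "no known fastdfs-nginx-module pairing for FastDFS $1" >&2
      return 1
      ;;
  esac
}
```

For example, `fdfs_nginx_module_for 5.11` prints `1.20`. Any version outside the two known pairs returns a non-zero status, so a build script can stop there.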

1. Install GCC

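FastDFS is written in C. Installing it means compiling the source downloaded from the official site, and that build needs a gcc toolchain. If gcc is not already present, install it: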

yum install -y gcc gcc-c++

2. Install libevent

FastDFS depends on the libevent library:

yum -y install libevent

3. Install libfastcommon

libfastcommon is provided by the FastDFS developers and contains basic libraries that FastDFS needs at runtime.

- Download the libfastcommon package:

wget https://github.com/happyfish100/libfastcommon/archive/V1.0.38.tar.gz

- Extract it: tar -zxvf V1.0.38.tar.gz

- Enter the directory: cd libfastcommon-1.0.38

- Compile: ./make.sh

- Install: ./make.sh install

After installation, libfastcommon places the libfastcommon.so library file under /usr/lib64.

Note: the FastDFS programs look for libraries in /usr/lib, so copy the library file from /usr/lib64 to /usr/lib:

cp /usr/lib64/libfastcommon.so /usr/lib
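To confirm that the copy worked, a small check like the following lists which library directories actually contain the file. `find_lib_dirs` is a hypothetical name. The sketch only tests whether the file exists and does not check that the dynamic loader can load it.

```bash
# Hypothetical helper: print each directory (arguments 2..n) that contains
# the library named in argument 1; return non-zero if none of them has it.
find_lib_dirs() {
  local lib="$1"
  shift
  local dir status=1
  for dir in "$@"; do
    if [ -e "$dir/$lib" ]; then
      echo "$dir"
      status=0
    fi
  done
  return "$status"
}
```

After the `cp` above, `find_lib_dirs libfastcommon.so /usr/lib64 /usr/lib` should print both directories.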

4. Install FastDFS

-

获取fdfs安装包:

wget https://github.com/happyfish100/fastdfs/archive/V5.11.tar.gz -

解压安装包:tar -zxvf V5.11.tar.gz

-

进入目录:cd fastdfs-5.11

-

执行编译:./make.sh

-

安装:./make.sh install

-

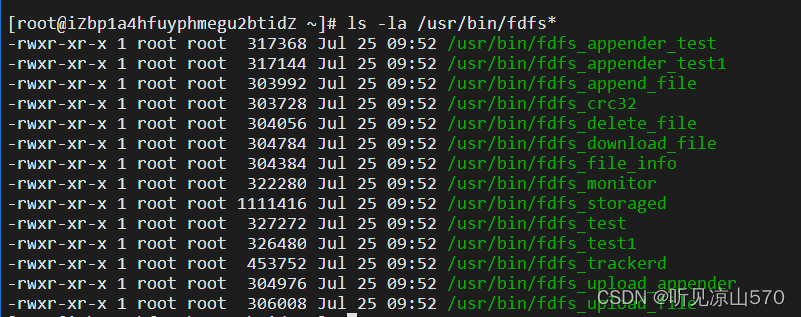

查看可执行命令:ls -la /usr/bin/fdfs*
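The `ls` above is a visual check. The same idea as a script might look like this, assuming the executables land in `/usr/bin` as shown. `check_fdfs_bins` is hypothetical. Its list covers the two daemons used later plus `fdfs_upload_file` and `fdfs_monitor`, which FastDFS 5.x also installs.

```bash
# Hypothetical helper: report FastDFS executables missing from a bin
# directory (default /usr/bin). Only checks that each file exists and is
# executable; it does not run them.
check_fdfs_bins() {
  local bindir="${1:-/usr/bin}"
  local bin missing=0
  for bin in fdfs_trackerd fdfs_storaged fdfs_upload_file fdfs_monitor; do
    if [ ! -x "$bindir/$bin" ]; then
      echo "missing: $bindir/$bin"
      missing=1
    fi
  done
  return "$missing"
}
```

Running `check_fdfs_bins` with no argument checks `/usr/bin`. It prints nothing and returns 0 when all four files are present.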

5. Install the tracker and configure the Tracker service

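- Go to the /etc/fdfs directory. It holds three files with a .sample suffix (template config files generated by FastDFS). Copy tracker.conf.sample to a name without the .sample suffix and use that as the real config file:

cd /etc/fdfs

cp tracker.conf.sample tracker.conf

- Edit tracker.conf with vi tracker.conf and change the relevant parameters.

There were a few places to change. I edited them yesterday and no longer remember exactly which ones, so here is the whole file.

(In vi, press "i" to enter insert mode and edit the file, and press "Esc" to leave insert mode. Then type ":" (Shift+;), enter wq and press Enter to save and quit. w means write and q means quit.)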

# is this config file disabled
# false for enabled
# true for disabled
disabled=false

# bind an address of this host
# empty for bind all addresses of this host
bind_addr=

# the tracker server port
port=22122

# connect timeout in seconds
# default value is 30s
connect_timeout=30

# network timeout in seconds
# default value is 30s
network_timeout=30

# the base path to store data and log files
base_path=/home/fastdfs/tracker

# max concurrent connections this server supported
max_connections=256

# accept thread count
# default value is 1
# since V4.07
accept_threads=1

# work thread count, should <= max_connections
# default value is 4
# since V2.00
work_threads=4

# min buff size
# default value 8KB
#min_buff_size = 8KB

# max buff size
# default value 128KB
#max_buff_size = 128KB

# the method of selecting group to upload files
# 0: round robin
# 1: specify group
# 2: load balance, select the max free space group to upload file
store_lookup=2

# which group to upload file
# when store_lookup set to 1, must set store_group to the group name
store_group=group1

# which storage server to upload file
# 0: round robin (default)
# 1: the first server order by ip address
# 2: the first server order by priority (the minimal)
# Note: if use_trunk_file set to true, must set store_server to 1 or 2
store_server=0

# which path(means disk or mount point) of the storage server to upload file
# 0: round robin
# 1: the source storage server which the current file uploaded to
download_server=0

# reserved storage space for system or other applications.
# if the free(available) space of any stoarge server in
# a group <= reserved_storage_space,
# no file can be uploaded to this group.
# bytes unit can be one of follows:
### G or g for gigabyte(GB)
### M or m for megabyte(MB)
### K or k for kilobyte(KB)
### no unit for byte(B)
### XX.XX% as ratio such as reserved_storage_space = 10%
reserved_storage_space = 10%

#standard log level as syslog, case insensitive, value list:
### emerg for emergency
### alert
### crit for critical
### error
### warn for warning
### notice
### info
### debug
log_level=info

#unix group name to run this program,
#not set (empty) means run by the group of current user
run_by_group=

#unix username to run this program,
#not set (empty) means run by current user
run_by_user=

# allow_hosts can ocur more than once, host can be hostname or ip address,
# "*" (only one asterisk) means match all ip addresses
# we can use CIDR ips like 192.168.5.64/26
# and also use range like these: 10.0.1.[0-254] and host[01-08,20-25].domain.com
# for example:
# allow_hosts=10.0.1.[1-15,20]
# allow_hosts=host[01-08,20-25].domain.com
# allow_hosts=192.168.5.64/26
allow_hosts=*

# sync log buff to disk every interval seconds
# default value is 10 seconds
sync_log_buff_interval = 10

# check storage server alive interval seconds
check_active_interval = 120

# thread stack size, should >= 64KB
# default value is 64KB
thread_stack_size = 64KB

# auto adjust when the ip address of the storage server changed
# default value is true
storage_ip_changed_auto_adjust = true

# storage sync file max delay seconds
# default value is 86400 seconds (one day)
# since V2.00
storage_sync_file_max_delay = 86400

# the max time of storage sync a file
# default value is 300 seconds
# since V2.00
storage_sync_file_max_time = 300

# if use a trunk file to store several small files
# default value is false
# since V3.00
use_trunk_file = false

# the min slot size, should <= 4KB
# default value is 256 bytes
# since V3.00
slot_min_size = 256

# the max slot size, should > slot_min_size
# store the upload file to trunk file when it's size <= this value
# default value is 16MB
# since V3.00
slot_max_size = 16MB

# the trunk file size, should >= 4MB
# default value is 64MB
# since V3.00
trunk_file_size = 64MB

# if create trunk file advancely
# default value is false
# since V3.06
trunk_create_file_advance = false

# the time base to create trunk file
# the time format: HH:MM
# default value is 02:00
# since V3.06
trunk_create_file_time_base = 02:00

# the interval of create trunk file, unit: second
# default value is 38400 (one day)
# since V3.06
trunk_create_file_interval = 86400

# the threshold to create trunk file
# when the free trunk file size less than the threshold, will create
# the trunk files
# default value is 0
# since V3.06
trunk_create_file_space_threshold = 20G

# if check trunk space occupying when loading trunk free spaces
# the occupied spaces will be ignored
# default value is false
# since V3.09
# NOTICE: set this parameter to true will slow the loading of trunk spaces
# when startup. you should set this parameter to true when neccessary.
trunk_init_check_occupying = false

# if ignore storage_trunk.dat, reload from trunk binlog
# default value is false
# since V3.10
# set to true once for version upgrade when your version less than V3.10
trunk_init_reload_from_binlog = false

# the min interval for compressing the trunk binlog file
# unit: second
# default value is 0, 0 means never compress
# FastDFS compress the trunk binlog when trunk init and trunk destroy
# recommand to set this parameter to 86400 (one day)
# since V5.01
trunk_compress_binlog_min_interval = 0

# if use storage ID instead of IP address
# default value is false
# since V4.00
use_storage_id = false

# specify storage ids filename, can use relative or absolute path
# since V4.00
storage_ids_filename = storage_ids.conf

# id type of the storage server in the filename, values are:
## ip: the ip address of the storage server
## id: the server id of the storage server
# this paramter is valid only when use_storage_id set to true
# default value is ip
# since V4.03
id_type_in_filename = ip

# if store slave file use symbol link
# default value is false
# since V4.01
store_slave_file_use_link = false

# if rotate the error log every day
# default value is false
# since V4.02
rotate_error_log = false

# rotate error log time base, time format: Hour:Minute
# Hour from 0 to 23, Minute from 0 to 59
# default value is 00:00
# since V4.02
error_log_rotate_time=00:00

# rotate error log when the log file exceeds this size
# 0 means never rotates log file by log file size
# default value is 0
# since V4.02
rotate_error_log_size = 0

# keep days of the log files
# 0 means do not delete old log files
# default value is 0
log_file_keep_days = 0

# if use connection pool
# default value is false
# since V4.05
connection_pool_max_idle_time = 3600

# HTTP port on this tracker server
http.server_port=8080

# check storage HTTP server alive interval seconds
# <= 0 for never check
# default value is 30
http.check_alive_interval=30

# check storage HTTP server alive type, values are:
#   tcp : connect to the storge server with HTTP port only,
#        do not request and get response
#   http: storage check alive url must return http status 200
# default value is tcp
http.check_alive_type=tcp

# check storage HTTP server alive uri/url
# NOTE: storage embed HTTP server support uri: /status.html
http.check_alive_uri=/status.html

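Pay attention to the directory where data and logs are stored (base_path). You will need to check the log there after startup.

The directory configured above may not exist yet, so create it:

mkdir -p /home/fastdfs/tracker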
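Instead of retyping the path, you can read base_path from the config file itself. This sketch assumes the file uses only the plain `key=value` and `#` comment lines shown above. `conf_get` and `ensure_base_path` are hypothetical helpers, not FastDFS tools.

```bash
# Hypothetical helper: print the value of KEY ($1) from a FastDFS-style config
# file ($2). Lines starting with '#' are skipped, and spaces or tabs around
# the key and value are trimmed (so "port = 22122" and "port=22122" both work).
# Returns non-zero if the key is not found.
conf_get() {
  awk -v k="$1" '
    /^[ \t]*#/ { next }
    {
      pos = index($0, "=")
      if (pos == 0) next
      name = substr($0, 1, pos - 1)
      gsub(/^[ \t]+|[ \t]+$/, "", name)
      if (name == k) {
        val = substr($0, pos + 1)
        gsub(/^[ \t]+|[ \t]+$/, "", val)
        print val
        found = 1
        exit
      }
    }
    END { exit !found }
  ' "$2"
}

# Hypothetical helper: create the base_path directory named in a config file.
ensure_base_path() {
  local dir
  dir="$(conf_get base_path "$1")" || {
    echo "base_path not set in $1" >&2
    return 1
  }
  mkdir -p "$dir"
}
```

With the tracker.conf above, `ensure_base_path /etc/fdfs/tracker.conf` creates `/home/fastdfs/tracker`. The same call works later for storage.conf.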

- Start the tracker (start|stop|restart are supported) in any of the following ways:

/usr/bin/fdfs_trackerd /etc/fdfs/tracker.conf start

fdfs_trackerd /etc/fdfs/tracker.conf

or with the familiar service command (use stop instead of start to stop it):

service fdfs_trackerd start

- To start the tracker on boot, run:

sudo chkconfig fdfs_trackerd on

- Check the tracker startup log. The base_path configured above (/home/fastdfs/tracker) contains a logs directory with a trackerd.log file:

cd /home/fastdfs/tracker/logs

cat trackerd.log

- Check the listening ports: netstat -apn | grep fdfs
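Instead of reading the `netstat` output by eye, a filter like this one can tell whether anything is listening on the tracker port 22122 (or the storage port 23000). The sketch assumes the usual Linux `netstat -apn` column layout, with the local address in column 4 and the state in column 6. `port_listening` is a hypothetical name.

```bash
# Hypothetical helper: read `netstat -apn` output on stdin and succeed if a
# TCP socket is in LISTEN state on PORT ($1). Assumes the Linux netstat
# layout: column 4 = local address (ends in ":PORT"), column 6 = state.
port_listening() {
  awk -v p=":$1" '
    $1 ~ /^tcp/ && $6 == "LISTEN" {
      addr = $4
      if (substr(addr, length(addr) - length(p) + 1) == p) { found = 1; exit }
    }
    END { exit !found }
  '
}
```

Usage: `netstat -apn | port_listening 22122 && echo "tracker is listening"`. Running the same check on port 23000 before starting the storage also catches the "Address already in use" situation shown further down.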

Appendix: an error you may run into:

/usr/bin/fdfs_trackerd: error while loading shared libraries: libfastcommon.so: cannot open shared object file: No such file or directory

Fix: create symlinks to libfastcommon.so:

ln -s /usr/lib64/libfastcommon.so /usr/local/lib/libfastcommon.so

ln -s /usr/lib64/libfastcommon.so /usr/lib/libfastcommon.so
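If the library was already copied into /usr/lib in step 3, the second `ln -s` fails with "File exists". The following sketch shows a hypothetical `link_lib` helper that creates links only where the file is missing. It does not run `ldconfig` and does not verify that the loader picks up the link.

```bash
# Hypothetical helper: symlink LIB ($1) from SRC_DIR ($2) into each TARGET_DIR
# (remaining arguments) that does not already contain a file of that name
# (plain `ln -s` would fail there with "File exists"). Does not run ldconfig.
link_lib() {
  local lib="$1" src="$2"
  shift 2
  local dst
  if [ ! -e "$src/$lib" ]; then
    echo "$src/$lib not found" >&2
    return 1
  fi
  for dst in "$@"; do
    if [ -e "$dst/$lib" ]; then
      echo "exists: $dst/$lib"
    else
      ln -s "$src/$lib" "$dst/$lib" && echo "linked: $dst/$lib"
    fi
  done
}
```

Usage: `link_lib libfastcommon.so /usr/lib64 /usr/local/lib /usr/lib` links into /usr/local/lib and reports that /usr/lib already has the file.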

6. Configure and start the storage

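FastDFS is already installed, so only the storage needs configuring here.

(1) Change to the /etc/fdfs/ directory.

(2) Make a new copy of the storage config file:

cp storage.conf.sample storage.conf

The storage directory used in the config may not exist yet, so create it:

mkdir -p /home/fastdfs/storage

(3) Edit storage.conf (again, only a few settings need changing):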

# is this config file disabled

# false for enabled

# true for disabled

disabled=false

# bind an address of this host

# empty for bind all addresses of this host

bind_addr=

# the storage server port

port=23000

# connect timeout in seconds

# default value is 30s

connect_timeout=30

# network timeout in seconds

# default value is 30s

network_timeout=60

# the base path to store data and log files

base_path=/home/fastdfs/storage

# max concurrent connections this server supported

max_connections=256

# accept thread count

# default value is 1

# since V4.07

accept_threads=1

# work thread count, should <= max_connections

# default value is 4

# since V2.00

work_threads=4

# if disk read / write separated

## false for mixed read and write

## true for separated read and write

# default value is true

# since V2.00

disk_rw_separated = true

# disk reader thread count per store base path

# for mixed read / write, this parameter can be 0

# default value is 1

# since V2.00

disk_reader_threads = 1

# disk writer thread count per store base path

# for mixed read / write, this parameter can be 0

# default value is 1

# since V2.00

disk_writer_threads = 1

# when no entry to sync, try read binlog again after X milliseconds

# must > 0, default value is 200ms

sync_wait_msec=50

# after sync a file, usleep milliseconds

# 0 for sync successively (never call usleep)

sync_interval=0

# storage sync start time of a day, time format: Hour:Minute

# Hour from 0 to 23, Minute from 0 to 59

sync_start_time=00:00

# storage sync end time of a day, time format: Hour:Minute

# Hour from 0 to 23, Minute from 0 to 59

sync_end_time=23:59

# write to the mark file after sync N files

# default value is 500

write_mark_file_freq=500

# path(disk or mount point) count, default value is 1

store_path_count=1

# store_path#, based 0, if store_path0 not exists, it's value is base_path

# the paths must be exist

store_path0=/home/fastdfs/storage

#store_path1=/home/yuqing/fastdfs2

# subdir_count * subdir_count directories will be auto created under each

# store_path (disk), value can be 1 to 256, default value is 256

subdir_count_per_path=256

# tracker_server can ocur more than once, and tracker_server format is

# "host:port", host can be hostname or ip address

# replace ****.****.****.**** with your own server's IP

tracker_server=****.****.****.****:22122

#standard log level as syslog, case insensitive, value list:

### emerg for emergency

### alert

### crit for critical

### error

### warn for warning

### notice

### info

### debug

log_level=debug

#unix group name to run this program,

#not set (empty) means run by the group of current user

run_by_group=

#unix username to run this program,

#not set (empty) means run by current user

run_by_user=

# allow_hosts can ocur more than once, host can be hostname or ip address,

# "*" (only one asterisk) means match all ip addresses

# we can use CIDR ips like 192.168.5.64/26

# and also use range like these: 10.0.1.[0-254] and host[01-08,20-25].domain.com

# for example:

# allow_hosts=10.0.1.[1-15,20]

# allow_hosts=host[01-08,20-25].domain.com

# allow_hosts=192.168.5.64/26

allow_hosts=*

# the mode of the files distributed to the data path

# 0: round robin(default)

# 1: random, distributted by hash code

file_distribute_path_mode=0

# valid when file_distribute_to_path is set to 0 (round robin),

# when the written file count reaches this number, then rotate to next path

# default value is 100

file_distribute_rotate_count=100

# call fsync to disk when write big file

# 0: never call fsync

# other: call fsync when written bytes >= this bytes

# default value is 0 (never call fsync)

fsync_after_written_bytes=0

# sync log buff to disk every interval seconds

# must > 0, default value is 10 seconds

sync_log_buff_interval=10

# sync binlog buff / cache to disk every interval seconds

# default value is 60 seconds

sync_binlog_buff_interval=10

# sync storage stat info to disk every interval seconds

# default value is 300 seconds

sync_stat_file_interval=300

# thread stack size, should >= 512KB

# default value is 512KB

thread_stack_size=512KB

# the priority as a source server for uploading file.

# the lower this value, the higher its uploading priority.

# default value is 10

upload_priority=10

# the NIC alias prefix, such as eth in Linux, you can see it by ifconfig -a

# multi aliases split by comma. empty value means auto set by OS type

# default values is empty

if_alias_prefix=

# if check file duplicate, when set to true, use FastDHT to store file indexes

# 1 or yes: need check

# 0 or no: do not check

# default value is 0

check_file_duplicate=0

# file signature method for check file duplicate

## hash: four 32 bits hash code

## md5: MD5 signature

# default value is hash

# since V4.01

file_signature_method=hash

# namespace for storing file indexes (key-value pairs)

# this item must be set when check_file_duplicate is true / on

key_namespace=FastDFS

# set keep_alive to 1 to enable persistent connection with FastDHT servers

# default value is 0 (short connection)

keep_alive=0

# you can use "#include filename" (not include double quotes) directive to

# load FastDHT server list, when the filename is a relative path such as

# pure filename, the base path is the base path of current/this config file.

# must set FastDHT server list when check_file_duplicate is true / on

# please see INSTALL of FastDHT for detail

##include /home/yuqing/fastdht/conf/fdht_servers.conf

# if log to access log

# default value is false

# since V4.00

use_access_log = false

# if rotate the access log every day

# default value is false

# since V4.00

rotate_access_log = true

# rotate access log time base, time format: Hour:Minute

# Hour from 0 to 23, Minute from 0 to 59

# default value is 00:00

# since V4.00

access_log_rotate_time=00:00

# if rotate the error log every day

# default value is false

# since V4.02

rotate_error_log = true

# rotate error log time base, time format: Hour:Minute

# Hour from 0 to 23, Minute from 0 to 59

# default value is 00:00

# since V4.02

error_log_rotate_time=00:00

# rotate access log when the log file exceeds this size

# 0 means never rotates log file by log file size

# default value is 0

# since V4.02

rotate_access_log_size = 0

# rotate error log when the log file exceeds this size

# 0 means never rotates log file by log file size

# default value is 0

# since V4.02

rotate_error_log_size = 0

# keep days of the log files

# 0 means do not delete old log files

# default value is 0

log_file_keep_days = 7

# if skip the invalid record when sync file

# default value is false

# since V4.02

file_sync_skip_invalid_record=false

# if use connection pool

# default value is false

# since V4.05

use_connection_pool = false

# connections whose the idle time exceeds this time will be closed

# unit: second

# default value is 3600

# since V4.05

connection_pool_max_idle_time = 3600

# use the ip address of this storage server if domain_name is empty,

# else this domain name will ocur in the url redirected by the tracker server

http.domain_name=

# the port of the web server on this storage server

http.server_port=8888

Make sure the tracker address (tracker_server) is set correctly. Otherwise the storage reports an error at startup.
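A quick sanity check on the tracker_server value before starting can catch a leftover placeholder. This sketch checks only the `host:port` shape described in the config comments: a host made of letters, digits, dots and hyphens, and a port from 1 to 65535. It does not check that the tracker is reachable. `valid_tracker_server` is a hypothetical name.

```bash
# Hypothetical helper: succeed if VALUE ($1) looks like "host:port" as
# described in the storage.conf comments. host: letters, digits, '.' and '-'
# only (so the ****.****.****.**** placeholder is rejected); port: 1..65535.
valid_tracker_server() {
  local value="$1" host port
  case "$value" in
    *:*) ;;
    *) return 1 ;;            # no ":port" part at all
  esac
  host="${value%:*}"
  port="${value##*:}"
  case "$host" in
    ''|*[!A-Za-z0-9.-]*) return 1 ;;
  esac
  case "$port" in
    ''|*[!0-9]*) return 1 ;;
  esac
  [ "$port" -ge 1 ] && [ "$port" -le 65535 ]
}
```

Usage: `valid_tracker_server "$(grep '^tracker_server=' /etc/fdfs/storage.conf | head -n 1 | cut -d= -f2)" || echo "fix tracker_server first"`.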

(4) Start the storage in any of the following ways:

fdfs_storaged /etc/fdfs/storage.conf

sh /etc/init.d/fdfs_storaged start

service fdfs_storaged start # start the fdfs_storaged service; use stop to stop it

To start the storage on boot, run:

chkconfig fdfs_storaged on

(5) Check the log

cd /home/fastdfs/storage/logs

cat storaged.log

[2023-07-26 15:48:35] INFO - FastDFS v5.11, base_path=/home/fastdfs/storage, store_path_count=1, subdir_count_per_path=256, group_name=group1, run_by_group=, run_by_user=, connect_timeout=30s, network_timeout=60s, port=23000, bind_addr=, client_bind=1, max_connections=256, accept_threads=1, work_threads=4, disk_rw_separated=1, disk_reader_threads=1, disk_writer_threads=1, buff_size=256KB, heart_beat_interval=30s, stat_report_interval=60s, tracker_server_count=1, sync_wait_msec=50ms, sync_interval=0ms, sync_start_time=00:00, sync_end_time=23:59, write_mark_file_freq=500, allow_ip_count=-1, file_distribute_path_mode=0, file_distribute_rotate_count=100, fsync_after_written_bytes=0, sync_log_buff_interval=10s, sync_binlog_buff_interval=10s, sync_stat_file_interval=300s, thread_stack_size=512 KB, upload_priority=10, if_alias_prefix=, check_file_duplicate=0, file_signature_method=hash, FDHT group count=0, FDHT server count=0, FDHT key_namespace=, FDHT keep_alive=0, HTTP server port=8888, domain name=, use_access_log=0, rotate_access_log=1, access_log_rotate_time=00:00, rotate_error_log=1, error_log_rotate_time=00:00, rotate_access_log_size=0, rotate_error_log_size=0, log_file_keep_days=7, file_sync_skip_invalid_record=0, use_connection_pool=0, g_connection_pool_max_idle_time=3600s

[2023-07-26 15:48:35] INFO - file: storage_param_getter.c, line: 191, use_storage_id=0, id_type_in_filename=ip, storage_ip_changed_auto_adjust=1, store_path=0, reserved_storage_space=10.00%, use_trunk_file=0, slot_min_size=256, slot_max_size=16 MB, trunk_file_size=64 MB, trunk_create_file_advance=0, trunk_create_file_time_base=02:00, trunk_create_file_interval=86400, trunk_create_file_space_threshold=20 GB, trunk_init_check_occupying=0, trunk_init_reload_from_binlog=0, trunk_compress_binlog_min_interval=0, store_slave_file_use_link=0

[2023-07-26 15:48:35] INFO - file: storage_func.c, line: 257, tracker_client_ip: ********, my_server_id_str: ********, g_server_id_in_filename: -822470484

[2023-07-26 15:48:35] DEBUG - file: storage_ip_changed_dealer.c, line: 241, last my ip is ********, current my ip is ********

[2023-07-26 15:48:35] ERROR - file: sockopt.c, line: 861, bind port 23000 failed, errno: 98, error info: Address already in use.

[2023-07-26 15:48:35] CRIT - exit abnormally!

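This happens because the storage was already running, so starting it again fails with "Address already in use". In that case, restart it instead:

fdfs_storaged /etc/fdfs/storage.conf restart

Log output like the following, including the "successfully connect to tracker server" line, means the storage started successfully: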

[2023-07-26 15:51:09] INFO - FastDFS v5.11, base_path=/home/fastdfs/storage, store_path_count=1, subdir_count_per_path=256, group_name=group1, run_by_group=, run_by_user=, connect_timeout=30s, network_timeout=60s, port=23000, bind_addr=, client_bind=1, max_connections=256, accept_threads=1, work_threads=4, disk_rw_separated=1, disk_reader_threads=1, disk_writer_threads=1, buff_size=256KB, heart_beat_interval=30s, stat_report_interval=60s, tracker_server_count=1, sync_wait_msec=50ms, sync_interval=0ms, sync_start_time=00:00, sync_end_time=23:59, write_mark_file_freq=500, allow_ip_count=-1, file_distribute_path_mode=0, file_distribute_rotate_count=100, fsync_after_written_bytes=0, sync_log_buff_interval=10s, sync_binlog_buff_interval=10s, sync_stat_file_interval=300s, thread_stack_size=512 KB, upload_priority=10, if_alias_prefix=, check_file_duplicate=0, file_signature_method=hash, FDHT group count=0, FDHT server count=0, FDHT key_namespace=, FDHT keep_alive=0, HTTP server port=8888, domain name=, use_access_log=0, rotate_access_log=1, access_log_rotate_time=00:00, rotate_error_log=1, error_log_rotate_time=00:00, rotate_access_log_size=0, rotate_error_log_size=0, log_file_keep_days=7, file_sync_skip_invalid_record=0, use_connection_pool=0, g_connection_pool_max_idle_time=3600s

[2023-07-26 15:51:09] INFO - file: storage_param_getter.c, line: 191, use_storage_id=0, id_type_in_filename=ip, storage_ip_changed_auto_adjust=1, store_path=0, reserved_storage_space=10.00%, use_trunk_file=0, slot_min_size=256, slot_max_size=16 MB, trunk_file_size=64 MB, trunk_create_file_advance=0, trunk_create_file_time_base=02:00, trunk_create_file_interval=86400, trunk_create_file_space_threshold=20 GB, trunk_init_check_occupying=0, trunk_init_reload_from_binlog=0, trunk_compress_binlog_min_interval=0, store_slave_file_use_link=0

[2023-07-26 15:51:09] INFO - file: storage_func.c, line: 257, tracker_client_ip: ********, my_server_id_str: ********, g_server_id_in_filename: -822470484

[2023-07-26 15:51:09] DEBUG - file: storage_ip_changed_dealer.c, line: 241, last my ip is ********, current my ip is ********

[2023-07-26 15:51:09] DEBUG - file: fast_task_queue.c, line: 227, max_connections: 256, init_connections: 256, alloc_task_once: 256, min_buff_size: 262144, max_buff_size: 262144, block_size: 263288, arg_size: 1008, max_data_size: 268435456, total_size: 67401728

[2023-07-26 15:51:09] DEBUG - file: fast_task_queue.c, line: 284, malloc task info as whole: 1, malloc loop count: 1

[2023-07-26 15:51:09] DEBUG - file: tracker_client_thread.c, line: 225, report thread to tracker server ********:22122 started

[2023-07-26 15:51:09] INFO - file: tracker_client_thread.c, line: 310, successfully connect to tracker server ********:22122, as a tracker client, my ip is********

[2023-07-26 15:51:09] INFO - file: tracker_client_thread.c, line: 1263, tracker server ********:22122, set tracker leader: ********:22122

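Alternatively, find the storage process, kill it, and start it again:

ps -ef | grep storage

# kill the corresponding process ID

kill -9 xxxx

fdfs_storaged /etc/fdfs/storage.conf

Finally, check the running processes with ps -ef | grep fdfs.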
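You can also check the log with a script. This sketch assumes the log wording shown above (lines containing "successfully connect to tracker server", "ERROR -" or "CRIT -"). Each start appends to the same file, so it reports whichever of those lines appears last. `storage_log_status` is a hypothetical helper.

```bash
# Hypothetical helper: look at the most recent line in a storaged.log ($1)
# that mentions a tracker connection success, an ERROR or a CRIT, based on
# the messages quoted in this post.
# Prints "ok", "error: <line>" or "unknown" (status 0, 1 or 2).
storage_log_status() {
  local line
  line="$(grep -E 'successfully connect to tracker server|ERROR -|CRIT -' "$1" | tail -n 1)"
  case "$line" in
    *"successfully connect to tracker server"*) echo "ok"; return 0 ;;
    "") echo "unknown"; return 2 ;;
    *) echo "error: $line"; return 1 ;;
  esac
}
```

With the log above, `storage_log_status /home/fastdfs/storage/logs/storaged.log` prints `ok`, because the successful restart comes after the earlier "Address already in use" failure.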

7. Install Nginx and the fastdfs-nginx-module module

- Download the Nginx package:

wget http://nginx.org/download/nginx-1.15.2.tar.gz

- Download the fastdfs-nginx-module package:

wget https://github.com/happyfish100/fastdfs-nginx-module/archive/V1.20.tar.gz

- Extract nginx: tar -zxvf nginx-1.15.2.tar.gz

- Install nginx's dependencies:

yum install -y pcre pcre-devel zlib zlib-devel openssl openssl-devel

- Extract fastdfs-nginx-module: tar -xvf V1.20.tar.gz

- Copy mod_fastdfs.conf from fastdfs-nginx-module/src to /etc/fdfs/ (run the cp inside that src directory), then edit /etc/fdfs/mod_fastdfs.conf:

cp mod_fastdfs.conf /etc/fdfs/

vi /etc/fdfs/mod_fastdfs.conf

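2. Change the following settings:

base_path=/home/fastdfs
tracker_server=10.129.44.128:22122
# with several trackers, add one tracker_server line per tracker, e.g.
#tracker_server=192.168.172.20:22122
# the URL contains the group name
url_have_group_name=true
# the file storage path (the store_path0 configured in storage.conf above)
store_path0=/home/fastdfs/storage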
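You can also script these edits. The sketch below assumes the same `key=value` format. `conf_set` is a hypothetical helper that replaces the first uncommented line for a key, leaving `#tracker_server=...` lines alone, or appends the key if it is missing. It only touches the first match, so it is not suited to files with several `tracker_server` lines.

```bash
# Hypothetical helper: set KEY=VALUE in a FastDFS-style config file.
# Replaces the first uncommented "KEY=..." line (spaces around '=' allowed);
# commented lines such as "#tracker_server=..." are left alone. If the key is
# not present, "KEY=VALUE" is appended. Note: awk -v expands backslash
# escapes, so VALUE should not contain backslashes.
conf_set() {
  local key="$1" value="$2" file="$3" tmp status
  tmp="$(mktemp)" || return 1
  awk -v k="$key" -v v="$value" '
    !done && $0 !~ /^[ \t]*#/ {
      pos = index($0, "=")
      if (pos > 0) {
        name = substr($0, 1, pos - 1)
        gsub(/^[ \t]+|[ \t]+$/, "", name)
        if (name == k) { print k "=" v; done = 1; next }
      }
    }
    { print }
    END { if (!done) print k "=" v }
  ' "$file" > "$tmp" && cat "$tmp" > "$file"   # cat keeps the file's owner and mode
  status=$?
  rm -f "$tmp"
  return "$status"
}
```

For example, `conf_set url_have_group_name true /etc/fdfs/mod_fastdfs.conf` and `conf_set store_path0 /home/fastdfs/storage /etc/fdfs/mod_fastdfs.conf` apply two of the settings above.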

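3. Copy libfdfsclient.so to /usr/lib:

cp /usr/lib64/libfdfsclient.so /usr/lib/

4. Create the nginx client temp directory:

mkdir -p /var/temp/nginx/client

- Enter the nginx source directory: cd nginx-1.15.2

- Configure it with the fastdfs-nginx-module module added, then compile and install:

./configure --prefix=/usr/local/nginx --add-module=/opt/fastdfs-nginx-module-1.20/src/

make && make install

FastDFS and nginx had both installed fine, but the compile failed when I got to building fastdfs-nginx-module. The fix for that error is described further below.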

After a successful install, look at the generated directory:

cd /usr/local/nginx

ll

8. Copy the config files to /etc/fdfs:

cd /opt/fastdfs-5.11/conf/

cp http.conf mime.types /etc/fdfs/

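9. Edit the nginx config file

- mkdir /usr/local/nginx/logs # create the logs directory

- cd /usr/local/nginx/conf/ and then run vim nginx.conf

- Make the following changes:

(I haven't made this change yet, because I couldn't find any file or folder named ngx_fastdfs_module. Note that ngx_fastdfs_module is a directive that the compiled-in module adds to nginx.conf, not a file.)

Explanation:

location /group1/M00/: group1 is the name of the FastDFS group that nginx serves. M00 is a number that FastDFS generates automatically for store_path0 (/home/fastdfs/storage in this setup). If FastDFS also defined store_path1, the prefix here would be M01.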
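The mapping from store path to URL prefix, and the matching nginx location block, can be generated with a small sketch. It assumes that FastDFS writes the store path index as two hex digits after "M", so store_path0 gives M00 and store_path1 gives M01, as described above. `store_path_prefix` and `nginx_fdfs_location` are hypothetical helpers. You still have to paste the printed block into the right `server { }` section of nginx.conf by hand.

```bash
# Hypothetical helpers. Assumption: FastDFS names store_pathN in URLs as "M"
# followed by N in two hex digits (store_path0 -> M00, store_path1 -> M01).
store_path_prefix() {
  # $1 = group name (e.g. group1), $2 = store path index (0, 1, ...)
  printf '/%s/M%02X/\n' "$1" "$2"
}

# Print an nginx location block that hands that prefix to the
# ngx_fastdfs_module directive provided by fastdfs-nginx-module.
nginx_fdfs_location() {
  printf 'location %s {\n    ngx_fastdfs_module;\n}\n' "$(store_path_prefix "$1" "$2")"
}
```

For example, `store_path_prefix group1 1` prints `/group1/M01/`. `nginx_fdfs_location group1 0` prints a `location /group1/M00/ { ... }` block whose body is the single directive `ngx_fastdfs_module;`.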

Note: you may get the following error while building fastdfs-nginx-module:

/usr/local/include/fastdfs/fdfs_define.h:15:27: fatal error: common_define.h: No such file or directory

Fix:

Edit the fastdfs-nginx-module-1.20/src/config file:

vim fastdfs-nginx-module-1.20/src/config

and change these lines to:

ngx_module_incs="/usr/include/fastdfs /usr/include/fastcommon/"

CORE_INCS="$CORE_INCS /usr/include/fastdfs /usr/include/fastcommon/"

Then go back to the nginx directory and rerun:

./configure --prefix=/usr/local/nginx --add-module=/opt/fastdfs-nginx-module-1.20/src/

make && make install

The error goes away.
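You can apply the same edit with `sed`. This sketch assumes that the shipped `src/config` sets `ngx_module_incs=...` and `CORE_INCS="$CORE_INCS ..."` each on a single line, and it relies on GNU `sed -i` as found on CentOS. `patch_module_config` is a hypothetical name. It keeps a `.bak` copy of the original file.

```bash
# Hypothetical helper: point fastdfs-nginx-module's src/config ($1) at
# /usr/include/fastdfs and /usr/include/fastcommon (the two lines shown
# above). Assumes each setting sits on one line; keeps CONFIG.bak as a backup.
# Uses GNU sed -i (as on CentOS).
patch_module_config() {
  local cfg="$1"
  if [ ! -f "$cfg" ]; then
    echo "$cfg not found" >&2
    return 1
  fi
  cp "$cfg" "$cfg.bak" || return 1
  sed -i \
    -e 's|^\([[:space:]]*\)ngx_module_incs=.*|\1ngx_module_incs="/usr/include/fastdfs /usr/include/fastcommon/"|' \
    -e 's|^\([[:space:]]*\)CORE_INCS="\$CORE_INCS .*|\1CORE_INCS="$CORE_INCS /usr/include/fastdfs /usr/include/fastcommon/"|' \
    "$cfg"
}
```

Usage: `patch_module_config /opt/fastdfs-nginx-module-1.20/src/config`, then rerun `./configure` and `make && make install` in the nginx directory.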

10. Check the install path: whereis nginx


11. Start and stop:

cd /usr/local/nginx/sbin/

./nginx

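./nginx -s stop # stops nginx right away, roughly like looking up the nginx process ID and then killing it with kill

./nginx -s quit # stops nginx only after its processes finish the tasks they are handling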

./nginx -s reload

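12. Check the nginx version again (./nginx -V). The fastdfs module now shows up as installed.

(I had renamed the fastdfs-nginx-module-1.20 folder to fastdfs-nginx-module because at one point the path fastdfs-nginx-module-1.20 couldn't be found, but later it seemed to work without renaming. If you get a "no such file" error, check first that every file and directory name you use exists and matches.)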
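You can also script the module check. `nginx -V` prints the configure arguments to stderr, so this sketch reads that output on stdin and looks for an `--add-module` path containing `fastdfs-nginx-module`. `has_fastdfs_module` is a hypothetical helper. A module directory renamed to something without that string would need a different pattern.

```bash
# Hypothetical helper: read `nginx -V` output on stdin and succeed if the
# configure arguments contain an --add-module path with
# "fastdfs-nginx-module" in it.
has_fastdfs_module() {
  grep -q -- '--add-module=[^ ]*fastdfs-nginx-module'
}
```

Usage: `/usr/local/nginx/sbin/nginx -V 2>&1 | has_fastdfs_module && echo "fastdfs module compiled in"`.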
