小车(RK3588s)yolov5模型转化部署

1 将训练好的.pt文件转化为.onnx文件

首先,参照官方的RKNN文档的操作,将对应的部分进行修改。RKNN官方文档

yolov5工程中的 ./models/yolo.py 文件中的 class Detect(nn.Module): 类 里 forward 代码进行修改,内容如下。

def forward(self, x):

z = [] # inference output

for i in range(self.nl):

if os.getenv('RKNN_model_hack', '0') != '0':

z.append(torch.sigmoid(self.m[i](x[i])))

continue

x[i] = self.m[i](x[i]) # conv

bs, _, ny, nx = x[i].shape # x(bs,255,20,20) to x(bs,3,20,20,85)

x[i] = x[i].view(bs, self.na, self.no, ny, nx).permute(0, 1, 3, 4, 2).contiguous()

if not self.training: # inference

if self.onnx_dynamic or self.grid[i].shape[2:4] != x[i].shape[2:4]:

self.grid[i], self.anchor_grid[i] = self._make_grid(nx, ny, i)

y = x[i].sigmoid()

if self.inplace:

y[..., 0:2] = (y[..., 0:2] * 2 + self.grid[i]) * self.stride[i] # xy

y[..., 2:4] = (y[..., 2:4] * 2) ** 2 * self.anchor_grid[i] # wh

else: # for YOLOv5 on AWS Inferentia https://github.com/ultralytics/yolov5/pull/2953

xy, wh, conf = y.split((2, 2, self.nc + 1), 4) # y.tensor_split((2, 4, 5), 4) # torch 1.8.0

xy = (xy * 2 + self.grid[i]) * self.stride[i] # xy

wh = (wh * 2) ** 2 * self.anchor_grid[i] # wh

y = torch.cat((xy, wh, conf), 4)

z.append(y.view(bs, -1, self.no))

if os.getenv('RKNN_model_hack', '0') != '0':

return z

return x if self.training else (torch.cat(z, 1),) if self.export else (torch.cat(z, 1), x)修改为:

def forward(self, x):

z = [] # inference output

for i in range(self.nl):

x[i] = self.m[i](x[i]) # conv

return x注意:修改后此工程将不可进行训练模型程序,会出现报错。

修改完成后我们可以直接对export.py进行修改导出,也可通过传参的方法运行export.py进行导出。将训练得到的best.pt复制到export.py同一级文件夹下。

在命令终端输入以下命令即可传参导出

python export.py --weights best.pt --img 640 --batch 1 --include onnx之后,在export.py的同级目录下,就会生成best.onnx这个文件,我们需要做的就是把这个文件复制到我们的Ubuntu18.0.或者20.0系统里面,进行处理。

2 将onnx转化为rknn

此步骤在Ubuntu系统中进行操作,请提前在电脑中安装Ubuntu双系统或者Ubuntu虚拟机。

首先,我们在Ubuntu系统中安装anaconda,创建一个rknn的虚拟环境。使用anaconda可以保证环境之间的库不会出现彼此冲突。如果未安装anaconda,可参考之前一篇文章基于rk3588s的yolov5深度学习从训练到部署--讯飞智能车识别任务(一)_0-Linxin的博客-CSDN博客原理类似。

conda create -n rknn python=3.8进入我们创建的虚拟环境后,我们需要安装python3所需要的依赖

sudo apt-get install python3 python3-dev python3-pip

sudo apt-get install zlib1g zlib1g-dev

sudo apt-get install libxslt1-dev libglib2.0-0 libsm6 libgl1-mesa-glx libprotobuf-dev gcc安装完依赖后,下载 rknn,在GitHub - rockchip-linux/rknn-toolkit2里将整个项目下载下来,解压rknn-toolkit2-master进入刚刚创立虚拟环境下配置rknn-toolkit2。进入doc目录后,输入命令

pip3 install -r requirements_cp38-1.5.0.txt -i https://mirror.baidu.com/pypi/simple如果出现某些库报错的情况,就单独安装出问题的库,具体问题就网络查找资料解决,在此不过多赘述。

成功安装完全部的依赖后,继续安装rknn-toolkit2,进入packages文件夹,输入命令

pip3 install rknn_toolkit2-1.5.0+1fa95b5c-cp38-cp38-linux_x86_64.whl具体文件名根据所下载的版本,后续更新版本文件名可能不同,根据具体情况进行更改。

成功安装无报错,我们即可在终端验证是否安装成功,终端输入python3,进入python的交互界面,再输入以下命令,如果没有报错,即成功安装

from rknn.api import RKNN

接下来,进入example/onnx/yolov5文件夹下,找到test.py文件,修改模型地址,和类别。

anchors是否需要更改视情况而定,如果在前期训练时更改过模型输入层或者文件anchors内容不一致,则需要进行更改。具体内容在例如ylov5s.yaml中有。基于rk3588s的yolov5深度学习从训练到部署--讯飞智能车识别任务(三)_0-Linxin的博客-CSDN博客

完成上述操作后我们还需要修改一下后处理process函数,将代码修改为以下格式

def process(input, mask, anchors):

anchors = [anchors[i] for i in mask]

grid_h, grid_w = map(int, input.shape[0:2])

box_confidence = input[..., 4]

box_confidence = np.expand_dims(box_confidence, axis=-1)

box_class_probs = input[..., 5:]

box_xy = input[..., :2]*2 - 0.5

col = np.tile(np.arange(0, grid_w), grid_w).reshape(-1, grid_w)

row = np.tile(np.arange(0, grid_h).reshape(-1, 1), grid_h)

col = col.reshape(grid_h, grid_w, 1, 1).repeat(3, axis=-2)

row = row.reshape(grid_h, grid_w, 1, 1).repeat(3, axis=-2)

grid = np.concatenate((col, row), axis=-1)

box_xy += grid

box_xy *= int(IMG_SIZE/grid_h)

box_wh = pow(input[..., 2:4]*2, 2)

box_wh = box_wh * anchors

box = np.concatenate((box_xy, box_wh), axis=-1)

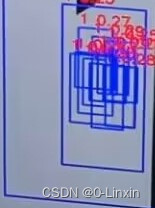

return box, box_confidence, box_class_probs不进行更改可能会出现以下重复框的情况,具体情况具体分析,如果更改后仍然出现,就将更改的代码恢复为官方代码再次进行尝试。

至此电脑端安装完毕,我们在需要转化的onnx模型所在的文件夹中打开终端输入以下命令

python3 test.py

将会生成一个best.rknn的文件。

3 小车(rk3588板卡)运行模型

首先在小车的Ubuntu系统安装Miniconda Miniconda,原理相同避免环境混乱。在miniconda官网找到适用板卡的aarch64架构的安装包,python版本我这里选择的是3.8。下载完成后,找到安装包所在的文件夹,打开终端输入命令

bash Miniconda3-py38_23.3.1-0-Linux-aarch64.sh

具体命令按照你所下载的版本所更改。

安装成功后,创建并激活rknn虚拟环境,python版本我这为3.8。操作与anaconda一样。

进入虚拟环境后,安装rknn依赖。以下是经过实验可行的python==3.8的依赖。

# base deps

numpy==1.19.5

protobuf==3.12.2

flatbuffers==1.12

# utils

requests==2.27.1

psutil==5.9.0

ruamel.yaml==0.17.4

scipy==1.5.4

tqdm==4.64.0

opencv-python==4.5.5.64

fast-histogram==0.11

# base

onnx==1.10.0

onnxoptimizer==0.2.7

onnxruntime==1.10.0

torch==1.10.1

torchvision==0.11.2

tensorflow==2.6.2创建一个rknn_cp38.txt的文本文件,将上述的依赖复制到文本文件中。

并在该文件所在位置打开终端进入创建的rknn虚拟环境,输入以下命令,安装依赖。

pip3 install -r rknn_cp38.txt -i https://mirror.baidu.com/pypi/simple

成功安装后。进入rknn-toolkit2-master\rknn_toolkit_lite2\packages 目录,安装rknn

pip3 install rknn_toolkit_lite2-1.5.0-cp38-cp38-linux_aarch64.whl

因为rk3588的npu驱动需要下载,所以需要安装RKNPU2。GitHub - rockchip-linux/rknpu2RKNPU2的安装只需要将动态链接库和C头文件放到指定路径即可。这里传输选择使用sudo cp 命令(具体如何使用Linux的sudo cp 自行查找)。

(1)将rknpu2-master/runtime/RK3588/Linux/librknn_api/aarch64中的librknnrt.so、librknn_api.so两个文件放在开发板的/usr/lib目录。

(2)将rknpu2-master/runtime/RK3588/Linux/librknn_api/include中的rknn_api.h文件放在开发板的/usr/include目录。

(3)将rknpu2-master/runtime/RK3588/Linux/rknn_server/aarch64/usr/bin中的restart_rknn.sh、rknn_server、start_rknn.sh三个文件放在开发板的/usr/bin目录。

完成以上步骤,进行验证:

进入目录 examples/inference_with_lite ,

执行 python test.py 无报错即可。

在此我提供一个demo.py可进行摄像头实时检测

import os

import urllib

import traceback

import time

import datetime as dt

import sys

import numpy as np

import cv2

from rknnlite.api import RKNNLite

RKNN_MODEL = 'best.rknn'

QUANTIZE_ON = True

OBJ_THRESH = 0.25

NMS_THRESH = 0.45

IMG_SIZE = 640

'''CLASSES = ("person", "bicycle", "car", "motorbike ", "aeroplane ", "bus ", "train", "truck ", "boat", "traffic light",

"fire hydrant", "stop sign ", "parking meter", "bench", "bird", "cat", "dog ", "horse ", "sheep", "cow", "elephant",

"bear", "zebra ", "giraffe", "backpack", "umbrella", "handbag", "tie", "suitcase", "frisbee", "skis", "snowboard", "sports ball", "kite",

"baseball bat", "baseball glove", "skateboard", "surfboard", "tennis racket", "bottle", "wine glass", "cup", "fork", "knife ",

"spoon", "bowl", "banana", "apple", "sandwich", "orange", "broccoli", "carrot", "hot dog", "pizza ", "donut", "cake", "chair", "sofa",

"pottedplant", "bed", "diningtable", "toilet ", "tvmonitor", "laptop ", "mouse ", "remote ", "keyboard ", "cell phone", "microwave ",

"oven ", "toaster", "sink", "refrigerator ", "book", "clock", "vase", "scissors ", "teddy bear ", "hair drier", "toothbrush ")

'''

CLASSES = ("0","1","2","3","4","5","6","7","8","9")

def sigmoid(x):

return 1 / (1 + np.exp(-x))

def xywh2xyxy(x):

# Convert [x, y, w, h] to [x1, y1, x2, y2]

y = np.copy(x)

y[:, 0] = x[:, 0] - x[:, 2] / 2 # top left x

y[:, 1] = x[:, 1] - x[:, 3] / 2 # top left y

y[:, 2] = x[:, 0] + x[:, 2] / 2 # bottom right x

y[:, 3] = x[:, 1] + x[:, 3] / 2 # bottom right y

return y

def process(input, mask, anchors):

anchors = [anchors[i] for i in mask]

grid_h, grid_w = map(int, input.shape[0:2])

box_confidence = sigmoid(input[..., 4])

box_confidence = np.expand_dims(box_confidence, axis=-1)

box_class_probs = sigmoid(input[..., 5:])

box_xy = sigmoid(input[..., :2])*2 - 0.5

col = np.tile(np.arange(0, grid_w), grid_w).reshape(-1, grid_w)

row = np.tile(np.arange(0, grid_h).reshape(-1, 1), grid_h)

col = col.reshape(grid_h, grid_w, 1, 1).repeat(3, axis=-2)

row = row.reshape(grid_h, grid_w, 1, 1).repeat(3, axis=-2)

grid = np.concatenate((col, row), axis=-1)

box_xy += grid

box_xy *= int(IMG_SIZE/grid_h)

box_wh = pow(sigmoid(input[..., 2:4])*2, 2)

box_wh = box_wh * anchors

box = np.concatenate((box_xy, box_wh), axis=-1)

return box, box_confidence, box_class_probs

def filter_boxes(boxes, box_confidences, box_class_probs):

"""Filter boxes with box threshold. It's a bit different with origin yolov5 post process!

# Arguments

boxes: ndarray, boxes of objects.

box_confidences: ndarray, confidences of objects.

box_class_probs: ndarray, class_probs of objects.

# Returns

boxes: ndarray, filtered boxes.

classes: ndarray, classes for boxes.

scores: ndarray, scores for boxes.

"""

boxes = boxes.reshape(-1, 4)

box_confidences = box_confidences.reshape(-1)

box_class_probs = box_class_probs.reshape(-1, box_class_probs.shape[-1])

_box_pos = np.where(box_confidences >= OBJ_THRESH)

boxes = boxes[_box_pos]

box_confidences = box_confidences[_box_pos]

box_class_probs = box_class_probs[_box_pos]

class_max_score = np.max(box_class_probs, axis=-1)

classes = np.argmax(box_class_probs, axis=-1)

_class_pos = np.where(class_max_score >= OBJ_THRESH)

boxes = boxes[_class_pos]

classes = classes[_class_pos]

scores = (class_max_score* box_confidences)[_class_pos]

return boxes, classes, scores

def nms_boxes(boxes, scores):

"""Suppress non-maximal boxes.

# Arguments

boxes: ndarray, boxes of objects.

scores: ndarray, scores of objects.

# Returns

keep: ndarray, index of effective boxes.

"""

x = boxes[:, 0]

y = boxes[:, 1]

w = boxes[:, 2] - boxes[:, 0]

h = boxes[:, 3] - boxes[:, 1]

areas = w * h

order = scores.argsort()[::-1]

keep = []

while order.size > 0:

i = order[0]

keep.append(i)

xx1 = np.maximum(x[i], x[order[1:]])

yy1 = np.maximum(y[i], y[order[1:]])

xx2 = np.minimum(x[i] + w[i], x[order[1:]] + w[order[1:]])

yy2 = np.minimum(y[i] + h[i], y[order[1:]] + h[order[1:]])

w1 = np.maximum(0.0, xx2 - xx1 + 0.00001)

h1 = np.maximum(0.0, yy2 - yy1 + 0.00001)

inter = w1 * h1

ovr = inter / (areas[i] + areas[order[1:]] - inter)

inds = np.where(ovr <= NMS_THRESH)[0]

order = order[inds + 1]

keep = np.array(keep)

return keep

def yolov5_post_process(input_data):

masks = [[0, 1, 2], [3, 4, 5], [6, 7, 8]]

anchors = [[199, 371], [223, 481], [263, 428], [278, 516], [320, 539], [323, 464], [361, 563], [402, 505], [441, 584]]

boxes, classes, scores = [], [], []

for input, mask in zip(input_data, masks):

b, c, s = process(input, mask, anchors)

b, c, s = filter_boxes(b, c, s)

boxes.append(b)

classes.append(c)

scores.append(s)

boxes = np.concatenate(boxes)

boxes = xywh2xyxy(boxes)

classes = np.concatenate(classes)

scores = np.concatenate(scores)

nboxes, nclasses, nscores = [], [], []

for c in set(classes):

inds = np.where(classes == c)

b = boxes[inds]

c = classes[inds]

s = scores[inds]

keep = nms_boxes(b, s)

nboxes.append(b[keep])

nclasses.append(c[keep])

nscores.append(s[keep])

if not nclasses and not nscores:

return None, None, None

boxes = np.concatenate(nboxes)

classes = np.concatenate(nclasses)

scores = np.concatenate(nscores)

return boxes, classes, scores

def draw(image, boxes, scores, classes, fps):

"""Draw the boxes on the image.

# Argument:

image: original image.

boxes: ndarray, boxes of objects.

classes: ndarray, classes of objects.

scores: ndarray, scores of objects.

fps: int.

all_classes: all classes name.

"""

for box, score, cl in zip(boxes, scores, classes):

top, left, right, bottom = box

print('class: {}, score: {}'.format(CLASSES[cl], score))

print('box coordinate left,top,right,down: [{}, {}, {}, {}]'.format(top, left, right, bottom))

top = int(top)

left = int(left)

right = int(right)

bottom = int(bottom)

cv2.rectangle(image, (top, left), (right, bottom), (255, 0, 0), 2)

cv2.putText(image, '{0} {1:.2f}'.format(CLASSES[cl], score),

(top, left - 6),

cv2.FONT_HERSHEY_SIMPLEX,

0.6, (0, 0, 255), 2)

def letterbox(im, new_shape=(640, 640), color=(0, 0, 0)):

# Resize and pad image while meeting stride-multiple constraints

shape = im.shape[:2] # current shape [height, width]

if isinstance(new_shape, int):

new_shape = (new_shape, new_shape)

# Scale ratio (new / old)

r = min(new_shape[0] / shape[0], new_shape[1] / shape[1])

# Compute padding

ratio = r, r # width, height ratios

new_unpad = int(round(shape[1] * r)), int(round(shape[0] * r))

dw, dh = new_shape[1] - new_unpad[0], new_shape[0] - new_unpad[1] # wh padding

dw /= 2 # divide padding into 2 sides

dh /= 2

if shape[::-1] != new_unpad: # resize

im = cv2.resize(im, new_unpad, interpolation=cv2.INTER_LINEAR)

top, bottom = int(round(dh - 0.1)), int(round(dh + 0.1))

left, right = int(round(dw - 0.1)), int(round(dw + 0.1))

im = cv2.copyMakeBorder(im, top, bottom, left, right, cv2.BORDER_CONSTANT, value=color) # add border

return im, ratio, (dw, dh)

# ==================================

rknn = RKNNLite()

print('--> Load RKNN model')

ret = rknn.load_rknn(RKNN_MODEL)

print('--> Init runtime environment')

ret = rknn.init_runtime(core_mask=RKNNLite.NPU_CORE_0_1_2)

if ret != 0:

print('Init runtime environment failed!')

exit(ret)

print('done')

cap = cv2.VideoCapture(0)

if (cap.isOpened()== False):

print("Error opening video stream or file")

while(cap.isOpened()):

start = dt.datetime.utcnow()

# Capture frame-by-frame

ret, img = cap.read()

if not ret:

break

img = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)

img = cv2.resize(img, (IMG_SIZE, IMG_SIZE))

# Inference

#print('--> Running model')

outputs = rknn.inference(inputs=[img])

#print('done')

# post process

input0_data = outputs[0]

input1_data = outputs[1]

input2_data = outputs[2]

input0_data = input0_data.reshape([3, -1]+list(input0_data.shape[-2:]))

input1_data = input1_data.reshape([3, -1]+list(input1_data.shape[-2:]))

input2_data = input2_data.reshape([3, -1]+list(input2_data.shape[-2:]))

input_data = list()

input_data.append(np.transpose(input0_data, (2, 3, 0, 1)))

input_data.append(np.transpose(input1_data, (2, 3, 0, 1)))

input_data.append(np.transpose(input2_data, (2, 3, 0, 1)))

boxes, classes, scores = yolov5_post_process(input_data)

duration = dt.datetime.utcnow() - start

fps = round(1000000 / duration.microseconds)

# draw process result and fps

img_1 = cv2.cvtColor(img, cv2.COLOR_RGB2BGR)

cv2.putText(img_1, f'fps: {fps}',

(20, 20),

cv2.FONT_HERSHEY_SIMPLEX,

0.6, (0, 125, 125), 2)

if boxes is not None:

draw(img_1, boxes, scores, classes, fps)

# show output

cv2.imshow("post process result", img_1)

# Press Q on keyboard to exit

if cv2.waitKey(25) & 0xFF == ord('q'):

break

# When everything done, release the video capture object

cap.release()

# Closes all the frames

cv2.destroyAllWindows()0

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?