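Prepare the machines

Prepare three virtual machines, each with 4 GB of RAM, 4 CPU cores, and a 200 GB disk (recommended), to serve as the master, node-1, and node-2 of the k8s cluster.

Installation environment

CentOS 7.9, k8s 1.20.6, Docker 24.0.6

Initialize the lab environment for installing the k8s cluster

1. Set the hostname of each machine in the k8s cluster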

[root@localhost ~]# hostnamectl set-hostname master && bash

[root@master ~]#

[root@localhost ~]# hostnamectl set-hostname node-1 && su

[root@node-1 ~]#

[root@localhost ~]# hostnamectl set-hostname node-2 && su

[root@node-2 ~]#

2. Disable firewalld and SELinux

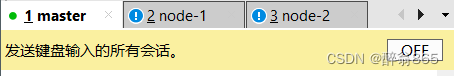

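If you use Xshell, you can operate on all three machines at once: choose Tools -> Send Key Input To -> Connected Sessions, and the yellow notice shown in the screenshot will appear.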

[root@master ~]# service firewalld stop

Redirecting to /bin/systemctl stop firewalld.service

[root@master ~]# systemctl disable firewalld

Removed symlink /etc/systemd/system/multi-user.target.wants/firewalld.service.

Removed symlink /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.

[root@master ~]# sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config

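Syntax: sed -i 's/string to replace/new string/g' target-file. The -i option edits the file in place instead of printing the result to the terminal.

You can also open the file and edit it directly: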

[root@master ~]# vim /etc/selinux/config

# This file controls the state of SELinux on the system.

# SELINUX= can take one of these three values:

# enforcing - SELinux security policy is enforced.

# permissive - SELinux prints warnings instead of enforcing.

# disabled - No SELinux policy is loaded.

SELINUX=enforcing    # change enforcing to disabled, i.e. SELINUX=disabled

# SELINUXTYPE= can take one of three values:

# targeted - Targeted processes are protected,

# minimum - Modification of targeted policy. Only selected processes are protected.

# mls - Multi Level Security protection.

SELINUXTYPE=targeted

[root@node-1 ~]# service firewalld stop

Redirecting to /bin/systemctl stop firewalld.service

[root@node-1 ~]# systemctl disable firewalld

Removed symlink /etc/systemd/system/multi-user.target.wants/firewalld.service.

Removed symlink /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.

[root@node-1 ~]# sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config

[root@node-2 ~]# service firewalld stop

Redirecting to /bin/systemctl stop firewalld.service

[root@node-2 ~]# systemctl disable firewalld

Removed symlink /etc/systemd/system/multi-user.target.wants/firewalld.service.

Removed symlink /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.

[root@node-2 ~]# sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config

After the change, reboot each machine so that the new SELinux setting takes effect.

[root@master ~]# reboot

[root@master ~]# getenforce

Disabled
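The sed command used above only matches a file that currently says SELINUX=enforcing. A minimal sketch of a slightly more defensive variant follows; the function name set_selinux_disabled is hypothetical, and the config path is a parameter so the function can be tried on a copy first.

```shell
# Hypothetical helper: set SELINUX=disabled in an SELinux config file
# (default: /etc/selinux/config).
# Anchoring on ^SELINUX= leaves comment lines and SELINUXTYPE= untouched,
# and also handles a file that currently says SELINUX=permissive.
set_selinux_disabled() {
    local cfg="${1:-/etc/selinux/config}"
    sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$cfg"
    # Print the resulting setting so the change can be checked by eye.
    grep '^SELINUX=' "$cfg"
}
```

Running set_selinux_disabled with no argument edits /etc/selinux/config; a reboot is still needed before getenforce reports Disabled.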

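3. Configure a static IP address on each cluster machine

Steps: open the ifcfg-ens33 configuration file in vim (in normal mode, dd deletes a line and u undoes the last change);

restart the network service with service network restart;

ping an external domain name or IP address to confirm the machine can reach the Internet.

Troubleshooting: run ip addr to check the address; if no address shows up, IPADDR is usually misspelled. If domain names cannot be resolved, check the DNS setting (the key is DNS1, not DNS) and look at /etc/resolv.conf. If the machine cannot reach the Internet at all, the gateway is usually wrong: inspect it with ip route, ping the gateway first, then ping an external address. It is best to reuse the address that DHCP already assigned to the machine; if you want a different one, ping it first to make sure nobody else is using it. This matters in bridged mode; in NAT mode there is no such conflict.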
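The troubleshooting order described above (address, gateway, external IP, DNS) can be sketched as a small script. The function names default_gateway and check_network are hypothetical; the external test address and domain are the ones used elsewhere in this walkthrough.

```shell
# Hypothetical helper: print the default gateway from `ip route` output on stdin.
default_gateway() {
    awk '$1 == "default" && $2 == "via" { print $3; exit }'
}

# Hypothetical helper: run the checks in order and stop at the first failure.
check_network() {
    local gw
    ip -4 addr show    # is the expected IPADDR present on the interface?
    gw=$(ip route | default_gateway)
    [ -n "$gw" ] || { echo "no default route: check GATEWAY"; return 1; }
    ping -c 3 "$gw" || { echo "gateway $gw unreachable"; return 1; }
    ping -c 3 114.114.114.114 || { echo "no external connectivity"; return 1; }
    ping -c 3 www.qq.com || { echo "name resolution failed: check DNS1 and /etc/resolv.conf"; return 1; }
}
```

Calling check_network after service network restart shows which layer is broken rather than just that ping failed.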

[root@master ~]# vim /etc/sysconfig/network-scripts/ifcfg-ens33

BOOTPROTO="none"

NAME="ens33"

DEVICE="ens33"

ONBOOT="yes"

IPADDR=192.168.249.141

PREFIX=24

GATEWAY=192.168.249.2

DNS1=114.114.114.114

[root@master ~]# service network restart

Restarting network (via systemctl): [  OK  ]

[root@master ~]# ping www.qq.com

PING ins-r23tsuuf.ias.tencent-cloud.net (61.241.54.232) 56(84) bytes of data.

64 bytes from 61.241.54.232 (61.241.54.232): icmp_seq=1 ttl=128 time=18.6 ms

64 bytes from 61.241.54.232 (61.241.54.232): icmp_seq=2 ttl=128 time=23.4 ms

64 bytes from 61.241.54.232 (61.241.54.232): icmp_seq=3 ttl=128 time=18.5 ms

[root@node-1 ~]# vim /etc/sysconfig/network-scripts/ifcfg-ens33

BOOTPROTO="none"

NAME="ens33"

DEVICE="ens33"

ONBOOT="yes"

IPADDR=192.168.249.142

NETMASK=255.255.255.0

GATEWAY=192.168.249.2

DNS1=114.114.114.114

[root@node-1 ~]# service network restart

Restarting network (via systemctl): [  OK  ]

[root@node-1 ~]# ping www.qq.com

PING ins-r23tsuuf.ias.tencent-cloud.net (61.241.54.211) 56(84) bytes of data.

64 bytes from 61.241.54.211 (61.241.54.211): icmp_seq=1 ttl=128 time=19.8 ms

64 bytes from 61.241.54.211 (61.241.54.211): icmp_seq=2 ttl=128 time=27.6 ms

64 bytes from 61.241.54.211 (61.241.54.211): icmp_seq=3 ttl=128 time=38.7 ms

[root@node-2 ~]# vim /etc/sysconfig/network-scripts/ifcfg-ens33

BOOTPROTO="none"

NAME="ens33"

DEVICE="ens33"

ONBOOT="yes"

IPADDR=192.168.249.143

PREFIX=24

GATEWAY=192.168.249.2

DNS1=114.114.114.114

[root@node-2 ~]# service network restart

Restarting network (via systemctl): [  OK  ]

[root@node-2 ~]# ping www.qq.com

PING ins-r23tsuuf.ias.tencent-cloud.net (61.241.54.211) 56(84) bytes of data.

64 bytes from 61.241.54.211 (61.241.54.211): icmp_seq=1 ttl=128 time=20.3 ms

64 bytes from 61.241.54.211 (61.241.54.211): icmp_seq=2 ttl=128 time=20.2 ms

64 bytes from 61.241.54.211 (61.241.54.211): icmp_seq=3 ttl=128 time=19.3 ms

4. Upgrade the operating system (kernel)

[root@elk ~]# uname -a

Linux elk 3.10.0-1160.el7.x86_64 #1 SMP Mon Oct 19 16:18:59 UTC 2020 x86_64 x86_64 x86_64 GNU/Linux

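The kernel version is 3.10, so it needs updating.

The 3.10 kernel can be unstable in large clusters, so upgrade the kernel.

rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org    # import the repository's signing key

rpm -Uvh http://www.elrepo.org/elrepo-release-7.0-2.el7.elrepo.noarch.rpm    # enable the ELRepo repository

yum --disablerepo="*" --enablerepo="elrepo-kernel" list available    # list the kernel versions available for installation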

yum --enablerepo=elrepo-kernel install kernel-lt -y

reboot

Open /etc/default/grub and set GRUB_DEFAULT=0, which makes the first kernel in the GRUB boot menu the default.

GRUB_TIMEOUT=5

GRUB_DISTRIBUTOR="$(sed 's, release .*$,,g' /etc/system-release)"

GRUB_DEFAULT=saved    # change saved to 0 so that the first menu entry becomes the default after reboot

GRUB_DISABLE_SUBMENU=true

GRUB_TERMINAL_OUTPUT="console"

GRUB_CMDLINE_LINUX="rhgb quiet"

GRUB_DISABLE_RECOVERY="true"

Then run: grub2-mkconfig -o /boot/grub2/grub.cfg

[root@elk ~]# uname -a

Linux elk 5.4.256-1.el7.elrepo.x86_64 #1 SMP Sat Sep 2 23:00:29 EDT 2023 x86_64 x86_64 x86_64 GNU/Linux

The upgrade is optional; this is only a demonstration in case you want to do it. Reference: https://blog.csdn.net/alwaysbefine/article/details/108931626

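5. Edit /etc/hosts to add the hostname-to-IP mappings for the cluster machines

With Xshell sending the same command to all sessions, you only need to type it once.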

[root@master ~]# vim /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.249.141 master

192.168.249.142 node-1

192.168.249.143 node-2

[root@node-1 ~]# vim /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.249.141 master

192.168.249.142 node-1

192.168.249.143 node-2

[root@node-2 ~]# vim /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.249.141 master

192.168.249.142 node-1

192.168.249.143 node-2
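Instead of editing /etc/hosts by hand on each machine, the three entries can be appended by a script that is safe to run more than once. This is only a sketch; the function name add_host_entry is hypothetical, and the file path is a parameter so it can be tested on a copy.

```shell
# Hypothetical helper: append "IP NAME" to a hosts file (default: /etc/hosts)
# only if NAME is not already mapped there.
add_host_entry() {
    local ip="$1" name="$2" file="${3:-/etc/hosts}"
    # Match NAME as a whole field: preceded by whitespace and followed by
    # whitespace or end of line, so node-1 does not match node-10.
    if ! grep -qE "[[:space:]]${name}([[:space:]]|\$)" "$file"; then
        echo "$ip $name" >> "$file"
    fi
}
```

For this cluster the calls would be add_host_entry 192.168.249.141 master, add_host_entry 192.168.249.142 node-1, and add_host_entry 192.168.249.143 node-2, run on every machine.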

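6. Set up passwordless SSH between the nodes

Passwordless SSH makes it easier to transfer files and run remote commands between nodes, which speeds up deployment considerably.

Steps: run ssh-keygen to create the key pair, pressing Enter at every prompt -> run ssh-copy-id -i id_rsa.pub node-1 to copy the public key to the other nodes -> run ssh <node name> to verify. The node names resolve because the previous step added the IP-to-hostname mappings to /etc/hosts on every node.

Note: after each key transfer, verify it with ssh to make sure it worked.

Usually it is enough to set up passwordless access from the master to each node (one to many), though you can also set it up between every pair of nodes.

[root@master ~]# ssh-keygen    # press Enter at every prompt; the passphrase stays empty so that login needs no password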

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

Created directory '/root/.ssh'.

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Passphrases do not match. Try again.

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:0Rc8zFERD3YhEULRJ52EdgT7vACb4LAxEyEMqB1/vJg root@master

The key's randomart image is:

+---[RSA 2048]----+

| ..o. o. .B=B#*+|

| .. .. .. *BoO |

|.. o . =....oo+ .|

|. . . o B...+ o |

| + oS. o . o |

| E . . .|

| . |

| |

| |

+----[SHA256]-----+

[root@master ~]# cd ~/.ssh/

[root@master .ssh]# ls

id_rsa id_rsa.pub known_hosts

[root@master .ssh]# ssh-copy-id -i id_rsa.pub node-1    # output omitted; see the node-2 run that follows

[root@master .ssh]# ssh-copy-id -i id_rsa.pub node-2

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "id_rsa.pub"

The authenticity of host 'node-2 (192.168.249.143)' can't be established.

ECDSA key fingerprint is SHA256:s6DxL8DrsqXZ39YVwlXWiPlsM/+LhRjh04BQHN8JBCM.

ECDSA key fingerprint is MD5:79:7a:71:70:96:4a:d7:ff:29:bb:5f:1a:bb:0f:f6:7d.

Are you sure you want to continue connecting (yes/no)? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@node-2's password:    (enter the node's root password)

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'node-2'"

and check to make sure that only the key(s) you wanted were added.

[root@master .ssh]# ssh 'node-2'

Last login: Mon Sep 11 19:01:04 2023 from 192.168.249.1
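The copy-then-verify routine can be looped over the worker nodes. A minimal sketch, assuming the key pair already exists at ~/.ssh/id_rsa.pub; the function name push_key_to_nodes is hypothetical.

```shell
# Hypothetical helper: copy the public key to each node named on the command
# line, then confirm that key-based login works.
push_key_to_nodes() {
    local node
    for node in "$@"; do
        # Prompts once for the node's root password.
        ssh-copy-id -i "$HOME/.ssh/id_rsa.pub" "root@$node" || return 1
        # BatchMode=yes makes ssh fail instead of asking for a password,
        # so success here means the key was really accepted.
        ssh -o BatchMode=yes "root@$node" hostname || return 1
    done
}
```

On the master this would be called as push_key_to_nodes node-1 node-2; each node should print its own hostname.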

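7. Disable swap

Swap is the swap partition: when the machine runs short of memory it falls back to swap, but swap is slow. For performance reasons, k8s by default does not allow swap to be used. kubeadm checks during initialization whether swap is off, and initialization fails if it is not. If you do not want to disable swap, you can pass --ignore-preflight-errors=Swap when installing k8s.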

[root@master .ssh]# swapoff -a

[root@master .ssh]# vim /etc/fstab

#

# /etc/fstab

# Created by anaconda on Mon Sep 11 14:30:50 2023

#

# Accessible filesystems, by reference, are maintained under '/dev/disk'

# See man pages fstab(5), findfs(8), mount(8) and/or blkid(8) for more info

#

/dev/mapper/centos-root / xfs defaults 0 0

UUID=6366332d-f703-4769-b869-494a0e1dde11 /boot xfs defaults 0 0

/dev/mapper/centos-home /home xfs defaults 0 0

#/dev/mapper/centos-swap swap swap defaults 0 0

Just put a # in front of the swap line, as in the last line above.

[root@node-1 ~]# swapoff -a

[root@node-1 ~]# vim /etc/fstab

[root@node-2 ~]# swapoff -a

[root@node-2 ~]# vim /etc/fstab
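Commenting out the swap line can also be done without opening vim on each node. The following is a sketch under the assumption that the swap entry has swap as a whitespace-separated field, as in the fstab shown above; the function name comment_out_swap is hypothetical.

```shell
# Hypothetical helper: prefix '#' to every uncommented fstab line that has
# "swap" as a whitespace-separated field (default file: /etc/fstab).
# Lines that already start with '#' are left alone, so re-running is harmless.
comment_out_swap() {
    local fstab="${1:-/etc/fstab}"
    sed -i -E 's/^([^#].*[[:space:]]swap[[:space:]].*)$/#\1/' "$fstab"
}
```

After swapoff -a and comment_out_swap, the Swap row of free -m should show 0 both immediately and after the next reboot.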

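8. Adjust kernel parameters

sysctl configures kernel parameters at runtime; -p loads the settings from the given file, or from /etc/sysctl.conf if no file is given.

Why enable net.ipv4.ip_forward = 1?

It turns on IP forwarding, so the node can route packets between network interfaces.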

[root@master .ssh]# modprobe br_netfilter

[root@master .ssh]# echo "modprobe br_netfilter" >> /etc/profile

[root@master .ssh]# cat > /etc/sysctl.d/k8s.conf <<EOF

> net.bridge.bridge-nf-call-ip6tables = 1

> net.bridge.bridge-nf-call-iptables = 1

> net.ipv4.ip_forward = 1

> EOF

[root@master .ssh]# sysctl -p /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

[root@node-1 ~]# modprobe br_netfilter

[root@node-1 ~]# echo "modprobe br_netfilter" >> /etc/profile

[root@node-1 ~]# cat > /etc/sysctl.d/k8s.conf <<EOF

> net.bridge.bridge-nf-call-ip6tables = 1

> net.bridge.bridge-nf-call-iptables = 1

> net.ipv4.ip_forward = 1

> EOF

[root@node-1 ~]# sysctl -p /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

[root@node-2 ~]# modprobe br_netfilter

[root@node-2 ~]# echo "modprobe br_netfilter" >> /etc/profile

[root@node-2 ~]# cat > /etc/sysctl.d/k8s.conf <<EOF

> net.bridge.bridge-nf-call-ip6tables = 1

> net.bridge.bridge-nf-call-iptables = 1

> net.ipv4.ip_forward = 1

> EOF

[root@node-2 ~]# sysctl -p /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

Why run modprobe br_netfilter?

After adding the following three lines to /etc/sysctl.d/k8s.conf:

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

sysctl -p /etc/sysctl.d/k8s.conf fails with:

sysctl: cannot stat /proc/sys/net/bridge/bridge-nf-call-ip6tables: No such file or directory

sysctl: cannot stat /proc/sys/net/bridge/bridge-nf-call-iptables: No such file or directory

Fix: load the module first, which creates the /proc/sys/net/bridge entries:

modprobe br_netfilter

Why enable the net.bridge.bridge-nf-call-iptables kernel parameter?

After installing Docker on CentOS, docker info prints these warnings:

WARNING: bridge-nf-call-iptables is disabled

WARNING: bridge-nf-call-ip6tables is disabled

Fix:

vim /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1
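Appending modprobe br_netfilter to /etc/profile reloads the module only when someone logs in. On a systemd-based system such as CentOS 7, an alternative is to list the module under /etc/modules-load.d/, which systemd-modules-load reads at boot. A minimal sketch follows; the function name persist_br_netfilter and the file name br_netfilter.conf are assumptions.

```shell
# Hypothetical helper: register br_netfilter for loading at boot by writing
# a one-line file into the modules-load.d directory
# (default: /etc/modules-load.d).
persist_br_netfilter() {
    local dir="${1:-/etc/modules-load.d}"
    echo br_netfilter > "$dir/br_netfilter.conf"
}
```

After a reboot, lsmod | grep br_netfilter should list the module without anyone having logged in first.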

9. Configure the Aliyun repo sources

Configure the Docker repo source

[root@master .ssh]# yum install -y yum-utils

[root@master .ssh]# yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

[root@master .ssh]# yum install -y yum-utils device-mapper-persistent-data lvm2 wget net-tools nfs-utils lrzsz gcc gcc-c++ make cmake libxml2-devel openssl-devel curl curl-devel unzip sudo ntp libaio-devel wget vim ncurses-devel autoconf automake zlib-devel python-devel epel-release openssh-server socat ipvsadm conntrack ntpdate telnet ipvsadm

Run these three commands on every machine.

Configure the Aliyun repo source needed to install the k8s components

[root@master .ssh]# vim /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=0

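Configure this on every machine; Xshell's send-to-all-sessions feature helps here.

10. Configure time synchronization

Why synchronize time: the cluster spans several hosts, and if their clocks disagree, services can become unavailable.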

[root@master .ssh]# yum install ntpdate -y

[root@master .ssh]# ntpdate cn.pool.ntp.org

11 Sep 20:42:32 ntpdate[8794]: adjust time server 202.112.31.197 offset -0.012143 sec

[root@master .ssh]# crontab -e    # schedule time synchronization as a cron job

0 */1 * * * /usr/sbin/ntpdate cn.pool.ntp.org

[root@master .ssh]# service crond restart    # restart crond to make sure the job is picked up

Redirecting to /bin/systemctl restart crond.service
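A crontab entry has five time fields (minute, hour, day of month, month, day of week), so a 0 in the minute field fires once per hour while a * there fires every minute. The entry can also be installed without the interactive editor. This is a sketch only; the function name ensure_cron_line is hypothetical.

```shell
# Hypothetical helper: read an existing crontab on stdin and write it back
# out, appending LINE only if an identical line is not already present.
ensure_cron_line() {
    local line="$1" current
    current=$(cat)
    if [ -n "$current" ]; then
        printf '%s\n' "$current"
    fi
    # -x matches the whole line, -F treats the asterisks literally.
    if ! printf '%s\n' "$current" | grep -qxF -- "$line"; then
        printf '%s\n' "$line"
    fi
}
```

A possible invocation is crontab -l 2>/dev/null | ensure_cron_line '0 * * * * /usr/sbin/ntpdate cn.pool.ntp.org' | crontab - which leaves other entries intact and does not add the job twice.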

Do this on all three machines.

11. Install Docker

[root@master .ssh]# sudo yum install docker-ce docker-ce-cli containerd.io docker-buildx-plugin docker-compose-plugin -y

The install command comes from https://docs.docker.com/engine/install/centos/; the earlier steps already set up the required repository and dependencies.

[root@master .ssh]# systemctl start docker && systemctl enable docker.service

Created symlink from /etc/systemd/system/multi-user.target.wants/docker.service to /usr/lib/systemd/system/docker.service.

Configure the Docker registry mirrors and the cgroup driver

[root@master .ssh]# vim /etc/docker/daemon.json

{

"registry-mirrors":["https://rsbud4vc.mirror.aliyuncs.com","https://registry.docker-cn.com","https://docker.mirrors.ustc.edu.cn","https://dockerhub.azk8s.cn","http://hub-mirror.c.163.com"],

"exec-opts": ["native.cgroupdriver=systemd"]

}

[root@master .ssh]# systemctl daemon-reload && systemctl restart docker
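After the restart it is worth confirming that Docker actually picked up the systemd cgroup driver, since a malformed daemon.json or a mismatch with the kubelet causes trouble later. A minimal sketch; the function name check_cgroup_driver is hypothetical, while docker info --format '{{.CgroupDriver}}' is the standard way to read the value.

```shell
# Hypothetical helper: succeed only if Docker reports the systemd cgroup driver.
check_cgroup_driver() {
    local driver
    driver=$(docker info --format '{{.CgroupDriver}}') || return 1
    if [ "$driver" = "systemd" ]; then
        echo "ok: cgroup driver is systemd"
    else
        echo "mismatch: cgroup driver is '$driver', expected systemd" >&2
        return 1
    fi
}
```

Run check_cgroup_driver on every node after editing /etc/docker/daemon.json.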

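Install it on every node.

12. Install the packages needed to initialize k8s

A newer kubeadm version also works, for example:

yum install -y kubelet-1.23.7 kubeadm-1.23.7 kubectl-1.23.7

Starting with k8s 1.24, dockershim is removed, so Docker Engine is no longer supported directly as the container runtime; containerd is typically used instead.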

[root@master .ssh]# yum install -y kubelet-1.20.6 kubeadm-1.20.6 kubectl-1.20.6

[root@master .ssh]# systemctl enable kubelet

Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /usr/lib/systemd/system/kubelet.service.

You have new mail in /var/spool/mail/root

Run this on every node.

Transfer the offline image package with Xftp

[root@master ~]# ls

anaconda-ks.cfg k8simage-1-20-6.tar.gz

[root@master ~]# docker load -i k8simage-1-20-6.tar.gz

3e61d935b3ba: Loading layer [==================================================>] 88.58kB/88.58kB

2c60f0a98c2c: Loading layer [==================================================>] 13.82kB/13.82kB

da5efa7f8202: Loading layer [==================================================>] 131.1MB/131.1MB

Loaded image: calico/cni:v3.18.0

225df95e717c: Loading layer [==================================================>] 336.4kB/336.4kB

96d17b0b58a7: Loading layer [==================================================>] 45.02MB/45.02MB

Loaded image: registry.aliyuncs.com/google_containers/coredns:1.7.0

ba0dae6243cc: Loading layer [==================================================>] 684.5kB/684.5kB

Loaded image: registry.aliyuncs.com/google_containers/pause:3.2

28699c71935f: Loading layer [==================================================>] 3.062MB/3.062MB

[root@master ~]# scp k8simage-1-20-6.tar.gz root@node-1:/root

k8simage-1-20-6.tar.gz 100% 1033MB 14.5MB/s 01:11

You have new mail in /var/spool/mail/root

[root@master ~]# scp k8simage-1-20-6.tar.gz root@node-2:/root

[root@node-1 ~]# docker load -i k8simage-1-20-6.tar.gz

[root@node-2 ~]# docker load -i k8simage-1-20-6.tar.gz

13. Initialize the k8s cluster with kubeadm

Run this on the master node only.

[root@master ~]# kubeadm config print init-defaults > kubeadm.yaml

This generates the initialization file.

[root@master ~]# vim kubeadm.yaml

apiVersion: kubeadm.k8s.io/v1beta2

bootstrapTokens:

- groups:

  - system:bootstrappers:kubeadm:default-node-token

  token: abcdef.0123456789abcdef

  ttl: 24h0m0s

  usages:

  - signing

  - authentication

kind: InitConfiguration

localAPIEndpoint:

  advertiseAddress: 192.168.249.141    # IP address of the master node

  bindPort: 6443

nodeRegistration:

  criSocket: /var/run/dockershim.sock

  name: master    # hostname of the master node

  taints:

  - effect: NoSchedule

    key: node-role.kubernetes.io/master

---

apiServer:

  timeoutForControlPlane: 4m0s

apiVersion: kubeadm.k8s.io/v1beta2

certificatesDir: /etc/kubernetes/pki

clusterName: kubernetes

controllerManager: {}

dns:

  type: CoreDNS

etcd:

  local:

    dataDir: /var/lib/etcd

imageRepository: registry.aliyuncs.com/google_containers    # switched to the Aliyun mirror

kind: ClusterConfiguration

kubernetesVersion: v1.20.0

networking:

  dnsDomain: cluster.local

  serviceSubnet: 10.96.0.0/12

  podSubnet: 10.244.0.0/16    # added: the pod network CIDR

scheduler: {}

---

# The two documents below are added. This one sets kube-proxy to ipvs mode; the default, iptables, is slower.

apiVersion: kubeproxy.config.k8s.io/v1alpha1

kind: KubeProxyConfiguration

mode: ipvs

---

apiVersion: kubelet.config.k8s.io/v1beta1

kind: KubeletConfiguration

cgroupDriver: systemd

[root@master ~]# kubeadm init --config=kubeadm.yaml --ignore-preflight-errors=SystemVerification

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.249.141:6443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:79bc11ce53085b5cb8175f985d06bdb4c879041a6d4ca9b1d029d9a00a479db0

You have new mail in /var/spool/mail/root

The output above indicates that initialization succeeded.

Follow the instructions it prints:

[root@master ~]# mkdir -p $HOME/.kube

[root@master ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@master ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

[root@master ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

master NotReady control-plane,master 4m14s v1.20.6

The node is still NotReady at this point because no network plugin has been installed yet.

[root@master ~]# kubeadm token create --print-join-command

kubeadm join 192.168.249.141:6443 --token b6vppx.xfpo9899svpqajcj --discovery-token-ca-cert-hash sha256:79bc11ce53085b5cb8175f985d06bdb4c879041a6d4ca9b1d029d9a00a479db0

[root@node-1 ~]# kubeadm join 192.168.249.141:6443 --token abcdef.0123456789abcdef \

> --discovery-token-ca-cert-hash sha256:79bc11ce53085b5cb8175f985d06bdb4c879041a6d4ca9b1d029d9a00a479db0

[root@node-2 ~]# kubeadm join 192.168.249.141:6443 --token abcdef.0123456789abcdef \

> --discovery-token-ca-cert-hash sha256:79bc11ce53085b5cb8175f985d06bdb4c879041a6d4ca9b1d029d9a00a479db0

The commands above join the worker nodes to the k8s cluster.
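The bootstrap token in kubeadm.yaml has a ttl of 24h, so a node added later needs a fresh token from kubeadm token create --print-join-command. The --discovery-token-ca-cert-hash value stays the same because it is derived from the cluster CA certificate. The sketch below recomputes it with the openssl pipeline given in the kubeadm documentation; the function name ca_cert_hash is hypothetical, and the pipeline assumes an RSA CA key, which is the kubeadm default.

```shell
# Hypothetical helper: print the SHA-256 hash of the CA public key, i.e. the
# hex string that follows "sha256:" in --discovery-token-ca-cert-hash.
ca_cert_hash() {
    local ca="${1:-/etc/kubernetes/pki/ca.crt}"
    openssl x509 -pubkey -noout -in "$ca" \
        | openssl rsa -pubin -outform der 2>/dev/null \
        | openssl dgst -sha256 -hex \
        | sed 's/^.* //'
}
```

On the master, ca_cert_hash with no argument should print the same hex string that appears in the join command.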

[root@master ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

master NotReady control-plane,master 10m v1.20.6

node-1 NotReady <none> 107s v1.20.6

node-2 NotReady <none> 89s v1.20.6

Label the nodes with the worker role

[root@master ~]# kubectl label node node-1 node-role.kubernetes.io/worker=worker

node/node-1 labeled

[root@master ~]# kubectl label node node-2 node-role.kubernetes.io/worker=worker

node/node-2 labeled

[root@master ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

master NotReady control-plane,master 11m v1.20.6

node-1 NotReady worker 3m29s v1.20.6

node-2 NotReady worker 3m11s v1.20.6

wget https://docs.projectcalico.org/v3.23/manifests/calico.yaml --no-check-certificate    # download the Calico manifest

[root@master ~]# kubectl apply -f calico.yaml    # deploy the pod network

[root@master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master Ready control-plane,master 15m v1.20.6

node-1 Ready worker 7m31s v1.20.6

node-2 Ready worker 7m13s v1.20.6

[root@master ~]# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-6949477b58-d4fxw 1/1 Running 1 2m7s

calico-node-8cdqt 1/1 Running 0 2m7s

calico-node-crjmz 1/1 Running 0 2m7s

calico-node-z68wd 1/1 Running 0 2m7s

coredns-7f89b7bc75-c2gg7 1/1 Running 0 16m

coredns-7f89b7bc75-c8l9s 1/1 Running 0 16m

etcd-master 1/1 Running 0 16m

kube-apiserver-master 1/1 Running 0 16m

kube-controller-manager-master 1/1 Running 0 16m

kube-proxy-bb29p 1/1 Running 0 8m11s

kube-proxy-hbhsl 1/1 Running 0 16m

kube-proxy-s9td7 1/1 Running 0 8m29s

kube-scheduler-master 1/1 Running 0 16m

If you see output like this, k8s is essentially installed.
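Rather than re-running kubectl get nodes and reading the table by eye, the check can be scripted. A minimal sketch; the function name not_ready_nodes is hypothetical and simply filters the STATUS column of kubectl get nodes --no-headers.

```shell
# Hypothetical helper: read `kubectl get nodes --no-headers` output on stdin
# and print the name of every node whose STATUS is not exactly "Ready".
# A cordoned node (Ready,SchedulingDisabled) is reported as well.
not_ready_nodes() {
    awk '$2 != "Ready" { print $1 }'
}

# Example use on the master:
#   kubectl get nodes --no-headers | not_ready_nodes
# To block until every node is Ready (or give up after five minutes):
#   kubectl wait --for=condition=Ready nodes --all --timeout=300s
```

An empty result from not_ready_nodes means every node has reached Ready.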

Install Metrics Server

Metrics Server aggregates core monitoring data for a Kubernetes cluster and exposes it through the Metrics API. It also backs the kubectl top command, which displays resource metrics for the cluster.

[root@master ~]# unzip metrics-server.zip

Archive: metrics-server.zip

inflating: metrics-server/2023-3-27-╩ё╔╜-k8s└я░▓╫░metrics-server.txt

inflating: metrics-server/components.yaml

inflating: metrics-server/metrics-server-v0.6.3.tar

[root@master ~]# cd metrics-server

[root@master metrics-server]# ls

2023-3-27-╩ё╔╜-k8s└я░▓╫░metrics-server.txt components.yaml metrics-server-v0.6.3.tar

[root@master metrics-server]# scp metrics-server-v0.6.3.tar root@node-1:/root

metrics-server-v0.6.3.tar 100% 67MB 88.0MB/s 00:00

[root@master metrics-server]# scp metrics-server-v0.6.3.tar root@node-2:/root

metrics-server-v0.6.3.tar 100% 67MB 79.7MB/s 00:00

[root@node-1 ~]# docker load -i metrics-server-v0.6.3.tar

d0157aa0c95a: Loading layer [==================================================>] 327.7kB/327.7kB

6fbdf253bbc2: Loading layer [==================================================>] 51.2kB/51.2kB

1b19a5d8d2dc: Loading layer [==================================================>] 3.185MB/3.185MB

ff5700ec5418: Loading layer [==================================================>] 10.24kB/10.24kB

d52f02c6501c: Loading layer [==================================================>] 10.24kB/10.24kB

e624a5370eca: Loading layer [==================================================>] 10.24kB/10.24kB

1a73b54f556b: Loading layer [==================================================>] 10.24kB/10.24kB

d2d7ec0f6756: Loading layer [==================================================>] 10.24kB/10.24kB

4cb10dd2545b: Loading layer [==================================================>] 225.3kB/225.3kB

ebc813d4c836: Loading layer [==================================================>] 66.45MB/66.45MB

Loaded image: registry.k8s.io/metrics-server/metrics-server:v0.6.3

[root@node-2 ~]# docker load -i metrics-server-v0.6.3.tar

[root@master metrics-server]# kubectl apply -f components.yaml

serviceaccount/metrics-server created

clusterrole.rbac.authorization.k8s.io/system:aggregated-metrics-reader created

clusterrole.rbac.authorization.k8s.io/system:metrics-server created

rolebinding.rbac.authorization.k8s.io/metrics-server-auth-reader created

clusterrolebinding.rbac.authorization.k8s.io/metrics-server:system:auth-delegator created

clusterrolebinding.rbac.authorization.k8s.io/system:metrics-server created

service/metrics-server created

deployment.apps/metrics-server created

apiservice.apiregistration.k8s.io/v1beta1.metrics.k8s.io created

[root@master ~]# kubectl get ns

NAME STATUS AGE

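default Active 21h    # objects created without -n <namespace> land here

kube-node-lease Active 21h    # holds the Lease object associated with each node; node leases let the kubelet send heartbeats so the control plane can detect node failures

kube-public Active 21h    # resources here are readable by everyone, including unauthenticated users

kube-system Active 21h    # holds the objects created by the k8s system itself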

[root@master metrics-server]# kubectl get pod -n kube-system -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

calico-kube-controllers-6949477b58-d4fxw 1/1 Running 5 21h 10.244.84.131 node-1 <none> <none>

calico-node-8cdqt 1/1 Running 2 21h 192.168.249.143 node-2 <none> <none>

calico-node-crjmz 1/1 Running 2 21h 192.168.249.141 master <none> <none>

calico-node-z68wd 1/1 Running 2 21h 192.168.249.142 node-1 <none> <none>

coredns-7f89b7bc75-c2gg7 1/1 Running 2 21h 10.244.247.6 node-2 <none> <none>

coredns-7f89b7bc75-c8l9s 1/1 Running 2 21h 10.244.247.7 node-2 <none> <none>

etcd-master 1/1 Running 2 21h 192.168.249.141 master <none> <none>

kube-apiserver-master 1/1 Running 2 21h 192.168.249.141 master <none> <none>

kube-controller-manager-master 1/1 Running 2 21h 192.168.249.141 master <none> <none>

kube-proxy-bb29p 1/1 Running 2 21h 192.168.249.143 node-2 <none> <none>

kube-proxy-hbhsl 1/1 Running 2 21h 192.168.249.141 master <none> <none>

kube-proxy-s9td7 1/1 Running 2 21h 192.168.249.142 node-1 <none> <none>

kube-scheduler-master 1/1 Running 2 21h 192.168.249.141 master <none> <none>

metrics-server-769f6c8464-dxr7s 1/1 Running 0 39s 10.244.247.8 node-2 <none> <none>
