Hadoop file upload fails

Today, while uploading files to the Hadoop cluster, the upload failed with: Exception in createBlockOutputStream java.net.NoRouteToHostException: No route to host

[root@master ~]# hadoop fs -put /software/hellodata/* /InputData

24/03/13 20:44:14 INFO hdfs.DataStreamer: Exception in createBlockOutputStream

java.net.NoRouteToHostException: No route to host

at sun.nio.ch.SocketChannelImpl.checkConnect(Native Method)

at sun.nio.ch.SocketChannelImpl.finishConnect(SocketChannelImpl.java:717)

at org.apache.hadoop.net.SocketIOWithTimeout.connect(SocketIOWithTimeout.java:206)

at org.apache.hadoop.net.NetUtils.connect(NetUtils.java:531)

at org.apache.hadoop.hdfs.DataStreamer.createSocketForPipeline(DataStreamer.java:259)

at org.apache.hadoop.hdfs.DataStreamer.createBlockOutputStream(DataStreamer.java:1699)

at org.apache.hadoop.hdfs.DataStreamer.nextBlockOutputStream(DataStreamer.java:1655)

at org.apache.hadoop.hdfs.DataStreamer.run(DataStreamer.java:710)

24/03/13 20:44:14 WARN hdfs.DataStreamer: Abandoning BP-1989384429-192.168.100.1-1710348227580:blk_1073741829_1005

24/03/13 20:44:14 WARN hdfs.DataStreamer: Excluding datanode DatanodeInfoWithStorage[192.168.100.2:50010,DS-1975d5d9-37cd-4a4a-9c1a-ca114a9fff2b,DISK]

24/03/13 20:44:14 INFO hdfs.DataStreamer: Exception in createBlockOutputStream

java.net.NoRouteToHostException: No route to host

at sun.nio.ch.SocketChannelImpl.checkConnect(Native Method)

at sun.nio.ch.SocketChannelImpl.finishConnect(SocketChannelImpl.java:717)

at org.apache.hadoop.net.SocketIOWithTimeout.connect(SocketIOWithTimeout.java:206)

at org.apache.hadoop.net.NetUtils.connect(NetUtils.java:531)

at org.apache.hadoop.hdfs.DataStreamer.createSocketForPipeline(DataStreamer.java:259)

at org.apache.hadoop.hdfs.DataStreamer.createBlockOutputStream(DataStreamer.java:1699)

at org.apache.hadoop.hdfs.DataStreamer.nextBlockOutputStream(DataStreamer.java:1655)

at org.apache.hadoop.hdfs.DataStreamer.run(DataStreamer.java:710)

24/03/13 20:44:14 WARN hdfs.DataStreamer: Abandoning BP-1989384429-192.168.100.1-1710348227580:blk_1073741830_1006

24/03/13 20:44:14 WARN hdfs.DataStreamer: Excluding datanode DatanodeInfoWithStorage[192.168.100.3:50010,DS-4447e81b-13b7-4182-abb5-6c7ddde99342,DISK]

24/03/13 20:44:14 WARN hdfs.DataStreamer: DataStreamer Exception

org.apache.hadoop.ipc.RemoteException(java.io.IOException): File /InputData/file1.txt._COPYING_ could only be replicated to 0 nodes instead of minReplication (=1). There are 2 datanode(s) running and 2 node(s) are excluded in this operation.

at org.apache.hadoop.hdfs.server.blockmanagement.BlockManager.chooseTarget4NewBlock(BlockManager.java:1814)

at org.apache.hadoop.hdfs.server.namenode.FSDirWriteFileOp.chooseTargetForNewBlock(FSDirWriteFileOp.java:265)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.getAdditionalBlock(FSNamesystem.java:2569)

at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.addBlock(NameNodeRpcServer.java:846)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.addBlock(ClientNamenodeProtocolServerSideTranslatorPB.java:510)

at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:503)

at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:989)

at org.apache.hadoop.ipc.Server$RpcCall.run(Server.java:871)

at org.apache.hadoop.ipc.Server$RpcCall.run(Server.java:817)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1893)

at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2606)

at org.apache.hadoop.ipc.Client.getRpcResponse(Client.java:1507)

at org.apache.hadoop.ipc.Client.call(Client.java:1453)

at org.apache.hadoop.ipc.Client.call(Client.java:1363)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:227)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:116)

at com.sun.proxy.$Proxy10.addBlock(Unknown Source)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolTranslatorPB.addBlock(ClientNamenodeProtocolTranslatorPB.java:444)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invokeMethod(RetryInvocationHandler.java:422)

at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invokeMethod(RetryInvocationHandler.java:165)

at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invoke(RetryInvocationHandler.java:157)

at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invokeOnce(RetryInvocationHandler.java:95)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invoke(RetryInvocationHandler.java:359)

at com.sun.proxy.$Proxy11.addBlock(Unknown Source)

at org.apache.hadoop.hdfs.DataStreamer.locateFollowingBlock(DataStreamer.java:1845)

at org.apache.hadoop.hdfs.DataStreamer.nextBlockOutputStream(DataStreamer.java:1645)

at org.apache.hadoop.hdfs.DataStreamer.run(DataStreamer.java:710)

put: File /InputData/file1.txt._COPYING_ could only be replicated to 0 nodes instead of minReplication (=1). There are 2 datanode(s) running and 2 node(s) are excluded in this operation.

24/03/13 20:44:14 INFO hdfs.DataStreamer: Exception in createBlockOutputStream

java.net.NoRouteToHostException: No route to host

at sun.nio.ch.SocketChannelImpl.checkConnect(Native Method)

at sun.nio.ch.SocketChannelImpl.finishConnect(SocketChannelImpl.java:717)

at org.apache.hadoop.net.SocketIOWithTimeout.connect(SocketIOWithTimeout.java:206)

at org.apache.hadoop.net.NetUtils.connect(NetUtils.java:531)

at org.apache.hadoop.hdfs.DataStreamer.createSocketForPipeline(DataStreamer.java:259)

at org.apache.hadoop.hdfs.DataStreamer.createBlockOutputStream(DataStreamer.java:1699)

at org.apache.hadoop.hdfs.DataStreamer.nextBlockOutputStream(DataStreamer.java:1655)

at org.apache.hadoop.hdfs.DataStreamer.run(DataStreamer.java:710)

24/03/13 20:44:14 WARN hdfs.DataStreamer: Abandoning BP-1989384429-192.168.100.1-1710348227580:blk_1073741831_1007

24/03/13 20:44:14 WARN hdfs.DataStreamer: Excluding datanode DatanodeInfoWithStorage[192.168.100.3:50010,DS-4447e81b-13b7-4182-abb5-6c7ddde99342,DISK]

24/03/13 20:44:14 INFO hdfs.DataStreamer: Exception in createBlockOutputStream

java.net.NoRouteToHostException: No route to host

at sun.nio.ch.SocketChannelImpl.checkConnect(Native Method)

at sun.nio.ch.SocketChannelImpl.finishConnect(SocketChannelImpl.java:717)

at org.apache.hadoop.net.SocketIOWithTimeout.connect(SocketIOWithTimeout.java:206)

at org.apache.hadoop.net.NetUtils.connect(NetUtils.java:531)

at org.apache.hadoop.hdfs.DataStreamer.createSocketForPipeline(DataStreamer.java:259)

at org.apache.hadoop.hdfs.DataStreamer.createBlockOutputStream(DataStreamer.java:1699)

at org.apache.hadoop.hdfs.DataStreamer.nextBlockOutputStream(DataStreamer.java:1655)

at org.apache.hadoop.hdfs.DataStreamer.run(DataStreamer.java:710)

24/03/13 20:44:14 WARN hdfs.DataStreamer: Abandoning BP-1989384429-192.168.100.1-1710348227580:blk_1073741832_1008

24/03/13 20:44:14 WARN hdfs.DataStreamer: Excluding datanode DatanodeInfoWithStorage[192.168.100.2:50010,DS-1975d5d9-37cd-4a4a-9c1a-ca114a9fff2b,DISK]

24/03/13 20:44:14 WARN hdfs.DataStreamer: DataStreamer Exception

org.apache.hadoop.ipc.RemoteException(java.io.IOException): File /InputData/file2.txt._COPYING_ could only be replicated to 0 nodes instead of minReplication (=1). There are 2 datanode(s) running and 2 node(s) are excluded in this operation.

at org.apache.hadoop.hdfs.server.blockmanagement.BlockManager.chooseTarget4NewBlock(BlockManager.java:1814)

at org.apache.hadoop.hdfs.server.namenode.FSDirWriteFileOp.chooseTargetForNewBlock(FSDirWriteFileOp.java:265)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.getAdditionalBlock(FSNamesystem.java:2569)

at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.addBlock(NameNodeRpcServer.java:846)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.addBlock(ClientNamenodeProtocolServerSideTranslatorPB.java:510)

at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:503)

at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:989)

at org.apache.hadoop.ipc.Server$RpcCall.run(Server.java:871)

at org.apache.hadoop.ipc.Server$RpcCall.run(Server.java:817)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1893)

at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2606)

at org.apache.hadoop.ipc.Client.getRpcResponse(Client.java:1507)

at org.apache.hadoop.ipc.Client.call(Client.java:1453)

at org.apache.hadoop.ipc.Client.call(Client.java:1363)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:227)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:116)

at com.sun.proxy.$Proxy10.addBlock(Unknown Source)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolTranslatorPB.addBlock(ClientNamenodeProtocolTranslatorPB.java:444)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invokeMethod(RetryInvocationHandler.java:422)

at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invokeMethod(RetryInvocationHandler.java:165)

at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invoke(RetryInvocationHandler.java:157)

at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invokeOnce(RetryInvocationHandler.java:95)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invoke(RetryInvocationHandler.java:359)

at com.sun.proxy.$Proxy11.addBlock(Unknown Source)

at org.apache.hadoop.hdfs.DataStreamer.locateFollowingBlock(DataStreamer.java:1845)

at org.apache.hadoop.hdfs.DataStreamer.nextBlockOutputStream(DataStreamer.java:1645)

at org.apache.hadoop.hdfs.DataStreamer.run(DataStreamer.java:710)

put: File /InputData/file2.txt._COPYING_ could only be replicated to 0 nodes instead of minReplication (=1). There are 2 datanode(s) running and 2 node(s) are excluded in this operation.
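The WARN lines in this log name the DataNodes the client gave up on. As a rough aid for reading a long log like this one, the sketch below pulls the unique ip:port pairs out of the DatanodeInfoWithStorage[...] entries. The function name excluded_datanodes and the file name put.log are made up for illustration, and the pattern assumes the entry format seen in this log.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read HDFS client log text on stdin and print each
# excluded DataNode (ip:port) once, based on DatanodeInfoWithStorage[...] entries.
excluded_datanodes() {
  grep -oE 'DatanodeInfoWithStorage\[[0-9.]+:[0-9]+' \
    | sed 's/.*\[//' \
    | sort -u
}

# Usage (put.log is an assumed file name):
#   hadoop fs -put /software/hellodata/* /InputData 2>&1 | tee put.log
#   excluded_datanodes < put.log
```

For the log in this post it lists 192.168.100.2:50010 and 192.168.100.3:50010, that is, both DataNodes, which is why the NameNode reports that the file could be replicated to 0 nodes.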

Checking the cluster status showed nothing abnormal either:

[root@master ~]# hadoop dfsadmin -report

DEPRECATED: Use of this script to execute hdfs command is deprecated.

Instead use the hdfs command for it.

Configured Capacity: 38002491392 (35.39 GB)

Present Capacity: 24131321856 (22.47 GB)

DFS Remaining: 24131297280 (22.47 GB)

DFS Used: 24576 (24 KB)

DFS Used%: 0.00%

Under replicated blocks: 0

Blocks with corrupt replicas: 0

Missing blocks: 0

Missing blocks (with replication factor 1): 0

Pending deletion blocks: 0

-------------------------------------------------

Live datanodes (2):

Name: 192.168.100.2:50010 (slave1)

Hostname: slave1

Decommission Status : Normal

Configured Capacity: 19001245696 (17.70 GB)

DFS Used: 12288 (12 KB)

Non DFS Used: 6934548480 (6.46 GB)

DFS Remaining: 12066684928 (11.24 GB)

DFS Used%: 0.00%

DFS Remaining%: 63.50%

Configured Cache Capacity: 0 (0 B)

Cache Used: 0 (0 B)

Cache Remaining: 0 (0 B)

Cache Used%: 100.00%

Cache Remaining%: 0.00%

Xceivers: 1

Last contact: Wed Mar 13 20:45:54 PDT 2024

Last Block Report: Wed Mar 13 20:34:12 PDT 2024

Name: 192.168.100.3:50010 (slave2)

Hostname: slave2

Decommission Status : Normal

Configured Capacity: 19001245696 (17.70 GB)

DFS Used: 12288 (12 KB)

Non DFS Used: 6936621056 (6.46 GB)

DFS Remaining: 12064612352 (11.24 GB)

DFS Used%: 0.00%

DFS Remaining%: 63.49%

Configured Cache Capacity: 0 (0 B)

Cache Used: 0 (0 B)

Cache Remaining: 0 (0 B)

Cache Used%: 100.00%

Cache Remaining%: 0.00%

Xceivers: 1

Last contact: Wed Mar 13 20:45:54 PDT 2024

Last Block Report: Wed Mar 13 20:34:12 PDT 2024
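A healthy report does not rule out a network problem. The report is built from the heartbeats that the DataNodes send to the NameNode, whereas the failing step in the upload is the client opening a TCP connection to each DataNode's data-transfer port (50010 here). A minimal sketch for testing that direction directly, assuming bash and the timeout utility are available; report_datanodes and probe_tcp are hypothetical helper names, not part of Hadoop:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the ip:port from every "Name:" line of a
# dfsadmin report read on stdin.
report_datanodes() {
  awk '$1 == "Name:" { print $2 }'
}

# Hypothetical helper: succeed if a TCP connection to host $1, port $2 can be
# opened within 3 seconds (uses bash's built-in /dev/tcp pseudo-device).
probe_tcp() {
  timeout 3 bash -c "exec 3<>/dev/tcp/$1/$2" 2>/dev/null
}

# Usage: run on the machine that issues the upload.
#   hdfs dfsadmin -report | report_datanodes | while IFS=: read -r host port; do
#     if probe_tcp "$host" "$port"; then echo "$host:$port reachable"
#     else echo "$host:$port NOT reachable"; fi
#   done
```

If the probe fails while the report still lists the node as live, a host firewall on that DataNode is a likely suspect.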

Solution

Turn off the firewall on every node in the cluster (master, slave1, slave2), then restart the cluster.

1. Turn off the firewall

systemctl stop firewalld.service

systemctl disable firewalld.service

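Edit the configuration file /etc/selinux/config

Set SELINUX=disabled (the valid value is disabled, not disable; the change takes effect after a reboot)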
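The edit to /etc/selinux/config can also be scripted. This is a sketch rather than the exact procedure used in this post: set_selinux_mode is a hypothetical helper that rewrites the SELINUX= line of the file it is given. The valid modes are enforcing, permissive and disabled.

```shell
#!/usr/bin/env bash
# Hypothetical helper: set the SELINUX= line of config file $1 to mode $2
# (enforcing | permissive | disabled). Other lines, including SELINUXTYPE=,
# are left untouched.
set_selinux_mode() {
  local file=$1 mode=$2 tmp
  tmp=$(mktemp) || return 1
  # Write through a temp file, then copy the content back so the original
  # file keeps its owner, permissions and SELinux label.
  sed "s/^SELINUX=.*/SELINUX=$mode/" "$file" > "$tmp" && cat "$tmp" > "$file"
  local status=$?
  rm -f "$tmp"
  return $status
}

# Usage (as root, on master, slave1 and slave2):
#   set_selinux_mode /etc/selinux/config disabled
#   setenforce 0   # switch to permissive right away, until the next reboot
```

Running setenforce 0 alongside the file edit avoids having to reboot before retrying the upload.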

2. Restart the cluster

stop-all.sh

start-all.sh

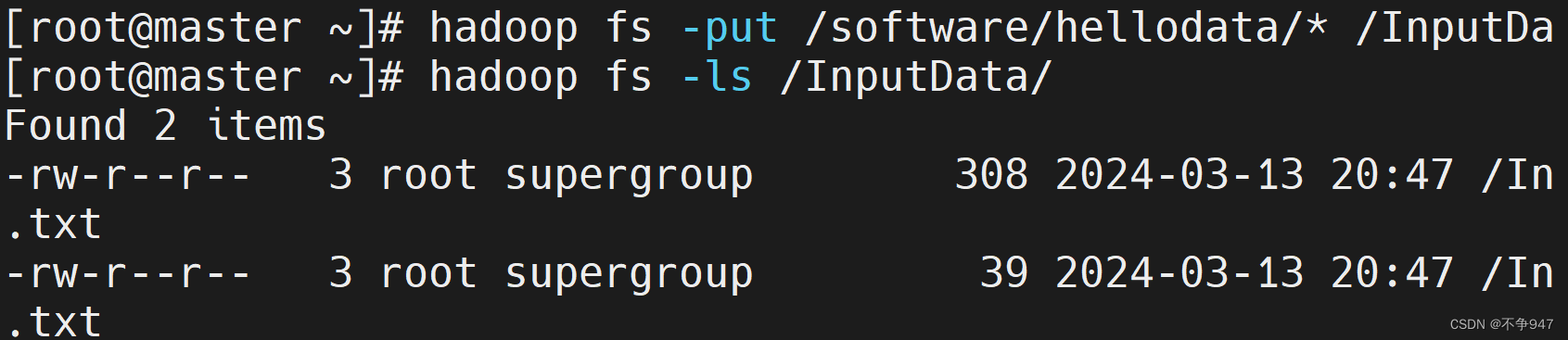

Upload the files again.

The upload now succeeds; problem solved.
