#Host planning

| Hostname | OS version | Spec (CPU/RAM/disk) | Internal IP | External IP (simulated) |
| --- | --- | --- | --- | --- |
| k8s-master | CentOS 7.9 | 2C/4G/50G | 192.168.10.110 | 192.168.31.110 |
| k8s-node1 | CentOS 7.9 | 2C/4G/50G | 192.168.10.111 | 192.168.31.111 |
| k8s-node2 | CentOS 7.9 | 2C/4G/50G | 192.168.10.112 | 192.168.31.112 |

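I. Environment preparation

1. Node configuration

##Repeat on all three nodes (k8s-master, k8s-node1, k8s-node2), adjusting the IP address, hostname, and other node-specific values

#Change the hostname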

[root@localhost ~]# hostnamectl set-hostname k8s-master

[root@k8s-master ~]# hostname

k8s-master

#Disable the firewall

[root@k8s-master ~]# systemctl stop firewalld

[root@k8s-master ~]# systemctl disable firewalld

Removed symlink /etc/systemd/system/multi-user.target.wants/firewalld.service.

Removed symlink /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.

[root@k8s-master ~]# systemctl status firewalld

● firewalld.service - firewalld - dynamic firewall daemon

Loaded: loaded (/usr/lib/systemd/system/firewalld.service; disabled; vendor preset: enabled)

Active: inactive (dead)

Docs: man:firewalld(1)

Nov 22 05:09:05 localhost.localdomain systemd[1]: Starting firewalld - dynami...

Nov 22 05:09:06 localhost.localdomain systemd[1]: Started firewalld - dynamic...

Nov 22 05:09:06 localhost.localdomain firewalld[731]: WARNING: AllowZoneDrift...

Nov 22 05:34:48 k8s-master systemd[1]: Stopping firewalld - dynamic firewal.....

Nov 22 05:34:50 k8s-master systemd[1]: Stopped firewalld - dynamic firewall...n.

Hint: Some lines were ellipsized, use -l to show in full.

#Permanently disable SELinux

[root@k8s-master ~]# vi /etc/selinux/config

# This file controls the state of SELinux on the system.

# SELINUX= can take one of these three values:

# enforcing - SELinux security policy is enforced.

# permissive - SELinux prints warnings instead of enforcing.

# disabled - No SELinux policy is loaded.

SELINUX=disabled #changed from enforcing to disabled

# SELINUXTYPE= can take one of three values:

# targeted - Targeted processes are protected,

# minimum - Modification of targeted policy. Only selected processes are protected.

# mls - Multi Level Security protection.

SELINUXTYPE=targeted

#Set both NICs to static addressing

#NIC ens33

[root@k8s-master ~]# vi /etc/sysconfig/network-scripts/ifcfg-ens33

TYPE=Ethernet

PROXY_METHOD=none

BROWSER_ONLY=no

BOOTPROTO=static

DEFROUTE=yes

IPV4_FAILURE_FATAL=no

IPV6INIT=yes

IPV6_AUTOCONF=yes

IPV6_DEFROUTE=yes

IPV6_FAILURE_FATAL=no

IPV6_ADDR_GEN_MODE=stable-privacy

NAME=ens33

UUID=3699e7a6-fe8f-4ffb-b8b1-17f0aeb052aa

DEVICE=ens33

ONBOOT=yes

IPADDR=192.168.10.110

NETMASK=255.255.255.0

DNS1=8.8.8.8

DNS2=114.114.114.114

#NIC ens34

[root@k8s-master ~]# vi /etc/sysconfig/network-scripts/ifcfg-ens34

TYPE=Ethernet

PROXY_METHOD=none

BROWSER_ONLY=no

BOOTPROTO=static

DEFROUTE=yes

IPV4_FAILURE_FATAL=no

IPV6INIT=yes

IPV6_AUTOCONF=yes

IPV6_DEFROUTE=yes

IPV6_FAILURE_FATAL=no

IPV6_ADDR_GEN_MODE=stable-privacy

NAME=ens34

UUID=8f4ced2f-c3be-45d0-931d-af87294683cf

DEVICE=ens34

ONBOOT=yes

IPADDR=192.168.31.110

GATEWAY=192.168.31.2

NETMASK=255.255.255.0

DNS1=8.8.8.8

DNS2=114.114.114.114

#Edit the sshd configuration file

[root@k8s-master ~]# vi /etc/ssh/sshd_config

UseDNS no

PermitRootLogin yes

[root@k8s-master ~]# systemctl restart network sshd

#Test the NICs

[root@k8s-master ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:40:f4:77 brd ff:ff:ff:ff:ff:ff

inet 192.168.10.110/24 brd 192.168.10.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet6 fe80::4dc3:568f:f29a:cdd3/64 scope link noprefixroute

valid_lft forever preferred_lft forever

3: ens34: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:40:f4:81 brd ff:ff:ff:ff:ff:ff

inet 192.168.31.110/24 brd 192.168.31.255 scope global noprefixroute ens34

valid_lft forever preferred_lft forever

inet6 fe80::864f:9bc9:e5d0:4880/64 scope link noprefixroute

valid_lft forever preferred_lft forever

[root@k8s-master ~]# ping 192.168.10.110 -c 3 #NIC ens33

PING 192.168.10.110 (192.168.10.110) 56(84) bytes of data.

64 bytes from 192.168.10.110: icmp_seq=1 ttl=64 time=0.030 ms

64 bytes from 192.168.10.110: icmp_seq=2 ttl=64 time=0.065 ms

64 bytes from 192.168.10.110: icmp_seq=3 ttl=64 time=0.078 ms

--- 192.168.10.110 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2024ms

rtt min/avg/max/mdev = 0.030/0.057/0.078/0.022 ms

[root@k8s-master ~]# ping 192.168.31.110 -c 3 #NIC ens34

PING 192.168.31.110 (192.168.31.110) 56(84) bytes of data.

64 bytes from 192.168.31.110: icmp_seq=1 ttl=64 time=0.031 ms

64 bytes from 192.168.31.110: icmp_seq=2 ttl=64 time=0.053 ms

64 bytes from 192.168.31.110: icmp_seq=3 ttl=64 time=0.110 ms

--- 192.168.31.110 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2011ms

rtt min/avg/max/mdev = 0.031/0.064/0.110/0.034 ms

[root@k8s-master ~]# ping www.baidu.com -c 3 #external connectivity test

PING www.a.shifen.com (120.232.145.144) 56(84) bytes of data.

64 bytes from 120.232.145.144 (120.232.145.144): icmp_seq=1 ttl=128 time=362 ms

64 bytes from 120.232.145.144 (120.232.145.144): icmp_seq=2 ttl=128 time=194 ms

64 bytes from 120.232.145.144 (120.232.145.144): icmp_seq=3 ttl=128 time=139 ms

--- www.a.shifen.com ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2009ms

rtt min/avg/max/mdev = 139.475/232.272/362.503/94.820 ms

#Check the yum repositories

[root@k8s-master ~]# yum clean all && yum repolist

Loaded plugins: fastestmirror

Cleaning repos: base extras updates

Cleaning up list of fastest mirrors

Loaded plugins: fastestmirror

Determining fastest mirrors

* base: mirrors.cqu.edu.cn

* extras: mirrors.cqu.edu.cn

* updates: mirrors.cqu.edu.cn

base | 3.6 kB 00:00

extras | 2.9 kB 00:00

updates | 2.9 kB 00:00

(1/4): base/7/x86_64/group_gz | 153 kB 00:00

(2/4): extras/7/x86_64/primary_db | 250 kB 00:01

(3/4): updates/7/x86_64/primary_db | 24 MB 00:12

(4/4): base/7/x86_64/primary_db | 6.1 MB 00:16

repo id repo name status

base/7/x86_64 CentOS-7 - Base 10,072

extras/7/x86_64 CentOS-7 - Extras 518

updates/7/x86_64 CentOS-7 - Updates 5,434

repolist: 16,024

#Install vim, wget, net-tools, and other packages

[root@k8s-master ~]# yum install -y vim wget net-tools

#Check listening ports

[root@k8s-master ~]# netstat -ntlp

Active Internet connections (only servers)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 1115/sshd

tcp 0 0 127.0.0.1:25 0.0.0.0:* LISTEN 1332/master

tcp6 0 0 :::22 :::* LISTEN 1115/sshd

tcp6 0 0 ::1:25 :::* LISTEN 1332/master

2. Review

#Make sure the base environment above is set up correctly

##Host mapping (run on all three nodes)

[root@k8s-master ~]# echo "192.168.10.110 k8s-master" >> /etc/hosts

[root@k8s-master ~]# echo "192.168.10.111 k8s-node1" >> /etc/hosts

[root@k8s-master ~]# echo "192.168.10.112 k8s-node2" >> /etc/hosts

[root@k8s-master ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.10.110 k8s-master

192.168.10.111 k8s-node1

192.168.10.112 k8s-node2
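Appending with `echo ... >> /etc/hosts` adds a duplicate line every time the step is repeated. A minimal sketch of an idempotent variant follows; `add_host_entry` is a hypothetical helper name, and the demo writes to a scratch file rather than the real `/etc/hosts`.

```shell
# add_host_entry FILE IP NAME (hypothetical helper, not part of the original steps):
# append "IP NAME" to FILE only when NAME is not already mapped on a non-comment line.
add_host_entry() {
    _hosts=$1; _ip=$2; _name=$3
    if awk -v n="$_name" '
        !/^[ \t]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
        END { exit !found }' "$_hosts"; then
        return 0
    fi
    printf '%s %s\n' "$_ip" "$_name" >> "$_hosts"
}

# Demo against a scratch file; on a real node pass /etc/hosts instead.
demo_hosts=$(mktemp)
printf '127.0.0.1 localhost\n' > "$demo_hosts"
while read -r node_ip node_name; do
    add_host_entry "$demo_hosts" "$node_ip" "$node_name"
done <<'EOF'
192.168.10.110 k8s-master
192.168.10.111 k8s-node1
192.168.10.112 k8s-node2
EOF
cat "$demo_hosts"
rm -f "$demo_hosts"
```

Running the loop a second time against the same file leaves it unchanged, which makes the step safe to repeat on every node.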

II. Kubernetes setup

3.1 Kubeadm method

1. Host preparation

#Remote connection

#Kernel

[root@k8s-master ~]# uname -r

3.10.0-1160.el7.x86_64

2. System initialization

Note: unless a step says which node to run it on, every step below must be run on all nodes.

Step 1: Disable the firewall

[root@k8s-master ~]# systemctl status firewalld #check the firewall status

● firewalld.service - firewalld - dynamic firewall daemon

Loaded: loaded (/usr/lib/systemd/system/firewalld.service; disabled; vendor preset: enabled)

Active: inactive (dead)

Docs: man:firewalld(1)

Step 2: Disable SELinux

[root@k8s-master ~]# sed -i 's/^SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config #permanently disable SELinux (takes effect after a reboot)

[root@k8s-master ~]# getenforce #check SELinux

Disabled
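The `SELINUX=` substitution is easy to get subtly wrong: the key is upper-case, and `SELINUXTYPE=` must stay untouched. A minimal sketch, assuming a hypothetical helper named `set_selinux_disabled`, which is exercised here on a scratch copy rather than the real `/etc/selinux/config`:

```shell
# set_selinux_disabled FILE (hypothetical helper): rewrite only the SELINUX= line.
# "^SELINUX=" cannot match SELINUXTYPE= because the "=" must follow immediately.
set_selinux_disabled() {
    _cfg=$1
    _tmp=$(mktemp)
    # Write through a temp file, then copy back so FILE keeps its inode and mode.
    sed 's/^SELINUX=.*/SELINUX=disabled/' "$_cfg" > "$_tmp" && cat "$_tmp" > "$_cfg"
    rm -f "$_tmp"
}

# Demo on a scratch file that mimics the relevant lines of /etc/selinux/config.
demo_cfg=$(mktemp)
printf '%s\n' '# enforcing - SELinux security policy is enforced.' \
    'SELINUX=enforcing' 'SELINUXTYPE=targeted' > "$demo_cfg"
set_selinux_disabled "$demo_cfg"
cat "$demo_cfg"
rm -f "$demo_cfg"
```

On a real node the file change only applies after a reboot; `setenforce 0` switches the running system to permissive mode in the meantime.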

Step 3: Disable swap

[root@k8s-master ~]# sed -ri 's/.*swap.*/#&/' /etc/fstab #permanently disable swap (run swapoff -a to turn it off immediately)

[root@k8s-master ~]# cat /etc/fstab

#

# /etc/fstab

# Created by anaconda on Wed Nov 22 04:34:31 2023

#

# Accessible filesystems, by reference, are maintained under '/dev/disk'

# See man pages fstab(5), findfs(8), mount(8) and/or blkid(8) for more info

#

/dev/mapper/centos-root / xfs defaults 0 0

UUID=d21474f0-0a88-42fb-8851-05eb117d4b4a /boot xfs defaults 0 0

#/dev/mapper/centos-swap swap swap defaults 0 0
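The `sed` pattern comments out every line containing the word swap, so running it twice yields `##` and it would also touch an unrelated line that merely mentions swap. A sketch of a narrower, repeatable variant, assuming a hypothetical helper `comment_out_swap` and demonstrated on a scratch file instead of `/etc/fstab`:

```shell
# comment_out_swap FILE (hypothetical helper): comment out only active entries
# whose third field (the filesystem type) is "swap"; already-commented lines are kept.
comment_out_swap() {
    _fstab=$1
    _tmp=$(mktemp)
    awk '!/^[ \t]*#/ && $3 == "swap" { print "#" $0; next } { print }' "$_fstab" > "$_tmp" \
        && cat "$_tmp" > "$_fstab"
    rm -f "$_tmp"
}

# Demo on a scratch file that mimics the fstab shown above.
demo_fstab=$(mktemp)
printf '%s\n' '/dev/mapper/centos-root / xfs defaults 0 0' \
    '/dev/mapper/centos-swap swap swap defaults 0 0' > "$demo_fstab"
comment_out_swap "$demo_fstab"
comment_out_swap "$demo_fstab"   # second run is a no-op
cat "$demo_fstab"
rm -f "$demo_fstab"
```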

Step 4: Host mapping

[root@k8s-master ~]# cat /etc/hosts #host mapping, already done in the VM setup section

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.10.110 k8s-master

192.168.10.111 k8s-node1

192.168.10.112 k8s-node2

Step 5: Pass bridged IPv4 traffic to the iptables chains (set on all nodes).

cat > /etc/sysctl.d/k8s.conf << EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

[root@k8s-master ~]# sysctl --system #生效
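The `net.bridge.*` keys only exist once the `br_netfilter` kernel module is loaded, so on a fresh node `sysctl --system` may report them as unknown. A minimal sketch that writes a module-load file alongside the sysctl file; `write_bridge_netfilter_conf` and its root-directory argument are hypothetical, added so the demo can target a scratch directory instead of the real `/etc`.

```shell
# write_bridge_netfilter_conf ROOT (hypothetical helper): create
# ROOT/etc/modules-load.d/k8s.conf and ROOT/etc/sysctl.d/k8s.conf.
# Pass "" as ROOT on a real node (as root) to write under /etc.
write_bridge_netfilter_conf() {
    _root=${1:-}
    mkdir -p "$_root/etc/modules-load.d" "$_root/etc/sysctl.d"
    # Load br_netfilter at boot so the bridge sysctl keys are available.
    printf 'br_netfilter\n' > "$_root/etc/modules-load.d/k8s.conf"
    cat > "$_root/etc/sysctl.d/k8s.conf" <<'EOF'
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
}

# Demo against a scratch directory.
demo_root=$(mktemp -d)
write_bridge_netfilter_conf "$demo_root"
cat "$demo_root/etc/modules-load.d/k8s.conf" "$demo_root/etc/sysctl.d/k8s.conf"
rm -rf "$demo_root"
# On a real node: write_bridge_netfilter_conf "" && modprobe br_netfilter && sysctl --system
```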

Step 6: Time synchronization, so that the clock on every node (virtual machine) matches the host machine's time.

[root@k8s-master ~]# yum install ntpdate -y

[root@k8s-master ~]# ntpdate time.windows.com

23 Nov 01:36:19 ntpdate[1820]: adjust time server 20.189.79.72 offset 0.002690 sec

[root@k8s-master ~]# systemctl status ntpdate

● ntpdate.service - Set time via NTP

Loaded: loaded (/usr/lib/systemd/system/ntpdate.service; disabled; vendor preset: disabled)

Active: active (exited) since Thu 2023-11-23 01:39:36 EST; 10s ago

Process: 1993 ExecStart=/usr/libexec/ntpdate-wrapper (code=exited, status=0/SUCCESS)

Main PID: 1993 (code=exited, status=0/SUCCESS)

Nov 23 01:39:32 k8s-master systemd[1]: Starting Set time via NTP...

Nov 23 01:39:36 k8s-master systemd[1]: Started Set time via NTP.

[root@k8s-master ~]# date

Thu Nov 23 01:39:50 EST 2023

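3. Installing Docker

#Docker Engine is the container runtime used here, so install Docker on every node first. Kubernetes removed its built-in Docker support (dockershim) in 1.24, which is why cri-dockerd is installed in step 6.

[root@k8s-master ~]# curl -fsSL https://get.docker.com | bash -s docker --mirror Aliyun #install Docker

[root@k8s-node1 ~]# cat > /etc/docker/daemon.json << Eof #configure the registry mirror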

{

"registry-mirrors": ["https://docker.mirrors.ustc.edu.cn"],

"exec-opts": ["native.cgroupdriver=systemd"]

}

Eof

[root@k8s-master ~]# systemctl daemon-reload && systemctl enable docker --now && systemctl status docker #reload systemd, enable and start Docker, then check its status

Created symlink from /etc/systemd/system/multi-user.target.wants/docker.service to /usr/lib/systemd/system/docker.service.

● docker.service - Docker Application Container Engine

Loaded: loaded (/usr/lib/systemd/system/docker.service; enabled; vendor preset: disabled)

Active: active (running) since Thu 2023-11-23 02:09:07 EST; 69ms ago

Docs: https://docs.docker.com

Main PID: 11972 (dockerd)

CGroup: /system.slice/docker.service

└─11972 /usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock

Nov 23 02:09:07 k8s-node1 systemd[1]: Starting Docker Application Container Engine...

Nov 23 02:09:07 k8s-node1 dockerd[11972]: time="2023-11-23T02:09:07.194865885-05:00" level=info msg="Starting up"

Nov 23 02:09:07 k8s-node1 dockerd[11972]: time="2023-11-23T02:09:07.218641431-05:00" level=info msg="[graphdriver] using prior storage...verlay2"

Nov 23 02:09:07 k8s-node1 dockerd[11972]: time="2023-11-23T02:09:07.218864167-05:00" level=info msg="Loading containers: start."

Nov 23 02:09:07 k8s-node1 dockerd[11972]: time="2023-11-23T02:09:07.334494169-05:00" level=info msg="Default bridge (docker0) is assig...address"

Nov 23 02:09:07 k8s-node1 dockerd[11972]: time="2023-11-23T02:09:07.383115712-05:00" level=info msg="Loading containers: done."

Nov 23 02:09:07 k8s-node1 dockerd[11972]: time="2023-11-23T02:09:07.400734690-05:00" level=info msg="Docker daemon" commit=311b9ff gra...n=24.0.7

Nov 23 02:09:07 k8s-node1 dockerd[11972]: time="2023-11-23T02:09:07.400798549-05:00" level=info msg="Daemon has completed initialization"

Nov 23 02:09:07 k8s-node1 dockerd[11972]: time="2023-11-23T02:09:07.425744856-05:00" level=info msg="API listen on /run/docker.sock"

Nov 23 02:09:07 k8s-node1 systemd[1]: Started Docker Application Container Engine.

Hint: Some lines were ellipsized, use -l to show in full.

[root@k8s-master ~]# docker --version #Docker version

Docker version 24.0.7, build afdd53b

4. Add the Aliyun yum repository

#This step makes later downloads easier; apply the following configuration on every node.

cat > /etc/yum.repos.d/kubernetes.repo << EOF

[Kubernetes]

name=kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

5. Installing kubeadm, kubelet, and kubectl

[root@k8s-master ~]# yum clean all && yum repolist #check the yum repositories

Loaded plugins: fastestmirror

Cleaning repos: Kubernetes base extras updates

Cleaning up list of fastest mirrors

Other repos take up 2.6 M of disk space (use --verbose for details)

Loaded plugins: fastestmirror

Determining fastest mirrors

* base: mirrors.tuna.tsinghua.edu.cn

* extras: mirrors.bupt.edu.cn

* updates: mirrors.tuna.tsinghua.edu.cn

Kubernetes | 1.4 kB 00:00:00

base | 3.6 kB 00:00:00

extras | 2.9 kB 00:00:00

updates | 2.9 kB 00:00:00

(1/5): base/7/x86_64/group_gz | 153 kB 00:00:00

(2/5): extras/7/x86_64/primary_db | 250 kB 00:00:01

(3/5): Kubernetes/primary | 137 kB 00:00:01

(4/5): base/7/x86_64/primary_db | 6.1 MB 00:00:04

(5/5): updates/7/x86_64/primary_db | 24 MB 00:00:10

Kubernetes 1022/1022

repo id repo name status

Kubernetes kubernetes 1,022

base/7/x86_64 CentOS-7 - Base 10,072

extras/7/x86_64 CentOS-7 - Extras 518

updates/7/x86_64 CentOS-7 - Updates 5,434

repolist: 17,046

[root@k8s-master ~]# yum list kube* #list packages matching kube and their details

Loaded plugins: fastestmirror

Loading mirror speeds from cached hostfile

* base: mirrors.tuna.tsinghua.edu.cn

* extras: mirrors.bupt.edu.cn

* updates: mirrors.tuna.tsinghua.edu.cn

Available Packages

kubeadm.x86_64 1.28.2-0 Kubernetes

kubectl.x86_64 1.28.2-0 Kubernetes

kubelet.x86_64 1.28.2-0 Kubernetes

kubernetes.x86_64 1.5.2-0.7.git269f928.el7 extras

kubernetes-client.x86_64 1.5.2-0.7.git269f928.el7 extras

kubernetes-cni.x86_64 1.2.0-0 Kubernetes

kubernetes-master.x86_64 1.5.2-0.7.git269f928.el7 extras

kubernetes-node.x86_64 1.5.2-0.7.git269f928.el7 extras

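[root@k8s-master ~]# yum install -y kubelet-1.28.2-0 kubeadm-1.28.2-0 kubectl-1.28.2-0

#Version 1.28.2-0 is pinned here; it was the latest in this repository at the time of writing. Without a version, yum installs the newest one available.

[root@k8s-master ~]# systemctl start kubelet && systemctl enable kubelet #start the kubelet service and enable it at boot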

Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /usr/lib/systemd/system/kubelet.service.

6. Deploy the cluster on the master node

##Install the cri-dockerd dependency on every node in the cluster

[root@k8s-master ~]# wget https://github.com/Mirantis/cri-dockerd/releases/download/v0.3.6/cri-dockerd-0.3.6.amd64.tgz

[root@k8s-master ~]# tar -zxvf cri-dockerd-0.3.6.amd64.tgz

[root@k8s-master ~]# mv ./cri-dockerd/cri-dockerd /usr/local/bin/

[root@k8s-master ~]# vim /etc/systemd/system/cri-dockerd.service

[Unit]

Description=CRI Interface for Docker Application Container Engine

Documentation=https://docs.mirantis.com

After=network-online.target firewalld.service docker.service

Wants=network-online.target

[Service]

Type=notify

ExecStart=/usr/local/bin/cri-dockerd --pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.9 --network-plugin=cni --cni-conf-dir=/etc/cni/net.d --cni-bin-dir=/opt/cni/bin --container-runtime-endpoint=unix:///var/run/cri-dockerd.sock --cri-dockerd-root-directory=/var/lib/dockershim --docker-endpoint=unix:///var/run/docker.sock

ExecReload=/bin/kill -s HUP $MAINPID

TimeoutSec=0

RestartSec=2

Restart=always

StartLimitBurst=3

StartLimitInterval=60s

LimitNOFILE=infinity

LimitNPROC=infinity

LimitCORE=infinity

TasksMax=infinity

Delegate=yes

KillMode=process

[Install]

WantedBy=multi-user.target

[root@k8s-master ~]# vim /etc/systemd/system/cri-dockerd.socket

[Unit]

Description=CRI Docker Socket for the API

PartOf=cri-dockerd.service

[Socket]

ListenStream=/var/run/cri-dockerd.sock

SocketMode=0660

SocketUser=root

SocketGroup=docker

[Install]

WantedBy=sockets.target

[root@k8s-master ~]# systemctl enable cri-dockerd --now && systemctl status cri-dockerd

● cri-dockerd.service - CRI Interface for Docker Application Container Engine

Loaded: loaded (/etc/systemd/system/cri-dockerd.service; enabled; vendor preset: disabled)

Active: active (running) since Thu 2023-11-23 21:25:26 EST; 1h 7min ago

Docs: https://docs.mirantis.com

Main PID: 1918 (cri-dockerd)

CGroup: /system.slice/cri-dockerd.service

└─1918 /usr/local/bin/cri-dockerd --pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.9 --netwo...

Nov 23 22:08:47 k8s-master cri-dockerd[1918]: time="2023-11-23T22:08:47-05:00" level=error msg="Set backoffDuration to : 1m0s for conta...a1ba6'"

Nov 23 22:09:41 k8s-master systemd[1]: [/etc/systemd/system/cri-dockerd.service:10] Unknown lvalue 'cri-dockerd-root-directory' in sec...Service'

Nov 23 22:09:42 k8s-master cri-dockerd[1918]: time="2023-11-23T22:09:42-05:00" level=info msg="Will attempt to re-write config file /va...4.114]"

Nov 23 22:09:42 k8s-master cri-dockerd[1918]: time="2023-11-23T22:09:42-05:00" level=info msg="Will attempt to re-write config file /va...4.114]"

Nov 23 22:09:42 k8s-master cri-dockerd[1918]: time="2023-11-23T22:09:42-05:00" level=info msg="Will attempt to re-write config file /va...4.114]"

Nov 23 22:09:42 k8s-master cri-dockerd[1918]: time="2023-11-23T22:09:42-05:00" level=info msg="Will attempt to re-write config file /va...4.114]"

Nov 23 22:09:46 k8s-master systemd[1]: [/etc/systemd/system/cri-dockerd.service:10] Unknown lvalue 'cri-dockerd-root-directory' in sec...Service'

Nov 23 22:10:01 k8s-master cri-dockerd[1918]: time="2023-11-23T22:10:01-05:00" level=info msg="Docker cri received runtime config &Runt...24,},}"

Nov 23 22:10:02 k8s-master cri-dockerd[1918]: time="2023-11-23T22:10:02-05:00" level=info msg="Will attempt to re-write config file /va...4.114]"

Nov 23 22:33:18 k8s-master systemd[1]: [/etc/systemd/system/cri-dockerd.service:10] Unknown lvalue 'cri-dockerd-root-directory' in sec...Service'

Hint: Some lines were ellipsized, use -l to show in full.

[root@k8s-master ~]# cri-dockerd --version

cri-dockerd 0.3.6 (877dc6a4)

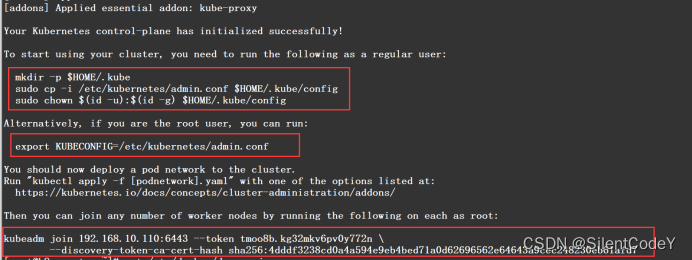

##Run the following on the k8s-master node, changing the master IP and Kubernetes version to match your own hosts

kubeadm init \

--apiserver-advertise-address=192.168.10.110 \

--image-repository registry.aliyuncs.com/google_containers \

--kubernetes-version v1.28.0 \

--service-cidr=10.96.0.0/12 \

--pod-network-cidr=10.244.0.0/16 \

--cri-socket unix:///var/run/cri-dockerd.sock

[init] Using Kubernetes version: v1.28.0

.......

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.10.110:6443 --token f6pyas.paagyxc7rrd5qj0v \

--discovery-token-ca-cert-hash sha256:cabbf912d80b144b6cd6f536c0ebc7208769ec0dda608c8866cd81138dbaaef8

#Finish setting up the master node

[root@k8s-master ~]# mkdir -p $HOME/.kube

[root@k8s-master ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@k8s-master ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

[root@k8s-master ~]# export KUBECONFIG=/etc/kubernetes/admin.conf

[root@k8s-master ~]# kubeadm version

kubeadm version: &version.Info{Major:"1", Minor:"28", GitVersion:"v1.28.2", GitCommit:"89a4ea3e1e4ddd7f7572286090359983e0387b2f", GitTreeState:"clean", BuildDate:"2023-09-13T09:34:32Z", GoVersion:"go1.20.8", Compiler:"gc", Platform:"linux/amd64"}

[root@k8s-master ~]# kubeadm config images list

registry.k8s.io/kube-apiserver:v1.28.4

registry.k8s.io/kube-controller-manager:v1.28.4

registry.k8s.io/kube-scheduler:v1.28.4

registry.k8s.io/kube-proxy:v1.28.4

registry.k8s.io/pause:3.9

registry.k8s.io/etcd:3.5.9-0

registry.k8s.io/coredns/coredns:v1.10.1

7. Join the worker nodes to the cluster

#kubeadm join command

[root@k8s-node1 ~]# kubeadm join 192.168.10.110:6443 --token f6pyas.paagyxc7rrd5qj0v --discovery-token-ca-cert-hash sha256:cabbf912d80b144b6cd6f536c0ebc7208769ec0dda608c8866cd81138dbaaef8 --cri-socket unix:///var/run/cri-dockerd.sock
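The join line printed by `kubeadm init` does not include `--cri-socket`, and its bootstrap token expires (24 hours by default); `kubeadm token create --print-join-command` on the master prints a fresh one. A minimal sketch of a filter that appends the socket flag used in this setup; `with_cri_socket` is a hypothetical helper, and the demo feeds it the join line from above rather than calling kubeadm.

```shell
# Socket path taken from the cri-dockerd unit configured earlier in this article.
CRI_SOCKET="unix:///var/run/cri-dockerd.sock"

# with_cri_socket (hypothetical helper): read "kubeadm join ..." lines on stdin
# and append --cri-socket unless the flag is already present.
with_cri_socket() {
    while IFS= read -r _line; do
        case $_line in
            *--cri-socket*) printf '%s\n' "$_line" ;;
            *) printf '%s --cri-socket %s\n' "${_line% }" "$CRI_SOCKET" ;;
        esac
    done
}

# Demo with the join line shown in the kubeadm init output.
printf '%s\n' 'kubeadm join 192.168.10.110:6443 --token f6pyas.paagyxc7rrd5qj0v --discovery-token-ca-cert-hash sha256:cabbf912d80b144b6cd6f536c0ebc7208769ec0dda608c8866cd81138dbaaef8' \
    | with_cri_socket
# On the master: kubeadm token create --print-join-command | with_cri_socket
```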

#Copy /etc/kubernetes/admin.conf to the worker nodes (run on the master; repeat for each worker node)

[root@k8s-master ~]# scp /etc/kubernetes/admin.conf 192.168.10.111:/etc/kubernetes/admin.conf

#On the worker node

[root@k8s-node1 ~]# mkdir ~/.kube

[root@k8s-node1 ~]# cp /etc/kubernetes/admin.conf ~/.kube/config

[root@k8s-node1 ~]# su -

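8. Install the pod network (run on the master node)

#A pod network lets pods communicate across the Kubernetes cluster. Kubernetes supports several network add-ons; this article uses flannel.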

kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

9. Kubernetes cluster complete

[root@k8s-master ~]# kubectl get nodes -owide #cluster details

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

k8s-master Ready control-plane 5h47m v1.28.2 192.168.10.110 <none> CentOS Linux 7 (Core) 3.10.0-1160.el7.x86_64 docker://24.0.7

k8s-node1 Ready <none> 5h46m v1.28.2 192.168.10.111 <none> CentOS Linux 7 (Core) 3.10.0-1160.el7.x86_64 docker://24.0.7

k8s-node2 Ready <none> 5h44m v1.28.2 192.168.10.112 <none> CentOS Linux 7 (Core) 3.10.0-1160.el7.x86_64 docker://24.0.7
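Nodes usually stay `NotReady` until the pod network is running, so it helps to check the STATUS column before moving on. A small sketch, assuming a hypothetical filter `not_ready_nodes` that reads `kubectl get nodes --no-headers` output on stdin; the demo uses sample text instead of a live cluster.

```shell
# not_ready_nodes (hypothetical helper): print the name of every node whose
# STATUS column is not exactly "Ready". A cordoned node
# ("Ready,SchedulingDisabled") is also listed.
not_ready_nodes() {
    awk '$2 != "Ready" { print $1 }'
}

# Demo with sample lines in the same column order as kubectl get nodes.
printf '%s\n' \
    'k8s-master Ready control-plane 5h47m v1.28.2' \
    'k8s-node1 NotReady <none> 5h46m v1.28.2' \
    'k8s-node2 Ready <none> 5h44m v1.28.2' | not_ready_nodes
# On the master: kubectl get nodes --no-headers | not_ready_nodes
```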

[root@k8s-master ~]# netstat -ntlp #check ports

Active Internet connections (only servers)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 127.0.0.1:10248 0.0.0.0:* LISTEN 3299/kubelet

tcp 0 0 127.0.0.1:10249 0.0.0.0:* LISTEN 3416/kube-proxy

tcp 0 0 127.0.0.1:9099 0.0.0.0:* LISTEN 42619/calico-node

tcp 0 0 192.168.10.110:2379 0.0.0.0:* LISTEN 3172/etcd

tcp 0 0 127.0.0.1:2379 0.0.0.0:* LISTEN 3172/etcd

tcp 0 0 192.168.10.110:2380 0.0.0.0:* LISTEN 3172/etcd

tcp 0 0 127.0.0.1:2381 0.0.0.0:* LISTEN 3172/etcd

tcp 0 0 127.0.0.1:10257 0.0.0.0:* LISTEN 3179/kube-controlle

tcp 0 0 0.0.0.0:179 0.0.0.0:* LISTEN 42750/bird

tcp 0 0 127.0.0.1:10259 0.0.0.0:* LISTEN 3204/kube-scheduler

tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 1011/sshd

tcp 0 0 127.0.0.1:25 0.0.0.0:* LISTEN 1256/master

tcp6 0 0 :::10250 :::* LISTEN 3299/kubelet

tcp6 0 0 :::6443 :::* LISTEN 3218/kube-apiserver

tcp6 0 0 :::10256 :::* LISTEN 3416/kube-proxy

tcp6 0 0 :::22 :::* LISTEN 1011/sshd

tcp6 0 0 ::1:25 :::* LISTEN 1256/master

tcp6 0 0 :::35676 :::* LISTEN 1698/cri-dockerd

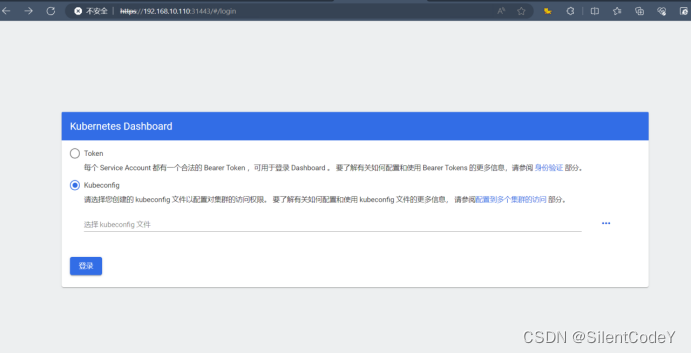

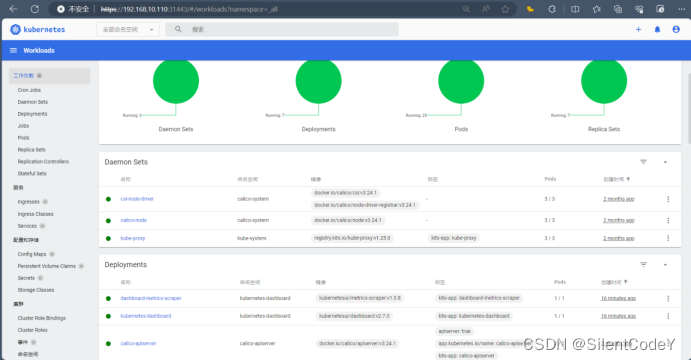

10. Deploy the Dashboard

[root@k8s-master ~]# wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.7.0/aio/deploy/recommended.yaml

[root@k8s-master ~]# kubectl create ns kubernetes-dashboard

[root@k8s-master ~]# cat recommended.yaml

# Copyright 2017 The Kubernetes Authors.

#

# Licensed under the Apache License, Version 2.0 (the "License");

# you may not use this file except in compliance with the License.

# You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

apiVersion: v1

kind: Namespace

metadata:

name: kubernetes-dashboard

---

apiVersion: v1

kind: ServiceAccount

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kubernetes-dashboard

---

kind: Service

apiVersion: v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kubernetes-dashboard

spec:

type: NodePort #added to expose the port

ports:

- port: 443

targetPort: 8443

nodePort: 31443 #added to expose the port

selector:

k8s-app: kubernetes-dashboard

---

apiVersion: v1

kind: Secret

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard-certs

namespace: kubernetes-dashboard

type: Opaque

---

apiVersion: v1

kind: Secret

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard-csrf

namespace: kubernetes-dashboard

type: Opaque

data:

csrf: ""

---

apiVersion: v1

kind: Secret

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard-key-holder

namespace: kubernetes-dashboard

type: Opaque

---

kind: ConfigMap

apiVersion: v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard-settings

namespace: kubernetes-dashboard

---

kind: Role

apiVersion: rbac.authorization.k8s.io/v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kubernetes-dashboard

rules:

# Allow Dashboard to get, update and delete Dashboard exclusive secrets.

- apiGroups: [""]

resources: ["secrets"]

resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs", "kubernetes-dashboard-csrf"]

verbs: ["get", "update", "delete"]

# Allow Dashboard to get and update 'kubernetes-dashboard-settings' config map.

- apiGroups: [""]

resources: ["configmaps"]

resourceNames: ["kubernetes-dashboard-settings"]

verbs: ["get", "update"]

# Allow Dashboard to get metrics.

- apiGroups: [""]

resources: ["services"]

resourceNames: ["heapster", "dashboard-metrics-scraper"]

verbs: ["proxy"]

- apiGroups: [""]

resources: ["services/proxy"]

resourceNames: ["heapster", "http:heapster:", "https:heapster:", "dashboard-metrics-scraper", "http:dashboard-metrics-scraper"]

verbs: ["get"]

---

kind: ClusterRole

apiVersion: rbac.authorization.k8s.io/v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

rules:

# Allow Metrics Scraper to get metrics from the Metrics server

- apiGroups: ["metrics.k8s.io"]

resources: ["pods", "nodes"]

verbs: ["get", "list", "watch"]

---

apiVersion: rbac.authorization.k8s.io/v1

kind: RoleBinding

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kubernetes-dashboard

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: Role

name: kubernetes-dashboard

subjects:

- kind: ServiceAccount

name: kubernetes-dashboard

namespace: kubernetes-dashboard

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

name: kubernetes-dashboard

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: kubernetes-dashboard

subjects:

- kind: ServiceAccount

name: kubernetes-dashboard

namespace: kubernetes-dashboard

---

kind: Deployment

apiVersion: apps/v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kubernetes-dashboard

spec:

replicas: 1

revisionHistoryLimit: 10

selector:

matchLabels:

k8s-app: kubernetes-dashboard

template:

metadata:

labels:

k8s-app: kubernetes-dashboard

spec:

securityContext:

seccompProfile:

type: RuntimeDefault

containers:

- name: kubernetes-dashboard

image: kubernetesui/dashboard:v2.7.0

imagePullPolicy: Always

ports:

- containerPort: 8443

protocol: TCP

args:

- --auto-generate-certificates

- --namespace=kubernetes-dashboard

# Uncomment the following line to manually specify Kubernetes API server Host

# If not specified, Dashboard will attempt to auto discover the API server and connect

# to it. Uncomment only if the default does not work.

# - --apiserver-host=http://my-address:port

volumeMounts:

- name: kubernetes-dashboard-certs

mountPath: /certs

# Create on-disk volume to store exec logs

- mountPath: /tmp

name: tmp-volume

livenessProbe:

httpGet:

scheme: HTTPS

path: /

port: 8443

initialDelaySeconds: 30

timeoutSeconds: 30

securityContext:

allowPrivilegeEscalation: false

readOnlyRootFilesystem: true

runAsUser: 1001

runAsGroup: 2001

volumes:

- name: kubernetes-dashboard-certs

secret:

secretName: kubernetes-dashboard-certs

- name: tmp-volume

emptyDir: {}

serviceAccountName: kubernetes-dashboard

nodeSelector:

"kubernetes.io/os": linux

# Comment the following tolerations if Dashboard must not be deployed on master

tolerations:

- key: node-role.kubernetes.io/master

effect: NoSchedule

---

kind: Service

apiVersion: v1

metadata:

labels:

k8s-app: dashboard-metrics-scraper

name: dashboard-metrics-scraper

namespace: kubernetes-dashboard

spec:

ports:

- port: 8000

targetPort: 8000

selector:

k8s-app: dashboard-metrics-scraper

---

kind: Deployment

apiVersion: apps/v1

metadata:

labels:

k8s-app: dashboard-metrics-scraper

name: dashboard-metrics-scraper

namespace: kubernetes-dashboard

spec:

replicas: 1

revisionHistoryLimit: 10

selector:

matchLabels:

k8s-app: dashboard-metrics-scraper

template:

metadata:

labels:

k8s-app: dashboard-metrics-scraper

spec:

securityContext:

seccompProfile:

type: RuntimeDefault

containers:

- name: dashboard-metrics-scraper

image: kubernetesui/metrics-scraper:v1.0.8

ports:

- containerPort: 8000

protocol: TCP

livenessProbe:

httpGet:

scheme: HTTP

path: /

port: 8000

initialDelaySeconds: 30

timeoutSeconds: 30

volumeMounts:

- mountPath: /tmp

name: tmp-volume

securityContext:

allowPrivilegeEscalation: false

readOnlyRootFilesystem: true

runAsUser: 1001

runAsGroup: 2001

serviceAccountName: kubernetes-dashboard

nodeSelector:

"kubernetes.io/os": linux

# Comment the following tolerations if Dashboard must not be deployed on master

tolerations:

- key: node-role.kubernetes.io/master

effect: NoSchedule

volumes:

- name: tmp-volume

emptyDir: {}

[root@k8s-master ~]# kubectl apply -f recommended.yaml

Warning: resource namespaces/kubernetes-dashboard is missing the kubectl.kubernetes.io/last-applied-configuration annotation which is required by kubectl apply. kubectl apply should only be used on resources created declaratively by either kubectl create --save-config or kubectl apply. The missing annotation will be patched automatically.

namespace/kubernetes-dashboard configured

serviceaccount/kubernetes-dashboard created

service/kubernetes-dashboard created

secret/kubernetes-dashboard-certs created

secret/kubernetes-dashboard-csrf created

secret/kubernetes-dashboard-key-holder created

configmap/kubernetes-dashboard-settings created

role.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrole.rbac.authorization.k8s.io/kubernetes-dashboard created

rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

[root@k8s-master ~]# kubectl get all -n kubernetes-dashboard

NAME READY STATUS RESTARTS AGE

pod/dashboard-metrics-scraper-64bcc67c9c-5hlh7 1/1 Running 0 5m59s

pod/kubernetes-dashboard-5c8bd6b59-kgl55 1/1 Running 0 5m59s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/dashboard-metrics-scraper ClusterIP 10.96.0.148 <none> 8000/TCP 5m59s

service/kubernetes-dashboard NodePort 10.96.1.112 <none> 443:31443/TCP 6m

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/dashboard-metrics-scraper 1/1 1 1 5m59s

deployment.apps/kubernetes-dashboard 1/1 1 1 5m59s

NAME DESIRED CURRENT READY AGE

replicaset.apps/dashboard-metrics-scraper-64bcc67c9c 1 1 1 5m59s

replicaset.apps/kubernetes-dashboard-5c8bd6b59 1 1 1 5m59s

[root@k8s-master ~]# cat k8s-dashboard-user.yaml

apiVersion: v1

kind: ServiceAccount

metadata:

name: admin-user

namespace: kubernetes-dashboard

---

kind: ClusterRoleBinding

apiVersion: rbac.authorization.k8s.io/v1

metadata:

name: admin-user

roleRef:

kind: ClusterRole

name: cluster-admin

apiGroup: rbac.authorization.k8s.io

subjects:

- kind: ServiceAccount

name: admin-user

namespace: kubernetes-dashboard

[root@k8s-master ~]# kubectl apply -f k8s-dashboard-user.yaml

serviceaccount/admin-user created

clusterrolebinding.rbac.authorization.k8s.io/admin-user created

[root@k8s-master ~]# kubectl -n kubernetes-dashboard create token admin-user

eyJhbGciOiJSUzI1NiIsImtpZCI6IkJYY2N0VjZOeWtwWHphN2tjUWhPMjFxV1UzZlBFRDRieXViYVhYQ2lORm8ifQ.eyJhdWQiOlsiaHR0cHM6Ly9rdWJlcm5ldGVzLmRlZmF1bHQuc3ZjLmNsdXN0ZXIubG9jYWwiXSwiZXhwIjoxNzA2ODk4OTQ0LCJpYXQiOjE3MDY4OTUzNDQsImlzcyI6Imh0dHBzOi8va3ViZXJuZXRlcy5kZWZhdWx0LnN2Yy5jbHVzdGVyLmxvY2FsIiwia3ViZXJuZXRlcy5pbyI6eyJuYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsInNlcnZpY2VhY2NvdW50Ijp7Im5hbWUiOiJhZG1pbi11c2VyIiwidWlkIjoiNDY2ZDM1YzktYTFlZi00ZTg0LTg5NjAtNTM1YjA5YmM1ZTIwIn19LCJuYmYiOjE3MDY4OTUzNDQsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDprdWJlcm5ldGVzLWRhc2hib2FyZDphZG1pbi11c2VyIn0.NZTiZJwW44XM5EoXmFHDhVWphNksayRH7tJ4kGuKnqku-uOVEXZuSYGnJfc4tL39MgPNnJKNqqV7GXRHg8SOJ-emZVRguwAP2O2bDKskJ0J40XpuOSYO3soYEH8bisBVrcvBtdGnYpjcC7T8JLeoR07X1nIFmnHKf7QMTdUdE83apdjIhC0AS0A3owqafOJmeWUnPfv_Tmw5hu-fPHaJmwO508YNMIWmGCcqrJwckxGxuGnwVdWzX3OlYY9UtAYgq9kRp5bVGJhTzJbIYLYTnmpbtrVvqsH1JXC-dl_dio-boGxWPo-3dqRHaLIi1vWfantcWo6RSOAE3e3HTApUtw
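The token is a JWT, and its middle segment is base64url-encoded JSON carrying `iat` and `exp` claims. In the token above they are 3600 seconds apart, so it stops working after an hour (`kubectl create token` accepts `--duration` to request a longer lifetime). A minimal sketch for inspecting the claims; `jwt_payload` is a hypothetical helper, and it decodes only, without verifying the signature.

```shell
# jwt_payload TOKEN (hypothetical helper): print the decoded JSON payload of a JWT.
jwt_payload() {
    # Take the second dot-separated segment and map base64url to standard base64.
    _seg=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
    # JWTs strip the trailing "=" padding; restore it before decoding.
    case $(( ${#_seg} % 4 )) in
        2) _seg="$_seg==" ;;
        3) _seg="$_seg=" ;;
    esac
    printf '%s' "$_seg" | base64 -d
}

# Demo: build a throwaway token-shaped string locally and decode it again.
demo_payload=$(printf '%s' '{"iat":1706895344,"exp":1706898944}' | base64 | tr -d '=\n' | tr '/+' '_-')
jwt_payload "header.$demo_payload.signature"
echo
# On the master: jwt_payload "$(kubectl -n kubernetes-dashboard create token admin-user)"
```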

11. Extras

##kubectl command auto-completion, to speed up operations work

[root@k8s-master ~]# yum -y install bash-completion #install the bash completion package

[root@k8s-master ~]# source /usr/share/bash-completion/bash_completion #load it

[root@k8s-master ~]# source <(kubectl completion bash) #effective for the current session only

[root@k8s-master ~]# echo "source <(kubectl completion bash)" >> ~/.bashrc #make it permanent for the current user
