Theory

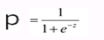

- Logistic regression: predicts a probability between 0 and 1 for the outcome.

- In logistic regression, the log-odds are a linear function of X.

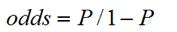

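- Odds: the ratio of the probability that an event occurs to the probability that it does not occur.

- For a 0-1 event, if outcome 1 occurs with probability P, then outcome 0 occurs with probability 1-P.

- Odds of outcome 1: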

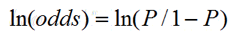

- Log-odds:

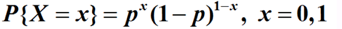

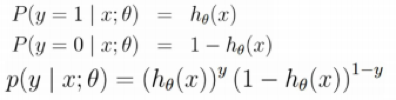
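As a quick numeric illustration of the two definitions above, here is a minimal sketch. The helper names `odds` and `log_odds` are made up for this example and do not appear in the original code.

```python
import math

def odds(p):
    """Odds of an event with probability p (requires 0 <= p < 1)."""
    return p / (1.0 - p)

def log_odds(p):
    """Natural log of the odds, also called the logit (requires 0 < p < 1)."""
    return math.log(odds(p))

# The log-odds are negative below P = 0.5, zero at P = 0.5, and positive above it.
for p in (0.2, 0.5, 0.8):
    print(f"P={p:.1f}  odds={odds(p):.3f}  log-odds={log_odds(p):+.3f}")
```

The probability is confined to (0, 1), but the log-odds range over the whole real line, which is what makes it reasonable to model them with a linear function of X.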

- 根据概率公式:

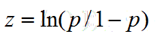

- 通过对数几率:

推导出:

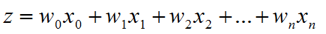

根据线性关系:

W为回归系数;

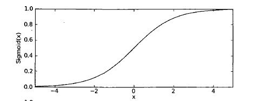

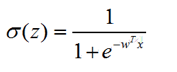
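The step from the log-odds to the sigmoid can be written out explicitly. This is the standard derivation, and it assumes the figures use P for the probability of class 1 and w for the coefficient vector:

```latex
\begin{aligned}
\ln\frac{P}{1-P} &= w^{T}x \\
\frac{P}{1-P} &= e^{w^{T}x} \\
P &= \frac{e^{w^{T}x}}{1+e^{w^{T}x}} = \frac{1}{1+e^{-w^{T}x}}
\end{aligned}
```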

The sigmoid function corresponding to this function is:

How is w found here? (For comparison, in linear regression w is solved with gradient descent.)

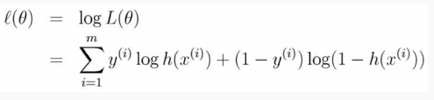

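Use maximum likelihood estimation (which pairs naturally with gradient ascent).

A brief explanation of maximum likelihood estimation:

Probability function:

Log-likelihood: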

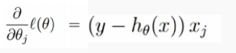
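For reference, the usual textbook form of these quantities is sketched below. It is assumed, not confirmed, that the figures use the same notation; here m is the number of training samples and h_w(x) is the sigmoid output.

```latex
\begin{aligned}
h_w(x) &= \frac{1}{1+e^{-w^{T}x}} \\
P(y \mid x; w) &= h_w(x)^{y}\,\bigl(1-h_w(x)\bigr)^{1-y}, \qquad y \in \{0,1\} \\
L(w) &= \prod_{i=1}^{m} h_w(x_i)^{y_i}\,\bigl(1-h_w(x_i)\bigr)^{1-y_i} \\
\ell(w) &= \ln L(w) = \sum_{i=1}^{m}\Bigl[\,y_i \ln h_w(x_i) + (1-y_i)\ln\bigl(1-h_w(x_i)\bigr)\Bigr]
\end{aligned}
```

Maximizing the log-likelihood gives the same w as maximizing the likelihood itself, and the sum is easier to differentiate than the product.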

Gradient:

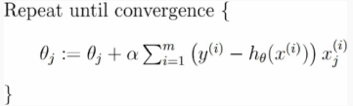
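One way to gain confidence in the gradient is to compare it with a finite-difference approximation. The sketch below assumes the standard result that the gradient of the log-likelihood is X^T (y - h). The data is randomly generated for illustration only, and the function names are hypothetical.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def log_likelihood(w, X, y):
    h = sigmoid(X @ w)
    return np.sum(y * np.log(h) + (1 - y) * np.log(1 - h))

def gradient(w, X, y):
    # Analytic gradient of the log-likelihood: X^T (y - h)
    return X.T @ (y - sigmoid(X @ w))

def numeric_gradient(w, X, y, eps=1e-6):
    # Central finite differences, one coordinate at a time
    g = np.zeros_like(w)
    for j in range(w.size):
        step = np.zeros_like(w)
        step[j] = eps
        g[j] = (log_likelihood(w + step, X, y)
                - log_likelihood(w - step, X, y)) / (2 * eps)
    return g

rng = np.random.default_rng(0)
X = np.hstack([np.ones((20, 1)), rng.normal(size=(20, 2))])  # made-up samples
y = rng.integers(0, 2, size=20).astype(float)                # made-up labels
w = np.array([0.1, -0.2, 0.3])

# Prints whether the analytic and numeric gradients agree within tolerance.
print(np.allclose(gradient(w, X, y), numeric_gradient(w, X, y), atol=1e-5))
```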

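Pseudocode for gradient ascent: repeat for a fixed number of iterations, θ := θ + α · ∇ℓ(θ)

where θ is the vector of regression coefficients (the θ here is `weights` in the code below) and α is the step size.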
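The update rule can be sketched with plain NumPy arrays as follows. This is a minimal illustration, not the author's code: `grad_ascent` is a hypothetical name, and the four-sample data set is made up so that the label is 1 whenever the feature is positive.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def grad_ascent(X, y, alpha=0.001, max_cycles=500):
    """Batch gradient ascent on the logistic log-likelihood."""
    theta = np.ones(X.shape[1])                  # initial coefficients
    for _ in range(max_cycles):
        h = sigmoid(X @ theta)                   # predicted probabilities
        theta = theta + alpha * X.T @ (y - h)    # theta := theta + alpha * gradient
    return theta

# Made-up toy data: first column is the constant 1.0 for the intercept.
X = np.array([[1.0, -2.0], [1.0, -1.0], [1.0, 1.0], [1.0, 2.0]])
y = np.array([0.0, 0.0, 1.0, 1.0])

theta = grad_ascent(X, y, alpha=0.1, max_cycles=1000)
print(theta)
print((sigmoid(X @ theta) > 0.5).astype(int))    # predicted labels per sample
```

The only difference from gradient descent is the sign: the step is added because the log-likelihood is being maximized rather than a loss being minimized.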

Code

    import numpy as np

    # import pysnooper


    # @pysnooper.snoop()
    def loadDataSet():
        dataMat = []
        labelMat = []
        # Each line of testSet.txt looks like '-0.017612 14.053064 0':
        # two feature values followed by the class label.
        with open('testSet.txt') as fr:  # the with-block closes the file automatically
            for line in fr:  # read the data one line at a time
                # strip() removes leading and trailing whitespace (including the newline);
                # split() with no arguments splits on any run of whitespace.
                lineArr = line.strip().split()  # e.g. ['-0.017612', '14.053064', '0']
                dataMat.append([1.0, float(lineArr[0]), float(lineArr[1])])  # 1.0 is the intercept term
                labelMat.append(int(lineArr[2]))  # class label (0 or 1)
        # dataMat  -> [[1.0, -0.017612, 14.053064], [1.0, -1.395634, 4.662541], ...] (100 rows)
        # labelMat -> [0, 1, 0, 0, 0, 1, 0, 1, ...] (100 labels)
        return dataMat, labelMat


    dataMat, labelMat = loadDataSet()

    # @pysnooper.snoop()
    # Sigmoid function: maps the input to a probability in (0, 1),
    # which is then used to decide the class.
    def sigmoid(inX):  # inX is w^T x, where w and x are both vectors
        return 1.0 / (1 + np.exp(-inX))

    # @pysnooper.snoop()
    # Gradient ascent
    def gradAscent(dataMatIn, classLabels):
        # dataMatIn is the dataMat list returned by loadDataSet():
        # [[1.0, -0.017612, 14.053064], [1.0, -1.395634, 4.662541], ...] (100 rows)
        # np.asmatrix converts the list to a 100x3 matrix with the same values
        # (it replaces np.mat, which is no longer available in NumPy 2.x).
        dataMat = np.asmatrix(dataMatIn)
        labelMat = np.asmatrix(classLabels).transpose()  # turn the 1x100 row into a 100x1 column
        m, n = np.shape(dataMat)  # number of rows and columns: 100 and 3
        alpha = 0.001  # step size (learning rate)
        maxCycles = 500  # number of iterations
        weights = np.ones((n, 1))  # initial weights: a 3x1 column of ones
        for k in range(maxCycles):
            h = sigmoid(dataMat * weights)  # (100x3) * (3x1) -> 100x1 predicted probabilities
            # True labels minus predictions: a 100x1 vector of residuals (not an error rate).
            error = labelMat - h
            # Gradient ascent update: the same form as gradient descent, with + instead of -.
            # dataMat.transpose() is 3x100, so the gradient and the weights are both 3x1.
            weights = weights + alpha * dataMat.transpose() * error
        return weights  # the regression coefficients


    weights = gradAscent(dataMat, labelMat)

    # @pysnooper.snoop()
    def result():
        # Second row of the data set, reused here as a test sample.
        dataMat2 = np.array([1.0, -1.395634, 4.662541])
        # print(dataMat2)  # [ 1.       -1.395634  4.662541]
        # weights comes from gradAscent above, i.e. the coefficients after gradient ascent.
        # The product is a 1x1 matrix, so .item() extracts the scalar probability.
        prob = sigmoid(dataMat2 * weights).item()
        if prob > 0.5:  # 0.5 is the decision threshold
            return 1.0
        else:
            return 0


    print(result())
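`result()` classifies a single hard-coded sample. A possible extension, sketched below, labels every row at once and reports the fraction classified correctly. The function name `predict`, the weights `w_demo`, and the three samples are all hypothetical and chosen only so the example runs without `testSet.txt`.

```python
import numpy as np

def predict(X, weights, threshold=0.5):
    """Return 0/1 labels for every row of X (first column is the constant 1.0)."""
    # np.asarray(...).ravel() accepts a 1-D array or a column matrix such as
    # the one returned by gradAscent.
    scores = np.asarray(X) @ np.asarray(weights).ravel()   # w^T x for each sample
    probs = 1.0 / (1.0 + np.exp(-scores))
    return (probs > threshold).astype(int)

# Hypothetical weights and samples, for illustration only.
w_demo = np.array([0.0, 1.0, -1.0])            # predicts 1 when x1 > x2
X_demo = np.array([[1.0, 2.0, 0.5],
                   [1.0, -1.0, 3.0],
                   [1.0, 0.0, -2.0]])
y_demo = np.array([1, 0, 1])

labels = predict(X_demo, w_demo)
print(labels, "accuracy:", np.mean(labels == y_demo))
```

Because the sigmoid exceeds 0.5 exactly when its input is positive, the default threshold amounts to checking the sign of w^T x.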
