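This post only records the problems I ran into while following the original author's guide; for most steps, simply follow the original author's document.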

Original article: https://blog.csdn.net/qq_35745940/article/details/131149881

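The main problems I ran into:

1. I did not add the JDK to the image when building it, so the FE would not start.

2. Because I was unfamiliar with docker-compose, I did not know at first where the .env file belongs. It goes in the same directory as the yaml file, which in my case is /data/starrocks.

3. docker-compose.yaml maps the configuration files into the corresponding directories inside the container. I got the paths wrong, so creating the containers failed.

If this infringes on your rights, please contact me by private message.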

I. Preparation

Deploy Docker

# Install the yum-config-manager tool

yum -y install yum-utils

# The Aliyun yum mirror is recommended (the official Docker repo is left commented out below)

#yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo

yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

# Install docker-ce

yum install -y docker-ce

# Start Docker now and enable it at boot

systemctl enable --now docker

docker --version

Deploy docker-compose

curl -SL https://github.com/docker/compose/releases/download/v2.16.0/docker-compose-linux-x86_64 -o /usr/local/bin/docker-compose

chmod +x /usr/local/bin/docker-compose

docker-compose --version

Create the network

# Create it. Do not name it hadoop_network, otherwise the hs2 service will have problems at startup.

docker network create hadoop-network

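# List networks

docker network ls

II. StarRocks orchestrated deployment

Download the StarRocks package

Download the version you want from the official site; I used 3.2.6.

https://www.starrocks.io/download/community

Configuration files

Note that you can edit these configuration files directly in the directory extracted from the package; they are packaged into the image later.

fe.conf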

#####################################################################

## The uppercase properties are read and exported by bin/start_fe.sh.

## To see all Frontend configurations,

## see fe/src/com/starrocks/common/Config.java

# the output dir of stderr/stdout/gc

LOG_DIR = ${STARROCKS_HOME}/log

# Set the JDK location here; if it is wrong, the container will not start

JAVA_HOME = /data/starrocks/jdk1.8.0_131

DATE = "$(date +%Y%m%d-%H%M%S)"

# Adjust the JVM heap size to your host; I changed it to 1 GB

JAVA_OPTS="-Dlog4j2.formatMsgNoLookups=true -Xmx1024m -XX:+UseG1GC -XX:+PrintGCDetails -XX:+PrintGCDateStamps -Xloggc:${LOG_DIR}/fe.gc.log.$DATE -XX:+PrintConcurrentLocks -Djava.security.policy=${STARROCKS_HOME}/conf/udf_security.policy"

# For jdk 11+, this JAVA_OPTS will be used as default JVM options

JAVA_OPTS_FOR_JDK_11="-Dlog4j2.formatMsgNoLookups=true -Xmx1024m -XX:+UseG1GC -Xlog:gc*:${LOG_DIR}/fe.gc.log.$DATE:time -Djava.security.policy=${STARROCKS_HOME}/conf/udf_security.policy"

##

## the lowercase properties are read by main program.

##

# DEBUG, INFO, WARN, ERROR, FATAL

sys_log_level = INFO

# store metadata, create it if it is not exist.

# Default value is ${STARROCKS_HOME}/meta

# meta_dir = ${STARROCKS_HOME}/meta

http_port = 8030

rpc_port = 9020

query_port = 9030

edit_log_port = 9010

mysql_service_nio_enabled = true
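
Because a JAVA_HOME that does not match the JDK path inside the image keeps the FE from starting, it may help to read the value back out of fe.conf before building. The following is a minimal sketch and not part of the original setup: the helper name fe_java_home is hypothetical, and it assumes fe.conf uses the `JAVA_HOME = /path` form shown above.

```shell
#!/usr/bin/env sh
# Hypothetical helper: print the JAVA_HOME value configured in an fe.conf file.
# Assumes the "JAVA_HOME = /path" form; commented-out lines are ignored and
# trailing whitespace is stripped. If the key appears twice, the last one wins.
fe_java_home() {
    sed -n 's/^[[:space:]]*JAVA_HOME[[:space:]]*=[[:space:]]*\(.*[^[:space:]]\)[[:space:]]*$/\1/p' "$1" \
        | tail -n 1
}
```

One possible use is to compare the result with the directory the JDK tarball is expected to unpack to, for example `[ "$(fe_java_home fe.conf)" = "/data/starrocks/jdk1.8.0_131" ] || echo "JAVA_HOME mismatch"`, where the path to fe.conf depends on where you edited it.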

be.conf (the original author modified it; I left it unchanged)

# specific language governing permissions and limitations

# under the License.

# INFO, WARNING, ERROR, FATAL

sys_log_level = INFO

# ports for admin, web, heartbeat service

be_port = 9060

be_http_port = 8040

heartbeat_service_port = 9050

brpc_port = 8060

starlet_port = 9070

# Enable jaeger tracing by setting jaeger_endpoint

# jaeger_endpoint = localhost:6831

# Choose one if there are more than one ip except loopback address.

# Note that there should at most one ip match this list.

# If no ip match this rule, will choose one randomly.

# use CIDR format, e.g. 10.10.10.0/24

# Default value is empty.

# priority_networks = 10.10.10.0/24;192.168.0.0/16

# data root path, separate by ';'

# you can specify the storage medium of each root path, HDD or SSD, seperate by ','

# eg:

# storage_root_path = /data1,medium:HDD;/data2,medium:SSD;/data3

# /data1, HDD;

# /data2, SSD;

# /data3, HDD(default);

#

# Default value is ${STARROCKS_HOME}/storage, you should create it by hand.

# storage_root_path = ${STARROCKS_HOME}/storage

# Advanced configurations

# sys_log_dir = ${STARROCKS_HOME}/log

# sys_log_roll_mode = SIZE-MB-1024

# sys_log_roll_num = 10

# sys_log_verbose_modules = *

# log_buffer_level = -1

# JVM options for be

# eg:

# JAVA_OPTS="-Djava.security.krb5.conf=/etc/krb5.conf"

# For jdk 9+, this JAVA_OPTS will be used as default JVM options

# JAVA_OPTS_FOR_JDK_9="-Djava.security.krb5.conf=/etc/krb5.conf"

conf/apache_hdfs_broker.conf (this file needs no changes)

broker_ipc_port=8000

client_expire_seconds=300

Startup script bootstrap.sh

#!/usr/bin/env sh

# Block until host $1 accepts TCP connections on port $2.
wait_for() {
    echo "Waiting for $1 to listen on $2..."
    while ! nc -z "$1" "$2"; do echo waiting...; sleep 1; done
}

# Start one StarRocks node.
#   $1: node type (fe, be or broker)
#   $2: FE leader host (leave empty for the first FE)
#   $3: FE query port
startStarRocks() {
    node_type="$1"
    fe_leader="$2"
    fe_query_port="$3"
    fqdn=$(hostname)

    if [ "$node_type" = "fe" ]; then
        if [ "$fe_leader" ]; then
            # Follower FE: register with the leader, then start with --helper.
            wait_for "$fe_leader" "$fe_query_port"
            mysql -h "$fe_leader" -P"${fe_query_port}" -uroot -e "ALTER SYSTEM ADD FOLLOWER \"${fqdn}:9010\""
            "${StarRocks_HOME}/fe/bin/start_fe.sh" --helper "${fe_leader}:9010" --host_type FQDN
        else
            # First FE: start on its own.
            "${StarRocks_HOME}/fe/bin/start_fe.sh" --host_type FQDN
        fi
        #tail -f ${StarRocks_HOME}/fe/log/fe.log
    elif [ "$node_type" = "be" ]; then
        wait_for "$fe_leader" "$fe_query_port"
        mysql -h "${fe_leader}" -P"${fe_query_port}" -uroot -e "ALTER SYSTEM ADD BACKEND \"${fqdn}:9050\""
        "${StarRocks_HOME}/be/bin/start_be.sh"
        tail -f "${StarRocks_HOME}/be/log/be.log"
    elif [ "$node_type" = "broker" ]; then
        wait_for "$fe_leader" "$fe_query_port"
        mysql -h "${fe_leader}" -P"${fe_query_port}" -uroot -e "ALTER SYSTEM ADD BROKER ${fqdn} \"${fqdn}:8000\""
        "${StarRocks_HOME}/apache_hdfs_broker/bin/start_broker.sh"
        tail -f "${StarRocks_HOME}/apache_hdfs_broker/log/apache_hdfs_broker.log"
    fi
}

startStarRocks "$@"
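
The wait_for loop in bootstrap.sh retries forever, so a container whose FE leader never comes up just keeps waiting. As an optional variation, here is a minimal sketch of a bounded retry. The name wait_until, the attempt limit and the delay are assumptions of mine and are not in the original script.

```shell
#!/usr/bin/env sh
# Hypothetical bounded retry: run a probe command until it succeeds or the
# attempt limit is reached.
# Usage: wait_until MAX_ATTEMPTS DELAY_SECONDS COMMAND [ARGS...]
wait_until() {
    max_attempts="$1"
    delay="$2"
    shift 2
    attempt=1
    while ! "$@"; do
        if [ "$attempt" -ge "$max_attempts" ]; then
            echo "gave up after ${attempt} attempts: $*" >&2
            return 1
        fi
        attempt=$((attempt + 1))
        sleep "$delay"
    done
    return 0
}
```

Inside bootstrap.sh this could stand in for wait_for, for example `wait_until 60 1 nc -z "$fe_leader" "$fe_query_port" || exit 1`, so that the container exits with an error instead of hanging.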

构建镜像 Dockerfile

FROM registry.cn-hangzhou.aliyuncs.com/bigdata_cloudnative/centos-jdk:7.7.1908

# install client mysql

RUN yum -y install mysql

# Add the StarRocks package

ENV StarRocks_VERSION 3.2.6

RUN sudo mkdir -p /data/starrocks/StarRocks-${StarRocks_VERSION}

ADD StarRocks-${StarRocks_VERSION}.tar.gz /data/starrocks/

ENV StarRocks_HOME /data/starrocks/StarRocks

RUN ln -s /data/starrocks/StarRocks-${StarRocks_VERSION} $StarRocks_HOME

# Create the storage directories

RUN sudo mkdir -p /data/starrocks/StarRocks/fe/meta

RUN sudo mkdir -p /data/starrocks/StarRocks/be/storage

# copy bootstrap.sh

COPY bootstrap.sh /data/starrocks/StarRocks/

RUN chmod +x /data/starrocks/StarRocks/bootstrap.sh

# Add Java. This differs from the original author: without the JDK in the image the FE cannot start. The path must match the one configured in fe.conf.

ADD jdk-8u131-linux-x64.tar.gz /data/starrocks/

#RUN chown -R hadoop:hadoop /opt/apache

WORKDIR $StarRocks_HOME

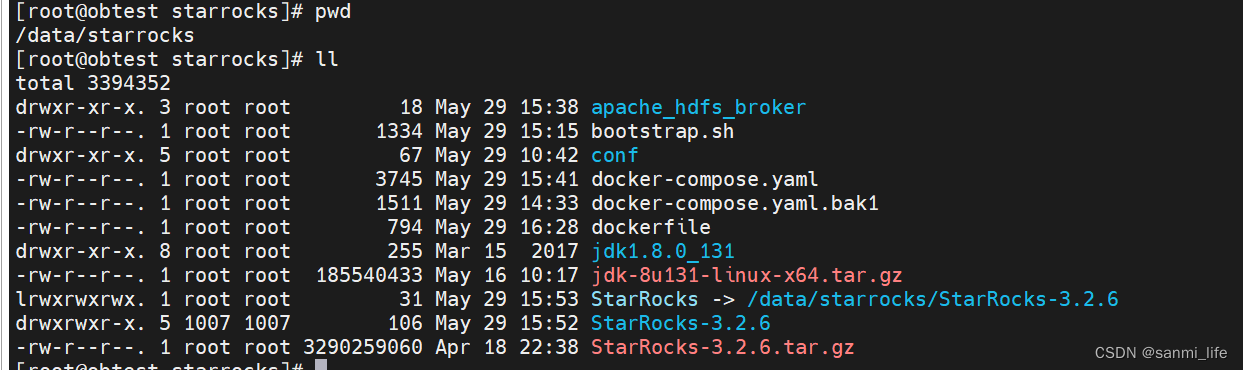

Before building the image, confirm that all your files are in place; all of mine are in /data/starrocks.
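
This check can be scripted. The sketch below is not part of the original guide: the function name check_build_context is hypothetical, and the file list is simply what the Dockerfile above references with ADD and COPY, plus the Dockerfile itself.

```shell
#!/usr/bin/env sh
# Hypothetical pre-build check: report files missing from the build context.
# Usage: check_build_context DIR [STARROCKS_VERSION]
# Returns 0 when every expected file exists, 1 otherwise.
check_build_context() {
    dir="$1"
    version="${2:-3.2.6}"
    missing=0
    for f in Dockerfile bootstrap.sh \
             "StarRocks-${version}.tar.gz" \
             jdk-8u131-linux-x64.tar.gz; do
        if [ ! -f "${dir}/${f}" ]; then
            echo "missing: ${dir}/${f}" >&2
            missing=1
        fi
    done
    return "$missing"
}
```

For example, `check_build_context /data/starrocks && docker build -t starrocks:3.2.6 . --no-cache` would only start the build when nothing is reported missing.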

Build the image

docker build -t starrocks:3.2.6 . --no-cache

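### Parameter notes

# -t: name (tag) of the image

# . : use the current directory, which contains the Dockerfile, as the build context

# -f: path to the Dockerfile

# --no-cache: build without using the cache

Orchestration: docker-compose.yaml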

version: '3'
services:
  starrocks-fe-1:
    image: starrocks:3.2.6
    user: "root:root"
    container_name: starrocks-fe-1
    hostname: starrocks-fe-1
    restart: always
    privileged: true
    env_file:
      - .env
    # I edited the conf files directly inside the extracted package, so the host paths
    # below had to change; otherwise creating the container fails with a file-not-found
    # error. The same applies to the services that follow.
    volumes:
      - ./StarRocks/fe/conf/fe.conf:${StarRocks_HOME}/fe/conf/fe.conf
    ports:
      - "${StarRocks_FE_HTTP_PORT}"
    expose:
      - "${StarRocks_FE_RPC_PORT}"
      - "${StarRocks_FE_QUERY_PORT}"
      - "${StarRocks_FE_EDIT_LOG_PORT}"
    command: ["sh","-c","/data/starrocks/StarRocks/bootstrap.sh fe"]
    networks:
      - hadoop-network
    healthcheck:
      test: ["CMD-SHELL", "netstat -tnlp|grep :${StarRocks_FE_HTTP_PORT} || exit 1"]
      interval: 10s
      timeout: 20s
      retries: 3

  starrocks-fe-2:
    image: starrocks:3.2.6
    user: "root:root"
    container_name: starrocks-fe-2
    hostname: starrocks-fe-2
    restart: always
    privileged: true
    env_file:
      - .env
    volumes:
      - ./StarRocks/fe/conf/fe.conf:${StarRocks_HOME}/fe/conf/fe.conf
    ports:
      - "${StarRocks_FE_HTTP_PORT}"
    expose:
      - "${StarRocks_FE_RPC_PORT}"
      - "${StarRocks_FE_QUERY_PORT}"
      - "${StarRocks_FE_EDIT_LOG_PORT}"
    command: ["sh","-c","/data/starrocks/StarRocks/bootstrap.sh fe starrocks-fe-1 ${StarRocks_FE_QUERY_PORT}"]
    networks:
      - hadoop-network
    healthcheck:
      test: ["CMD-SHELL", "netstat -tnlp|grep :${StarRocks_FE_HTTP_PORT} || exit 1"]
      interval: 10s
      timeout: 20s
      retries: 3

  starrocks-fe-3:
    image: starrocks:3.2.6
    user: "root:root"
    container_name: starrocks-fe-3
    hostname: starrocks-fe-3
    restart: always
    privileged: true
    env_file:
      - .env
    volumes:
      - ./StarRocks/fe/conf/fe.conf:${StarRocks_HOME}/fe/conf/fe.conf
    ports:
      - "${StarRocks_FE_HTTP_PORT}"
    expose:
      - "${StarRocks_FE_RPC_PORT}"
      - "${StarRocks_FE_QUERY_PORT}"
      - "${StarRocks_FE_EDIT_LOG_PORT}"
    command: ["sh","-c","/data/starrocks/StarRocks/bootstrap.sh fe starrocks-fe-1 ${StarRocks_FE_QUERY_PORT}"]
    networks:
      - hadoop-network
    healthcheck:
      test: ["CMD-SHELL", "netstat -tnlp|grep :${StarRocks_FE_HTTP_PORT} || exit 1"]
      interval: 10s
      timeout: 20s
      retries: 3

  starrocks-be:
    image: starrocks:3.2.6
    user: "root:root"
    restart: always
    privileged: true
    deploy:
      replicas: 3
    env_file:
      - .env
    volumes:
      - ./StarRocks/be/conf/be.conf:${StarRocks_HOME}/be/conf/be.conf
    ports:
      - "${StarRocks_BE_HTTP_PORT}"
    expose:
      - "${StarRocks_BE_PORT}"
      - "${StarRocks_BE_HEARTBEAT_SERVICE_PORT}"
      - "${StarRocks_BE_BRPC_PORT}"
    command: ["sh","-c","/data/starrocks/StarRocks/bootstrap.sh be starrocks-fe-1 ${StarRocks_FE_QUERY_PORT}"]
    networks:
      - hadoop-network
    healthcheck:
      test: ["CMD-SHELL", "netstat -tnlp|grep :${StarRocks_BE_HTTP_PORT} || exit 1"]
      interval: 10s
      timeout: 10s
      retries: 3

  starrocks-apache_hdfs_broker:
    image: starrocks:3.2.6
    user: "root:root"
    restart: always
    privileged: true
    deploy:
      replicas: 3
    env_file:
      - .env
    volumes:
      - ./StarRocks/apache_hdfs_broker/conf/apache_hdfs_broker.conf:${StarRocks_HOME}/apache_hdfs_broker/conf/apache_hdfs_broker.conf
    expose:
      - "${StarRocks_BROKER_IPC_PORT}"
    command: ["sh","-c","/data/starrocks/StarRocks/bootstrap.sh broker starrocks-fe-1 ${StarRocks_FE_QUERY_PORT}"]
    networks:
      - hadoop-network
    healthcheck:
      test: ["CMD-SHELL", "netstat -tnlp|grep :${StarRocks_BROKER_IPC_PORT} || exit 1"]
      interval: 10s
      timeout: 10s
      retries: 3

# Connect to the external network
networks:
  hadoop-network:
    external: true

.env file contents

Put .env in the same directory as docker-compose.yaml; just create it there.

StarRocks_HOME=/data/starrocks/StarRocks

StarRocks_FE_HTTP_PORT=8030

StarRocks_FE_RPC_PORT=9020

StarRocks_FE_QUERY_PORT=9030

StarRocks_FE_EDIT_LOG_PORT=9010

StarRocks_BE_HTTP_PORT=8040

StarRocks_BE_PORT=9060

StarRocks_BE_HEARTBEAT_SERVICE_PORT=9050

StarRocks_BE_BRPC_PORT=8060

StarRocks_BROKER_IPC_PORT=8000
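
Since docker-compose.yaml refers to these variables as ${...}, a variable that is missing from .env is easy to overlook. The sketch below lists such variables. It is my own addition under a few assumptions: the function name undefined_compose_vars is hypothetical, only the plain ${NAME} form is recognized, only the .env file is consulted (variables exported in the shell are not considered), and `grep -o` must be available.

```shell
#!/usr/bin/env sh
# Hypothetical check: print every ${VAR} referenced in a compose file that has
# no matching "VAR=" line in the .env file. Prints nothing when all are defined.
# Usage: undefined_compose_vars docker-compose.yaml .env
undefined_compose_vars() {
    compose_file="$1"
    env_file="$2"
    grep -o '\${[A-Za-z_][A-Za-z0-9_]*}' "$compose_file" \
        | tr -d '${}' \
        | sort -u \
        | while read -r name; do
              grep -q "^${name}=" "$env_file" || echo "$name"
          done
}
```

Run from the directory that holds both files, `undefined_compose_vars docker-compose.yaml .env` should print nothing for the files shown in this post.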

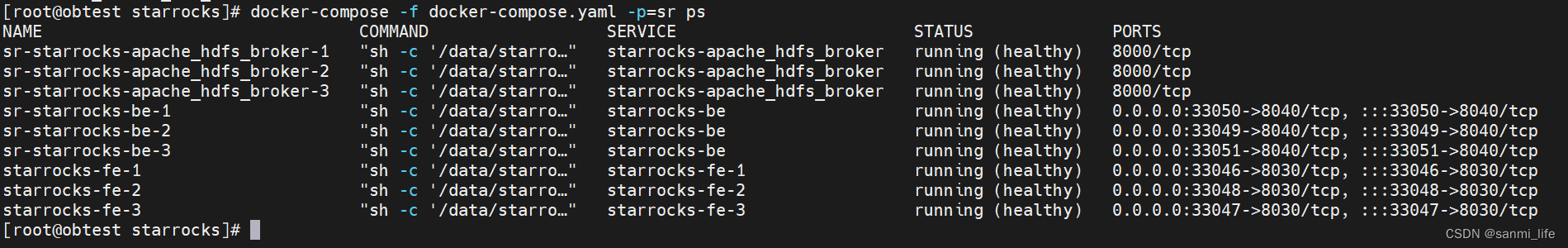

Start the deployment

# -p=sr: project name; the default project name is the current directory name

docker-compose -f docker-compose.yaml -p=sr up -d

# Check status

docker-compose -f docker-compose.yaml -p=sr ps

# Tear down

docker-compose -f docker-compose.yaml -p=sr down

That completes the deployment.

This post is only a study record, not original work, and is fairly rough.
