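Introduction

To run a MapReduce job with Oozie, put the jars the job needs into the lib folder of the job directory. The other files are the same as for a shell action; only their contents differ.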

Running Hadoop's official example: WordCount

The example jar is at hadoop-2.5.0-cdh5.3.6/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.5.0-cdh5.3.6.jar

The input file on HDFS has already been created.
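For reference, the input could be staged with something like the following. This is only a sketch: the local file name word.txt is an assumption, and only the HDFS path /wordcount/word.txt comes from the commands in this post.

```shell
# Assumption: LOCAL_FILE is a local text file to count words in (hypothetical name).
LOCAL_FILE="${LOCAL_FILE:-word.txt}"
INPUT_DIR="/wordcount"

# Create the HDFS input directory if needed, then upload the local file
# as /wordcount/word.txt, overwriting an older copy (-f).
stage_input() {
  hadoop fs -mkdir -p "$INPUT_DIR" &&
    hadoop fs -put -f "$LOCAL_FILE" "$INPUT_DIR/word.txt"
}

# Run it on a machine with the Hadoop client configured:
# stage_input
```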

Run the job:

root@master:/opt/module/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/mapreduce# hadoop jar hadoop-mapreduce-examples-2.5.0-cdh5.3.6.jar wordcount /wordcount/word.txt /wordcount/out

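The job ran successfully. Take a look at its configuration:

Note the two properties mapred.reducer.new-api and mapreduce.job.reduce.class. mapred.reducer.new-api indicates that the new MapReduce API is used, and mapreduce.job.reduce.class names the reducer class. The Oozie workflow below sets the same property values.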
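To pull these properties out of a saved copy of the job's configuration XML, a small filter along these lines may help. It is a sketch under assumptions: the file name job_conf.xml is hypothetical, and the pattern expects each property's name and value elements to sit next to each other on one line, so it would need adjusting for a differently formatted file.

```shell
# Print selected MapReduce properties as name=value lines.
# $1: path to a job configuration XML file (hypothetical local copy).
show_mr_props() {
  grep -o -E '<name>(mapred\.(mapper|reducer)\.new-api|mapreduce\.job\.(map|reduce)\.class)</name><value>[^<]*</value>' "$1" |
    sed -E -e 's#</name><value>#=#' -e 's#</?(name|value)>##g'
}

# Usage, assuming the configuration was saved as job_conf.xml:
# show_mr_props job_conf.xml
```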

Running MapReduce with Oozie

job.properties

nameNode=hdfs://master:8020

jobTracker=master:8032

queueName=default

examplesRoot=oozie-apps

oozie.wf.application.path=${nameNode}/user/${user.name}/${examplesRoot}/map-reduce/workflow.xml

outputDir=map-reduce
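Oozie substitutes the ${...} references before it looks up the application. The same substitution can be mimicked in the shell to see where workflow.xml is expected to live. In this sketch, USER_NAME stands in for ${user.name} and is assumed to be root, matching the /user/root upload path used later in this post.

```shell
# Values copied from job.properties.
NAME_NODE="hdfs://master:8020"
EXAMPLES_ROOT="oozie-apps"
# Assumption: the job is submitted as root, so ${user.name} resolves to root.
USER_NAME="root"

# Same shape as oozie.wf.application.path in job.properties.
APP_PATH="$NAME_NODE/user/$USER_NAME/$EXAMPLES_ROOT/map-reduce/workflow.xml"
echo "$APP_PATH"
# prints hdfs://master:8020/user/root/oozie-apps/map-reduce/workflow.xml
```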

workflow.xml

<workflow-app xmlns="uri:oozie:workflow:0.2" name="map-reduce-wf">
  <start to="mr-node"/>
  <action name="mr-node">
    <map-reduce>
      <job-tracker>${jobTracker}</job-tracker>
      <name-node>${nameNode}</name-node>
      <!-- Preparation step: delete the output directory first -->
      <prepare>
        <delete path="${nameNode}/wordcount/out"/>
      </prepare>
      <!-- Job configuration -->
      <configuration>
        <property>
          <name>mapred.job.queue.name</name>
          <value>${queueName}</value>
        </property>
        <!-- Use the new MapReduce API when Oozie schedules the job -->
        <property>
          <name>mapred.mapper.new-api</name>
          <value>true</value>
        </property>
        <property>
          <name>mapred.reducer.new-api</name>
          <value>true</value>
        </property>
        <!-- Output key class of the job -->
        <property>
          <name>mapreduce.job.output.key.class</name>
          <value>org.apache.hadoop.io.Text</value>
        </property>
        <!-- Output value class of the job -->
        <property>
          <name>mapreduce.job.output.value.class</name>
          <value>org.apache.hadoop.io.IntWritable</value>
        </property>
        <!-- Input path -->
        <property>
          <name>mapred.input.dir</name>
          <value>/wordcount/word.txt</value>
        </property>
        <!-- Output path -->
        <property>
          <name>mapred.output.dir</name>
          <value>/wordcount/out</value>
        </property>
        <!-- Mapper class -->
        <property>
          <name>mapreduce.job.map.class</name>
          <value>org.apache.hadoop.examples.WordCount$TokenizerMapper</value>
        </property>
        <!-- Reducer class -->
        <property>
          <name>mapreduce.job.reduce.class</name>
          <value>org.apache.hadoop.examples.WordCount$IntSumReducer</value>
        </property>
        <!-- Number of map tasks -->
        <property>
          <name>mapred.map.tasks</name>
          <value>1</value>
        </property>
      </configuration>
    </map-reduce>
    <ok to="end"/>
    <error to="fail"/>
  </action>
  <kill name="fail">
    <message>Map/Reduce failed, error message[${wf:errorMessage(wf:lastErrorNode())}]</message>
  </kill>
  <end name="end"/>
</workflow-app>

Upload the job directory to HDFS

root@master:/opt/module/oozie-4.0.0-cdh5.3.6/oozie-apps# hadoop fs -put map-reduce/ /user/root/oozie-apps

The job jar and any dependency jars must all be placed in the lib directory.

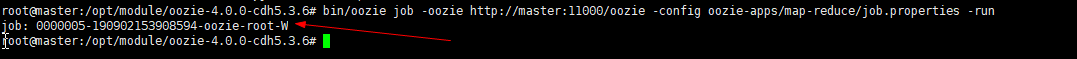
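Before the upload above, the local map-reduce directory could be assembled roughly as follows. This is a sketch, not the exact steps used here: it assumes it is run from the oozie-apps directory, and HADOOP_HOME is a hypothetical variable defaulting to the Hadoop install path seen in the earlier prompt.

```shell
# Assumption: HADOOP_HOME points at the Hadoop install used in this post.
HADOOP_HOME="${HADOOP_HOME:-/opt/module/cdh/hadoop-2.5.0-cdh5.3.6}"
EXAMPLES_JAR="$HADOOP_HOME/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.5.0-cdh5.3.6.jar"
APP_DIR="map-reduce"

# The job directory holds job.properties, workflow.xml and lib/.
mkdir -p "$APP_DIR/lib"

# Copy the jar containing the Mapper and Reducer classes into lib/.
if [ -f "$EXAMPLES_JAR" ]; then
  cp "$EXAMPLES_JAR" "$APP_DIR/lib/"
else
  echo "examples jar not found: $EXAMPLES_JAR" >&2
fi

# Show the resulting layout.
find "$APP_DIR" | sort
```

job.properties and workflow.xml from the sections above go directly into map-reduce/, next to lib/.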

Submit the Oozie job

root@master:/opt/module/oozie-4.0.0-cdh5.3.6# bin/oozie job -oozie http://master:11000/oozie -config oozie-apps/map-reduce/job.properties -run

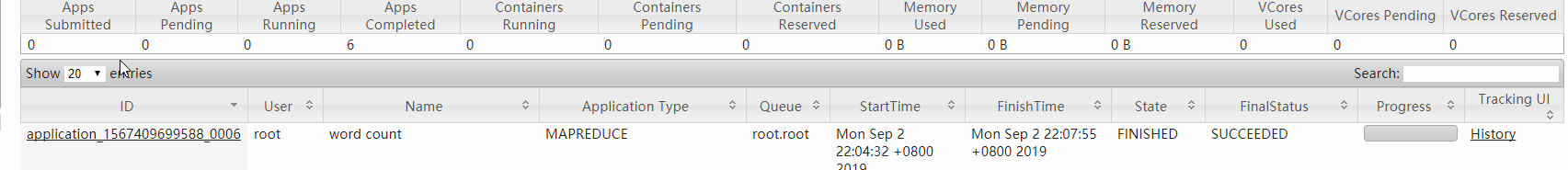
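The submit command prints a line of the form "job: <id>". A hedged sketch of capturing that id and asking the server for the workflow status follows; the function names are made up for illustration, and the commands are meant to be run from the Oozie install directory like the submit command above.

```shell
OOZIE_URL="http://master:11000/oozie"

# Pull the id out of the "job: <id>" line printed by `oozie job ... -run`.
extract_job_id() {
  sed -n 's/^job: *//p'
}

# Submit the workflow, remember its id, then print the workflow status.
submit_and_check() {
  job_id=$(bin/oozie job -oozie "$OOZIE_URL" \
    -config oozie-apps/map-reduce/job.properties -run | extract_job_id)
  echo "submitted: $job_id"
  bin/oozie job -oozie "$OOZIE_URL" -info "$job_id"
}

# Usage, from the Oozie install directory:
# submit_and_check
```

If the action fails, `bin/oozie job -oozie "$OOZIE_URL" -log <id>` with the same id shows the workflow log.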

Screenshot of the Oozie run:

Screenshot of HDFS:

Check the result:
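The output can also be read from the command line. A minimal sketch, assuming the Hadoop client is configured on the machine and that the reducer output files follow the usual part-r-* naming of the new API:

```shell
OUT_DIR="/wordcount/out"

show_result() {
  # List the output directory; a successful job normally leaves a _SUCCESS marker.
  hadoop fs -ls "$OUT_DIR"
  # Print the reducer output; the glob is quoted so that HDFS expands it.
  hadoop fs -cat "$OUT_DIR/part-r-*"
}

# Usage:
# show_result
```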
