I. Single-node deployment

1. Pull the images

```shell
docker pull wurstmeister/zookeeper
docker pull wurstmeister/kafka
```

2. Define docker-compose.yml

```yaml
version: '2'

services:
  zookeeper:
    image: wurstmeister/zookeeper
    volumes:
      - /data/zookeeper:/data
    ports:
      - "2181:2181"
    environment:
      ZOO_MY_ID: 1
      ZOO_PORT: 2181
      ZOO_SERVERS: server.1=zoo1:2888:3888
    restart: always

  kafka:
    image: wurstmeister/kafka
    ports:
      - "9092:9092"
    environment:
      # the host's IP address that clients use to reach the broker
      KAFKA_ADVERTISED_HOST_NAME: 192.168.0.120
      KAFKA_MESSAGE_MAX_BYTES: 2000000
      KAFKA_ZOOKEEPER_CONNECT: zookeeper:2181
    volumes:
      - /data/kafka-logs:/kafka
      - /var/run/docker.sock:/var/run/docker.sock
    restart: always
```

3. Start the services

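```shell
docker-compose up -d
```

4. Test

Enter the Kafka container:

```shell
docker exec -it kafka_kafka_1 bash
```

Locate the Kafka scripts, then `cd` into the directory that contains them (the commands below call them as `./`):

```shell
find / -type f -name "kafka-topics.sh"
```

5. Create a topic

```shell
./kafka-topics.sh --create --topic mytopic --partitions 2 --zookeeper 192.168.0.120:2181 --replication-factor 1
```

6. List all topics

```shell
./kafka-topics.sh --zookeeper 192.168.0.120:2181 --list
```

7. Publish messages

```shell
./kafka-console-producer.sh --broker-list 192.168.0.120:9092 --topic mytopic
```

8. Consume messages

```shell
./kafka-console-consumer.sh --bootstrap-server 192.168.0.120:9092 --topic mytopic --from-beginning
```

II. Cluster deployment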

1. Choose three hosts

| Host | ZooKeeper | Kafka |
|---|---|---|
| 192.168.0.100 | zook1 | kafka1 |
| 192.168.0.90 | zook2 | kafka2 |
| 192.168.0.120 | zook3 | kafka3 |

2. Deploy ZooKeeper
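The three `docker run` commands below are identical except for `ZOO_MY_ID` and the `ZOO_SERVERS` list. In that list, each node writes `0.0.0.0` in place of its own address. Below is a minimal bash sketch that derives this value from the host table. It assumes a hypothetical `HOSTS` array that lists the nodes in server-id order. The function name `zoo_servers` is also introduced here for illustration.

```shell
#!/usr/bin/env bash
# Sketch: build the ZOO_SERVERS value for one node of the 3-node ensemble.
# Assumption (hypothetical): HOSTS is in server-id order (server.1, server.2, ...),
# and the local node is written as 0.0.0.0, as in the commands in this guide.
HOSTS=(192.168.0.100 192.168.0.90 192.168.0.120)

zoo_servers() {
  local my_id=$1 servers="" i host
  for i in "${!HOSTS[@]}"; do
    host=${HOSTS[$i]}
    # server ids are 1-based, bash array indexes are 0-based
    if [ $((i + 1)) -eq "$my_id" ]; then
      host=0.0.0.0
    fi
    servers+="server.$((i + 1))=${host}:2888:3888 "
  done
  # drop the trailing space before printing
  printf '%s\n' "${servers% }"
}
```

Take 192.168.0.90 as an example. There, `-e ZOO_MY_ID=2 -e ZOO_SERVERS="$(zoo_servers 2)"` produces the same value as the hand-written command for that host.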

On 192.168.0.100:

Create the data directory, then start the container:

```shell
mkdir -p /data/zk-kafka
docker run -tid --name=zk-1 \
  --restart=always \
  --privileged=true \
  -p 2888:2888 \
  -p 3888:3888 \
  -p 2181:2181 \
  -e ZOO_MY_ID=1 \
  -e ZOO_SERVERS="server.1=0.0.0.0:2888:3888 server.2=192.168.0.90:2888:3888 server.3=192.168.0.120:2888:3888" \
  -v /data/zk-kafka/zookeeper/data:/data \
  zookeeper:3.4.9
```

On 192.168.0.90:

Create the data directory, then start the container:

```shell
mkdir -p /data/zk-kafka
docker run -tid --name=zk-2 \
  --restart=always \
  --privileged=true \
  -p 2888:2888 \
  -p 3888:3888 \
  -p 2181:2181 \
  -e ZOO_MY_ID=2 \
  -e ZOO_SERVERS="server.1=192.168.0.100:2888:3888 server.2=0.0.0.0:2888:3888 server.3=192.168.0.120:2888:3888" \
  -v /data/zk-kafka/zookeeper/data:/data \
  zookeeper:3.4.9
```

On 192.168.0.120:

创建目录

mkdir /data/zk-kafkadocker run -tid --name=zk-3 \

--restart=always \

--privileged=true \

-p 2888:2888 \

-p 3888:3888 \

-p 2181:2181 \

-e ZOO_MY_ID=3 \

-e ZOO_SERVERS=server.1="192.168.0.100:2888:3888 server.2=192.168.0.90:2888:3888 server.3=0.0.0.0:2888:3888" \

-v /data/zk-kafka/zookeeper/data:/data \

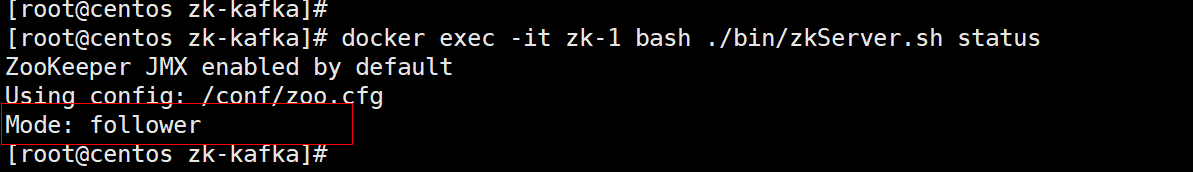

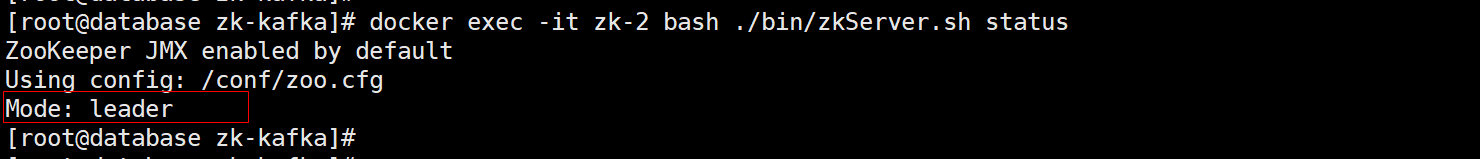

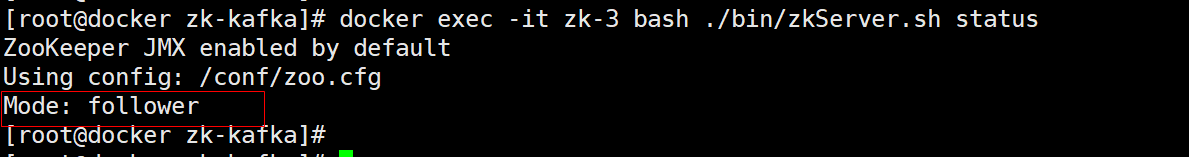

zookeeper:3.4.93.查看集群状态

Run this on each host, using that host's container name (`zk-1`, `zk-2` or `zk-3`):

```shell
docker exec -it zk-1 bash ./bin/zkServer.sh status
```
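In a healthy three-node ensemble, `zkServer.sh status` reports `Mode: leader` on exactly one node and `Mode: follower` on the others. A node that reports `Mode: standalone`, or an error, is not part of the ensemble. Below is a small bash sketch that checks this from the combined status output of all nodes. The function name `check_ensemble` is hypothetical, and so is the ssh-based collection shown in the comment. Neither is part of the original setup.

```shell
#!/usr/bin/env bash
# Sketch: judge ensemble health from the combined `zkServer.sh status` output.
# Input on stdin; only "Mode: leader" / "Mode: follower" lines are counted.
# Healthy = exactly one leader and (expected - 1) followers. Prints the counts.
check_ensemble() {
  local expected=${1:-3}
  awk -v expected="$expected" '
    $1 == "Mode:" && $2 == "leader"   { leaders++ }
    $1 == "Mode:" && $2 == "follower" { followers++ }
    END {
      printf "leaders=%d followers=%d\n", leaders, followers
      # exit status 0 only when the ensemble looks healthy
      exit !(leaders == 1 && leaders + followers == expected)
    }'
}

# Hypothetical collection, assuming passwordless ssh to every host:
# { ssh 192.168.0.100 docker exec zk-1 bash ./bin/zkServer.sh status
#   ssh 192.168.0.90  docker exec zk-2 bash ./bin/zkServer.sh status
#   ssh 192.168.0.120 docker exec zk-3 bash ./bin/zkServer.sh status; } 2>&1 | check_ensemble 3
```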

4. Deploy Kafka
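The three broker commands below differ only in the advertised address (`KAFKA_ADVERTISED_HOST_NAME` / `HOST_IP`) and in `KAFKA_BROKER_ID`. Below is a bash sketch that prints the command for a given broker id. It assumes broker N runs on the N-th entry (0-based) of a hypothetical `HOSTS` array. The function name `kafka_run_cmd` is also introduced here for illustration.

```shell
#!/usr/bin/env bash
# Sketch: print the `docker run` command for one Kafka broker.
# Assumption (hypothetical): broker id N runs on HOSTS[N], matching this guide.
HOSTS=(192.168.0.100 192.168.0.90 192.168.0.120)
ZK_CONNECT=192.168.0.100:2181,192.168.0.90:2181,192.168.0.120:2181

kafka_run_cmd() {
  local id=$1
  local ip=${HOSTS[$id]}
  if [ -z "$ip" ]; then
    echo "unknown broker id: $id" >&2
    return 1
  fi
  # unquoted heredoc: $ip/$id/$ZK_CONNECT expand, "\\" prints a single backslash
  cat <<EOF
docker run -itd --name=kafka \\
  --restart=always \\
  --net=host \\
  --privileged=true \\
  -v /etc/hosts:/etc/hosts \\
  -v /data/zk-kafka/kafka/data:/kafka \\
  -v /data/zk-kafka/kafka/logs:/opt/kafka/logs \\
  -e KAFKA_ADVERTISED_HOST_NAME=$ip \\
  -e HOST_IP=$ip \\
  -e KAFKA_ADVERTISED_PORT=9092 \\
  -e KAFKA_ZOOKEEPER_CONNECT=$ZK_CONNECT \\
  -e KAFKA_BROKER_ID=$id \\
  -e KAFKA_HEAP_OPTS="-Xmx2048M -Xms2048M" \\
  wurstmeister/kafka
EOF
}
```

On 192.168.0.90, for example, `kafka_run_cmd 1` prints the second command. Review the output, then run it with `eval "$(kafka_run_cmd 1)"`.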

On 192.168.0.100:

```shell
docker run -itd --name=kafka \
  --restart=always \
  --net=host \
  --privileged=true \
  -v /etc/hosts:/etc/hosts \
  -v /data/zk-kafka/kafka/data:/kafka \
  -v /data/zk-kafka/kafka/logs:/opt/kafka/logs \
  -e KAFKA_ADVERTISED_HOST_NAME=192.168.0.100 \
  -e HOST_IP=192.168.0.100 \
  -e KAFKA_ADVERTISED_PORT=9092 \
  -e KAFKA_ZOOKEEPER_CONNECT=192.168.0.100:2181,192.168.0.90:2181,192.168.0.120:2181 \
  -e KAFKA_BROKER_ID=0 \
  -e KAFKA_HEAP_OPTS="-Xmx2048M -Xms2048M" \
  wurstmeister/kafka
```

On 192.168.0.90:

```shell
docker run -itd --name=kafka \
  --restart=always \
  --net=host \
  --privileged=true \
  -v /etc/hosts:/etc/hosts \
  -v /data/zk-kafka/kafka/data:/kafka \
  -v /data/zk-kafka/kafka/logs:/opt/kafka/logs \
  -e KAFKA_ADVERTISED_HOST_NAME=192.168.0.90 \
  -e HOST_IP=192.168.0.90 \
  -e KAFKA_ADVERTISED_PORT=9092 \
  -e KAFKA_ZOOKEEPER_CONNECT=192.168.0.100:2181,192.168.0.90:2181,192.168.0.120:2181 \
  -e KAFKA_BROKER_ID=1 \
  -e KAFKA_HEAP_OPTS="-Xmx2048M -Xms2048M" \
  wurstmeister/kafka
```

On 192.168.0.120:

```shell
docker run -itd --name=kafka \
  --restart=always \
  --net=host \
  --privileged=true \
  -v /etc/hosts:/etc/hosts \
  -v /data/zk-kafka/kafka/data:/kafka \
  -v /data/zk-kafka/kafka/logs:/opt/kafka/logs \
  -e KAFKA_ADVERTISED_HOST_NAME=192.168.0.120 \
  -e HOST_IP=192.168.0.120 \
  -e KAFKA_ADVERTISED_PORT=9092 \
  -e KAFKA_ZOOKEEPER_CONNECT=192.168.0.100:2181,192.168.0.90:2181,192.168.0.120:2181 \
  -e KAFKA_BROKER_ID=2 \
  -e KAFKA_HEAP_OPTS="-Xmx2048M -Xms2048M" \
  wurstmeister/kafka
```

5. Create a topic

Run the following commands inside one of the Kafka containers (for example, after `docker exec -it kafka bash`):

```shell
bash /opt/kafka/bin/kafka-topics.sh --zookeeper 192.168.0.100:2181,192.168.0.90:2181,192.168.0.120:2181 --create --replication-factor 1 --partitions 2 --topic mytopic
```

6. List topics

```shell
cd /opt/kafka/bin/
./kafka-topics.sh --zookeeper 192.168.0.120:2181 --list
```

7. Send messages

```shell
bash /opt/kafka/bin/kafka-console-producer.sh --broker-list 192.168.0.100:9092,192.168.0.90:9092,192.168.0.120:9092 --topic mytopic
```

8. Consume messages

```shell
bash /opt/kafka/bin/kafka-console-consumer.sh --bootstrap-server 192.168.0.100:9092,192.168.0.90:9092,192.168.0.120:9092 --topic mytopic --from-beginning
```
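The comma-separated host lists used above (`--zookeeper`, `--broker-list`, `--bootstrap-server`) are the same three hosts joined with commas, on port 2181 or 9092. Below is a bash sketch that builds these lists, so that a host change needs only one edit. It again assumes a hypothetical `HOSTS` array. The function name `host_list` is also introduced here for illustration.

```shell
#!/usr/bin/env bash
# Sketch: join the cluster hosts into a "host:port,host:port,..." list.
# Assumption (hypothetical): HOSTS holds the three nodes from the host table.
HOSTS=(192.168.0.100 192.168.0.90 192.168.0.120)

host_list() {
  local port=$1 out="" h
  for h in "${HOSTS[@]}"; do
    # ${out:+,} adds a comma only after the first entry
    out+="${out:+,}${h}:${port}"
  done
  printf '%s\n' "$out"
}

# Example (inside a Kafka container, with these definitions loaded):
# bash /opt/kafka/bin/kafka-console-consumer.sh \
#   --bootstrap-server "$(host_list 9092)" --topic mytopic --from-beginning
```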
