I. Environment

1. Machines: three CentOS virtual machines

2. Linux version: [root@hadoop1 ~]# cat /etc/issue

CentOS release 6.5 (Final)

Kernel \r on an \m

3. Java version: [root@hadoop1 ~]# java -version

java version "1.7.0_67"

Java(TM) SE Runtime Environment (build 1.7.0_67-b01)

Java HotSpot(TM) 64-Bit Server VM (build 24.65-b04, mixed mode)

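4. Cluster nodes: one master (hadoop0) and two slaves (hadoop1, hadoop2). The slaves file and the DataNode/NodeManager start-up output below run workers on hadoop1 and hadoop2.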

II. Preparation

1. Install the Java JDK (covered in my previous article): http://blog.csdn.net/shandadadada/article/details/47817431

2. Set up passwordless SSH login: http://blog.csdn.net/shandadadada/article/details/49594075

3. Download Hadoop: http://mirror.bit.edu.cn/apache/hadoop/common/

III. Installing Hadoop

The downloaded archive is hadoop-2.5.2.tar.gz.

1. Extract it: tar -xzvf hadoop-2.5.2.tar.gz

2. The Hadoop directory: [root@hadoop0 hadoop]# ls

bin include libexec NOTICE.txt sbin

etc lib LICENSE.txt README.txt share
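Steps 1 and 2 can be wrapped in a reusable function. This is a minimal sketch, assuming (as the log paths /home/hadoop/logs/... later in this article suggest) that the extracted hadoop-2.5.2 directory ends up as /home/hadoop; the function name install_hadoop is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: unpack the Hadoop tarball and move it to a fixed install directory.
# Assumption: the install directory is /home/hadoop (inferred from the log
# paths later in the article); pass a different one as the 2nd argument.
install_hadoop() {
  local tarball="$1"                 # e.g. hadoop-2.5.2.tar.gz
  local dest="${2:-/home/hadoop}"    # target install directory
  local workdir top
  workdir="$(mktemp -d)"
  tar -xzf "$tarball" -C "$workdir" || { rm -rf "$workdir"; return 1; }
  # The archive unpacks into a single top-level directory (hadoop-2.5.2).
  top="$(find "$workdir" -mindepth 1 -maxdepth 1 -type d | head -n 1)"
  [ -n "$top" ] || { rm -rf "$workdir"; return 1; }
  # Never clobber an existing installation.
  if [ -e "$dest" ]; then
    echo "refusing to overwrite existing $dest" >&2
    rm -rf "$workdir"
    return 1
  fi
  mkdir -p "$(dirname "$dest")"
  mv "$top" "$dest"
  rm -rf "$workdir"
  echo "$dest"
}
```

Usage: install_hadoop hadoop-2.5.2.tar.gz prints /home/hadoop on success. Refusing to overwrite keeps an already configured etc/hadoop from being replaced by a fresh copy.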

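3. Before configuring, create the following local directories: /home/hadoopdata/tmp, /home/hadoopdata/dfs/name and /home/hadoopdata/dfs/data (they must match hadoop.tmp.dir, dfs.namenode.name.dir and dfs.datanode.data.dir in the configuration below). Seven configuration files are involved, all under /home/hadoop/etc/hadoop; edit them with gedit or any other text editor: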

-rw-r--r--. 1 10021 10021 3443 Nov 14 2014 hadoop-env.sh

-rw-r--r--. 1 10021 10021 4567 Nov 14 2014 yarn-env.sh

-rw-r--r--. 1 10021 10021 10 Nov 14 2014 slaves

-rw-r--r--. 1 10021 10021 774 Nov 14 2014 core-site.xml

-rw-r--r--. 1 10021 10021 775 Nov 14 2014 hdfs-site.xml

-rw-r--r--. 1 10021 10021 758 Nov 14 2014 mapred-site.xml

-rw-r--r--. 1 10021 10021 690 Nov 14 2014 yarn-site.xml
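The configuration files below point hadoop.tmp.dir, dfs.namenode.name.dir and dfs.datanode.data.dir at directories under /home/hadoopdata. A minimal sketch that creates them; the function name and the overridable base directory are assumptions for illustration. Run it on every node: the NameNode uses dfs/name and the DataNodes use dfs/data.

```shell
#!/usr/bin/env bash
# Sketch: create the local directories referenced by core-site.xml and
# hdfs-site.xml. The default base matches the values used in this article;
# the base-directory argument is an illustrative assumption.
create_hadoop_dirs() {
  local base="${1:-/home/hadoopdata}"
  local d
  for d in tmp dfs/name dfs/data; do
    mkdir -p "$base/$d" || return 1
  done
}
```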

4. Enter the configuration directory (etc/hadoop under the Hadoop installation directory):

[root@hadoop0 hadoop]# ll

total 124

-rw-r--r--. 1 10021 10021 3589 Nov 14 2014 capacity-scheduler.xml

-rw-r--r--. 1 10021 10021 1335 Nov 14 2014 configuration.xsl

-rw-r--r--. 1 10021 10021 318 Nov 14 2014 container-executor.cfg

-rw-r--r--. 1 10021 10021 774 Nov 14 2014 core-site.xml

-rw-r--r--. 1 10021 10021 3670 Nov 14 2014 hadoop-env.cmd

-rw-r--r--. 1 10021 10021 3443 Nov 14 2014 hadoop-env.sh

-rw-r--r--. 1 10021 10021 1774 Nov 14 2014 hadoop-metrics2.properties

-rw-r--r--. 1 10021 10021 2490 Nov 14 2014 hadoop-metrics.properties

-rw-r--r--. 1 10021 10021 9201 Nov 14 2014 hadoop-policy.xml

-rw-r--r--. 1 10021 10021 775 Nov 14 2014 hdfs-site.xml

-rw-r--r--. 1 10021 10021 1449 Nov 14 2014 httpfs-env.sh

-rw-r--r--. 1 10021 10021 1657 Nov 14 2014 httpfs-log4j.properties

-rw-r--r--. 1 10021 10021 21 Nov 14 2014 httpfs-signature.secret

-rw-r--r--. 1 10021 10021 620 Nov 14 2014 httpfs-site.xml

-rw-r--r--. 1 10021 10021 11118 Nov 14 2014 log4j.properties

-rw-r--r--. 1 10021 10021 938 Nov 14 2014 mapred-env.cmd

-rw-r--r--. 1 10021 10021 1383 Nov 14 2014 mapred-env.sh

-rw-r--r--. 1 10021 10021 4113 Nov 14 2014 mapred-queues.xml.template

-rw-r--r--. 1 10021 10021 758 Nov 14 2014 mapred-site.xml.template

-rw-r--r--. 1 10021 10021 10 Nov 14 2014 slaves

-rw-r--r--. 1 10021 10021 2316 Nov 14 2014 ssl-client.xml.example

-rw-r--r--. 1 10021 10021 2268 Nov 14 2014 ssl-server.xml.example

-rw-r--r--. 1 10021 10021 2237 Nov 14 2014 yarn-env.cmd

-rw-r--r--. 1 10021 10021 4567 Nov 14 2014 yarn-env.sh

-rw-r--r--. 1 10021 10021 690 Nov 14 2014 yarn-site.xml

4.1. Configure hadoop-env.sh -> set JAVA_HOME

export JAVA_HOME=/home/guolin/software/jdk1.7.0_67

4.2. Configure yarn-env.sh -> set JAVA_HOME

export JAVA_HOME=/home/guolin/software/jdk1.7.0_67

4.3. Configure the slaves file -> add the slave nodes

hadoop1

hadoop2
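Steps 4.1 to 4.3 only edit plain-text files, so they can be scripted. A minimal sketch, assuming the configuration directory is /home/hadoop/etc/hadoop (inferred from the log paths) and GNU sed (standard on CentOS); the function names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch of steps 4.1-4.3: set JAVA_HOME in hadoop-env.sh and yarn-env.sh,
# then write the slaves file.
set_java_home() {
  local file="$1" jdk="$2"
  # Replace an existing (possibly commented-out) "export JAVA_HOME=" line,
  # or append one if the file has none.
  if grep -qE '^[#[:space:]]*export JAVA_HOME=' "$file"; then
    sed -i -E "s|^[#[:space:]]*export JAVA_HOME=.*|export JAVA_HOME=${jdk}|" "$file"
  else
    echo "export JAVA_HOME=${jdk}" >> "$file"
  fi
}

configure_env_and_slaves() {
  local conf_dir="${1:-/home/hadoop/etc/hadoop}"
  local jdk="${2:-/home/guolin/software/jdk1.7.0_67}"
  local f
  for f in hadoop-env.sh yarn-env.sh; do
    set_java_home "$conf_dir/$f" "$jdk" || return 1
  done
  # One worker hostname per line; start-dfs.sh and start-yarn.sh read this file.
  printf '%s\n' hadoop1 hadoop2 > "$conf_dir/slaves"
}
```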

4.4. Configure core-site.xml -> add the core Hadoop settings (HDFS on port 9000, hadoop.tmp.dir = file:/home/hadoopdata/tmp)

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://hadoop0:9000</value>

</property>

<property>

<name>io.file.buffer.size</name>

<value>131072</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>file:/home/hadoopdata/tmp</value>

<description>A base for other temporary directories.</description>

</property>

</configuration>
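When checking the setup it helps to read back what a site file actually says. A minimal sketch of a property lookup; get_prop is a hypothetical helper, and it is a plain-text scan that assumes each <name> and <value> tag sits on one line (as in the files in this article), not a real XML parser.

```shell
#!/usr/bin/env bash
# Sketch: print the <value> of a named <property> from a Hadoop *-site.xml.
# Plain-text scan, not an XML parser: expects <name>/<value> tags on one line.
get_prop() {
  local file="$1" name="$2"
  awk -v want="$name" '
    /<name>/ {
      n = $0
      sub(/.*<name>[ \t]*/, "", n); sub(/[ \t]*<\/name>.*/, "", n)
      found = (n == want)
    }
    /<value>/ && found {
      v = $0
      sub(/.*<value>[ \t]*/, "", v); sub(/[ \t]*<\/value>.*/, "", v)
      print v
      found = 0
    }
  ' "$file"
}
```

Usage: get_prop /home/hadoop/etc/hadoop/core-site.xml hadoop.tmp.dir should print the directory created in step 3.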

4.5. Configure hdfs-site.xml -> add the HDFS settings (nameservice, SecondaryNameNode address, NameNode and DataNode directories, replication factor)

<configuration>

<property>

<name>dfs.nameservices</name>

<value>hadoop-cluster1</value>

</property>

<property>

<name>dfs.namenode.secondary.http-address</name>

<value>hadoop0:50090</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>file:///home/hadoopdata/dfs/name</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>file:///home/hadoopdata/dfs/data</value>

</property>

<property>

<name>dfs.replication</name>

<value>2</value>

</property>

<property>

<name>dfs.webhdfs.enabled</name>

<value>true</value>

</property>

</configuration>
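A misspelled hostname in a host:port value (such as haddop0 instead of hadoop0) fails silently until a daemon tries to bind or connect. A minimal sketch that flags unknown hosts across the site files; check_hosts is hypothetical and its member list (hadoop0 to hadoop2) is taken from this article.

```shell
#!/usr/bin/env bash
# Sketch: collect the host part of every host:port (or scheme://host:port)
# <value> in the given site files and report hosts outside the cluster.
check_hosts() {
  local known=" hadoop0 hadoop1 hadoop2 "
  local bad=0 host
  for host in $(grep -ho '<value>[^<]*</value>' "$@" \
                | sed -E 's|</?value>||g; s|^[a-z]+://||' \
                | grep -E '^[A-Za-z0-9.-]+:[0-9]+$' \
                | cut -d: -f1 | sort -u); do
    case "$known" in
      *" $host "*) ;;                                   # cluster member
      *) echo "unknown host: $host"; bad=1 ;;
    esac
  done
  return "$bad"
}
```

Usage (from etc/hadoop): check_hosts core-site.xml hdfs-site.xml mapred-site.xml yarn-site.xml. Always pass file names; with no arguments grep would wait on stdin.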

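4.6. Configure mapred-site.xml -> add the MapReduce settings (run on the YARN framework, JobHistory server address and web UI address). The distribution ships only mapred-site.xml.template (see the listing above), so create mapred-site.xml from it first: cp mapred-site.xml.template mapred-site.xml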

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

<property>

<name>mapreduce.jobhistory.address</name>

<value>hadoop0:10020</value>

</property>

<property>

<name>mapreduce.jobhistory.webapp.address</name>

<value>hadoop0:19888</value>

</property>

</configuration>
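Because the template contains only an empty <configuration> element, writing mapred-site.xml directly has the same effect as copying and editing it. A minimal sketch; write_mapred_site is hypothetical, and it overwrites any existing file.

```shell
#!/usr/bin/env bash
# Sketch: write mapred-site.xml with the three properties from step 4.6.
# Overwrites the file if it already exists.
write_mapred_site() {
  local conf_dir="${1:-/home/hadoop/etc/hadoop}"
  local master="${2:-hadoop0}"   # host running the JobHistory server
  cat > "$conf_dir/mapred-site.xml" <<EOF
<?xml version="1.0"?>
<configuration>
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
  <property>
    <name>mapreduce.jobhistory.address</name>
    <value>${master}:10020</value>
  </property>
  <property>
    <name>mapreduce.jobhistory.webapp.address</name>
    <value>${master}:19888</value>
  </property>
</configuration>
EOF
}
```

Note that start-yarn.sh does not start the JobHistory server configured here; in Hadoop 2.x it is started separately with sbin/mr-jobhistory-daemon.sh start historyserver.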

4.7. Configure yarn-site.xml -> add the YARN settings (shuffle auxiliary service and ResourceManager addresses)

<configuration>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>

<value>org.apache.hadoop.mapred.ShuffleHandler</value>

</property>

<property>

<name>yarn.resourcemanager.address</name>

<value>hadoop0:8032</value>

</property>

<property>

<name>yarn.resourcemanager.scheduler.address</name>

<value>hadoop0:8030</value>

</property>

<property>

<name>yarn.resourcemanager.resource-tracker.address</name>

<value>hadoop0:8035</value>

</property>

<property>

<name>yarn.resourcemanager.admin.address</name>

<value>hadoop0:8033</value>

</property>

<property>

<name>yarn.resourcemanager.webapp.address</name>

<value>hadoop0:8088</value>

</property>

</configuration>
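The slaves also need the same configuration files. A minimal sketch that pushes etc/hadoop to every host listed in slaves, assuming the same install path on every node and the passwordless SSH from the preparation step; distribute_conf and the DRY_RUN switch are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: copy the configuration directory to every host in the slaves file.
# With DRY_RUN=1 the scp commands are printed instead of executed.
distribute_conf() {
  local conf_dir="${1:-/home/hadoop/etc/hadoop}"
  local hosts host
  # Skip blank lines and comment lines in the slaves file.
  hosts=$(grep -vE '^[[:space:]]*(#|$)' "$conf_dir/slaves")
  for host in $hosts; do
    if [ "${DRY_RUN:-0}" = 1 ]; then
      echo scp -r "$conf_dir" "$host:$(dirname "$conf_dir")/"
    else
      # Copies the directory into its parent on the remote host.
      scp -r "$conf_dir" "$host:$(dirname "$conf_dir")/" || return 1
    fi
  done
}
```

Usage: DRY_RUN=1 distribute_conf first to review the commands, then distribute_conf.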

IV. Verification

1. Format the NameNode:

[root@hadoop0 hadoop]# ./bin/hdfs namenode -format
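Formatting should happen only once. A formatted dfs.namenode.name.dir contains current/VERSION, and reformatting generates a new clusterID, after which existing DataNodes refuse to register until their data directories are cleared. A minimal guard sketch; the function name is hypothetical and the default path is the one from hdfs-site.xml above.

```shell
#!/usr/bin/env bash
# Sketch: succeed (exit status 0) only if the NameNode directory has never
# been formatted, i.e. it has no current/VERSION file yet.
namenode_needs_format() {
  local name_dir="${1:-/home/hadoopdata/dfs/name}"
  [ ! -f "$name_dir/current/VERSION" ]
}
# Usage, from the Hadoop install directory on hadoop0:
#   namenode_needs_format && ./bin/hdfs namenode -format
```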

2. Start HDFS:

[root@hadoop0 hadoop]# ./sbin/start-dfs.sh

15/12/06 06:48:56 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Starting namenodes on [hadoop0]

hadoop0: starting namenode, logging to /home/hadoop/logs/hadoop-root-namenode-hadoop0.out

hadoop2: starting datanode, logging to /home/hadoop/logs/hadoop-root-datanode-hadoop2.out

hadoop1: starting datanode, logging to /home/hadoop/logs/hadoop-root-datanode-hadoop1.out

15/12/06 06:49:17 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

[root@hadoop0 hadoop]# jps

31954 Jps

31514 NameNode
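Reading jps output by eye gets tedious across three nodes. A minimal sketch that checks it against the daemons expected on a node; check_daemons is hypothetical and reads jps output on stdin.

```shell
#!/usr/bin/env bash
# Sketch: report any expected daemon missing from `jps` output (on stdin).
# Returns non-zero if at least one daemon is not running.
check_daemons() {
  local running missing=0 d
  running=$(awk '{print $2}')          # jps prints "<pid> <MainClass>"
  for d in "$@"; do
    if ! printf '%s\n' "$running" | grep -qxF "$d"; then
      echo "not running: $d"
      missing=1
    fi
  done
  return "$missing"
}
```

Usage: on hadoop0 after both start scripts, jps | check_daemons NameNode ResourceManager; on hadoop1 and hadoop2, jps | check_daemons DataNode NodeManager.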

3. Stop HDFS:

[root@hadoop0 hadoop]# ./sbin/stop-dfs.sh

15/12/06 07:01:12 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Stopping namenodes on [hadoop0]

hadoop0: stopping namenode

hadoop2: stopping datanode

hadoop1: stopping datanode

15/12/06 07:01:29 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

[root@hadoop0 hadoop]# jps

32229 ResourceManager

32787 Jps

4. Start YARN:

[root@hadoop0 hadoop]# ./sbin/start-yarn.sh

starting yarn daemons

starting resourcemanager, logging to /home/hadoop/logs/yarn-root-resourcemanager-hadoop0.out

hadoop2: starting nodemanager, logging to /home/hadoop/logs/yarn-root-nodemanager-hadoop2.out

hadoop1: starting nodemanager, logging to /home/hadoop/logs/yarn-root-nodemanager-hadoop1.out

[root@hadoop0 hadoop]# jps

32229 ResourceManager

31514 NameNode

32295 Jps

5. Stop YARN:

[root@hadoop0 hadoop]# ./sbin/stop-yarn.sh

stopping yarn daemons

stopping resourcemanager

hadoop2: stopping nodemanager

hadoop1: stopping nodemanager

no proxyserver to stop

6. Start YARN and HDFS, then check the cluster status:

[root@hadoop0 hadoop]# ./bin/hdfs dfsadmin -report

15/12/06 07:04:17 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Configured Capacity: 37558796288 (34.98 GB)

Present Capacity: 28664430592 (26.70 GB)

DFS Remaining: 28664381440 (26.70 GB)

DFS Used: 49152 (48 KB)

DFS Used%: 0.00%

Under replicated blocks: 0

Blocks with corrupt replicas: 0

Missing blocks: 0

-------------------------------------------------

Live datanodes (2):

Name: 192.168.213.143:50010 (hadoop1)

Hostname: hadoop1

Decommission Status : Normal

Configured Capacity: 18779398144 (17.49 GB)

DFS Used: 24576 (24 KB)

Non DFS Used: 4447133696 (4.14 GB)

DFS Remaining: 14332239872 (13.35 GB)

DFS Used%: 0.00%

DFS Remaining%: 76.32%

Configured Cache Capacity: 0 (0 B)

Cache Used: 0 (0 B)

Cache Remaining: 0 (0 B)

Cache Used%: 100.00%

Cache Remaining%: 0.00%

Xceivers: 1

Last contact: Sun Dec 06 07:04:17 PST 2015

Name: 192.168.213.144:50010 (hadoop2)

Hostname: hadoop2

Decommission Status : Normal

Configured Capacity: 18779398144 (17.49 GB)

DFS Used: 24576 (24 KB)

Non DFS Used: 4447232000 (4.14 GB)

DFS Remaining: 14332141568 (13.35 GB)

DFS Used%: 0.00%

DFS Remaining%: 76.32%

Configured Cache Capacity: 0 (0 B)

Cache Used: 0 (0 B)

Cache Remaining: 0 (0 B)

Cache Used%: 100.00%

Cache Remaining%: 0.00%

Xceivers: 1

Last contact: Sun Dec 06 07:04:18 PST 2015
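The key line in the report is "Live datanodes (2):". A minimal sketch that compares it with the expected number of DataNodes; check_live_datanodes is hypothetical and reads the report on stdin.

```shell
#!/usr/bin/env bash
# Sketch: compare the "Live datanodes (N):" count in `hdfs dfsadmin -report`
# output (on stdin) with the expected number of DataNodes.
check_live_datanodes() {
  local expected="$1" live
  live=$(sed -nE 's/^Live datanodes \(([0-9]+)\):.*/\1/p' | head -n 1)
  if [ "${live:-0}" -ne "$expected" ]; then
    echo "live datanodes: ${live:-0}, expected: $expected"
    return 1
  fi
}
```

Usage: ./bin/hdfs dfsadmin -report 2>/dev/null | check_live_datanodes 2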

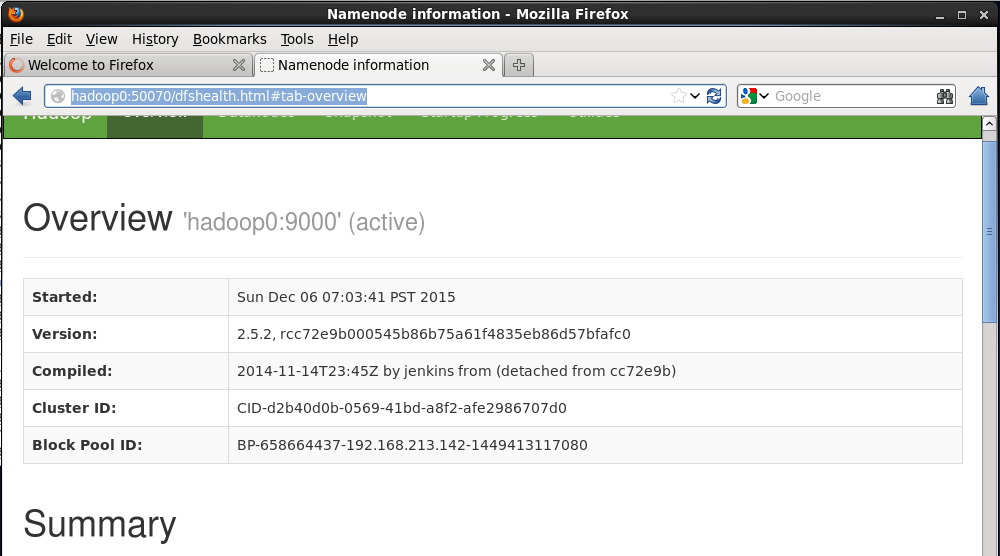

7. View HDFS in the NameNode web UI: http://hadoop0:50070/dfshealth.html#tab-overview

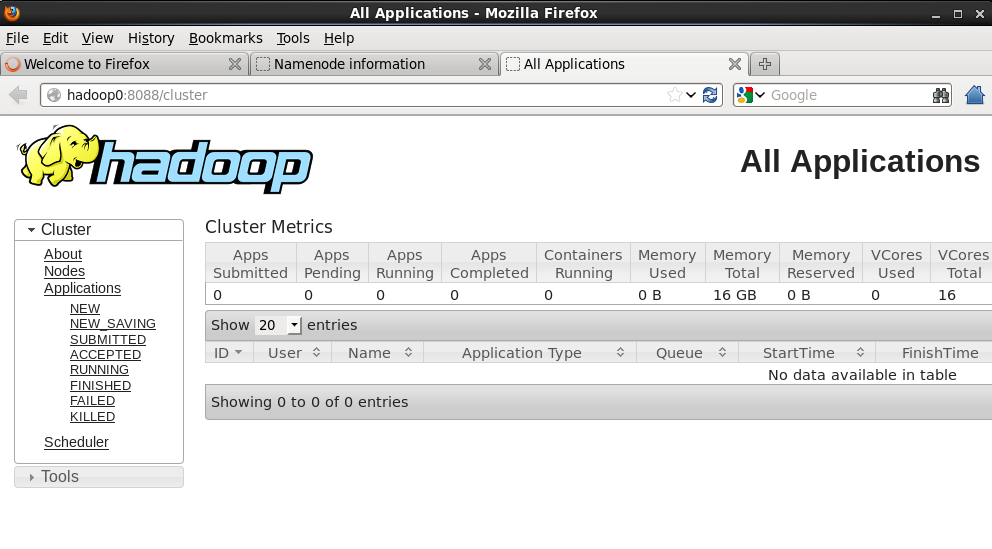

8. View the ResourceManager web UI: http://hadoop0:8088/cluster

9. Run the wordcount program

9.1. Create the input directory:

[root@hadoop0 hadoop]$ mkdir input

9.2. Create files f1 and f2 in input and add some text:

[root@hadoop0 hadoop]$ cat input/f1

Hello world bye jj

[root@hadoop0 hadoop]$ cat input/f2

Hello Hadoop bye Hadoop

9.3. Create the /tmp/input directory on HDFS:

[root@hadoop0 hadoop]# ./bin/hadoop fs -mkdir /tmp

15/12/06 07:14:09 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

[root@hadoop0 hadoop]# ./bin/hadoop fs -mkdir /tmp/input

15/12/06 07:14:14 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

9.4. Copy f1 and f2 to the HDFS directory /tmp/input:

[root@hadoop0 hadoop]# ./bin/hadoop fs -put /home/hadooptest/input/ /tmp

15/12/06 07:15:52 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

9.5. Check that f1 and f2 are on HDFS:

[root@hadoop0 hadoop]# ./bin/hadoop fs -ls /tmp/input/

15/12/06 07:16:43 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Found 2 items

-rw-r--r-- 2 root supergroup 14 2015-12-06 07:15 /tmp/input/f1

-rw-r--r-- 2 root supergroup 19 2015-12-06 07:15 /tmp/input/f2

9.6. Run the wordcount program:

[root@hadoop0 hadoop]# ./bin/hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-2.5.2.jar wordcount /tmp/input /output

15/12/06 07:18:57 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

15/12/06 07:18:59 INFO client.RMProxy: Connecting to ResourceManager at hadoop0/192.168.213.142:8032

15/12/06 07:19:01 INFO input.FileInputFormat: Total input paths to process : 2

15/12/06 07:19:01 INFO mapreduce.JobSubmitter: number of splits:2

15/12/06 07:19:02 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1449414252641_0001

15/12/06 07:19:02 INFO impl.YarnClientImpl: Submitted application application_1449414252641_0001

15/12/06 07:19:02 INFO mapreduce.Job: The url to track the job: http://hadoop0:8088/proxy/application_1449414252641_0001/

15/12/06 07:19:02 INFO mapreduce.Job: Running job: job_1449414252641_0001

15/12/06 07:19:18 INFO mapreduce.Job: Job job_1449414252641_0001 running in uber mode : false

15/12/06 07:19:18 INFO mapreduce.Job: map 0% reduce 0%

15/12/06 07:19:40 INFO mapreduce.Job: map 100% reduce 0%

15/12/06 07:19:51 INFO mapreduce.Job: map 100% reduce 100%

15/12/06 07:19:52 INFO mapreduce.Job: Job job_1449414252641_0001 completed successfully

15/12/06 07:19:52 INFO mapreduce.Job: Counters: 49

File System Counters

FILE: Number of bytes read=60

FILE: Number of bytes written=291779

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=227

HDFS: Number of bytes written=28

HDFS: Number of read operations=9

HDFS: Number of large read operations=0

HDFS: Number of write operations=2

Job Counters

Launched map tasks=2

Launched reduce tasks=1

Data-local map tasks=2

Total time spent by all maps in occupied slots (ms)=39087

Total time spent by all reduces in occupied slots (ms)=7310

Total time spent by all map tasks (ms)=39087

Total time spent by all reduce tasks (ms)=7310

Total vcore-seconds taken by all map tasks=39087

Total vcore-seconds taken by all reduce tasks=7310

Total megabyte-seconds taken by all map tasks=40025088

Total megabyte-seconds taken by all reduce tasks=7485440

Map-Reduce Framework

Map input records=2

Map output records=6

Map output bytes=55

Map output materialized bytes=66

Input split bytes=194

Combine input records=6

Combine output records=5

Reduce input groups=4

Reduce shuffle bytes=66

Reduce input records=5

Reduce output records=4

Spilled Records=10

Shuffled Maps =2

Failed Shuffles=0

Merged Map outputs=2

GC time elapsed (ms)=513

CPU time spent (ms)=9090

Physical memory (bytes) snapshot=518291456

Virtual memory (bytes) snapshot=2516721664

Total committed heap usage (bytes)=257171456

Shuffle Errors

BAD_ID=0

CONNECTION=0

IO_ERROR=0

WRONG_LENGTH=0

WRONG_MAP=0

WRONG_REDUCE=0

File Input Format Counters

Bytes Read=33

File Output Format Counters

Bytes Written=28

9.7. View the result:

[root@hadoop0 hadoop]# ./bin/hadoop fs -ls /output

15/12/06 07:23:57 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Found 2 items

-rw-r--r-- 2 root supergroup 0 2015-12-06 07:19 /output/_SUCCESS

-rw-r--r-- 2 root supergroup 28 2015-12-06 07:19 /output/part-r-00000

[root@hadoop0 hadoop]# ./bin/hadoop fs -cat /output/part-r-00000

15/12/06 07:24:19 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Hadoop 2

bye 2

jj 1

world 1
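For small inputs, a local count is a handy cross-check of the cluster result. A minimal sketch, not the MapReduce job itself: it splits on whitespace, roughly like the example's tokenizer, and sorts keys bytewise (LC_ALL=C), which matches why "Hadoop" sorts before "bye" above. local_wordcount is hypothetical. Run on the f1/f2 contents printed in 9.2 it would also report Hello 2; the cluster output has no Hello, and the counters (Map output records=6, Bytes Read=33) point to six words in total, so the files uploaded from /home/hadooptest/input/ appear to differ from the ones printed in 9.2.

```shell
#!/usr/bin/env bash
# Sketch: single-process word count printing "word<TAB>count" lines sorted
# bytewise, the same layout as part-r-00000.
local_wordcount() {
  cat "$@" | tr -s ' \t\r' '\n\n\n' | grep -v '^$' \
    | LC_ALL=C sort | uniq -c | awk '{print $2 "\t" $1}'
}
```

Usage: local_wordcount input/f1 input/f2, then compare with ./bin/hadoop fs -cat /output/part-r-00000.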

