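1. Flume: Introduction and Design Principles

I. Introduction

Flume is a distributed, reliable, and highly available system for collecting, aggregating, and moving large volumes of log data.

Purpose: to gather data from many different sources into one centralized data store.

Versions: 0.9.x (OG, "Original Generation") vs 1.x (NG, "Next Generation"). I have not yet looked into the differences between OG and NG.

Features: distributed / reliable and highly available / real-time / extensible

II. Design Principles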

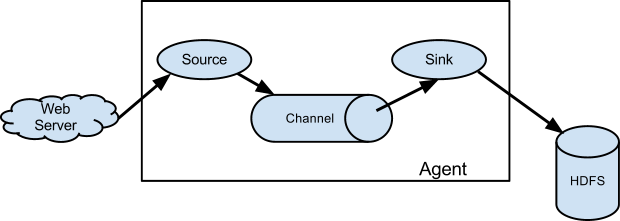

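Modular design: internally, Flume is divided by responsibility into modules such as Source, Channel, and Sink

Composable design: for each scenario, pick the Source, Channel, and Sink that fit and combine them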

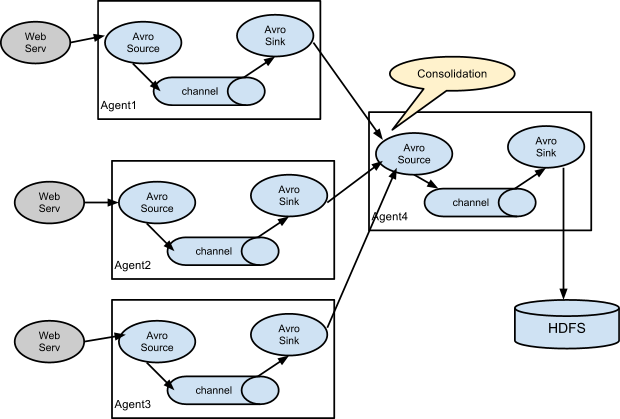

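Data flow model: supports complex data flows (fan-in, fan-out), so you can build your own topology

Extensible / plugin-based design: you can implement custom components to fit your business needs

Reliability guarantees

Data is buffered in each agent's channel, and transactions guarantee delivery between agents: an event is removed from a channel only after the next hop has stored it.

Flume also provides a file-based channel (File Channel) that persists events to local disk, so buffered data survives an agent restart.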
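The transaction idea can be illustrated with a toy, in-memory simulation. This is plain Python, not Flume's Java Channel/Transaction API; the class names and the `put_batch`, `begin_take`, and `deliver` functions are hypothetical. It shows the key property: a sink's take only becomes final on commit, and a rollback returns the events to the channel, so nothing is lost when the next hop is down.

```python
from collections import deque


class SimpleChannel:
    """Toy bounded channel (hypothetical; loosely mirrors Flume's put/take semantics)."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.queue = deque()

    def put_batch(self, events):
        # All-or-nothing put: reject the whole batch if it would overflow the channel.
        if len(self.queue) + len(events) > self.capacity:
            raise RuntimeError("channel full; the source has to retry later")
        self.queue.extend(events)

    def begin_take(self, max_events):
        # Remove up to max_events and hold them inside a pending transaction.
        taken = []
        while self.queue and len(taken) < max_events:
            taken.append(self.queue.popleft())
        return TakeTransaction(self, taken)


class TakeTransaction:
    def __init__(self, channel, events):
        self.channel = channel
        self.events = events

    def commit(self):
        # The next hop confirmed receipt, so the events are dropped for good.
        self.events = []

    def rollback(self):
        # Delivery failed: push the events back to the head in their original order.
        self.channel.queue.extendleft(reversed(self.events))
        self.events = []


def deliver(channel, send, batch_size=10):
    """Sink side: take a batch, call send(), then commit on success or roll back on error."""
    tx = channel.begin_take(batch_size)
    try:
        send(tx.events)
    except Exception:
        tx.rollback()
        return False
    tx.commit()
    return True
```

With a memory channel, buffered events still vanish if the agent process itself dies; the file channel persists them to disk, which is the main trade-off between the two.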

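III. Basic Components

Agent: a Flume JVM process; one agent can contain multiple Sources, Sinks, and Channels

Source: collects data and writes it into a Channel

Sink: reads data from a Channel and persists it (or forwards it to the next agent)

Channel: connects a Source to a Sink, buffering events between them

Event: the unit of data Flume transfers, made up of headers and a body

Topology: the overall architecture of a Flume deployment, formed by chaining Agents and their three core components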
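To make the Event structure concrete, here is a small Python sketch (hypothetical names, not Flume's Java Event interface) of an event with a header map and a byte body, plus a formatter that approximates the `Event: { headers:{...} body: ... }` lines the logger sink prints in the hands-on examples of this article. The real logger output pads the hex column with extra spaces; this sketch uses single spaces.

```python
from dataclasses import dataclass, field


@dataclass
class Event:
    headers: dict = field(default_factory=dict)  # string key/value metadata
    body: bytes = b""                            # raw payload, opaque to Flume


def dump_event(event):
    """Approximate the logger sink's line: hex bytes, then a printable preview."""
    headers = ", ".join(f"{k}={v}" for k, v in event.headers.items())
    hex_part = " ".join(f"{b:02X}" for b in event.body)
    # Non-printable bytes (e.g. the carriage return 0x0D that telnet sends) are shown as '.'.
    text = "".join(chr(b) if 32 <= b < 127 else "." for b in event.body)
    return f"Event: {{ headers:{{{headers}}} body: {hex_part} {text} }}"
```

For example, `dump_event(Event(body=b"dad\r"))` produces `Event: { headers:{} body: 64 61 64 0D dad. }`, the same shape as the first logger line in example01.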

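IV. Common Components

Source: Avro, Thrift, Syslog, Netcat, Exec, HTTP

Sink: HDFS, Kafka, Avro, File, HBase

Channel: Memory, File, Kafka, Spillable Memory

V. Use Cases

When should you use fan-out, and when fan-in?

Fan-out: for example, when collecting Tomcat logs, the same logs may need to go to several channels (e.g. one feeding Kafka, another feeding ES) for separate processing.

Fan-in: for example, when one machine runs two Tomcat instances, the two sources both write into the same channel.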
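The two patterns differ only in how sources and channels are wired. In this toy Python sketch, plain lists stand in for channels and the function names are hypothetical: fan-in is several sources appending to one shared channel, and fan-out is a replicating copy of each event into every channel. A real agent runs each source on its own thread, so fan-in events interleave; the sketch drains the sources one after another for simplicity.

```python
def fan_in(sources, channel):
    """Fan-in: several sources (e.g. two Tomcat logs on one host) feed one channel."""
    for source in sources:
        for event in source:
            channel.append(event)


def fan_out(events, channels):
    """Replicating fan-out: each event is copied into every channel (e.g. Kafka and ES paths)."""
    for event in events:
        for channel in channels:
            channel.append(event)
```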

2. Installation

I. Download

http://www.apache.org/dyn/closer.lua/flume/1.6.0/apache-flume-1.6.0-bin.tar.gz

JDK requirement: 1.6 or later (Java 1.7 recommended)

II. Configuration

Configuration directory: apache-flume-1.6.0-bin/conf

flume-env.sh: environment variables (JDK, classpath)

flume-conf.properties: agent configuration

Configuration template:

# list the sources, sinks and channels for the agent

<Agent>.sources = <Source>

<Agent>.sinks = <Sink>

<Agent>.channels = <Channel1> <Channel2>

# set channel for source

<Agent>.sources.<Source>.channels = <Channel1> <Channel2> ...

# set channel for sink

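<Agent>.sinks.<Sink>.channel = <Channel1>

Note that a source takes a list of channels (the "channels" key), while a sink is bound to exactly one channel (the "channel" key).

Startup command: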

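bin/flume-ng agent --conf ./conf/ -f conf/flume-conf.properties -Dflume.root.logger=DEBUG,console -n <Agent>

The value passed to -n must match the agent name used as the key prefix in the properties file.

3. Hands-on Examples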

1. example01: receive data over TCP and print it

# ============== test read telnet ========================================

# bin/flume-ng agent --conf ./conf/ -f conf/example01.conf -Dflume.root.logger=INFO,console -n a1

a1.sources = r1

a1.channels = c1

a1.sinks = k1

a1.sources.r1.type = netcat

a1.sources.r1.channels = c1

a1.sources.r1.bind = 0.0.0.0

a1.sources.r1.port = 4140

a1.channels.c1.type = memory

a1.channels.c1.capacity = 100

a1.sinks.k1.type = logger

a1.sinks.k1.channel = c1

Test with telnet:

pc:~/software/flume/apache-flume-1.6.0-bin$ telnet localhost 4140

Trying 127.0.0.1...

Connected to localhost.

Escape character is '^]'.

dad

OK

dafdfasdf

OK

Logger sink output on the agent console:

2016-03-13 18:02:10,999 (SinkRunner-PollingRunner-DefaultSinkProcessor) [INFO - org.apache.flume.sink.LoggerSink.process(LoggerSink.java:94)] Event: { headers:{} body: 64 61 64 0D dad. }

2016-03-13 18:02:16,200 (SinkRunner-PollingRunner-DefaultSinkProcessor) [INFO - org.apache.flume.sink.LoggerSink.process(LoggerSink.java:94)] Event: { headers:{} body: 64 61 66 64 66 61 73 64 66 0D dafdfasdf. }
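Instead of typing into telnet, a test client can push lines programmatically. This is a minimal Python sketch, assuming the example01 agent is listening on localhost:4140 and replies OK to each line as in the telnet session above; `send_lines` is a hypothetical helper name. Telnet ends lines with `\r\n`, which is why the logged bodies end in `0D`; this client sends a bare `\n`, so its events should not carry that trailing byte.

```python
import socket


def send_lines(host, port, lines, timeout=5.0):
    """Send each line to a netcat-style source and return the reply read after it."""
    replies = []
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with sock.makefile("r", encoding="utf-8", newline="\n") as reader:
            for line in lines:
                sock.sendall((line + "\n").encode("utf-8"))
                # The netcat source acknowledges each event with a line containing "OK".
                replies.append(reader.readline().strip())
    return replies
```

With the example01 agent running, `send_lines("localhost", 4140, ["dad", "dafdfasdf"])` should return `["OK", "OK"]`, and the two events should appear in the logger output.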

2. example02: tail a file and write it to Kafka

# ============== test tail file ========================================

# bin/flume-ng agent --conf ./conf/ -f conf/example02.conf -Dflume.root.logger=INFO,console -n a1

a1.sources = r1

a1.channels = c1

a1.sinks = k1

a1.sources.r1.type = exec

a1.sources.r1.command = tail -F /tmp/flume.test

a1.sources.r1.channels = c1

a1.channels.c1.type = memory

a1.channels.c1.capacity = 100

a1.sinks.k1.type = org.apache.flume.sink.kafka.KafkaSink

a1.sinks.k1.channel = c1

a1.sinks.k1.topic = test.flume.topic

a1.sinks.k1.brokerList = 192.168.6.13:9092

a1.sinks.k1.requiredAcks = 0

a1.sinks.k1.batchSize = 2000

a1.sinks.k1.kafka.producer.type = async

a1.sinks.k1.kafka.batch.num.messages = 1000

Append a test line to the tailed file (run from /tmp):

echo 2323sd >> flume.test

Then read the topic with the Kafka console consumer (run from the Kafka directory, ~/software/kafka/kafka_2.10-0.8.2.0):

bin/kafka-console-consumer.sh --zookeeper localhost:2181 --topic test.flume.topic --from-beginning

[2016-05-11 22:38:24,397] INFO Closing socket connection to /127.0.0.1. (kafka.network.Processor)

23

[2016-05-11 22:41:34,727] INFO Closing socket connection to /127.0.0.1. (kafka.network.Processor)

qw

we

sd

2323sd

2323sd

2323sd

2323sd

2323sd

adad

adad2323
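One thing worth checking in example02: the Kafka sink asks for batchSize = 2000, but the memory channel keeps its default transactionCapacity of 100, and a single take transaction cannot hold more events than that. Under the light test traffic above this never triggers, but under load the sink's take may fail with a ChannelException. This hypothetical Python pre-flight helper (not part of Flume) parses `key = value` lines, verifies that every channel a source or sink names is declared in `<agent>.channels`, and flags a sink batchSize larger than a memory channel's transactionCapacity.

```python
def parse_properties(text):
    """Parse simple 'key = value' lines, skipping blanks and '#' comments."""
    props = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        props[key.strip()] = value.strip()
    return props


def check_agent(props, agent):
    """Return a list of wiring problems for one agent (hypothetical helper)."""
    problems = []
    channels = set(props.get(f"{agent}.channels", "").split())
    for src in props.get(f"{agent}.sources", "").split():
        for ch in props.get(f"{agent}.sources.{src}.channels", "").split():
            if ch not in channels:
                problems.append(f"source {src} uses undeclared channel {ch}")
    for sink in props.get(f"{agent}.sinks", "").split():
        ch = props.get(f"{agent}.sinks.{sink}.channel")
        if ch not in channels:
            problems.append(f"sink {sink} uses undeclared channel {ch}")
            continue
        # Only the Kafka sink's 'batchSize' key is checked (the avro sink uses 'batch-size').
        if props.get(f"{agent}.channels.{ch}.type") == "memory":
            tx_cap = int(props.get(f"{agent}.channels.{ch}.transactionCapacity", "100"))
            batch = int(props.get(f"{agent}.sinks.{sink}.batchSize", "0"))
            if batch > tx_cap:
                problems.append(f"sink {sink} batchSize {batch} > {ch} transactionCapacity {tx_cap}")
    return problems
```

Fed the example02 configuration, `check_agent(parse_properties(text), "a1")` reports the batchSize mismatch; raising transactionCapacity (and capacity) on c1, or lowering batchSize, clears it.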

3. example03: tail two files and print them at the aggregation server

agent:

# ============== test fan-in: tail two files, forward over avro ========================================

# bin/flume-ng agent --conf ./conf/ -f conf/example03-agent.conf -Dflume.root.logger=INFO,console -n flumeAgent

flumeAgent.sources = r1 r2

flumeAgent.channels = c1

flumeAgent.sinks = k1

flumeAgent.sources.r1.type = exec

flumeAgent.sources.r1.command = tail -F /tmp/flume.test1

flumeAgent.sources.r1.channels = c1

flumeAgent.sources.r2.type = exec

flumeAgent.sources.r2.command = tail -F /tmp/flume.test2

flumeAgent.sources.r2.channels = c1

flumeAgent.channels.c1.type = memory

flumeAgent.channels.c1.capacity = 100

flumeAgent.sinks.k1.type = avro

flumeAgent.sinks.k1.channel = c1

flumeAgent.sinks.k1.hostname = 127.0.0.1

flumeAgent.sinks.k1.port = 4141

server:

# ============== test avro receive, print with logger ========================================

# bin/flume-ng agent --conf ./conf/ -f conf/example03-server.conf -Dflume.root.logger=INFO,console -n flumeServer

flumeServer.sources = r1

flumeServer.channels = c1

flumeServer.sinks = k1

flumeServer.sources.r1.type = avro

flumeServer.sources.r1.channels = c1

flumeServer.sources.r1.bind = 0.0.0.0

flumeServer.sources.r1.port = 4141

flumeServer.channels.c1.type = memory

flumeServer.channels.c1.capacity = 100

flumeServer.sinks.k1.type = logger

flumeServer.sinks.k1.channel = c1

xueguolin@xueguolin-pc:/tmp$ echo 12 >> flume.test1

xueguolin@xueguolin-pc:/tmp$ echo 1223 >> flume.test2

Server output:

2016-05-15 14:47:48,273 (SinkRunner-PollingRunner-DefaultSinkProcessor) [INFO - org.apache.flume.sink.LoggerSink.process(LoggerSink.java:94)] Event: { headers:{} body: 31 32 12 }

2016-05-15 14:48:03,274 (SinkRunner-PollingRunner-DefaultSinkProcessor) [INFO - org.apache.flume.sink.LoggerSink.process(LoggerSink.java:94)] Event: { headers:{} body: 31 32 32 33 1223 }

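Question 1: how can we tell the two logs in example03 apart? (The events above arrive with empty headers, so nothing shows which file they came from.)

Interceptor: operates on an Event (for example, adds a header) before the Source writes it into the Channel

Selector: the channel selector, which decides which channel(s) each event is written to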
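A static interceptor simply stamps the same header onto every event its source emits. This plain-Python sketch is a hypothetical stand-in for Flume's Java Interceptor interface, with events modeled as dicts holding `headers` and `body`; it mirrors the `type = static`, `key`, and `value` settings used in this section, plus Flume's `preserveExisting` option (default true), which leaves an already-present header untouched.

```python
def static_interceptor(key, value, preserve_existing=True):
    """Return a function that sets headers[key] = value on each event in a batch."""
    def intercept(events):
        for event in events:
            headers = event["headers"]
            # Like preserveExisting=true: keep a header that is already set.
            if preserve_existing and key in headers:
                continue
            headers[key] = value
        return events
    return intercept
```

In example03 terms, source r1 would run `static_interceptor("whoami", "log1")` and r2 `static_interceptor("whoami", "log2")`, so each event carries its origin downstream.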

Configuration after adding interceptors:

flumeAgent.sources.r1.interceptors = i1

flumeAgent.sources.r1.interceptors.i1.type = static

flumeAgent.sources.r1.interceptors.i1.key = whoami

flumeAgent.sources.r1.interceptors.i1.value = log1

flumeAgent.sources.r2.interceptors = i1

flumeAgent.sources.r2.interceptors.i1.type = static

flumeAgent.sources.r2.interceptors.i1.key = whoami

flumeAgent.sources.r2.interceptors.i1.value = log2

Configuration after adding the multiplexing selector:

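selector.type:

replicating: writes every event to all of the source's channels (the default)

multiplexing: chooses the channel(s) for each event by rule, based on a header value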
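The two selector types differ only in how they pick target channels. This hypothetical Python sketch (not Flume's ChannelSelector API) models the routing decision using the `header` and `mapping.<value>` settings from this section. The `default` argument corresponds to Flume's optional `selector.default` property, which this article's examples do not set; in this sketch an event with no matching mapping and no default goes to no channel.

```python
def select_channels(event, selector_type, all_channels, header=None, mapping=None, default=()):
    """Return the names of the channels an event should be written to."""
    if selector_type == "replicating":
        # replicating (the default): every configured channel gets a copy.
        return list(all_channels)
    if selector_type == "multiplexing":
        value = event["headers"].get(header)
        # Route on the header value; fall back to the default channels if unmapped.
        return list(mapping.get(value, default))
    raise ValueError(f"unknown selector type: {selector_type}")
```

With `mapping={"log1": ["c1"], "log2": ["c2"]}` and `header="whoami"`, events tagged log1 go to c1 and log2 to c2, matching the server output in the example that follows.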

flumeServer.sources.r1.channels = c1 c2

flumeServer.sources.r1.selector.type = multiplexing

flumeServer.sources.r1.selector.header = whoami

flumeServer.sources.r1.selector.mapping.log1 = c1

flumeServer.sources.r1.selector.mapping.log2 = c2

Full example:

agent:

# ============== test static interceptors (agent) ========================================

# bin/flume-ng agent --conf ./conf/ -f conf/example03-q1-agent.conf -Dflume.root.logger=INFO,console -n flumeAgent

flumeAgent.sources = r1 r2

flumeAgent.channels = c1

flumeAgent.sinks = k1

flumeAgent.sources.r1.type = exec

flumeAgent.sources.r1.command = tail -F /tmp/flume.test1

flumeAgent.sources.r1.channels = c1

flumeAgent.sources.r1.interceptors = i1

flumeAgent.sources.r1.interceptors.i1.type = static

flumeAgent.sources.r1.interceptors.i1.key = whoami

flumeAgent.sources.r1.interceptors.i1.value = log1

flumeAgent.sources.r2.type = exec

flumeAgent.sources.r2.command = tail -F /tmp/flume.test2

flumeAgent.sources.r2.channels = c1

flumeAgent.sources.r2.interceptors = i1

flumeAgent.sources.r2.interceptors.i1.type = static

flumeAgent.sources.r2.interceptors.i1.key = whoami

flumeAgent.sources.r2.interceptors.i1.value = log2

flumeAgent.channels.c1.type = memory

flumeAgent.channels.c1.capacity = 100

flumeAgent.sinks.k1.type = avro

flumeAgent.sinks.k1.channel = c1

flumeAgent.sinks.k1.hostname = 127.0.0.1

flumeAgent.sinks.k1.port = 4141

server:

# ============== test multiplexing selector (server) ========================================

# bin/flume-ng agent --conf ./conf/ -f conf/example03-q1-server.conf -Dflume.root.logger=INFO,console -n flumeServer

flumeServer.sources = r1

flumeServer.channels = c1 c2

flumeServer.sinks = k1 k2

flumeServer.sources.r1.type = avro

flumeServer.sources.r1.channels = c1 c2

flumeServer.sources.r1.bind = 0.0.0.0

flumeServer.sources.r1.port = 4141

flumeServer.sources.r1.selector.type = multiplexing

flumeServer.sources.r1.selector.header = whoami

flumeServer.sources.r1.selector.mapping.log1 = c1

flumeServer.sources.r1.selector.mapping.log2 = c2

#==============================================

flumeServer.channels.c1.type = memory

flumeServer.channels.c1.capacity = 100

flumeServer.channels.c2.type = memory

flumeServer.channels.c2.capacity = 100

#==============================================

flumeServer.sinks.k1.type = logger

flumeServer.sinks.k1.channel = c1

flumeServer.sinks.k2.type = logger

flumeServer.sinks.k2.channel = c2

Test:

xueguolin@xueguolin-pc:/tmp$ echo 1223 >> flume.test2

xueguolin@xueguolin-pc:/tmp$ echo 1333223 >> flume.test2

xueguolin@xueguolin-pc:/tmp$ echo 12kk >> flume.test1

2016-05-15 14:54:07,313 (SinkRunner-PollingRunner-DefaultSinkProcessor) [INFO - org.apache.flume.sink.LoggerSink.process(LoggerSink.java:94)] Event: { headers:{whoami=log2} body: 31 32 32 33 1223 }

2016-05-15 14:54:38,502 (SinkRunner-PollingRunner-DefaultSinkProcessor) [INFO - org.apache.flume.sink.LoggerSink.process(LoggerSink.java:94)] Event: { headers:{whoami=log2} body: 31 33 33 33 32 32 33 1333223 }

2016-05-15 14:55:01,318 (SinkRunner-PollingRunner-DefaultSinkProcessor) [INFO - org.apache.flume.sink.LoggerSink.process(LoggerSink.java:94)] Event: { headers:{whoami=log1} body: 31 32 6B 6B 12kk }

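Question 2: example03 has only one server. What happens if it goes down?

Sink group (sinkgroups): bundles several sinks into one logical sink

processor.type:

default: the default value; a single sink

failover: fault tolerance; sinks are tried in priority order, and a sink that fails is put into a cooldown period

load_balance: load balancing across the sinks (round_robin or random selection); it is also fault tolerant, because when the chosen sink fails the processor moves on to try the next one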
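The two non-default processors can be modeled in a few lines. This is a simplified, hypothetical Python sketch, not Flume's FailoverSinkProcessor or LoadBalancingSinkProcessor: sinks are callables that raise on failure, failover always tries the highest-priority sink that is not cooling down, and load_balance rotates round-robin and falls through to the next sink on error. The fixed 30-second cooldown is an assumption for illustration; in Flume the penalty grows with repeated failures, capped by `processor.maxpenalty`.

```python
import itertools
import time


class FailoverProcessor:
    """Try sinks in priority order; a failed sink sits out a cooldown period."""

    def __init__(self, sinks, cooldown=30.0, clock=time.monotonic):
        self.sinks = list(sinks)      # highest priority first, e.g. [k1, k2]
        self.cooldown = cooldown
        self.clock = clock
        self.down_until = {}          # sink index -> time it may be retried

    def process(self, batch):
        now = self.clock()
        for i, sink in enumerate(self.sinks):
            if self.down_until.get(i, 0) > now:
                continue              # still cooling down, skip it
            try:
                sink(batch)
                self.down_until.pop(i, None)
                return i
            except Exception:
                self.down_until[i] = now + self.cooldown
        raise RuntimeError("all sinks failed; the batch stays in the channel")


class RoundRobinProcessor:
    """load_balance with round_robin: rotate sinks, moving to the next one on failure."""

    def __init__(self, sinks):
        self.sinks = list(sinks)
        self.start = itertools.cycle(range(len(self.sinks)))

    def process(self, batch):
        first = next(self.start)
        for offset in range(len(self.sinks)):
            i = (first + offset) % len(self.sinks)
            try:
                self.sinks[i](batch)
                return i
            except Exception:
                continue
        raise RuntimeError("all sinks failed; the batch stays in the channel")
```

Each `process` call returns the index of the sink that accepted the batch. Injecting `clock` makes the cooldown testable without sleeping.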

Adding a sink group:

flumeAgent.sinkgroups = g1

flumeAgent.sinkgroups.g1.sinks = k1 k2

flumeAgent.sinkgroups.g1.processor.type = failover

Example (agent):

# ============== test failover sink group (agent) ========================================

# bin/flume-ng agent --conf ./conf/ -f conf/example03-q2-agent.conf -Dflume.root.logger=INFO,console -n flumeAgent

flumeAgent.sources = r1 r2

flumeAgent.channels = c1

flumeAgent.sinks = k1 k2

flumeAgent.sources.r1.type = exec

flumeAgent.sources.r1.command = tail -F /tmp/flume.test1

flumeAgent.sources.r1.channels = c1

flumeAgent.sources.r2.type = exec

flumeAgent.sources.r2.command = tail -F /tmp/flume.test2

flumeAgent.sources.r2.channels = c1

flumeAgent.channels.c1.type = memory

flumeAgent.channels.c1.capacity = 100

flumeAgent.sinkgroups = g1

flumeAgent.sinkgroups.g1.sinks = k1 k2

flumeAgent.sinkgroups.g1.processor.type = failover

flumeAgent.sinks.k1.type = avro

flumeAgent.sinks.k1.channel = c1

flumeAgent.sinks.k1.hostname = 127.0.0.1

flumeAgent.sinks.k1.port = 4141

flumeAgent.sinks.k1.batch-size = 1

flumeAgent.sinks.k2.type = avro

flumeAgent.sinks.k2.channel = c1

flumeAgent.sinks.k2.hostname = 127.0.0.1

flumeAgent.sinks.k2.port = 4142

flumeAgent.sinks.k2.batch-size = 1

server1:

# ============== test failover target 1 (server1) ========================================

# bin/flume-ng agent --conf ./conf/ -f conf/example03-q2-server1.conf -Dflume.root.logger=INFO,console -n flumeServer

flumeServer.sources = r1

flumeServer.channels = c1

flumeServer.sinks = k1

flumeServer.sources.r1.type = avro

flumeServer.sources.r1.channels = c1

flumeServer.sources.r1.bind = 0.0.0.0

flumeServer.sources.r1.port = 4141

flumeServer.channels.c1.type = memory

flumeServer.channels.c1.capacity = 100

flumeServer.sinks.k1.type = logger

flumeServer.sinks.k1.channel = c1

server2:

# ============== test failover target 2 (server2) ========================================

# bin/flume-ng agent --conf ./conf/ -f conf/example03-q2-server2.conf -Dflume.root.logger=INFO,console -n flumeServer

flumeServer.sources = r1

flumeServer.channels = c1

flumeServer.sinks = k1

flumeServer.sources.r1.type = avro

flumeServer.sources.r1.channels = c1

flumeServer.sources.r1.bind = 0.0.0.0

flumeServer.sources.r1.port = 4142

flumeServer.channels.c1.type = memory

flumeServer.channels.c1.capacity = 100

flumeServer.sinks.k1.type = logger

flumeServer.sinks.k1.channel = c1

Both servers writing to Kafka (example04):

agent:

# ============== test write kafka ========================================

# bin/flume-ng agent --conf ./conf/ -f conf/example04-kafka-agent.conf -Dflume.root.logger=INFO,console -n flumeAgent

flumeAgent.sources = r1 r2

flumeAgent.channels = c1

flumeAgent.sinks = k1 k2

flumeAgent.sources.r1.type = exec

flumeAgent.sources.r1.command = tail -F /tmp/flume.test1

flumeAgent.sources.r1.channels = c1

flumeAgent.sources.r2.type = exec

flumeAgent.sources.r2.command = tail -F /tmp/flume.test2

flumeAgent.sources.r2.channels = c1

flumeAgent.channels.c1.type = memory

flumeAgent.channels.c1.capacity = 100

flumeAgent.sinkgroups = g1

flumeAgent.sinkgroups.g1.sinks = k1 k2

flumeAgent.sinkgroups.g1.processor.type = failover

flumeAgent.sinks.k1.type = avro

flumeAgent.sinks.k1.channel = c1

flumeAgent.sinks.k1.hostname = 127.0.0.1

flumeAgent.sinks.k1.port = 4141

flumeAgent.sinks.k1.batch-size = 1

flumeAgent.sinks.k2.type = avro

flumeAgent.sinks.k2.channel = c1

flumeAgent.sinks.k2.hostname = 127.0.0.1

flumeAgent.sinks.k2.port = 4142

flumeAgent.sinks.k2.batch-size = 1

server1:

# ============== test write kafka ========================================

# bin/flume-ng agent --conf ./conf/ -f conf/example04-kafka-server1.conf -Dflume.root.logger=INFO,console -n flumeServer

flumeServer.sources = r1

flumeServer.channels = c1

flumeServer.sinks = k1

flumeServer.sources.r1.type = avro

flumeServer.sources.r1.channels = c1

flumeServer.sources.r1.bind = 0.0.0.0

flumeServer.sources.r1.port = 4141

flumeServer.channels.c1.type = memory

flumeServer.channels.c1.capacity = 100

flumeServer.sinks.k1.type = org.apache.flume.sink.kafka.KafkaSink

flumeServer.sinks.k1.channel = c1

flumeServer.sinks.k1.topic = test.flume.topic

flumeServer.sinks.k1.brokerList = 127.0.0.1:9092

flumeServer.sinks.k1.requiredAcks = 0

flumeServer.sinks.k1.batchSize = 2000

flumeServer.sinks.k1.kafka.producer.type = sync

flumeServer.sinks.k1.kafka.batch.num.messages = 1000

server2:

# ============== test write kafka ========================================

# bin/flume-ng agent --conf ./conf/ -f conf/example04-kafka-server2.conf -Dflume.root.logger=INFO,console -n flumeServer

flumeServer.sources = r1

flumeServer.channels = c1

flumeServer.sinks = k1

flumeServer.sources.r1.type = avro

flumeServer.sources.r1.channels = c1

flumeServer.sources.r1.bind = 0.0.0.0

flumeServer.sources.r1.port = 4142

flumeServer.channels.c1.type = memory

flumeServer.channels.c1.capacity = 100

flumeServer.sinks.k1.type = org.apache.flume.sink.kafka.KafkaSink

flumeServer.sinks.k1.channel = c1

flumeServer.sinks.k1.topic = test.flume.topic

flumeServer.sinks.k1.brokerList = 127.0.0.1:9092

flumeServer.sinks.k1.requiredAcks = 0

flumeServer.sinks.k1.batchSize = 2000

flumeServer.sinks.k1.kafka.producer.type = sync

flumeServer.sinks.k1.kafka.batch.num.messages = 1000

Test with Kafka:

xueguolin@xueguolin-pc:/tmp$ echo 1333223ddd >> flume.test1

xueguolin@xueguolin-pc:/tmp$ echo 1333223ddd >> flume.test1

xueguolin@xueguolin-pc:/tmp$ echo 1333223ddd >> flume.test1

xueguolin@xueguolin-pc:/tmp$ echo 1333222223ddkafafad >> flume.test1

xueguolin@xueguolin-pc:~/software/kafka/kafka_2.10-0.8.2.0$ bin/kafka-console-consumer.sh --zookeeper localhost:2181 --topic test.flume.topic --from-beginning

12

12

1333223ddd

1333223ddd

1333222223ddd

1333222223ddkafafad

148

148
