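1. Test environment:

OS: CentOS 6.8

Oracle: 11.2.0.4

A 3-node RAC with hostnames rac1, rac2 and rac3; rac3 is to be removed.

All three instances are running normally, and the node is removed online.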

2. Confirm the nodes

Log in to any node and run:

SQL> select thread#,status,instance from v$thread;

THREAD# STATUS INSTANCE

---------- ------ --------

1 OPEN rac1

2 OPEN rac2

3 OPEN rac3

This shows three nodes, with instance names rac1, rac2 and rac3.

rac1:/u01/app/11.2.0/grid_1/bin@rac1>./olsnodes -t -s

rac1 Active Unpinned

rac2 Active Unpinned

rac3 Active Unpinned
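The same check can be scripted by parsing the `olsnodes -t -s` output. This is a minimal sketch. It assumes the three-column layout shown above: node name, Active/Inactive, Pinned/Unpinned. `olsnodes_state` is a hypothetical helper name and is not an Oracle tool:

```shell
# olsnodes_state NODE: read `olsnodes -t -s` output on stdin and print the
# status and pin state of NODE (e.g. "Active Unpinned").
# Returns 1 if NODE is not listed at all.
olsnodes_state() {
  awk -v n="$1" '$1 == n { print $2, $3; found = 1 } END { exit !found }'
}
```

For example, `./olsnodes -t -s | olsnodes_state rac3` should print `Active Unpinned` on this cluster. After rac3 has been deleted in step 13, the same call should return a non-zero status.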

3. Run the following as root on every surviving node (rac1, rac2):

[root@rac1 ~]# cd /u01/app/11.2.0/grid_1/bin/

[root@rac1 bin]# ./crsctl unpin css -n rac3

CRS-4667: Node rac3 successfully unpinned.

[root@rac2 ~]# cd /u01/app/11.2.0/grid_1/bin/

[root@rac2 bin]# ./crsctl unpin css -n rac3

CRS-4667: Node rac3 successfully unpinned.
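As we read Oracle's node-deletion procedure, `crsctl unpin css` is only needed when `olsnodes -t` reports the node as Pinned. Here rac3 was already Unpinned, so the command above just confirmed that state.

The sketch below is a dry run that prints the unpin command only when it is needed. The default `GRID_HOME` path is taken from this article, and the function name is hypothetical:

```shell
# Grid home used in this article; override GRID_HOME for other environments.
GRID_HOME="${GRID_HOME:-/u01/app/11.2.0/grid_1}"

# unpin_cmd_if_pinned NODE: read `olsnodes -t` (or `olsnodes -t -s`) output on
# stdin and print the crsctl command to run as root if NODE is Pinned.
# Prints nothing when the node is already Unpinned. Nothing is executed.
unpin_cmd_if_pinned() {
  node="$1"
  if awk -v n="$node" '$1 == n && $NF == "Pinned" { f = 1 } END { exit !f }'; then
    printf '%s/bin/crsctl unpin css -n %s\n' "$GRID_HOME" "$node"
  fi
}
```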

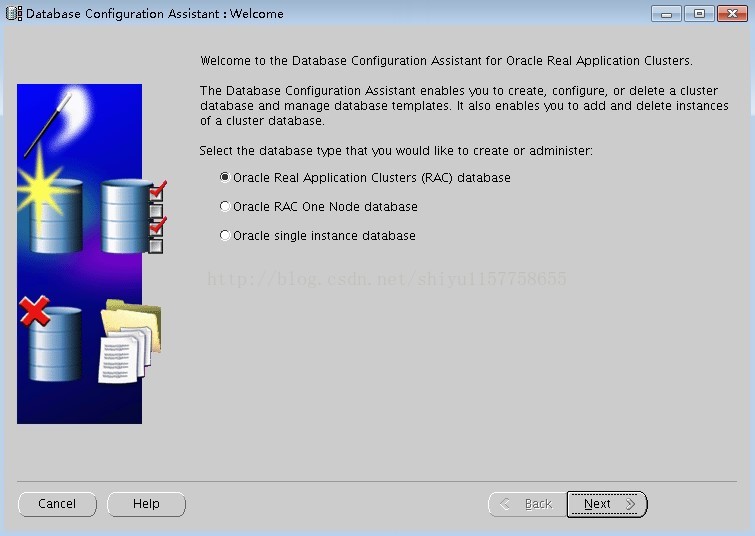

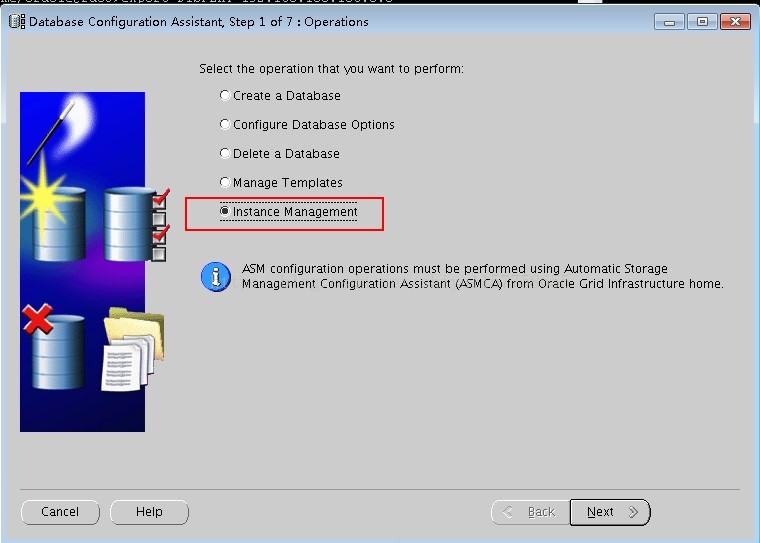

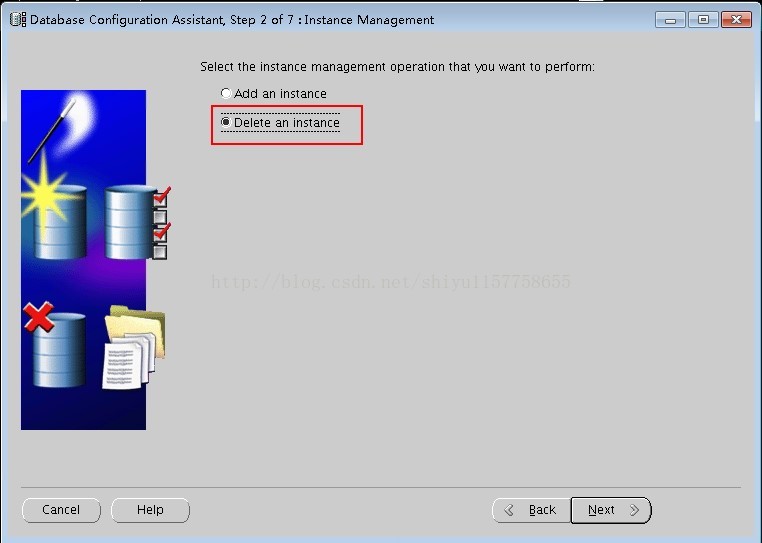

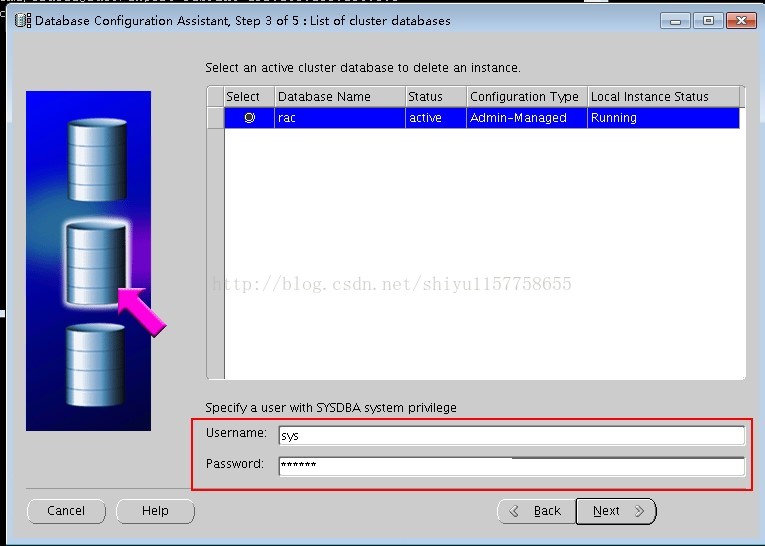

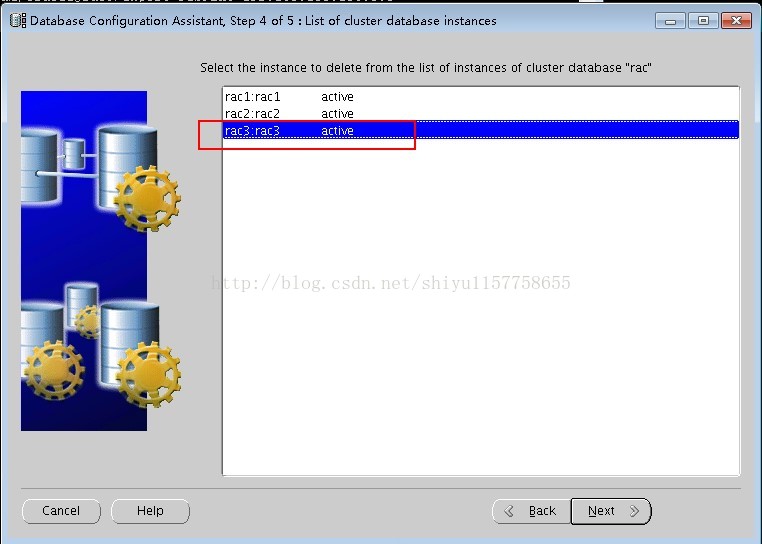

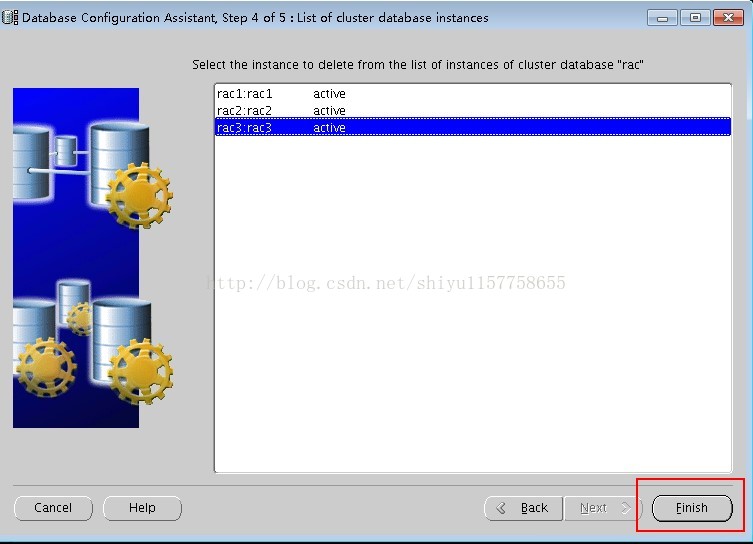

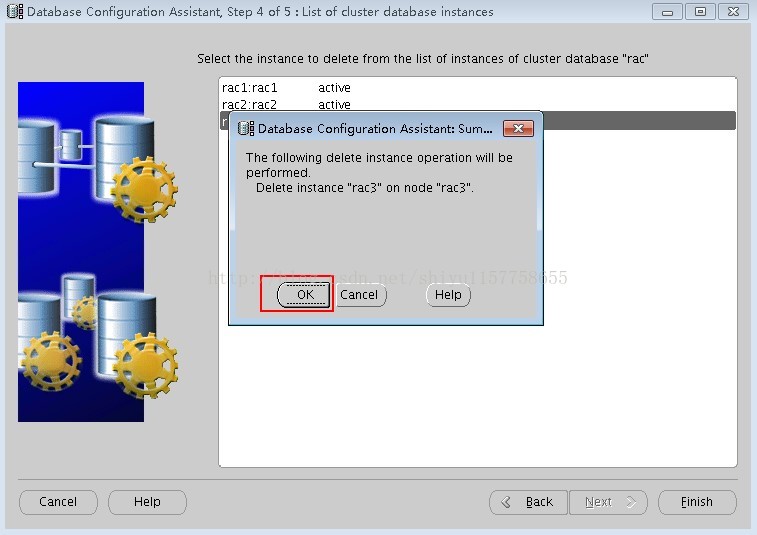

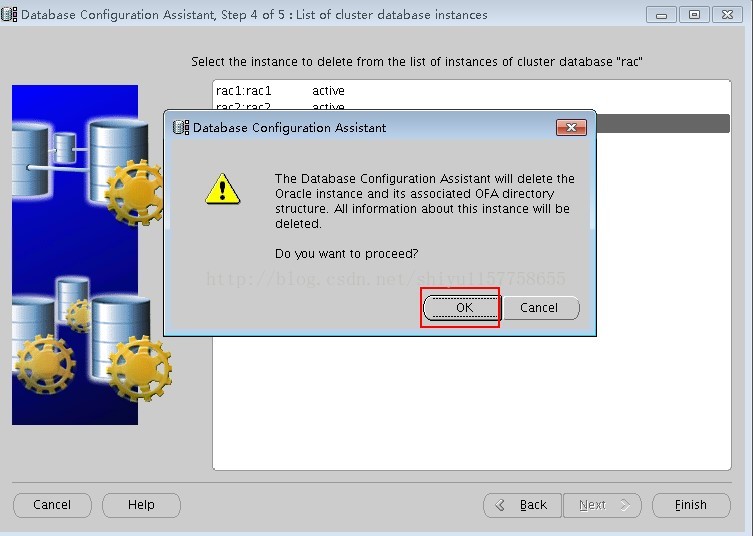

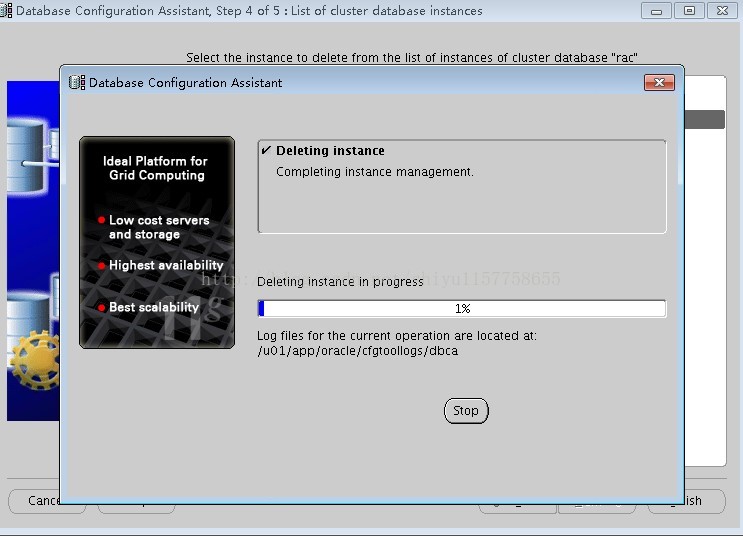

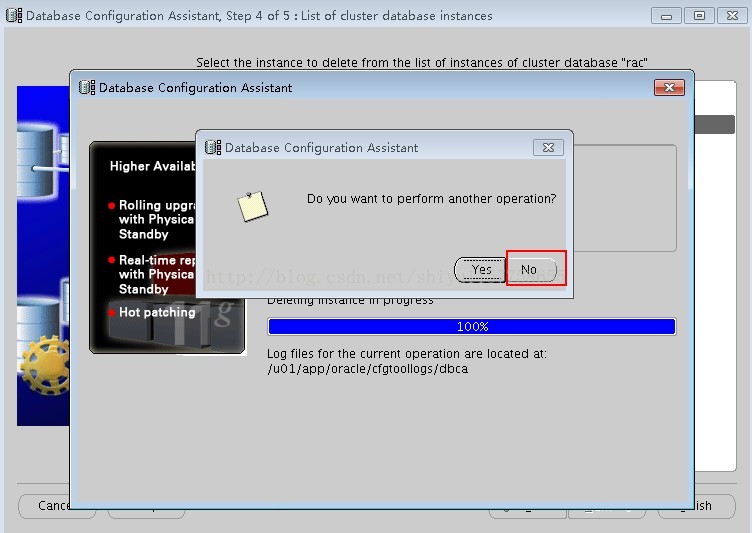

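4. Delete the rac3 instance with DBCA

Note that the rac3 instance must be deleted from one of the surviving nodes; in other words, DBCA must be run on rac1 or rac2.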
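As far as we know, the DBCA GUI steps can also be done in silent mode with `dbca -silent -deleteInstance`. The sketch below only prints such a command for this article's names: database `rac`, instance `rac3`, node `rac3`.

The option spelling follows the 11.2 syntax as we recall it, so confirm it with `dbca -help` on your version. `<sys_password>` is a placeholder, and the function name is hypothetical:

```shell
# dbca_delete_instance_cmd GDB INSTANCE NODE: print a silent-mode DBCA command
# that deletes INSTANCE (hosted on NODE) from database GDB.
# Run the printed command as oracle on a surviving node, not on NODE itself.
dbca_delete_instance_cmd() {
  gdb="$1"; inst="$2"; node="$3"
  printf 'dbca -silent -deleteInstance -nodeList %s -gdbName %s -instanceName %s -sysDBAUserName sys -sysDBAPassword "<sys_password>"\n' \
    "$node" "$gdb" "$inst"
}
```

Usage on rac1: `dbca_delete_instance_cmd rac rac3 rac3`. Review the printed line, substitute the real password, then run it.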

5. Verify that the rac3 instance has been deleted

Check the active instances:

SQL> select thread#,status,instance from v$thread;

THREAD# STATUS INSTANCE

---------- ------ --------

1 OPEN rac1

2 OPEN rac2

// rac3 is no longer listed

rac3:/home/oracle@rac3>ps -ef|grep ora_

oracle 7550 30915 0 14:17 pts/0 00:00:00 grep ora_

// the Oracle background processes on rac3 are gone (only the grep itself matches)

Check the database configuration:

rac1:/home/oracle@rac1>srvctl config database -d rac

Database unique name: rac

Database name: rac

Oracle home: /u01/app/oracle/product/11.2.0/db_1

Oracle user: oracle

Spfile: +DATA/rac/spfilerac.ora

Domain:

Start options: open

Stop options: immediate

Database role: PRIMARY

Management policy: AUTOMATIC

Server pools: rac

Database instances: rac1,rac2

Disk Groups: DATA,FRA_ARC

Mount point paths:

Services:

Type: RAC

Database is administrator managed
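The instance check can be automated by reading the "Database instances:" line of `srvctl config database`. This is a minimal sketch that assumes the output format shown above. Both function names are hypothetical:

```shell
# db_instances: read `srvctl config database -d <db>` output on stdin and
# print the names on the "Database instances:" line, one per line.
db_instances() {
  sed -n 's/^Database instances:[[:space:]]*//p' | tr ',' '\n' | sed '/^$/d'
}

# instance_absent INST: succeed if INST is not among the configured instances.
# grep reads all of its input (no -q), so the pipeline never hits SIGPIPE.
instance_absent() {
  ! db_instances | grep -x "$1" >/dev/null
}
```

For example: `srvctl config database -d rac | instance_absent rac3 && echo "rac3 removed from config"`.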

6. Stop the listener on rac3

rac3:/home/oracle@rac3>srvctl config listener -a

Name: LISTENER

Network: 1, Owner: grid

Home: <CRS home>

/u01/app/11.2.0/grid_1 on node(s) rac3,rac1,rac2

End points: TCP:1521

rac3:/home/oracle@rac3>srvctl disable listener -l listener -n rac3

rac3:/home/oracle@rac3>srvctl stop listener -l listener -n rac3

rac3:/home/oracle@rac3>lsnrctl status

LSNRCTL for Linux: Version 11.2.0.4.0 - Production on 13-MAR-2017 14:22:50

Copyright (c) 1991, 2013, Oracle. All rights reserved.

Connecting to (ADDRESS=(PROTOCOL=tcp)(HOST=)(PORT=1521))

TNS-12541: TNS:no listener

TNS-12560: TNS:protocol adapter error

TNS-00511: No listener

Linux Error: 111: Connection refused

// the listener on rac3 is stopped; the listeners on rac1 and rac2 are still running
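The listener state can also be confirmed from a script. The two checks below do the following:

- look for the TNS-12541 error in `lsnrctl status`;
- list the nodes reported by `srvctl status listener`.

This is a sketch only. The `running on node(s):` wording is an assumption about the 11.2 srvctl message format, so compare it with your own output first. Function names are hypothetical:

```shell
# listener_down: read `lsnrctl status` output on stdin; succeed if it reports
# TNS-12541 (no listener), as seen on rac3 above.
listener_down() {
  grep 'TNS-12541' >/dev/null
}

# running_nodes: read `srvctl status listener -l LISTENER` output on stdin and
# print the nodes listed after "running on node(s):", one per line.
# ASSUMPTION: message format "... is running on node(s): rac1,rac2".
running_nodes() {
  sed -n 's/.*running on node(s):[[:space:]]*//p' | tr ',' '\n' | sed '/^$/d'
}
```

For example, run `lsnrctl status | listener_down` on rac3 and `srvctl status listener -l listener | running_nodes` on rac1.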

7. On rac3, update the inventory node list of the database home as the oracle user

rac3:/home/oracle@rac3>/u01/app/oracle/product/11.2.0/db_1/oui/bin/runInstaller -updateNodeList ORACLE_HOME=$ORACLE_HOME "CLUSTER_NODES={rac3}" -local

Starting Oracle Universal Installer...

Checking swap space: must be greater than 500 MB. Actual 3502 MB Passed

The inventory pointer is located at /etc/oraInst.loc

The inventory is located at /u01/app/oraInventory

'UpdateNodeList' was successful.
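The same `runInstaller -updateNodeList` call recurs in steps 7, 10, 14 and 16. It varies only in four things:

- the Oracle home;
- the node list;
- `CRS=true`, added for the Grid home;
- `-local`, added on the node being removed.

A small hypothetical helper that prints the right variant (it does not run anything):

```shell
# update_node_list_cmd HOME NODES [crs] [local]
#   HOME  : Oracle home to update (database home or Grid home)
#   NODES : comma-separated node list, e.g. "rac3" or "rac1,rac2"
#   crs   : append CRS=true (for the Grid Infrastructure home)
#   local : append -local (update only this node's inventory)
update_node_list_cmd() {
  home="$1"; nodes="$2"; shift 2
  cmd="$home/oui/bin/runInstaller -updateNodeList ORACLE_HOME=$home \"CLUSTER_NODES={$nodes}\""
  for opt in "$@"; do
    case "$opt" in
      crs)   cmd="$cmd CRS=true" ;;
      local) cmd="$cmd -local" ;;
      *)     echo "unknown option: $opt" >&2; return 2 ;;
    esac
  done
  printf '%s\n' "$cmd"
}
```

The helper reproduces each step's command as follows:

- Step 7: `update_node_list_cmd "$ORACLE_HOME" rac3 local` gives the command above.
- Step 14: `update_node_list_cmd /u01/app/11.2.0/grid_1 rac3 crs local` gives the grid form.
- Step 16: `update_node_list_cmd /u01/app/11.2.0/grid_1 rac1,rac2 crs` gives the surviving-node form.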

8. Remove the database software from rac3

rac3:/home/oracle@rac3>$ORACLE_HOME/deinstall/deinstall -local

Checking for required files and bootstrapping ...

Please wait ...

Location of logs /u01/app/oraInventory/logs/

############ ORACLE DEINSTALL & DECONFIG TOOL START ############

######################### CHECK OPERATION START #########################

## [START] Install check configuration ##

Checking for existence of the Oracle home location /u01/app/oracle/product/11.2.0/db_1

Oracle Home type selected for deinstall is: Oracle Real Application Cluster Database

Oracle Base selected for deinstall is: /u01/app/oracle

Checking for existence of central inventory location /u01/app/oraInventory

Checking for existence of the Oracle Grid Infrastructure home /u01/app/11.2.0/grid_1

The following nodes are part of this cluster: rac3

Checking for sufficient temp space availability on node(s) : 'rac3'

## [END] Install check configuration ##

Network Configuration check config START

Network de-configuration trace file location: /u01/app/oraInventory/logs/netdc_check2017-03-13_02-25-52-PM.log

Network Configuration check config END

Database Check Configuration START

Database de-configuration trace file location: /u01/app/oraInventory/logs/databasedc_check2017-03-13_02-25-57-PM.log

Database Check Configuration END

Enterprise Manager Configuration Assistant START

EMCA de-configuration trace file location: /u01/app/oraInventory/logs/emcadc_check2017-03-13_02-26-01-PM.log

Enterprise Manager Configuration Assistant END

Oracle Configuration Manager check START

OCM check log file location : /u01/app/oraInventory/logs//ocm_check2384.log

Oracle Configuration Manager check END

######################### CHECK OPERATION END #########################

####################### CHECK OPERATION SUMMARY #######################

Oracle Grid Infrastructure Home is: /u01/app/11.2.0/grid_1

The cluster node(s) on which the Oracle home deinstallation will be performed are:rac3

Since -local option has been specified, the Oracle home will be deinstalled only on the local node, 'rac3', and the global configuration will be removed.

Oracle Home selected for deinstall is: /u01/app/oracle/product/11.2.0/db_1

Inventory Location where the Oracle home registered is: /u01/app/oraInventory

The option -local will not modify any database configuration for this Oracle home.

No Enterprise Manager configuration to be updated for any database(s)

No Enterprise Manager ASM targets to update

No Enterprise Manager listener targets to migrate

Checking the config status for CCR

Oracle Home exists with CCR directory, but CCR is not configured

CCR check is finished

Do you want to continue (y - yes, n - no)? [n]: y

A log of this session will be written to: '/u01/app/oraInventory/logs/deinstall_deconfig2017-03-13_02-25-43-PM.out'

Any error messages from this session will be written to: '/u01/app/oraInventory/logs/deinstall_deconfig2017-03-13_02-25-43-PM.err'

######################## CLEAN OPERATION START ########################

Enterprise Manager Configuration Assistant START

EMCA de-configuration trace file location: /u01/app/oraInventory/logs/emcadc_clean2017-03-13_02-26-01-PM.log

Updating Enterprise Manager ASM targets (if any)

Updating Enterprise Manager listener targets (if any)

Enterprise Manager Configuration Assistant END

Database de-configuration trace file location: /u01/app/oraInventory/logs/databasedc_clean2017-03-13_02-26-14-PM.log

Network Configuration clean config START

Network de-configuration trace file location: /u01/app/oraInventory/logs/netdc_clean2017-03-13_02-26-14-PM.log

De-configuring Local Net Service Names configuration file...

Local Net Service Names configuration file de-configured successfully.

De-configuring backup files...

Backup files de-configured successfully.

The network configuration has been cleaned up successfully.

Network Configuration clean config END

Oracle Configuration Manager clean START

OCM clean log file location : /u01/app/oraInventory/logs//ocm_clean2384.log

Oracle Configuration Manager clean END

Setting the force flag to false

Setting the force flag to cleanup the Oracle Base

Oracle Universal Installer clean START

Detach Oracle home '/u01/app/oracle/product/11.2.0/db_1' from the central inventory on the local node : Done

Delete directory '/u01/app/oracle/product/11.2.0/db_1' on the local node : Done

Failed to delete the directory '/u01/app/oracle'. The directory is in use.

Delete directory '/u01/app/oracle' on the local node : Failed <<<<

Oracle Universal Installer cleanup completed with errors.

Oracle Universal Installer clean END

## [START] Oracle install clean ##

Clean install operation removing temporary directory '/tmp/deinstall2017-03-13_02-25-14PM' on node 'rac3'

## [END] Oracle install clean ##

######################### CLEAN OPERATION END #########################

####################### CLEAN OPERATION SUMMARY #######################

Cleaning the config for CCR

As CCR is not configured, so skipping the cleaning of CCR configuration

CCR clean is finished

Successfully detached Oracle home '/u01/app/oracle/product/11.2.0/db_1' from the central inventory on the local node.

Successfully deleted directory '/u01/app/oracle/product/11.2.0/db_1' on the local node.

Failed to delete directory '/u01/app/oracle' on the local node.

Oracle Universal Installer cleanup completed with errors.

Oracle deinstall tool successfully cleaned up temporary directories.

#######################################################################

############# ORACLE DEINSTALL & DECONFIG TOOL END #############

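After this completes, the files under $ORACLE_HOME on rac3 have been deleted. The only error is that the Oracle base /u01/app/oracle could not be removed because it was still in use. The Oracle home itself was detached and deleted successfully.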

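9. From any surviving node, stop the nodeapps of rac3

rac1:/home/oracle@rac1>srvctl stop nodeapps -n rac3 -f // the ONS and VIP on rac3 turned out to be already stopped

10. Run the following on each surviving node (as oracle):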

rac1:/home/oracle@rac1>$ORACLE_HOME/oui/bin/runInstaller -updateNodeList ORACLE_HOME=$ORACLE_HOME "CLUSTER_NODES={rac1,rac2}"

Starting Oracle Universal Installer...

Checking swap space: must be greater than 500 MB. Actual 3194 MB Passed

The inventory pointer is located at /etc/oraInst.loc

The inventory is located at /u01/app/oraInventory

'UpdateNodeList' was successful.

rac2:/home/oracle@rac2>$ORACLE_HOME/oui/bin/runInstaller -updateNodeList ORACLE_HOME=$ORACLE_HOME "CLUSTER_NODES={rac1,rac2}"

Starting Oracle Universal Installer...

Checking swap space: must be greater than 500 MB. Actual 3159 MB Passed

The inventory pointer is located at /etc/oraInst.loc

The inventory is located at /u01/app/oraInventory

'UpdateNodeList' was successful.
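To double-check the result, look at the central inventory. The node list of each home is recorded there, normally in `ContentsXML/inventory.xml` under the inventory location reported above (/u01/app/oraInventory).

The sketch below prints the nodes registered for one home. It assumes each `<HOME ...>` element sits on its own line with `<NODE NAME="..."/>` children, as in typical 11.2 inventories. The function name is hypothetical:

```shell
# home_nodes LOC: read inventory.xml on stdin and print the node names
# registered under the <HOME ... LOC="LOC"> element, one per line.
home_nodes() {
  awk -v loc="$1" '
    # Enter the matching HOME block (a self-closing <HOME .../> has no nodes).
    index($0, "<HOME ") && index($0, "LOC=\"" loc "\"") { inhome = !index($0, "/>"); next }
    inhome && index($0, "</HOME>") { inhome = 0 }
    # <NODE NAME=" is 12 characters; strip it and the closing quote.
    inhome && match($0, /<NODE NAME="[^"]*"/) { print substr($0, RSTART + 12, RLENGTH - 13) }
  '
}
```

Usage: `home_nodes /u01/app/oracle/product/11.2.0/db_1 < /u01/app/oraInventory/ContentsXML/inventory.xml`. After this step it should print only rac1 and rac2.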

11. Deconfigure the Clusterware stack on rac3 (as root)

[root@rac3 db_1]# /u01/app/11.2.0/grid_1/crs/install/rootcrs.pl -deconfig -force

Using configuration parameter file: /u01/app/11.2.0/grid_1/crs/install/crsconfig_params

shell-init: error retrieving current directory: getcwd: cannot access parent directories: No such file or directory

Error occurred during initialization of VM

java.lang.Error: Properties init: Could not determine current working directory.

shell-init: error retrieving current directory: getcwd: cannot access parent directories: No such file or directory

CRS-2673: Attempting to stop 'ora.registry.acfs' on 'rac3'

CRS-2677: Stop of 'ora.registry.acfs' on 'rac3' succeeded

shell-init: error retrieving current directory: getcwd: cannot access parent directories: No such file or directory

2017-03-13 14:37:29.128:

CLSD:An error was encountered while attempting to open log file "UNKNOWN". Additional diagnostics: (:CLSD00157:)

CRS-2791: Starting shutdown of Oracle High Availability Services-managed resources on 'rac3'

CRS-2673: Attempting to stop 'ora.crsd' on 'rac3'

CRS-2790: Starting shutdown of Cluster Ready Services-managed resources on 'rac3'

CRS-2673: Attempting to stop 'ora.DATA.dg' on 'rac3'

CRS-2673: Attempting to stop 'ora.FRA_ARC.dg' on 'rac3'

CRS-2673: Attempting to stop 'ora.OCR_VOTING_NEW.dg' on 'rac3'

CRS-2673: Attempting to stop 'ora.ACFS.dg' on 'rac3'

CRS-2673: Attempting to stop 'ora.oc4j' on 'rac3'

CRS-2677: Stop of 'ora.FRA_ARC.dg' on 'rac3' succeeded

CRS-2677: Stop of 'ora.DATA.dg' on 'rac3' succeeded

CRS-2677: Stop of 'ora.ACFS.dg' on 'rac3' succeeded

CRS-2677: Stop of 'ora.OCR_VOTING_NEW.dg' on 'rac3' succeeded

CRS-2673: Attempting to stop 'ora.asm' on 'rac3'

CRS-2677: Stop of 'ora.asm' on 'rac3' succeeded

CRS-2677: Stop of 'ora.oc4j' on 'rac3' succeeded

CRS-2672: Attempting to start 'ora.oc4j' on 'rac2'

CRS-2676: Start of 'ora.oc4j' on 'rac2' succeeded

CRS-2792: Shutdown of Cluster Ready Services-managed resources on 'rac3' has completed

CRS-2677: Stop of 'ora.crsd' on 'rac3' succeeded

CRS-2673: Attempting to stop 'ora.mdnsd' on 'rac3'

CRS-2673: Attempting to stop 'ora.ctssd' on 'rac3'

CRS-2673: Attempting to stop 'ora.evmd' on 'rac3'

CRS-2673: Attempting to stop 'ora.asm' on 'rac3'

CRS-2677: Stop of 'ora.evmd' on 'rac3' succeeded

CRS-2677: Stop of 'ora.mdnsd' on 'rac3' succeeded

CRS-2677: Stop of 'ora.ctssd' on 'rac3' succeeded

CRS-2677: Stop of 'ora.asm' on 'rac3' succeeded

CRS-2673: Attempting to stop 'ora.cluster_interconnect.haip' on 'rac3'

CRS-2677: Stop of 'ora.cluster_interconnect.haip' on 'rac3' succeeded

CRS-2673: Attempting to stop 'ora.cssd' on 'rac3'

CRS-2677: Stop of 'ora.cssd' on 'rac3' succeeded

CRS-2673: Attempting to stop 'ora.crf' on 'rac3'

CRS-2677: Stop of 'ora.crf' on 'rac3' succeeded

CRS-2673: Attempting to stop 'ora.gipcd' on 'rac3'

CRS-2677: Stop of 'ora.gipcd' on 'rac3' succeeded

CRS-2673: Attempting to stop 'ora.gpnpd' on 'rac3'

CRS-2677: Stop of 'ora.gpnpd' on 'rac3' succeeded

CRS-2793: Shutdown of Oracle High Availability Services-managed resources on 'rac3' has completed

CRS-4133: Oracle High Availability Services has been stopped.

shell-init: error retrieving current directory: getcwd: cannot access parent directories: No such file or directory

chdir: error retrieving current directory: getcwd: cannot access parent directories: No such file or directory

Removing Trace File Analyzer

Successfully deconfigured Oracle clusterware stack on this node

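At this point the Clusterware processes on rac3 have been stopped.

The shell-init/getcwd and "Could not determine current working directory" errors above have a simple cause. This root shell was still in the db_1 directory that step 8 had deleted (note the [root@rac3 db_1]# prompt). Running cd / before rootcrs.pl avoids these errors. Here they did not prevent the deconfiguration from succeeding.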
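The errors come from a working directory that no longer exists: the prompt shows root still in db_1. A small guard helps. It checks that the current directory exists and runs commands such as rootcrs.pl from `/` in a subshell. Function names are hypothetical:

```shell
# cwd_exists: succeed if the shell's current directory still exists.
# A shell left inside the deleted db_1 directory fails this check.
cwd_exists() {
  [ -d "$PWD" ]
}

# run_from_root_dir CMD...: run CMD from "/" in a subshell, so tools that
# call getcwd() (shell-init, the JVM) get a valid working directory.
run_from_root_dir() {
  ( cd / && "$@" )
}
```

Usage, as root on rac3: `cwd_exists || cd /`, or `run_from_root_dir /u01/app/11.2.0/grid_1/crs/install/rootcrs.pl -deconfig -force`.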

[root@rac3 ~]# su - grid

+ASM3:/home/grid@rac3>crs_stat -t

CRS-0184: Cannot communicate with the CRS daemon.

12. Remove the VIP of rac3

If the previous step went smoothly, the rac3 VIP has already been removed at this point. Verify with crs_stat -t on any surviving node:

+ASM1:/home/grid@rac1>crs_stat -t

Name Type Target State Host

------------------------------------------------------------

ora.ACFS.dg ora....up.type ONLINE ONLINE rac1

ora.DATA.dg ora....up.type ONLINE ONLINE rac1

ora.FRA_ARC.dg ora....up.type ONLINE ONLINE rac1

ora....ER.lsnr ora....er.type ONLINE ONLINE rac1

ora....N1.lsnr ora....er.type ONLINE ONLINE rac1

ora...._NEW.dg ora....up.type ONLINE ONLINE rac1

ora.asm ora.asm.type ONLINE ONLINE rac1

ora.cvu ora.cvu.type ONLINE ONLINE rac2

ora.gsd ora.gsd.type OFFLINE OFFLINE

ora....network ora....rk.type ONLINE ONLINE rac1

ora.oc4j ora.oc4j.type ONLINE ONLINE rac2

ora.ons ora.ons.type ONLINE ONLINE rac1

ora.rac.db ora....se.type ONLINE ONLINE rac1

ora....SM1.asm application ONLINE ONLINE rac1

ora....C1.lsnr application ONLINE ONLINE rac1

ora.rac1.gsd application OFFLINE OFFLINE

ora.rac1.ons application ONLINE ONLINE rac1

ora.rac1.vip ora....t1.type ONLINE ONLINE rac1

ora....SM2.asm application ONLINE ONLINE rac2

ora....C2.lsnr application ONLINE ONLINE rac2

ora.rac2.gsd application OFFLINE OFFLINE

ora.rac2.ons application ONLINE ONLINE rac2

ora.rac2.vip ora....t1.type ONLINE ONLINE rac2

ora.rac3.vip ora....t1.type OFFLINE OFFLINE

ora....ry.acfs ora....fs.type ONLINE ONLINE rac1

ora.scan1.vip ora....ip.type ONLINE ONLINE rac1

If a VIP resource for rac3 is still listed (here ora.rac3.vip still shows as OFFLINE), remove it as follows:

rac1:/home/oracle@rac1>srvctl stop vip -i ora.rac3.vip -f

rac1:/home/oracle@rac1>srvctl remove vip -i ora.rac3.vip -f

[root@rac1 ~]# crsctl delete resource ora.rac3.vip -f
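The VIP cleanup can be sketched as two helpers:

- `vip_resources` finds a leftover VIP for a node in `crs_stat -t` output;
- `vip_cleanup_cmds` prints the three commands above in order (dry run).

`crs_stat -t` abbreviates long names (for example `ora....t1.type`). Matching on the full name therefore only works for short names such as `ora.rac3.vip`. The function names are hypothetical:

```shell
# vip_resources NODE: read `crs_stat -t` output on stdin and print the VIP
# resource that belongs to NODE (ora.NODE.vip), if it is still registered.
vip_resources() {
  awk -v r="ora.$1.vip" '$1 == r { print $1 }'
}

# vip_cleanup_cmds NODE: print the cleanup commands used above, in order.
# Run the two srvctl commands as oracle and the crsctl command as root.
vip_cleanup_cmds() {
  vip="ora.$1.vip"
  printf 'srvctl stop vip -i %s -f\n' "$vip"
  printf 'srvctl remove vip -i %s -f\n' "$vip"
  printf 'crsctl delete resource %s -f\n' "$vip"
}
```

For example: `crs_stat -t | vip_resources rac3 | grep . >/dev/null && vip_cleanup_cmds rac3`.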

13. From any surviving node, delete rac3 from the cluster

[root@rac1 bin]# cd /u01/app/11.2.0/grid_1/bin/

[root@rac1 bin]# ./crsctl delete node -n rac3

CRS-4661: Node rac3 successfully deleted.

[root@rac1 bin]# ./olsnodes -t -s

rac1 Active Unpinned

rac2 Active Unpinned

14. On rac3, update the inventory node list of the Grid home as the grid user

+ASM3:/home/grid@rac3>$ORACLE_HOME/oui/bin/runInstaller -updateNodeList ORACLE_HOME=$ORACLE_HOME "CLUSTER_NODES={rac3}" CRS=true -local

Starting Oracle Universal Installer...

Checking swap space: must be greater than 500 MB. Actual 3988 MB Passed

The inventory pointer is located at /etc/oraInst.loc

The inventory is located at /u01/app/oraInventory

'UpdateNodeList' was successful.

15. Remove the Grid Infrastructure software from rac3

+ASM3:/home/grid@rac3>$ORACLE_HOME/deinstall/deinstall -local

Checking for required files and bootstrapping ...

Please wait ...

Location of logs /tmp/deinstall2017-03-16_02-13-37PM/logs/

############ ORACLE DEINSTALL & DECONFIG TOOL START ############

######################### CHECK OPERATION START #########################

## [START] Install check configuration ##

Checking for existence of the Oracle home location /u01/app/11.2.0/grid_1

Oracle Home type selected for deinstall is: Oracle Grid Infrastructure for a Cluster

Oracle Base selected for deinstall is: /u01/app/grid

Checking for existence of central inventory location /u01/app/oraInventory

Checking for existence of the Oracle Grid Infrastructure home

The following nodes are part of this cluster: rac3

Checking for sufficient temp space availability on node(s) : 'rac3'

## [END] Install check configuration ##

Traces log file: /tmp/deinstall2017-03-16_02-13-37PM/logs//crsdc.log

Enter an address or the name of the virtual IP used on node "rac3"[rac3-vip]

>

The following information can be collected by running "/sbin/ifconfig -a" on node "rac3"

Enter the IP netmask of Virtual IP "192.168.180.11" on node "rac3"[255.255.255.0]

>

Enter the network interface name on which the virtual IP address "192.168.180.11" is active

>

Enter an address or the name of the virtual IP[]

>

Network Configuration check config START

Network de-configuration trace file location: /tmp/deinstall2017-03-16_02-13-37PM/logs/netdc_check2017-03-16_02-14-56-PM.log

Specify all RAC listeners (do not include SCAN listener) that are to be de-configured [LISTENER,LISTENER_SCAN1]:

Network Configuration check config END

Asm Check Configuration START

ASM de-configuration trace file location: /tmp/deinstall2017-03-16_02-13-37PM/logs/asmcadc_check2017-03-16_02-15-00-PM.log

######################### CHECK OPERATION END #########################

####################### CHECK OPERATION SUMMARY #######################

Oracle Grid Infrastructure Home is:

The cluster node(s) on which the Oracle home deinstallation will be performed are:rac3

Since -local option has been specified, the Oracle home will be deinstalled only on the local node, 'rac3', and the global configuration will be removed.

Oracle Home selected for deinstall is: /u01/app/11.2.0/grid_1

Inventory Location where the Oracle home registered is: /u01/app/oraInventory

Following RAC listener(s) will be de-configured: LISTENER,LISTENER_SCAN1

Option -local will not modify any ASM configuration.

Do you want to continue (y - yes, n - no)? [n]:

Exited from program.

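############# ORACLE DEINSTALL & DECONFIG TOOL END #############

(Pressing Enter at the confirmation prompt accepted the default answer n, so this first run exited without changing anything. The tool was then run again and answered with y:)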

+ASM3:/home/grid@rac3>$ORACLE_HOME/deinstall/deinstall -local

Checking for required files and bootstrapping ...

Please wait ...

Location of logs /tmp/deinstall2017-03-16_02-15-44PM/logs/

############ ORACLE DEINSTALL & DECONFIG TOOL START ############

######################### CHECK OPERATION START #########################

## [START] Install check configuration ##

Checking for existence of the Oracle home location /u01/app/11.2.0/grid_1

Oracle Home type selected for deinstall is: Oracle Grid Infrastructure for a Cluster

Oracle Base selected for deinstall is: /u01/app/grid

Checking for existence of central inventory location /u01/app/oraInventory

Checking for existence of the Oracle Grid Infrastructure home

The following nodes are part of this cluster: rac3

Checking for sufficient temp space availability on node(s) : 'rac3'

## [END] Install check configuration ##

Traces log file: /tmp/deinstall2017-03-16_02-15-44PM/logs//crsdc.log

Enter an address or the name of the virtual IP used on node "rac3"[rac3-vip]

>

The following information can be collected by running "/sbin/ifconfig -a" on node "rac3"

Enter the IP netmask of Virtual IP "192.168.180.11" on node "rac3"[255.255.255.0]

>

Enter the network interface name on which the virtual IP address "192.168.180.11" is active

>

Enter an address or the name of the virtual IP[]

>

Network Configuration check config START

Network de-configuration trace file location: /tmp/deinstall2017-03-16_02-15-44PM/logs/netdc_check2017-03-16_02-16-13-PM.log

Specify all RAC listeners (do not include SCAN listener) that are to be de-configured [LISTENER,LISTENER_SCAN1]:

Network Configuration check config END

Asm Check Configuration START

ASM de-configuration trace file location: /tmp/deinstall2017-03-16_02-15-44PM/logs/asmcadc_check2017-03-16_02-16-18-PM.log

######################### CHECK OPERATION END #########################

####################### CHECK OPERATION SUMMARY #######################

Oracle Grid Infrastructure Home is:

The cluster node(s) on which the Oracle home deinstallation will be performed are:rac3

Since -local option has been specified, the Oracle home will be deinstalled only on the local node, 'rac3', and the global configuration will be removed.

Oracle Home selected for deinstall is: /u01/app/11.2.0/grid_1

Inventory Location where the Oracle home registered is: /u01/app/oraInventory

Following RAC listener(s) will be de-configured: LISTENER,LISTENER_SCAN1

Option -local will not modify any ASM configuration.

Do you want to continue (y - yes, n - no)? [n]: y

A log of this session will be written to: '/tmp/deinstall2017-03-16_02-15-44PM/logs/deinstall_deconfig2017-03-16_02-16-00-PM.out'

Any error messages from this session will be written to: '/tmp/deinstall2017-03-16_02-15-44PM/logs/deinstall_deconfig2017-03-16_02-16-00-PM.err'

######################## CLEAN OPERATION START ########################

ASM de-configuration trace file location: /tmp/deinstall2017-03-16_02-15-44PM/logs/asmcadc_clean2017-03-16_02-16-20-PM.log

ASM Clean Configuration END

Network Configuration clean config START

Network de-configuration trace file location: /tmp/deinstall2017-03-16_02-15-44PM/logs/netdc_clean2017-03-16_02-16-20-PM.log

De-configuring RAC listener(s): LISTENER,LISTENER_SCAN1

De-configuring listener: LISTENER

Stopping listener on node "rac3": LISTENER

Warning: Failed to stop listener. Listener may not be running.

Listener de-configured successfully.

De-configuring listener: LISTENER_SCAN1

Stopping listener on node "rac3": LISTENER_SCAN1

Warning: Failed to stop listener. Listener may not be running.

Listener de-configured successfully.

De-configuring backup files...

Backup files de-configured successfully.

The network configuration has been cleaned up successfully.

Network Configuration clean config END

---------------------------------------->

The deconfig command below can be executed in parallel on all the remote nodes. Execute the command on the local node after the execution completes on all the remote nodes.

Remove the directory: /tmp/deinstall2017-03-16_02-15-44PM on node:

Setting the force flag to false

Setting the force flag to cleanup the Oracle Base

Oracle Universal Installer clean START

Detach Oracle home '/u01/app/11.2.0/grid_1' from the central inventory on the local node : Done

Delete directory '/u01/app/11.2.0/grid_1' on the local node : Done

Delete directory '/u01/app/oraInventory' on the local node : Done

Failed to delete the directory '/u01/app/grid/oradiag_root/diag/clients/user_root/host_333714774_80/metadata_pv'. The directory is in use.

Failed to delete the directory '/u01/app/grid/oradiag_root/diag/clients/user_root/host_333714774_80/incident'. The directory is in use.

Failed to delete the directory '/u01/app/grid/oradiag_root/diag/clients/user_root/host_333714774_80/metadata_dgif'. The directory is in use.

Failed to delete the directory '/u01/app/grid/oradiag_root/diag/clients/user_root/host_333714774_80/cdump'. The directory is in use.

Failed to delete the directory '/u01/app/grid/oradiag_root/diag/clients/user_root/host_333714774_80/stage'. The directory is in use.

Failed to delete the directory '/u01/app/grid/oradiag_root/diag/clients/user_root/host_333714774_80/incpkg'. The directory is in use.

Failed to delete the directory '/u01/app/grid/oradiag_root/diag/clients/user_root/host_333714774_80/sweep'. The directory is in use.

The Oracle Base directory '/u01/app/grid' will not be removed on local node. The directory is not empty.

Oracle Universal Installer cleanup was successful.

Oracle Universal Installer clean END

## [START] Oracle install clean ##

Clean install operation removing temporary directory '/tmp/deinstall2017-03-16_02-15-44PM' on node 'rac3'

## [END] Oracle install clean ##

######################### CLEAN OPERATION END #########################

####################### CLEAN OPERATION SUMMARY #######################

Following RAC listener(s) were de-configured successfully: LISTENER,LISTENER_SCAN1

Oracle Clusterware was already stopped and de-configured on node "rac3"

Oracle Clusterware is stopped and de-configured successfully.

Successfully detached Oracle home '/u01/app/11.2.0/grid_1' from the central inventory on the local node.

Successfully deleted directory '/u01/app/11.2.0/grid_1' on the local node.

Successfully deleted directory '/u01/app/oraInventory' on the local node.

Oracle Universal Installer cleanup was successful.

Run 'rm -rf /etc/oraInst.loc' as root on node(s) 'rac3' at the end of the session.

Run 'rm -rf /opt/ORCLfmap' as root on node(s) 'rac3' at the end of the session.

Run 'rm -rf /etc/oratab' as root on node(s) 'rac3' at the end of the session.

Oracle deinstall tool successfully cleaned up temporary directories.

#######################################################################

############# ORACLE DEINSTALL & DECONFIG TOOL END #############

+ASM3:/home/grid@rac3>

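Note that at the end the tool asks you to run a few commands as root on rac3 (the rm -rf lines above); simply run them as instructed.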
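The root commands can be pulled out of the deinstall output instead of being copied by hand. This is a minimal sketch that assumes the `Run '<command>' as root on node(s) ...` line format shown above. `root_cleanup_cmds` is hypothetical, and it only prints the commands so they can be reviewed before being run as root:

```shell
# root_cleanup_cmds: read deinstall output on stdin and print each command
# from lines of the form: Run '<command>' as root on node(s) '<nodes>' ...
root_cleanup_cmds() {
  sed -n "s/^Run '\\(.*\\)' as root on node(s) .*/\\1/p"
}
```

For example, `root_cleanup_cmds < saved_output.txt`, where saved_output.txt (a hypothetical file) holds the copied summary. The temporary log directory under /tmp is deleted by the tool itself, so save the terminal output before it scrolls away.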

16. On the surviving nodes, update the inventory node list of the Grid home as the grid user

[root@rac1 ~]# su - grid

+ASM1:/home/grid@rac1>$ORACLE_HOME/oui/bin/runInstaller -updateNodeList ORACLE_HOME=$ORACLE_HOME "CLUSTER_NODES={rac1,rac2}" CRS=true

Starting Oracle Universal Installer...

Checking swap space: must be greater than 500 MB. Actual 3183 MB Passed

The inventory pointer is located at /etc/oraInst.loc

The inventory is located at /u01/app/oraInventory

'UpdateNodeList' was successful.

[root@rac2 ~]# su - grid

+ASM2:/home/grid@rac2>$ORACLE_HOME/oui/bin/runInstaller -updateNodeList ORACLE_HOME=$ORACLE_HOME "CLUSTER_NODES={rac1,rac2}" CRS=true

Starting Oracle Universal Installer...

Checking swap space: must be greater than 500 MB. Actual 3156 MB Passed

The inventory pointer is located at /etc/oraInst.loc

The inventory is located at /u01/app/oraInventory

'UpdateNodeList' was successful.

17. Verify that rac3 has been removed

Run on any surviving node:

+ASM1:/home/grid@rac1>cluvfy stage -post nodedel -n rac3

Performing post-checks for node removal

Checking CRS integrity...

Clusterware version consistency passed

CRS integrity check passed

Node removal check passed

Post-check for node removal was successful.

+ASM1:/home/grid@rac1>crsctl stat res -t

--------------------------------------------------------------------------------

NAME TARGET STATE SERVER STATE_DETAILS

--------------------------------------------------------------------------------

Local Resources

--------------------------------------------------------------------------------

ora.ACFS.dg

ONLINE ONLINE rac1

ONLINE ONLINE rac2

ora.DATA.dg

ONLINE ONLINE rac1

ONLINE ONLINE rac2

ora.FRA_ARC.dg

ONLINE ONLINE rac1

ONLINE ONLINE rac2

ora.LISTENER.lsnr

ONLINE OFFLINE rac1

ONLINE OFFLINE rac2

ora.OCR_VOTING_NEW.dg

ONLINE ONLINE rac1

ONLINE ONLINE rac2

ora.asm

ONLINE ONLINE rac1 Started

ONLINE ONLINE rac2 Started

ora.gsd

OFFLINE OFFLINE rac1

OFFLINE OFFLINE rac2

ora.net1.network

OFFLINE OFFLINE rac1

OFFLINE OFFLINE rac2

ora.ons

OFFLINE OFFLINE rac1

OFFLINE OFFLINE rac2

ora.registry.acfs

ONLINE ONLINE rac1

ONLINE ONLINE rac2

--------------------------------------------------------------------------------

Cluster Resources

--------------------------------------------------------------------------------

ora.LISTENER_SCAN1.lsnr

1 OFFLINE OFFLINE

ora.cvu

1 OFFLINE OFFLINE

ora.oc4j

1 ONLINE ONLINE rac2

ora.rac.db

1 ONLINE ONLINE rac1 Open

2 ONLINE ONLINE rac2 Open

ora.rac1.vip

1 OFFLINE OFFLINE

ora.rac2.vip

1 OFFLINE OFFLINE

ora.rac3.vip

1 OFFLINE OFFLINE

ora.scan1.vip

1 OFFLINE OFFLINE
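In the output above, ora.rac3.vip is still registered (OFFLINE). The sketch below scans `crsctl stat res -t` output for any resource still tied to a node, either by name or by the server column, so such leftovers are easy to spot. It assumes resource names start at column one with `ora.`, as shown above. The function name is hypothetical:

```shell
# leftover_for_node NODE: read `crsctl stat res -t` output on stdin and print
# each resource that still mentions NODE, either in its name (ora.NODE.vip)
# or as the server on one of its state lines. Output order is unspecified.
leftover_for_node() {
  awk -v n="$1" '
    /^ora\./ { res = $1; if (index(res, "." n ".")) hits[res] = 1; next }
    { for (i = 1; i <= NF; i++) if ($i == n && res != "") hits[res] = 1 }
    END { for (r in hits) print r }
  '
}
```

`crsctl stat res -t | leftover_for_node rac3 | sort` prints `ora.rac3.vip` for the output above. Once the VIP removal commands from step 12 have been run, it should print nothing.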

Finally, check the active instances:

SQL> select thread#,status,instance from v$thread;

THREAD# STATUS INSTANCE

---------- ------ --------

1 OPEN rac1

2 OPEN rac2

SQL>

This completes the removal of a node from the RAC cluster.
