文章目录

Join操作

连接数据准备

- 数据准备

[yao@master data]$ vim studenta.txt

[yao@master data]$ vim studentb.txt

数据内容

//studenta.txt

10001 shiny

10002 mark

10003 angel

10005 ella

10009 jack

10014 eva

10018 judy

10020 cendy

//studentb.txt

10001 23

10004 22

10007 24

10008 21

10009 25

10012 25

10015 20

10018 19

10020 26

- 创建表studenta和表studentb

hive> use yr_test;

OK

Time taken: 1.022 seconds

hive> create table studenta(

> id int,name string)

> row format delimited fields terminated by '\t'

> stored as textfile;

OK

Time taken: 2.258 seconds

hive> create table studentb(

> id int,name string)

> row format delimited fields terminated by '\t'

> stored as textfile;

OK

Time taken: 0.092 seconds

- 加载数据

hive> load data local inpath '/home/yao/data/studenta.txt' overwrite into table studenta;

Loading data to table yr_test.studenta

Table yr_test.studenta stats: [numFiles=1, numRows=0, totalSize=90, rawDataSize=0]

OK

Time taken: 4.047 seconds

hive> load data local inpath '/home/yao/data/studentb.txt' overwrite into table studentb;

Loading data to table yr_test.studentb

Table yr_test.studentb stats: [numFiles=1, numRows=0, totalSize=81, rawDataSize=0]

OK

Time taken: 0.612 seconds

1 内连接 JOIN

把符合两边条件的数据查找出来

hive> select * from studenta a join studentb b on a.id=b.id;

//结果

Total MapReduce CPU Time Spent: 3 seconds 690 msec

OK

10001 shiny 10001 23

10009 jack 10009 25

10018 judy 10018 19

10020 cendy 10020 26

2 外连接 OUTER JOIN

- 左外连接 LEFT JOIN

1.以左表数据为匹配标准,左大右小

2.匹配不上的就是null

3.返回的数据条数与左表相同

hive> select * from studenta a left join studentb b on a.id=b.id;

//结果

Total MapReduce CPU Time Spent: 2 seconds 570 msec

OK

10001 shiny 10001 23

10002 mark NULL NULL

10003 angel NULL NULL

10005 ella NULL NULL

10009 jack 10009 25

10014 eva NULL NULL

10018 judy 10018 19

10020 cendy 10020 26

- 右外连接 RIGHT JOIN

1.以右表数据为匹配标准,右大左小

2.匹配不上的就是null

3.返回的数据条数与右表相同

hive> select * from studenta a right join studentb b on a.id=b.id;

//结果

Total MapReduce CPU Time Spent: 2 seconds 670 msec

OK

10001 shiny 10001 23

NULL NULL 10004 22

NULL NULL 10007 24

NULL NULL 10008 21

10009 jack 10009 25

NULL NULL 10012 25

NULL NULL 10015 20

10018 judy 10018 19

10020 cendy 10020 26

- 全外连接 FULL JOIN

1.以两个表数据为匹配标准

2.匹配不上的就是null

3.返回的数据条数等于两表数据去重之和

hive> select * from studenta a full join studentb b on a.id=b.id;

//结果

Total MapReduce CPU Time Spent: 8 seconds 50 msec

OK

10001 shiny 10001 23

10002 mark NULL NULL

10003 angel NULL NULL

NULL NULL 10004 22

10005 ella NULL NULL

NULL NULL 10007 24

NULL NULL 10008 21

10009 jack 10009 25

NULL NULL 10012 25

10014 eva NULL NULL

NULL NULL 10015 20

10018 judy 10018 19

10020 cendy 10020 26

3 左半连接 LEFT SEMI

1.把符合两边连接条件的左表数据显示出来

2.右表只能在ON子句中设置过滤条件,在where子句、select子句或者其他地方过滤都不行

因为如果连接语句中有WHERE子句,会先执行JOIN子句,再执行WHERE子句。

hive> select * from studenta a left semi join studentb b on a.id=b.id;

//结果

Total MapReduce CPU Time Spent: 3 seconds 250 msec

OK

10001 shiny

10009 jack

10018 judy

10020 cendy

数据类型

原子数据类型

数值、布尔、字符串、日期

复杂数据类型

数组、映射、结构体

复杂数据类型实例

1.array

- 数据准备

[yao@master data]$ vim array.txt

shiny 23 beijing,tianjin,qingdao

jack 34 shanghai,guangzhou

mark 26 beijing,xian

ella 21 beijing

judy 30 shanghai,hangzhou,chongqing

cendy 28 beijing,shanghai,dalian,chengdu

- 创建表并导入数据

hive> create table employee(

> name string,

> age int,

> work_locations array<string>)

> row format delimited fields terminated by '\t'

> collection items terminated by ','

> stored as textfile;

OK

Time taken: 0.639 seconds

hive> load data local inpath '/home/yao/data/array.txt' into table employee;

- 查询数据

hive> select name,age,work_locations[0] from employee;

OK

shiny 23 beijing

jack 34 shanghai

mark 26 beijing

ella 21 beijing

judy 30 shanghai

cendy 28 beijing

Time taken: 0.131 seconds, Fetched: 6 row(s)

2.MAP

- 数据准备

[yao@master data]$ vim scores.txt

shiny chinese:90,math:100,english:99

mark chinese:89,math:56,english:87

judy chinese:94,math:78,english:81

ella chinese:54,math:23,english:48

jack chinese:100,math:95,english:69

cendy chinese:67,math:83,english:45

- 创建表并导入数据

hive> create table scores(

> name string,

> scores map<string,int>)

> row format delimited fields terminated by '\t'

> collection items terminated by ','

> map keys terminated by ':'

> stored as textfile;

OK

Time taken: 0.087 seconds

hive> load data local inpath '/home/yao/data/scores.txt' into table scores;

- 查询数据

hive> select name,scores['math'] from scores;

OK

shiny 100

mark 56

judy 78

ella 23

jack 95

cendy 83

Time taken: 0.107 seconds, Fetched: 6 row(s)

hive> select name,'math',scores['math'] from scores;

OK

shiny math 100

mark math 56

judy math 78

ella math 23

jack math 95

cendy math 83

Time taken: 0.075 seconds, Fetched: 6 row(s)

hive> select name,'.学',scores['math'] from scores;

OK

shiny 数学 100

mark 数学 56

judy 数学 78

ella 数学 23

jack 数学 95

cendy 数学 83

Time taken: 0.092 seconds, Fetched: 6 row(s)

3.struct

- 数据准备

[yao@master data]$ vim coursescore.txt

1 chinese,100

2 math,98

3 english,99

4 computer,78

- 创建表并导入数据

hive> create table coursescore(

> id int,

> course struct<name:string,score:int>)

> row format delimited fields terminated by '\t'

> collection items terminated by ','

> stored as textfile;

OK

Time taken: 0.138 seconds

hive> load data local inpath '/home/yao/data/coursescore.txt' into table coursescore;

- 查询数据

hive> select * from coursescore;

OK

1 {"name":"chinese","score":100}

2 {"name":"math","score":98}

3 {"name":"english","score":99}

4 {"name":"computer","score":78}

Time taken: 0.084 seconds, Fetched: 4 row(s)

hive> select id,course.name from coursescore;

OK

1 chinese

2 math

3 english

4 computer

Time taken: 0.081 seconds, Fetched: 4 row(s)

hive> select * from coursescore;

OK

1 {"name":"chinese","score":100}

2 {"name":"math","score":98}

3 {"name":"english","score":99}

4 {"name":"computer","score":78}

Time taken: 0.084 seconds, Fetched: 4 row(s)

hive> select id,course.name,course.score from coursescore;

OK

1 chinese 100

2 math 98

3 english 99

4 computer 78

Time taken: 0.081 seconds, Fetched: 4 row(s)

函数

内置函数

- 查看内置函数

show functions;

- 查看函数的详细信息

desc function 【函数名】;

- 显示函数的扩展信息

desc function extended 【函数名】;

自定义函数

-

应用场景

当 Hive 提供的内置函数无法满足业务处理需要时,此时就可以考虑使用用户自定义函数 -

类型

(1)UDF(user-defined function):用户自定义函数作用于单个数据行,

产生一个数据行作为输出。(数学函数,字符串函数)

(2)UDAF(User- Defined Aggregation Funcation):用户自定义聚合函数**接收多个输入数据行,并产生一个输出数据行。(COUNT、MAX)

Json数据解析

1.内置函数

- 准备数据

rating.json

{"movie":"1193","rate":"5","timeStamp":"978300760","uid":"1"}

{"movie":"661","rate":"3","timeStamp":"978302109","uid":"1"}

{"movie":"914","rate":"3","timeStamp":"978301968","uid":"1"}

{"movie":"3408","rate":"4","timeStamp":"978300275","uid":"1"}

{"movie":"2355","rate":"5","timeStamp":"978824291","uid":"1"}

{"movie":"1197","rate":"3","timeStamp":"978302268","uid":"1"}

{"movie":"1287","rate":"5","timeStamp":"978302039","uid":"1"}

{"movie":"2804","rate":"5","timeStamp":"978300719","uid":"1"}

{"movie":"594","rate":"4","timeStamp":"978302268","uid":"1"}

...

- 创建表并加载数据

hive> create table rat_json(line string) row format delimited;

OK

Time taken: 0.06 seconds

hive> load data local inpath '/home/yao/data/rating.json' into table rat_json;

Loading data to table yr_test.rat_json

Table yr_test.rat_json stats: [numFiles=1, totalSize=65602705]

OK

Time taken: 0.961 seconds

hive> select * from rat_json limit 5;

OK

{"movie":"1193","rate":"5","timeStamp":"978300760","uid":"1"}

{"movie":"661","rate":"3","timeStamp":"978302109","uid":"1"}

{"movie":"914","rate":"3","timeStamp":"978301968","uid":"1"}

{"movie":"3408","rate":"4","timeStamp":"978300275","uid":"1"}

{"movie":"2355","rate":"5","timeStamp":"978824291","uid":"1"}

- 创建存放数据的表

需要解析Json数据成四个字段,插入一张新的表 rate(用于存放处理的数据,需要有四个字段)

hive> create table rate(

> movie int,

> rate int,

> ts int,

> userid int)

> row format delimited fields terminated by '\t';

OK

Time taken: 0.09 seconds

- 插入数据解析Json

解析Json,得到结果之后存入rate表

insert into table rate select

get_json_object(line,'$.movie') as movie,

get_json_object(line,'$.rate') as rate,

get_json_object(line,'$.timeStamp') as ts,

get_json_object(line,'$.uid') as userid

from rat_json;

- 查看解析出来的Json数据

hive> select * from rate limit 10;

OK

1193 5 978300760 1

661 3 978302109 1

914 3 978301968 1

3408 4 978300275 1

2355 5 978824291 1

1197 3 978302268 1

1287 5 978302039 1

2804 5 978300719 1

594 4 978302268 1

919 4 978301368 1

Time taken: 0.078 seconds, Fetched: 10 row(s)

2.自定义函数

1.新建一个项目HiveDemo,导入apache-hive-1.2.1-bin\lib下的所有包

- 在windows下解压hive的安装包文件apache-hive-1.2.1-bin.tar

- 新建java project

- 添加包

2.开发一个简单的 Java 类,继承org.apache.hadoop.hive.ql.exec.UDF,重载 evaluate 方法

package org.yao.hive.udf;

import org.apache.hadoop.hive.ql.exec.UDF;

import org.json.JSONException;

import org.json.JSONObject;

//解析Json格式数据

public class JsonUDF extends UDF {

//必须是public

public String evaluate(String jsonStr,String fields) throws JSONException {

//创建一个json对象

JSONObject json=new JSONObject(jsonStr);

//把这个json对象转换为string类型的对象

String result=(String)json.get(fields);

//返回这个string类型的对象

return result;

}

}

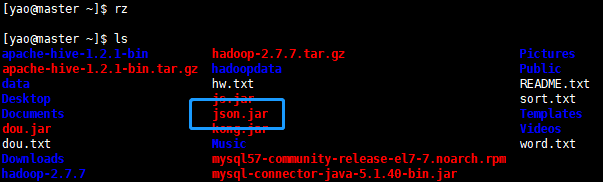

3.打成json.jar包上传到Linux服务器

4.将jar包添加到Hive的classpath下

hive> add jar /home/yao/json.jar;

Added [/home/yao/json.jar] to class path

Added resources: [/home/yao/json.jar]

hive> list jar;

/home/yao/json.jar //查看jar包是否加入成功

5.创建临时函数与开发好的 class 关联起来

hive> hive> create temporary function jsontostring as 'org.yao.hive.udf.JsonUDF';

OK

Time taken: 0.011 seconds

//jsontostring :临时函数的名字

//'org.yao.hive.udf.JsonUDF'是'包名.类名'

6.解析Json,得到结果之后存入rates表(rates表用于存放处理的数据,所以需要有四个字段)

create table rates as select

jsontostring(line,'movie') as movie,

jsontostring(line,'rate') as rate,

jsontostring(line,'timeStamp') as ts,

jsontostring(line,'uid') as userid

from rat_json;

7.至此,便可以使用自定义的函数

hive> select * from rates limit 10;

OK

1193 5 978300760 1

661 3 978302109 1

914 3 978301968 1

3408 4 978300275 1

2355 5 978824291 1

1197 3 978302268 1

1287 5 978302039 1

2804 5 978300719 1

594 4 978302268 1

919 4 978301368 1

Time taken: 0.063 seconds, Fetched: 10 row(s)

Hive Shell

-

参数

(1)-i:从文件初始化 HQL

(2)-e:从命令行执行指定的 HQL

(3)-f:执行 HQL 脚本

(4)-v:输出执行的 HQL 语句到控制台

(5)-p: connect to Hive Server on port number

(6)-hiveconf x=y(Use this to set hive/hadoop configuration variables)

(7)-S:表示以不打印日志的形式执行命名操作 -

从命令行执行指定的HQL

[yao@master ~]$ cd data/

[yao@master data]$ touch studenta

//将数据库yr_test中的表studenta写入这个新建的空文件中

[yao@master data]$ hive -e 'select * from yr_test.studenta' > studenta

//如果当前目录不存在studentaaa,那么会自动创建

[yao@master ~]$ hive -e 'select * from yr_test.studenta' > studentaaa

[yao@master ~]$ cat studentaaa

10001 shiny

10002 mark

10003 angel

10005 ella

10009 jack

10014 eva

10018 judy

10020 cendy

- 运行一个文件

1.创建一个HQL文件

[yao@master ~]$ vim hive.hql

[yao@master ~]$ cat hive.hql

create database if not exists yr_0322;

use yr_0322;

create table if not exists stu(id int,name string)

row format delimited fields terminated by ','

stored as textfile;

load data local inpath '/home/yao/data/stu.txt' into table stu;

insert overwrite directory '/yr_0322/stu' row format delimited fields terminated by '\t' select * from stu;

overwrite是复写,如果不写就是在后面追加

stu.txt

1,张三

2,李四

3,rose

4,jack

5,haha

6,hehe

7,aaaaaaaaaaaaa

2.执行HQL脚本

[yao@master ~]$ hive -f hive.hql

//在hdfs上查看

[yao@master ~]$ hadoop fs -ls /yr_0322/stu

Found 1 items

-rwxr-xr-x 1 yao supergroup 52 2019-03-22 17:25 /yr_0322/stu/000000_0

[yao@master ~]$ hadoop fs -cat /yr_0322/stu/000000_0

1 张三

2 李四

3 rose

4 jack

5 haha

6 hehe

7 aaaaaaaaaaaaa

//在hive上查看

hive> use yr_0322;

OK

Time taken: 0.026 seconds

hive> select * from stu;

OK

1 张三

2 李四

3 rose

4 jack

5 haha

6 hehe

7 aaaaaaaaaaaaa

Time taken: 0.188 seconds, Fetched: 7 row(s)

1879

1879

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?