In today’s long post, I’m going to explain the guidelines we follow at Retibus Software in order to handle Unicode text in Windows programs written in C and C++ with Microsoft Visual Studio. Our approach is based on using the types char and std::string and imposing the rule that text must always be encoded in UTF-8. Any other encodings or character types are only allowed as temporary variables to interact with other libraries, like the Win32 API.

Note that a lot of books on Windows programming recommend using wide characters for internationalised text, but I find that using single bytes encoded as UTF-8 as the internal representation for strings is a much more powerful and elegant approach. The main reason is that it is easier to use char-based functions in standard C and C++. Developers are usually much more familiar with functions like strcpy in C or the C++ std::string class than with the wide-character equivalents wcscpy and std::wstring, and the support for wide characters is not completely consistent in either standard. For example, the standard C++ exception classes such as std::runtime_error only accept narrow-string descriptions in their constructors, and std::exception::what() returns a const char*. In addition, using the char and std::string types makes the code much more portable across platforms, as the char type is always, by definition, one byte long, whereas sizeof(wchar_t) is typically 2 or 4 depending on the platform.

Even if we are developing a Windows-only application, it is good practice to isolate the Windows-dependent parts as much as possible, and using UTF-8-encoded strings is a solid way of providing full Unicode support with a highly portable and readable coding style.

But of course, there are a number of caveats that must be taken into account when we use UTF-8 in C and C++ strings. I have summed up my coding guidelines regarding strings in five rules that I list below. I hope others will find this approach useful. Feel free to use the comments section for any criticism or suggestions.

1. First rule: Use UTF-8 as the internal representation for text.

The original type for characters in the C language is char, which doubles as the type that represents single bytes, the smallest unit of memory that a C program can address, typically made up of eight bits (an ‘octet’). C programmers are used to treating text as an array of char units, and the standard C library offers a set of text-handling functions, such as strcmp and strcat, which any self-respecting C programmer should be completely familiar with. The standard C++ library offers the class std::string, which encapsulates a C-style char array, and all C++ programmers are familiar with that class. Because using char and std::string is the original and natural way to handle text in the C and C++ languages, I think it is better to stick to those types and avoid the wide character types.

Why are wide characters better avoided? Well, the support for wide characters in C and C++ has been somewhat erratic and inconsistent. They were added to both C and C++ at a time when it was thought that 16 bits would be big enough to hold any Unicode character, which is no longer the case. The standard C99 and C++98 specifications include the wchar_t type (as a standard typedef in C and as a built-in type in C++), and text literals preceded by the L prefix, such as L"hello", are treated as arrays of wchar_t characters. In order to handle wide characters, standard C functions such as strcpy and strcmp have been replicated in wide-character versions (wcscpy, wcscmp, and so on) that are also part of the standard specifications, and the C++ standard library defines its string class as a template, std::basic_string, with a parameterised character type, which allows the existence of two specialisations, std::string and std::wstring.

But wide characters can’t replace plain old chars completely. In fact, there are still a number of standard functions and classes that require char-based strings, and using wide characters often depends on compiler-specific extensions like _wfopen. Another serious drawback is that the wchar_t type doesn’t have a fixed size across compilers, as neither the C nor the C++ standard mandates any size or encoding for it. This can lead to trouble when serialising and deserialising text across different platforms.

In Visual Studio, sizeof(wchar_t) returns 2, which means that it can only store Unicode text represented as UTF-16, as Windows does by default. UTF-16 is a variable-width encoding, just like UTF-8. I mentioned in my previous post how there was a time in the past when it was thought that 16 bits would be enough for the whole Unicode character repertoire, but that idea was abandoned a long time ago, and 16-bit characters can only be used to support Unicode when one accepts that there are ‘surrogate pairs’, i.e. characters (in the sense of Unicode code points) that span two 16-bit units in their representation. So, the idea that each wchar_t would be a full Unicode character is not true any more, and even when using wchar_t-based strings we may come across fragments of full characters.
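To make the surrogate-pair issue concrete, here is a minimal sketch (the helper names are ours) that counts code points in a UTF-16 string. It relies on the rule that a code point above U+FFFF is stored as a high surrogate (0xD800 to 0xDBFF) followed by a low surrogate (0xDC00 to 0xDFFF), and it assumes the surrogates are properly paired. It uses char16_t from the newer standards rather than wchar_t, so that the unit size does not depend on the compiler.

```cpp
#include <cstddef>
#include <string>

// True for the second unit of a surrogate pair (range 0xDC00-0xDFFF).
inline bool IsLowSurrogate(char16_t unit)
{
    return unit >= 0xDC00 && unit <= 0xDFFF;
}

// Counts code points in UTF-16 text, assuming surrogates are properly paired:
// each code point has exactly one unit that is not a low surrogate.
inline std::size_t CountUtf16CodePoints(const std::u16string& text)
{
    std::size_t count = 0;
    for (char16_t unit : text)
    {
        if (!IsLowSurrogate(unit))
            ++count;
    }
    return count;
}
```

For u"\u4E2D\U0001F600" (the character ‘中’ followed by the emoji U+1F600), size() returns 3 while CountUtf16CodePoints returns 2, because the emoji occupies the two units 0xD83D and 0xDE00.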

The problem of the variable size of wchar_t units across compilers has been addressed by the forthcoming C and C++ standards (C1x and C++0x), which define the new character types char16_t and char32_t. These new types, as their names suggest, are intended for 16-bit and 32-bit code units (strictly, the standards only guarantee that they are at least 16 and 32 bits wide). With the new types, string literals can now come in five (!) flavours:

```cpp
char plainString[] = "hello";       // Local encoding, whatever that may be.
wchar_t wideString[] = L"hello";    // Wide characters, usually UTF-16 or UTF-32.
char utf8String[] = u8"hello";      // UTF-8 encoding (char8_t instead of char since C++20).
char16_t utf16String[] = u"hello";  // UTF-16 encoding.
char32_t utf32String[] = U"hello";  // UTF-32 encoding.
```

So, in C1x and C++0x we now have five different ways of declaring string literals to account for different encodings and four different character types. C++0x also defines the types std::u16string and std::u32string based on the std::basic_string template, just like std::string and std::wstring. However, support for these types is still poor and any code that relies on these types will end up managing lots of encoding conversions when dealing with third-party libraries and legacy code. Because of these limitations of wide character types, I think it is much better to stick to plain old chars as the best text representation in the C and C++ languages.
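To see how the same character is stored in each flavour, here is a sketch that checks literal sizes at compile time (sizes include the terminating null unit). It assumes a C++11 compiler and spells ‘中’ (U+4E2D) and the emoji U+1F600 with universal character names so that the source file itself stays ASCII; the function names are ours.

```cpp
#include <cstddef>
#include <string>

// U+4E2D in each literal flavour; sizes include the final null unit.
static_assert(sizeof(u8"\u4E2D") == 4, "UTF-8: three bytes + null");
static_assert(sizeof(u"\u4E2D") == 2 * sizeof(char16_t), "UTF-16: one unit + null");
static_assert(sizeof(U"\u4E2D") == 2 * sizeof(char32_t), "UTF-32: one unit + null");
static_assert(sizeof(L"\u4E2D") == 2 * sizeof(wchar_t), "wide: one unit + null");

// A code point outside the Basic Multilingual Plane (U+1F600).
inline std::size_t Utf16UnitsForEmoji() { return std::u16string(u"\U0001F600").size(); } // 2 (surrogate pair)
inline std::size_t Utf32UnitsForEmoji() { return std::u32string(U"\U0001F600").size(); } // 1
inline std::size_t Utf8BytesForEmoji()
{
    // The cast keeps this compiling both in C++11 (char) and C++20 (char8_t).
    return std::string(reinterpret_cast<const char*>(u8"\U0001F600")).size(); // 4
}
```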

Note that, contrary to a widespread misconception, it is perfectly possible to use char-based strings for text in C and C++ and fully support Unicode. We simply need to ensure that all text is encoded as UTF-8, rather than in a narrow, region-dependent character set such as the ISO-8859-1 encoding used for some Western European languages. Support for Unicode depends on which encoding we enforce for text, not on whether we choose single bytes or wide characters as our atomic character type.

An apparent drawback of using chars and UTF-8 to support Unicode is that there is no one-to-one correspondence between the chars that make up the string and the Unicode code points that represent the full characters rendered by fonts. For example, in a UTF-8-encoded string, an accented letter like ‘á’ is made up of two chars, whereas a Chinese character like ‘中’ is made up of three. Because of that, a UTF-8-encoded string can be regarded as a glorified array of bytes rather than as a real sequence of characters. However, this is rarely a problem because there are few situations where the boundaries between Unicode code points are relevant at all. When using UTF-8, we don’t need to iterate code point by code point in order to find a particular character or piece of text in a string. This is because of the brilliant UTF-8 guarantee that the binary representation of one character cannot be part of the binary representation of another character. And when we deal with string sizes, we may need to know how many bytes make up the string for allocation purposes, but the size in terms of Unicode code points is quite useless in any realistic scenario. Besides, the concept of what constitutes one individual character is fraught with grey areas. There are many scripts (Arabic and Devanagari, for example) where letters can be merged together in ligature forms, and it is a moot point whether such ligatures should be considered as one character or as a sequence of separate characters. In the few cases where we do need to iterate through Unicode code points, for example in a word-wrap algorithm, we can do that through a utility function or class. It is not difficult to write such a class in C++, but I’ll leave that for a future post.
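As an illustration of both points, here is a minimal sketch (the helper names are ours, and well-formed UTF-8 input is assumed) that counts code points by ignoring continuation bytes, which always have the bit pattern 10xxxxxx, and that steps from one code-point boundary to the next without decoding anything. Finding text needs no such helper: a plain byte-wise std::string::find is enough.

```cpp
#include <cstddef>
#include <string>

// True for UTF-8 continuation bytes (bit pattern 10xxxxxx).
inline bool IsContinuationByte(char c)
{
    return (static_cast<unsigned char>(c) & 0xC0) == 0x80;
}

// Counts code points: every code point starts with exactly one
// byte that is not a continuation byte.
inline std::size_t CountCodePoints(const std::string& utf8)
{
    std::size_t count = 0;
    for (char c : utf8)
    {
        if (!IsContinuationByte(c))
            ++count;
    }
    return count;
}

// Returns the byte offset of the code point that follows the one starting at
// pos (or utf8.size() at the end). Useful for boundary-aware loops such as
// word wrapping.
inline std::size_t NextCodePointOffset(const std::string& utf8, std::size_t pos)
{
    ++pos;
    while (pos < utf8.size() && IsContinuationByte(utf8[pos]))
        ++pos;
    return pos;
}
```

With the bytes of the Chinese sample text from the second rule, std::string zw("\xE4\xB8\xAD\xE6\x96\x87") has a size() of 6 but contains 2 code points, and zw.find("\xE6\x96\x87") returns 3, the byte offset of the second character.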

A more serious problem is that of Unicode normalisation. Basically, Unicode allows diacritics to be encoded on their own, so that ‘á’ may be encoded either as one precomposed character (U+00E1) or as ‘a’ followed by a combining acute accent (U+0301). Because of that, a piece of text like ‘árbol’ can be encoded in two different ways in valid Unicode. This affects comparison, as a byte-by-byte comparison for equality would fail to take such alternative encodings of combined characters into account. The problem is, however, highly theoretical. I have yet to come across a case where two strings that should be equal had their diacritics encoded in different ways. Since we usually read text from files using a unified approach, I can’t think of any real-life scenario where normalisation would be needed. For some interesting discussions about these issues, see this thread in the Usenet group comp.lang.c++.moderated (I agree with comment 7 by user Brendan) and this question in the MSDN forums (I agree with the comments by user Igor Tandetnik). For the issue of Unicode normalisation, see this part of the Unicode standard.
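The comparison problem is easy to reproduce. In UTF-8 the precomposed ‘á’ (U+00E1) is the two bytes C3 A1, whereas ‘a’ followed by the combining acute accent (U+0301) is the three bytes 61 CC 81. The constants below (names are ours) spell ‘árbol’ both ways; actually normalising them would need a Unicode library such as ICU or the Win32 NormalizeString function, neither of which is shown here.

```cpp
#include <string>

// The Spanish word "arbol" (tree) with an accented first letter, encoded in two
// canonically equivalent ways. Hex escapes keep this source file plain ASCII.
const std::string kArbolPrecomposed = "\xC3\xA1rbol"; // U+00E1, then "rbol"
const std::string kArbolDecomposed = "a\xCC\x81rbol";  // "a", U+0301, then "rbol"

// Both strings render as the same word, but std::string::operator== compares
// bytes, so kArbolPrecomposed == kArbolDecomposed is false (6 bytes versus 7).
```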

2. Second rule: In Visual Studio, avoid any non-ASCII characters in source code.

It is always advisable to keep any hard-coded text to a minimum in the source code. We don’t want to have to recompile a program just to translate its user interface or to correct a spelling mistake in a message displayed to the user. So, hard-coded text usually ought to be restricted to simple keywords and tokens and the odd file name or registry key, which shouldn’t need any non-ASCII characters. If, for whatever reason, you want to have some hard-coded non-ASCII text within the source, you have to be aware that there is a very serious limitation: Visual Studio doesn’t support Unicode strings embedded in the source code. By default, Visual Studio saves all the source files in the local encoding specific to your system. I’m using an English version of Visual Studio 2010 Express running on Spanish Windows 7, and all the source files get saved as ISO-8859-1. If I try to write:

```cpp
const char kChineseSampleText[] = "中文";
```

When it attempts to save the file, Visual Studio will warn me that the current encoding doesn’t support all the characters that appear in the document, and will offer me the possibility of saving it in a different encoding, like UTF-8 or UTF-16 (which Visual Studio, like other Microsoft programs, sloppily calls ‘Unicode’). But even if we save the source file as UTF-8, so that the file contains the right characters, the compiler will issue a warning:

"warning C4566: character represented by universal-character-name '\u4E2D' cannot be represented in the current code page (1252)"

This means that even if we save the file as UTF-8, the Visual Studio compiler still tries to interpret literals as being in the current code page (the narrow character set) of the system. Things will get better with the forthcoming C++0x standard. In the newer standard, only partially supported by current compilers, it will be possible to write:

```cpp
const char kChineseSampleText[] = u8"中文";
```

The u8 prefix specifies that the literal is encoded as UTF-8. Similar prefixes are ‘u’ for UTF-16 text stored as an array of char16_t characters, and ‘U’ for UTF-32 text stored as an array of char32_t characters. These prefixes join the already available ‘L’ used for wchar_t-based strings. But until this feature becomes available in the major compilers, we’d better ignore it.

Because of these limitations with the use of UTF-8 text in source files, I completely avoid the use of any non-ASCII characters in my source code. This is not difficult for me because I write all the comments in English. Programmers who write the comments in a different language may benefit from saving the files in the UTF-8 encoding, but even that would be a hassle with Visual Studio and not really necessary if the language used for the comments is part of the narrow character set of the system. Unfortunately, there is no way to tell Visual Studio, at least the Express edition, to save all new files with a Unicode encoding, so we would have to use an external editor like Notepad++ in order to resave all our source files as UTF-8 with a BOM, so that Visual Studio would recognise and respect the UTF-8 encoding from that moment on. But it would be a pain to depend on either saving the files using an external editor or on typing some Chinese to force Visual Studio to ask us about the encoding to use, so I prefer to stick to all-ASCII characters in my source code. In that way, the source files are plain ASCII and I don’t have to worry about encoding issues.

Note that on my English version, Visual Studio will warn me about Chinese characters falling outside the narrow character set it defaults to, but it won’t warn me if I write:

```cpp
const char kSpanishSampleText[] = "cañón";
```

That’s because ‘cañón’ can be represented in the local code page. But the above line would then be encoded as ISO-8859-1, not UTF-8, so such hard-coded text would violate the rule of using UTF-8 as the internal representation of text, and care must be taken to avoid such literals. Of course, if you use a Chinese-language version of Visual Studio you should swap the examples in my explanation. On a Chinese system, ‘cañón’ would trigger the warnings, whereas ‘中文’ would be accepted by the compiler, but would be stored in a Chinese encoding such as GB or Big5.
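To see exactly how such a literal differs from its UTF-8 counterpart, here is a minimal sketch of a converter from ISO-8859-1 to UTF-8 (the function name is ours). It relies on the fact that the 256 ISO-8859-1 characters map one-to-one onto the code points U+0000 to U+00FF. It is not correct for code page 1252, which assigns other characters, such as the euro sign, to the bytes 0x80 to 0x9F.

```cpp
#include <string>

// Converts ISO-8859-1 text to UTF-8. Each ISO-8859-1 byte is its own code
// point, so bytes below 0x80 are copied and the rest become two UTF-8 bytes.
inline std::string ConvertLatin1ToUtf8(const std::string& latin1)
{
    std::string utf8;
    utf8.reserve(latin1.size() * 2);
    for (unsigned char c : latin1)
    {
        if (c < 0x80)
        {
            utf8.push_back(static_cast<char>(c));
        }
        else
        {
            utf8.push_back(static_cast<char>(0xC0 | (c >> 6)));   // 110000xx
            utf8.push_back(static_cast<char>(0x80 | (c & 0x3F))); // 10xxxxxx
        }
    }
    return utf8;
}
```

Applied to the five ISO-8859-1 bytes of ‘cañón’ (63 61 F1 F3 6E), it produces the seven UTF-8 bytes 63 61 C3 B1 C3 B3 6E, the same values as the kSpanishSampleText byte array listed later in this rule.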

As long as Microsoft doesn’t add support for the ‘u8’ prefix and better options to enforce UTF-8 as the default encoding for source files, the only way of hard-coding non-ASCII string literals in UTF-8 is to list the numeric value of each byte. This is what I do when I want to test internationalisation support and consistency in my use of UTF-8. I typically use the following three definitions:

```cpp
// Chinese characters for "zhongwen" ("Chinese language").
const char kChineseSampleText[] = {-28, -72, -83, -26, -106, -121, 0};

// Arabic "al-arabiyya" ("Arabic").
const char kArabicSampleText[] = {-40, -89, -39, -124, -40, -71, -40, -79, -40, -88, -39, -118, -40, -87, 0};

// Spanish word "canon" with an "n" with "~" on top and an "o" with an acute accent.
const char kSpanishSampleText[] = {99, 97, -61, -79, -61, -77, 110, 0};
```

These hard-coded strings are useful for testing. The first one is the Chinese word ‘中文’, the second one, useful to check if right-to-left text is displayed correctly, is the Arabic ‘العربية’, and the third one is the Spanish word ‘cañón’. Note how I have avoided the non-ASCII characters in the comments. Of course in a final application, the UTF-8-encoded text displayed in the GUI should be loaded from resource files, but the hard-coded text above comes in handy for quick tests.
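The same byte values can also be listed as hexadecimal escape sequences inside an ordinary string literal. This is arguably easier to check against a UTF-8 table, and it sidesteps a portability snag of the decimal arrays: on platforms where plain char is unsigned, brace-initialising a char array with negative values is a narrowing error in C++11. A sketch of the equivalent constants (the Hex suffix is ours):

```cpp
// The sample strings above, spelled with hex escapes instead of decimal values.
// Each \x escape stands for one UTF-8 byte; the compiler adds the final null.
const char kChineseSampleTextHex[] = "\xE4\xB8\xAD\xE6\x96\x87";
const char kArabicSampleTextHex[] =
    "\xD8\xA7\xD9\x84\xD8\xB9\xD8\xB1\xD8\xA8\xD9\x8A\xD8\xA9";
const char kSpanishSampleTextHex[] = "ca\xC3\xB1\xC3\xB3n";

// Caveat: a \x escape consumes every hex digit that follows it, so when the
// next character is 0-9, a-f or A-F the literal must be split, e.g. "\xC3\xA1" "b".
```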

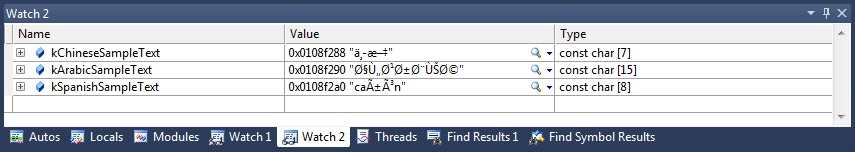

Using UTF-8 as our internal representation for text has another important consequence when using Visual Studio, which is that the text won’t be displayed correctly in the watch windows that show the value of variables while debugging. This is because these debugging windows also assume that the text is encoded in the local narrow character set. This is how the three constant literals above appear on my version of Visual Studio:

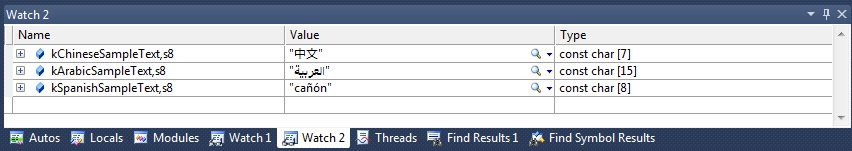

Fortunately, there is a way to display the right values thanks to the ‘s8’ format specifier. If we append ‘,s8’ to the variable names, Visual Studio reparses the text as UTF-8 and renders it correctly:

The ‘s8’ format specifier works with plain char arrays but not with std::string variables. I reported this last year to Microsoft, but they have apparently deferred fixing it to a future edition of Visual Studio.

3. Third rule: Translate between UTF-8 and UTF-16 when calling Win32 functions.

When programming for Windows we need to understand how the Win32 API functions, and other libraries built on top of them like MFC, handle characters and Unicode. The older, non-NT versions of the operating system, such as Windows 95, did not support Unicode internally, but just the narrow character set that depended on the system language. So, for example, an English-language version of Windows 95 would support characters in the Western European 1252 code page, which is nearly the same as ISO-8859-1. Because of the assumption that all these regional encodings would fall under some standard or other, these character sets are referred to as ‘ANSI’ (American National Standards Institute) in Microsoft’s dated terminology. In those pre-Unicode times, the functions and structs in the Win32 API used plain char-based strings as parameters. With Windows NT, Microsoft made the decision to support Unicode internally, so that the file system would be able to cope with file names using any arbitrary characters rather than a narrow character set varying from region to region. In retrospect, I think Microsoft should have chosen to support UTF-8 encoding and stick with the plain chars, but what they did at the time was split the functions and structs that make use of strings into two alternative varieties, one that uses plain chars and another one that uses wchar_t’s. For example, the old MessageBox function was split into two functions, the so-called ANSI version:

```cpp
int MessageBoxA(HWND hWnd, const char* lpText, const char* lpCaption, UINT uType);
```

and the Unicode one:

```cpp
int MessageBoxW(HWND hWnd, const wchar_t* lpText, const wchar_t* lpCaption, UINT uType);
```

The reason for these ugly names ending in ‘A’ and ‘W’ is that Microsoft didn’t expect these functions to be used directly, but through a macro:

```cpp
#ifdef UNICODE
#define MessageBox MessageBoxW
#else
#define MessageBox MessageBoxA
#endif // !UNICODE
```

All the Win32 functions and structs that handle text adhere to this paradigm, where two alternative functions or structs, one ending in ‘A’ and another one ending in ‘W’, are defined and then there is a macro that will get resolved to the ANSI version or the Unicode version depending on whether the ‘UNICODE’ macro has been #defined. Because the ANSI and Unicode varieties use different types, we need a new type that should be typedeffed as either char or wchar_t depending on whether ‘UNICODE’ has been #defined. This conditional type is TCHAR, and it allows us to treat MessageBox as if it were a function with the following signature:

```cpp
int MessageBox(HWND hWnd, const TCHAR* lpText, const TCHAR* lpCaption, UINT uType);
```

This is the way Win32 API functions are documented (except that Microsoft uses the ugly typedef LPCTSTR for const TCHAR*). Thanks to this syntactic sugar, Microsoft treats the macros as if they were the real functions and the TCHAR type as if it were a distinct character type. The macro TEXT, together with its shorter variant _T (defined in tchar.h), is also #defined in such a way that a literal like TEXT("hello") or _T("hello") can be assumed to have the const TCHAR* type, and will compile no matter whether UNICODE is #defined or not. This nifty mechanism makes it possible to write code where all characters will be compiled into either plain characters in a narrow character set or wide characters in a Unicode (UTF-16) encoding. The way characters are resolved at compile time simply depends on whether the macro UNICODE has been #defined globally for the whole project. The same mechanism is used for the C run-time library, where functions like strcmp and wcscmp can be replaced by the macro _tcscmp, and the behaviour of such macros (including _T) depends on whether ‘_UNICODE’ (note the underscore) has been #defined. Both ‘UNICODE’ and ‘_UNICODE’ can be enabled for the whole project through the Character Set option in the project properties.
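The preprocessor trick behind TCHAR and TEXT can be reproduced in a few lines. The sketch below is an imitation, not the actual Windows SDK definitions: it uses made-up names (DEMO_UNICODE, DemoTChar, DEMO_TEXT) so that it compiles anywhere without clashing with the real headers, and it shows how the ## operator pastes the L prefix onto a literal.

```cpp
#include <cstddef>

// Remove this line to get the "ANSI" flavour.
#define DEMO_UNICODE

#ifdef DEMO_UNICODE
typedef wchar_t DemoTChar;
#define DEMO_TEXT_IMPL(quote) L##quote // DEMO_TEXT("hello") becomes L"hello"
#else
typedef char DemoTChar;
#define DEMO_TEXT_IMPL(quote) quote
#endif
// The extra level lets a macro argument expand before the pasting happens.
#define DEMO_TEXT(quote) DEMO_TEXT_IMPL(quote)

// This line compiles unchanged in both flavours; only the character type differs.
const DemoTChar kDemoGreeting[] = DEMO_TEXT("hello");

inline std::size_t DemoGreetingLength()
{
    return sizeof(kDemoGreeting) / sizeof(DemoTChar) - 1; // Excludes the null.
}
```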

Now this way of writing code that can be compiled as either ANSI or Unicode by the switch of a global setting may have made sense at the time when Unicode support was not universal in Windows. But the versions of Windows that are still supported today (XP, Vista and 7) are the most recent releases of Windows NT, and all of them use Unicode internally. Because of that, being able to compile alternative ANSI and Unicode versions doesn’t make any sense nowadays, and for the sake of clarity it is better to avoid the TCHAR type altogether and use an explicit character type when calling Windows functions.

Since we have decided to use chars as our internal representation, it might seem that we can use the ANSI functions, but that would be a mistake because there is no way to make Windows use UTF-8 as the code page of the ANSI functions. If we have a char-based string encoded as UTF-8 and we pass it to the ANSI version of a Win32 function, the Windows implementation of the function will reinterpret the text according to the narrow character set, and any non-ASCII characters will end up mangled. In fact, because the so-called ANSI versions use the narrow character set, it is impossible to support Unicode using them. For example, it is impossible to create a directory with the name ‘中文-español’ using the function CreateDirectoryA. For such internationalised names you need to use the Unicode version CreateDirectoryW. So, in order to support the full Unicode repertoire of characters we definitely have to use the Unicode W-terminated functions. But because those functions use wide characters and assume a UTF-16 encoding, we will need a way to convert between that encoding and our internal UTF-8 representation. We can achieve that with a couple of utility functions. It is possible to write these conversion functions in a platform-independent way by transforming the binary layout of the strings according to the specification. If we are going to use these functions with Windows code, an alternative and quicker approach is to base these utility functions on the Win32 API functions WideCharToMultiByte and MultiByteToWideChar. In C++, we can write the conversion functions as follows:

```cpp
#include <windows.h>
#include <string>
#include <vector>

// Converts a UTF-16 wide string (Windows' native encoding) to UTF-8.
std::string ConvertFromUtf16ToUtf8(const std::wstring& wstr)
{
    std::string convertedString;
    // With -1 as the input length, the returned size includes the final null.
    int requiredSize = WideCharToMultiByte(CP_UTF8, 0, wstr.c_str(), -1, 0, 0, 0, 0);
    if (requiredSize > 0)
    {
        std::vector<char> buffer(requiredSize);
        WideCharToMultiByte(CP_UTF8, 0, wstr.c_str(), -1, &buffer[0], requiredSize, 0, 0);
        convertedString.assign(buffer.begin(), buffer.end() - 1); // Drop the null.
    }
    return convertedString;
}

// Converts a UTF-8 string to UTF-16 for use with the W functions of the Win32 API.
std::wstring ConvertFromUtf8ToUtf16(const std::string& str)
{
    std::wstring convertedString;
    int requiredSize = MultiByteToWideChar(CP_UTF8, 0, str.c_str(), -1, 0, 0);
    if (requiredSize > 0)
    {
        std::vector<wchar_t> buffer(requiredSize);
        MultiByteToWideChar(CP_UTF8, 0, str.c_str(), -1, &buffer[0], requiredSize);
        convertedString.assign(buffer.begin(), buffer.end() - 1); // Drop the null.
    }
    return convertedString;
}

// Example wrappers for Win32 functions that take or return window text.
void SetWindowTextUtf8(HWND hWnd, const std::string& str)
{
    std::wstring wstr = ConvertFromUtf8ToUtf16(str);
    SetWindowTextW(hWnd, wstr.c_str());
}

std::string GetWindowTextUtf8(HWND hWnd)
{
    std::string str;
    int requiredSize = GetWindowTextLengthW(hWnd) + 1; // Room for the final null character.
    std::vector<wchar_t> buffer(requiredSize);
    // GetWindowTextW returns the number of characters copied, excluding the null.
    int copied = GetWindowTextW(hWnd, &buffer[0], requiredSize);
    if (copied > 0)
    {
        std::wstring wstr(&buffer[0], copied);
        str = ConvertFromUtf16ToUtf8(wstr);
    }
    return str;
}
```

4. Fourth rule: Use wide-character versions of standard C and C++ functions that take file paths.

There is a very annoying problem with Microsoft’s implementation of the C and C++ standard libraries. Imagine we store the search path for some file resources as a member variable in one of our classes. Since we always use UTF-8 for our internal representation, this member will be a UTF-8-encoded std::string. Now we may want to open or create a temporary file in this directory by appending a file name to the search path and calling the C run-time function fopen. In C++ we would preferably use a std::fstream object, but in either case we will find that everything works fine as long as the full path of the file contains ASCII characters only. The problem may remain unnoticed until we try a path that has an accented letter or a Chinese character. At that moment, the code that uses fopen or std::fstream will fail. The reason for this is that the standard non-wide functions and classes that deal with the file system, such as fopen and std::fstream, assume that the char-based string is encoded using the local system’s code page, and there is no way to make them work with UTF-8 strings. Note that at this moment (2011), Microsoft does not allow setting a UTF-8 locale with the setlocale C function. According to MSDN:

The set of available languages, country/region codes, and code pages includes all those supported by the Win32 NLS API except code pages that require more than two bytes per character, such as UTF-7 and UTF-8. If you provide a code page like UTF-7 or UTF-8, setlocale will fail, returning NULL. The set of language and country/region codes supported by setlocale is listed in Language and Country/Region Strings (source).
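Because setlocale cannot help, the practical way out for the C function fopen is to convert the path and call Microsoft’s wide-character _wfopen instead. A minimal sketch, assuming an MSVC-style C run-time that provides _wfopen; the names OpenFileUtf8 and WidenUtf8 are ours. On other platforms it simply assumes that fopen already treats char paths as UTF-8, which is typically the case on Linux and Mac OS X.

```cpp
#include <cstdio>
#include <string>
#ifdef _WIN32
#include <windows.h>
#endif

#ifdef _WIN32
// Hypothetical helper: UTF-8 to UTF-16 through the Win32 API.
inline std::wstring WidenUtf8(const std::string& utf8)
{
    int size = MultiByteToWideChar(CP_UTF8, 0, utf8.c_str(), -1, nullptr, 0);
    if (size <= 0)
        return std::wstring();
    std::wstring wide(size, L'\0');
    MultiByteToWideChar(CP_UTF8, 0, utf8.c_str(), -1, &wide[0], size);
    wide.resize(size - 1); // Drop the null terminator written by the API.
    return wide;
}
#endif

// Hypothetical fopen replacement that takes a UTF-8 path and mode.
inline std::FILE* OpenFileUtf8(const std::string& path, const std::string& mode)
{
#ifdef _WIN32
    return _wfopen(WidenUtf8(path).c_str(), WidenUtf8(mode).c_str());
#else
    return std::fopen(path.c_str(), mode.c_str());
#endif
}
```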

The lack of UTF-8 locale support is really unfortunate, because the code that uses fopen or std::fstream is standard C++ and we would expect that code to be part of our fully portable code that can be compiled under Windows, Mac or Linux without any changes. However, because it is impossible to configure Microsoft’s implementation of the standard libraries to use UTF-8 encoding, we have to provide wrappers for these functions and classes, so that the UTF-8 string is converted to a wide UTF-16 string and the functions and classes that use wide characters, such as _wfopen and std::wfstream, are used internally instead. Writing a wrapper for std::fstream before Visual C++ 10 was difficult because only the wide-character file stream objects wifstream and wofstream could be constructed with wide characters, but using such objects to extract text was a pain, so they were nearly useless unless supplemented with one’s own code-conversion facets (quite a lot of work). On the other hand, it was impossible to access a file with a name including characters outside the local code page (like “中文.txt” on a Spanish system) using the char-based ifstream and ofstream. Fortunately, the latest version of the C++ compiler has extended the constructors of the standard fstream objects to accept wide-character file names (a Microsoft extension; the forthcoming C++0x standard itself only adds std::string overloads), which simplifies the task of writing such wrappers:

```cpp
#include <fstream>
#include <string>

namespace utf8
{
#ifdef _WIN32
// On Windows, convert the UTF-8 file name to UTF-16 and use the wide-character
// constructors and open() overloads provided by Visual C++ 10.
// ConvertFromUtf8ToUtf16 is the conversion function from the third rule.
class ifstream : public std::ifstream
{
public:
    ifstream() : std::ifstream() {}

    explicit ifstream(const char* fileName, std::ios_base::openmode mode = std::ios_base::in) :
        std::ifstream(ConvertFromUtf8ToUtf16(fileName).c_str(), mode)
    {
    }

    explicit ifstream(const std::string& fileName, std::ios_base::openmode mode = std::ios_base::in) :
        std::ifstream(ConvertFromUtf8ToUtf16(fileName).c_str(), mode)
    {
    }

    void open(const char* fileName, std::ios_base::openmode mode = std::ios_base::in)
    {
        std::ifstream::open(ConvertFromUtf8ToUtf16(fileName).c_str(), mode);
    }

    void open(const std::string& fileName, std::ios_base::openmode mode = std::ios_base::in)
    {
        std::ifstream::open(ConvertFromUtf8ToUtf16(fileName).c_str(), mode);
    }
};

class ofstream : public std::ofstream
{
public:
    ofstream() : std::ofstream() {}

    explicit ofstream(const char* fileName, std::ios_base::openmode mode = std::ios_base::out) :
        std::ofstream(ConvertFromUtf8ToUtf16(fileName).c_str(), mode)
    {
    }

    explicit ofstream(const std::string& fileName, std::ios_base::openmode mode = std::ios_base::out) :
        std::ofstream(ConvertFromUtf8ToUtf16(fileName).c_str(), mode)
    {
    }

    void open(const char* fileName, std::ios_base::openmode mode = std::ios_base::out)
    {
        std::ofstream::open(ConvertFromUtf8ToUtf16(fileName).c_str(), mode);
    }

    void open(const std::string& fileName, std::ios_base::openmode mode = std::ios_base::out)
    {
        std::ofstream::open(ConvertFromUtf8ToUtf16(fileName).c_str(), mode);
    }
};

#else
// Elsewhere, char-based file names are assumed to be UTF-8 already.
typedef std::ifstream ifstream;
typedef std::ofstream ofstream;
#endif
} // namespace utf8
```

5. Fifth rule: Be careful with third-party libraries.

When using third-party libraries, care must be taken to check how these libraries support Unicode. Libraries that follow the same approach as Windows, based on wide characters, will also require encoding conversions every time text crosses the library boundaries. Libraries that use char-based strings may also assume or allow UTF-8 internally, but if a library renders text or accesses the file system we may need to tweak its settings or modify its source code to prevent it from lapsing into the narrow character set.
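When text has to cross into a library that expects UTF-16 on a platform without WideCharToMultiByte and MultiByteToWideChar, the platform-independent conversion mentioned in the third rule can be written by hand from the encoding definitions. A minimal sketch, assuming well-formed input (it does not detect overlong forms, stray continuation bytes or unpaired surrogates), using std::u16string so that the unit size does not depend on wchar_t; the function names are ours:

```cpp
#include <cstddef>
#include <string>

// UTF-8 -> UTF-16. Assumes well-formed UTF-8; invalid input is not detected.
inline std::u16string Utf8ToUtf16(const std::string& in)
{
    std::u16string out;
    std::size_t i = 0;
    while (i < in.size())
    {
        unsigned char lead = static_cast<unsigned char>(in[i++]);
        char32_t cp;
        int extra;
        if (lead < 0x80)      { cp = lead;        extra = 0; } // 0xxxxxxx
        else if (lead < 0xE0) { cp = lead & 0x1F; extra = 1; } // 110xxxxx
        else if (lead < 0xF0) { cp = lead & 0x0F; extra = 2; } // 1110xxxx
        else                  { cp = lead & 0x07; extra = 3; } // 11110xxx
        for (int k = 0; k < extra && i < in.size(); ++k)
            cp = (cp << 6) | (static_cast<unsigned char>(in[i++]) & 0x3F);
        if (cp < 0x10000)
        {
            out.push_back(static_cast<char16_t>(cp));
        }
        else
        {
            cp -= 0x10000; // Split the remaining 20 bits into a surrogate pair.
            out.push_back(static_cast<char16_t>(0xD800 + (cp >> 10)));
            out.push_back(static_cast<char16_t>(0xDC00 + (cp & 0x3FF)));
        }
    }
    return out;
}

// UTF-16 -> UTF-8. Unpaired surrogates are not detected.
inline std::string Utf16ToUtf8(const std::u16string& in)
{
    std::string out;
    for (std::size_t i = 0; i < in.size(); ++i)
    {
        char32_t cp = in[i];
        if (cp >= 0xD800 && cp <= 0xDBFF && i + 1 < in.size() &&
            in[i + 1] >= 0xDC00 && in[i + 1] <= 0xDFFF)
        {
            // Recombine a high surrogate and the low surrogate that follows it.
            cp = 0x10000 + ((cp - 0xD800) << 10) + (in[++i] - 0xDC00);
        }
        if (cp < 0x80)
        {
            out.push_back(static_cast<char>(cp));
        }
        else if (cp < 0x800)
        {
            out.push_back(static_cast<char>(0xC0 | (cp >> 6)));
            out.push_back(static_cast<char>(0x80 | (cp & 0x3F)));
        }
        else if (cp < 0x10000)
        {
            out.push_back(static_cast<char>(0xE0 | (cp >> 12)));
            out.push_back(static_cast<char>(0x80 | ((cp >> 6) & 0x3F)));
            out.push_back(static_cast<char>(0x80 | (cp & 0x3F)));
        }
        else
        {
            out.push_back(static_cast<char>(0xF0 | (cp >> 18)));
            out.push_back(static_cast<char>(0x80 | ((cp >> 12) & 0x3F)));
            out.push_back(static_cast<char>(0x80 | ((cp >> 6) & 0x3F)));
            out.push_back(static_cast<char>(0x80 | (cp & 0x3F)));
        }
    }
    return out;
}
```

Using hand-written code rather than a library call is a trade-off: it works identically on every platform, but it is only as robust as its input validation, which this sketch deliberately leaves out.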

6. References

In addition to the references listed in the two previous posts on Unicode (Thanks for signing up, Mr. González – Welcome back, Mr. González! and Character encodings and the beauty of UTF-8) and the links within the post, I’ve also found the following discussions and articles interesting:

- Reading UTF-8 with C++ streams. Very good article by Emilio Caravaglia.

- Locale/UTF-8 file path with std::ifstream. Discussion about how to initialise a std::ifstream object with a UTF-8 path.

ref http://www.nubaria.com/en/blog/?p=289
