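Starting with this article, the series moves into hands-on Kubernetes. Most of the following articles explain each topic through practical deployment work.

This article covers:

A complete etcd cluster

Self-signed SSL certificates

Installing Docker

The Flannel network, from installation to an in-depth look at how it works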

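1. The three officially supported deployment methods

1.1 minikube

Minikube is a tool that quickly runs a single-node Kubernetes cluster on your local machine. It is meant only for trying out Kubernetes or for day-to-day development.

Official page: https://kubernetes.io/docs/setup/minikube/

1.2 kubeadm

kubeadm is also a tool. It provides kubeadm init and kubeadm join for quickly deploying a Kubernetes cluster.

Official page: https://kubernetes.io/docs/reference/setup-tools/kubeadm/kubeadm/

1.3 Binary packages

This is the recommended approach for production. You download the release binaries from the official site and deploy each component by hand to form the cluster. Working this way also helps you understand how the pieces of a Kubernetes cluster fit together.

Download: https://github.com/kubernetes/kubernetes/releases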

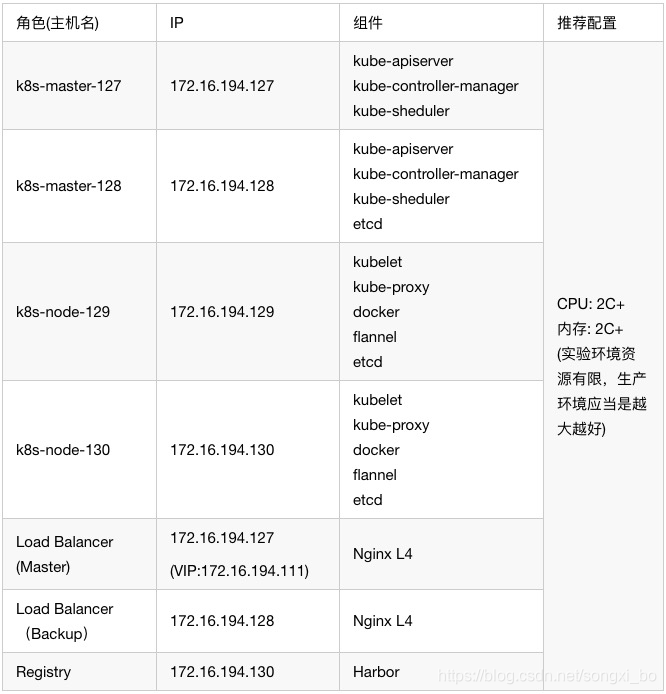

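2. Kubernetes platform planning

2.1 Software and server planning

You need one or more servers. Here I prepared three virtual machines, as follows:

OS: CentOS Linux release 7.6.1810 (Core)

Software versions:

Kubernetes 1.14

Etcd 3.3

Docker version 18.09.5

Flannel 0.10 (the download in section 6.3 uses v0.11.0)

Load balancer: for lack of machines I reused the two masters. In production you should use two dedicated load-balancer servers.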

2.2、平台架构图

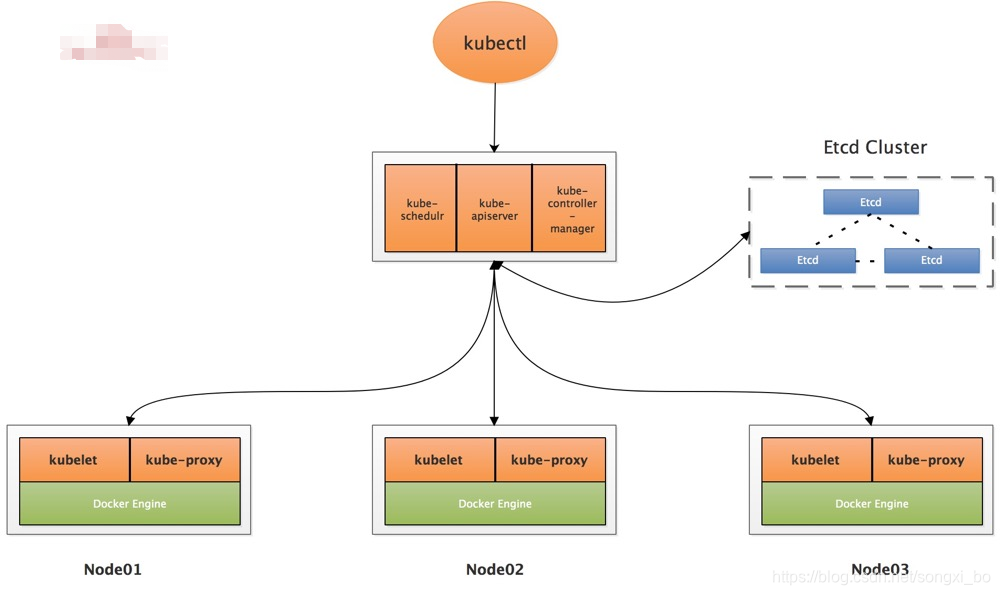

2.2.1、单Master节点

适用于做实验环境,能节省机器开销,不建议上生产。本次实验先部署单Master节点的架构图,如果需要做多Master,后面再横向扩展也很容易

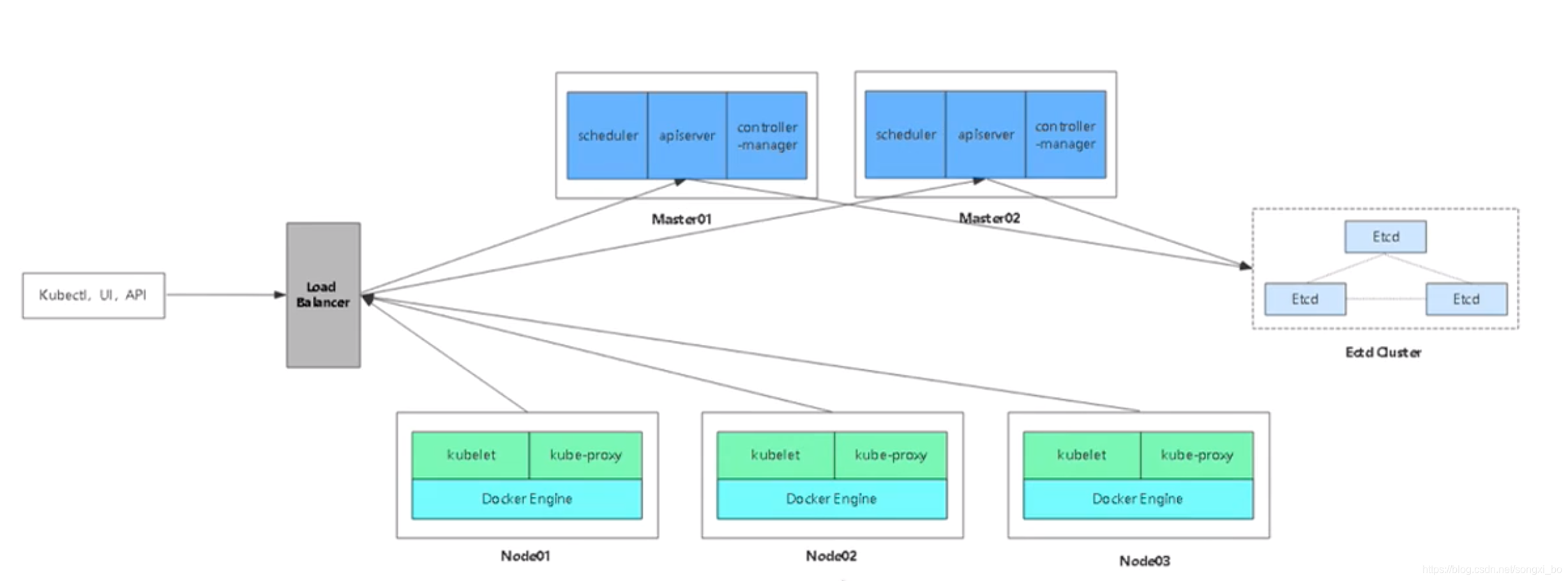

2.2.2、多Master节点

整个集群一般需要8台以上服务器,适用于生产环境,每个功能有对应的服务器支撑,保障线上k8s集群的健壮性,也是为业务保驾护航。

生产环境的Etcd集群应当是一组高可用服务器,为k8s提供稳定的服务;

Load Balancer为k8s-master的api-server提供负载均衡服务;

Master节点一般是两个以上;

Node节点根据业务需求,可横向扩展至N个;

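2.3 Disable SELinux and the firewall

$ vim /etc/selinux/config

SELINUX=disabled

The config file change only takes effect after a reboot. To stop enforcement right away, switch SELinux to permissive mode for the current boot:

$ setenforce 0

$ systemctl stop firewalld

$ systemctl disable firewalld

2.4 Disable swap

Kubernetes does not support swap. By default the kubelet refuses to start while swap is enabled.

$ swapoff -a

$ swapon -s # no output means swap is off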
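`swapoff -a` only lasts until the next reboot, because swap entries in /etc/fstab are activated again at boot. The sketch below makes the change permanent by commenting those entries out. It assumes the standard fstab layout, where the third field is the filesystem type. `comment_out_swap` and `active_swap_count` are hypothetical helpers. Both take a file path, so you can try them on a copy first.

```shell
#!/usr/bin/env bash
# Sketch: make "swapoff -a" permanent by commenting out swap entries in an
# fstab-style file. The path is a parameter so a copy can be tested first.
comment_out_swap() {
  local fstab=$1
  # For every non-comment line whose 3rd field (filesystem type) is "swap",
  # prefix the line with "#"; print every other line unchanged.
  awk '($0 !~ /^[[:space:]]*#/) && ($3 == "swap") { print "#" $0; next } { print }' \
    "$fstab" > "$fstab.tmp" && mv "$fstab.tmp" "$fstab"
}

# Count active swap devices in a /proc/swaps-style file
# (one header line, then one line per active device).
active_swap_count() {
  local swaps=${1:-/proc/swaps}
  tail -n +2 "$swaps" | grep -c . || true
}
```

On a node, you could run `cp /etc/fstab /etc/fstab.bak && comment_out_swap /etc/fstab`. After `swapoff -a`, `active_swap_count` (which reads /proc/swaps by default) should print 0.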

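2.5 Configure hosts resolution

$ vim /etc/hosts

172.16.194.127 k8s-master-127

172.16.194.128 k8s-master-128

172.16.194.129 k8s-node-129

172.16.194.130 k8s-node-130

Also set each machine's hostname according to the plan (for example, run hostnamectl set-hostname k8s-node-129 on that node).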
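Editing /etc/hosts by hand on every machine is easy to get wrong. The sketch below appends the planned entries only when the hostname is not already present, so it is safe to re-run. `add_planned_hosts` and `PLANNED_HOSTS` are hypothetical names. The file path is a parameter, so you can try it on a copy first.

```shell
#!/usr/bin/env bash
# Sketch: idempotently append the planned host entries (section 2.5)
# to a hosts-style file.
PLANNED_HOSTS=(
  "172.16.194.127 k8s-master-127"
  "172.16.194.128 k8s-master-128"
  "172.16.194.129 k8s-node-129"
  "172.16.194.130 k8s-node-130"
)

add_planned_hosts() {
  local hosts_file=${1:-/etc/hosts}
  local entry name
  for entry in "${PLANNED_HOSTS[@]}"; do
    name=${entry#* }   # hostname = everything after the first space
    # -w matches the hostname as a whole word; skip it if already listed.
    if ! grep -qw -- "$name" "$hosts_file"; then
      echo "$entry" >> "$hosts_file"
    fi
  done
}
```

Run `add_planned_hosts` on each machine; a second run adds nothing.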

2.6 Configure time synchronization

$ crontab -e

# time synchronization

*/5 * * * * /usr/sbin/ntpdate ntp1.aliyun.com >/dev/null 2>&1

2.7 Set up SSH key trust between hosts

Key-based login makes it convenient to distribute the cluster's certificates and keys later.

[root@k8s-master-128 ~]# ssh-keygen

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

Created directory '/root/.ssh'.

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:CxqCw48dTEwAUEIjBzEax5v9xz+2XLQVKSjJ5OVaj78 root@k8s-master-128

The key's randomart image is:

(randomart image omitted)

[root@k8s-master-128 ~]# ls -lh /root/.ssh/

total 8.0K

-rw------- 1 root root 1.7K Apr 30 14:28 id_rsa

-rw-r--r-- 1 root root 401 Apr 30 14:28 id_rsa.pub

[root@k8s-master-128 ~]# ssh-copy-id k8s-node-129

[root@k8s-master-128 ~]# ssh-copy-id k8s-node-130

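[root@k8s-master-128 ~]# ssh k8s-node-130 # test the login

The steps above set up one-way trust from k8s-master-128 to the nodes. If you need trust in every direction, copy the private key to the other two nodes as well.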

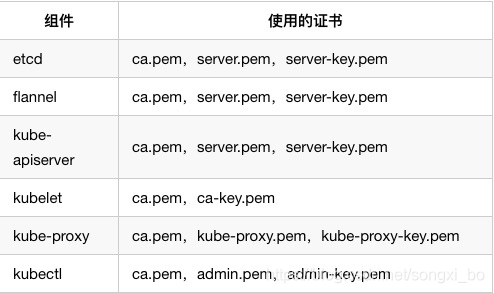

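3. Self-signed SSL certificates

References:

Kubernetes installation: certificate verification

Creating the CA certificate and key

3.1 Install the certificate tool cfssl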

[root@k8s-master-128 ~]# mkdir ssl

[root@k8s-master-128 ~]# cd ssl/

wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64

wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64

wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64

chmod +x cfssl_linux-amd64 cfssljson_linux-amd64 cfssl-certinfo_linux-amd64

mv cfssl_linux-amd64 /usr/local/bin/cfssl

mv cfssljson_linux-amd64 /usr/local/bin/cfssljson

mv cfssl-certinfo_linux-amd64 /usr/bin/cfssl-certinfo

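3.2 Generate the etcd certificates

The etcd cluster needs two certificates: a CA certificate and a server certificate. Generate them as follows (on the master node only):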

[root@k8s-master-128 ~]# mkdir /root/ssl/etcd-cret

[root@k8s-master-128 ~]# cd /root/ssl/etcd-cret/

[root@k8s-master-128 etcd-cret]# cat etcd-cret.sh

#!/bin/bash

# Create the etcd CA certificate
cat > ca-config.json <<EOF
{
  "signing": {
    "default": {
      "expiry": "87600h"
    },
    "profiles": {
      "www": {
        "expiry": "87600h",
        "usages": [
          "signing",
          "key encipherment",
          "server auth",
          "client auth"
        ]
      }
    }
  }
}
EOF

cat > ca-csr.json <<EOF
{
  "CN": "Etcd CA",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "Beijing",
      "ST": "Beijing"
    }
  ]
}
EOF

cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

#-----------------------

# Create the etcd server certificate
cat > server-csr.json <<EOF
{
  "CN": "Etcd",
  "hosts": [
    "172.16.194.128",
    "172.16.194.129",
    "172.16.194.130"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "BeiJing",
      "ST": "BeiJing"
    }
  ]
}
EOF

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=www server-csr.json | cfssljson -bare server

[root@k8s-master-128 etcd-cret]# chmod +x etcd-cret.sh

[root@k8s-master-128 etcd-cret]# sh etcd-cret.sh # warnings can be ignored

Copy the certificates to where they belong:

[root@k8s-master-128 etcd-cret]# mkdir -p /opt/etcd/{cfg,bin,ssl}

[root@k8s-master-128 etcd-cret]# cp /root/ssl/etcd-cret/{ca*,server*} /opt/etcd/ssl/

[root@k8s-master-128 etcd-cret]# ls -lh /opt/etcd/ssl/

total 24K

-rw-r--r-- 1 root root 956 Apr 30 17:33 ca.csr

-rw------- 1 root root 1.7K Apr 30 17:33 ca-key.pem

-rw-r--r-- 1 root root 1.3K Apr 30 17:33 ca.pem

-rw-r--r-- 1 root root 1013 Apr 30 17:33 server.csr

-rw------- 1 root root 1.7K Apr 30 17:33 server-key.pem

-rw-r--r-- 1 root root 1.4K Apr 30 17:33 server.pem

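3.3 Generate the Kubernetes certificates

Generate the certificates Kubernetes needs now as well, so they are ready for later steps. (If some of this doesn't make sense yet, just follow along; it will become clear later.)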

[root@k8s-master-128 ~]# mkdir /root/ssl/k8s-cret

[root@k8s-master-128 ~]# cd /root/ssl/k8s-cret/

[root@k8s-master-128 k8s-cret]# vim certificate.sh

#!/bin/bash

# Create the Kubernetes CA certificate
cat > ca-config.json <<EOF
{
  "signing": {
    "default": {
      "expiry": "87600h"
    },
    "profiles": {
      "kubernetes": {
        "expiry": "87600h",
        "usages": [
          "signing",
          "key encipherment",
          "server auth",
          "client auth"
        ]
      }
    }
  }
}
EOF

cat > ca-csr.json <<EOF
{
  "CN": "kubernetes",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "Beijing",
      "ST": "Beijing",
      "O": "k8s",
      "OU": "System"
    }
  ]
}
EOF

cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

#-----------------------

# Create the Kubernetes server certificate
cat > server-csr.json <<EOF
{
  "CN": "kubernetes",
  "hosts": [
    "127.0.0.1",
    "10.10.10.1",
    "172.16.194.111",
    "172.16.194.127",
    "172.16.194.128",
    "172.16.194.129",
    "172.16.194.130",
    "kubernetes",
    "kubernetes.default",
    "kubernetes.default.svc",
    "kubernetes.default.svc.cluster",
    "kubernetes.default.svc.cluster.local"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "BeiJing",
      "ST": "BeiJing",
      "O": "k8s",
      "OU": "System"
    }
  ]
}
EOF

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes server-csr.json | cfssljson -bare server

#-----------------------

# Create the admin certificate
cat > admin-csr.json <<EOF
{
  "CN": "admin",
  "hosts": [],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "BeiJing",
      "ST": "BeiJing",
      "O": "system:masters",
      "OU": "System"
    }
  ]
}
EOF

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes admin-csr.json | cfssljson -bare admin

#-----------------------

# Create the kube-proxy certificate
cat > kube-proxy-csr.json <<EOF
{
  "CN": "system:kube-proxy",
  "hosts": [],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "BeiJing",
      "ST": "BeiJing",
      "O": "k8s",
      "OU": "System"
    }
  ]
}
EOF

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy

[root@k8s-master-128 k8s-cret]# chmod +x certificate.sh

[root@k8s-master-128 k8s-cret]# sh certificate.sh # warnings can be ignored

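Note: in the hosts field of server-csr.json, replace the IPs above with the addresses from your own plan (mainly the 172.16.194.x addresses). The certificate is only accepted for the addresses and names listed there, so every address clients use to reach the cluster components must appear in the list.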
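You can catch a missing address before generating the certificate by checking the CSR file for each planned IP. The sketch below uses plain grep. `csr_missing_hosts` is a hypothetical helper. It assumes each entry is a double-quoted JSON string, as in the files above. It also searches the whole file instead of parsing only the hosts array, so it is a quick check rather than a validator.

```shell
#!/usr/bin/env bash
# Sketch: list planned addresses that are missing from a cfssl CSR file.
# No JSON parser: looks for each address as a quoted string ("1.2.3.4").
csr_missing_hosts() {
  local csr=$1 h missing=""
  shift
  for h in "$@"; do
    grep -qF "\"${h}\"" "$csr" || missing="${missing}${h}"$'\n'
  done
  # Print missing addresses one per line; return 1 if any were missing.
  if [ -n "$missing" ]; then
    printf '%s' "$missing"
    return 1
  fi
}
```

For example, `csr_missing_hosts server-csr.json 172.16.194.127 172.16.194.128 172.16.194.129 172.16.194.130` prints nothing and returns 0 when every planned IP is listed.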

Copy the certificates to where they belong:

[root@k8s-master-128 k8s-cret]# mkdir -p /opt/kubernetes/{cfg,bin,ssl,logs}

[root@k8s-master-128 k8s-cret]# cp -a {ca*,admin*,server*,kube-proxy*} /opt/kubernetes/ssl/

[root@k8s-master-128 k8s-cret]# rm -f /opt/kubernetes/ssl/*.json

[root@k8s-master-128 k8s-cret]# ls -lh /opt/kubernetes/ssl/

total 56K

-rw-r--r-- 1 root root 1009 May 6 18:22 admin.csr

-rw------- 1 root root 1.7K May 6 18:22 admin-key.pem

-rw-r--r-- 1 root root 1.4K May 6 18:22 admin.pem

-rw-r--r-- 1 root root 1001 May 6 18:22 ca.csr

-rw------- 1 root root 1.7K May 6 18:22 ca-key.pem

-rw-r--r-- 1 root root 1.4K May 6 18:22 ca.pem

-rw-r--r-- 1 root root 1009 May 6 18:22 kube-proxy.csr

-rw------- 1 root root 1.7K May 6 18:22 kube-proxy-key.pem

-rw------- 1 root root 6.2K May 6 18:37 kube-proxy.kubeconfig

-rw-r--r-- 1 root root 1.4K May 6 18:22 kube-proxy.pem

-rw-r--r-- 1 root root 1.3K May 6 19:25 server.csr

-rw------- 1 root root 1.7K May 6 19:25 server-key.pem

-rw-r--r-- 1 root root 1.6K May 6 19:25 server.pem

4. Deploy a highly available etcd cluster

4.1 Download the binaries

etcd releases: https://github.com/coreos/etcd/releases

[root@k8s-master-128 ~]# mkdir soft

[root@k8s-master-128 ~]# cd soft/

[root@k8s-master-128 soft]#

[root@k8s-master-128 soft]# wget -c https://github.com/etcd-io/etcd/releases/download/v3.3.12/etcd-v3.3.12-linux-amd64.tar.gz

[root@k8s-master-128 soft]# tar zxf etcd-v3.3.12-linux-amd64.tar.gz

[root@k8s-master-128 soft]# cp -a etcd-v3.3.12-linux-amd64/{etcd,etcdctl} /opt/etcd/bin/

[root@k8s-master-128 soft]# /opt/etcd/bin/etcd --version

etcd Version: 3.3.12

Git SHA: d57e8b8

Go Version: go1.10.8

Go OS/Arch: linux/amd64

4.2 Create the etcd configuration files

Deploy etcd and have systemd manage the etcd process:

[root@k8s-master-128 ~]# cat etcd.sh

#!/bin/bash
# example: ./etcd.sh etcd01 172.16.194.128 etcd01=https://172.16.194.128:2380,etcd02=https://172.16.194.129:2380,etcd03=https://172.16.194.130:2380

ETCD_NAME=${1:-"etcd01"}                              # name of this etcd member
ETCD_IP=${2:-"127.0.0.1"}                             # IP of this etcd member
ETCD_CLUSTER=${3:-"etcd01=https://127.0.0.1:2380"}    # all members of the etcd cluster

WORK_DIR=/opt/etcd

cat <<EOF >${WORK_DIR}/cfg/etcd
#[Member]
ETCD_NAME="${ETCD_NAME}"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://${ETCD_IP}:2380"
ETCD_LISTEN_CLIENT_URLS="https://${ETCD_IP}:2379"

#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://${ETCD_IP}:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://${ETCD_IP}:2379"
ETCD_INITIAL_CLUSTER="${ETCD_CLUSTER}"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
EOF

cat <<EOF >/usr/lib/systemd/system/etcd.service
[Unit]
Description=Etcd Server
After=network.target
After=network-online.target
Wants=network-online.target

[Service]
Type=notify
EnvironmentFile=-${WORK_DIR}/cfg/etcd
ExecStart=${WORK_DIR}/bin/etcd \\
--name=\${ETCD_NAME} \\
--data-dir=\${ETCD_DATA_DIR} \\
--listen-peer-urls=\${ETCD_LISTEN_PEER_URLS} \\
--listen-client-urls=\${ETCD_LISTEN_CLIENT_URLS},http://127.0.0.1:2379 \\
--advertise-client-urls=\${ETCD_ADVERTISE_CLIENT_URLS} \\
--initial-advertise-peer-urls=\${ETCD_INITIAL_ADVERTISE_PEER_URLS} \\
--initial-cluster=\${ETCD_INITIAL_CLUSTER} \\
--initial-cluster-token=\${ETCD_INITIAL_CLUSTER_TOKEN} \\
--initial-cluster-state=new \\
--cert-file=${WORK_DIR}/ssl/server.pem \\
--key-file=${WORK_DIR}/ssl/server-key.pem \\
--peer-cert-file=${WORK_DIR}/ssl/server.pem \\
--peer-key-file=${WORK_DIR}/ssl/server-key.pem \\
--trusted-ca-file=${WORK_DIR}/ssl/ca.pem \\
--peer-trusted-ca-file=${WORK_DIR}/ssl/ca.pem
Restart=on-failure
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF

systemd itself is not covered here. Read up on unit files separately if you need the background.
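etcd.sh writes the unit file through an unquoted heredoc, so two kinds of variables coexist in it. `${WORK_DIR}` must be filled in when the script runs. `${ETCD_NAME}` and the other `ETCD_*` variables must reach the unit file literally, so that systemd can expand them from the EnvironmentFile. The minimal demonstration below shows the escaping that achieves this. `render_exec_start` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Demonstration: how an unquoted heredoc (cat <<EOF) treats $, \$ and \\.
#   ${work_dir}   -> expanded by the shell when the script runs
#   \${ETCD_NAME} -> written literally, left for systemd to expand later
#   \\ at the end of a line -> one literal backslash, which systemd then
#                              reads as a line continuation
render_exec_start() {
  local work_dir=$1
  cat <<EOF
ExecStart=${work_dir}/bin/etcd \\
--name=\${ETCD_NAME} \\
--data-dir=\${ETCD_DATA_DIR}
EOF
}

render_exec_start /opt/etcd
```

By contrast, a single trailing `\` inside an unquoted heredoc is consumed by the shell and joins the two lines before the file is written. That difference is why `\\` is needed here.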

4.3.1 Generate the configuration files

[root@k8s-master-128 ~]# chmod +x etcd.sh

[root@k8s-master-128 ~]# ./etcd.sh etcd01 172.16.194.128 etcd01=https://172.16.194.128:2380,etcd02=https://172.16.194.129:2380,etcd03=https://172.16.194.130:2380 # the etcd members talk to each other over TLS, so the URLs must use https
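The third argument is the same on every node and is easy to mistype. The sketch below builds it from name=IP pairs. `build_initial_cluster` is a hypothetical helper. It assumes the peer port 2380 over https, as used throughout this section.

```shell
#!/usr/bin/env bash
# Sketch: build the --initial-cluster value (third argument of etcd.sh)
# from name=IP pairs. Assumes peer port 2380 over https.
build_initial_cluster() {
  local pair out=""
  for pair in "$@"; do
    # ${out:+,} inserts a comma only once out is non-empty.
    out="${out}${out:+,}${pair%%=*}=https://${pair#*=}:2380"
  done
  echo "$out"
}

build_initial_cluster etcd01=172.16.194.128 etcd02=172.16.194.129 etcd03=172.16.194.130
```

On node 129, for instance: `./etcd.sh etcd02 172.16.194.129 "$(build_initial_cluster etcd01=172.16.194.128 etcd02=172.16.194.129 etcd03=172.16.194.130)"`.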

4.3.2 Check the configuration files

If startup fails, check /var/log/messages, or verify that the two files below are correct.

Main etcd configuration file:

[root@k8s-master-128 ~]# cat /opt/etcd/cfg/etcd

#[Member]
ETCD_NAME="etcd01"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://172.16.194.128:2380"
ETCD_LISTEN_CLIENT_URLS="https://172.16.194.128:2379"

#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://172.16.194.128:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://172.16.194.128:2379"
ETCD_INITIAL_CLUSTER="etcd01=https://172.16.194.128:2380,etcd02=https://172.16.194.129:2380,etcd03=https://172.16.194.130:2380"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"

etcd systemd unit file:

[root@k8s-master-128 ~]# cat /usr/lib/systemd/system/etcd.service

[Unit]
Description=Etcd Server
After=network.target
After=network-online.target
Wants=network-online.target

[Service]
Type=notify
EnvironmentFile=-/opt/etcd/cfg/etcd
ExecStart=/opt/etcd/bin/etcd \
--name=${ETCD_NAME} \
--data-dir=${ETCD_DATA_DIR} \
--listen-peer-urls=${ETCD_LISTEN_PEER_URLS} \
--listen-client-urls=${ETCD_LISTEN_CLIENT_URLS},http://127.0.0.1:2379 \
--advertise-client-urls=${ETCD_ADVERTISE_CLIENT_URLS} \
--initial-advertise-peer-urls=${ETCD_INITIAL_ADVERTISE_PEER_URLS} \
--initial-cluster=${ETCD_INITIAL_CLUSTER} \
--initial-cluster-token=${ETCD_INITIAL_CLUSTER_TOKEN} \
--initial-cluster-state=new \
--cert-file=/opt/etcd/ssl/server.pem \
--key-file=/opt/etcd/ssl/server-key.pem \
--peer-cert-file=/opt/etcd/ssl/server.pem \
--peer-key-file=/opt/etcd/ssl/server-key.pem \
--trusted-ca-file=/opt/etcd/ssl/ca.pem \
--peer-trusted-ca-file=/opt/etcd/ssl/ca.pem
Restart=on-failure
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target

Both files were generated by etcd.sh.
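Before starting etcd on each node, it helps to check the generated /opt/etcd/cfg/etcd for a common mistake. etcd expects the entry for its own name in ETCD_INITIAL_CLUSTER to match its ETCD_INITIAL_ADVERTISE_PEER_URLS, and it should refuse to start otherwise. `check_etcd_cfg` is a hypothetical helper that sources the file in a subshell. It assumes a single advertise URL per member.

```shell
#!/usr/bin/env bash
# Sketch: sanity-check a generated etcd config file (KEY="value" lines).
# Passes if "<ETCD_NAME>=<ETCD_INITIAL_ADVERTISE_PEER_URLS>" is one of the
# comma-separated entries of ETCD_INITIAL_CLUSTER.
check_etcd_cfg() (
  # The body is a subshell, so sourcing the file does not leak variables.
  . "$1"
  entry="${ETCD_NAME}=${ETCD_INITIAL_ADVERTISE_PEER_URLS}"
  case ",${ETCD_INITIAL_CLUSTER}," in
    *",${entry},"*)
      echo "ok: ${entry}"
      ;;
    *)
      echo "mismatch: ${entry} is not in ETCD_INITIAL_CLUSTER" >&2
      exit 1
      ;;
  esac
)
```

On each node, run `check_etcd_cfg /opt/etcd/cfg/etcd` before `systemctl start etcd`.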

4.3.3 Start etcd

[root@k8s-master-128 ~]# systemctl daemon-reload

[root@k8s-master-128 ~]# systemctl enable etcd

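[root@k8s-master-128 ~]# systemctl start etcd # on the first node this waits a long time and then reports failure. That is expected: it is waiting for the other members, and it recovers once they are up.

Job for etcd.service failed because a timeout was exceeded. See "systemctl status etcd.service" and "journalctl -xe" for details.

[root@k8s-master-128 ~]# ps -ef|grep etcd # the etcd process should be running

4.4 Deploy the other etcd nodes

That is the expected state of the first node. Next, configure the second and third nodes with the same steps, copying the required keys to each machine.

Here are the commands used to set up the other two nodes: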

[root@k8s-master-128 ~]# ssh k8s-node-129 mkdir -p /opt/etcd/{cfg,bin,ssl}

[root@k8s-master-128 ~]# ssh k8s-node-130 mkdir -p /opt/etcd/{cfg,bin,ssl}

[root@k8s-master-128 ~]# scp -r /opt/etcd/* k8s-node-129:/opt/etcd/

[root@k8s-master-128 ~]# scp -r /opt/etcd/* k8s-node-130:/opt/etcd/

[root@k8s-master-128 ~]# scp -r /root/etcd.sh k8s-node-129:/root/

[root@k8s-master-128 ~]# scp -r /root/etcd.sh k8s-node-130:/root/

Start the etcd nodes:

[root@k8s-node-129 ~]# ./etcd.sh etcd02 172.16.194.129 etcd01=https://172.16.194.128:2380,etcd02=https://172.16.194.129:2380,etcd03=https://172.16.194.130:2380

[root@k8s-node-129 ~]# systemctl daemon-reload

[root@k8s-node-129 ~]# systemctl enable etcd

[root@k8s-node-129 ~]# systemctl start etcd

[root@k8s-node-130 ~]# ./etcd.sh etcd03 172.16.194.130 etcd01=https://172.16.194.128:2380,etcd02=https://172.16.194.129:2380,etcd03=https://172.16.194.130:2380

[root@k8s-node-130 ~]# systemctl daemon-reload

[root@k8s-node-130 ~]# systemctl enable etcd

[root@k8s-node-130 ~]# systemctl start etcd

Once all three nodes are deployed, check any one of them: etcd now starts normally.

4.5 Check cluster health

[root@k8s-master-128 ~]# cd /opt/etcd/ssl/

[root@k8s-master-128 ssl]# /opt/etcd/bin/etcdctl --ca-file=ca.pem --cert-file=server.pem --key-file=server-key.pem --endpoints="https://172.16.194.128:2379,https://172.16.194.129:2379,https://172.16.194.130:2379" cluster-health

member 12a876ebdf52404b is healthy: got healthy result from https://172.16.194.130:2379

member 570d74cd507eba51 is healthy: got healthy result from https://172.16.194.129:2379

member f3e89385516e234d is healthy: got healthy result from https://172.16.194.128:2379

cluster is healthy

You can also stop one node and check the cluster status again to see what changes.
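Raft quorum arithmetic predicts the result of that experiment. A cluster of n members needs floor(n/2)+1 of them up to keep serving writes. For this three-member cluster the quorum is 2, so stopping one node should leave the remaining two serving, while stopping two should not. `etcd_quorum` and `etcd_tolerance` are hypothetical helper names.

```shell
#!/usr/bin/env bash
# Sketch: Raft quorum arithmetic for an etcd cluster of n members.
# quorum = floor(n/2) + 1; the cluster tolerates n - quorum failed members.
etcd_quorum()    { echo $(( $1 / 2 + 1 )); }
etcd_tolerance() { echo $(( $1 - ($1 / 2 + 1) )); }

for n in 1 2 3 4 5; do
  printf 'members=%d quorum=%d tolerated_failures=%d\n' \
    "$n" "$(etcd_quorum "$n")" "$(etcd_tolerance "$n")"
done
```

This arithmetic is also why etcd clusters are normally sized with an odd number of members. Going from 3 to 4 members raises the quorum without tolerating any extra failure.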

Tips:

Port 2379 is used for client communication.

Port 2380 is used for peer (member-to-member) communication.
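Both ports must be reachable between the etcd nodes. The sketch below checks them from any node. It relies on bash's /dev/tcp redirection and the coreutils `timeout` command. `port_open` and `check_etcd_ports` are hypothetical helper names.

```shell
#!/usr/bin/env bash
# Sketch: is TCP host:port reachable? Uses bash's /dev/tcp pseudo-device,
# so no nc or telnet is needed. Returns 0 if a connection could be opened.
port_open() {
  local host=$1 port=$2
  # timeout gives up after 3 seconds if packets are silently dropped;
  # the inner bash opens fd 3 on the connection and exits immediately.
  timeout 3 bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null
}

# Report the client (2379) and peer (2380) ports of each given etcd member.
check_etcd_ports() {
  local ip port
  for ip in "$@"; do
    for port in 2379 2380; do
      if port_open "$ip" "$port"; then
        echo "${ip}:${port} reachable"
      else
        echo "${ip}:${port} NOT reachable"
      fi
    done
  done
}
```

For example, run `check_etcd_ports 172.16.194.128 172.16.194.129 172.16.194.130` on each etcd node.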

5. Install Docker on the Node machines

For other versions and platforms, see the official docs: https://docs.docker.com/install

yum remove docker \
                  docker-client \
                  docker-client-latest \
                  docker-common \
                  docker-latest \
                  docker-latest-logrotate \
                  docker-logrotate \
                  docker-selinux \
                  docker-engine-selinux \
                  docker-engine

yum install -y yum-utils \
  device-mapper-persistent-data \
  lvm2

The official repo is often unreachable from mainland China, so use the Alibaba Cloud mirror instead:

yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

yum makecache fast

yum install docker-ce -y

mkdir -p /etc/docker/
cat << EOF > /etc/docker/daemon.json
{
  "registry-mirrors": ["https://registry.docker-cn.com"]
}
EOF

systemctl enable docker && systemctl start docker

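6. Deploy the Flannel network

Overlay network: a virtual network layered on top of the underlying network, in which hosts are connected by virtual links.

VXLAN: encapsulates the original packet in UDP, uses the underlying network's IP/MAC addresses as the outer headers, and transmits it over Ethernet. At the destination, the tunnel endpoint decapsulates it and delivers the packet to the target address.

Flannel: one kind of overlay network. It also wraps the original packet inside another packet for routing and forwarding. It currently supports UDP, VXLAN, Host-GW, AWS VPC, GCE routes and other forwarding backends.

Other mainstream approaches to multi-host container networking: tunneling (Weave, Open vSwitch) and routing (Calico).

6.1 Flannel network architecture

For a more detailed view, see the diagram below:

6.2 Register the Flannel configuration in etcd

Note: Flannel is deployed on the Kubernetes Node machines.

Write the subnet range to be allocated into etcd for flanneld to use: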

[root@k8s-node-129 ~]# cd /opt/etcd/ssl/

[root@k8s-node-129 ssl]# /opt/etcd/bin/etcdctl --ca-file=ca.pem --cert-file=server.pem --key-file=server-key.pem --endpoints="https://172.16.194.128:2379,https://172.16.194.129:2379,https://172.16.194.130:2379" set /coreos.com/network/config '{ "Network": "172.17.0.0/16", "Backend": {"Type": "vxlan"}}'

{ "Network": "172.17.0.0/16", "Backend": {"Type": "vxlan"}}

Read the setting back with get:

[root@k8s-node-129 ssl]# /opt/etcd/bin/etcdctl --ca-file=ca.pem --cert-file=server.pem --key-file=server-key.pem --endpoints="https://172.16.194.128:2379,https://172.16.194.129:2379,https://172.16.194.130:2379" get /coreos.com/network/config

{ "Network": "172.17.0.0/16", "Backend": {"Type": "vxlan"}} # getting back what we wrote confirms the write succeeded
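The Network value written above is a /16. The subnets flanneld hands out later in this article are /24s (172.17.73.0/24 and 172.17.16.0/24), which is consistent with flannel's default SubnetLen of 24. The sketch below works through the resulting capacity; the helper names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: capacity of a flannel "Network" range split into per-node subnets.
# Assumption: SubnetLen is 24 (flannel's default).

# Number of subnets of length subnet_len inside a /network_prefix range.
subnet_count() {
  local network_prefix=$1 subnet_len=${2:-24}
  echo $(( 1 << (subnet_len - network_prefix) ))
}

# Usable host addresses in one subnet (network and broadcast excluded).
usable_hosts() {
  local prefix=$1
  echo $(( (1 << (32 - prefix)) - 2 ))
}

echo "per-node subnets in 172.17.0.0/16: $(subnet_count 16 24)"
echo "usable addresses per /24: $(usable_hosts 24)"
```

The subnet count is an upper bound, since flannel's optional SubnetMin/SubnetMax settings can narrow the range. Within each /24, docker0 takes the .1 address as the gateway.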

6.3 Download the binaries

Download: https://github.com/coreos/flannel/releases

[root@k8s-node-129 ~]# wget https://github.com/coreos/flannel/releases/download/v0.11.0/flannel-v0.11.0-linux-amd64.tar.gz

[root@k8s-node-129 ~]# tar zxf flannel-v0.11.0-linux-amd64.tar.gz

[root@k8s-node-129 ~]# mkdir -p /opt/kubernetes/{bin,cfg,ssl,logs} # create the Kubernetes working directories

[root@k8s-node-129 ~]# cp flanneld mk-docker-opts.sh /opt/kubernetes/bin/ # put the flanneld files in the Kubernetes working directory

6.4 Create the Flannel configuration files

[root@k8s-node-129 ~]# cat flanneld.sh

#!/bin/bash

ETCD_ENDPOINTS=${1:-"http://127.0.0.1:2379"}  # etcd endpoints, passed as the first argument

# Generate the flanneld configuration file
cat <<EOF >/opt/kubernetes/cfg/flanneld
FLANNEL_OPTIONS="--etcd-endpoints=${ETCD_ENDPOINTS} \
-etcd-cafile=/opt/etcd/ssl/ca.pem \
-etcd-certfile=/opt/etcd/ssl/server.pem \
-etcd-keyfile=/opt/etcd/ssl/server-key.pem"
EOF

# Generate the flanneld unit file so that systemd manages flanneld
cat <<EOF >/usr/lib/systemd/system/flanneld.service
[Unit]
Description=Flanneld overlay address etcd agent
After=network-online.target network.target
Before=docker.service

[Service]
Type=notify
EnvironmentFile=/opt/kubernetes/cfg/flanneld
ExecStart=/opt/kubernetes/bin/flanneld --ip-masq \$FLANNEL_OPTIONS
ExecStartPost=/opt/kubernetes/bin/mk-docker-opts.sh -k DOCKER_NETWORK_OPTIONS -d /run/flannel/subnet.env
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF

# Configure Docker to start with the subnet assigned by flanneld
cat <<EOF >/usr/lib/systemd/system/docker.service
[Unit]
Description=Docker Application Container Engine
Documentation=https://docs.docker.com
After=network-online.target firewalld.service
Wants=network-online.target

[Service]
Type=notify
# Added: load the subnet settings that flanneld assigned to this node
EnvironmentFile=/run/flannel/subnet.env
# Added: pass those settings (--bip, --ip-masq, --mtu) to dockerd
ExecStart=/usr/bin/dockerd \$DOCKER_NETWORK_OPTIONS
ExecReload=/bin/kill -s HUP \$MAINPID
LimitNOFILE=infinity
LimitNPROC=infinity
LimitCORE=infinity
TimeoutStartSec=0
Delegate=yes
KillMode=process
Restart=on-failure
StartLimitBurst=3
StartLimitInterval=60s

[Install]
WantedBy=multi-user.target
EOF

6.5 Start flanneld on the node

6.5.1 Generate the configuration files

[root@k8s-node-129 ~]# chmod +x flanneld.sh

[root@k8s-node-129 ~]# ./flanneld.sh https://172.16.194.128:2379,https://172.16.194.129:2379,https://172.16.194.130:2379

6.5.2 Check the configuration files

flanneld configuration file:

[root@k8s-node-129 ~]# cat /opt/kubernetes/cfg/flanneld

FLANNEL_OPTIONS="--etcd-endpoints=https://172.16.194.128:2379,https://172.16.194.129:2379,https://172.16.194.130:2379 -etcd-cafile=/opt/etcd/ssl/ca.pem -etcd-certfile=/opt/etcd/ssl/server.pem -etcd-keyfile=/opt/etcd/ssl/server-key.pem"

flanneld systemd unit file:

[root@k8s-node-129 ~]# cat /usr/lib/systemd/system/flanneld.service

[Unit]

Description=Flanneld overlay address etcd agent

After=network-online.target network.target

Before=docker.service

[Service]

Type=notify

EnvironmentFile=/opt/kubernetes/cfg/flanneld

ExecStart=/opt/kubernetes/bin/flanneld --ip-masq $FLANNEL_OPTIONS

ExecStartPost=/opt/kubernetes/bin/mk-docker-opts.sh -k DOCKER_NETWORK_OPTIONS -d /run/flannel/subnet.env

Restart=on-failure

[Install]

WantedBy=multi-user.target

Docker systemd unit file:

[root@k8s-node-129 ~]# cat /usr/lib/systemd/system/docker.service

[Unit]

Description=Docker Application Container Engine

Documentation=https://docs.docker.com

After=network-online.target firewalld.service

Wants=network-online.target

[Service]

Type=notify

# points Docker at the network settings file for flanneld's subnet

EnvironmentFile=/run/flannel/subnet.env

ExecStart=/usr/bin/dockerd $DOCKER_NETWORK_OPTIONS

ExecReload=/bin/kill -s HUP $MAINPID

LimitNOFILE=infinity

LimitNPROC=infinity

LimitCORE=infinity

TimeoutStartSec=0

Delegate=yes

KillMode=process

Restart=on-failure

StartLimitBurst=3

StartLimitInterval=60s

[Install]

WantedBy=multi-user.target

6.5.3 Start flanneld

Start flanneld and restart Docker:

systemctl daemon-reload

systemctl enable flanneld

systemctl start flanneld

systemctl restart docker

6.6 Check the Flannel deployment

flanneld's network settings file (Docker builds its bridge subnet from this file):

[root@k8s-node-129 ~]# cat /run/flannel/subnet.env

DOCKER_OPT_BIP="--bip=172.17.73.1/24"

DOCKER_OPT_IPMASQ="--ip-masq=false"

DOCKER_OPT_MTU="--mtu=1450"

DOCKER_NETWORK_OPTIONS=" --bip=172.17.73.1/24 --ip-masq=false --mtu=1450"
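mk-docker-opts.sh turns flanneld's lease into the Docker options shown above. The sketch below is a hypothetical re-implementation of just that conversion. It shows the logic and is not a replacement for the real script. It assumes flanneld's own subnet file defines FLANNEL_SUBNET, FLANNEL_MTU and FLANNEL_IPMASQ. flanneld was started with --ip-masq and therefore handles masquerading itself, so Docker's own masquerading is switched off. That is where --ip-masq=false comes from.

```shell
#!/usr/bin/env bash
# Hypothetical sketch, NOT the real mk-docker-opts.sh: converts a flanneld
# subnet file into a DOCKER_NETWORK_OPTIONS line.
# Assumes the input defines FLANNEL_SUBNET, FLANNEL_MTU and FLANNEL_IPMASQ.
flannel_to_docker_opts() (
  # The body is a subshell, so sourcing the file leaks nothing.
  . "$1"
  # If flanneld masquerades (FLANNEL_IPMASQ=true), Docker must not do it too.
  docker_ipmasq=true
  if [ "${FLANNEL_IPMASQ:-false}" = "true" ]; then
    docker_ipmasq=false
  fi
  printf 'DOCKER_NETWORK_OPTIONS=" --bip=%s --ip-masq=%s --mtu=%s"\n' \
    "$FLANNEL_SUBNET" "$docker_ipmasq" "$FLANNEL_MTU"
)
```

With FLANNEL_SUBNET=172.17.73.1/24, FLANNEL_MTU=1450 and FLANNEL_IPMASQ=true as input, the function produces the same DOCKER_NETWORK_OPTIONS line as node 129's file.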

[root@k8s-node-129 ~]# ip a

... (omitted) ...

3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default

link/ether 02:42:1a:42:15:83 brd ff:ff:ff:ff:ff:ff

inet 172.17.73.1/24 brd 172.17.73.255 scope global docker0

valid_lft forever preferred_lft forever

4: flannel.1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UNKNOWN group default

link/ether 3a:d5:fa:a7:57:c6 brd ff:ff:ff:ff:ff:ff

inet 172.17.73.0/32 scope global flannel.1

valid_lft forever preferred_lft forever

inet6 fe80::38d5:faff:fea7:57c6/64 scope link

valid_lft forever preferred_lft forever

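The docker0 interface now has an address (172.17.73.1/24) inside the 172.17.0.0/16 range we configured in etcd; 172.17.73.0/24 is the subnet flanneld was assigned for this node.

A new flannel.1 interface, the VXLAN device, has also appeared on this node.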
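The ip a output shows flannel.1 with mtu 1450. Assuming the physical NIC uses the common 1500-byte MTU, the 50-byte difference is the VXLAN-over-IPv4 encapsulation overhead. The worked sketch below shows the arithmetic. `vxlan_mtu` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: where flannel.1's MTU of 1450 comes from.
# VXLAN over IPv4 wraps each inner IP packet in:
#   inner Ethernet header (the original frame is carried inside)  14 bytes
#   VXLAN header                                                   8 bytes
#   outer UDP header                                               8 bytes
#   outer IPv4 header                                             20 bytes
VXLAN_OVERHEAD=$(( 14 + 8 + 8 + 20 ))

# MTU left for the overlay, given the MTU of the physical NIC (e.g. eth0).
vxlan_mtu() {
  local underlay_mtu=${1:-1500}
  echo $(( underlay_mtu - VXLAN_OVERHEAD ))
}

echo "VXLAN overhead: ${VXLAN_OVERHEAD} bytes"
echo "flannel.1 MTU on a 1500-byte network: $(vxlan_mtu 1500)"
```

The same value explains --mtu=1450 in subnet.env. Containers then send packets that still fit within the physical network's 1500-byte MTU after encapsulation.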

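6.7 Deploy Flannel on the other Node machines

Per the plan, the cluster has two Node machines. Node 129 is done. Deploy node 130 with the same steps, and do the same for any further nodes.

Checking the other node shows that the deployment is working: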

[root@k8s-node-130 ~]# cat /run/flannel/subnet.env

DOCKER_OPT_BIP="--bip=172.17.16.1/24"

DOCKER_OPT_IPMASQ="--ip-masq=false"

DOCKER_OPT_MTU="--mtu=1450"

DOCKER_NETWORK_OPTIONS=" --bip=172.17.16.1/24 --ip-masq=false --mtu=1450"

[root@k8s-node-130 ~]# ip a

... (omitted) ...

3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default

link/ether 02:42:f4:21:f9:94 brd ff:ff:ff:ff:ff:ff

inet 172.17.16.1/24 brd 172.17.16.255 scope global docker0

valid_lft forever preferred_lft forever

4: flannel.1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UNKNOWN group default

link/ether e2:cf:ca:a9:0f:98 brd ff:ff:ff:ff:ff:ff

inet 172.17.16.0/32 scope global flannel.1

valid_lft forever preferred_lft forever

inet6 fe80::e0cf:caff:fea9:f98/64 scope link

valid_lft forever preferred_lft forever

6.8 Inspect the network records in etcd

When each node deploys flanneld, the related information is recorded in the etcd cluster. Let's take a look.

ls /coreos.com/network/ lists the network configuration stored in etcd:

[root@k8s-node-129 ssl]# /opt/etcd/bin/etcdctl --ca-file=ca.pem --cert-file=server.pem --key-file=server-key.pem --endpoints="https://172.16.194.128:2379,https://172.16.194.129:2379,https://172.16.194.130:2379" ls /coreos.com/network/

ls /coreos.com/network/subnets lists the subnets flanneld has allocated:

[root@k8s-node-129 ssl]# /opt/etcd/bin/etcdctl --ca-file=ca.pem --cert-file=server.pem --key-file=server-key.pem --endpoints="https://172.16.194.128:2379,https://172.16.194.129:2379,https://172.16.194.130:2379" ls /coreos.com/network/subnets

/coreos.com/network/subnets/172.17.73.0-24

/coreos.com/network/subnets/172.17.16.0-24

get /coreos.com/network/subnets/172.17.73.0-24 shows the details of that subnet:

[root@k8s-node-129 ssl]# /opt/etcd/bin/etcdctl --ca-file=ca.pem --cert-file=server.pem --key-file=server-key.pem --endpoints="https://172.16.194.128:2379,https://172.16.194.129:2379,https://172.16.194.130:2379" get /coreos.com/network/subnets/172.17.73.0-24

{"PublicIP":"172.16.194.129","BackendType":"vxlan","BackendData":{"VtepMAC":"3a:d5:fa:a7:57:c6"}}

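What this means:

The subnet record stores the PublicIP 172.16.194.129, which is node 129's host address on the underlying network. It also stores the VtepMAC 3a:d5:fa:a7:57:c6, which is the MAC address of that node's flannel.1 device. Traffic for the 172.17.73.0/24 subnet is therefore encapsulated and sent to 172.16.194.129.

So any host that has joined the Flannel network can use the records in etcd to reach every other node's subnet.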
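To see the whole subnet-to-host table at a glance, the records above can be parsed with plain shell tools. The sketch below assumes the key naming and the compact one-line JSON values shown above. `subnet_key_to_cidr` and `record_public_ip` are hypothetical helpers.

```shell
#!/usr/bin/env bash
# Sketch: turn flanneld's etcd subnet records into "subnet -> host".
# Assumes keys like /coreos.com/network/subnets/172.17.73.0-24 and the
# compact one-line JSON values shown above; no jq required.

# /coreos.com/network/subnets/172.17.73.0-24 -> 172.17.73.0/24
subnet_key_to_cidr() {
  local key=${1##*/}            # last path component: 172.17.73.0-24
  echo "${key%-*}/${key##*-}"   # split on the final "-"
}

# {"PublicIP":"172.16.194.129",...} -> 172.16.194.129
record_public_ip() {
  sed -n 's/.*"PublicIP":"\([^"]*\)".*/\1/p' <<< "$1"
}

key=/coreos.com/network/subnets/172.17.73.0-24
value='{"PublicIP":"172.16.194.129","BackendType":"vxlan","BackendData":{"VtepMAC":"3a:d5:fa:a7:57:c6"}}'
echo "$(subnet_key_to_cidr "$key") -> $(record_public_ip "$value")"
```

Feed the functions each key printed by the ls command and the value returned by get for that key to list every node's subnet and host.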

6.9 Test Flannel connectivity

We have deployed the network on two nodes. Their Flannel addresses are:

k8s-node-129

flannel.1: 172.17.73.0/32

docker0: 172.17.73.1/24

k8s-node-130

flannel.1: 172.17.16.0/32

docker0: 172.17.16.1/24

Pinging between them works even though they are on different subnets. Why?

[root@k8s-node-129 ~]# ping -c 2 172.17.16.1

PING 172.17.16.1 (172.17.16.1) 56(84) bytes of data.

64 bytes from 172.17.16.1: icmp_seq=1 ttl=64 time=0.503 ms

64 bytes from 172.17.16.1: icmp_seq=2 ttl=64 time=0.537 ms

[root@k8s-node-130 ~]# ping -c 2 172.17.73.1

PING 172.17.73.1 (172.17.73.1) 56(84) bytes of data.

64 bytes from 172.17.73.1: icmp_seq=1 ttl=64 time=0.382 ms

64 bytes from 172.17.73.1: icmp_seq=2 ttl=64 time=0.621 ms

With that question in mind, let's look at the routing table on a node:

[root@k8s-node-129 ~]# route -n

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

0.0.0.0 172.16.194.2 0.0.0.0 UG 100 0 0 eth0

172.16.194.0 0.0.0.0 255.255.255.0 U 100 0 0 eth0

172.17.16.0 172.17.16.0 255.255.255.0 UG 0 0 0 flannel.1

172.17.73.0 0.0.0.0 255.255.255.0 U 0 0 0 docker0

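What this means:

docker0 is on 172.17.73.0/24, the node's own subnet.

Rule: traffic for 172.17.16.0/24 leaves through flannel.1 (via gateway 172.17.16.0, node 130's flannel.1 address), and flannel.1 can reach the other Flannel nodes' subnets directly.

Rule: 172.16.194.0/24 is the host network, and its exit is the physical NIC eth0.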
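The kernel chooses among these routes by longest-prefix match. The most specific route containing the destination wins, and the 0.0.0.0 default route applies only when nothing else matches. The sketch below redoes that lookup in pure bash over the routes from the route -n output above, purely for illustration. `route_for` and `ip_to_int` are hypothetical helpers. Real routing also considers metrics, which are ignored here.

```shell
#!/usr/bin/env bash
# Illustration only: longest-prefix route lookup in pure bash.
# Routes copied from k8s-node-129's `route -n` output (prefix, interface).
ROUTES=(
  "0.0.0.0/0 eth0"
  "172.16.194.0/24 eth0"
  "172.17.16.0/24 flannel.1"
  "172.17.73.0/24 docker0"
)

# Dotted quad -> 32-bit integer.
ip_to_int() {
  local IFS=.
  local -a o
  read -r -a o <<< "$1"
  echo "$(( (o[0] << 24) | (o[1] << 16) | (o[2] << 8) | o[3] ))"
}

# Print the interface of the most specific route containing the address.
route_for() {
  local dst r net ifc len mask best_len=-1 best_if=""
  dst=$(ip_to_int "$1")
  for r in "${ROUTES[@]}"; do
    read -r net ifc <<< "$r"
    len=${net#*/}
    # Netmask for this prefix length; /0 matches everything.
    mask=$(( len == 0 ? 0 : (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
    if (( (dst & mask) == ($(ip_to_int "${net%/*}") & mask) && len > best_len )); then
      best_len=$len
      best_if=$ifc
    fi
  done
  echo "$best_if"
}

for ip in 172.17.16.1 172.17.73.5 172.16.194.130 8.8.8.8; do
  echo "$ip -> $(route_for "$ip")"
done
```

The first line of output, `172.17.16.1 -> flannel.1`, is the step the walkthrough starts from.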

Using these routing rules, let's trace a request as an example:

k8s-node-129 (on 172.17.73.0/24) sends a ping to k8s-node-130 (on 172.17.16.0/24):

[root@k8s-node-129 ~]# ping -c 2 172.17.16.1

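The request flows like this:

k8s-node-129 matches its local route: traffic for 172.17.16.0/24 goes out through flannel.1.

flannel.1 is a VXLAN device. From the subnet records that flanneld read from etcd, the node knows that 172.17.16.0-24 belongs to the host 172.16.194.130. The kernel therefore wraps the packet in a UDP packet addressed to 172.16.194.130.

k8s-node-129 then matches another local route: traffic for 172.16.194.0/24 goes out through eth0 (the physical NIC).

Host 172.16.194.129 sends the encapsulated packet out of eth0 to 172.16.194.130 (etcd records 172.16.194.130 as the PublicIP for 172.17.16.0/24).

On 172.16.194.130, flannel.1 decapsulates the packet and hands it to docker0. The reply returns the same way in reverse, completing the exchange.

The Flannel network is now deployed.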
