Besides increasing sales profits, association rules can also be used in other fields. In medical diagnosis, for instance, understanding which symptoms tend to co-occur can help improve patient care and medicine prescription.

Association rules analysis is a technique to uncover how items are associated with each other. There are three common ways to measure association.

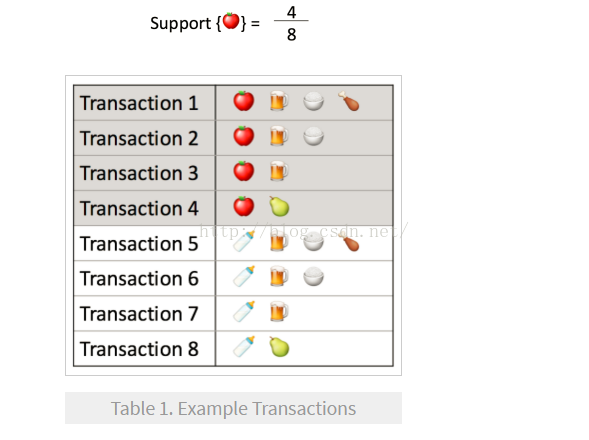

Measure 1: Support. This says how popular an itemset is, as measured by the proportion of transactions in which an itemset appears.
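As a minimal sketch of the support measure, the snippet below counts the share of transactions that contain a given itemset. The `transactions` list and the `support` helper are made up for illustration; they are not the data behind the article's figures.

```python
# Hypothetical transactions for illustration (not the article's data).
transactions = [
    {"apple", "beer", "rice", "chicken"},
    {"apple", "beer", "rice"},
    {"apple", "beer"},
    {"apple", "pear"},
    {"milk", "beer", "rice", "chicken"},
    {"milk", "beer", "rice"},
    {"milk", "beer"},
    {"milk", "pear"},
]


def support(itemset, transactions):
    """Fraction of transactions that contain every item in `itemset`."""
    itemset = set(itemset)
    hits = sum(1 for t in transactions if itemset <= t)
    return hits / len(transactions)


print(support({"apple"}, transactions))          # 0.5
print(support({"apple", "beer"}, transactions))  # 0.375
```

Here {apple} appears in 4 of 8 transactions, and {apple, beer} in 3 of 8.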

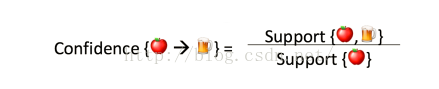

Measure 2: Confidence. This says how likely item Y is to be purchased when item X is purchased, expressed as {X->Y}. This is measured by the proportion of transactions with item X in which item Y also appears.

One drawback of the confidence measure is that it might misrepresent the importance of an association. This is because it only accounts for how popular apples are, but not beers. If beers are also very popular in general, there will be a higher chance that a transaction containing apples will also contain beers, thus inflating the confidence measure. To account for the base popularity of both constituent items, we use a third measure called lift.

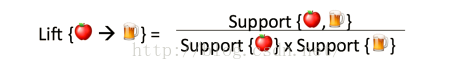

Measure 3: Lift. This says how likely item Y is to be purchased when item X is purchased, while controlling for how popular item Y is.

In Table 1, the lift of {apple->beer} is 1, which implies no association between the items. A lift value greater than 1 means that item Y is more likely to be bought if item X is bought, while a value less than 1 means that item Y is less likely to be bought if item X is bought.
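The two measures can be sketched in a few lines, assuming confidence is support({X, Y}) / support({X}) and lift is that confidence divided by support({Y}). The transactions below are hypothetical, chosen so that {apple->beer} happens to have a lift of 1; they are not a reproduction of Table 1.

```python
# Hypothetical transactions for illustration (not the article's Table 1).
transactions = [
    {"apple", "beer", "rice", "chicken"},
    {"apple", "beer", "rice"},
    {"apple", "beer"},
    {"apple", "pear"},
    {"milk", "beer", "rice", "chicken"},
    {"milk", "beer", "rice"},
    {"milk", "beer"},
    {"milk", "pear"},
]


def support(itemset, transactions):
    """Fraction of transactions that contain every item in `itemset`."""
    itemset = set(itemset)
    return sum(1 for t in transactions if itemset <= t) / len(transactions)


def confidence(x, y, transactions):
    """Share of transactions containing X that also contain Y."""
    return support(set(x) | set(y), transactions) / support(x, transactions)


def lift(x, y, transactions):
    """Confidence of {X->Y} divided by the base popularity of Y."""
    return confidence(x, y, transactions) / support(y, transactions)


print(confidence({"apple"}, {"beer"}, transactions))  # 0.75
print(lift({"apple"}, {"beer"}, transactions))        # 1.0
print(lift({"beer"}, {"rice"}, transactions) > 1)     # True
```

In this toy data, 75% of apple transactions contain beer, but 75% of all transactions contain beer anyway, so the lift is 1 despite the seemingly high confidence.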

It is easy to calculate the popularity of a given itemset, like {beer, soda}. However, a business owner would not typically ask about individual itemsets. Rather, the owner would be more interested in having a complete list of popular itemsets.

Apriori Algorithm:

Put simply, the apriori principle states that if an itemset is infrequent, then all its supersets must also be infrequent. This means that if {beer} was found to be infrequent, we can expect {beer, pizza} to be equally or even more infrequent. So in consolidating the list of popular itemsets, we need not consider {beer, pizza}, nor any other itemset configuration that contains beer.
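The property behind this pruning is that adding an item to an itemset can never raise its support. A quick check on made-up data is sketched below; `monotonicity_violations` is a hypothetical helper name, and the transactions are illustrative only.

```python
from itertools import combinations

# Hypothetical transactions for illustration (not the article's data).
transactions = [
    {"apple", "beer", "rice", "chicken"},
    {"apple", "beer", "rice"},
    {"apple", "beer"},
    {"apple", "pear"},
    {"milk", "beer", "rice", "chicken"},
    {"milk", "beer", "rice"},
    {"milk", "beer"},
    {"milk", "pear"},
]


def support(itemset, transactions):
    """Fraction of transactions that contain every item in `itemset`."""
    itemset = set(itemset)
    return sum(1 for t in transactions if itemset <= t) / len(transactions)


def monotonicity_violations(transactions, max_size=2):
    """List (subset, superset) pairs where the superset has HIGHER support."""
    items = sorted(set().union(*transactions))
    violations = []
    for size in range(1, max_size + 1):
        for subset in combinations(items, size):
            for extra in items:
                if extra in subset:
                    continue
                superset = set(subset) | {extra}
                if support(superset, transactions) > support(subset, transactions):
                    violations.append((set(subset), superset))
    return violations


print(monotonicity_violations(transactions))  # []
print(support({"beer"}, transactions), support({"beer", "rice"}, transactions))  # 0.75 0.5
```

No violations are found, which is expected: every transaction containing the larger itemset also contains the smaller one.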

Finding itemsets with high support:

Using the apriori principle, the number of itemsets that have to be examined can be pruned, and the shortlist of popular itemsets can be obtained in these steps:

Step 0: Start with itemsets containing just a single item, such as {apple} and {pear}.

Step 1: Determine the support for the itemsets. Keep the itemsets that meet your minimum support threshold, and remove itemsets that do not.

Step 2: Using the itemsets you have kept from Step 1, generate all the possible itemset configurations.

Step 3: Repeat Steps 1 and 2 until there are no more new itemsets.

As seen in the animation, {apple} was determined to have low support, hence it was removed and all other itemset configurations that contain apple need not be considered. This reduced the number of itemsets to consider by more than half.
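Steps 0 to 3 can be sketched as a short loop. This is a simplified illustration rather than an optimized implementation: the `apriori` function, the transactions, and the 0.5 threshold are all assumptions made for the example.

```python
from itertools import combinations

# Hypothetical transactions for illustration (not the article's data).
transactions = [
    {"apple", "beer", "rice", "chicken"},
    {"apple", "beer", "rice"},
    {"apple", "beer"},
    {"apple", "pear"},
    {"milk", "beer", "rice", "chicken"},
    {"milk", "beer", "rice"},
    {"milk", "beer"},
    {"milk", "pear"},
]


def support(itemset, transactions):
    """Fraction of transactions that contain every item in `itemset`."""
    itemset = set(itemset)
    return sum(1 for t in transactions if itemset <= t) / len(transactions)


def apriori(transactions, min_support):
    """Return {itemset: support} for every itemset meeting `min_support`."""
    # Step 0: start with single-item candidates.
    candidates = {frozenset([item]) for item in set().union(*transactions)}
    frequent = {}
    k = 1
    while candidates:
        # Step 1: keep only candidates that meet the support threshold.
        kept = {}
        for c in candidates:
            s = support(c, transactions)
            if s >= min_support:
                kept[c] = s
        frequent.update(kept)
        # Step 2: join kept itemsets into candidates one item larger,
        # dropping any candidate that has a subset which was not kept.
        k += 1
        joined = {a | b for a in kept for b in kept if len(a | b) == k}
        candidates = {
            c for c in joined
            if all(frozenset(sub) in kept for sub in combinations(c, k - 1))
        }
        # Step 3: the loop repeats until no new candidates remain.
    return frequent


frequent = apriori(transactions, min_support=0.5)
for itemset, s in sorted(frequent.items(), key=lambda kv: (len(kv[0]), sorted(kv[0]))):
    print(sorted(itemset), s)
```

With this toy data and threshold, {chicken} and {pear} are dropped in the first pass, so no pair or triple containing them is ever counted; only {beer, rice} survives among the pairs.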

Finding item rules with high confidence or lift:

We have seen how the apriori algorithm can be used to identify itemsets with high support. The same principle can also be used to identify item associations with high confidence or lift. Finding rules with high confidence or lift is less computationally taxing once high-support itemsets have been identified, because confidence and lift values are calculated using support values.

Take for example the task of finding high-confidence rules. If the rule

{beer, chips->apple}

has low confidence, all other rules with the same constituent items and with apple on the right hand side would have low confidence too. Specifically, the rules

{beer->apple, chips}

{chips->apple, beer}

would have low confidence as well. As before, lower-level candidate item rules can be pruned using the apriori principle, so that fewer candidate rules need to be examined.
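The rule pruning described above can be sketched as follows: start with single-item right-hand sides, and only grow a right-hand side from those that passed the confidence threshold. The function name `rules_from_itemset`, the data, and the 0.6 threshold are assumptions for illustration; the toy data has no chips, so the itemset {apple, beer, rice} stands in for the article's example.

```python
# Hypothetical transactions for illustration (not the article's data).
transactions = [
    {"apple", "beer", "rice", "chicken"},
    {"apple", "beer", "rice"},
    {"apple", "beer"},
    {"apple", "pear"},
    {"milk", "beer", "rice", "chicken"},
    {"milk", "beer", "rice"},
    {"milk", "beer"},
    {"milk", "pear"},
]


def support(itemset, transactions):
    """Fraction of transactions that contain every item in `itemset`."""
    itemset = set(itemset)
    return sum(1 for t in transactions if itemset <= t) / len(transactions)


def rules_from_itemset(itemset, transactions, min_confidence):
    """Return (lhs, rhs, confidence) for rules built from one itemset."""
    itemset = frozenset(itemset)
    total = support(itemset, transactions)
    rules = []
    # Begin with every single item as a candidate right-hand side.
    consequents = [frozenset([item]) for item in itemset]
    while consequents:
        passed = []
        for rhs in consequents:
            lhs = itemset - rhs
            if not lhs:
                continue  # a rule needs a non-empty left-hand side
            conf = total / support(lhs, transactions)
            if conf >= min_confidence:
                rules.append((lhs, rhs, conf))
                passed.append(rhs)
        # Grow right-hand sides only from those that passed: moving more
        # items to the right can only lower the confidence further.
        size = len(consequents[0]) + 1
        consequents = list({a | b for a in passed for b in passed if len(a | b) == size})
    return rules


rules = rules_from_itemset({"apple", "beer", "rice"}, transactions, min_confidence=0.6)
for lhs, rhs, conf in rules:
    print(sorted(lhs), "->", sorted(rhs), round(conf, 3))
```

Here {beer, rice->apple} has a confidence of only 0.5 and is dropped, so no rule with apple plus another item on the right-hand side is ever evaluated.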
