背景

在我们的内部 Monkey 测试中频繁发生以下特征的 watchdog、anr 等现象,某个进程所有线程都被 ptrace_stop 以及其中一线程状态处于 pipe_read。

sysTid=xx1 ptrace_stop

sysTid=xx2 ptrace_stop

sysTid=xx3 pipe_read

原问题

system_server 进程与 vold 之间的 binder 通讯上阻塞了。

"main" prio=5 tid=1 Native

| group="main" sCount=1 ucsCount=0 flags=1 obj=0x722098b8 self=0xb400006f29242c00

| sysTid=1584 nice=-2 cgrp=foreground sched=0/0 handle=0x6fdbaa84f8

| state=S schedstat=( 368940832460 343970545986 1269658 ) utm=22380 stm=14513 core=4 HZ=100

| stack=0x7ff65eb000-0x7ff65ed000 stackSize=8188KB

| held mutexes=

native: #00 pc 000eed78 /apex/com.android.runtime/lib64/bionic/libc.so (__ioctl+8) (BuildId: 5bf69b75cc8574e27203cdbd408d1e67)

native: #01 pc 000a0e0c /apex/com.android.runtime/lib64/bionic/libc.so (ioctl+156) (BuildId: 5bf69b75cc8574e27203cdbd408d1e67)

native: #02 pc 000614d8 /system/lib64/libbinder.so (android::IPCThreadState::talkWithDriver+280) (BuildId: 0bccce6a77300aaeb158148372160959)

native: #03 pc 0006289c /system/lib64/libbinder.so (android::IPCThreadState::waitForResponse+60) (BuildId: 0bccce6a77300aaeb158148372160959)

native: #04 pc 000625d8 /system/lib64/libbinder.so (android::IPCThreadState::transact+216) (BuildId: 0bccce6a77300aaeb158148372160959)

native: #05 pc 0007ae78 /system/lib64/libbinder.so (android::BpBinder::transact+232) (BuildId: 0bccce6a77300aaeb158148372160959)

native: #06 pc 001a2118 /system/lib64/libandroid_runtime.so (android_os_BinderProxy_transact+152) (BuildId: ee14d1b8806d6c50e82ba389f69ce923)

at android.os.BinderProxy.transactNative(Native method)

at android.os.BinderProxy.transact(BinderProxy.java:621)

at android.os.IVold$Stub$Proxy.abortIdleMaint(IVold.java:2022)

at com.android.server.StorageManagerService.abortIdleMaint(StorageManagerService.java:2845)

at com.android.server.StorageManagerService$2.onReceive(StorageManagerService.java:906)

at android.app.LoadedApk$ReceiverDispatcher$Args.lambda$getRunnable$0(LoadedApk.java:1866)

at android.app.LoadedApk$ReceiverDispatcher$Args.$r8$lambda$gDuJqgxY6Zb-ifyeubKeivTLAwk(unavailable:0)

at android.app.LoadedApk$ReceiverDispatcher$Args$$ExternalSyntheticLambda0.run(unavailable:2)

at android.os.Handler.handleCallback(Handler.java:958)

at android.os.Handler.dispatchMessage(Handler.java:99)

at android.os.Looper.loopOnce(Looper.java:222)

at android.os.Looper.loop(Looper.java:314)

at com.android.server.SystemServer.run(SystemServer.java:1057)

at com.android.server.SystemServer.main(SystemServer.java:725)

at java.lang.reflect.Method.invoke(Native method)

at com.android.internal.os.RuntimeInit$MethodAndArgsCaller.run(RuntimeInit.java:565)

at com.android.internal.os.ZygoteInit.main(ZygoteInit.java:1059)

然而 vold 进程的状态却被其它进程 ptrace_stop 挂起,无法查看它堆栈。

----- Waiting Channels: pid 524 at 2023-08-30 04:30:18.586759099+0800 -----

Cmd line: /system/bin/vold --blkid_context=u:r:blkid:s0 --blkid_untrusted_context=u:r:blkid_untrusted:s0 --fsck_context=u:r:fsck:s0 --fsck_untrusted_context=u:r:fsck_untrusted:s0

sysTid=524 ptrace_stop

sysTid=531 ptrace_stop

sysTid=532 ptrace_stop

sysTid=533 ptrace_stop

sysTid=619 ptrace_stop

sysTid=857 ptrace_stop

sysTid=7327 pipe_read

而 crash_dump64 进程,父进程等管道数据,子进程等某进程结束。

u:r:crash_dump:s0 root 7328 7328 524 2241480 2996 pipe_read 0 S 19 0 - 0 fg 5 crash_dump64 crash_dump64

u:r:crash_dump:s0 root 7329 7329 7328 2241608 1252 do_wait 0 S 19 0 - 0 fg 5 crash_dump64 crash_dump64

诸如此类问题,没啥好的办法,依据特征保存机器现场。

化简为繁

对 debuggerd_client.cpp 的 get_wchan_data 添加特征检测触发内核 panic 来得到该特征现场的 Ramdump 文件,然后在恢复用户态进程数据,详情参考《[浅谈安卓离线内存分析项目]

static std::string get_wchan_data(int fd, pid_t pid) {

std::vector<pid_t> tids;

if (!android::procinfo::GetProcessTids(pid, &tids)) {

log_error(fd, 0, "failed to get process tids");

return "";

}

std::stringstream data;

for (int tid : tids) {

std::string path = "/proc/" + std::to_string(pid) + "/task/" + std::to_string(tid) + "/wchan";

std::string wchan_str;

if (!ReadFileToString(path, &wchan_str, true)) {

log_error(fd, errno, "failed to read \"%s\"", path.c_str());

continue;

}

}

std::stringstream buffer;

if (std::string str = data.str(); !str.empty()) {

+ std::string cmdline = android::base::Join(get_command_line(pid), " ");

+ if (cmdline == "/system/bin/lmkd"

+ || cmdline == "/system/bin/netd"

+ || cmdline == "/system/bin/vold"

+ || cmdline == "/vendor/bin/hw/android.hardware.health-service.example") {

+ std::size_t index = str.find("ptrace_stop");

+ if (index != std::string::npos) {

+ WriteStringToFile("c", "/proc/sysrq-trigger");

+ }

+ }

buffer << "\n----- Waiting Channels: pid " << pid << " at " << get_timestamp() << " -----\n"

<< "Cmd line: " << android::base::Join(get_command_line(pid), " ") << "\n";

buffer << "\n" << str << "\n";

buffer << "----- end " << std::to_string(pid) << " -----\n";

buffer << "\n";

}

return buffer.str();

}

特征复现

测试一段时间后,终于得到一例发生 echo c > /proc/sysrq-trigger 触发内核死机问题。

[<ffffffc008f8d22c>] panic+0x190/0x388

[<ffffffc0087f4ef8>] sysrq_handle_crash+0x20/0x24

[<ffffffc0087f4a78>] __handle_sysrq+0xe8/0x1b0

[<ffffffc0087f5ca0>] write_sysrq_trigger+0xf4/0x178

[<ffffffc00842f5f4>] proc_reg_write+0xf0/0x168

[<ffffffc008382ecc>] vfs_write+0x104/0x2c8

[<ffffffc008383218>] ksys_write+0x74/0xe8

[<ffffffc0083832a4>] __arm64_sys_write+0x18/0x2c

[<ffffffc00802efe0>] invoke_syscall+0x54/0x11c

[<ffffffc00802eef4>] el0_svc_common+0x84/0xf4

[<ffffffc00802ede8>] do_el0_svc+0x28/0xb0

[<ffffffc008f94530>] el0_svc+0x28/0xa4

[<ffffffc008f944b8>] el0t_64_sync_handler+0x64/0xb4

[<ffffffc00801157c>] el0t_64_sync+0x19c/0x1a4

首先我们得确定本次 panic 是我们添加调试代码触发的,那么我们可以先将 panic 进程转储一份 Core 文件出来进行栈回溯。crash 转储 Core 可参考《[Crash 插件开发指南]》。开源版本 lp 插件比 ram2core 性能以及其它均更优,大家可尝试用这个替代公版 crash-gcore。

crash> ps -G 27252

PID PPID CPU TASK ST %MEM VSZ RSS COMM

1723 942 6 ffffff8118d8cb00 IN 1.8 15121824 166036 system_server

crash> ram2core -p 1723 -s zram -m shmem

Write ELF Header

Write Program Headers

Write Segments

>>>> 10% <<<<

>>>> 20% <<<<

>>>> 30% <<<<

>>>> 40% <<<<

>>>> 50% <<<<

>>>> 60% <<<<

>>>> 70% <<<<

>>>> 80% <<<<

>>>> 90% <<<<

Done

Saved [core.1723].

并且对 system_server 的 Core 文件 Java 部分进行修复后。

art-parser> bt 27252

"AnrConsumer" prio=10 tid=313 Native

| group="main" sCount=0 ucsCount=0 flags=0 obj=0x14641848 self=0xb400007c00374c00

| sysTid=27252 nice=<unknown> cgrp=<unknown> sched=<unknown> handle=0x7b28d7acb0

| stack=0x7b28c77000-0x7b28c79000 stackSize=0x103cb0

| held mutexes=

x0 0x00000000000004f5 x1 0x0000007b28d7a251 x2 0x0000000000000001 x3 0xffffffffffffffff

x4 0xffffffffffffffff x5 0x0000007b28d7a24c x6 0x0000000000000010 x7 0x7f7f7f7f7f7f7f7f

x8 0x0000000000000040 x9 0x0000000000000000 x10 0x0000000000000001 x11 0x0000007b28d7a080

x12 0xffffff80ffffffd0 x13 0x0000007b28d7a0b0 x14 0x0000000000000000 x15 0x0000000000000100

x16 0x0000007db6f3aae0 x17 0x0000007db6f14a20 x18 0x0000007ae673a000 x19 0x0000007b28d7a238

x20 0x0000007b28d7a251 x21 0x00000000000004f5 x22 0x0000000000000001 x23 0x0000007b28d7a221

x24 0x0000007b28d7b000 x25 0x0000007b28d7a239 x26 0x0000007b28d7a2d8 x27 0x0000007b28d7a2d0

x28 0x0000007b28d7a3f8 x29 0x0000007b28d7a140

lr 0x0000007db7cd09f4 sp 0x0000007b28d7a130 pc 0x0000007db6f14a28 pst 0x0000000000001000

FP[0x7b28d7a140] PC[0x7db6f14a28] native: #00 (write+0x8) /apex/com.android.runtime/lib64/bionic/libc.so

FP[0x7b28d7a140] PC[0x7db7cd09f4] native: #01 (android::base::WriteStringToFile(std::__1::basic_string<char, std::__1::char_traits<char>, std::__1::allocator<char> > const&, std::__1::basic_string<char, std::__1::char_traits<char>, std::__1::allocator<char> > const&, bool)+0xe4) /system/lib64/libbinder.so

FP[0x7b28d7a510] PC[0x7dd19907f4] native: #02 (dump_backtrace_to_file_timeout(int, DebuggerdDumpType, int, int)+0x698) /system/lib64/libdebuggerd_client.so

FP[0x7b28d7a590] PC[0x7dcd0651e0] native: #03 (std::__1::__tree<std::__1::__value_type<std::__1::basic_string_view<char, std::__1::char_traits<char> >, unsigned long>, std::__1::__map_value_compare<std::__1::b

asic_string_view<char, std::__1::char_traits<char> >, std::__1::__value_type<std::__1::basic_string_view<char, std::__1::char_traits<char> >, unsigned long>, std::__1::less<std::__1::basic_string_view<char, std::__1::char_traits<char> > >, true>, std::__1::allocator<std::__1::__value_type<std::__1::basic_string_view<char, std::__1::char_traits<char> >, unsigned long> > >::destroy(std::__1::__tree_node<std::__1::__value_type<std::__1::basic_string_view<char, std::__1::char_traits<char> >, unsigned long>, void*>*)+0x4a0) /system/lib64/libandroid_runtime.so

FP[0x7b28d7a5e0] PC[0x7dcd062b6c] native: #04 (android::register_android_os_Debug(_JNIEnv*)+0x176c) /system/lib64/libandroid_runtime.so

QF[0x7b28d7a5f0] PC[0x0000000000] at dex-pc 0x0000000000 android.os.Debug.dumpNativeBacktraceToFileTimeout(Native method) //AM[0x7102bd60]

QF[0x7b28d7a6a0] PC[0x009d1c7854] at dex-pc 0x7d1a34bd66 com.android.server.am.StackTracesDumpHelper.dumpStackTraces //AM[0x7c964bb5a0]

QF[0x7b28d7a750] PC[0x009d5bf91c] at dex-pc 0x7d1a34b3a2 com.android.server.am.StackTracesDumpHelper.dumpStackTraces //AM[0x7c964bb600]

QF[0x7b28d7a7e0] PC[0x009d3ec00c] at dex-pc 0x7d1a3306d0 com.android.server.am.ProcessErrorStateRecord.appNotResponding //AM[0x9b018b90]

QF[0x7b28d7a9c0] PC[0x009d4c8ab0] at dex-pc 0x7d1a2cd1bc com.android.server.am.AnrHelper$AnrRecord.appNotResponding //AM[0x7c964bb7b0]

QF[0x7b28d7aa50] PC[0x009d42045c] at dex-pc 0x7d1a2cd01c com.android.server.am.AnrHelper$AnrConsumerThread.run //AM[0x9b012068]

art-parser> bt 27252 -v

QF[0x7b28d7aa50] PC[0x009d42045c] at dex-pc 0x7d1a2cd01c com.android.server.am.AnrHelper$AnrConsumerThread.run //AM[0x9b012068]

{

StackMap[31] (code_region=[0x9d4201a0-0x9d42046c], native_pc=0x2bc, dex_pc=0x4c, register_mask=0x1800000)

Virtual registers

{

v0 = r0 v1 = r24 v2 = r26 v3 = r25

v4 = r28 v13 = r23

}

Physical registers

{

x22 = 0x9b012068 x23 = 0x14641848 x24 = 0x1a631e38 x25 = 0x152c

x26 = 0x823de91 x27 = 0x0 x28 = 0x7093eca0 x29 = 0x1a6fc2f0

x30 = 0x9d42045c

}

}

art-parser> disassemble 0x9b012068 -i 0x7d1a2cd01c

void com.android.server.am.AnrHelper$AnrConsumerThread.run() [dex_method_idx=29861]

DEX CODE:

0x7d1a2cd01c: 206e 74a8 0021 | invoke-virtual {v1, v2}, void com.android.server.am.AnrHelper$AnrRecord.appNotResponding(boolean) // method@29864

art-parser> p 0x1a631e38

Size: 0x38

Padding: 0x2

Object Name: com.android.server.am.AnrHelper$AnrRecord

iFields of com.android.server.am.AnrHelper$AnrRecord

[0x34] boolean mAboveSystem = 0x0

[0x8] java.lang.String mActivityShortComponentName = com.android.browser/.BrowserActivity

[0xc] com.android.server.am.ProcessRecord mApp = 0x1464a438

[0x10] android.content.pm.ApplicationInfo mAppInfo = 0x1a61a9b8

[0x14] java.util.concurrent.Future mFirstPidFilePromise = 0x1bf21ab8

[0x35] boolean mIsContinuousAnr = 0x1

[0x18] com.android.server.wm.WindowProcessController mParentProcess = 0x1464f868

[0x1c] java.lang.String mParentShortComponentName = com.android.browser/.BrowserActivity

[0x30] int mPid = 0x6b05

[0x20] com.android.internal.os.TimeoutRecord mTimeoutRecord = 0x1bf21ad8

[0x28] long mTimestamp = 0x823c965

[0x24] com.android.server.am.AnrHelper this$0 = 0x132d3e18

iFields of java.lang.Object

[0x0] java.lang.Class shadow$_klass_ = 0x16bbb6a0

[0x4] int shadow$_monitor_ = 0x0

(gdb) bt

#0 write () at out_sys/soong/.intermediates/bionic/libc/syscalls-arm64/gen/syscalls-arm64.S:500

#1 0x0000007db7cd09f4 in write(int, void const*, unsigned long pass_object_size0) [clone .__uniq.26443029927838627478261023667953154577] (fd=1269, buf=0x7b28d7a251, count=1)

at bionic/libc/include/bits/fortify/unistd.h:174

#2 android::base::WriteStringToFd (content=..., fd=...) at system/libbase/file.cpp:252

#3 android::base::WriteStringToFile (content=..., path=..., follow_symlinks=<optimized out>) at system/libbase/file.cpp:308

#4 0x0000007dd19907f4 in get_wchan_data (fd=801, pid=453) at system/core/debuggerd/client/debuggerd_client.cpp:127

#5 dump_backtrace_to_file_timeout (tid=tid@entry=453, dump_type=<optimized out>, dump_type@entry=kDebuggerdNativeBacktrace, timeout_secs=timeout_secs@entry=2, fd=801)

at system/core/debuggerd/client/debuggerd_client.cpp:340

#6 0x0000007dcd0651e0 in android::dumpTraces (env=0xb400007d0afc8500, pid=453, fileName=0x7b28d7a6ac, timeoutSecs=2, dumpType=kDebuggerdNativeBacktrace, dumpType@entry=224)

at frameworks/base/core/jni/android_os_Debug.cpp:830

#7 0x0000007dcd062b6c in android::android_os_Debug_dumpNativeBacktraceToFileTimeout (env=0x4f5, clazz=<optimized out>, pid=685220433, fileName=0x1, timeoutSecs=-1)

at frameworks/base/core/jni/android_os_Debug.cpp:845

#8 0x0000000071db8a6c in android::os::Debug::dumpJavaBacktraceToFileTimeout ()

还原数据可知 system_server 正在 dump com.android.browser/.BrowserActivity 的 anr_trace.txt 过程中,并且 get_wchan_data 此时捕捉到特征发生在进程 453 身上。回到 crash 环境下,查看进程 453 的所有线程都在处于 ptrace_stop,以及一个线程处于 pipe_read 中。

crash> bt -g 453

PID: 453 TASK: ffffff800f54cb00 CPU: 2 COMMAND: "lmkd"

PID: 460 TASK: ffffff801a4db840 CPU: 4 COMMAND: "lmkd_reaper0"

PID: 461 TASK: ffffff801a4dcb00 CPU: 5 COMMAND: "lmkd_reaper1"

PID: 462 TASK: ffffff801a4d8000 CPU: 5 COMMAND: "lmkd_watchdog"

#3 [ffffffc00e9fbc90] ptrace_stop at ffffffc0080ce5fc

PID: 27712 TASK: ffffff8174715dc0 CPU: 3 COMMAND: "lmkd"

#0 [ffffffc03e163b70] __switch_to at ffffffc008f9b62c

#1 [ffffffc03e163bc0] __schedule at ffffffc008f9be14

#2 [ffffffc03e163c20] schedule at ffffffc008f9c270

#3 [ffffffc03e163cc0] pipe_read at ffffffc0083910dc

#4 [ffffffc03e163d80] vfs_read at ffffffc008382758

#5 [ffffffc03e163dd0] ksys_read at ffffffc008383104

#6 [ffffffc03e163e10] __arm64_sys_read at ffffffc008383190

#7 [ffffffc03e163e20] invoke_syscall at ffffffc00802efe0

#8 [ffffffc03e163e40] el0_svc_common at ffffffc00802ef20

#9 [ffffffc03e163e70] do_el0_svc at ffffffc00802ede8

#10 [ffffffc03e163e80] el0_svc at ffffffc008f94530

#11 [ffffffc03e163ea0] el0t_64_sync_handler at ffffffc008f944b8

#12 [ffffffc03e163fe0] el0t_64_sync at ffffffc00801157c

PC: 0000007d1755f758 LR: 0000007d174947b0 SP: 0000007d16361ed0

X29: 0000007d16361f60 X28: 0000000000000000 X27: 0000007d1628a000

X26: 0000000000000000 X25: 0000000000006c41 X24: 0000000000000005

X23: 0000000000000004 X22: 0000000000000006 X21: 0000000000000003

X20: 0000007d17424bf0 X19: 0000007d175847f8 X18: 0000007d1652a000

X17: 0000007d17546280 X16: 0000007d17578e98 X15: 0000019d56bbc12f

X14: 00000000040fda32 X13: 0000000000004100 X12: 00000000ffffffff

X11: 0000000000000000 X10: 0000000000000000 X9: dbe14e7e76532670

X8: 000000000000003f X7: 7f7f7f7f7f7f7f7f X6: 0000007d17424bf0

X5: 0000007d17424bf0 X4: 0000007d17424bf0 X3: 0000007d17424bf0

X2: 0000000000000004 X1: 0000007d16361ee8 X0: 0000000000000003

ORIG_X0: 0000000000000003 SYSCALLNO: 3f PSTATE: 60001000

离线分析

从堆栈以及寄存器上下文,可以知道线程 27712 正在读取管道 FD(0x3),接下来将进程 lmkd 453 转储一份 Core 文件,以便我们分析父子进程的关系。

// 进程 453 堆栈

(gdb) bt

#0 __dl_read () at out_sys/soong/.intermediates/bionic/libc/syscalls-arm64/gen/syscalls-arm64.S:488

#1 0x0000007d174947b0 in read(int, void*, unsigned long pass_object_size0) (fd=-1, buf=0x7d16361ee8, count=4) at bionic/libc/include/bits/fortify/unistd.h:162

#2 debuggerd_dispatch_pseudothread (arg=arg@entry=0x7d17424bf0) at system/core/debuggerd/handler/debuggerd_handler.cpp:486

#3 0x0000007d17548a14 in __start_thread (fn=0x7d174942e4 <debuggerd_dispatch_pseudothread(void*)>, arg=0x7d17424bf0) at bionic/libc/bionic/clone.cpp:53

(gdb) frame 2

#2 debuggerd_dispatch_pseudothread (arg=arg@entry=0x7d17424bf0) at system/core/debuggerd/handler/debuggerd_handler.cpp:486

(gdb) info locals

input_read = {

fd_ = 3

}

crash_dump_pid = 27713

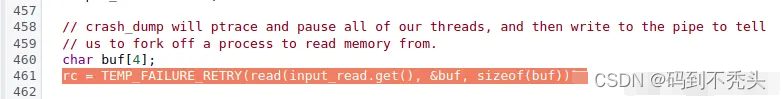

这里我们可以知道 lmkd 线程 27712 在 debuggerd_handler.cpp:486(对应 Google 原生代码如图)处等待,而这个消息需要子进程 crash_dump 发送过来,因此我们在转储进程 27713 Core 文件。

// 进程 27713 堆栈

(gdb) bt

#0 read () at out_sys/soong/.intermediates/bionic/libc/syscalls-arm64/gen/syscalls-arm64.S:488

#1 0x0000005a9ada9840 in read(int, void*, unsigned long pass_object_size0) (fd=7, buf=0x7fd9770e60, count=1) at bionic/libc/include/bits/fortify/unistd.h:162

#2 main (argc=<optimized out>, argv=<optimized out>) at system/core/debuggerd/crash_dump.cpp:453

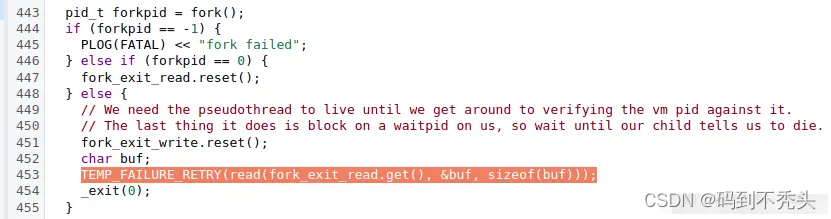

同样的 crash_dump (27713) 在读取管道 FD(0x7),获得一个字符后退出,我们需进行分析子进程 forkpid 的场景。

crash> ps | grep 27713

27713 27712 1 ffffff80c07e2580 IN 0.0 2223184 3776 crash_dump64

27732 27713 3 ffffff811881cb00 IN 0.0 2223312 1500 crash_dump64

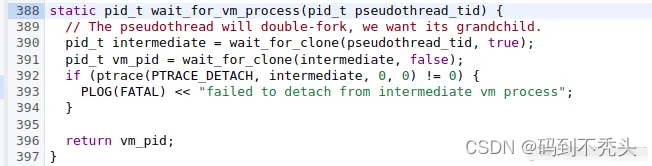

找到子进程 27732 后,进一步转储 Core 下来到 gdb 上解析。

(gdb) thread

[Current thread is 1 (LWP 27732)]

(gdb) bt

#0 wait4 () at out_sys/soong/.intermediates/bionic/libc/syscalls-arm64/gen/syscalls-arm64.S:2570

#1 0x0000005a9adaa370 in wait_for_clone (pid=27712, resume_child=true) at system/core/debuggerd/crash_dump.cpp:384

#2 0x0000005a9ada8058 in wait_for_vm_process (pseudothread_tid=27712) at system/core/debuggerd/crash_dump.cpp:424

#3 main (argc=<optimized out>, argv=<optimized out>) at system/core/debuggerd/crash_dump.cpp:605

(gdb) frame 3

#3 main (argc=<optimized out>, argv=<optimized out>) at system/core/debuggerd/crash_dump.cpp:605

605 in system/core/debuggerd/crash_dump.cpp

(gdb) info locals

output_pipe = {

fd_ = 5

}

input_pipe = {

fd_ = 6

}

从此处我们可以知道 27732 需要等待线程 27712 退出,然而 27712 却阻塞在管道中。

管道阻塞分析

crash> files 27712

PID: 27712 TASK: ffffff8174715dc0 CPU: 3 COMMAND: "lmkd"

ROOT: /first_stage_ramdisk/ CWD: /first_stage_ramdisk/

FD FILE DENTRY INODE TYPE PATH

0 ffffff81d4b6d400 ffffff8005050dd0 ffffff8003338720 CHR /first_stage_ramdisk/dev/null

1 ffffff81d4b6d400 ffffff8005050dd0 ffffff8003338720 CHR /first_stage_ramdisk/dev/null

2 ffffff81d4b6d400 ffffff8005050dd0 ffffff8003338720 CHR /first_stage_ramdisk/dev/null

3 ffffff81d4b6d7c0 ffffff806fb06410 ffffff80287c4858 FIFO

6 ffffff81d4b6de00 ffffff806fb07790 ffffff80287c74d8 FIFO

crash> files 27713

PID: 27713 TASK: ffffff80c07e2580 CPU: 1 COMMAND: "crash_dump64"

ROOT: /first_stage_ramdisk/ CWD: /first_stage_ramdisk/

FD FILE DENTRY INODE TYPE PATH

0 ffffff81d4b6d900 ffffff806fb07790 ffffff80287c74d8 FIFO

1 ffffff81d4b6c000 ffffff806fb06410 ffffff80287c4858 FIFO

2 ffffff81d4b6d400 ffffff8005050dd0 ffffff8003338720 CHR /first_stage_ramdisk/dev/null

3 ffffff8071286c80 ffffff8006b8e000 ffffff80074a8b20 REG /first_stage_ramdisk/sys/kernel/tracing/trace_marker

4 ffffff8071286140 ffffff810930b110 ffffff8017d5ae38 DIR /first_stage_ramdisk/proc/453

5 ffffff81d4b6c000 ffffff806fb06410 ffffff80287c4858 FIFO

6 ffffff81d4b6d900 ffffff806fb07790 ffffff80287c74d8 FIFO

7 ffffff8027f99b80 ffffff81237071e0 ffffff80287c5378 FIFO

crash> files 27732

PID: 27732 TASK: ffffff811881cb00 CPU: 3 COMMAND: "crash_dump64"

ROOT: /first_stage_ramdisk/ CWD: /first_stage_ramdisk/

FD FILE DENTRY INODE TYPE PATH

0 ffffff81d4b6d900 ffffff806fb07790 ffffff80287c74d8 FIFO

1 ffffff81d4b6c000 ffffff806fb06410 ffffff80287c4858 FIFO

2 ffffff81d4b6d400 ffffff8005050dd0 ffffff8003338720 CHR /first_stage_ramdisk/dev/null

3 ffffff8071286c80 ffffff8006b8e000 ffffff80074a8b20 REG /first_stage_ramdisk/sys/kernel/tracing/trace_marker

4 ffffff8071286140 ffffff810930b110 ffffff8017d5ae38 DIR /first_stage_ramdisk/proc/453

5 ffffff81d4b6c000 ffffff806fb06410 ffffff80287c4858 FIFO

6 ffffff81d4b6d900 ffffff806fb07790 ffffff80287c74d8 FIFO

8 ffffff8027f98280 ffffff81237071e0 ffffff80287c5378 FIFO

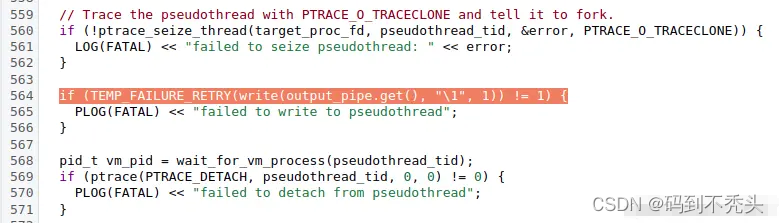

从代码上看正常情况下,目前 27732 处于函数 wait_for_vm_process,此前最后一次往管道写入的数据为 ’0x1‘ ,并且进程 27712 不应该会发生管道阻塞,而会正常退出的。

接下来分析管道里的数据状态。

crash> struct file.private_data ffffff81d4b6c000 -x

private_data = 0xffffff818a2af900,

crash> struct pipe_inode_info.head,tail,bufs 0xffffff818a2af900

head = 1,

tail = 1,

bufs = 0xffffff8016a3ac00,

从 head == tail 可知道,管道里已经没有数据可以读取了。

crash> struct pipe_buffer 0xffffff8016a3ac00 -x

struct pipe_buffer {

page = 0xfffffffe057492c0,

offset = 0x2,

len = 0x0,

ops = 0x0,

flags = 0x10,

private = 0x0

}

offset = 0x2, len = 0x0 说明已经往管道写入过两个字符并且已被读出。

crash> kmem -p 0xfffffffe057492c0

PAGE PHYSICAL MAPPING INDEX CNT FLAGS

fffffffe057492c0 19d24b000 0 0 1 4000000000000000

crash> rd -p 19d24b000

19d24b000: 0000000000000166 f.......

从管道里的数据,可以确定最后一次写入数据 0x1 已经被读过了,并且从数据上我们可以了解到写入 0x1 之前,存在一函数先往管道写入 ‘f’ 字符。 于是找到内部定制的某功能代码实现。大致如下

bool Xxxxx::Xxxxxxx(int input_read_fd) {

char buf[4];

int rc = TEMP_FAILURE_RETRY(read(input_read_fd, &buf, sizeof(buf)));

...

}

最后

原因是: 管道双方读写时序不可控,时序上刚好写者进程先发生,往缓存写入两个字符 01 66,然后读者进程,首次读取管道数据 4 个字符,取出了所有数据,导致后面管道阻塞。

bool Xxxxx::Xxxxxxx(int input_read_fd) {

- char buf[4];

+ char buf[1];

int rc = TEMP_FAILURE_RETRY(read(input_read_fd, &buf, sizeof(buf)));

...

}

4814

4814

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?