OpenEBS Storage

1. Introduction to OpenEBS

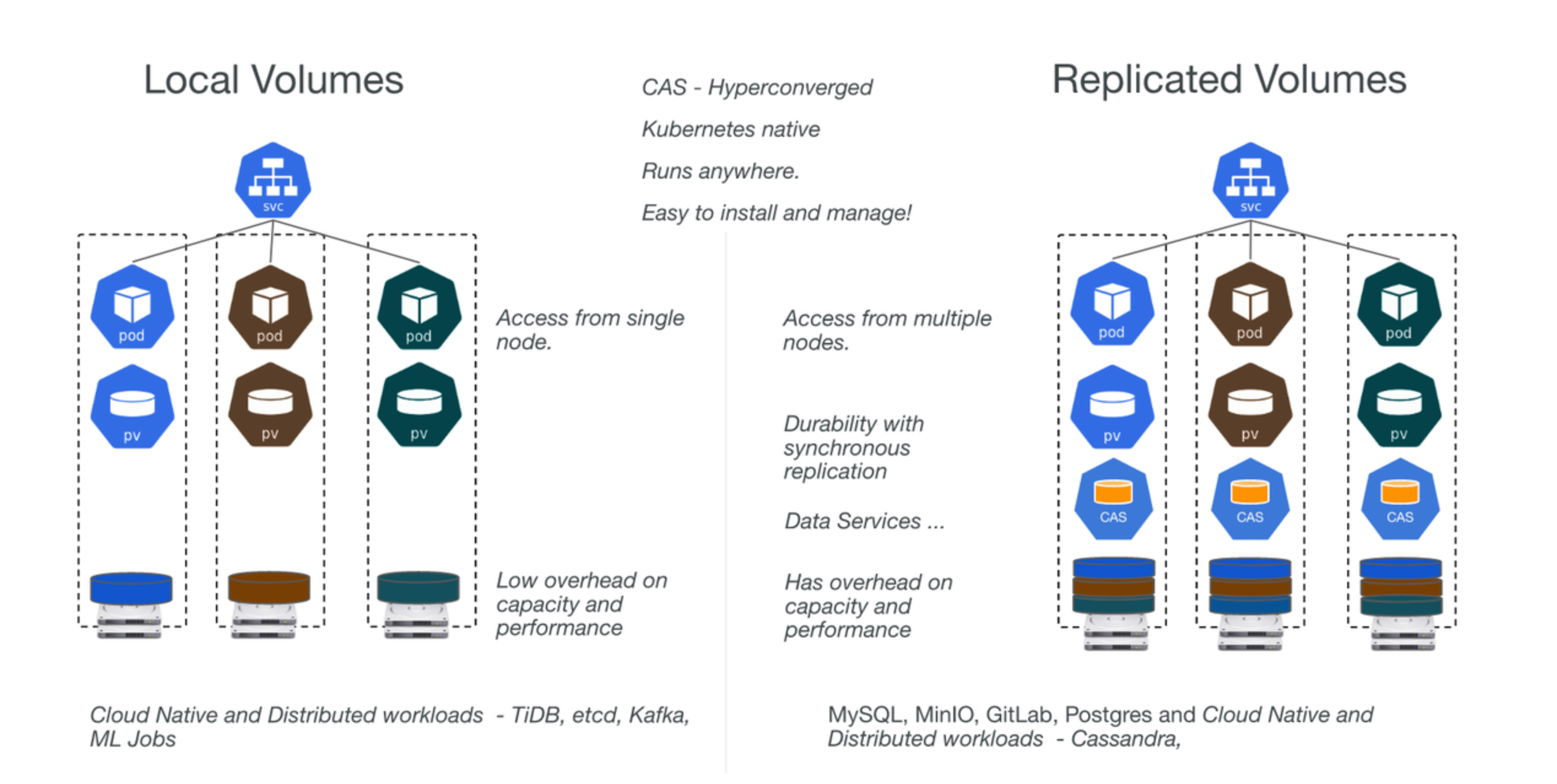

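OpenEBS is a set of storage engines that turn any storage available to worker nodes into local or distributed Kubernetes persistent volumes. At a high level, OpenEBS supports two broad categories of volumes: local volumes and replicated volumes.

OpenEBS is a Kubernetes-native hyperconverged storage solution. It manages the local storage available on each node and provides local or highly available distributed persistent volumes for stateful workloads. A further advantage of being fully Kubernetes-native is that administrators and developers can interact with and manage OpenEBS using the tools already available for Kubernetes, such as kubectl, Helm, Prometheus, Grafana, and Weave Scope, when deploying stateful workloads that need fast, durable, reliable, and scalable storage.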

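2. Volume Types

Local volumes: a local volume abstracts a data disk attached to a worker node directly as a persistent volume, which Pods on that same node can mount.

Replicated volumes: OpenEBS uses one of its internal engines to create a microservice for each replicated volume. In use, the stateful service writes data to the OpenEBS engine, and the engine synchronously replicates that data to multiple nodes in the cluster, which provides high availability.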

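3. Local Volume Storage Engines

Local PV hostpath

Uses a specified local directory on the node.

Local PV device

Determines which devices may be used to create local PVs by configuring disk identification information.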
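As an illustration of the device engine, a StorageClass along the following lines could select it. This is a minimal sketch, not the author's configuration: the name `openebs-device-demo` is hypothetical, and the provisioner, reclaim policy, and binding mode are taken from the `openebs-device` class that the operator YAML installs (see the `kubectl get` output in section 6.2).

```yaml
# Sketch of a Local PV device StorageClass (name is hypothetical).
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: openebs-device-demo
  annotations:
    openebs.io/cas-type: local
    cas.openebs.io/config: |
      # Ask the Local PV provisioner for a whole block device
      # instead of a sub-directory on the host.
      - name: StorageType
        value: "device"
provisioner: openebs.io/local
volumeBindingMode: WaitForFirstConsumer
reclaimPolicy: Delete
```

With `WaitForFirstConsumer`, the volume is only bound once a Pod that uses the claim has been scheduled, so the device is picked on the node where the Pod actually runs.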

ZFS Local PV (Kubernetes 1.20+)

Creates storage pools through ZFS and configures volumes on them, for example striped or mirrored volumes.
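For orientation, a StorageClass for the ZFS engine might look like the sketch below. It assumes the separate ZFS Local PV CSI driver is installed and that a ZFS pool already exists on the nodes; the class name `openebs-zfspv` and the pool name `zfspv-pool` are placeholders, not values from this article.

```yaml
# Sketch of a ZFS Local PV StorageClass (names are placeholders).
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: openebs-zfspv
provisioner: zfs.csi.openebs.io
parameters:
  poolname: "zfspv-pool"   # assumed: an existing ZFS pool on the node
  fstype: "zfs"            # provision a ZFS dataset for each volume
```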

LVM Local PV (Kubernetes 1.20+)

Uses LVM to create the volume groups that back the volumes.
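A StorageClass for the LVM engine could be sketched as follows. It assumes the separate LVM Local PV CSI driver is installed and that a volume group has already been created on the nodes; the class name `openebs-lvmpv` and the volume group name `lvmvg` are placeholders.

```yaml
# Sketch of an LVM Local PV StorageClass (names are placeholders).
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: openebs-lvmpv
provisioner: local.csi.openebs.io
parameters:
  storage: "lvm"
  volgroup: "lvmvg"        # assumed: an existing LVM volume group on the node
```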

Rawfile Local PV (Kubernetes 1.21+)

4. Replicated Volume Storage Engines (Replica Volumes)

Mayastor

cStor (Kubernetes 1.21+)

Jiva (stable from version 3.2.0; Kubernetes 1.21+)

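4.1 How Replicated Volumes Work

OpenEBS uses one of its engines (Mayastor, cStor, or Jiva) to create a microservice for each distributed persistent volume.

Pods write data to the OpenEBS engine, which synchronously replicates it to multiple nodes in the cluster.

The OpenEBS engine itself is deployed as Pods and orchestrated by Kubernetes. When the node running a Pod fails, the Pod is rescheduled to another node in the cluster, and OpenEBS serves the data from the replicas still available on other nodes.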
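The number of synchronous replicas is typically set on the StorageClass. The sketch below shows how this might look for cStor, assuming the cStor CSI operator is installed and a pool cluster has been created; the class name `cstor-csi-disk` and pool cluster name `cstor-disk-pool` are placeholders, and the article itself does not deploy cStor.

```yaml
# Sketch of a cStor StorageClass with three replicas (names are placeholders).
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: cstor-csi-disk
provisioner: cstor.csi.openebs.io
allowVolumeExpansion: true
parameters:
  cas-type: cstor
  cstorPoolCluster: cstor-disk-pool   # assumed: an existing CStorPoolCluster
  replicaCount: "3"                   # copies kept on different pool nodes
```

With three replicas on different nodes, the loss of a single node still leaves copies from which the volume can be served after the Pod is rescheduled.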

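4.2 Advantages of Replicated Volumes

OpenEBS distributed block volumes are called replicated volumes to avoid confusion with traditional distributed block storage, which tends to spread data across many nodes in a cluster. Replicated volumes are designed for cloud-native stateful workloads that need a large number of volumes, each of which can usually be served from the capacity of a single node, rather than a single large volume sharded across multiple nodes in the cluster.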

5. Choosing an OpenEBS Storage Engine

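| Application requirements | Storage type | OpenEBS volume type |
|---|---|---|
| Low latency, high availability, synchronous replication, snapshots, clones, thin provisioning | SSD / cloud storage volumes | OpenEBS Mayastor |
| High availability, synchronous replication, snapshots, clones, thin provisioning | HDD / SSD / cloud storage volumes | OpenEBS cStor |
| High availability, synchronous replication, thin provisioning | Host path or externally mounted storage | OpenEBS Jiva |
| Low latency, local PV | Host path or externally mounted storage | Dynamic Local PV - Hostpath, Dynamic Local PV - Rawfile |
| Low latency, local PV | Block devices such as local HDD / SSD / cloud storage volumes | Dynamic Local PV - Device |
| Low latency, local PV, snapshots, clones | Block devices such as local HDD / SSD / cloud storage volumes | OpenEBS Dynamic Local PV - ZFS, OpenEBS Dynamic Local PV - LVM |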

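Summary:

Multi-node environment with extra block devices (other than the system disk) available as data disks: choose OpenEBS Mayastor or OpenEBS cStor.

Multi-node environment with no extra block devices, only a single system disk per node: choose OpenEBS Jiva.

Single-node environment: the local-path options, Dynamic Local PV - Hostpath or Dynamic Local PV - Rawfile, are recommended, because single-node setups are mostly used for testing, where data reliability requirements are low.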

6. Deploying OpenEBS on Kubernetes

6.1 Download the YAML and create the resources

    mkdir openebs && cd openebs
    wget https://openebs.github.io/charts/openebs-operator.yaml
    kubectl apply -f openebs-operator.yaml

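To change the default hostpath directory, edit the BasePath value of the openebs-hostpath StorageClass in the YAML before applying it: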

    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      name: openebs-hostpath
      annotations:
        openebs.io/cas-type: local
        cas.openebs.io/config: |
          # hostpath type will create a PV by
          # creating a sub-directory under the
          # BASEPATH provided below.
          - name: StorageType
            value: "hostpath"
          # Specify the location (directory) where
          # PV (volume) data will be saved.
          # A sub-directory with pv-name will be
          # created. When the volume is deleted,
          # the PV sub-directory will be deleted.
          # Default value is /var/openebs/local
          - name: BasePath
            value: "/var/openebs/local/"  # default storage path
    # Remaining fields match the values kubectl reports in section 6.2.
    provisioner: openebs.io/local
    volumeBindingMode: WaitForFirstConsumer
    reclaimPolicy: Delete

6.2 Check the status of the created resources

    kubectl get storageclasses,pod,svc -n openebs

    NAME                                                     PROVISIONER                    RECLAIMPOLICY   VOLUMEBINDINGMODE      ALLOWVOLUMEEXPANSION   AGE
    storageclass.storage.k8s.io/local-storage                kubernetes.io/no-provisioner   Delete          WaitForFirstConsumer   false                  372d
    storageclass.storage.k8s.io/nfs                          nfs                            Delete          Immediate              true                   311d
    storageclass.storage.k8s.io/nfs-storageclass (default)   fuseim.pri/ifs                 Retain          Immediate              true                   314d
    storageclass.storage.k8s.io/openebs-device               openebs.io/local               Delete          WaitForFirstConsumer   false                  3m41s
    storageclass.storage.k8s.io/openebs-hostpath             openebs.io/local               Delete          WaitForFirstConsumer   false                  3m41s

    NAME                                                READY   STATUS    RESTARTS   AGE
    pod/openebs-localpv-provisioner-6779c77b9d-gr9gn    1/1     Running   0          3m41s
    pod/openebs-ndm-cluster-exporter-5bbbcd59d4-4nmjl   1/1     Running   0          3m41s
    pod/openebs-ndm-llc54                               1/1     Running   0          3m41s
    pod/openebs-ndm-node-exporter-698gw                 1/1     Running   0          3m41s
    pod/openebs-ndm-node-exporter-z522n                 1/1     Running   0          3m41s
    pod/openebs-ndm-operator-d8797fff9-jzrp7            1/1     Running   0          3m41s
    pod/openebs-ndm-prk5g                               1/1     Running   0          3m41s

    NAME                                           TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)    AGE
    service/openebs-ndm-cluster-exporter-service   ClusterIP   None         <none>        9100/TCP   3m41s
    service/openebs-ndm-node-exporter-service      ClusterIP   None         <none>        9101/TCP   3m41s

    # Optional: make the hostpath local volume the default StorageClass
    # kubectl patch storageclass openebs-hostpath -p '{"metadata": {"annotations":{"storageclass.kubernetes.io/is-default-class":"true"}}}'

7. Dynamic Provisioning with Local PV hostpath

7.1 Create a hostpath test Pod

    kubectl apply -f demo-openebs-hostpath.yaml

Contents of demo-openebs-hostpath.yaml:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx
      labels:
        name: nginx
      namespace: openebs
    spec:
      replicas: 1
      selector:
        matchLabels:
          name: nginx
      template:
        metadata:
          labels:
            name: nginx
        spec:
          containers:
          - resources:
              limits:
                cpu: 0.5
            name: nginx
            image: nginx
            ports:
            - contai
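The manifest breaks off at this point, so the volume-related part of the Deployment is not shown. As a separate, minimal sketch of what dynamic provisioning with this engine usually involves, a claim against the `openebs-hostpath` StorageClass might look like the following. The claim name `demo-hostpath-pvc` and the 1Gi size are assumptions for illustration, not values from the original file.

```yaml
# Sketch of a PVC for the hostpath engine (name and size are assumptions).
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: demo-hostpath-pvc
  namespace: openebs
spec:
  storageClassName: openebs-hostpath
  accessModes:
    - ReadWriteOnce          # a hostpath volume lives on a single node
  resources:
    requests:
      storage: 1Gi
```

Because `openebs-hostpath` uses the `WaitForFirstConsumer` binding mode, a claim like this stays `Pending` until a Pod that references it is scheduled; the provisioner then creates a sub-directory for the volume under the configured BasePath on that node.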
