Overview

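The previous article described in detail the parameters that need to be changed when configuring Hadoop. This section puts those settings into practice.

Deployment

Daemon configuration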

hadoop-env.sh

# Set the maximum JVM heap size (-Xmx) for each daemon

export HADOOP_NAMENODE_OPTS="-Xmx512m"

export HADOOP_DATANODE_OPTS="-Xmx256m"

export HADOOP_SECONDARYNAMENODE_OPTS="-Xmx512m"

export HADOOP_PORTMAP_OPTS="-Xmx512m"

export HADOOP_CLIENT_OPTS="-Xmx128m"

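# Move the PID files to /var/run. The default location is /tmp; PID files should normally live in a directory that only the users running the Hadoop daemons can write to.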

export HADOOP_PID_DIR=/var/run

# Change the default log directory to /var/log/hadoop-log

export HADOOP_LOG_DIR=/var/log/hadoop-log/$USER

yarn-env.sh

JAVA_HEAP_MAX=-Xmx512m

export YARN_RESOURCEMANAGER_HEAPSIZE=512

export YARN_TIMELINESERVER_HEAPSIZE=512

export YARN_NODEMANAGER_HEAPSIZE=512

YARN_LOG_DIR="/var/log/hadoop-log/yarn"

mapred-env.sh

export HADOOP_JOB_HISTORYSERVER_HEAPSIZE=512

export HADOOP_MAPRED_LOG_DIR="/var/log/hadoop-log/mappred"

Note

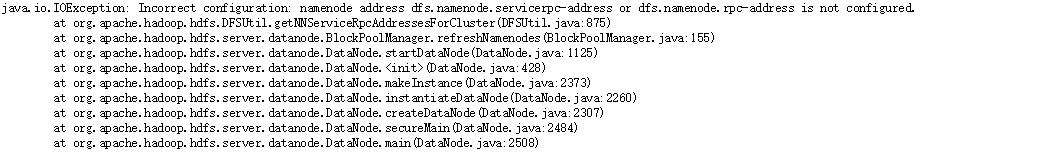

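The problem shown in the image above appeared when, after the cluster configuration had been completed, hdfs-site.xml was configured as for single-node mode.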
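The log directories named in the env files above may not exist on a fresh machine, so it can help to create them before starting the daemons. The following is a minimal sketch, not part of Hadoop: the function name `prepare_hadoop_dirs`, its prefix argument and its user argument are hypothetical, introduced only so the helper can be tried against a scratch directory before it is pointed at the real filesystem.

```shell
# Sketch: create the log directories referenced by hadoop-env.sh,
# yarn-env.sh and mapred-env.sh. prepare_hadoop_dirs is a hypothetical
# helper, not a Hadoop command.
#
# $1: prefix under which the directories are created
#     ("" for the real root, or a scratch directory for a dry run)
# $2: name of the user that runs the daemons (stands in for $USER)
prepare_hadoop_dirs() {
    root="$1"
    user="$2"
    for dir in \
        "/var/log/hadoop-log/${user}" \
        "/var/log/hadoop-log/yarn" \
        "/var/log/hadoop-log/mappred"
    do
        mkdir -p "${root}${dir}" || return 1
        # Owner: full access; group: read and traverse; others: none.
        chmod 750 "${root}${dir}" || return 1
    done
}

# Example dry run against a scratch directory (assumed user name "hadoop"):
#   prepare_hadoop_dirs /tmp/hadoop-dirs-test hadoop
```

The path spellings, including `mappred`, are copied from the env files so that the directories match what the daemons will actually look for.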

Cluster configuration

core-site.xml

<configuration>

<!-- Default file system URI (the HDFS NameNode address) -->

<property>

<name>fs.defaultFS</name>

<value>hdfs://hadoop-master:9000</value>

</property>

<!-- Read/write buffer size, in bytes (128 KB) -->

<property>

<name>io.file.buffer.size</name>

<value>131072</value>

</property>

</configuration>

hdfs-site.xml

<configuration>

<!--NameNode Config Start -->

<!-- Local path where the NameNode stores the namespace and transaction logs -->

<property>

<name>dfs.namenode.name.dir</name>

<value>/data/hadoop/hdfs/namenode</value>

</property>

<!-- Number of block replicas -->

<property>

<name>dfs.replication</name>

<value>3</value>

</property>

<!-- HDFS block size, in bytes (256 MB) -->

<property>

<name>dfs.blocksize</name>

<value>268435456</value>

</property>

<!-- Number of NameNode server threads that handle RPCs from DataNodes -->

<property>

<name>dfs.namenode.handler.count</name>

<value>1000</value>

</property>

<!--NameNode Config End -->

<!--DataNode Config Start -->

<!-- Local storage path for DataNode blocks -->

<property>

<name>dfs.datanode.data.dir</name>

<value>/data/hadoop/hdfs/datanode</value>

</property>

<!--DataNode Config End -->

</configuration>

yarn-site.xml

<?xml version="1.0"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<configuration>

<!--YARN common Config Start -->

<!-- Whether to enable ACLs; the default is false -->

<property>

<name>yarn.acl.enable</name>

<value>false</value>

</property>

<!-- Admin ACL; the default * allows all users -->

<property>

<name>yarn.admin.acl</name>

<value>*</value>

</property>

<property>

<name>yarn.log-aggregation-enable</name>

<value>false</value>

</property>

<!--YARN common Config End -->

<!--YARN ResourceManager Config Start -->

<!-- ResourceManager host; the yarn.resourcemanager.* addresses below can reference it -->

<property>

<name>yarn.resourcemanager.hostname</name>

<value>hadoop-master</value>

</property>

<!-- Address to which clients submit jobs -->

<property>

<name>yarn.resourcemanager.address</name>

<value>${yarn.resourcemanager.hostname}:8032</value>

</property>

<!-- Address through which ApplicationMasters request resources from the scheduler -->

<property>

<name>yarn.resourcemanager.scheduler.address</name>

<value>${yarn.resourcemanager.hostname}:8030</value>

</property>

<!-- Address that NodeManagers use to report to the ResourceManager -->

<property>

<name>yarn.resourcemanager.resource-tracker.address</name>

<value>${yarn.resourcemanager.hostname}:8031</value>

</property>

<!-- Address for administrative commands -->

<property>

<name>yarn.resourcemanager.admin.address</name>

<value>${yarn.resourcemanager.hostname}:8033</value>

</property>

<!-- Web UI address -->

<property>

<name>yarn.resourcemanager.webapp.address</name>

<value>${yarn.resourcemanager.hostname}:8088</value>

</property>

<!-- Scheduler class -->

<property>

<name>yarn.resourcemanager.scheduler.class</name>

<value>org.apache.hadoop.yarn.server.resourcemanager.scheduler.capacity.CapacityScheduler</value>

</property>

<!-- Minimum memory the ResourceManager allocates to each container request, in MB -->

<property>

<name>yarn.scheduler.minimum-allocation-mb</name>

<value>1024</value>

</property>

<!-- Maximum memory the ResourceManager allocates to each container request, in MB -->

<property>

<name>yarn.scheduler.maximum-allocation-mb</name>

<value>3096</value>

</property>

<!--YARN ResourceManager Config End -->

<!--YARN NodeManager Config Start -->

<!-- Total physical memory, in MB, that the NodeManager can allocate to containers -->

<property>

<name>yarn.nodemanager.resource.memory-mb</name>

<value>3096</value>

</property>

<!-- Ratio of virtual memory to physical memory allowed for a container; 2.1 is the usual value -->

<property>

<name>yarn.nodemanager.vmem-pmem-ratio</name>

<value>2.1</value>

</property>

<!-- Where intermediate data is stored, similar to mapred.local.dir in Hadoop 1.0. This parameter is usually given several directories to spread the disk I/O load -->

<property>

<name>yarn.nodemanager.local-dirs</name>

<value>/data/hadoop/hdfs/nodemanager/nm-local-dir</value>

</property>

<!-- Log directories, comma-separated; several paths help spread disk I/O -->

<property>

<name>yarn.nodemanager.log-dirs</name>

<value>/var/log/hadoop-log/nodemanager</value>

</property>

<!-- How long logs are kept on the NodeManager, in seconds; only applies when log aggregation is disabled -->

<property>

<name>yarn.nodemanager.log.retain-seconds</name>

<value>10800</value>

</property>

<!-- HDFS directory to which logs are moved once an application finishes (only applies when log aggregation is enabled) -->

<property>

<name>yarn.nodemanager.remote-app-log-dir</name>

<value>/tmp/logs</value>

</property>

<!-- Suffix appended to the remote log directory (only applies when log aggregation is enabled). Logs go to ${yarn.nodemanager.remote-app-log-dir}/${user}/${this suffix} -->

<property>

<name>yarn.nodemanager.remote-app-log-dir-suffix</name>

<value>logs</value>

</property>

<!-- Shuffle auxiliary service; must be set for MapReduce applications -->

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<!--YARN NodeManager Config End -->

<!--YARN History Server Config Start -->

<!-- How long aggregated logs are kept in HDFS, in seconds; set to 7 days here -->

<property>

<name>yarn.log-aggregation.retain-seconds</name>

<value>604800</value>

</property>

<!-- How often to check aggregated logs for expiry, in seconds; too small a value puts needless load on the NameNode. Set to once a day here -->

<property>

<name>yarn.log-aggregation.retain-check-interval-seconds</name>

<value>86400</value>

</property>

<!--YARN History Server Config End -->

</configuration>

mapred-site.xml

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<!--MapReduce Applications Config Start -->

<!-- Execution framework; set to yarn to run MapReduce on YARN -->

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

<!-- Memory limit for each map task, in MB -->

<property>

<name>mapreduce.map.memory.mb</name>

<value>512</value>

</property>

<!-- JVM heap size for map tasks, typically about 80% of mapreduce.map.memory.mb -->

<property>

<name>mapreduce.map.java.opts</name>

<value>-Xmx409M</value>

</property>

<!-- Memory limit for each reduce task, in MB; typically three times the map value -->

<property>

<name>mapreduce.reduce.memory.mb</name>

<value>1536</value>

</property>

<!-- JVM heap size for reduce tasks, typically about 80% of mapreduce.reduce.memory.mb (the 1024M used here is a more conservative two thirds) -->

<property>

<name>mapreduce.reduce.java.opts</name>

<value>-Xmx1024M</value>

</property>

<!-- Memory limit for sorting data, in MB -->

<property>

<name>mapreduce.task.io.sort.mb</name>

<value>256</value>

</property>

<!-- Maximum number of streams merged at once while sorting files -->

<property>

<name>mapreduce.task.io.sort.factor</name>

<value>50</value>

</property>

<!-- Number of parallel transfers a reduce runs during the shuffle phase -->

<property>

<name>mapreduce.reduce.shuffle.parallelcopies</name>

<value>25</value>

</property>

<!--MapReduce Applications Config End -->

<!--MapReduce JobHistory Server Config Start -->

<!-- JobHistory server address; the default is 0.0.0.0:10020 -->

<property>

<name>mapreduce.jobhistory.address</name>

<value>localhost:10020</value>

</property>

<!-- JobHistory web UI address; the default is 0.0.0.0:19888 -->

<property>

<name>mapreduce.jobhistory.webapp.address</name>

<value>localhost:19888</value>

</property>

<property>

<name>mapreduce.jobhistory.intermediate-done-dir</name>

<value>${yarn.app.mapreduce.am.staging-dir}/history/done_intermediate</value>

</property>

<property>

<name>mapreduce.jobhistory.done-dir</name>

<value>${yarn.app.mapreduce.am.staging-dir}/history/done</value>

</property>

<!--MapReduce JobHistory Server Config End -->

</configuration>
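The memory values in yarn-site.xml and mapred-site.xml are tied together by simple arithmetic: the task heap is sized at roughly 80% of its container, and the NodeManager memory bounds how many containers of a given size fit on one node. A small sketch of that arithmetic in integer shell math follows. The helper names `heap_mb` and `containers_per_node` are hypothetical and only illustrate the rule of thumb from the comments in the configuration. They do not reproduce how YARN actually schedules.

```shell
# heap_mb CONTAINER_MB
# Rule-of-thumb JVM heap: 80% of the container size, rounded down.
heap_mb() {
    echo $(( $1 * 80 / 100 ))
}

# containers_per_node NODE_MB CONTAINER_MB
# How many containers of the given size fit into the NodeManager memory.
# Ignores any rounding of requests done by the scheduler.
containers_per_node() {
    echo $(( $1 / $2 ))
}

heap_mb 512                    # map container of 512 MB     -> 409
heap_mb 1536                   # reduce container of 1536 MB -> 1228
containers_per_node 3096 1536  # reduce containers per node  -> 2
```

The first result matches the `-Xmx409M` used for map tasks. One caveat, stated as an assumption about scheduler behavior rather than something this configuration confirms: requests are generally rounded up to a multiple of `yarn.scheduler.minimum-allocation-mb`, so with the 1024 MB minimum set here a 512 MB map request would likely occupy a 1024 MB container.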